- Introduction to Azure Kubernetes Service (AKS)

- Key Features of AKS

- How AKS Works

- Benefits of Using AKS

- AKS vs Traditional Kubernetes Deployment

- Setting Up AKS: Step-by-Step Guide

- Scaling and Auto-Scaling in AKS

- Integrating AKS with Azure DevOps

- Monitoring and Logging in AKS

- AKS Pricing and Cost Optimization

- Common AKS Issues and Troubleshooting

- Conclusion

Introduction to Azure Kubernetes Service (AKS)

Azure Kubernetes Service (AKS) is a managed container orchestration service provided by Microsoft Azure. It enables developers and IT teams to deploy, manage, and scale containerized applications with Kubernetes without having to operate the underlying Kubernetes infrastructure themselves. AKS automates much of the operational overhead of Kubernetes, including provisioning, upgrading, and scaling, so teams can focus on deploying and running their applications.

AKS integrates with other Azure services such as Azure Active Directory and Azure DevOps, and it provides built-in security features such as role-based access control (RBAC) and network policies. Integration with Azure Monitor and Azure Log Analytics simplifies monitoring and troubleshooting, which helps organizations detect problems early and minimize downtime.

Key Features of AKS

- Managed Kubernetes Control Plane: AKS offers a fully managed Kubernetes control plane. Azure runs and maintains the control plane components (API server, etcd, scheduler, and controller manager) and keeps them available and patched, while you decide when to upgrade the Kubernetes version or opt into automatic upgrades.

- Integrated Developer Tools: AKS integrates with Azure DevOps, Visual Studio Code, and other Azure tools to support continuous integration (CI) and continuous delivery (CD) workflows.

- Multi-region Deployment: AKS is available in many Azure regions, so you can run an application on clusters in several regions (each AKS cluster lives in a single region), improving the availability and resilience of your services.

- Automatic Scaling: With AKS, you can automatically scale the number of pods or nodes in your cluster based on resource usage or custom metrics, ensuring your application can handle varying loads.

- Easy Monitoring: AKS integrates with Azure Monitor and Azure Log Analytics, enabling you to monitor the health of your clusters and applications with real-time metrics and logs.

- Azure Active Directory Integration: AKS supports Azure Active Directory (AAD) integration, allowing organizations to control access to Kubernetes resources using AAD identities.

- Security and Compliance: AKS includes built-in security features such as role-based access control (RBAC), network policies, and Azure Policy enforcement, helping organizations meet security and compliance requirements.
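Although Azure operates the control plane, Kubernetes version upgrades are still something you trigger or schedule. A minimal sketch of checking and applying upgrades with the Azure CLI, assuming the `myResourceGroup` and `myAKSCluster` names from the setup guide in this article; choosing the `stable` auto-upgrade channel is an illustrative decision, not a recommendation:

```shell
#!/usr/bin/env bash
# Inspect and manage Kubernetes version upgrades for an AKS cluster.
# Assumes the resource group and cluster names used in the setup guide.
set -euo pipefail

RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myAKSCluster"

# Show the current control plane version and the versions it can move to.
az aks get-upgrades \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --output table

# Option 1: upgrade explicitly to one of the versions listed above.
# az aks upgrade --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME" \
#   --kubernetes-version <version-from-get-upgrades>

# Option 2: let AKS apply upgrades automatically through an auto-upgrade channel.
az aks update \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --auto-upgrade-channel stable
```

An explicit upgrade gives you control over timing; an auto-upgrade channel trades that control for less manual work.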

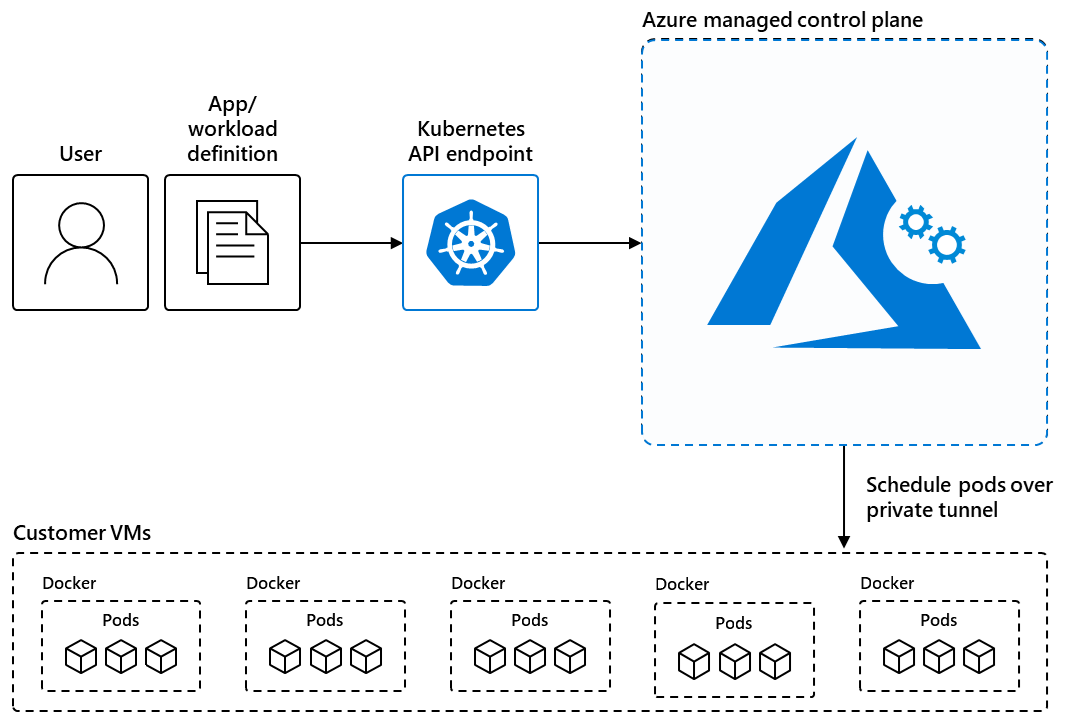

How AKS Works

Azure Kubernetes Service (AKS) hides much of the complexity of Kubernetes management by automating several critical tasks. Azure runs the Kubernetes control plane for you, including the API server, the etcd key-value store, the scheduler, and the controller manager, so you do not provision or maintain control plane machines.

Your workloads run on node pools: groups of virtual machines (VMs) that share the same configuration. You can create several node pools with different VM sizes and settings to match different workload requirements. To deploy an application, you describe its desired state in Kubernetes manifests (YAML files); Kubernetes then schedules its containers as pods, the smallest deployable unit in Kubernetes, onto the nodes.

For scaling, AKS supports the Horizontal Pod Autoscaler (HPA), which adjusts the number of pods, and the Cluster Autoscaler, which adjusts the number of nodes, based on resource usage. For networking, AKS offers options such as Azure CNI, which integrates pod networking with Azure virtual networks so pods can communicate with services and external resources. For persistent storage, AKS integrates with Azure Disks and Azure Files. Together, these pieces let developers spend more time building applications and less time managing infrastructure.
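As a rough illustration of node pools and manifests working together, the script below adds a second node pool and deploys pods onto it. It assumes the cluster names from the setup guide; the pool name `mempool`, the VM size `Standard_E4s_v5`, the Deployment name `myapp-highmem`, and the `nginx:1.25` image are hypothetical choices, and your subscription needs quota for that VM size:

```shell
#!/usr/bin/env bash
set -euo pipefail

RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myAKSCluster"

# Add a second node pool with a different (memory-optimized) VM size.
az aks nodepool add \
  --resource-group "$RESOURCE_GROUP" \
  --cluster-name "$CLUSTER_NAME" \
  --name mempool \
  --node-count 2 \
  --node-vm-size Standard_E4s_v5

# Describe the desired state in a manifest; Kubernetes schedules the pods.
kubectl apply -f - <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-highmem
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp-highmem
  template:
    metadata:
      labels:
        app: myapp-highmem
    spec:
      # Place the pods on the new pool via the label AKS sets on each node.
      nodeSelector:
        kubernetes.azure.com/agentpool: mempool
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
          resources:
            requests:
              cpu: 250m
              memory: 256Mi
            limits:
              memory: 512Mi
EOF

# Show where the pods were scheduled.
kubectl get pods -l app=myapp-highmem -o wide
```

The CPU and memory requests matter beyond scheduling: the HPA computes CPU utilization relative to the requested CPU.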

Benefits of Using AKS

- Simplified Kubernetes Management: AKS abstracts much of the complexity of setting up, managing, and upgrading Kubernetes clusters. This allows teams to focus more on application development and less on infrastructure management.

- Scalability: AKS offers both horizontal scaling (changing the number of pods or nodes) and vertical scaling (changing the CPU and memory available to a workload, for example by adjusting pod resource requests or moving pods to a node pool with larger VMs). The Cluster Autoscaler and Horizontal Pod Autoscaler let applications scale dynamically in response to changing traffic.

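- Cost Efficiency: In the Free tier, the AKS control plane carries no charge, so you pay mainly for the cluster's nodes (VMs) and the storage and networking they use. This can significantly reduce operational costs compared with running your own control plane. The Standard tier adds an hourly per-cluster fee in exchange for a financially backed uptime SLA.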

- Integration with Azure Ecosystem: AKS works seamlessly with other Azure services, including Azure DevOps for CI/CD, Azure Monitor for logging and monitoring, and Azure Active Directory for identity and access management.

- Security and Compliance: AKS includes robust security features like RBAC, network policies, and Azure Policy, making it easier to secure applications running in the cloud. Azure's compliance certifications also help organizations meet regulatory requirements.

- High Availability: AKS clusters can be deployed across multiple availability zones, ensuring high availability and resilience for mission-critical applications.
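A sketch of a zone-redundant cluster, assuming a recent Azure CLI (where `az aks create` accepts `--tier`) and a region that supports availability zones. The cluster name `myZonedAKSCluster` is hypothetical, and the Standard tier is included only because it carries the uptime SLA:

```shell
#!/usr/bin/env bash
set -euo pipefail

RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myZonedAKSCluster"   # hypothetical name

# Spread the default node pool's VMs across three availability zones.
az aks create \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --node-count 3 \
  --zones 1 2 3 \
  --tier standard \
  --generate-ssh-keys

# Confirm which zone each node landed in.
az aks get-credentials --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME"
kubectl get nodes -L topology.kubernetes.io/zone
```

Zones protect against a datacenter failure within one region; surviving a regional outage still requires clusters in more than one region.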

AKS vs Traditional Kubernetes Deployment

| Feature | AKS (Managed Service) | Traditional Kubernetes |
|---|---|---|
| Control Plane Management | Fully managed by Azure | Managed by the user/organization |
| Setup Complexity | Simplified, automated by Azure | Complex, requires manual configuration |
| Maintenance | Azure patches the control plane; Kubernetes upgrades take one command or run automatically | Manual updates and maintenance |
| Scaling | Built-in Cluster Autoscaler and HPA support | HPA available; node autoscaling must be set up by the user |
| Cost | Pay for nodes; control plane is free in the Free tier | Pay for all resources, including control plane machines |
| Security | Built-in Azure Active Directory (AAD) integration, RBAC, network policies | Custom security setup required |

Overall, AKS offers a simpler way to run Kubernetes workloads on Azure than self-managing a Kubernetes cluster, because Azure takes over control plane operations and much of the routine maintenance.

Setting Up AKS: Step-by-Step Guide

- Create an Azure Account: If you don’t have one, create one at https://azure.microsoft.com.

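- Install Azure CLI: Download and install the Azure CLI to interact with your Azure resources from your local terminal, then sign in with `az login`. If kubectl is not installed yet, `az aks install-cli` can install it.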

- Create a Resource Group: `az group create --name myResourceGroup --location eastus`

- Create AKS Cluster: `az aks create --resource-group myResourceGroup --name myAKSCluster --node-count 3 --enable-addons monitoring --generate-ssh-keys`

- Connect to Your AKS Cluster: `az aks get-credentials --resource-group myResourceGroup --name myAKSCluster`

- Deploy Applications: Use kubectl (the Kubernetes command-line tool) to deploy your containerized applications to AKS.
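Once `az aks get-credentials` has run, a first deployment could look like the sketch below. The Deployment name `myapp` matches the scaling commands later in this article; the `nginx:1.25` image and the resource request values are placeholders:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Confirm kubectl is talking to the AKS cluster.
kubectl get nodes

# Create a Deployment from a public image (placeholder) with two replicas.
kubectl create deployment myapp --image=nginx:1.25 --replicas=2

# CPU requests are needed for CPU-based autoscaling with the HPA.
kubectl set resources deployment myapp --requests=cpu=250m,memory=256Mi

# Expose the Deployment through an Azure load balancer on port 80.
kubectl expose deployment myapp --type=LoadBalancer --port=80

# Wait for the rollout, then watch until EXTERNAL-IP changes from <pending>.
kubectl rollout status deployment/myapp
kubectl get service myapp --watch
```

Imperative commands are convenient for a first test; for anything long-lived, the same objects are usually kept as YAML manifests under version control.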

Scaling and Auto-Scaling in AKS

Horizontal Pod Autoscaling (HPA) automatically scales the number of pods in a Kubernetes deployment based on CPU or memory usage. You set a target threshold that triggers scaling, for example `kubectl autoscale deployment myapp --cpu-percent=50 --min=1 --max=10`, which keeps average CPU utilization near 50% of the pods' requested CPU while running between 1 and 10 replicas. The Cluster Autoscaler complements HPA by adding nodes when pods cannot be scheduled for lack of resources and removing nodes that are underused. For manual scaling, you can set the replica count directly with `kubectl scale --replicas=5 deployment/myapp`, which gives you fine-grained control over your resources.

Security deserves the same attention as scaling, and AKS supports several best practices for securing your cluster and workloads:

- Role-Based Access Control (RBAC) ensures that only authorized users can access specific Kubernetes resources.

- Network policies restrict communication between pods, preventing unauthorized access between services.

- Azure Active Directory (AAD) integration authenticates users with their Azure identities, giving them secure access to Kubernetes resources.

- Secrets management keeps sensitive data such as passwords and API keys in Azure Key Vault, which the Secrets Store CSI Driver can expose to pods as mounted files or synced Kubernetes secrets.

- Pod Security Admission (which replaced the deprecated Pod Security Policies) and Azure Policy enforce security controls on pods, for example blocking privileged containers, which reduces the risk of vulnerabilities.

Implementing these practices gives you a robust, secure AKS environment for your applications.
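The autoscaling described above can be sketched as follows. It assumes the cluster from the setup guide has a single node pool (with several pools, the equivalent flags live on `az aks nodepool update`) and that `myapp` has CPU requests set; the node limits of 1 to 5 are illustrative:

```shell
#!/usr/bin/env bash
set -euo pipefail

RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myAKSCluster"

# Cluster Autoscaler: let AKS add or remove nodes between 1 and 5.
az aks update \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --enable-cluster-autoscaler \
  --min-count 1 \
  --max-count 5

# HPA: declarative equivalent of
#   kubectl autoscale deployment myapp --cpu-percent=50 --min=1 --max=10
kubectl apply -f - <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: myapp
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: myapp
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50
EOF

# Show current vs. target utilization and the replica count.
kubectl get hpa myapp
```

The two autoscalers cooperate: the HPA creates pods, and when those pods cannot fit on existing nodes, the Cluster Autoscaler adds capacity.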
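To see how the HPA turns a utilization target into a replica count, here is a simplified model of its core rule: desired = ceil(current replicas * current utilization / target utilization), clamped to the min/max bounds. It is an approximation only. The real controller also applies a tolerance (10% by default) and stabilization windows, and the function name `hpa_desired_replicas` is hypothetical:

```shell
#!/usr/bin/env bash
# Simplified HPA rule (no tolerance, no stabilization window):
#   desired = ceil(current * current_util / target_util), clamped to [min, max]
hpa_desired_replicas() {
  local current=$1 current_util=$2 target_util=$3 min=$4 max=$5
  # Integer ceiling division: (a + b - 1) / b
  local desired=$(( (current * current_util + target_util - 1) / target_util ))
  (( desired < min )) && desired=$min
  (( desired > max )) && desired=$max
  echo "$desired"
}

# 3 pods averaging 90% CPU against a 50% target: ceil(5.4) = 6
hpa_desired_replicas 3 90 50 1 10   # prints 6
```

With the `--cpu-percent=50` target from the `kubectl autoscale` example, three pods running at 90% CPU would therefore be scaled to six, and any result above `--max=10` is capped at ten.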
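Several of these security practices map to a few commands. The sketch below assumes the cluster was created with a network policy engine enabled (for example `--network-policy azure`), which the `az aks create` command in the setup guide does not request; the policy name, the `app=frontend` label, and the `secure-apps` namespace are hypothetical:

```shell
#!/usr/bin/env bash
set -euo pipefail

RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myAKSCluster"

# Secrets: install the Secrets Store CSI Driver add-on for Azure Key Vault.
az aks enable-addons \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --addons azure-keyvault-secrets-provider

# Network policy: only pods labeled app=frontend may reach myapp on TCP 80.
kubectl apply -f - <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: myapp-allow-frontend
spec:
  podSelector:
    matchLabels:
      app: myapp
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 80
EOF

# Pod Security Admission: reject pods in this namespace that violate
# the "restricted" profile (e.g. privileged or root containers).
kubectl create namespace secure-apps
kubectl label namespace secure-apps pod-security.kubernetes.io/enforce=restricted
```

A separate namespace is used for the `restricted` profile because many off-the-shelf images, including the default nginx image, run as root and would be rejected.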

Integrating AKS with Azure DevOps

- Continuous Integration (CI): Set up CI pipelines in Azure DevOps to automatically build and test your code when changes are made to the repository.

- Continuous Deployment (CD): Use Azure DevOps release pipelines to deploy applications to your AKS cluster automatically after successful builds.

- Helm: Leverage Helm charts for packaging and deploying applications to AKS clusters as part of your DevOps pipeline.
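A pipeline step that builds an image and deploys it with Helm might run a script like the one below. It is a sketch under several assumptions: the agent is already signed in to Azure (for example through an Azure CLI task with a service connection), `myregistry` is a hypothetical Azure Container Registry, and `./charts/myapp` is a hypothetical chart that reads `image.repository` and `image.tag` values (as charts generated by `helm create` do). `BUILD_BUILDID` is the environment variable Azure Pipelines sets for the build ID:

```shell
#!/usr/bin/env bash
set -euo pipefail

ACR_NAME="myregistry"              # hypothetical registry name
RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myAKSCluster"
IMAGE_TAG="${BUILD_BUILDID:-dev}"  # falls back to "dev" outside a pipeline

# CI: build the image in ACR from the Dockerfile in the current directory.
az acr build --registry "$ACR_NAME" --image "myapp:${IMAGE_TAG}" .

# One-time setup, usually done outside the pipeline: let AKS pull from ACR.
# az aks update --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME" --attach-acr "$ACR_NAME"

# CD: fetch cluster credentials and roll out the chart with the new tag.
az aks get-credentials --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME" --overwrite-existing
helm upgrade --install myapp ./charts/myapp \
  --namespace myapp \
  --create-namespace \
  --set image.repository="${ACR_NAME}.azurecr.io/myapp" \
  --set image.tag="${IMAGE_TAG}" \
  --wait
```

`helm upgrade --install` makes the step idempotent: the first run installs the release and later runs upgrade it, and `--wait` fails the step if the rollout does not become ready.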

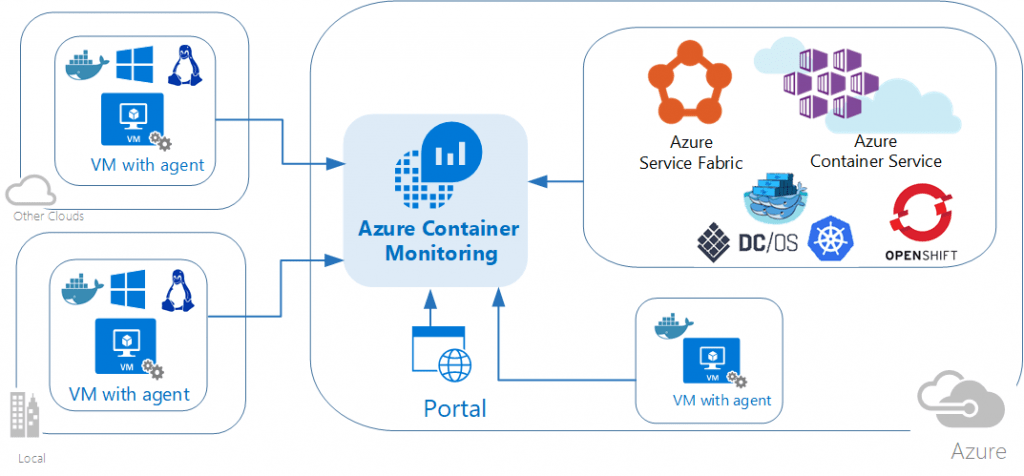

Monitoring and Logging in AKS

Azure Monitor collects and analyzes metrics, logs, and diagnostic data from your AKS cluster and applications, giving you insight into the health and performance of your resources so you can monitor your environment proactively. With AKS sending data to Azure Log Analytics, you can query logs in near real time, detect issues, and identify patterns that could affect application performance, which gives you deep visibility into your applications and makes troubleshooting easier. You can also deploy Prometheus and Grafana in your AKS cluster to track cluster performance and visualize key metrics such as resource usage, pod health, and response times: Prometheus collects time-series data, and Grafana lets you build custom dashboards to visualize and analyze it. Together, these tools help you maintain the reliability, availability, and performance of your applications and catch bottlenecks before they cause downtime.
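Both monitoring paths can be set up from the command line. A sketch assuming the setup-guide cluster and the community `kube-prometheus-stack` Helm chart; the release name `monitoring` is a hypothetical choice, and the Grafana service name below follows from it:

```shell
#!/usr/bin/env bash
# No "set -e": the first command fails harmlessly if monitoring is already on.

RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myAKSCluster"

# Azure Monitor (Container insights): only needed if the cluster was not
# created with --enable-addons monitoring.
az aks enable-addons \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --addons monitoring

# Quick live view of resource usage from the metrics server.
kubectl top nodes
kubectl top pods --all-namespaces

# Self-managed Prometheus and Grafana via the community chart.
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update
helm install monitoring prometheus-community/kube-prometheus-stack \
  --namespace monitoring \
  --create-namespace

# Open Grafana at http://localhost:3000 (blocks until interrupted).
kubectl port-forward --namespace monitoring svc/monitoring-grafana 3000:80
```

Container insights keeps data in Azure with no in-cluster components to maintain beyond the agent, while the Prometheus/Grafana stack runs inside the cluster and gives you full control over dashboards and retention.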

AKS Pricing and Cost Optimization

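- Pricing: In the Free tier, the Kubernetes control plane is provided at no charge, and compute costs come from the virtual machines (VMs) in your cluster. The Standard tier adds a per-cluster hourly fee for a financially backed uptime SLA.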

- Scaling Costs: The cost of your AKS deployment increases with the number of nodes in your cluster, so scaling up or adding more VM instances will directly affect the cost.

- Storage Costs: You will also incur charges for any storage used in your AKS environment, such as persistent volumes (PVs), Azure Managed Disks, and other storage resources.

- Networking Costs: Data transfer between Azure resources, especially across regions, can incur additional costs, so it’s important to optimize the placement of resources and minimize inter-region communication where possible.
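The compute part of the bill reduces to simple arithmetic. A minimal sketch with a hypothetical function name, `estimate_node_cost`; the hourly price is a placeholder to replace with the current rate for your VM size and region, and the estimate deliberately leaves out storage, networking, and any tier fee:

```shell
#!/usr/bin/env bash
# Monthly compute estimate for a node pool: nodes * hourly price * hours.
# Hours default to 730, roughly the number of hours in a month.
estimate_node_cost() {
  local nodes=$1 price_per_hour=$2 hours=${3:-730}
  awk -v n="$nodes" -v p="$price_per_hour" -v h="$hours" \
    'BEGIN { printf "%.2f\n", n * p * h }'
}

# Hypothetical: 3 nodes at 0.10 per hour for a full month.
estimate_node_cost 3 0.10   # prints 219.00
```

Because the formula is linear in the node count, scaling from 3 to 5 nodes at the same placeholder price raises the estimate from 219.00 to 365.00, which is why autoscaling limits matter for cost control.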

Cost Optimization Tips:

- Use Azure Spot VMs (spot node pools) for interruptible, non-critical workloads. They cost significantly less than standard VMs but can be evicted when Azure needs the capacity.

- Enable autoscaling so resources follow demand automatically and you use only what your application's current load requires.

- Monitor and manage resource usage with Azure Cost Management, and set up budgets and alerts to avoid unexpected cost overruns.

- Use reserved instances for predictable workloads to lock in lower pricing for longer-term use.

- Optimize storage by choosing storage tiers and policies that match access frequency and data importance.
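A spot node pool with autoscaling combines the first two tips. A sketch assuming the setup-guide cluster; the pool name `spotpool` and the node counts are hypothetical, and `--spot-max-price -1` means you pay at most the current on-demand price:

```shell
#!/usr/bin/env bash
set -euo pipefail

RESOURCE_GROUP="myResourceGroup"
CLUSTER_NAME="myAKSCluster"

# Spot node pool for interruptible work; evicted VMs are deleted.
az aks nodepool add \
  --resource-group "$RESOURCE_GROUP" \
  --cluster-name "$CLUSTER_NAME" \
  --name spotpool \
  --priority Spot \
  --eviction-policy Delete \
  --spot-max-price -1 \
  --enable-cluster-autoscaler \
  --min-count 1 \
  --max-count 3 \
  --node-count 1

# AKS taints spot nodes, so only pods that tolerate the taint land there.
# Add a toleration like this to the pod spec of workloads that may be evicted:
#   tolerations:
#     - key: kubernetes.azure.com/scalesetpriority
#       operator: Equal
#       value: spot
#       effect: NoSchedule
```

The taint is a safety net: critical workloads without the toleration stay on regular nodes, so an eviction only affects pods that explicitly opted in.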

Common AKS Issues and Troubleshooting

If your pods are not running, first check whether the node pools have enough CPU and memory for your workload; when resources are insufficient, pods stay in the Pending state because the scheduler cannot place them. Next, check for network connectivity problems, since network policies or Azure networking configuration can block traffic between pods or services. For pods that start but fail, use `kubectl describe pod` and `kubectl get events` to see scheduling and startup events, and `kubectl logs` to read the container's error messages and find the root cause. Verify that the Horizontal Pod Autoscaler (HPA) and Cluster Autoscaler are configured correctly so your applications scale with demand, and set pod resource requests and limits appropriately to avoid over-provisioning or under-provisioning. Finally, monitoring and alerting help you spot resource bottlenecks and scaling problems before they affect your system.
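The checks above map to a short sequence of kubectl commands. A sketch in which the pod name is a hypothetical placeholder (copy a real one from `kubectl get pods`) and `myapp` is the Deployment name used earlier in this article:

```shell
#!/usr/bin/env bash
# No "set -e": some commands (e.g. logs --previous) fail when there is nothing to show.
POD="myapp-7d4b9c8f6d-abcde"   # hypothetical pod name

# Which pods are not Running, and on which nodes?
kubectl get pods -o wide

# Scheduling and image-pull problems appear as events (e.g. "Insufficient cpu").
kubectl describe pod "$POD"
kubectl get events --sort-by=.metadata.creationTimestamp

# Application errors: current logs and logs from the previously crashed container.
kubectl logs "$POD"
kubectl logs "$POD" --previous

# Capacity: actual node usage and how much CPU/memory pods have requested.
kubectl top nodes
kubectl describe nodes | grep -A 8 "Allocated resources"

# Connectivity: network policies that might be blocking traffic.
kubectl get networkpolicy --all-namespaces

# Autoscaling: targets, current metrics, and recent scaling decisions.
kubectl get hpa
kubectl describe hpa myapp
```

Working from events to logs to capacity usually narrows the cause quickly: a Pending pod points to scheduling or capacity, while a CrashLoopBackOff points to the application itself.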

Conclusion

Azure Kubernetes Service (AKS) provides a scalable and efficient way to run containerized applications on Kubernetes. It hides much of the complexity of Kubernetes management, so developers and IT teams can focus on building and deploying applications rather than on infrastructure. AKS automates key tasks such as control plane patching, scaling, and high availability, and it integrates with Azure services such as Azure Active Directory, Azure Monitor, and Azure DevOps to provide a unified platform for development, monitoring, and security. Built-in features like role-based access control (RBAC) and network policies help keep applications secure, pay-as-you-go node pricing and demand-based scaling help control costs, and integrated monitoring tools help you track performance, debug issues, and gain insight into application health. Overall, AKS is a strong choice for organizations seeking efficient containerized application management within Azure's cloud ecosystem.