- Introduction to Azure HDInsight

- Key Features of Azure HDInsight

- Supported Apache Frameworks

- Architecture of Azure HDInsight

- Setting Up an HDInsight Cluster

- Data Storage and Processing in HDInsight

- Security and Compliance Features

- Integration with Other Azure Services

- Use Cases of Azure HDInsight

- Performance Optimization Techniques

- Cost and Pricing Model

- Best Practices for Using Azure HDInsight

- Conclusion

Introduction to Azure HDInsight

Azure HDInsight is a fully managed cloud service from Microsoft Azure that processes large volumes of data using open-source Apache frameworks. It is an enterprise-grade analytics service that lets organizations process vast amounts of data, including in real time, with popular big data frameworks such as Apache Hadoop, Apache Spark, Apache Hive, and Apache Kafka. HDInsight lets businesses run complex data processing workloads in the cloud without managing the underlying hardware or software. It simplifies the deployment and management of big data clusters and integrates with other Azure services, making it a practical tool for big data processing and analytics in the cloud.

Key Features of Azure HDInsight

Azure HDInsight offers a wide range of features, making it a powerful solution for big data analytics and processing. These include:

- Fully Managed Service: Azure HDInsight is a fully managed service, which means that Microsoft Azure takes care of cluster management, including provisioning, monitoring, and maintenance. It eliminates the need for users to manually set up and manage infrastructure, allowing them to focus on processing data rather than managing resources.

- Support for Multiple Apache Frameworks: HDInsight supports many popular open-source frameworks, enabling users to process data using the most suitable tools for their workload. These frameworks include Apache Hadoop, Apache Spark, Apache Hive, Apache Kafka, Apache HBase, Apache Storm, and more.

- Scalability: Azure HDInsight is highly scalable, enabling users to scale clusters up or down quickly depending on workload requirements. You can add more nodes or expand storage capacity with minimal effort, allowing the platform to efficiently handle both small and large-scale data processing jobs.

- Integration with Azure Services: HDInsight integrates with other Azure services such as Azure Blob Storage, Azure Data Lake Storage, Azure SQL Database, Azure Cosmos DB, and Power BI. This makes it easy to store data, analyze it, and visualize results within a single cloud ecosystem.

- Security and Compliance: Azure HDInsight offers enterprise-grade security features such as encryption, role-based access control (RBAC), and integration with Azure Active Directory (Azure AD, now Microsoft Entra ID) for user authentication. It also complies with major industry standards, making it suitable for organizations with stringent data security and regulatory requirements.

- Cost Efficiency: Azure HDInsight uses a pay-as-you-go pricing model, so you pay only for the resources you use. Businesses can scale up or down based on demand and reduce costs by deleting clusters that are no longer in use, since a running cluster is billed whether or not it is processing jobs.

Supported Apache Frameworks

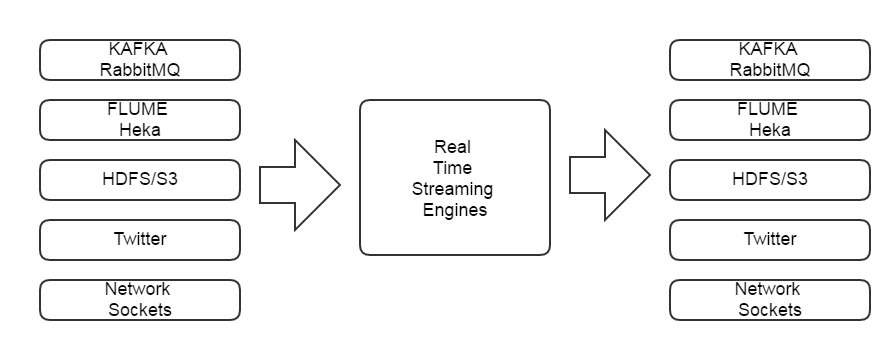

Azure HDInsight supports a broad set of Apache frameworks that cover a range of big data processing tasks, enabling users to build data analytics and machine learning solutions in the cloud. The most commonly used frameworks include:

- Apache Hadoop: A distributed storage and processing framework for batch processing large datasets across many nodes.

- Apache Spark: A fast, general-purpose cluster-computing framework used for real-time data processing, analytics, and machine learning workloads. For many workloads it offers performance improvements over Hadoop.

- Apache Hive: A data warehouse framework built on top of Hadoop that lets users query and manage large datasets through a SQL-like interface.

- Apache Kafka: A distributed event streaming platform widely used for building real-time data pipelines and processing data streams at scale.

- Apache HBase: A NoSQL database running on top of Hadoop, suited to handling massive amounts of unstructured data.

- Apache Storm: A real-time stream processing framework that enables low-latency processing for real-time analytics applications.

Together, these frameworks provide a versatile foundation for big data scenarios ranging from batch processing to real-time data streaming. HDInsight’s integration with Azure services adds data management, scalability, and security for enterprise workloads.

Architecture of Azure HDInsight

The architecture of Azure HDInsight is designed to enable scalable, distributed data processing in the cloud. It consists of several key components:

- Cluster Nodes: An HDInsight cluster consists of different types of nodes, including head nodes (for job coordination), worker nodes (for data processing), and ZooKeeper nodes (for coordinating distributed services). Each node type has a specific role in the data processing workflow.

- Data Storage: HDInsight data is typically stored in Azure Blob Storage or Azure Data Lake Storage. These storage solutions offer scalable, cost-effective, and secure options for storing both structured and unstructured data.

- Compute Resources: HDInsight clusters run on Azure virtual machines (VMs) and can be deployed into an Azure Virtual Network. VM sizes are chosen to meet the computing requirements of the workload, and users can pick from different VM types depending on whether they need more memory, CPU power, or I/O performance.

- Apache Frameworks: HDInsight provides a pre-configured environment for running the various Apache frameworks. These frameworks work in a distributed environment across the cluster, enabling parallel data processing.

- Azure Management Layer: HDInsight is managed through the Azure Portal, Azure CLI, or Azure PowerShell, which allows users to deploy, configure, and manage clusters. The platform integrates with Azure Resource Manager (ARM) to provide centralized management.
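The node roles listed above can be sketched as a small data model. This is an illustration only: the node counts, core counts, and memory figures are hypothetical assumptions, and `NodeGroup` and `cluster_totals` are names introduced here, not part of any Azure SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """One group of identical nodes in a cluster (all values are illustrative)."""
    role: str                 # "head", "worker", or "zookeeper"
    count: int                # number of nodes in this group
    cores_per_node: int       # vCPUs per node
    memory_gb_per_node: int   # RAM per node in GB


def cluster_totals(groups):
    """Sum nodes, cores, and memory across all node groups."""
    return {
        "nodes": sum(g.count for g in groups),
        "cores": sum(g.count * g.cores_per_node for g in groups),
        "memory_gb": sum(g.count * g.memory_gb_per_node for g in groups),
    }


# Hypothetical layout: sizes and counts are assumptions, not Azure defaults.
example_cluster = [
    NodeGroup(role="head", count=2, cores_per_node=4, memory_gb_per_node=28),
    NodeGroup(role="worker", count=4, cores_per_node=8, memory_gb_per_node=56),
    NodeGroup(role="zookeeper", count=3, cores_per_node=2, memory_gb_per_node=7),
]
totals = cluster_totals(example_cluster)
```

Modelling the cluster this way makes it easy to compare candidate layouts before provisioning, since only the worker group contributes processing capacity while head and ZooKeeper nodes are coordination overhead.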

Setting Up an HDInsight Cluster

Setting up an HDInsight cluster is a straightforward process that can be done through the Azure portal. First, create a cluster by navigating to the HDInsight service and selecting “Create a new cluster.” During this step, you specify the type of cluster you want, such as Hadoop, Spark, or Hive, and configure essential cluster details, including the number of nodes and the virtual machine (VM) sizes. Next, configure storage by selecting an Azure storage account, such as Blob Storage or Data Lake Storage, which the cluster will use to read and write data. For security, you can choose authentication options such as Azure Active Directory or SSH keys, and configure encryption and compliance-related settings to protect your data. After configuring these parameters, you can deploy the cluster and start running jobs. The Azure portal offers built-in monitoring tools to track cluster performance and resource utilization, helping you tune the workload. With automated scaling options and integration with other Azure services, you can also adjust resources as needed to accommodate fluctuating workloads.
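The same choices (cluster type, node count, storage account) can also be scripted instead of clicked through. The sketch below only assembles an argument list for the Azure CLI command `az hdinsight create`; it does not run anything. The helper name `build_create_command` and all example values are hypothetical, and the flags shown should be checked against `az hdinsight create --help` for your CLI version.

```python
def build_create_command(name, resource_group, cluster_type,
                         storage_account, worker_count, http_password):
    """Assemble an `az hdinsight create` argument list (not executed here)."""
    if worker_count < 1:
        raise ValueError("worker_count must be at least 1")
    return [
        "az", "hdinsight", "create",
        "--name", name,                            # cluster name
        "--resource-group", resource_group,        # existing resource group
        "--type", cluster_type,                    # e.g. "hadoop" or "spark"
        "--storage-account", storage_account,      # storage used by the cluster
        "--workernode-count", str(worker_count),   # number of worker nodes
        "--http-password", http_password,          # cluster login password
    ]


# Hypothetical values for illustration only.
command = build_create_command(
    name="demo-cluster",
    resource_group="demo-rg",
    cluster_type="spark",
    storage_account="demostorage",
    worker_count=4,
    http_password="<read-from-a-secret-store>",
)
```

Building the command as a list rather than a single string keeps each value a separate argument, which avoids shell-quoting problems if the list is later passed to `subprocess.run`.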

Data Storage and Processing in HDInsight

Azure HDInsight offers two main options for storing data:

- Azure Blob Storage: A massively scalable object storage service commonly used to store unstructured data like logs, media files, and backups. Blob storage is cost-effective and integrates well with HDInsight for data processing.

- Azure Data Lake Storage: A high-performance data lake solution built for big data analytics. Data Lake Storage is optimized for analytical workloads and can handle structured and unstructured data, making it ideal for use with HDInsight.

Once data is stored in these services, you can process it using Apache frameworks such as Hadoop and Spark. For example, Apache Spark can process data stored in Azure Blob Storage and produce results, which can be saved back to storage or pushed to downstream applications.
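Frameworks on the cluster address data in these stores by URI. As a minimal sketch, the helper below builds the `wasbs://` form used for Azure Blob Storage and the `abfss://` form used for Azure Data Lake Storage Gen2. The function name `storage_uri` and the account, container, and path values are hypothetical.

```python
# Scheme and endpoint suffix for each storage service.
_ENDPOINTS = {
    "blob": ("wasbs", "blob.core.windows.net"),
    "adls": ("abfss", "dfs.core.windows.net"),
}


def storage_uri(kind, container, account, path=""):
    """Build a storage URI of the form scheme://container@account.suffix/path."""
    if kind not in _ENDPOINTS:
        raise ValueError(f"unknown storage kind: {kind!r}")
    scheme, suffix = _ENDPOINTS[kind]
    return f"{scheme}://{container}@{account}.{suffix}/{path.lstrip('/')}"


# Hypothetical container, account, and file names.
logs_uri = storage_uri("blob", "logs", "demostorage", "2024/app.csv")
lake_uri = storage_uri("adls", "raw", "demolake", "/events/")

# On a Spark cluster, a URI like this could then be passed to a reader,
# for example spark.read.csv(logs_uri); that step needs a Spark session
# and is not run here.
```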

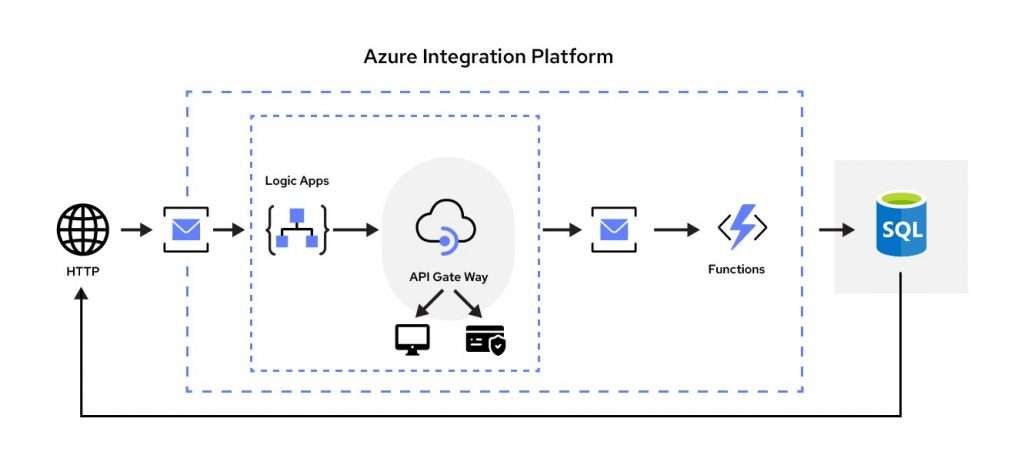

Data processed in HDInsight can also flow to and from other Azure services:

- Azure Machine Learning: You can use Azure HDInsight with Azure Machine Learning to build and deploy machine learning models at scale.

- Azure Data Factory: Azure Data Factory can orchestrate data pipelines and automate data movement between HDInsight and other services such as Azure SQL Database or Azure Cosmos DB.

- Power BI: You can integrate HDInsight with Power BI to visualize big data results and create actionable business insights.

- Azure Event Hubs and Azure Stream Analytics: Integrating HDInsight with streaming services like Event Hubs allows for real-time data processing with frameworks such as Apache Kafka and Apache Spark.

The following techniques help HDInsight clusters process data efficiently:

- Cluster Sizing: Choose the correct number of nodes and VM sizes to meet your workload requirements. You can resize clusters dynamically as your needs change.

- Data Partitioning: For frameworks like Hadoop and Spark, partition your data efficiently to improve parallel processing performance.

- Resource Scaling: Use auto-scaling features to scale your cluster resources dynamically based on workload demand.

- Caching: Use in-memory caching with Apache Spark to speed up data processing for repeated queries or transformations.
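To see why partitioning matters for parallelism, the sketch below assigns record keys to partitions with a simple hash and measures how evenly the records are spread. It is a plain-Python illustration of the idea, not the partitioner that Hadoop or Spark actually uses, and the function names are introduced here for the example.

```python
import zlib
from collections import Counter


def partition_for(key, num_partitions):
    """Map a string key to a partition index using a stable CRC32 hash."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_sizes(keys, num_partitions):
    """Count how many records land in each partition."""
    counts = Counter(partition_for(k, num_partitions) for k in keys)
    return [counts.get(i, 0) for i in range(num_partitions)]


def skew_ratio(sizes):
    """Largest partition divided by the mean size; 1.0 means perfectly even."""
    mean = sum(sizes) / len(sizes)
    return max(sizes) / mean if mean else 0.0


# A single hot key sends every record to one partition, so one worker
# does all the work while the others sit idle.
hot_key_sizes = partition_sizes(["customer-1"] * 8, num_partitions=4)
hot_key_skew = skew_ratio(hot_key_sizes)
```

A skew ratio well above 1.0 suggests that a few partitions hold most of the data, which is usually the point at which choosing a different partition key pays off.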

The cost of storing and processing data in HDInsight depends on:

- Cluster Size and Node Type: Costs depend on the number of nodes, VM sizes, and type of workloads.

- Storage: Charges are applied for data storage in Azure Blob Storage or Azure Data Lake Storage.

- Data Transfer: There may be additional charges for data transfer between Azure regions or between HDInsight clusters and other Azure services.

- Scaling: HDInsight allows automatic scaling based on demand, meaning that you can scale up or down depending on your processing needs, which can help optimize costs by only using resources when necessary.
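The cost factors above combine into simple arithmetic: compute cost is nodes × hourly rate × hours, plus storage. The sketch below works through that calculation. The rates are made-up placeholders, not Azure prices, and `estimate_monthly_cost` is a name introduced for this example; data transfer charges are left out for brevity.

```python
def estimate_monthly_cost(node_count, hourly_rate_per_node, hours_per_day,
                          days=30, storage_gb=0.0, storage_rate_per_gb=0.0):
    """Estimate a monthly bill from compute hours plus stored data.

    All rates are caller-supplied placeholders; look up real prices
    on the Azure pricing page for your region and VM size.
    """
    compute = node_count * hourly_rate_per_node * hours_per_day * days
    storage = storage_gb * storage_rate_per_gb
    return round(compute + storage, 2)


# Hypothetical rate of 0.50 per node-hour for a 6-node cluster.
always_on = estimate_monthly_cost(6, 0.50, hours_per_day=24)
business_hours = estimate_monthly_cost(6, 0.50, hours_per_day=8)
with_storage = estimate_monthly_cost(6, 0.50, hours_per_day=8,
                                     storage_gb=1000, storage_rate_per_gb=0.02)
```

With these placeholder numbers, running the cluster 8 hours a day instead of around the clock cuts the compute portion to a third, which is the effect the scaling bullet describes.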

Security and Compliance Features

Azure HDInsight provides security features to protect your data and applications and to support compliance. Encryption covers data both in transit and at rest. Data at rest can be encrypted using Azure Storage Service Encryption (SSE) or with customer-managed keys, providing flexibility in how data is secured. For access control, HDInsight uses Role-Based Access Control (RBAC) to define access to resources within the cluster, allowing you to assign specific permissions to users and groups and so manage who can access and control the cluster. HDInsight also integrates with Azure Active Directory (Azure AD), enabling centralized identity and access management for user authentication and authorization. On the compliance side, Azure HDInsight meets various regulatory requirements, including GDPR, HIPAA, SOC 2, and ISO 27001, which helps ensure that your data processing and storage meet legal and compliance standards. With these measures in place, businesses can process and store sensitive data with protection against unauthorized access. HDInsight’s security features are also updated over time to align with current security practices.

Integration with Other Azure Services

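Azure HDInsight integrates with a variety of other Azure services to streamline data workflows and extend its capabilities. Key integrations include Azure Machine Learning for building and deploying models, Azure Data Factory for orchestrating data pipelines, Power BI for visualizing results, and Azure Event Hubs and Azure Stream Analytics for real-time streaming.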

Use Cases of Azure HDInsight

Azure HDInsight is versatile and can be used for a wide range of big data processing and analytics tasks. For data warehousing, HDInsight uses Apache Hive to store and query large datasets at scale, providing a reliable foundation for business intelligence. Real-time analytics is another key application: combining Apache Kafka and Apache Spark enables efficient real-time data processing for tasks such as fraud detection and predictive analytics. HDInsight can also process vast amounts of log data, making it an effective tool for log analytics to monitor and troubleshoot application performance. It supports running machine learning models at scale with Apache Spark and other frameworks, allowing businesses to build and deploy machine learning solutions on large datasets. HDInsight is also well suited to building complex ETL (Extract, Transform, Load) pipelines that process and transform large datasets efficiently. Integration with other Azure services such as Azure Machine Learning and Azure Data Lake Storage extends these analytics capabilities. With its scalability and flexibility, HDInsight lets organizations manage and analyze their data more effectively while keeping costs under control.

Performance Optimization Techniques

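To keep your HDInsight clusters performing efficiently, size the cluster for the workload, partition data so it can be processed in parallel, use auto-scaling to match resources to demand, and cache frequently reused data in memory with Apache Spark.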

Cost and Pricing Model

Azure HDInsight follows a pay-as-you-go pricing model, which means you pay only for the resources you use. Pricing is based on cluster size and node type, storage, data transfer, and how the cluster is scaled.

Best Practices for Using Azure HDInsight

To get the best performance and cost-effectiveness from your Azure HDInsight deployment, follow a few key best practices. First, use Azure’s monitoring tools to track the performance of your HDInsight clusters, so you can optimize resource utilization and make informed scaling decisions. Automating data processing with tools like Azure Data Factory can streamline data pipelines and workflows, improving operational efficiency and reducing manual intervention. For storage, select the most cost-effective option for your processing needs, whether Azure Blob Storage or Azure Data Lake Storage, so that you are not overspending on unnecessary storage resources. Secure your data with encryption, role-based access, and integration with Azure Active Directory to manage user authentication and authorization. Lastly, take advantage of HDInsight’s scalability options and regularly evaluate the cost-to-performance ratio to keep the cloud infrastructure efficient. Following these practices improves the security, performance, and overall cost-effectiveness of an Azure HDInsight deployment.

Conclusion

Azure HDInsight provides a fully managed cloud service for deploying and managing open-source frameworks like Hadoop, Spark, and Hive. It supports a variety of data workloads, from batch processing to real-time analytics. Built-in integration with other Azure services such as Azure Data Lake Storage and Azure Machine Learning simplifies workflows and extends data processing capabilities. HDInsight also offers automatic scaling, so businesses can match resources to demand. Its security features, including encryption and identity management, support compliance with industry standards and safeguard sensitive data.