- Introduction to Containerization

- How Containerization Works

- Benefits of Using Containers

- Docker and Kubernetes for Containerization

- Container Orchestration Explained

- Use Cases of Containerization in Cloud

- Conclusion

Introduction to Containerization

Containerization is a lightweight and efficient method of packaging and deploying applications and their dependencies in a portable, consistent, and isolated environment. It allows applications to run seamlessly across different computing environments, whether in development, testing, or production. The key idea behind containerization is to package an application and all of its dependencies, including libraries, configurations, and system tools, into a single, self-contained unit known as a container. Containers are often compared to virtual machines (VMs), but they are considerably lighter-weight. Each VM runs a full guest operating system. Containers instead share the host system’s operating system kernel while remaining isolated from one another, which makes them smaller and faster to start. The popularity of containerization has surged in recent years, primarily because of the benefits it brings to DevOps, continuous integration and continuous delivery (CI/CD), and cloud-native applications.

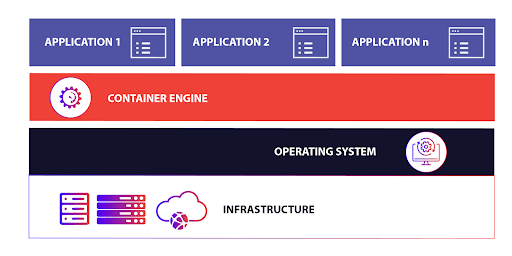

How Containerization Works

Containerization works by encapsulating an application and its environment in a container, which is a portable, standalone executable package. Containers are based on operating system-level virtualization, which means they share the host system’s operating system kernel but run in their own isolated user space. This isolation lets containers run independently without interfering with each other, even when they run on the same host.

At the core of containerization is the concept of a container image, which is a read-only template that includes the application, its dependencies, libraries, and configuration files. The image acts as the blueprint for creating containers. Docker is one of the most widely used containerization platforms. The Docker engine is responsible for creating, running, and managing containers. It provides the necessary tooling and API for users to interact with containers, from building images to running them on various platforms.
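The relationship between a read-only image and the containers created from it can be sketched in a few lines of Python. This is a toy model, not how Docker stores files. The layer dictionaries and the `run_container` helper are hypothetical, and `collections.ChainMap` stands in for the union filesystem that real engines use to stack a private writable layer on top of shared image layers.

```python
from collections import ChainMap

# Hypothetical image layers, listed bottom to top. Each maps file path -> content
# and is treated as read-only; real engines use overlay/union filesystems instead.
base_layer = {"/etc/os-release": "minimal Linux userland", "/usr/bin/python3": "<binary>"}
deps_layer = {"/app/requirements.txt": "flask"}
app_layer = {"/app/config.txt": "debug=false"}
image = (base_layer, deps_layer, app_layer)


def run_container(image_layers):
    """Return a container's view of the filesystem: a fresh, private writable
    layer stacked on top of the shared read-only image layers."""
    writable_layer = {}
    # ChainMap looks keys up left to right (top layer first) and sends every
    # write to the first mapping only, i.e. the container's writable layer.
    return ChainMap(writable_layer, *reversed(image_layers))


c1 = run_container(image)
c2 = run_container(image)

c1["/app/config.txt"] = "debug=true"  # copy-on-write: only c1's top layer changes
print(c1["/app/config.txt"])          # debug=true
print(c2["/app/config.txt"])          # debug=false
print(app_layer["/app/config.txt"])   # debug=false (the image itself is untouched)
```

Because every container starts with its own empty top layer, many containers can share a single copy of the image's layers.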

The container runtime is the software that executes containers. Docker uses a container runtime (containerd) to launch and manage containers. Other runtimes, such as CRI-O and the now-discontinued rkt, have also been used with container orchestration systems. The container host is the physical or virtual machine on which containers run. It has the necessary container runtime installed and may run multiple containers simultaneously.

Containers communicate with each other through networking, which can be set up in several ways, such as bridge networks, host networks, or overlay networks. Networking allows containers to share data, interact with each other, and access external resources.

Containers rely on volumes or bind mounts to store persistent data. Containers themselves are ephemeral: anything written to a container's own filesystem is lost when the container is removed. Yet many workloads, especially databases and file storage, need data that outlives any single container.
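The lifecycle difference between a container's own filesystem and a volume can be modeled in a few lines. This is a toy sketch, not Docker's implementation. The `Container` class, the `db-data` volume name, and the mount path are hypothetical. With the Docker CLI, a comparable setup would mount a named volume using an option such as `-v db-data:/var/lib/data`.

```python
# Hypothetical host-managed named volumes: they exist independently of containers.
volumes = {"db-data": {}}


class Container:
    """Toy container with an ephemeral writable layer and one mounted volume."""

    def __init__(self, volume_name: str, mount_point: str = "/var/lib/data"):
        self.writable_layer = {}            # discarded along with the container
        self.mount_point = mount_point
        self.volume = volumes[volume_name]  # shared reference to the host's volume

    def _in_volume(self, path: str) -> bool:
        return path.startswith(self.mount_point + "/")

    def write(self, path: str, data: str) -> None:
        # Paths under the mount point go to the volume; all others stay local.
        target = self.volume if self._in_volume(path) else self.writable_layer
        target[path] = data

    def read(self, path: str):
        source = self.volume if self._in_volume(path) else self.writable_layer
        return source.get(path)


first = Container("db-data")
first.write("/var/lib/data/users.db", "alice,bob")
first.write("/tmp/cache", "scratch")
del first  # "remove" the container; only its writable layer goes away

second = Container("db-data")
print(second.read("/var/lib/data/users.db"))  # alice,bob
print(second.read("/tmp/cache"))              # None
```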


Benefits of Using Containers

Containers offer several significant advantages over traditional virtual machines and other deployment methods. These benefits make containers particularly useful in modern software development, especially in DevOps and cloud-native environments:

Portability:

One of the most significant advantages of containerization is portability. A containerized application includes everything it needs to run, making it easy to move across different environments. Whether you’re running your application on a developer’s local machine, in a testing environment, or on a public cloud platform, the container behaves the same way, provided each host offers a compatible kernel and CPU architecture.

Efficiency:

Containers are more lightweight than virtual machines because they share the underlying host OS kernel rather than requiring a separate OS for each instance. This makes them faster to deploy, start, and scale. Containers also use fewer resources compared to VMs, which results in improved efficiency and cost savings.

Consistency:

Containers ensure that an application and its dependencies are packaged together in a consistent and reproducible manner. This eliminates issues such as “it works on my machine” by providing a consistent environment across all stages of development, testing, and production.
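One mechanism that supports this reproducibility is content addressing. Image content is identified by a SHA-256 digest written as `sha256:<hex>`, and an image can be pulled by digest (for example `name@sha256:<hex>`), so every environment that uses that reference receives exactly the same bytes. The sketch below shows the property with Python's `hashlib` on a hypothetical image configuration. Real engines and registries compute digests over the exact stored bytes of manifests, configs, and layers, not over a re-serialized dictionary like this one.

```python
import hashlib
import json


def content_digest(blob: bytes) -> str:
    """Content address in the 'sha256:<hex>' form used for image references."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


# Hypothetical image configuration, serialized deterministically to bytes.
config = {"entrypoint": ["python3", "/app/main.py"], "env": ["APP_ENV=production"]}
blob = json.dumps(config, sort_keys=True, separators=(",", ":")).encode()

built_on_laptop = content_digest(blob)
pulled_in_ci = content_digest(blob)   # same bytes in another environment
tampered = content_digest(blob.replace(b"production", b"staging"))

print(built_on_laptop == pulled_in_ci)  # True: identical content, identical identity
print(built_on_laptop == tampered)      # False: any change yields a new digest
```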

Isolation:

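Containers provide process and file system isolation, meaning that applications running inside containers do not interfere with each other. This isolation also improves security by limiting how far a problem in one container can affect others. Because all containers on a host share its kernel, however, this boundary is generally weaker than the one between virtual machines.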

Scalability:

Containers are highly scalable, allowing for rapid scaling of applications. They can be deployed, stopped, or restarted quickly, enabling dynamic scaling to handle varying loads. This is particularly useful for cloud-native applications that need to scale in response to traffic spikes.

Simplified DevOps and CI/CD:

Containers are an ideal fit for DevOps workflows, as they can be integrated into continuous integration and continuous delivery (CI/CD) pipelines. They allow for automated testing, integration, and deployment of applications in a consistent environment, accelerating software development cycles.

Cost-Effective:

Since containers are lightweight, they require fewer resources than traditional virtual machines, which results in cost savings for organizations. Multiple containers can run on the same host, optimizing resource utilization and reducing infrastructure costs.

Docker and Kubernetes for Containerization

Docker and Kubernetes are two of the most widely used tools in containerization. While Docker is primarily used to create and run containers, Kubernetes is an orchestration platform that helps manage, scale, and automate the deployment of containers in production environments.

Docker

Docker is the most popular containerization platform. It enables developers to create container images, run containers, and manage their lifecycle. Docker simplifies the process of building, testing, and deploying applications by providing a consistent environment for development and production.

- Docker Engine: The Docker Engine is the core component of Docker. It includes the Docker daemon (which manages containers) and the Docker CLI (which allows users to interact with Docker from the command line).

- Docker Images: A Docker image is a lightweight, standalone package that contains everything needed to run an application. Images can be shared and versioned using Docker Hub or private registries.

- Docker Containers: A container is a running instance of a Docker image. It is a lightweight, isolated environment where the application runs.

- Docker Compose: Docker Compose is a tool for defining and running multi-container applications. It allows users to define multiple containers and their interactions using a simple YAML file, making it easy to deploy complex applications.
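Compose also uses these relationships to decide startup order: a service listed under another service's `depends_on` is started first. That ordering logic can be sketched as a topological sort with Python's standard `graphlib` module. The service names are hypothetical, and the sketch models only the ordering, not how Compose itself is implemented.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical application: each service maps to the services it depends on,
# mirroring the depends_on entries one might write in a Compose file.
services = {
    "web": {"api"},
    "api": {"db", "cache"},
    "db": set(),
    "cache": set(),
}


def start_order(dependencies):
    """Return an order in which every service starts after its dependencies."""
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as err:
        # err.args[1] holds the detected cycle.
        raise ValueError(f"circular depends_on chain: {err.args[1]}") from err


order = start_order(services)
print(order)  # db and cache first (in either order), then api, then web
```

By default, Compose only waits for a dependency's container to start, not for the application inside it to be ready. The long-form `depends_on` syntax with `condition: service_healthy` waits for the dependency's health check to pass instead.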

Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. While Docker helps create and run containers, Kubernetes takes container management to the next level by providing features like automated scaling, self-healing, load balancing, and more. Its key building blocks are:

- Pod: A pod is the basic unit in Kubernetes, which can contain one or more containers. Containers within a pod share the same network namespace, which allows them to communicate with each other over localhost.

- Cluster: A Kubernetes cluster is a set of nodes that run containerized applications. A cluster includes a control plane (traditionally called the master node), which manages the overall cluster, and worker nodes, which run the application workloads.

- Deployment: A deployment defines the desired state of an application, including the number of replicas and other configurations. Kubernetes continuously works to keep the application in that desired state, for example by creating new pods to replace ones that fail or are deleted.

- Service: A service is a logical abstraction that defines how to access a set of pods. It provides load balancing and allows for communication between different parts of an application.

- Horizontal Pod Autoscaling (HPA): HPA is a feature of Kubernetes that automatically adjusts the number of pods based on resource utilization or custom metrics. This lets the application scale to meet traffic demands.

Together, Docker and Kubernetes form a powerful combination that simplifies the process of managing and scaling containerized applications in production environments.

Container Orchestration Explained

Container orchestration refers to the process of managing the lifecycle of containers, including their deployment, scaling, networking, and monitoring. As organizations move to containerized applications, managing a large number of containers manually becomes impractical. Container orchestration platforms like Kubernetes, Docker Swarm, and Apache Mesos automate these tasks, ensuring that containers are deployed efficiently and scaled as needed.

Key features of container orchestration include:

- Automated scaling: Orchestrators automatically scale applications up or down based on resource utilization, balancing performance against cost.

- Load balancing: Orchestration platforms distribute incoming traffic across containers, preventing any single container from being overwhelmed.

- Self-healing: Orchestrators monitor container health and automatically restart or replace containers that fail, improving availability and reliability.

- Service discovery: Containers often need to communicate with each other. Orchestration tools manage service discovery, ensuring that containers can locate and interact with other services in the system.

- Rolling updates: Orchestration platforms can perform rolling updates, deploying new versions of applications without downtime. This supports continuous delivery and minimizes disruptions.

By automating the management of containerized applications, container orchestration reduces operational complexity and improves the reliability of applications in production environments.
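Automated scaling can be made concrete with the proportional rule that the Kubernetes documentation gives for the Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default) of 1.0. The sketch below implements only that rule. The `min_replicas` and `max_replicas` defaults are hypothetical values, and the real controller also accounts for pod readiness, missing metrics, and scale-down stabilization.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule modeled on the documented HPA formula:
    desired = ceil(current_replicas * current_metric / target_metric).
    Simplified: ignores pod readiness, missing metrics, and stabilization windows."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: leave replicas alone
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler (hypothetical defaults here).
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # 6  (scale out)
print(desired_replicas(4, current_metric=20, target_metric=60))   # 2  (scale in)
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4  (within tolerance)
print(desired_replicas(8, current_metric=120, target_metric=60))  # 10 (capped at max)
```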
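The desired-state behavior behind Kubernetes Deployments and self-healing follows a control-loop pattern: observe the current state, compare it with the declared spec, and act on the difference. Here is a minimal sketch of that pattern, assuming hypothetical `reconcile` and `control_loop` helpers and a dictionary that maps pod names to a health flag. Real Kubernetes controllers watch the API server and delegate replica management to ReplicaSets, none of which is modeled here.

```python
import itertools

_new_ids = itertools.count(1)  # hypothetical naming scheme for replacement pods


def reconcile(desired: int, pods: dict) -> tuple:
    """Compare observed pods (name -> healthy?) with the desired replica count
    and return (number of pods to create, names of pods to delete)."""
    healthy = [name for name, ok in pods.items() if ok]
    failed = [name for name, ok in pods.items() if not ok]
    to_create = max(0, desired - len(healthy))
    to_delete = failed + healthy[desired:]  # replace failures, trim any surplus
    return to_create, to_delete


def control_loop(desired: int, pods: dict, max_passes: int = 5) -> dict:
    """Repeat observe -> diff -> act until the observed state matches the spec."""
    for _ in range(max_passes):
        to_create, to_delete = reconcile(desired, pods)
        if to_create == 0 and not to_delete:
            break  # converged: nothing left to do
        for name in to_delete:
            del pods[name]
        for _ in range(to_create):
            pods[f"web-new-{next(_new_ids)}"] = True
    return pods


observed = {"web-1": True, "web-2": False, "web-3": True}  # web-2 has crashed
print(reconcile(3, observed))     # (1, ['web-2'])
print(control_loop(3, observed))  # {'web-1': True, 'web-3': True, 'web-new-1': True}
```

Declaring the desired state, rather than scripting individual restarts, is what lets the same loop handle crashes, manual deletions, and replica-count changes alike.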
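Service discovery and load balancing can be illustrated together. A Kubernetes Service selects the pods whose labels match its selector and spreads requests across them. The sketch assumes hypothetical pod records and IP addresses and uses simple round-robin. In practice the spreading is done by kube-proxy or another data plane, and kube-proxy's iptables mode, for example, picks a backend at random rather than strictly in turn.

```python
import itertools

# Hypothetical pods as a Service might see them: name, pod IP, and labels.
pods = [
    {"name": "api-1", "ip": "10.0.0.11", "labels": {"app": "api", "tier": "backend"}},
    {"name": "api-2", "ip": "10.0.0.12", "labels": {"app": "api", "tier": "backend"}},
    {"name": "web-1", "ip": "10.0.0.21", "labels": {"app": "web"}},
]


def select_endpoints(selector: dict, pods: list) -> list:
    """A pod matches when every key/value pair of the selector is in its labels."""
    return [p["ip"] for p in pods
            if all(p["labels"].get(key) == value for key, value in selector.items())]


endpoints = select_endpoints({"app": "api"}, pods)  # service discovery step
balancer = itertools.cycle(endpoints)               # round-robin load balancing step
print([next(balancer) for _ in range(4)])
# ['10.0.0.11', '10.0.0.12', '10.0.0.11', '10.0.0.12']
```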


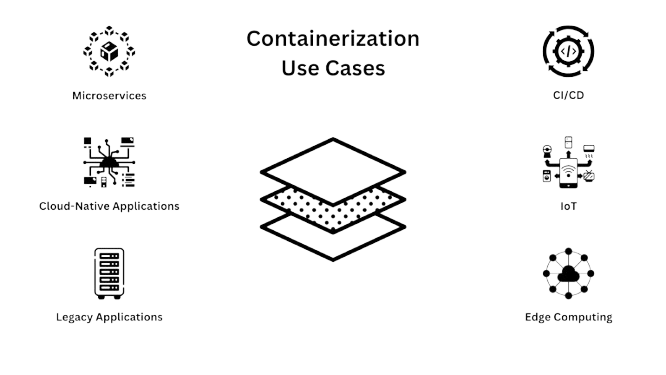

Use Cases of Containerization in Cloud

Containerization has become a critical component in the development and deployment of cloud-native applications. Cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer managed container services, making it easier for organizations to adopt containers in their cloud environments.

Containers are ideal for deploying microservices, where each service is packaged and deployed in its own container. This allows teams to independently develop, deploy, and scale individual components of an application. Containers also enable automated CI/CD pipelines by keeping the application environment consistent across development, testing, and production, which eliminates environment-specific issues and speeds up delivery. Finally, their portability makes it easy to deploy applications across different cloud and on-premises environments, which is especially useful for hybrid and multi-cloud architectures.

Containers play a crucial role in DevOps by enabling consistent, automated deployment processes. Infrastructure as Code (IaC) tools like Terraform can be used to provision containerized applications, and the infrastructure they run on, across cloud environments. Containers can also be used to run serverless applications in the cloud, allowing for event-driven architectures and scaling based on demand without worrying about server management. In addition, containers are used to deploy data-intensive applications and AI models in the cloud. By packaging machine learning models and data processing pipelines in containers, organizations can more easily scale and manage big data workloads.

Conclusion

In conclusion, containerization offers significant advantages in terms of portability, scalability, and efficiency, making it an essential tool for modern software development. Docker and Kubernetes, as the leading technologies for containerization and orchestration, provide robust solutions for managing applications in dynamic cloud environments. With its ability to streamline application deployment and reduce operational overhead, containerization is poised to play a key role in the future of cloud computing and DevOps. The flexibility it offers enables faster development cycles and more reliable deployment processes. Additionally, containerization supports microservices architecture, allowing for more modular, maintainable, and scalable applications. As cloud environments continue to evolve, containerization is expected to remain a cornerstone of infrastructure management and application delivery.