40+ [REAL-TIME] Apache Ambari Interview Questions and Answers

Last updated on 17th Nov 2021

Apache Ambari serves as a robust and user-friendly platform for managing, monitoring, and provisioning Hadoop clusters. Developed as an open-source project under the Apache Software Foundation, Ambari simplifies the complexities associated with Hadoop ecosystem deployment and administration. Its primary objective is to provide a centralized management interface, enabling administrators to configure, monitor, and maintain the diverse components of a Hadoop ecosystem seamlessly. Ambari also plays a key role in version management, supporting the implementation of configurations through “blueprints” that define cluster architecture. This blueprint-based approach ensures consistency in cluster deployments across different environments, aiding in scalability and reproducibility.

1. Describe Apache Ambari.

Ans:

Apache Ambari serves as an open-source management and monitoring tool specifically crafted to streamline the complexities associated with deploying, configuring, and overseeing Apache Hadoop clusters. Its primary objective is to provide system administrators with a user-friendly web-based interface, empowering them to efficiently manage various Hadoop-related services and components within a cluster environment.

2. Which operating systems is Apache Ambari compatible with?

Ans:

Ambari runs on several popular Linux distributions, including CentOS, Red Hat Enterprise Linux (RHEL), SUSE Linux Enterprise Server (SLES), and Ubuntu. The exact set of supported versions depends on the Ambari release, so it can be used in corporate environments with differing operating system preferences.

3. List Apache Ambari’s benefits.

Ans:

- Streamlined Hadoop Cluster Management

- Centralized Configuration and Monitoring

- Scalability and Extensibility

- Automated Software Installation and Updates

4. Which tasks does Ambari primarily assist the system administrators with?

Ans:

Cluster Provisioning: Simplifying the process of setting up Hadoop clusters, reducing manual efforts and potential errors.

Configuration Management: Centralizing configuration settings for Hadoop services, enhancing consistency and ease of maintenance.

Service Monitoring: Providing real-time monitoring capabilities, allowing administrators to promptly identify and address issues.

Troubleshooting: Offering troubleshooting tools and insights, improving the overall stability and dependability of the Hadoop system.

5. Describe the Apache Ambari architecture.

Ans:

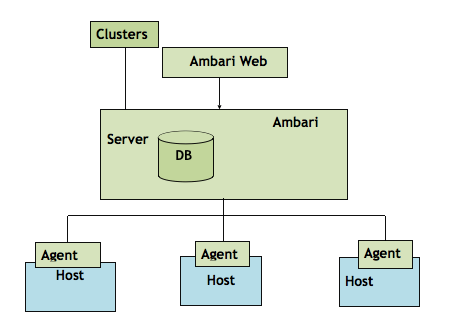

The architecture of Apache Ambari revolves around a server-agent model. The server communicates with agents installed on cluster nodes, facilitating the execution of tasks and management of configurations. The web-based user interface complements this architecture, offering a graphical representation of the cluster’s health and status for enhanced visibility.

6. What is the repository?

Ans:

- Within the Ambari context, the repository denotes a curated collection of software packages and dependencies essential for deploying and managing Hadoop services.

- This repository proves integral during installation and upgrade processes, ensuring the availability of the required components.

7. Which features are new in the most recent version?

Ans:

New versions of Ambari often introduce features such as enhanced UI/UX, support for additional services, heightened security features, and bug fixes. The specifics can be gleaned from the release notes accompanying each version.

8. What is yum?

Ans:

- ‘yum’ serves as a package management tool prevalent in Linux environments, particularly in Red Hat and CentOS.

- Its utility lies in simplifying tasks related to installing, updating, and managing software packages.

9. What is the number of levels that Apache Ambari supports for Hadoop components?

Ans:

Ambari’s support for multiple levels in organizing Hadoop components provides a hierarchical view of the cluster. This feature assists administrators in efficiently organizing services and components, contributing to streamlined management and monitoring. The precise number of levels can depend on the version and specific cluster configurations.

10. Which kinds of Ambari repositories are there?

Ans:

Ambari supports different types of repositories, including public repositories provided by vendors (e.g., Hortonworks, Cloudera) and local repositories. Public repositories offer pre-packaged versions of Hadoop components, while local repositories allow organizations to host their own packages, ensuring control over the distribution and versioning of software.

11. What are the advantages of establishing a local repository?

Ans:

Control: Organizations have control over software versions and updates.

Customization: Custom packages and configurations can be included.

Reduced External Dependencies: Reduces dependence on external repositories, ensuring availability.

12. What is the difference between Apache Ambari and Apache Ranger?

Ans:

| Feature | Apache Ambari | Apache Ranger |
| --- | --- | --- |
| Deployment | Simplifies deployment of Hadoop clusters | Manages and secures Hadoop cluster access |
| Configuration | Provides a centralized platform for configuration | Defines and enforces security and access policies |
| Monitoring | Real-time monitoring of Hadoop component health | Monitors and audits access to Hadoop resources |
| Security Integration | Integrates with various security measures | Focuses on fine-grained access control and policies |

13. Describe the various life cycle commands in Ambari.

Ans:

- Ambari provides life cycle commands for managing services in a cluster, including ‘start’, ‘stop’, ‘restart’, ‘status’, ‘install’, and ‘uninstall’.

- These commands enable administrators to control the state and configuration of Hadoop services within the cluster.
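The start and stop commands above can also be issued through Ambari's REST API, where they are expressed as a desired state on the service. The sketch below only builds the request and sends nothing. The host name, cluster name, and context string are placeholders, and the helper name `service_state_request` is made up for this example.

```python
import json

# Ambari models "start" and "stop" as desired-state changes on a service:
# STARTED means running, INSTALLED means installed but stopped.
DESIRED_STATE = {"start": "STARTED", "stop": "INSTALLED"}


def service_state_request(base_url, cluster, service, command):
    """Build (method, url, headers, body) for a start or stop request.

    Nothing is sent here; hand the result to any HTTP client.
    """
    state = DESIRED_STATE[command]  # raises KeyError for other commands
    url = f"{base_url}/api/v1/clusters/{cluster}/services/{service}"
    headers = {"X-Requested-By": "ambari"}  # Ambari expects this on write calls
    body = {
        "RequestInfo": {"context": f"{command} {service} via REST"},
        "Body": {"ServiceInfo": {"state": state}},
    }
    return "PUT", url, headers, json.dumps(body)


# Placeholder server and cluster names.
method, url, headers, body = service_state_request(
    "http://ambari.example.com:8080", "demo", "HDFS", "stop"
)
print(method, url)
print(body)
```

In this sketch a restart would simply be a stop request followed by a start request once the first one has completed.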

14. Can Ambari oversee more than one cluster?

Ans:

A single Ambari Server instance manages one cluster. Enterprises with several Hadoop environments therefore typically run one Ambari Server per cluster, with each instance centrally monitoring, configuring, and managing its own cluster. Reusing the same Blueprint across those instances helps keep the environments consistent.

15. What specific roles does Ganglia play in Ambari?

Ans:

Real-Time Metrics Monitoring: Ganglia collects real-time metrics related to the performance and health of individual nodes within the Hadoop cluster.

Cluster Visualization: Ganglia offers a visually intuitive representation of the cluster’s performance trends over time.

Resource Utilization Analysis: Administrators can leverage Ganglia’s metrics to analyze resource utilization patterns.

Alerting and Notification: Ganglia’s metrics can feed alerts that fire when cluster metrics cross predetermined thresholds or show anomalies.

16. When will you utilize a local repository?

Ans:

A local repository is utilized in scenarios where organizations desire greater control over the distribution and versioning of software packages. This is particularly relevant in environments with restricted or no internet access, ensuring that required packages are readily available without relying on external repositories. It grants organizations autonomy in managing and customizing their software distribution strategy.
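On yum-based systems, pointing hosts at a local repository usually comes down to a small `.repo` file. The sketch below generates one. The repository ID, display name, and mirror URL are hypothetical placeholders, and `render_repo_file` is a helper invented for this example.

```python
import configparser
import io


def render_repo_file(repo_id, name, baseurl, gpgcheck=False):
    """Return the text of a yum .repo file with a single repository section."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep option names exactly as written
    parser[repo_id] = {
        "name": name,
        "baseurl": baseurl,
        "enabled": "1",
        "gpgcheck": "1" if gpgcheck else "0",
    }
    buffer = io.StringIO()
    parser.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()


# Hypothetical internal mirror; replace with the host that serves your packages.
print(render_repo_file(
    "ambari-local",
    "Ambari (local mirror)",
    "http://repo.internal.example/ambari/centos7/",
))
```

The resulting text would typically be saved under `/etc/yum.repos.d/` on each host so that package installs resolve against the internal mirror.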

17. What features does Nagios specifically offer in Ambari?

Ans:

- Alerting and Notification

- System Monitoring

- Customizable Monitoring Checks

18. What is the Ambari shell made of?

Ans:

The Ambari Shell is a collection of command-line tools and utilities that empower administrators to interact with Ambari programmatically. Composed of scripts and commands, the Ambari Shell facilitates automation of tasks related to cluster management, configuration, and monitoring. This command-line interface enhances the efficiency of administrative tasks and supports scripting for streamlined operations within the Hadoop environment.

19. What is RBAC (Role-Based Access Control) in the context of Ambari?

Ans:

- RBAC in Ambari provides a robust security layer by controlling user access based on predefined roles.

- Admins assign roles like Admin, Cluster Operator, or View User, limiting access to specific functionalities.

- This approach ensures secure and controlled access, adhering to the principle of least privilege for enhanced Hadoop cluster security.
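The least-privilege idea behind RBAC can be sketched in a few lines. The role names come from the answer above, but the action names and the role-to-action mapping are assumptions made for illustration and do not reflect Ambari's actual permission model.

```python
# Illustrative role-to-action mapping. The action names and groupings are
# assumptions for this sketch, not Ambari's real permission model.
ROLE_ACTIONS = {
    "View User": {"view_metrics"},
    "Cluster Operator": {"view_metrics", "start_service", "stop_service"},
    "Admin": {
        "view_metrics",
        "start_service",
        "stop_service",
        "edit_config",
        "manage_users",
    },
}


def is_allowed(role, action):
    """Return True only if the role explicitly grants the action."""
    return action in ROLE_ACTIONS.get(role, set())


for role in ROLE_ACTIONS:
    print(role, "can edit config:", is_allowed(role, "edit_config"))
```

Anything not explicitly granted is denied, including actions requested under an unknown role, which is the default-deny behaviour that least privilege calls for.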

20. What is the role of Ambari Agents in a Hadoop cluster?

Ans:

Ambari Agents play a pivotal role in the seamless functioning of a Hadoop cluster managed by Apache Ambari. Acting as intermediaries between the Ambari Server and individual nodes within the cluster, these agents are entrusted with executing various tasks directed by the server. This includes critical functions such as software installations, configurations, and routine health checks on each node.

21. How does Ambari support the scalability and extensibility of Hadoop clusters?

Ans:

Scalability: Ambari aids scalability by facilitating the seamless addition of new nodes to the cluster.

Extensibility: The extensible architecture allows easy integration of new services and components to accommodate evolving cluster requirements without compromising stability.

22. Explain the purpose of the local repository in Ambari.

Ans:

The local repository in Ambari serves to provide organizations with autonomy over software distribution. It allows the hosting of software packages and dependencies locally, ensuring control over versions and updates. This is particularly useful in environments with restricted internet access, enabling organizations to manage their Hadoop cluster software distribution efficiently.

23. What are the essential hardware and software prerequisites for installing Ambari?

Ans:

- Hardware prerequisites include a cluster of machines meeting specifications and proper network connectivity.

- Software prerequisites involve a supported operating system, a Java Development Kit (JDK), Python, and a PostgreSQL or MySQL database for Ambari Server.

24. Describe the life cycle commands in Ambari and their use.

Ans:

Ambari provides life cycle commands such as ‘start’, ‘stop’, ‘restart’, ‘status’, ‘install’, and ‘uninstall’. These commands play a vital role in managing Hadoop services throughout their life cycle, allowing administrators to start, stop, or restart services, install new components, and monitor the status of the entire cluster.

25. How can you troubleshoot issues in a Hadoop cluster using Ambari?

Ans:

Ambari simplifies Hadoop cluster troubleshooting by providing detailed metrics, logs, and an easy-to-use interface. Administrators can use the web-based console to quickly identify and fix problems. Ambari also offers diagnostic tools that support a systematic approach to resolving issues with settings, resource utilisation, or service failures.

26. What is the purpose of the Ambari Shell?

Ans:

- The Ambari Shell serves as a command-line interface for administrators to interact programmatically with Ambari.

- It supports automation and scripting tasks, enabling efficient management of cluster configurations, monitoring, and maintenance.

27. Can Ambari be integrated with LDAP for user authentication?

Ans:

Yes, Ambari can be linked with LDAP for user authentication. This connection enables organizations to utilize their current LDAP infrastructure, guaranteeing easy and centralized authentication for Ambari users. LDAP integration improves security by synchronizing user credentials and permissions with Ambari and simplifies user administration.

28. Explain the role of metrics and alerts in Ganglia for cluster monitoring.

Ans:

In Ambari releases that bundle Ganglia (the 1.x line; later releases replace it with the Ambari Metrics System), Ganglia collects real-time metrics on cluster performance, including CPU usage, memory utilization, and network activity. Alerts built on these metrics notify administrators of deviations from predefined thresholds, enabling proactive identification and resolution of potential issues and helping keep the Hadoop cluster healthy and stable.
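Threshold-based alerting of this kind is easy to picture with a small sketch. The metric names, readings, and thresholds below are made-up values, and `classify` is a hypothetical helper rather than part of Ganglia or Ambari.

```python
def classify(value, warning, critical):
    """Map a metric reading to OK, WARNING, or CRITICAL.

    Assumes higher values are worse, as with CPU or memory utilization.
    """
    if value >= critical:
        return "CRITICAL"
    if value >= warning:
        return "WARNING"
    return "OK"


# Hypothetical readings (percent) and (warning, critical) thresholds for one node.
readings = {"cpu": 93.0, "memory": 71.5, "disk": 40.0}
thresholds = {"cpu": (80, 90), "memory": (70, 85), "disk": (75, 90)}

for metric, value in readings.items():
    warning, critical = thresholds[metric]
    print(metric, classify(value, warning, critical))
```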

29. How does Ambari handle the installation and configuration of non-Hadoop services?

Ans:

- Ambari extends its capabilities to non-Hadoop services, handling their installation and configuration.

- This versatility allows Ambari to serve as a comprehensive management tool for diverse service ecosystems.

30. What are some common challenges in managing Hadoop clusters?

Ans:

Common challenges in managing Hadoop clusters include scalability issues, complex configurations, resource optimization, and troubleshooting complexities. Ambari addresses these challenges by providing a centralized platform for streamlined management, configuration consistency, and robust monitoring, contributing to the overall reliability and performance of Hadoop clusters.

31. How can you customize monitoring checks in Nagios for specific Hadoop components?

Ans:

To customize monitoring checks in Nagios for Hadoop components, identify key metrics, create custom plugins, configure Nagios commands, and define service checks. This allows administrators to gain granular insights into component performance and proactively address potential issues.

32. What is Blueprint in Ambari, and how is it used in cluster provisioning?

Ans:

- A Blueprint in Ambari serves as a powerful tool for consistent and repeatable cluster provisioning.

- It acts as a declarative definition of a cluster’s architecture, specifying the layout, properties of services, and other relevant configurations in advance.
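To make the declarative idea concrete, here is a minimal sketch that assembles a blueprint document as a Python dictionary and prints it as JSON. The stack name and version, the host group names, and the component layout are example values rather than a complete, validated topology, and `build_blueprint` is a helper invented for this sketch.

```python
import json


def build_blueprint(stack_name, stack_version, host_groups):
    """Return a blueprint document as a dict.

    host_groups maps a group name to (cardinality, [component names]).
    """
    return {
        "Blueprints": {"stack_name": stack_name, "stack_version": stack_version},
        "host_groups": [
            {
                "name": group,
                "cardinality": str(cardinality),
                "components": [{"name": component} for component in components],
            }
            for group, (cardinality, components) in host_groups.items()
        ],
    }


blueprint = build_blueprint(
    "HDP", "2.6",  # example stack; use one registered with your Ambari Server
    {
        "master": (1, ["NAMENODE", "RESOURCEMANAGER", "ZOOKEEPER_SERVER"]),
        "worker": (2, ["DATANODE", "NODEMANAGER"]),
    },
)
print(json.dumps(blueprint, indent=2))
```

A document like this is registered with Ambari through the REST API under `/api/v1/blueprints/<name>`, and a separate cluster creation template then maps real hosts onto the host groups.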

33. Explain how Ambari supports the management of Hive databases and tables.

Ans:

Ambari simplifies the management of Hive databases and tables through its intuitive interface. By accessing the Hive service within the Ambari Dashboard, administrators can leverage the Hive View to create, modify, or delete databases and tables seamlessly.

34. How does Ambari handle the configuration of YARN?

Ans:

- Access YARN service: Navigate to the YARN service in the Ambari Dashboard.

- Modify configurations: Adjust YARN configurations based on cluster requirements, such as resource allocation and scheduler settings.

- Save and apply changes: Save the configuration modifications and apply them to update the YARN service.
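The same modify-and-save cycle can be scripted against the REST API by sending a new desired configuration to the cluster resource. The sketch below only builds the request body. The property values are examples, the tag format is an arbitrary choice for this example, and it assumes that a new configuration version replaces the previous one rather than merging into it, so a real call should carry the full property set.

```python
import json
import time


def desired_config_payload(config_type, properties, tag=None):
    """Build the body for a PUT to /api/v1/clusters/<cluster>.

    `properties` should be the complete property set for the config type,
    on the assumption that a new version replaces the old one.
    """
    # Each configuration version needs its own tag; a timestamp is one option.
    tag = tag or f"version{int(time.time() * 1000)}"
    return {
        "Clusters": {
            "desired_config": {
                "type": config_type,
                "tag": tag,
                "properties": dict(properties),
            }
        }
    }


# Only two properties are shown for brevity; see the note above.
payload = desired_config_payload(
    "yarn-site",
    {
        "yarn.nodemanager.resource.memory-mb": "8192",
        "yarn.scheduler.maximum-allocation-mb": "8192",
    },
)
print(json.dumps(payload, indent=2))
```

After a change like this, the affected YARN components still need a restart before the new values take effect, just as they do after a change made in the web interface.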

35. What is the significance of the Apache ZooKeeper service in Ambari?

Ans:

The Apache ZooKeeper service holds significant importance within the Ambari ecosystem. It serves as a centralized service for distributed coordination, ensuring synchronization among various Hadoop components. For example, NameNode and ResourceManager high-availability setups rely on it to coordinate failover.

36. What role does the Ambari Metrics service play in monitoring Hadoop clusters?

Ans:

The Ambari Metrics service assumes a pivotal role in cluster monitoring by collecting and storing metrics data. This service provides a historical perspective on cluster performance, facilitating trend analysis and proactive issue identification. By serving as a repository for crucial performance metrics, the Ambari Metrics service contributes significantly to maintaining the health and stability of the Hadoop cluster.
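Stored metrics can be read back from the Metrics Collector over HTTP. The sketch below builds such a query URL without sending it. The collector host, metric names, and time range are placeholders, and the port and parameter names reflect the collector's timeline endpoint as commonly documented, so check them against your Ambari version.

```python
from urllib.parse import urlencode


def metrics_query_url(collector, metric_names, app_id, hostname,
                      start_ms, end_ms, port=6188):
    """Build a query URL for the Metrics Collector's timeline endpoint."""
    query = urlencode({
        "metricNames": ",".join(metric_names),
        "appId": app_id,
        "hostname": hostname,
        "startTime": start_ms,  # epoch milliseconds
        "endTime": end_ms,
    })
    return f"http://{collector}:{port}/ws/v1/timeline/metrics?{query}"


# Placeholder hosts and an arbitrary one-hour window.
print(metrics_query_url(
    "ams.example.com",
    ["cpu_user", "mem_free"],
    "HOST",
    "worker1.example.com",
    1637100000000,
    1637103600000,
))
```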

37. How can you configure and manage Apache HBase using Ambari?

Ans:

Access HBase service: Navigate to the HBase service in the Ambari Dashboard.

Modify configurations: Adjust HBase configurations as needed, such as region server settings and storage options.

Save and apply changes: Save the modified configurations and apply them to update the HBase service.

38. Explain the purpose of stack and stack version in the context of Ambari.

Ans:

In the context of Ambari, a Stack represents a collection of software components, versions, and configurations essential for the Hadoop ecosystem. The Stack Version, on the other hand, specifies a particular release or version of these software components within the Stack.

39. How does Ambari assist in managing Hadoop cluster configurations?

Ans:

Ambari stands out in assisting administrators with Hadoop cluster configurations through its centralized management capabilities. This platform provides a user-friendly interface for configuring various Hadoop services, ensuring consistency across the cluster.

40. What is the purpose of the Ambari REST API, and how is it utilized?

Ans:

- Powerful tool for programmatically interacting with Ambari.

- Automate tasks, retrieve cluster information, and perform operations.

- Integration into custom scripts or third-party tools.
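As a small illustration of retrieving cluster information, the sketch below prepares an authenticated GET request for the services of one cluster using only the standard library. The server address, cluster name, and credentials are placeholders, and the request is built but not sent.

```python
import base64
import urllib.request


def list_services_request(base_url, cluster, user, password):
    """Prepare (but do not send) a GET for the services of one cluster."""
    url = f"{base_url}/api/v1/clusters/{cluster}/services"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Basic {token}")  # HTTP basic auth
    request.add_header("X-Requested-By", "ambari")
    return request


# Placeholder server, cluster, and credentials.
request = list_services_request(
    "http://ambari.example.com:8080", "demo", "admin", "admin"
)
print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would return a JSON document whose `items` list holds one entry per service.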

41. What are some best practices for optimizing the performance of a Hadoop cluster?

Ans:

Optimising Hadoop cluster performance involves managing resources effectively, tuning configurations for particular workloads, provisioning sufficient hardware, using data compression, and routinely monitoring and re-tuning the cluster as needs evolve.

42. How does Ambari contribute to the efficient management of Apache Spark?

Ans:

- Centralized platform for configuring, monitoring, and managing Spark services.

- Adjust Spark configurations, allocate resources, and monitor performance.

43. Explain the integration of Apache Ranger with Ambari for Hadoop cluster security.

Ans:

Apache Ranger integrates seamlessly with Ambari to enhance Hadoop cluster security. Ambari simplifies the configuration and management of Apache Ranger policies, providing a centralized platform for defining and enforcing security policies across various Hadoop components. This integration streamlines access control and authorization, contributing to a robust security framework for the Hadoop ecosystem.

44. Can Ambari manage clusters with mixed versions of Hadoop components?

Ans:

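- Ambari manages a cluster against a single stack version, so mixed component versions are not a normal steady state.

- During a rolling upgrade, components temporarily run on different versions while Ambari moves them to the target version step by step.

- Once the upgrade is finalized, all managed components run on the same stack version again.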

45. Describe the role of the Ambari SmartSense service in optimizing Hadoop cluster performance.

Ans:

The Ambari SmartSense service plays a crucial role in optimizing Hadoop cluster performance by collecting and analyzing cluster metrics, identifying potential issues, and providing recommendations for improvement. It assists administrators in making informed decisions regarding configuration tuning, resource allocation, and overall cluster optimization, contributing to enhanced performance and stability.

46. Explain the concept of Ambari Stacks.

Ans:

Ambari Stacks are collections of Hadoop ecosystem software components, settings, and services. Stacks contain the whole software stack, including Hadoop components and other services, allowing administrators to use Ambari to manage and version software components reliably across Hadoop clusters.

47. What is the purpose of the Ambari Infra service?

Ans:

- Foundation for centralized infrastructure-related services.

- Supports log collection, search, and analysis.

- Enhances cluster visibility and log-based troubleshooting.

48. How can you monitor and manage the Hadoop HDFS using Ambari?

Ans:

Ambari simplifies Hadoop HDFS monitoring and administration by offering a centralised interface for setting up HDFS services, modifying settings, and monitoring the health and performance of HDFS components. Administrators can use Ambari to monitor storage consumption, manage replication factors, and resolve issues, ensuring that HDFS operates efficiently inside the Hadoop cluster.

49. Describe the process of setting up and managing Oozie workflows using Ambari.

Ans:

The process of setting up and managing Oozie workflows using Ambari involves accessing the Ambari Dashboard, navigating to the Oozie service, and configuring properties such as workflow execution engines and database settings. Once configured, administrators can create, submit, and monitor Oozie workflows through the Ambari interface, streamlining the entire workflow management process.

50. How does Ambari contribute to the monitoring and management of Apache Kafka?

Ans:

- Centralized platform for Kafka configuration and management.

- User-friendly Ambari Dashboard for ease of use.

- Real-time monitoring of Kafka brokers and topics.

- Alerting capabilities for proactive issue identification.

51. Describe the steps for implementing High Availability in a Hadoop cluster.

Ans:

Implementing High Availability (HA) in a Hadoop cluster is crucial for ensuring continuous availability and mitigating the risk of downtime. The process involves configuring multiple instances of essential components to provide redundancy and failover capabilities. Key steps include enabling NameNode HA for the Hadoop Distributed File System (HDFS), so that a standby NameNode can take over from the active one, and configuring ResourceManager HA for YARN.

52. Can Ambari manage Apache Kudu in Hadoop clusters?

Ans:

Yes, Ambari can effectively manage Apache Kudu in Hadoop clusters. Ambari provides a seamless integration for deploying, configuring, and monitoring Apache Kudu services. Administrators can use the Ambari Dashboard to set up Kudu instances, adjust configurations to meet specific requirements, and monitor the performance and health of Kudu tables and nodes.

53. What are some strategies for securing the Ambari Server?

Ans:

- SSL/TLS Encryption: Use SSL/TLS to encrypt communication between the Ambari Server and its agents.

- Authentication: Implement robust authentication mechanisms, such as Kerberos, to control access to Ambari.

- Authorization: Set up proper authorization policies to restrict user access based on roles and permissions.

- Firewall Rules: Configure firewalls to allow only necessary network traffic to and from Ambari Server.

54. Describe the integration of Ambari with Apache Kylin.

Ans:

Apache Ambari can be integrated with Apache Kylin to manage and monitor Kylin services. Ambari provides a centralized platform for managing and monitoring Hadoop clusters, and by integrating with Kylin, it extends its capabilities to include the management of OLAP cube services. Ambari allows users to install, configure, and manage Kylin instances through its web-based interface.

55. How does Ambari manage Apache HBase configurations?

Ans:

- Ambari allows users to manage Apache HBase configurations through its web interface.

- Users can view, modify, and update HBase configuration settings using the Ambari interface.

- This includes adjusting parameters related to HBase performance, security, and other aspects.

56. Explain the role of Ambari SmartSense.

Ans:

Ambari SmartSense is a component that focuses on collecting and analyzing cluster usage patterns, performance metrics, and configurations. It aids in the identification of possible problems, the recommendation of optimisations, and the provision of insights into the general health and efficiency of the Hadoop cluster.

57. How does Ambari handle the upgrade process for Apache Hive?

Ans:

- Ambari simplifies the upgrade process for Apache Hive by providing a user-friendly interface for initiating and managing upgrades.

- Users can follow a step-by-step wizard to upgrade Hive components across the cluster.

- Ambari automates many of the tasks involved in the upgrade, making it a smoother process.

58. How can Ambari be configured for SSL/TLS encryption?

Ans:

To configure Ambari for SSL/TLS encryption, start by obtaining SSL/TLS certificates for Ambari Server. Next, configure the Ambari Server to use these certificates and update Ambari Agents to communicate securely using SSL/TLS. Adjust the Ambari web settings to enforce HTTPS, ensuring secure communication. It’s crucial to extend SSL/TLS encryption to all intra-cluster communication for a comprehensive security enhancement.
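After making these changes, it can be useful to confirm that the server's properties file really has HTTPS switched on. The sketch below parses properties-style text and checks a flag. The key names `api.ssl` and `client.api.ssl.port` are given as recalled from typical `ambari.properties` files and should be treated as assumptions to verify for your version. The helper names are invented for this example.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and # comments."""
    properties = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        properties[key.strip()] = value.strip()
    return properties


def https_enabled(properties, flag="api.ssl"):
    """Return True if the given flag is set to true (case-insensitive)."""
    return properties.get(flag, "false").lower() == "true"


# Excerpt in the style of ambari.properties; keys and values are examples.
sample = """
# HTTPS settings
api.ssl=true
client.api.ssl.port=8443
"""

properties = parse_properties(sample)
print("HTTPS enabled:", https_enabled(properties))
print("HTTPS port:", properties.get("client.api.ssl.port"))
```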

59. What is the purpose of Ambari Metrics Collector?

Ans:

Collects Data: Gathers metrics from Hadoop services.

Real-time Monitoring: Provides live insights into cluster metrics.

Visualization: Presents cluster health through a web interface.

60. Describe the process of setting up Apache Atlas using Ambari.

Ans:

To set up Apache Atlas using Ambari, navigate to the Ambari dashboard, locate the “Add Service” option, and select Apache Atlas. Follow the wizard to configure parameters such as database connection details and Atlas-specific settings. After completing the installation, restart affected services. Post-installation, use the Ambari interface for managing and monitoring Apache Atlas services.

61. What is the role of the Ambari Tez View?

Ans:

- Ambari Tez View provides a web-based interface for managing and monitoring Apache Tez applications.

- It allows users to visualize, analyze, and troubleshoot Tez jobs running in Hadoop clusters.

62. How does Ambari contribute to Apache Storm configuration?

Ans:

Ambari simplifies the configuration of Apache Storm by providing a user-friendly interface for managing Storm components. Users can adjust configurations, set up topologies, and monitor Storm clusters through the Ambari dashboard. This integration makes it easier to deploy and administer Apache Storm services in the Hadoop environment.

63. Explain the integration of Ambari with Apache Slider.

Ans:

Ambari integrates with Apache Slider to facilitate the management and monitoring of Slider applications.

Users can add Slider to their cluster through Ambari, configure parameters, and monitor Slider application status and performance.

64. What is the purpose of Ambari ZooKeeper View?

Ans:

Centralized Management: Manages ZooKeeper services centrally.

Monitoring: Monitors ZooKeeper instance status.

Configuration: Adjusts ZooKeeper configurations via Ambari.

Troubleshooting: Aids in issue identification and troubleshooting.

Simplification: Streamlines ZooKeeper administration in the Hadoop cluster.

65. How does Ambari manage Apache HBase configurations?

Ans:

Ambari allows users to manage Apache HBase configurations through its web interface. Users can view, modify, and update HBase configuration settings using the Ambari interface. This includes adjusting parameters related to HBase performance, security, and other aspects.

66. What is the significance of Ambari Log Search?

Ans:

- Centralized Analysis

- Efficient Troubleshooting

- Real-time Monitoring

- Operational Streamlining

- Optimization Insights

67. What is the purpose of the Ambari Hive View?

Ans:

Ambari Hive View offers a user-friendly web interface for managing and querying Apache Hive databases. It simplifies the interaction with Hive by providing a visual representation of tables, schemas, and queries. Users can execute SQL queries, visualize query results, and manage Hive resources through the Ambari Hive View.

68. Explain the role of Ambari Metrics Collector.

Ans:

Ambari Metrics Collector gathers and stores metrics data from various services in the Hadoop cluster. It enables real-time monitoring, visualization, and analysis of performance metrics.

69. How does Nagios enhance cluster reliability in Ambari?

Ans:

- Nagios, when integrated with Ambari, enhances cluster reliability by providing robust monitoring capabilities.

- Nagios monitors various aspects of the Hadoop cluster, alerting administrators to potential issues or deviations from normal behavior.

70. Explain the purpose of Apache Falcon in Ambari.

Ans:

Apache Falcon, seamlessly integrated with Ambari, plays a pivotal role in managing the data lifecycle within Hadoop clusters. It serves as a comprehensive solution for defining, scheduling, and monitoring data workflows, ensuring efficient coordination of data pipeline activities. This simplifies the management of metadata and dependencies, facilitating reliable and timely data processing across the Hadoop ecosystem.

71. What is the role of Ambari ZooKeeper View?

Ans:

- Centralized Management: Provides a centralized interface for managing Apache ZooKeeper services.

- Monitoring: Allows users to monitor ZooKeeper instance status.

- Configuration: Facilitates adjusting ZooKeeper configurations through a unified Ambari interface.

- Troubleshooting: Streamlines issue identification and troubleshooting for ZooKeeper in the Hadoop ecosystem.

72. How can Ambari be configured to manage Apache Ranger?

Ans:

To configure Ambari for managing Apache Ranger, users can leverage the Ambari dashboard to add and install the Apache Ranger service. Configuration of Apache Ranger settings and policies can be accomplished through the Ambari web interface, offering a centralized location for policy management. Ambari further facilitates the easy start, stop, and monitoring of Apache Ranger services.

74. What is the purpose of the Ambari Blueprint?

Ans:

Cluster Definition: Ambari Blueprint defines the cluster architecture, including components, configurations, and layout.

Consistent Deployment: Enables consistent and repeatable cluster deployments across different environments.

75. Describe the steps for implementing HA in Ambari.

Ans:

Implementing High Availability (HA) in Ambari involves configuring a load balancer for distributing requests, setting up a highly available database for Ambari Server, deploying multiple instances for redundancy, updating Ambari Agents for communication, and ensuring high availability for critical components like the Ambari Database and Server.

76. What are the best practices for optimizing Hadoop clusters in Ambari?

Ans:

- Regular Performance Monitoring

- Capacity Planning

- Configuration Tuning

- Security Measures

- Regular Software Updates

77. How does Ambari handle Apache Solr configurations?

Ans:

Ambari handles Apache Solr configurations via its web interface, enabling users to change settings such as heap size and Solr-specific properties. It also provides monitoring features for Solr instances, allowing users to define alerts and ensure the health and performance of Solr components.

78. Explain the purpose of the Ambari Infra service.

Ans:

Log Collection: Ambari Infra Service collects and aggregates logs from various Hadoop components.

Centralized Logging: Provides a centralized platform for managing and accessing logs for monitoring and troubleshooting.

Log Search: Integrates with Ambari Log Search to enhance log analysis capabilities.

79. How does Ambari assist in managing Apache Kafka?

Ans:

Ambari assists in managing Apache Kafka by allowing users to add and manage Kafka as a service through the Ambari dashboard. It facilitates centralized configuration adjustments, monitoring of Kafka performance using Ambari Metrics, and configuring alerts for proactive issue identification and resolution. Users can seamlessly start, stop, and manage Apache Kafka services through Ambari.

80. What role does Ambari play in Apache NiFi Registry versioning?

Ans:

- Ambari facilitates the deployment of Apache NiFi Registry as a service.

- Users can configure and manage versioning settings for NiFi Registry through Ambari.

- Ambari provides a centralized platform for overseeing and controlling versioning aspects of NiFi Registry.

81. How does Ambari configure and manage Apache Phoenix?

Ans:

Ambari streamlines the configuration and management of Apache Phoenix within Hadoop clusters through its user-friendly interface. Users leverage the Ambari dashboard to seamlessly integrate Apache Phoenix as a service. This integration allows for centralized control over Phoenix configurations, enabling administrators to efficiently adjust settings for optimal performance.

82. Can Ambari oversee Apache Superset for data visualization?

Ans:

Ambari extends its capabilities to oversee Apache Superset for data visualization. Administrators can effortlessly add and manage Superset as a service through the Ambari dashboard. This integration allows for centralized configuration of Superset settings and monitoring of its instances.

83. How does Ambari contribute to Apache Mahout configuration?

Ans:

- Ambari facilitates the integration of Apache Mahout as a service.

- Users can adjust Mahout configurations through the Ambari web interface.

- Ambari streamlines the configuration and management of Mahout for machine learning tasks.

84. What is the purpose of the Ambari Metrics System in cluster analysis?

Ans:

The Ambari Metrics System is instrumental in cluster analysis, collecting and providing real-time insights into cluster metrics. This system serves as a centralized platform for monitoring and analyzing performance metrics, supporting administrators in making informed decisions for cluster optimization and resource allocation.

85. Explain Ambari’s role in Apache Zeppelin Notebook setup.

Ans:

- Ambari facilitates the deployment of Apache Zeppelin as a service.

- Users can configure Zeppelin settings centrally using the Ambari web interface.

- Ambari streamlines the setup of Zeppelin Notebooks for interactive data analysis and visualization.

86. How does Ambari support Apache Hudi for incremental data processing?

Ans:

For Apache Hudi and incremental data processing, Ambari supports administrators by allowing the addition and management of Hudi as a service. Through Ambari, users can configure Hudi settings, and the platform provides monitoring capabilities for Hudi instances. This support ensures efficient handling of incremental data processing tasks, contributing to the overall operational efficiency of Hadoop clusters.

87. What is the significance of Apache Avro in Ambari configurations?

Ans:

- Apache Avro is often utilized as a serialization framework in Hadoop ecosystems.

- Ambari facilitates the configuration of Avro-related settings for efficient data serialization within Hadoop clusters.

88. How can Ambari manage Apache Livy for interactive data processing?

Ans:

Ambari’s capabilities extend to managing Apache Livy for interactive data processing. By adding and managing Livy as a service through the Ambari dashboard, users can configure Livy settings centrally. This integration allows administrators to monitor Livy instances, supporting efficient interactive data processing within Hadoop clusters.

89. Describe the integration of Ambari with Apache Flink for stream processing.

Ans:

- Ambari allows users to add and manage Apache Flink as a service.

- Users can configure Flink settings through the Ambari web interface.

- Ambari contributes to monitoring Flink instances, facilitating efficient stream processing within Hadoop clusters.

90. How does Ambari assist in managing Apache Drill?

Ans:

Ambari enables the integration and management of Apache Drill as a service. Through Ambari, administrators can configure Apache Drill settings centrally, supporting the setup and maintenance of interactive SQL queries on large-scale datasets within Hadoop clusters.