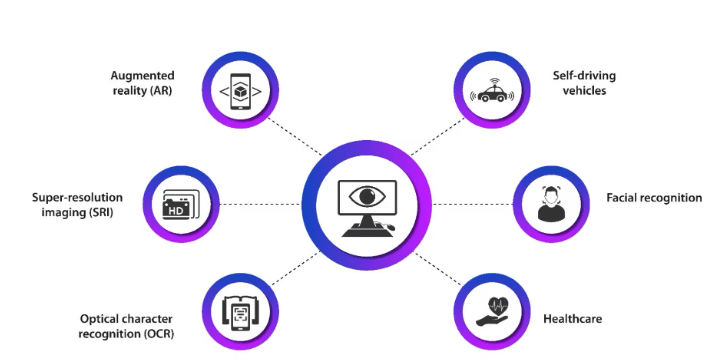

OpenCV, commonly called the Open Source Computer Vision Library, is an effective framework for real-time computer vision and image processing. It provides various tools and algorithms for manipulating and analyzing visual data. In robotics, augmented reality, and healthcare, OpenCV supports tasks like object detection, facial recognition, and motion tracking, making it essential in computer vision research and development.

1. What does OpenCV mean?

Ans:

OpenCV stands for “Open Source Computer Vision Library”. It is an open-source library for computer vision and machine learning. Initially developed by Intel, it is now maintained by the OpenCV community. The library is cross-platform; releases from 4.5.0 onward are distributed under the Apache 2 license, while earlier versions used the 3-clause BSD license. It offers interfaces for numerous programming languages, including C++, Python, and Java. OpenCV includes over 2,500 optimized algorithms, many of which target real-time computer vision tasks.

2. What does computer vision mean?

Ans:

One subfield of artificial intelligence is computer vision. It focuses on enabling computers to interpret visual information, including methods for capturing, processing, and analyzing images. The aim is to automate tasks that the human eye can do. Applications range from object recognition to motion analysis. Computer vision intersects with areas like pattern recognition and machine learning. It is essential in fields such as robotics, autonomous vehicles, and surveillance.

3. What are OpenCV’s limitations?

Ans:

- Due to its complexity, OpenCV can be challenging for beginners. It may not deliver the best performance for every use case.

- Some advanced computer vision algorithms are not included. Real-time processing might be complicated on low-end hardware.

- Documentation can sometimes be incomplete or inconsistent. Integration with other libraries can be challenging. It might not be ideal for highly specialized tasks.

4. What characteristics does OpenCV have?

Ans:

- OpenCV is designed for high computational efficiency. It supports a broad range of image and video processing techniques.

- The library is cross-platform, running on Windows, Linux, macOS, and mobile OS.

- It offers both high-level and low-level programming interfaces. OpenCV integrates well with hardware acceleration libraries. The library has extensive documentation and a large user community.

- Its dnn module can run inference on models trained in deep learning frameworks such as TensorFlow and, typically via ONNX export, PyTorch.

5. What are OpenCV’s benefits?

Ans:

OpenCV is free and open-source, promoting innovation and collaboration. It provides a vast collection of functions for image and video analysis. Performance-wise, the library is highly optimized. It supports real-time applications, making it suitable for interactive systems. OpenCV works with a variety of programming languages. Regular updates bring enhanced content and new features to the library. It has a large, active community for support and collaboration.

6. What uses does computer vision have?

Ans:

Self-driving cars employ computer vision for obstacle detection and navigation. Computer vision plays a critical role in medical imaging for diagnostics and surgery. Surveillance systems use it for object tracking and security purposes. In retail, it helps with inventory management and customer behaviour analysis. Computer vision enhances augmented reality experiences. It is used in manufacturing for quality inspection. Social media platforms use it for facial recognition and photo tagging.

7. How is OpenCV used in Python applications?

Ans:

- OpenCV can be installed in Python using pip with the command pip install opencv-python. It provides an extensive range of image-processing functions.

- OpenCV can read and write images and videos. It also enables feature detection, such as edge, corner, and blob detection.

- It supports machine learning models for object detection and recognition.

- Python’s simplicity, combined with OpenCV’s power, makes it popular for prototyping. It is widely used in educational projects and research applications.

8. Describe the modules in the OpenCV Library.

Ans:

- The core module provides essential data structures like Mat and basic functions, while the imgproc module includes image processing functions.

- The highgui module provides simple GUI windows for displaying images; in current releases, image file input/output lives in imgcodecs and video capture and writing in videoio. The video module is used for video analysis tasks such as motion detection.

- The ml module offers machine learning tools for training models, and the objdetect module includes object detection algorithms, such as face detection.

- The contrib modules (the separate opencv_contrib repository) contain experimental and non-free algorithms.

9. What are the differences between OpenCV’s cv::Mat and cv::UMat data structures?

Ans:

| Feature | cv::Mat | cv::UMat |
|---|---|---|
| Memory management | Reference-counted buffer in host (CPU) memory | Reference-counted buffer managed by OpenCV’s Transparent API (T-API); may reside in device memory |
| Access speed | Direct element access on the CPU | Element access requires mapping back to a Mat (getMat()), but whole-image operations can run faster through OpenCL |
| Usage | General-purpose; suited to code that needs per-pixel access | Suited to large images and chains of OpenCV calls that can be offloaded |
| Platform support | CPU | CPU, plus OpenCL devices such as GPUs when available |
| Concurrency | Operations run synchronously on the CPU | OpenCL operations may be queued and executed asynchronously |

10. How Does OpenCV Define a Digital Image?

Ans:

OpenCV defines a digital image as a matrix of pixel values. Each pixel value represents the intensity of light at that point. Images can be grayscale, where each pixel is a single intensity value. Colour images use multiple channels, which OpenCV stores in BGR order by default, to represent colours. Pixels are stored in a Mat object, OpenCV’s primary image data structure. The image can have various depths (for example 8-bit, 16-bit, or 32-bit) and channel counts. OpenCV provides extensive functions for manipulating these pixel values.

11. Elaborate on quantization and sampling.

Ans:

- Quantization and sampling are critical processes in digitizing analogue signals. Sampling involves measuring the signal at discrete intervals.

- The sample rate determines the frequency of measurements. Quantization assigns each sampled value to the nearest value within a range of discrete levels.

- Higher quantization levels yield better quality but require more storage.

- These steps convert a continuous signal into a digital form suitable for processing by computers. They are fundamental in fields like digital audio and image processing.
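The two steps can be illustrated with NumPy alone. This is a sketch on a synthetic gradient; the sampling stride of 4 and the 4 quantization levels are arbitrary assumptions chosen to make the effect visible.

```python
import numpy as np

# A smooth horizontal gradient standing in for a finely measured signal.
signal = np.linspace(0, 255, 256).astype(np.uint8).reshape(1, 256)

# Sampling: keep every 4th measurement (a lower sample rate).
sampled = signal[:, ::4]

# Quantization: map the 256 possible values onto 4 discrete levels,
# each represented by the midpoint of its bin.
levels = 4
step = 256 // levels
quantized = (sampled // step) * step + step // 2

print(sampled.shape)         # fewer samples than the original
print(np.unique(quantized))  # only `levels` distinct values remain
```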

12. How many different kinds of image filters does OpenCV have?

Ans:

- OpenCV provides a variety of image filters to perform different image processing tasks.

- Linear filters include the box (averaging), Gaussian, and Sobel filters. Non-linear filters, such as the median and bilateral filters, are used for noise reduction while preserving edges.

- Each filter serves a particular purpose, from smoothing images to highlighting edges.

- In total, OpenCV offers dozens of filters catering to various image processing needs. The exact count can vary with updates and versions.

13. In OpenCV, how are the images stored?

Ans:

In OpenCV, images are stored as matrices (Mat objects). Each Mat object consists of a matrix of pixel values, where each pixel can have multiple channels (e.g., RGB for colour images). The matrix structure allows easy manipulation and access to pixel data. Images can be loaded into Mat objects from files or captured from devices. These Mat objects facilitate a wide range of image-processing operations. They provide a flexible and efficient way to handle image data. The Mat class is central to OpenCV’s image handling.

14. What are the Mat class constructions?

Ans:

The Mat class in OpenCV can be constructed in several ways. You can create an empty matrix with a specific size and type, for example in Java: Mat img = new Mat(rows, cols, type);. Another way is to load an image file into a Mat object using Imgcodecs.imread(). You can also initialize a Mat with existing data or by copying another Mat. Additionally, you can define a Mat as a region of interest (ROI) within another Mat. These constructions offer flexibility for various image-processing tasks.

15. How can OpenCV be used to convert a Mat to a BufferedImage?

Ans:

- To convert a Mat to a BufferedImage, first ensure the OpenCV Java bindings are available in the project.

- Choose the image type according to the number of channels (e.g., TYPE_BYTE_GRAY for single-channel images and TYPE_3BYTE_BGR for colour images), then construct a BufferedImage with the Mat’s width, height, and that type.

- Obtain the image’s backing byte array from its raster (a DataBufferByte) and copy the pixel data into it with Mat.get(0, 0, data).

- Alternatively, encode the Mat to a byte array with Imgcodecs.imencode() and decode it with ImageIO.read(). Either route integrates OpenCV images with Java’s BufferedImage format.

16. How is OpenCV installed on different operating systems?

Ans:

- Installing OpenCV varies by operating system. On Windows, download the pre-built binaries from the OpenCV website and set up environment variables.

- On macOS, use Homebrew: brew install opencv. For Linux, use the package manager: sudo apt-get install libopencv-dev.

- Alternatively, you can build OpenCV from source on any OS by cloning the GitHub repository and using CMake to configure and build the library.

- Python users can install via pip: pip install opencv-python. Each method ensures OpenCV is correctly integrated into your development environment.

17. Describe the OpenCV image thresholding concept.

Ans:

Image thresholding is a segmentation technique that compares each pixel value against a threshold. Pixels above the threshold are assigned one value (e.g., white), and those below are assigned another (e.g., black). OpenCV provides functions like cv2.threshold() and cv2.adaptiveThreshold(). Simple thresholding applies one global threshold to the whole image, while adaptive thresholding computes a threshold for each local region, which is useful for images with varying lighting conditions.

18. What is image segmentation in OpenCV, and how is it achieved?

Ans:

Image segmentation is the process of dividing an image into meaningful parts. In OpenCV, segmentation can be achieved using methods like thresholding, contour detection, and the watershed algorithm. cv2.findContours() identifies the boundaries of objects. The watershed algorithm, available as cv2.watershed(), treats the image as a topographic surface and locates the watershed lines that separate regions. cv2.grabCut() is another method that segments the foreground from the background. These techniques isolate regions for analysis or object recognition.

19. How is edge detection performed using OpenCV?

Ans:

- Edge detection in OpenCV is performed using algorithms like Canny, Sobel, and Laplacian.

- The Canny edge detector is widely used and involves steps like noise reduction, gradient calculation, non-maximum suppression, and edge tracking by hysteresis.

- The Sobel operator calculates the gradient of the image’s intensity. Laplacian detects edges by finding zero-crossings of the second derivative.

- These methods highlight the boundaries of objects within an image. Edge detection is essential for image analysis and computer vision applications.

20. Describe the process of object detection with OpenCV.

Ans:

- Object detection with OpenCV involves identifying and locating objects within an image.

- Among the strategies are deep learning-based approaches, HOG (Histogram of Oriented Gradients), and pre-trained models such as Haar cascades.

- Haar cascades use features trained with positive and negative samples. HOG descriptors combined with SVM can detect objects like pedestrians.

- Deep learning models, such as those from the DNN module, use networks like YOLO or SSD for more accurate detection.

21. What are contours in OpenCV, and how can they be found?

Ans:

Contours in OpenCV are curves that connect contiguous points along a boundary with the same colour or intensity. They are helpful in object identification and shape analysis. To find contours, use the cv2.findContours function, which retrieves contours from a binary image, so the input should first be converted to grayscale and thresholded. The function returns a list of contours and a hierarchy describing how they nest.

22. Explain the concept of image pyramids in OpenCV.

Ans:

Image pyramids in OpenCV are collections of images at different scales. They are used for multi-scale image analysis, such as detecting objects of various sizes. There are two types of pyramids: Gaussian and Laplacian. Gaussian pyramids are built by repeatedly blurring and downsampling an image to reduce its resolution. Each Laplacian pyramid level is the difference between a Gaussian level and the upsampled version of the next, coarser level, so it retains the edge detail lost by downsampling. The cv2.pyrDown and cv2.pyrUp functions are used for these operations.

23. How can image transformations be performed using OpenCV?

Ans:

- Image transformations in OpenCV alter an image’s geometry. Common transformations include translation, rotation, scaling, and flipping.

- The cv2.warpAffine function applies an affine transformation using a 2×3 transformation matrix.

- For more complex transformations, cv2.warpPerspective uses a 3×3 matrix. Functions like cv2.getRotationMatrix2D and cv2.getAffineTransform help create these matrices.

- These transformations are crucial in various image-processing tasks.

24. What is histogram equalization in OpenCV, and why is it used?

Ans:

- Histogram equalization in OpenCV is a method for improving an image’s contrast. It spreads out the most frequent intensity values, enhancing image clarity.

- The cv2.equalizeHist function performs this on a grayscale image. By redistributing pixel intensity values, it makes the histogram of the output image flat or nearly flat.

- This method enhances the visibility of features in an image and is widely used in medical imaging and other fields.

25. How is OpenCV used for face detection?

Ans:

OpenCV uses pre-trained models for face detection, such as Haar cascades and DNNs. The cv2.CascadeClassifier class loads a Haar cascade model, and its detectMultiScale method detects faces in an image, returning a list of rectangles around the detected faces. For DNN-based detection, cv2.dnn.readNetFromCaffe loads the model, cv2.dnn.blobFromImage preprocesses the image, and net.forward performs the detection.

26. Explain the concept of Hough Transform in OpenCV.

Ans:

The Hough Transform in OpenCV is a feature extraction technique used to detect geometric shapes. It is beneficial for detecting lines and circles in an image. The cv2.HoughLines function detects lines using the standard Hough Transform. cv2.HoughLinesP is a probabilistic variant that returns the endpoints of detected lines. For circles, cv2.HoughCircles is used. The transform works by mapping points in the image to curves or lines in parameter space.

27. What are keypoints in OpenCV, and how are they detected?

Ans:

- Keypoints in OpenCV are distinctive points in an image used for feature detection and matching. They represent interesting parts of the image, such as corners, edges, or blobs.

- Keypoints are detected using algorithms like SIFT, SURF, and ORB. Functions like cv2.SIFT_create, cv2.xfeatures2d.SURF_create (part of the contrib modules), and cv2.ORB_create initialize these detectors.

- The detect method identifies keypoints in an image. These keypoints are then used in applications like object recognition and image stitching.

28. How is template matching performed with OpenCV?

Ans:

- Template matching in OpenCV involves finding a smaller image (template) within a larger image.

- The cv2.matchTemplate function performs this by sliding the template over the input image.

- It calculates a similarity metric at each position. Common methods include cv2.TM_CCOEFF, cv2.TM_CCORR, and cv2.TM_SQDIFF. The cv2.minMaxLoc function identifies the location of the best match.

- This technique is used in applications like object detection and image alignment.

29. Describe the use of the Cascade Classifier in OpenCV.

Ans:

The Cascade Classifier in OpenCV is used for object detection, especially for faces. It uses a series of stages, each built from weak learners, so that most non-object regions are rejected early. These stages are trained using positive and negative samples. The cv2.CascadeClassifier class loads the pre-trained model. The detectMultiScale method detects objects at different scales and positions. It returns a list of rectangles where objects are detected. This method is efficient and widely used in real-time applications.

30. What is the role of the cv2.VideoCapture function in OpenCV?

Ans:

The cv2.VideoCapture function in OpenCV is used to capture video from a file or a camera. It initializes the video capture object, allowing access to frames. To capture from a camera, pass the camera index (usually 0 for the default camera). To capture from video files, provide the file path. The read method retrieves frames from the video source. This function is essential for tasks like video processing, real-time object detection, and motion analysis.

31. How can OpenCV be utilized to read and write images?

Ans:

- To read an image in OpenCV, call cv2.imread('image_path'), which returns a NumPy array representing the image, or None if the file cannot be read.

- To write an image, call cv2.imwrite('output_path', image), where image is the NumPy array.

- Ensure careful handling of file paths and permissions for successful operations.

32. Explain the concept of image blending in OpenCV.

Ans:

- Image blending in OpenCV merges two images seamlessly to create a new image.

- This process involves techniques like weighted summation, in which pixel values from each image are combined based on specified weights.

- The cv2.addWeighted() function facilitates this blending by allowing adjustments in blending parameters such as alpha (weight of the first image) and beta (weight of the second image).

33. What is the use of the cv2.imread() function in OpenCV?

Ans:

OpenCV’s cv2.imread() function reads an image from a specified file path into a NumPy array. This format facilitates processing and manipulation with OpenCV’s extensive image-processing functions. It can handle several image types, such as BMP, PNG, and JPEG. This flexibility makes it easy to handle different image types and integrate with other data processing workflows. Additionally, it enables seamless integration with machine-learning models for advanced image analysis.

34. How can one perform color space conversions in OpenCV?

Ans:

Color space conversions in OpenCV are executed via the cv2.cvtColor() function. This function accepts the input image and a conversion flag (cv2.COLOR_BGR2GRAY, cv2.COLOR_BGR2HSV, etc.) to transform the image into the desired colour space. This capability is crucial for tasks involving colour information extraction, feature enhancement, and image preparation for various computer vision algorithms.

35. Describe the role of morphological operations in OpenCV.

Ans:

- Morphological operations in OpenCV are fundamental for processing images based on their shapes.

- Operations such as dilation, erosion, opening, and closing are applied to binary images to eliminate noise, isolate individual elements, and enhance features.

- These operations play a critical role in activities such as object detection, image segmentation, and image filtering, facilitating the effective manipulation and refinement of image structures.

36. How can objects be detected and tracked in a video using OpenCV?

Ans:

Object detection and tracking in videos with OpenCV involve employing techniques such as background subtraction (cv2.createBackgroundSubtractorMOG2()), object detection algorithms (e.g., Haar cascades or deep learning models), and object tracking algorithms (e.g., centroid tracking or Kalman filters). Integrating these methods enables the identification and monitoring of objects across video frames, supporting applications in surveillance, motion analysis, and automated video processing.

37. Explain the concept of background subtraction in OpenCV.

Ans:

Background subtraction in OpenCV separates foreground objects (moving objects) from the static background in a video sequence. This technique detects changes within a scene over time by generating a foreground mask that highlights areas of motion. It is instrumental in tasks such as object detection, motion tracking, and activity recognition in video surveillance and computer vision applications.

38. How is OpenCV utilized for feature matching?

Ans:

OpenCV facilitates feature matching through algorithms like ORB (Oriented FAST and Rotated BRIEF), SIFT (Scale-Invariant Feature Transform), and SURF (Speeded-Up Robust Features). These algorithms detect keypoints and compute descriptors within images; a matcher such as cv2.BFMatcher or a FLANN-based matcher then pairs descriptors to identify corresponding features across different images. Feature matching is essential for applications such as image alignment, object recognition, and image stitching in the realm of computer vision.

39. What is the role of the cv2.imshow() function in OpenCV?

Ans:

- The cv2.imshow() function in OpenCV is employed to display an image within a graphical window.

- It requires two arguments: a window name (string) and the image (NumPy array).

- This function proves invaluable during the development and debugging phases of computer vision applications, offering a convenient means to visualize image processing results, algorithm outputs, and intermediate steps in real time.

40. How is image stitching performed with OpenCV?

Ans:

- Image stitching in OpenCV involves merging multiple overlapping images to create a panoramic view.

- This process utilizes techniques such as feature detection and matching algorithms (e.g., SIFT, SURF), perspective transformation (homography), and blending techniques to merge image borders seamlessly.

- Through accurate image alignment and blending, OpenCV enables the creation of high-resolution panoramas and wide-angle views for applications ranging from photography to visual inspection.

41. Explain the concept of the convex hull in OpenCV.

Ans:

The convex hull in OpenCV is the smallest convex polygon that can enclose a set of points or a contour. It helps simplify the shape for analysis and processing. OpenCV provides the cv2.convexHull() function to compute the convex hull of a point set or contour. This concept is crucial in shape analysis, object detection, and gesture recognition tasks, where understanding the outermost boundary of a shape or object is essential.

42. How is camera calibration performed using OpenCV?

Ans:

Camera calibration in OpenCV involves estimating the intrinsic and extrinsic parameters of a camera to correct distortions and accurately map pixel coordinates to real-world coordinates. This process typically requires capturing images of a calibration pattern, such as a checkerboard, from various angles and distances. OpenCV provides functions like cv2.findChessboardCorners() for corner detection and cv2.calibrateCamera() to compute camera matrix and distortion coefficients.

43. What is the purpose of the cv2.waitKey() function in OpenCV?

Ans:

- The cv2.waitKey() function in OpenCV is used to introduce a delay and capture user-generated events when displaying images or videos.

- It waits for a key event for a specified number of milliseconds (a value of 0 waits indefinitely). If a key is pressed during this time, it returns the key code; otherwise it returns -1.

- This function is crucial for interactive applications where user input, such as closing a window or progressing to the next frame, needs to be handled synchronously with image display.

44. How are geometric transformations performed in OpenCV?

Ans:

- Geometric transformations in OpenCV involve operations like translation, rotation, scaling, and affine transformations on images.

- These transformations are achieved using functions such as cv2.warpAffine() for affine transformations and cv2.warpPerspective() for perspective transformations.

- These operations are fundamental for tasks like image registration, alignment, and correcting perspective distortion in images or videos.

45. Describe the process of face recognition using OpenCV.

Ans:

Face recognition in OpenCV typically involves detecting faces using algorithms like Haar cascades or deep learning-based methods, followed by feature extraction and matching. OpenCV’s face module (part of opencv_contrib) provides recognizers such as LBPH (Local Binary Patterns Histograms) and Eigenfaces, which are trained on labelled face images. The process includes detecting facial landmarks, encoding features, and comparing them against a database of known identities. Face recognition finds applications in security systems, access control, and personalized user experiences.

46. What is the use of the cv2.filter2D() function in OpenCV?

Ans:

The cv2.filter2D() function in OpenCV is used to perform custom spatial filtering on images. It applies an arbitrary linear filter to an image, allowing users to define a kernel that specifies how neighbouring pixels influence each other. This function is essential for tasks such as image sharpening, blurring, edge detection, and noise reduction. By applying convolution operations defined by the kernel, cv2.filter2D() enables precise control over image enhancements and feature extraction in computer vision applications.

47. How is image sharpening typically performed using OpenCV?

Ans:

- Image sharpening in OpenCV can be achieved using techniques like the Laplacian operator or unsharp masking.

- The cv2.filter2D() function is often used with specific kernels, such as the Laplacian or Gaussian derivative, to enhance edges and details in an image.

- Additionally, functions like cv2.addWeighted() combine the original image with its sharpened version to achieve the desired results.

48. Explain the concept of optical flow in OpenCV.

Ans:

- Optical flow in OpenCV refers to the pattern of apparent motion of objects between consecutive frames in a sequence. It estimates the velocity field of pixel movements by tracking feature points or dense motion vectors across frames.

- OpenCV provides algorithms like Lucas-Kanade and Dense Optical Flow methods to compute optical flow.

- This concept is essential for motion analysis, object tracking, video stabilization, and understanding dynamic scenes in applications such as surveillance, robotics, and autonomous driving.

49. How is histogram analysis performed in OpenCV?

Ans:

With OpenCV, histogram analysis entails calculating and displaying the distribution of pixel intensities in a picture. Functions like cv2.calcHist() calculate histograms for grayscale or colour images, which represent the frequency of intensity values across different bins. Histogram equalization techniques like cv2.equalizeHist() adjust image contrast by redistributing pixel intensities. Histogram analysis is vital for image enhancement, thresholding, and understanding image characteristics in fields like medical imaging, remote sensing, and quality inspection.

50. What is the role of the cv2.resize() function in OpenCV?

Ans:

The cv2.resize() function in OpenCV resizes images to a specified width and height or scale factor. It allows scaling images up or down, maintaining the aspect ratio or adjusting as needed. This function is essential for preparing images for processing, display, or storage in computer vision applications. cv2.resize() supports various interpolation methods like linear, cubic, or nearest neighbour for resizing, ensuring flexibility and quality in resizing operations.

51. How can perspective transformation be performed in OpenCV?

Ans:

- Perspective transformation in OpenCV involves using the cv2.getPerspectiveTransform() function to define a transformation matrix based on four source points and their corresponding destination points.

- This matrix is applied using cv2.warpPerspective() to warp the image, adjusting the perspective to correct distortion or change viewpoint, such as in rectifying images for further processing like OCR or object detection.

52. Describe the process of motion detection using OpenCV.

Ans:

- Motion detection in OpenCV typically involves background subtraction techniques like cv2.createBackgroundSubtractorMOG2() to isolate moving objects from the background.

- Changes in pixel values between successive frames yield a foreground mask. Functions like cv2.findContours() locate the moving regions in that mask, and cv2.rectangle() draws bounding boxes around them, which supports real-time applications like surveillance or object tracking systems.

53. How is OpenCV used for OCR (Optical Character Recognition)?

Ans:

OpenCV for OCR involves preprocessing images using functions like cv2.cvtColor() to convert to grayscale and cv2.threshold() for binarization, enhancing text contrast for better recognition. Utilizing libraries like Tesseract OCR integrated with OpenCV (pytesseract) allows text to be extracted from images or video frames. This process enables automated text extraction from documents, video streams, or real-world scenarios, supporting applications in automated data entry, document digitization, and accessibility tools.

54. Explain the concept of corner detection in OpenCV.

Ans:

Corner detection in OpenCV identifies points in an image where two edges meet, indicating significant changes in brightness or intensity. Methods like Harris corner detection (cv2.cornerHarris()) compute a corner response for each pixel, identifying regions with high responses as corners. These points are crucial for feature matching, object tracking, and geometric transformation tasks in computer vision applications such as augmented reality, image stitching, and 3D reconstruction.

55. How is OpenCV used for 3D reconstruction?

Ans:

- 3D reconstruction with OpenCV involves capturing stereo images or using multiple viewpoints to create a depth map (cv2.StereoBM_create() or cv2.StereoSGBM_create()).

- Using camera calibration (cv2.calibrateCamera()) and triangulation techniques (cv2.triangulatePoints()), 3D coordinates of points are computed and visualized using libraries like matplotlib or rendered into 3D models.

- This process supports applications in robotics, medical imaging, and virtual reality, enabling spatial perception from 2D images.

56. What is the use of the cv2.cvtColor() function in OpenCV?

Ans:

- The cv2.cvtColor() function in OpenCV converts an image from one colour space to another, such as BGR, RGB, HSV, or grayscale.

- This function helps standardize image inputs for processing algorithms and enhance colour-based segmentation, feature extraction, and visualization tasks in computer vision applications such as object detection, image enhancement, and medical imaging.

57. How can image rotation be performed using OpenCV?

Ans:

Image rotation in OpenCV is achieved using the cv2.getRotationMatrix2D() function to create a rotation matrix based on a specified rotation angle and centre of rotation. Applying this matrix with cv2.warpAffine() rotates the image around its centre. This technique is essential for correcting image orientation, augmenting training datasets for machine learning, and aligning images in panoramic stitching and geometric transformations.

58. Explain the concept of image dilation in OpenCV.

Ans:

Image dilation in OpenCV is a morphological operation that expands or thickens the boundaries of objects in binary or grayscale images. It uses a structuring element (cv2.getStructuringElement()) to probe and enlarge regions where pixel values meet specific criteria. This technique is valuable for noise reduction, enhancing object connectivity, and feature extraction in applications like image segmentation, text recognition, and fingerprint analysis.

59. What is image erosion in OpenCV, and how is it used?

Ans:

- Image erosion in OpenCV is a morphological operation that shrinks or thins the boundaries of objects in binary or grayscale images.

- It slides a structuring element over the image and keeps a pixel only where the element fits entirely within the foreground, so pixels along object boundaries are removed.

- Erosion is employed in applications like separating touching objects, extracting thin lines or text features, and preparing masks for further morphological processing or shape analysis.

60. How can image blurring be performed using OpenCV?

Ans:

- Image blurring in OpenCV involves applying smoothing filters (cv2.blur(), cv2.GaussianBlur(), cv2.medianBlur(), etc.) to reduce noise and detail in images.

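- Linear filters such as cv2.blur() and cv2.GaussianBlur() convolve the image with a kernel, taking a plain or weighted average of neighbouring pixels according to the kernel size and type; cv2.medianBlur() is non-linear and replaces each pixel with the median of its neighbourhood.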

- Blurring is essential for preprocessing noisy images, reducing high-frequency components, and improving performance in tasks like edge detection, feature extraction, and image segmentation.

61. Describe the use of the cv2.GaussianBlur() function in OpenCV.

Ans:

The cv2.GaussianBlur() function in OpenCV applies a Gaussian filter to smooth images, reducing noise and detail. It takes the input image, the kernel size (width and height, both positive and odd), and the standard deviations in the X and Y directions, which control the amount of blurring. This function is commonly used as a preprocessing step before tasks like edge detection, because it suppresses noise that would otherwise corrupt the image gradients.

62. How can circles be detected in an image using OpenCV?

Ans:

Circle detection in OpenCV is performed using the Hough Circle Transform implemented in the cv2.HoughCircles() function. This method works on an 8-bit grayscale image and identifies circles from edge information, requiring parameters such as the image, detection method, accumulator resolution factor (dp), minimum distance between detected centres, and minimum and maximum radii of the circles. It returns an array of detected circles, each given as centre coordinates and radius (x, y, r), or None in Python when no circle is found.

63. What is the role of the cv2.Canny() function in OpenCV?

Ans:

- The cv2.Canny() function is crucial for edge detection in images. It applies the Canny edge detection algorithm to identify significant changes in intensity and mark them as edges.

- The parameters include the input image, minimum and maximum thresholds for edge detection, and an optional aperture size for gradient computation.

- This function outputs a binary image where detected edges are marked with white pixels on a black background.

64. How do you use OpenCV for real-time face recognition?

Ans:

Real-time face recognition with OpenCV typically involves:

- Detecting faces using methods like Haar cascades or deep learning-based detectors.

- Preprocessing detected faces (e.g., normalization).

- Extracting facial features or using a pre-trained model for feature extraction.

- Comparing these features against a database using techniques like Euclidean distance or deep learning classifiers.

65. Explain the concept of image histograms in OpenCV.

Ans:

Image histograms in OpenCV represent the distribution of pixel intensities in an image. The cv2.calcHist() function computes the histogram; when it is plotted, intensity values lie along the horizontal axis and their frequency of occurrence along the vertical axis. Histograms help analyze image contrast, brightness, and dynamic range, aiding tasks like image enhancement and thresholding. They are essential in image processing for understanding and manipulating pixel intensity distributions.

66. How do you perform contour approximation with OpenCV?

Ans:

Contour approximation in OpenCV simplifies complex contours while preserving their shape. The cv2.approxPolyDP() function approximates contours using the Douglas-Peucker algorithm, requiring parameters such as the contour to approximate, epsilon (maximum distance from contour to approximated contour), and a Boolean flag for closed contours. This method reduces the number of vertices in a contour representation, enabling efficient shape analysis and recognition in tasks like object detection.

67. What is the role of the cv2.boundingRect() function in OpenCV?

Ans:

- The cv2.boundingRect() function computes the bounding rectangle for a contour in OpenCV.

- It takes the contour as input and returns the coordinates (x, y) of the top-left corner, the width, and the height of the bounding rectangle.

- This rectangle encapsulates the entire contour, providing valuable geometric information used in object localization, image cropping, and feature extraction tasks.

- It is fundamental in various computer vision applications for defining regions of interest and simplifying subsequent image processing steps.

68. How can affine transformations be performed in OpenCV?

Ans:

- Affine transformations in OpenCV, such as scaling, rotation, translation, and shearing, are executed using the cv2.warpAffine() function.

- This function applies a specified transformation matrix to an image, defined by parameters such as the input image, transformation matrix, output image size, and interpolation method.

- Affine transformations preserve straight lines, parallelism, and ratios of distances along a line, but not necessarily lengths or angles. This makes them suitable for tasks like image registration, alignment, and geometric correction in computer vision and image processing applications.

69. Describe the use of the cv2.bitwise_and() function in OpenCV.

Ans:

The cv2.bitwise_and() function in OpenCV performs a bitwise AND operation between two images, element by element. It takes the input images and an optional mask as parameters; each output value is the AND of the corresponding bits of the two inputs, and pixels where the mask is zero are not computed, so they stay at zero in a newly created output. For 0/255 binary images this means a pixel is white only if it is white in both inputs and lies inside the mask. This function is essential for tasks like image masking, selective filtering, and region-of-interest operations, enabling precise control over image processing operations based on pixel values.

70. How does one apply a mask to an image in OpenCV?

Ans:

Applying a mask to an image in OpenCV involves using the cv2.bitwise_and() function with the image and mask as inputs. The mask, typically a binary image with white pixels indicating regions of interest and black pixels for areas to be ignored, is bitwise ANDed with the input image. This operation results in an output image where pixels outside the mask are set to zero, effectively masking out unwanted regions and focusing processing on specific areas of interest.

71. What is the role of the cv2.dilate() function in OpenCV?

Ans:

- The cv2.dilate() function in OpenCV is used for morphological dilation, which expands the boundaries of foreground objects in an image.

- It works by adding pixels to the boundaries of objects, making them thicker and filling in small gaps.

- This function is essential for tasks like closing small holes, enhancing objects’ features, and joining broken parts; combined with erosion (morphological opening or closing), it also helps remove noise.

72. How is OpenCV used for gesture recognition?

Ans:

Gesture recognition with OpenCV involves:

- Capturing live video frames.

- Preprocessing them to isolate the hand or relevant parts.

- Extracting features such as contours or key points.

- Classifying gestures with machine learning or pattern recognition algorithms.

Techniques like background subtraction, skin colour detection, and morphological operations are used for accurate hand segmentation, followed by gesture classification based on predefined models or algorithms.

73. Explain the concept of the distance transform in OpenCV.

Ans:

The distance transform in OpenCV calculates the shortest distance from every pixel to a set of background pixels. It assigns each pixel a value representing its distance to the nearest background pixel, enabling analysis of shape and size properties. This transformation is crucial for tasks like shape matching, skeletonization, and analyzing object boundaries in image processing and computer vision applications.

74. How is image skeletonization performed with OpenCV?

Ans:

- Image skeletonization in OpenCV involves thinning the foreground objects in binary images to their skeleton representation, preserving the essential structure while reducing thickness.

- Techniques like morphological operations, distance transforms, and pruning are used iteratively to achieve this.

- Skeletonization is helpful for shape analysis, pattern recognition, and feature extraction tasks in various computer vision applications.

75. What is the use of the cv2.findContours() function in OpenCV?

Ans:

- The cv2.findContours() function identifies and extracts contours from binary images, which are typically produced by thresholding or edge detection.

- Contours are curves joining continuous points with the same colour or intensity.

- This function is fundamental for shape analysis, object detection, and image segmentation tasks.

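- It returns a list of contours together with their hierarchy. The contours can then be passed to functions such as cv2.approxPolyDP(), cv2.contourArea(), cv2.arcLength(), and cv2.boundingRect() to approximate them and compute their area, perimeter, and bounding boxes.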

76. How is image segmentation performed using GrabCut in OpenCV?

Ans:

GrabCut segmentation in OpenCV, available through cv2.grabCut(), initializes with a bounding box around the object of interest and iteratively refines the segmentation by modelling the colour distributions of foreground and background. It uses graph cuts to partition images into foreground and background regions. This method is effective for interactive image segmentation, where user input guides the segmentation process, making it useful for applications like object extraction and image editing.

77. Describe the role of cv2.erode() in image processing.

Ans:

The cv2.erode() function in OpenCV is used for morphological erosion, which reduces the boundaries of foreground objects in binary images. It works by eroding the pixels at the edges of objects, making them thinner or breaking them apart. This function is essential for tasks like noise reduction, separating connected objects, and shrinking object boundaries.

78. How can OpenCV be utilized for detecting barcodes and QR codes?

Ans:

Barcode and QR code detection with OpenCV starts by preprocessing images to improve contrast and reduce noise. Techniques like edge detection, contour detection, image thresholding, and pattern matching then locate candidate regions, and these regions are decoded with OpenCV's cv2.QRCodeDetector class or a specialized library. This makes the approach suitable for applications in logistics, retail, and mobile apps.

79. What is the purpose of the cv2.pyrUp() and cv2.pyrDown() functions?

Ans:

- The cv2.pyrUp() and cv2.pyrDown() functions in OpenCV are used for image pyramid operations. cv2.pyrDown() reduces the image resolution, making it smaller by smoothing and subsampling.

- cv2.pyrUp() increases the image size by upsampling and then applying a smoothing filter; it does not restore detail that cv2.pyrDown() discarded.

- These functions are used for hierarchical multi-scale image processing tasks like image blending, texture analysis, and feature detection, improving computational efficiency and performance.

80. How can one implement k-means clustering for image segmentation in OpenCV?

Ans:

- Implementing k-means clustering for image segmentation in OpenCV involves reshaping the image into a list of per-pixel feature vectors and passing it to cv2.kmeans(), which initializes cluster centres, iteratively assigns pixels to the nearest centre, and updates the centres until convergence.

- This technique partitions the image into k clusters based on pixel intensity or feature similarity, facilitating tasks like colour quantization, object segmentation, and image compression in various computer vision applications.

81. Explain the concept of mean shift segmentation in OpenCV.

Ans:

Mean shift segmentation in OpenCV, available for example through cv2.pyrMeanShiftFiltering(), is a method for clustering similar pixels in an image based on their colour or intensity. It iteratively shifts the centre of each cluster towards the mean of the pixels in its neighbourhood until convergence, effectively grouping pixels into segments. This technique is beneficial for segmenting images with varying lighting conditions and backgrounds, providing robust segmentation results.

82. How is noise reduction typically performed in images using OpenCV?

Ans:

Noise reduction in images using OpenCV involves techniques like Gaussian blurring, median filtering, or bilateral filtering. Gaussian blurring smooths images by convolving with a Gaussian kernel, reducing high-frequency noise. Median filtering replaces each pixel’s value with the median of neighbouring pixels, which is effective for salt-and-pepper noise. Bilateral filtering preserves edges while reducing noise by considering both spatial and intensity differences.

83. What is the use of the cv2.HoughLines() function in OpenCV?

Ans:

- The cv2.HoughLines() function in OpenCV detects straight lines in an image using the Hough Transform technique.

- It maps edge points to lines in the parameter space (rho, theta), where rho is the perpendicular distance from the origin to the line and theta is the angle between the x-axis and the line's normal.

- This function is valuable for line detection tasks in images, such as lane detection in autonomous driving or line extraction in medical imaging.

84. How can text be detected and recognized in images using OpenCV and Tesseract?

Ans:

- Text detection and recognition using OpenCV and Tesseract involves first detecting text regions using techniques like contour detection or the EAST text detector.

- Extracted regions are then preprocessed (e.g., binarized) before being passed to the Tesseract OCR engine for text recognition.

- Tesseract converts image text into machine-readable text output, facilitating applications like document scanning, text extraction from images, or real-time text recognition in videos.

85. Describe the process of video stabilization using OpenCV.

Ans:

Video stabilization in OpenCV involves detecting feature points (e.g., corners) in successive frames using algorithms like Shi-Tomasi or FAST. Corresponding points are matched across frames using feature descriptors (e.g., SIFT, ORB). Transformation matrices are computed to align frames based on matched points, and frames are warped to stabilize motion. This process reduces camera shake and smooths video output, which is crucial for enhancing video quality in applications like surveillance or action cameras.

86. How is face alignment typically performed using OpenCV?

Ans:

Face alignment in OpenCV aligns detected face landmarks (e.g., eyes, nose) to a canonical form for analysis or recognition tasks. It involves detecting facial landmarks using techniques like the Dlib library or Haar cascades. Landmark points are used to compute affine or perspective transformations, correcting head pose variations. Face alignment ensures consistent facial feature positioning across images, enhancing accuracy in applications like facial recognition or emotion detection.

87. What is the role of the cv2.addWeighted() function in OpenCV?

Ans:

- The cv2.addWeighted() function in OpenCV blends two images or arrays using weighted sums.

- It computes a weighted sum of input arrays where weights control the contribution of each array to the output.

- This function is commonly used for image blending, contrast adjustment, or creating special effects in images by controlling transparency or intensity levels between images.

88. How can multi-object tracking be performed using OpenCV?

Ans:

- Multi-object tracking with OpenCV involves detecting objects using techniques like background subtraction, contour detection, or deep learning-based object detection models (e.g., YOLO, SSD).

- Detected objects are tracked across frames using algorithms like the Kalman filter, MeanShift, or CAMShift.

- Tracking algorithms predict object positions, handle occlusions, and update object states over time.

- This process is essential for real-time applications like surveillance, traffic monitoring, or human-computer interaction.

89. Explain the use of Haar cascades for object detection in OpenCV.

Ans:

Haar cascades in OpenCV are classifiers used for rapid object detection based on Haar-like features. They utilize a cascade of classifiers trained to detect specific objects or features (e.g., faces, eyes) by analyzing image regions at multiple scales. Haar cascades are efficient for real-time applications because the early stages of the cascade quickly reject most background regions, making them suitable for tasks like face detection and as a first step in facial recognition, gesture detection, or object tracking pipelines.

90. How can filters be applied to images using OpenCV?

Ans:

Applying filters to images in OpenCV involves using functions like cv2.filter2D() for custom kernel-based filtering, cv2.GaussianBlur() for Gaussian smoothing, cv2.medianBlur() for median filtering, and cv2.bilateralFilter() for bilateral filtering. Filters modify image pixels to achieve effects such as noise reduction, edge detection, or image enhancement. Parameters like kernel size and filter type are adjusted to control the filter’s impact on image characteristics, ensuring desired visual outcomes in image processing applications.

91. Describe the use of the cv2.threshold() function in OpenCV.

Ans:

- The cv2.threshold() function in OpenCV is used for image thresholding, which separates regions of an image based on pixel intensity values.

- It takes an input image, a threshold value, a maximum value, and a thresholding type. With binary thresholding, pixels above the threshold are set to the maximum value (foreground) and all other pixels are set to zero (background).

- Various thresholding types, such as binary, inverse binary, and truncation, can be specified.

- Thresholding is vital for image segmentation, object detection, and creating binary images for further processing.

92. How do you perform edge detection using the Sobel operator in OpenCV?

Ans:

- Edge detection with the Sobel operator in OpenCV involves applying Sobel kernels (vertical and horizontal gradients) to an image using cv2.Sobel().

- This calculates gradients in both directions, highlighting changes in intensity that correspond to edges.

- After applying the Sobel filters, the horizontal and vertical gradients are combined into a gradient magnitude, whose large values mark edges.

- Adjusting kernel size and handling image noise improves edge detection accuracy. Sobel edge detection is fundamental in computer vision for feature extraction, object recognition, and image enhancement tasks.

93. What is the role of the cv2.Laplacian() function in OpenCV?

Ans:

The cv2.Laplacian() function computes the Laplacian of an image, the sum of its second derivatives in x and y, which responds to rapid changes in pixel intensity. It highlights regions where intensity changes abruptly, irrespective of direction. This function is pivotal for edge detection, image sharpening, and feature extraction. Because second derivatives amplify noise, smoothing the image first and choosing a suitable kernel size improve the accuracy of Laplacian edge detection. Combining the Laplacian with other techniques like thresholding and morphology yields effective image analysis results.

94. How do you create a panoramic image using OpenCV?

Ans:

Creating a panoramic image in OpenCV involves several steps. First, detect key points and descriptors in the images using algorithms like SIFT or ORB. Then, match key points between consecutive images. Estimate the homography matrix with cv2.findHomography() and RANSAC to align the images. Finally, warp the images with cv2.warpPerspective() and merge them with techniques like feathering or multi-band blending to create a seamless panoramic image.

95. Explain the concept of line detection using the Probabilistic Hough Line Transform in OpenCV.

Ans:

The Probabilistic Hough Line Transform in OpenCV, implemented in cv2.HoughLinesP(), detects straight line segments in images. Instead of letting every edge point vote, it votes with a random subset of points and then traces each detected line to find its endpoints. This method reduces computational complexity compared to the standard Hough Transform and returns segments as endpoint coordinates rather than (rho, theta) pairs. Parameters like minimum line length and maximum gap between line segments refine line detection.