Multithreading is a programming technique where multiple threads within a process execute independently, allowing concurrent execution of tasks. It enables efficient utilization of modern multicore processors by dividing tasks to run simultaneously, improving application responsiveness and performance. However, it requires careful management of shared resources to avoid issues like race conditions and deadlocks.

1. What is the happens-before relationship in Java concurrency?

Ans:

The happens-before relationship, defined by the Java Memory Model, specifies when the effects of one action are guaranteed to be visible to another. If action A happens-before action B, then the results of A are visible to B and A is ordered before B. It is a guarantee about visibility and ordering, not about wall-clock time: without such a relationship, one thread may never observe another thread's writes. Happens-before edges are created by, for example, releasing and then acquiring the same lock, writing and then reading the same `volatile` field, and calling `Thread.start()` or `Thread.join()`.
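
As a rough illustration, the sketch below uses a `volatile` flag to publish an ordinary field from one thread to another. The class and method names (`HappensBeforeSketch`, `publish`, `awaitPayload`) are made up for this example.

```java
final class HappensBeforeSketch {
    private int payload;            // plain field, not volatile
    private volatile boolean ready; // the volatile write/read pair creates the happens-before edge

    void publish(int value) {
        payload = value; // (1) ordinary write
        ready = true;    // (2) volatile write: publishes everything written before it
    }

    int awaitPayload() {
        while (!ready) {         // (3) volatile read: spins until it observes (2)
            Thread.onSpinWait(); // hint to the runtime that this is a busy-wait loop
        }
        return payload;          // (4) guaranteed to observe the write from (1)
    }

    static int demo() {
        HappensBeforeSketch box = new HappensBeforeSketch();
        Thread writer = new Thread(() -> box.publish(42));
        writer.start();
        return box.awaitPayload();
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

If `ready` were not `volatile`, the reader would have no guarantee of ever seeing the flag change, or of seeing the payload written before it.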

2. Define atomicity in multithreading.

Ans:

- The term “atomicity” describes an operation’s ability to finish completely or not at all, with no intermediate state that is visible to other threads.

- Atomic operations in multithreading are indivisible, which means that other threads can never observe them half-finished.

- In concurrent programming, atomicity is essential for guaranteeing accuracy and consistency.

3. How can you achieve thread safety without using synchronization?

Ans:

Thread safety can be achieved without synchronization through several strategies, including thread-local variables, immutable objects, and the atomic classes in the `java.util.concurrent.atomic` package. Atomic classes offer operations such as compare-and-swap, so updates to shared data remain atomic even without explicit locking. Immutable objects are intrinsically thread-safe because, once created, they cannot be altered.
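
A minimal sketch of the compare-and-swap approach, assuming a simple shared counter. `AtomicCounterSketch` and `runDemo` are illustrative names; in practice `AtomicInteger.incrementAndGet()` does the same job without a hand-written loop.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class AtomicCounterSketch {
    private final AtomicInteger count = new AtomicInteger();

    // Explicit compare-and-swap retry loop, written out to show the mechanism.
    int increment() {
        while (true) {
            int current = count.get();
            int next = current + 1;
            if (count.compareAndSet(current, next)) {
                return next; // no other thread changed the value in between
            }
            // Another thread won the race; re-read and retry.
        }
    }

    int get() {
        return count.get();
    }

    static int runDemo(int threads, int incrementsPerThread) {
        AtomicCounterSketch counter = new AtomicCounterSketch();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.increment();
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runDemo(4, 1_000));
    }
}
```

No update is lost even though no lock is taken, because a failed `compareAndSet` simply retries with the fresh value.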

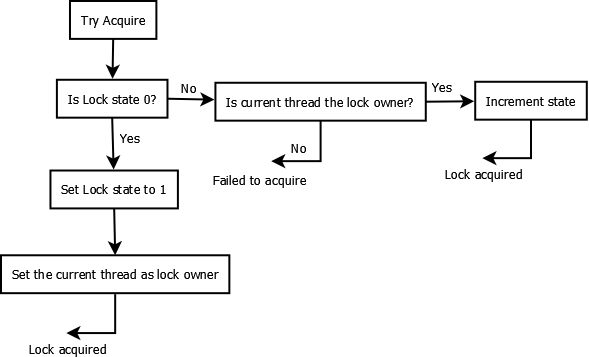

4. Explain the concept of reentrant locks.

Ans:

Reentrant locks allow a thread to acquire a lock it already holds without deadlocking on itself. A thread holding a reentrant lock can therefore enter another block or method guarded by the same lock without being blocked. The lock keeps a hold count, so it must be released as many times as it was acquired. This makes nested and recursive calls that need the same lock safe.
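
The nested acquisition can be sketched with `ReentrantLock`, whose `getHoldCount()` reports how many times the current thread holds the lock. The class `ReentrancySketch` and its methods are hypothetical.

```java
import java.util.concurrent.locks.ReentrantLock;

final class ReentrancySketch {
    private final ReentrantLock lock = new ReentrantLock();

    // Acquires the lock, then calls a method that acquires it again.
    int outer() {
        lock.lock();
        try {
            return inner();
        } finally {
            lock.unlock();
        }
    }

    private int inner() {
        lock.lock(); // does not block: this thread already owns the lock
        try {
            return lock.getHoldCount(); // number of holds by the current thread
        } finally {
            lock.unlock();
        }
    }

    boolean isFree() {
        return !lock.isLocked();
    }

    public static void main(String[] args) {
        ReentrancySketch sketch = new ReentrancySketch();
        System.out.println("hold count inside inner(): " + sketch.outer());
        System.out.println("free afterwards: " + sketch.isFree());
    }
}
```

Each `lock()` is paired with an `unlock()` in a `finally` block, so the lock is fully released once the outermost call returns.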

5. What are the advantages and disadvantages of using synchronized methods?

Ans:

- Simplicity (advantage): Synchronized methods are an easy way to protect shared resources from concurrent access.

- Built-in mechanism (advantage): Java provides the `synchronized` keyword, so no extra library or lock object is needed to implement thread safety.

- Blocking (disadvantage): A synchronized method holds its lock for the whole method body, so other threads may be made to wait needlessly, which can cause performance problems.

6. Describe Amdahl’s Law and its relevance to multithreading.

Ans:

Amdahl’s Law is a formula for the theoretical maximum speedup a system can gain from parallelization. It states that the fraction of a program that cannot be parallelized limits how much faster the program can run, no matter how many processors are used. In the context of multithreading, Amdahl’s Law emphasizes the importance of identifying and shrinking a program’s sequential sections in order to maximize the gains from parallel execution.
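
In its usual form the law reads speedup = 1 / ((1 - p) + p / n), where p is the parallelizable fraction of the program and n is the number of processors. The small helper below, with the made-up name `AmdahlSketch.speedup`, evaluates that formula for a few processor counts.

```java
final class AmdahlSketch {
    // speedup = 1 / ((1 - p) + p / n)
    static double speedup(double parallelFraction, int processors) {
        if (parallelFraction < 0.0 || parallelFraction > 1.0 || processors < 1) {
            throw new IllegalArgumentException("need 0 <= p <= 1 and n >= 1");
        }
        double serialFraction = 1.0 - parallelFraction;
        return 1.0 / (serialFraction + parallelFraction / processors);
    }

    public static void main(String[] args) {
        // With 90% of the work parallelizable, the speedup can never reach 10x.
        for (int n : new int[] {1, 2, 4, 8, 16, 1024}) {
            System.out.printf("n=%d speedup=%.2f%n", n, speedup(0.9, n));
        }
    }
}
```

As n grows, the result approaches 1 / (1 - p), which is why the sequential part dominates at high core counts.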

7. What is thread-local storage (TLS)?

Ans:

Thread-local storage (TLS) is a mechanism that gives each thread its own copy of a variable. Modifications made by one thread therefore never affect the value seen by other threads. Thread-local variables are usually employed when many threads need to read and modify data independently without incurring synchronization overhead.
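
In Java this is exposed through the `ThreadLocal` class. The following sketch, with the illustrative names `ThreadLocalSketch`, `bump`, and `demo`, shows two threads incrementing what looks like one variable while each sees only its own copy.

```java
final class ThreadLocalSketch {
    // Each thread that touches COUNTER gets its own value, initialized to 0.
    private static final ThreadLocal<Integer> COUNTER = ThreadLocal.withInitial(() -> 0);

    static int bump() {
        int next = COUNTER.get() + 1;
        COUNTER.set(next);
        return next;
    }

    // Returns {value seen by the calling thread, value seen by the worker thread}.
    static int[] demo() {
        COUNTER.remove(); // start the calling thread from the initial value
        bump();
        bump();           // calling thread's copy is now 2

        int[] seenByWorker = new int[1];
        Thread worker = new Thread(() -> seenByWorker[0] = bump()); // worker's copy starts at 0
        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new int[] {COUNTER.get(), seenByWorker[0]};
    }

    public static void main(String[] args) {
        int[] result = demo();
        System.out.println("caller=" + result[0] + " worker=" + result[1]);
    }
}
```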

8. Explain the concept of thread starvation.

Ans:

- Thread starvation occurs when a thread is repeatedly denied shared resources or CPU time because other threads, often higher-priority ones, keep taking them.

- The starved thread may be unable to make progress, which degrades performance and, in severe cases, can leave part of the application effectively stalled.

9. What are concurrent collections in Java? Provide examples.

Ans:

- Thread-safe variants of normal Java collections, known as concurrent collections, enable concurrent access by numerous threads without requiring external synchronization.

- `CopyOnWriteArrayList`, `ConcurrentLinkedQueue`, and `ConcurrentHashMap` are a few examples.

- For concurrent programming, these collections offer high-performance substitutes for synchronized collections.
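
As a small sketch of such a collection in use, the example below counts words from several threads with `ConcurrentHashMap.merge`, which updates each entry atomically. The class `WordCountSketch` and the way the input is split across threads are assumptions made for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

final class WordCountSketch {
    static Map<String, Integer> count(String[] words, int threads) {
        ConcurrentHashMap<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int offset = t;
            workers[t] = new Thread(() -> {
                // Each worker handles every threads-th word, starting at its own offset.
                for (int i = offset; i < words.length; i += threads) {
                    counts.merge(words[i], 1, Integer::sum); // atomic per-key update
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        System.out.println(count(new String[] {"a", "b", "a", "c", "a", "b"}, 3));
    }
}
```

No external `synchronized` block is needed around the map, even though several threads may update the same key.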

10. Differentiate between fair and unfair locks.

Ans:

| Feature | Fair Lock | Unfair Lock |
|---|---|---|
| Order | Grants the lock in first-come, first-served order | Does not guarantee order of access |
| Waiting | Queues threads in order of request | Allows a newly arriving thread to acquire the lock ahead of waiting threads (barging) |
| Performance | May introduce more overhead | Typically faster due to less overhead |
| Usage | Suitable when fairness is crucial, for example to prevent starvation | Preferred for maximizing throughput |
| Implementation | `new ReentrantLock(true)` | `new ReentrantLock()` (the default) or `synchronized` |
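
With `ReentrantLock`, the choice between the two policies is a constructor argument. A minimal sketch, using the hypothetical holder class `FairnessSketch`:

```java
import java.util.concurrent.locks.ReentrantLock;

final class FairnessSketch {
    // true requests the fair policy: the longest-waiting thread is favored.
    static final ReentrantLock FAIR = new ReentrantLock(true);

    // The no-argument constructor creates a non-fair lock.
    static final ReentrantLock UNFAIR = new ReentrantLock();

    public static void main(String[] args) {
        System.out.println("FAIR.isFair()   = " + FAIR.isFair());
        System.out.println("UNFAIR.isFair() = " + UNFAIR.isFair());
    }
}
```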

11. When is it appropriate to use a `ReadWriteLock` in a multithreaded application?

Ans:

A `ReadWriteLock` is appropriate when a data structure is read far more often than it is updated. Any number of threads can hold the read lock at once, but only one thread can hold the write lock, and only while no readers are active. This improves performance by reducing contention among readers while preserving thread safety for writers. It fits best when reads greatly outnumber writes and read operations do not change the shared data.
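
A read-mostly cache is the typical case. The sketch below guards a plain `HashMap` with a `ReentrantReadWriteLock`; the class name `ReadMostlyCache` is an assumption for the example.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

final class ReadMostlyCache {
    private final Map<String, String> data = new HashMap<>();
    private final ReadWriteLock lock = new ReentrantReadWriteLock();

    // Many threads may hold the read lock at the same time.
    String get(String key) {
        lock.readLock().lock();
        try {
            return data.get(key);
        } finally {
            lock.readLock().unlock();
        }
    }

    // The write lock is exclusive: it waits for readers and blocks new ones.
    void put(String key, String value) {
        lock.writeLock().lock();
        try {
            data.put(key, value);
        } finally {
            lock.writeLock().unlock();
        }
    }

    public static void main(String[] args) {
        ReadMostlyCache cache = new ReadMostlyCache();
        cache.put("region", "eu-west");
        System.out.println(cache.get("region"));
    }
}
```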

12. How do you implement a thread-safe singleton in Java?

Ans:

- Eager Initialization: Create the singleton instance as soon as the class loads; class initialization is thread-safe.

- Lazy Initialization (with synchronization): To guarantee thread safety, synchronize the creation method and initialize the singleton instance lazily when it is first requested.

- Double-Checked Locking: Check whether the instance exists both before and after entering a synchronized block, so that the lock is taken only while the instance is being created. The instance field must be declared `volatile` for this to be correct.

- Enum Singleton: Implement the singleton as an enum with a single constant, since enum constants are created once, in a thread-safe way, by the JVM.
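
Two of these approaches are sketched below: double-checked locking with a `volatile` field, and the enum form. `ConfigRegistry` and `EnumRegistry` are placeholder names.

```java
final class ConfigRegistry {
    // volatile ensures a fully constructed instance is visible to all threads.
    private static volatile ConfigRegistry instance;

    private ConfigRegistry() {
    }

    static ConfigRegistry getInstance() {
        ConfigRegistry local = instance;          // first check, no lock
        if (local == null) {
            synchronized (ConfigRegistry.class) {
                local = instance;                 // second check, under the lock
                if (local == null) {
                    local = new ConfigRegistry();
                    instance = local;
                }
            }
        }
        return local;
    }
}

// Enum-based alternative: the JVM creates INSTANCE exactly once.
enum EnumRegistry {
    INSTANCE
}
```

After the first call, `getInstance()` returns without taking the lock, which is the point of the double check.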

13. What is the Fork-Join framework in Java used for?

Ans:

The Fork-Join framework, added in Java 7, is a concurrency mechanism for parallelizing divide-and-conquer algorithms. It works especially well for jobs that can be split into smaller, independent subtasks that are solved separately and then combined to yield the final result. The primary class for running Fork-Join tasks is `ForkJoinPool`.
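
A divide-and-conquer array sum gives a feel for the API. In this sketch, `SumTask`, `parallelSum`, and the threshold of 1,000 elements are choices made for illustration, not recommended values.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

final class SumTask extends RecursiveTask<Long> {
    private static final int THRESHOLD = 1_000; // below this size, sum sequentially
    private final long[] values;
    private final int from;
    private final int to; // exclusive

    SumTask(long[] values, int from, int to) {
        this.values = values;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from <= THRESHOLD) {
            long sum = 0;
            for (int i = from; i < to; i++) {
                sum += values[i];
            }
            return sum;
        }
        int mid = from + (to - from) / 2;
        SumTask left = new SumTask(values, from, mid);
        SumTask right = new SumTask(values, mid, to);
        left.fork();                     // run the left half asynchronously
        long rightSum = right.compute(); // compute the right half in this thread
        return left.join() + rightSum;   // combine the two results
    }

    static long parallelSum(long[] values) {
        return ForkJoinPool.commonPool().invoke(new SumTask(values, 0, values.length));
    }
}
```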

14. Describe methods for thread communication in Java.

Ans:

- Synchronization: Coordinate threads’ access to shared resources by means of synchronized blocks or methods.

- `wait()` and `notify()`: These built-in methods let a thread wait for a signal from another thread and let a thread alert waiting threads once a specific condition is fulfilled.

- Concurrent data structures: Structures like `BlockingQueue` help producer and consumer threads communicate by letting one thread wait until another has produced or consumed data.
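
The `BlockingQueue` style of communication can be sketched as a producer handing numbers to a consumer. The class `HandoffSketch` and the `POISON` end-of-stream marker are conventions invented for this example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

final class HandoffSketch {
    private static final int POISON = -1; // hypothetical marker telling the consumer to stop

    // Produces 1..items on the calling thread and sums them on a consumer thread.
    static long produceAndConsume(int items) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(4); // small bounded buffer
        long[] total = new long[1];
        Thread consumer = new Thread(() -> {
            try {
                // take() blocks while the queue is empty.
                for (int v = queue.take(); v != POISON; v = queue.take()) {
                    total[0] += v;
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        try {
            for (int i = 1; i <= items; i++) {
                queue.put(i); // put() blocks while the queue is full
            }
            queue.put(POISON);
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return total[0];
    }

    public static void main(String[] args) {
        System.out.println(produceAndConsume(100));
    }
}
```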

15. Explain the purpose of thread grouping.

Ans:

Thread grouping provides a method for organizing threads into logical units, making it easier to oversee and manage multiple threads concurrently. By grouping threads, resource access can be controlled more efficiently, and thread priorities can be established to ensure optimal performance. Thread groups also facilitate the interruption of multiple threads simultaneously, improving control over their execution.

16. How do you generate a Thread Dump in Java?

Ans:

There are several ways to create a thread dump in Java:

- Send a SIGQUIT signal to the Java process.

- Use the JDK-bundled `jstack` command-line utility.

- Use `ThreadMXBean` from the `java.lang.management` package to produce a dump programmatically.
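
The programmatic route can be sketched as follows. `ThreadDumpSketch` is a made-up wrapper; note that `ThreadInfo.toString()` prints only a limited number of stack frames per thread, so this is a rough dump rather than a replacement for `jstack`.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

final class ThreadDumpSketch {
    static String dump() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        StringBuilder out = new StringBuilder();
        // false, false: skip locked-monitor and locked-synchronizer details.
        for (ThreadInfo info : threads.dumpAllThreads(false, false)) {
            out.append(info); // thread name, state, and the top stack frames
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(dump());
    }
}
```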

17. What techniques are used to detect deadlock in Java?

Ans:

Examining thread dumps involves analyzing the state of threads to identify blocking or contention issues. Visual monitoring tools or utilities like jstack are commonly used to pinpoint threads that are blocked or contending for resources. These tools provide insights into thread states such as BLOCKED or WAITING, indicating potential deadlock scenarios where threads are stuck waiting indefinitely for resources held by others.

18. Define Livelock and provide an example.

Ans:

- Livelock is the state in which two or more threads keep reacting to each other’s actions without any of them making progress.

- An illustration would be if two people were to cross paths in a small hallway. Despite their best efforts to let the other pass, they keep getting in each other’s way.

19. What are the limitations of multithreading?

Ans:

- Potential race conditions and concurrency bugs increase complexity and make debugging harder.

- Maintaining thread safety and preventing livelocks or deadlocks is challenging.

- Synchronization and context switching add overhead.

20. How would you measure the performance of multithreaded applications?

Ans:

Measuring the performance of multithreaded applications involves several key metrics: monitoring CPU utilization to ensure efficient thread execution across cores, tracking thread synchronization overhead to minimize contention, evaluating throughput and response times under varying load conditions, assessing scalability by observing how performance scales with increasing thread counts, and analyzing resource consumption like memory and I/O usage to identify bottlenecks.

21. What are common performance bottlenecks encountered in multithreading?

Ans:

- Lock contention: the situation in which several threads vie for the same lock.

- Over-synchronization, which results in overhead and decreased parallelism.

- An uneven division of labor between the threads.

- Competition for resources, including network access or I/O.

22. Describe strategies for optimizing multithreaded code for better performance.

Ans:

- Minimize synchronization: Reduce locks and use lock-free data structures where possible.

- Use thread pooling: Reuse threads to avoid the overhead of thread creation.

- Identify and reduce contention: Balance workloads and optimize access to shared resources.

- Batch processing: Group tasks to minimize overhead from context switching.

23. What is lock contention, and how can you reduce it?

Ans:

Lock contention arises when multiple threads compete for the same lock, which reduces parallelism and can effectively serialize the work. It can be reduced in several ways:

- Fine-grained locking: Use smaller, more localized locks so that each lock protects less data and is held for less time.

- Lock-free data structures: Use data structures built on atomic operations or other non-blocking techniques to avoid locks altogether.

- Lock hierarchy: Ensure locks are acquired in a consistent order to prevent deadlocks and reduce contention.

- Read-write locks: Use separate locks for reading and writing operations to allow concurrent reads.

24. Explain the concept of thread affinity and its impact on performance.

Ans:

Thread affinity refers to the tendency of threads to be consistently scheduled on the same processor core. This can enhance performance by reducing cache misses and improving cache locality, because the data the thread works on is likely to still be in that core's cache. However, strict thread affinity can hinder scalability by limiting the system’s ability to distribute work across cores, potentially leading to load imbalance and reduced overall performance.

25. What methods can you use to identify and eliminate thread contention in Java?

Ans:

Use profiling tools to pinpoint hotspots and points of contention in multithreaded code. To reduce contention, use strategies such as lock splitting, lock striping, or lock-free algorithms. To cut down on synchronization overhead, use asynchronous I/O and non-blocking data structures.

26. Discuss the advantages of using immutable objects in multithreading.

Ans:

Immutable objects cannot change state after construction, so they are inherently thread-safe and can be shared between threads without synchronization. This removes concerns about race conditions and data corruption for those objects and makes concurrent code easier to reason about.

27. What are thread-local variables, and how do they affect performance?

Ans:

- Thread-local variables are variables that are local to each thread in a multithreaded application.

- They are stored separately for each thread and not shared among them. This can improve performance by reducing contention for shared resources and eliminating the need for synchronization mechanisms like locks.

28. How can you minimize context-switching overhead in multithreading?

Ans:

- Reduce the number of threads: Having fewer threads reduces the frequency of context switches.

- Optimize thread scheduling: Use appropriate scheduling policies and priorities to minimize unnecessary context switches.

- Utilize asynchronous I/O: Use asynchronous operations to perform I/O-bound tasks without blocking threads.

- Employ thread pooling: Reuse threads from a pool rather than creating new ones for each task, reducing overhead.

29. Describe cache coherence and its implications for multithreaded performance.

Ans:

Cache coherence refers to the consistency of shared data held in the separate caches of multiple processors or cores. Hardware coherence protocols keep those copies consistent, which is essential for correct multithreaded execution, but the protocol has a cost: when one core writes to a cache line, copies of that line in other cores' caches are invalidated and must be fetched again. Threads that frequently write to the same cache line, even to different variables that happen to share it (false sharing), therefore suffer performance degradation from repeated invalidations.

30. What are the key considerations in designing a multithreaded application?

Ans:

- Identifying concurrency opportunities: Determine which parts of the application can be parallelized to maximize performance.

- Synchronization: Properly synchronize access to shared resources to prevent data races and ensure correctness.

- Scalability: Design the application to scale well with increasing numbers of threads or processors.

- Error handling: Implement mechanisms to handle errors and exceptions in a multithreaded environment.

31. Explain best practices for designing concurrent algorithms.

Ans:

- Minimize contention: Reduce the likelihood of threads contending for shared resources by partitioning data or using lock-free algorithms.

- Use appropriate synchronization primitives: Choose the right synchronization mechanisms (e.g., locks, atomic operations) based on the algorithm’s specific requirements.

- Avoid deadlock and livelock: Design algorithms and data structures to prevent deadlock and livelock situations by carefully managing resource acquisition and release.

32. How can work be effectively partitioned among threads in a multithreaded application?

Ans:

In a multithreaded application, partitioning work among threads involves identifying tasks that can be executed concurrently and distributing them effectively. This can be achieved by breaking down the workload into smaller, independent units that different threads can handle simultaneously. Strategies such as data partitioning, where each thread operates on a distinct subset of data, or task partitioning, where different threads handle distinct parts of a larger computation or process, can be employed.

33. What are the benefits of using thread pooling?

Ans:

- Decreased overhead: Thread pooling avoids the cost of creating and destroying a thread for every task by reusing threads from a pool.

- Better scalability: By capping the number of concurrent threads and controlling resource utilization, thread pooling improves scalability.

- Resource management: Thread pools offer controls over the number of running threads, thread lifetime management, and effective job scheduling.

34. Discuss strategies for designing a scalable multithreaded system.

Ans:

- Minimize contention: By dividing up data or utilizing lock-free methods, you can lessen contention for shared resources.

- Divide the workload: To minimize bottlenecks and optimize parallelism, divide the work equally among several threads or processors.

- Employ non-blocking algorithms: To reduce synchronization overhead and contention, use non-blocking data structures and algorithms.

35. Which design patterns are commonly used in multithreading?

Ans:

- Producer-consumer: Decouples the threads that produce work items from the threads that consume them, usually through a shared queue; it is common in message-passing systems.

- Read-write lock: Optimizes read-heavy workloads by allowing many readers to access a shared resource concurrently while giving a writer exclusive access.

- Thread pool: Manages a group of worker threads that carry out asynchronous tasks, improving efficiency and resource use.

36. How do you manage shared resources in a multithreaded environment?

Ans:

Use atomic operations, locks, or other synchronization strategies to ensure that access to shared resources is properly synchronized. Reduce contention for shared resources with techniques like data partitioning and thread-local storage. Avoid data races by using thread-safe data structures or by ensuring that only one thread can modify shared data at a time.

37. Define the concept of thread confinement.

Ans:

Thread confinement is a programming technique that allows only one thread to access and modify a given piece of data or resource. Because the data stays within the execution context of a single thread, synchronization is unnecessary and the usual concurrency problems cannot arise for it. By eliminating shared state and synchronization costs, thread confinement can simplify multithreaded programming and increase performance.

38. How can you design a lock-free data structure?

Ans:

- Using atomic operations: Thread-safe modifications to shared data can be implemented without explicit locking by using atomic operations like compare-and-swap (CAS).

- Using wait-free algorithms: Create algorithms that ensure that every thread advances independently of the others, preventing any thread from being blocked indefinitely.

- Using non-blocking building blocks: Combine atomic variables with safe memory-reclamation techniques such as hazard pointers. Read-write locks, by contrast, are blocking primitives and do not make a structure lock-free.
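
The compare-and-swap approach can be sketched with a classic lock-free stack (often called a Treiber stack). This is an illustrative sketch; the class name `TreiberStack` is chosen here, and returning `null` from `pop()` on an empty stack is a simplification.

```java
import java.util.concurrent.atomic.AtomicReference;

final class TreiberStack<T> {
    private static final class Node<T> {
        final T value;
        final Node<T> next;

        Node(T value, Node<T> next) {
            this.value = value;
            this.next = next;
        }
    }

    private final AtomicReference<Node<T>> head = new AtomicReference<>();

    void push(T value) {
        Node<T> oldHead;
        Node<T> newHead;
        do {
            oldHead = head.get();
            newHead = new Node<>(value, oldHead);
        } while (!head.compareAndSet(oldHead, newHead)); // retry if another thread moved head
    }

    // Returns null when the stack is empty.
    T pop() {
        Node<T> oldHead;
        do {
            oldHead = head.get();
            if (oldHead == null) {
                return null;
            }
        } while (!head.compareAndSet(oldHead, oldHead.next)); // retry if head changed
        return oldHead.value;
    }
}
```

A thread that loses a race simply retries with the new head, so no thread ever blocks while holding a lock.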

39. What challenges are associated with designing a distributed multithreaded system?

Ans:

- Coordination and synchronization: Making sure that distributed threads running on several nodes are consistent and coordinated.

- Network communication: Controlling latency, reliability, and overhead in a distributed setting.

- Fault tolerance: Handling network partitions and node failures while keeping the system robust.

- Scalability: Creating systems that can grow to handle more nodes and threads running at once.

40. Describe techniques for testing multithreaded applications.

Ans:

- Unit testing: Create unit tests to verify how distinct parts and functions behave when used separately.

- Stress testing: Place the system under load and stress to assess its performance in situations with a lot of workload and concurrency.

- Random testing: Generate random inputs and scenarios to test various interleavings and edge cases in the application’s execution.

41. What are common pitfalls to avoid when testing multithreaded code?

Ans:

When testing multithreaded code, it’s crucial to avoid several common pitfalls. Firstly, not testing with a variety of inputs and scenarios can overlook potential race conditions or synchronization issues. Secondly, relying solely on manual testing without automated tools or frameworks can miss subtle concurrency bugs. Thirdly, insufficient stress testing under heavy loads may fail to uncover performance bottlenecks or deadlock situations.

42. How do you debug concurrency issues in multithreaded applications?

Ans:

To debug concurrency issues in multithreaded applications, the process typically begins with reviewing the code for potential race conditions and synchronization problems. Debugging tools like thread profilers or monitors are used to identify thread states and interactions. Logging thread activities and synchronization points helps trace execution paths and uncover thread-specific issues. Analyzing stack traces and thread dumps during deadlock scenarios assists in pinpointing problematic code sections.

43. What tools and techniques are commonly used for debugging multithreaded code?

Ans:

- Debuggers: Use interactive debuggers that allow multithreading to investigate thread states, set breakpoints, and examine program execution.

- Profilers: Examine the application’s performance to spot resource utilization trends, thread congestion, and bottlenecks.

- Thread sanitizers: To identify concurrency problems such as deadlocks and data races at runtime, use tools such as ThreadSanitizer.

44. How can you simulate race conditions for testing purposes?

Ans:

- Delays: To generate timing-dependent race conditions with other threads, introduce delays or sleep statements in one thread.

- Randomizing thread execution order: To reveal various interleavings and race situations, randomly arrange the threads’ execution order or add randomization to the scheduling.

- Fault injection: To test error handling and robustness, deliberately introduce race conditions or artificial faults into the code using fault injection techniques.

45. Explain stress testing and performance profiling and how they apply to multithreaded applications.

Ans:

Stress testing involves putting the program under a lot of strain or stress to assess its resilience, stability, and functionality in harsh circumstances. Stress testing in multithreaded programs simulates high concurrent activity to find any bottlenecks, race conditions, or synchronization problems. Performance profiling is the process of examining an application’s runtime behavior and performance attributes.

46. What methods are used to detect memory leaks in multithreaded code?

Ans:

- Heap Dump Analysis: Using programs like jmap or VisualVM, take application heap dumps and examine them to find items that ought to have been garbage collected but are still in memory.

- Thread Dump Analysis: Examine thread dumps to find threads that still hold references to objects, preventing those objects from being garbage collected.

- Memory Profiling Tools: Track memory use over time and spot unusual spikes or leaks with memory profiling tools.

47. Discuss best practices for logging in multithreaded applications.

Ans:

- Employ Thread-Local Logging Context: Keep a distinct logging context for every thread so that interleaved log messages from different threads can still be told apart.

- Avoid Blocking Operations: Logging frameworks such as Logback or Log4j offer asynchronous appenders that offload logging to separate threads, which reduces the impact on application performance.

- Use Log Levels Sensibly: Select log levels carefully, taking into account the severity of the message, to reduce excessive logging overhead.

48. How can you monitor thread activity and resource utilization in Java?

Ans:

In Java, thread activity and resource utilization can be monitored using tools like Java Mission Control (JMC) or VisualVM. These tools provide real-time insights into thread states, CPU usage, memory consumption, and I/O operations. Thread dumps generated by JMC or VisualVM offer detailed information on thread execution paths, waiting states, and locks held. Monitoring frameworks like Java Management Extensions (JMX) facilitate programmatic access to thread and resource metrics, enabling custom monitoring solutions.

49. What measures can you take to ensure thread safety in a multithreaded application?

Ans:

- Synchronization: To safeguard critical sections that access shared resources, use synchronized blocks or methods.

- Immutable Objects: To avoid mutable state shared between threads, use thread-safe data structures or immutable objects whenever possible.

- Atomic Operations: To perform atomic operations on shared variables, use atomic classes such as AtomicInteger or AtomicReference.

50. Explain the concept of deadlock avoidance.

Ans:

Deadlock avoidance is a strategy used in concurrent computing to prevent the occurrence of deadlocks, where multiple processes or threads are blocked indefinitely due to conflicting resource dependencies. Unlike deadlock detection and recovery, which handle deadlocks after they occur, avoidance techniques proactively analyze resource allocation requests to ensure that a safe state, where deadlock cannot occur, is maintained.

51. How do you handle interrupts in Java multithreading?

Ans:

In Java, interruption is cooperative. Calling `interrupt()` on a thread sets its internal interrupt flag; it does not stop the thread. The thread can poll the flag with `isInterrupted()`, or with the static `Thread.interrupted()`, which also clears it, and terminate gracefully when the flag is set. If the thread is blocked in a method such as `sleep()`, `wait()`, or `join()`, that method throws `InterruptedException` and clears the flag instead.
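
A worker that honors interruption typically handles both paths: the polled flag and the exception from a blocking call. A minimal sketch, with the hypothetical names `InterruptSketch` and `stopWorkerWithInterrupt`:

```java
final class InterruptSketch {
    // Starts a worker, interrupts it, and reports whether it noticed the request.
    static boolean stopWorkerWithInterrupt() {
        boolean[] noticed = new boolean[1];
        Thread worker = new Thread(() -> {
            try {
                while (!Thread.currentThread().isInterrupted()) {
                    Thread.sleep(10); // throws InterruptedException if interrupted
                }
                noticed[0] = true;    // path 1: flag seen by polling
            } catch (InterruptedException e) {
                // path 2: the exception cleared the flag, so restore it for callers
                Thread.currentThread().interrupt();
                noticed[0] = true;
            }
        });
        worker.start();
        worker.interrupt();
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return noticed[0];
    }

    public static void main(String[] args) {
        System.out.println(stopWorkerWithInterrupt());
    }
}
```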

52. Describe the role of synchronized blocks in Java.

Ans:

- Ensure mutual exclusion and thread safety.

- Only one thread at a time can execute a synchronized block guarded by a given lock.

- Other threads that try to enter are blocked until the lock is released, which prevents race conditions.

53. What is the volatile keyword used for in Java?

Ans:

In Java, the `volatile` keyword marks a variable that may be read and written by multiple threads. A write to a volatile variable is guaranteed to be visible to every subsequent read of that variable by any thread, so threads never work from a stale cached value; this is commonly described as reading from main memory rather than from a CPU cache. This prevents stale reads, but `volatile` does not make compound operations such as `count++` atomic.

54. How do you coordinate threads using wait and notify in Java?

Ans:

In Java, coordination between threads using `wait()` and `notify()` revolves around shared objects and their intrinsic locks. A thread calling `wait()` releases the lock and enters a waiting state until another thread calls `notify()` or `notifyAll()` on the same object, after which it reacquires the lock and resumes. These methods must be called while holding the object's lock, that is, inside a synchronized block or method; otherwise they throw `IllegalMonitorStateException`. `notify()` wakes up a single waiting thread, while `notifyAll()` wakes up all waiting threads.
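
The usual pattern waits in a loop on a condition rather than on the notification itself, which also guards against spurious wakeups. A minimal sketch, where `GateSketch`, `publish`, and `awaitMessage` are illustrative names:

```java
final class GateSketch {
    private final Object monitor = new Object();
    private boolean open;   // the condition being waited on
    private String message;

    String awaitMessage() throws InterruptedException {
        synchronized (monitor) {
            while (!open) {     // re-check the condition after every wakeup
                monitor.wait(); // releases the monitor while waiting
            }
            return message;
        }
    }

    void publish(String value) {
        synchronized (monitor) {
            message = value;
            open = true;
            monitor.notifyAll(); // wake every thread waiting on this monitor
        }
    }

    static String demo() {
        GateSketch gate = new GateSketch();
        String[] received = new String[1];
        Thread waiter = new Thread(() -> {
            try {
                received[0] = gate.awaitMessage();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        waiter.start();
        gate.publish("ready");
        try {
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return received[0];
    }
}
```

Because the waiter checks `open` before waiting, the result is the same whether `publish` runs before or after the waiter reaches `wait()`.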

55. Discuss the advantages of using the `java.util.concurrent` package.

Ans:

- Thread safety: Less manual synchronization is needed because the classes are designed with thread safety in mind.

- High-level abstractions: To facilitate concurrent programming, the package offers concurrent collections, executors, and synchronizers like `Semaphore` and `CountDownLatch`.

- Performance improvements: It provides efficient concurrent data structures that improve the throughput of multithreaded systems.

56. What is the significance of the ThreadLocal class in Java?

Ans:

The `ThreadLocal` class gives each thread its own independently initialized copy of a variable. A value stored by one thread through `set()` is visible only to that thread's later calls to `get()`, so no synchronization is needed. It is typically used for per-thread context, such as user or transaction identifiers, and for objects that are not thread-safe, for example `SimpleDateFormat`. In thread pools, call `remove()` when a task finishes so that values do not leak into the next task that runs on the same thread.

57. How do you implement thread pooling in Java?

Ans:

Thread pooling in Java is usually implemented with the `ExecutorService` interface rather than by managing threads by hand. Factory methods on `Executors`, such as `newFixedThreadPool(n)`, create a pool of reusable worker threads; tasks are handed to the pool with `submit()` or `execute()`, and the pool is stopped with `shutdown()` when no more work is expected. For finer control over pool size, queueing, and rejection behavior, a `ThreadPoolExecutor` can be constructed directly.
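
A fixed-size pool can be sketched with `ExecutorService` and `Future`. In this example, `PoolSketch` and `sumOfSquares` are made-up names, and squaring small numbers stands in for real work.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class PoolSketch {
    static int sumOfSquares(int n, int poolSize) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final int value = i;
                futures.add(pool.submit(() -> value * value)); // runs on a pooled thread
            }
            int total = 0;
            for (Future<Integer> future : futures) {
                total += future.get(); // blocks until that task has finished
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown(); // let the worker threads exit once queued tasks are done
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(10, 4));
    }
}
```

Ten tasks are executed by four threads here; the pool, not the caller, decides which thread runs which task.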

58. Explain the purpose of the java.util.concurrent.Executor framework?

Ans:

The `Executor` framework provides a high-level abstraction for asynchronously executing actions in a multithreaded context. It decouples the submission of tasks from their execution, relieving you of the burden of managing threads so you can focus on the logic of the tasks. Additionally, it makes creating scalable and efficient concurrent programs easier by facilitating the scheduling, coordination, and thread pooling of concurrent processes.

59. What is a thread-safe data structure, and why is it important?

Ans:

- A thread-safe data structure permits many threads to safely access and modify it at the same time without risking inconsistent or corrupted data.

- In multithreaded contexts, preventing race conditions and guaranteeing data integrity are crucial.

- Locks and atomic operations are common synchronization techniques used by thread-safe data structures to guarantee consistency and mutual exclusion.

60. Describe the concept of concurrent modification and how it can be addressed.

Ans:

Concurrent modification occurs when a collection is structurally changed while it is being iterated, whether by another thread or by the iterating code itself. It can be addressed in several ways:

- Synchronization: To guarantee mutual exclusion and sequential access to the data structure, use locks or synchronized blocks.

- Fail-fast iterators: Certain data structures, such as `HashMap` and `ArrayList`, offer fail-fast iterators that throw `ConcurrentModificationException` if the underlying collection is changed while the iteration is running.

- Copy-on-write: Use data structures with copy-on-write semantics, which keep read operations thread-safe by creating a new copy of the underlying data whenever it is modified.
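
The difference between a fail-fast iterator and a copy-on-write snapshot shows up even in a single thread. A small sketch, with the hypothetical class `IterationSketch`:

```java
import java.util.ArrayList;
import java.util.ConcurrentModificationException;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

final class IterationSketch {
    // Removing from an ArrayList during a for-each loop trips the fail-fast check.
    static boolean failsFast() {
        List<String> list = new ArrayList<>(List.of("a", "b", "c"));
        try {
            for (String item : list) {
                if (item.equals("a")) {
                    list.remove(item);
                }
            }
            return false;
        } catch (ConcurrentModificationException e) {
            return true;
        }
    }

    // CopyOnWriteArrayList iterates over a snapshot, so the same loop is safe.
    static List<String> snapshotIteration() {
        List<String> list = new CopyOnWriteArrayList<>(List.of("a", "b", "c"));
        for (String item : list) {
            if (item.equals("a")) {
                list.remove(item);
            }
        }
        return list;
    }

    public static void main(String[] args) {
        System.out.println(failsFast());
        System.out.println(snapshotIteration());
    }
}
```

Copy-on-write trades cheaper, safer reads for more expensive writes, so it suits collections that are iterated far more often than they are modified.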

61. How do you create a custom thread in Java?

Ans:

By extending the `Thread` class and overriding its `run()` method, you can define a custom thread in Java. For example:

```java
public class MyThread extends Thread {
    @Override
    public void run() {
        // Thread logic goes here
        System.out.println("Custom thread is running...");
    }
}
```

After that, create an instance of your custom thread and call its `start()` method to run it on a new thread.

62. Discuss the differences between the Thread class and the Runnable interface.

Ans:

A thread can be created either by extending the `Thread` class or by implementing the `run()` method of the `Runnable` interface in a separate class and passing an instance of that class to a `Thread` object. Because a Java class can extend only one superclass, extending `Thread` uses up that slot, whereas a class that implements `Runnable` remains free to extend another class and implement further interfaces. `Runnable` also separates the task from the thread that runs it, which gives more flexibility in how the task is executed.
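
The `Runnable` route can be sketched as below. The class `RunnableSketch` and the thread name `worker-1` are arbitrary choices for the example.

```java
final class RunnableSketch {
    static String runOnNewThread() {
        StringBuilder log = new StringBuilder();
        // The task is just an object; it knows nothing about which thread runs it.
        Runnable task = () -> log.append("ran on ").append(Thread.currentThread().getName());
        Thread thread = new Thread(task, "worker-1");
        thread.start();
        try {
            thread.join(); // join() makes the worker's writes visible to this thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return log.toString();
    }

    public static void main(String[] args) {
        System.out.println(runOnNewThread());
    }
}
```

The same `Runnable` could be handed to an executor instead of a `Thread` without any change to the task.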

63. What are the potential pitfalls of using multithreading in Java?

Ans:

- Race conditions and data corruption caused by simultaneous access to shared mutable state.

- Deadlocks, in which threads are stalled while each waits for another to release a resource.

- Livelocks, in which threads keep changing state in response to one another without ever moving forward.

- Greater complexity and debugging difficulty as a result of non-deterministic behavior.

64. Explain how the synchronized keyword works in Java.

Ans:

In Java, the `synchronized` keyword creates synchronized methods or blocks that only one thread at a time can execute for a given lock. When a thread enters a synchronized method or block, it acquires the intrinsic lock, also called a monitor lock, associated with an object or class. Until the lock is released, other threads cannot enter synchronized methods or blocks guarded by the same object or class.

65. How does Java handle deadlock situations?

Ans:

Java provides several methods for identifying and managing deadlocks. The ThreadMXBean API allows for programmatic detection of deadlocks by analyzing thread states and lock ownership. Additionally, thread dump analysis using tools like jstack can help identify deadlock situations by providing a snapshot of thread activities and locks. To prevent deadlocks, Java encourages best practices such as acquiring locks in a consistent, predictable order and using timeouts with lock attempts.
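Programmatic detection might look like the following sketch, which uses `ThreadMXBean.findDeadlockedThreads()` from `java.lang.management`. The wrapper class `DeadlockCheck` and its method name are hypothetical, and the sketch assumes a JVM that supports this monitoring feature.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;

// Hypothetical helper class for illustration.
class DeadlockCheck {

    // Returns how many threads are currently deadlocked, or 0 if none are.
    static int countDeadlockedThreads() {
        ThreadMXBean bean = ManagementFactory.getThreadMXBean();
        long[] ids = bean.findDeadlockedThreads(); // null when no deadlock is found
        return ids == null ? 0 : ids.length;
    }

    public static void main(String[] args) {
        System.out.println("Deadlocked threads: " + countDeadlockedThreads());
    }
}
```

A monitoring thread could call such a check periodically and log the result of `getThreadInfo` for the returned IDs.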

66. Discuss the benefits of using immutable objects in a multithreaded environment.

Ans:

- Since their state cannot be altered after creation, immutable objects are naturally thread-safe and do not require synchronization.

- By removing concerns about data corruption and race conditions, they simplify concurrent programming.

- Multiple threads can safely share immutable objects without running the risk of inconsistent or accidental modification.
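A minimal sketch of an immutable class follows. `ImmutablePoint` and its `withX` method are hypothetical names; the points to note are the `final` class, the `final` fields, and the absence of setters.

```java
// Hypothetical immutable value class.
final class ImmutablePoint {
    private final int x;
    private final int y;

    ImmutablePoint(int x, int y) {
        this.x = x;
        this.y = y;
    }

    int x() {
        return x;
    }

    int y() {
        return y;
    }

    // A "modification" returns a new object; the original is left untouched.
    ImmutablePoint withX(int newX) {
        return new ImmutablePoint(newX, y);
    }
}
```

Because no method can change `x` or `y` after construction, any number of threads can read the same `ImmutablePoint` without locking.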

67. What are the drawbacks of using synchronized methods?

Ans:

Using synchronized methods in Java introduces several drawbacks. Firstly, they can lead to decreased performance due to the overhead of acquiring and releasing locks, which can cause contention in multithreaded environments. Secondly, synchronized methods can result in potential deadlocks when multiple threads wait indefinitely for each other’s release of resources. Thirdly, they may limit scalability as threads waiting for locks can block others, reducing overall throughput.

68. Describe the role of the java.util.concurrent.locks package in Java?

Ans:

The java.util.concurrent.locks package in Java provides advanced locking mechanisms beyond intrinsic locks (synchronized blocks/methods). It offers explicit lock types such as ReentrantLock, ReadWriteLock, and StampedLock, allowing finer-grained control over thread synchronization. These locks support features like interruptible lock acquisition, timeouts, and fairness policies, enhancing flexibility in concurrent programming.
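The timeout and fairness features mentioned above can be sketched with `ReentrantLock`. The class `TimedLockDemo` and its methods are hypothetical; `tryLock(long, TimeUnit)` and the fairness constructor argument are part of the standard API.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical example class.
class TimedLockDemo {
    private final ReentrantLock lock = new ReentrantLock(true); // true requests a fair lock
    private int value = 0;

    // Tries to update within the timeout; returns false instead of blocking forever.
    boolean tryUpdate(int newValue, long timeoutMillis) {
        try {
            if (!lock.tryLock(timeoutMillis, TimeUnit.MILLISECONDS)) {
                return false; // could not get the lock in time
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // lock acquisition was interrupted
            return false;
        }
        try {
            value = newValue;
            return true;
        } finally {
            lock.unlock(); // always release in finally
        }
    }

    int value() {
        lock.lock();
        try {
            return value;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        TimedLockDemo demo = new TimedLockDemo();
        System.out.println(demo.tryUpdate(7, 100) + " " + demo.value()); // prints "true 7"
    }
}
```

Unlike `synchronized`, an explicit lock must be released by hand, which is why the `unlock()` call sits in a `finally` block.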

69. What is the significance of the java.util.concurrent.atomic package?

Ans:

- The `java.util.concurrent.atomic` package provides lock-free, thread-safe classes for atomic operations on primitive types and reference variables.

- These classes improve efficiency in highly concurrent situations by performing atomic updates without explicit locking through the use of compare-and-swap (CAS) operations.

70. How can the producer-consumer pattern be implemented in Java?

Ans:

Decouple the threads that produce data from the threads that process it by placing a shared data structure, such as a queue, between them. Synchronize access to that structure either with explicit locking or by using a blocking queue, which handles the synchronization internally. The producer adds data to the shared structure while the consumer takes data from it and processes it, and every access goes through the synchronized structure to maintain thread safety.
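One possible shape for this pattern uses `ArrayBlockingQueue`, as in the sketch below. The class `ProducerConsumerDemo`, the `POISON_PILL` end marker, and the queue capacity of 4 are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical example class.
class ProducerConsumerDemo {
    private static final int POISON_PILL = -1; // assumed end-of-stream marker

    static List<Integer> run(int items) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(4); // small bound forces blocking
        List<Integer> consumed = new ArrayList<>(); // touched only by the consumer until join()

        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < items; i++) {
                    queue.put(i); // blocks while the queue is full
                }
                queue.put(POISON_PILL);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread consumer = new Thread(() -> {
            try {
                while (true) {
                    int item = queue.take(); // blocks while the queue is empty
                    if (item == POISON_PILL) {
                        break;
                    }
                    consumed.add(item);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return consumed;
    }

    public static void main(String[] args) {
        System.out.println(run(5)); // prints [0, 1, 2, 3, 4]
    }
}
```

The queue does all the waiting and signalling, so neither thread needs `wait()`, `notify()`, or an explicit lock.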

71. Discuss techniques for preventing race conditions in multithreaded code.

Ans:

- Use synchronization techniques like locks (`synchronized` blocks or `ReentrantLock`) to guarantee that only a single thread can execute a critical section of the code at any given moment.

- For straightforward actions like updating variables or incrementing counters, use atomic operations and classes from the `java.util.concurrent.atomic` package.

- Use thread-safe algorithms and data structures, like the concurrent collections from `java.util.concurrent`, that guard against race conditions by design.

72. How do you handle exceptions in multithreaded applications?

Ans:

To handle errors gracefully, catch exceptions at the right abstraction level, ideally within the thread's own execution context. Log exceptions and errors to help with debugging and to give insight into the behavior of the program. To handle uncaught exceptions at the thread level and carry out cleanup or recovery, use `Thread.UncaughtExceptionHandler`.
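A small sketch of a per-thread handler is shown below. The class `UncaughtHandlerDemo`, the thread name `worker-1`, and the captured message format are made up for the example; a real handler would typically log the error and release resources.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical example class.
class UncaughtHandlerDemo {

    // Runs a thread that fails and returns what the handler observed.
    static String runFailingThread() {
        AtomicReference<String> captured = new AtomicReference<>();

        Thread worker = new Thread(() -> {
            throw new IllegalStateException("boom"); // simulated failure
        }, "worker-1");

        // Invoked on the failing thread just before it terminates.
        worker.setUncaughtExceptionHandler((thread, error) ->
                captured.set(thread.getName() + ": " + error.getMessage()));

        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return captured.get();
    }

    public static void main(String[] args) {
        System.out.println(runFailingThread()); // prints "worker-1: boom"
    }
}
```

`Thread.setDefaultUncaughtExceptionHandler` offers the same hook for every thread that has no handler of its own.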

73. What are the advantages of using concurrent collections over synchronized collections?

Ans:

- Superior scalability: Concurrent collections let several threads access them at the same time with little or no blocking, so they scale better than synchronized collections, which serialize every access behind a single lock.

- Reduced contention: Concurrent collections rely on finer-grained locking or non-blocking algorithms, which lowers contention and improves throughput.

- Built-in atomic operations: Concurrent collections make thread-safe programming easier by offering atomic versions of common compound operations such as `putIfAbsent`, `remove`, and `replace`.

74. Describe the ConcurrentHashMap class in Java and its advantages.

Ans:

- `ConcurrentHashMap` is a hash table implementation that multiple threads can access concurrently with good performance.

- It partitions the map internally and uses fine-grained locking to reduce contention, enabling thread-safe operations without the need for external synchronization.

- `ConcurrentHashMap` is appropriate for high concurrency applications because it provides scalable performance for both read and write operations.
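The sketch below shows two of the map's atomic compound operations, `merge` and `putIfAbsent`, used without any external locking. The class `WordCountDemo` and its method names are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical example class.
class WordCountDemo {

    // Every thread counts the same words into one shared map.
    static Map<String, Integer> count(String[] words, int threads) {
        ConcurrentHashMap<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (String word : words) {
                    counts.merge(word, 1, Integer::sum); // atomic read-modify-write
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counts;
    }

    // putIfAbsent only stores a value when the key has none yet.
    static String firstWriterWins() {
        ConcurrentHashMap<String, String> settings = new ConcurrentHashMap<>();
        settings.putIfAbsent("mode", "fast");
        settings.putIfAbsent("mode", "slow"); // ignored: "mode" is already present
        return settings.get("mode");
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = count(new String[] {"a", "b", "a"}, 4);
        System.out.println(counts.get("a") + " " + counts.get("b")); // prints "8 4"
        System.out.println(firstWriterWins()); // prints "fast"
    }
}
```

A separate `get` followed by `put` would not be safe here, because another thread could update the entry between the two calls.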

75. How do you ensure thread safety in a servlet-based application?

Ans:

Rely on a servlet container, such as Apache Tomcat or Jetty, to handle the threading model and request processing in a thread-safe way. Keep mutable state in local variables or thread-safe data structures rather than in servlet instance variables. Where shared resources are unavoidable, use thread-safe alternatives such as atomic variables or concurrent collections, or synchronize access to them. Design stateless servlets, or use session management to keep each user's session state separate.

76. Discuss the importance of thread pools in Java.

Ans:

- By managing a pool of reusable threads, thread pools lower the overhead associated with creating and destroying threads.

- By limiting the number of active threads, they prevent overload and resource exhaustion.

- By reusing threads across many tasks, thread pools cut thread-creation overhead and context switching, which improves performance.

77. How do you handle resource contention in a multithreaded environment?

Ans:

- Access to shared resources can be serialized by using synchronization techniques like locks or atomic operations.

- To control access to limited resources, such as file handles or database connections, use resource pooling strategies.

- Hold locks for as short a time as possible and release resources as soon as they are no longer needed to maximize resource usage.

78. Explain the concept of thread synchronization in Java.

Ans:

Thread synchronization ensures that multiple threads coordinate access to shared resources in a mutually exclusive and ordered fashion. Synchronization primitives such as `synchronized` blocks and `ReentrantLock`, or concurrent data structures from `java.util.concurrent`, are used to achieve this. Synchronization enforces a happens-before relationship between memory accesses by distinct threads, preventing race conditions, data corruption, and inconsistency.

79. Describe the Java Memory Model and its implications for multithreading.

Ans:

In a multithreaded environment, the Java Memory Model (JMM) lays out the rules and guarantees for how threads interact through memory. It specifies when writes made by one thread become visible to others, how operations may be ordered, and which operations are atomic. It defines happens-before relationships that order memory accesses made by different threads, which lets correctly synchronized programs achieve thread safety and avoid data races.

80. How do you implement a thread-safe counter in Java?

Ans:

To create a thread-safe counter in Java, use the atomic classes from the `java.util.concurrent.atomic` package, such as `AtomicInteger` or `LongAdder`. `AtomicInteger` ensures thread safety by providing atomic operations like increment, decrement, and compare-and-set, without needing explicit synchronization. `LongAdder` offers increment, decrement, and sum operations and is designed for counters that many threads update at once. This approach not only simplifies concurrent access to the counter but can also improve performance in high-contention scenarios compared to synchronized methods.
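Both classes can be exercised in a short sketch. The class `AtomicCounterDemo` and its `run` helper are hypothetical; `incrementAndGet`, `increment`, and `sum` are standard methods.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.LongAdder;

// Hypothetical example class.
class AtomicCounterDemo {

    // Returns { AtomicInteger total, LongAdder total } after all threads finish.
    static long[] run(int threads, int perThread) {
        AtomicInteger atomic = new AtomicInteger();
        LongAdder adder = new LongAdder();

        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    atomic.incrementAndGet(); // atomic increment of a single value
                    adder.increment();        // spreads updates across internal cells
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return new long[] {atomic.get(), adder.sum()};
    }

    public static void main(String[] args) {
        long[] totals = run(4, 10_000);
        System.out.println(totals[0] + " " + totals[1]); // prints "40000 40000"
    }
}
```

As a rule of thumb, `AtomicInteger` suits counters whose current value is read often or used in compare-and-set logic, while `LongAdder` suits write-heavy statistics that are summed occasionally.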

81. What strategies can you use to improve the performance of multithreaded applications?

Ans:

- Reduce contention: Keep threads from waiting on one another by using synchronization and locks as little as possible.

- Employ thread pools: To save the overhead of creating new threads, reuse existing ones.

- Asynchronous processing: To overlap I/O and computation, use asynchronous programming approaches.

- Partitioning: Break up the workload into smaller tasks that can execute concurrently.

82. Discuss the benefits of using parallel streams in Java 8 for multithreading.

Ans:

- Simplified parallelism: Developers can parallelize work with little effort since parallel streams abstract away the details of managing threads.

- Automatic load balancing: To maximize CPU utilization, parallel streams automatically divide work across the available threads.

- Integration with existing APIs: Parallel streams make it easier to parallelize existing code because they integrate smoothly with Java 8 features such as lambdas, streams, and collections.
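As a minimal sketch of how little code is involved, the example below sums squares with a parallel `LongStream`. The class `ParallelStreamDemo` and the method `sumOfSquares` are hypothetical names.

```java
import java.util.stream.LongStream;

// Hypothetical example class.
class ParallelStreamDemo {

    // Computes 1^2 + 2^2 + ... + n^2 using a parallel stream.
    static long sumOfSquares(long n) {
        return LongStream.rangeClosed(1, n)
                .parallel()        // switch the pipeline to parallel execution
                .map(x -> x * x)   // stateless, side-effect-free mapping
                .sum();            // associative reduction, safe to parallelize
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(10)); // prints 385
    }
}
```

The result matches the sequential version because the mapping has no side effects and the reduction is associative; pipelines that mutate shared state do not get that guarantee.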

83. What is the significance of the java.util.concurrent.Future interface?

Ans:

The `Future` interface represents the result of an asynchronous computation and offers methods to check whether it has completed, to retrieve its result, and to cancel it. It makes good use of resources by letting asynchronous operations run concurrently without blocking the calling thread until the result is actually needed. By letting one thread wait on the outcome of another thread's computation, `Future` makes it easier for threads to coordinate and synchronize.

84. How do you handle timeouts in multithreaded code?

Ans:

Catch `java.util.concurrent.TimeoutException`: call the timed variant of a potentially blocking operation inside a `try-catch` block and handle the `TimeoutException` that is thrown when the time limit expires. Set resource timeouts: configure timeouts on resources such as locks or I/O operations using the timeout parameters or other mechanisms offered by the concurrency utilities.
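One way to apply a timeout is the timed `Future.get(long, TimeUnit)` call, sketched below. The class `TimeoutDemo`, the method `fetchWithTimeout`, and the returned status strings are hypothetical, and the sleeping task merely stands in for real work.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical example class.
class TimeoutDemo {

    // Runs a task lasting taskMillis and waits at most timeoutMillis for it.
    static String fetchWithTimeout(long taskMillis, long timeoutMillis) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        Future<String> future = executor.submit(() -> {
            Thread.sleep(taskMillis); // stand-in for slow work
            return "done";
        });
        try {
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            future.cancel(true); // interrupt the task that is still running
            return "timed out";
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        } catch (ExecutionException e) {
            return "failed: " + e.getCause();
        } finally {
            executor.shutdownNow();
        }
    }

    public static void main(String[] args) {
        System.out.println(fetchWithTimeout(10, 2000)); // prints "done"
        System.out.println(fetchWithTimeout(2000, 50)); // prints "timed out"
    }
}
```

Cancelling the future after a timeout matters: otherwise the abandoned task keeps occupying a pool thread.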

85. Describe the ExecutorService interface and its implementations in Java.

Ans:

- `ExecutorService` is a higher-level abstraction that manages thread execution and offers thread pool management and task submission functions.

- `ThreadPoolExecutor`, `ScheduledThreadPoolExecutor`, and `ForkJoinPool` are some of the implementations.

- `ThreadPoolExecutor` is a general-purpose thread pool implementation that can be customized.
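Task submission and shutdown might be sketched as follows, using a fixed-size pool created through the `Executors` factory. The class `PoolDemo` and the method `sumSquares` are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical example class.
class PoolDemo {

    // Squares 1..n on a fixed-size pool and adds up the results.
    static int sumSquares(int n, int poolSize) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<Callable<Integer>> tasks = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final int value = i;
                tasks.add(() -> value * value); // each task is an independent Callable
            }
            int total = 0;
            for (Future<Integer> result : pool.invokeAll(tasks)) { // waits for all tasks
                total += result.get();
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown(); // lets worker threads exit once queued tasks finish
        }
    }

    public static void main(String[] args) {
        System.out.println(sumSquares(5, 3)); // prints 55
    }
}
```

The pool reuses its three threads for all five tasks, and forgetting `shutdown()` would leave those threads alive and could keep the JVM from exiting.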

86. How do you coordinate the execution of multiple threads in Java?

Ans:

Employ synchronization techniques to manage access to shared resources, such as locks, semaphores, or barriers. Employ higher-level structures such as `CyclicBarrier` or `CountDownLatch` to synchronize the execution of several threads at particular programmatic points. Use concurrent data structures like `BlockingQueue` to implement producer-consumer patterns by coordinating thread-to-thread communication.
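For instance, a `CountDownLatch` lets one thread wait until a group of workers has finished. The sketch below assumes a hypothetical class `LatchDemo` with a `runWorkers` helper.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example class.
class LatchDemo {

    // Starts the workers and blocks until every one has counted down.
    static int runWorkers(int workers) {
        CountDownLatch done = new CountDownLatch(workers);
        AtomicInteger finished = new AtomicInteger();

        for (int i = 0; i < workers; i++) {
            new Thread(() -> {
                finished.incrementAndGet(); // stand-in for real work
                done.countDown();           // signal that this worker is finished
            }).start();
        }

        try {
            done.await(); // returns once the count reaches zero
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return finished.get();
    }

    public static void main(String[] args) {
        System.out.println(runWorkers(5)); // prints 5
    }
}
```

A latch is single-use; when the same group of threads has to meet repeatedly, `CyclicBarrier` is the reusable alternative.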

87. What are the challenges of designing concurrent algorithms?

Ans:

- Data races and synchronization: To avoid data corruption and preserve consistency, proper access to a shared mutable state must be ensured.

- Deadlocks and livelocks: Steer clear of circumstances in which threads interact inefficiently or are blocked indefinitely.

- Scalability and performance: Creating algorithms that grow with rising workload and concurrency levels while making effective use of the resources at hand.

88. Explain how you would design a lock-free data structure in Java.

Ans:

Employ non-blocking algorithms built on atomic operations such as compare-and-swap (CAS), which guarantee progress even under contention. Implement data structures such as queues, stacks, or maps with lock-free techniques such as hazard pointers or optimistic concurrency control. Build these structures from the concurrency primitives (like `AtomicInteger` and `AtomicReference`) offered by the `java.util.concurrent.atomic` package.
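A classic small example of this style is a Treiber stack, sketched here on top of `AtomicReference`. It is an illustration rather than a production-ready structure, and the class name `LockFreeStack` is hypothetical.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical lock-free stack (Treiber stack) for illustration.
class LockFreeStack<T> {

    // Immutable node: once published, its fields never change.
    private static final class Node<T> {
        final T value;
        final Node<T> next;

        Node(T value, Node<T> next) {
            this.value = value;
            this.next = next;
        }
    }

    private final AtomicReference<Node<T>> head = new AtomicReference<>();

    void push(T value) {
        Node<T> current;
        Node<T> updated;
        do {
            current = head.get();
            updated = new Node<>(value, current);
        } while (!head.compareAndSet(current, updated)); // retry if another thread won
    }

    // Returns the top element, or null when the stack is empty.
    T pop() {
        Node<T> current;
        do {
            current = head.get();
            if (current == null) {
                return null;
            }
        } while (!head.compareAndSet(current, current.next)); // retry on interference
        return current.value;
    }
}
```

Each operation reads the head, prepares the new state off to the side, and publishes it with a single CAS; a failed CAS means another thread made progress, so the loop simply retries.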

89. Discuss the impact of garbage collection on multithreaded applications.

Ans:

- In multithreaded applications, garbage collection pauses can introduce latency and unpredictable behavior that reduces throughput and responsiveness.

- Concurrent garbage collectors, such as the G1 (Garbage-First) collector, seek to reduce pause times by collecting garbage alongside application execution.

90. How do you handle memory visibility issues in multithreaded code?

Ans:

In multithreaded code, memory visibility issues arise when changes made by one thread to shared variables are not immediately visible to other threads due to caching optimizations or processor reordering. To handle this, synchronization mechanisms like synchronized blocks, volatile variables, or explicit memory barriers (such as using java.util.concurrent classes) can be employed. These ensure that changes made by one thread are correctly propagated and visible to others, preventing inconsistencies.
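The simplest of these tools, a `volatile` field, can be sketched with a stop flag. The class `VolatileFlagDemo` and the two-second join limit are assumptions made for the example.

```java
// Hypothetical example class.
class VolatileFlagDemo {
    // volatile guarantees that a write by one thread is visible to later reads by others.
    private volatile boolean running = true;

    // Returns true if the worker observed the flag change and stopped.
    static boolean stopsPromptly() {
        VolatileFlagDemo demo = new VolatileFlagDemo();

        Thread worker = new Thread(() -> {
            while (demo.running) {
                // busy-wait; each iteration re-reads the volatile field
            }
        });
        worker.start();

        demo.running = false; // published to the worker thanks to volatile
        try {
            worker.join(2000); // wait up to two seconds for the worker to exit
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println(stopsPromptly()); // prints true
    }
}
```

Without `volatile`, the JIT compiler would be allowed to hoist the read out of the loop, and the worker might never see the update. Note that `volatile` gives visibility only; compound updates such as `count++` still need atomics or locks.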