Deep Learning is Large Neural Networks

- Andrew Ng, co-founder of Coursera and Chief Scientist at Baidu Research, formally founded Google Brain, which eventually resulted in the productization of deep learning technologies across a large number of Google services.

- He has spoken and written a lot about what deep learning is, and his work is a good place to start.

- In early talks on deep learning, Andrew described deep learning in the context of traditional artificial neural networks. In a 2013 talk titled “Deep Learning, Self-Taught Learning and Unsupervised Feature Learning”, he described the idea of deep learning as:

Using brain simulations, hope to:

- I believe this is our best shot at progress towards real AI

- Later his comments became more nuanced.

- The core of deep learning according to Andrew is that we now have fast enough computers and enough data to actually train large neural networks.

- At ExtractConf 2015, in a talk titled “What data scientists should know about deep learning”, he discussed why deep learning is taking off now and commented:

- very large neural networks we can now have and … huge amounts of data that we have access to

- He also made the important point that it is all about scale: as we construct larger neural networks and train them with more and more data, their performance continues to increase. This generally differs from other machine learning techniques, whose performance reaches a plateau.

- for most flavors of the old generations of learning algorithms … performance will plateau. … deep learning … is the first class of algorithms … that is scalable. … performance just keeps getting better as you feed them more data

He provides a nice cartoon of this in his slides:

- Finally, he is careful to point out that the benefits from deep learning that we are seeing in practice come from supervised learning. In the 2015 ExtractConf talk, he commented:

- almost all the value today of deep learning is through supervised learning or learning from labeled data

Earlier, in a 2014 talk at Stanford University titled “Deep Learning”, he made a similar comment:

- one reason that deep learning has taken off like crazy is because it is fantastic at supervised learning

- Andrew often mentions that we should and will see more benefits coming from the unsupervised side of the tracks as the field matures to deal with the abundance of unlabeled data available.

- Jeff Dean is a Wizard and Google Senior Fellow in the Systems and Infrastructure Group at Google. He has been involved in, and is perhaps partially responsible for, the scaling and adoption of deep learning within Google.

- Jeff was involved in the Google Brain project and the development of large-scale deep learning software DistBelief and later TensorFlow.

- In a 2016 talk titled “Deep Learning for Building Intelligent Computer Systems”, he made a comment in a similar vein: deep learning is really all about large neural networks.

- When you hear the term deep learning, just think of a large deep neural net. Deep refers to the number of layers typically and so this kind of the popular term that’s been adopted in the press. I think of them as deep neural networks generally.

- He has given this talk a few times, and in a modified set of slides for the same talk, he highlights the scalability of neural networks indicating that results get better with more data and larger models, that in turn require more computation to train.

Deep Learning algorithms you should know

- Now let’s talk about more complex things: deep learning algorithms or, to put it differently, the mechanisms that allow us to use this cutting-edge technology.

Backpropagation

- Backpropagation is a popular algorithm for training feedforward neural networks in supervised learning.

- Essentially, backpropagation evaluates the expression for the derivative of the cost function as a product of derivatives between each layer, working “backwards” from the output layer to the input layer. The gradient of the weights between each layer is a simple modification of the partial products (the “backwards propagated error”).

- We feed the network with data, it produces an output, we compare that output with a desired one (using a loss function) and we readjust the weights based on the difference. And repeat. And repeat.

- The weights are adjusted using an iterative optimization technique called stochastic gradient descent.

- Let’s say that for some reason we want to identify images that contain a tree. We feed the network all kinds of images and it produces an output for each. Since we know whether each image actually contains a tree, we can compare the output with the truth and adjust the network.

- As we pass more and more images, the network will make fewer and fewer mistakes. Now we can feed it with an unknown image, and it will tell us if the image contains a tree. Pretty cool, right?
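The loop described above (forward pass, loss, backward pass, weight update) can be sketched in plain Python. This is a minimal illustration and not a reference implementation: the network shape (two inputs, two tanh hidden units, one sigmoid output), the cross-entropy loss, the starting weights, the learning rate and the toy "tree / no tree" features are all assumptions made for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(W1, b1, w2, b2, x):
    """Forward pass: tanh hidden layer, then a single sigmoid output unit."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    p = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
    return h, p

def loss(p, y):
    """Binary cross-entropy between the prediction p and the label y."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def backward(w2, x, h, p, y):
    """Backward pass: propagate the output error back through each layer."""
    d_out = p - y                                   # error at the output unit
    g_w2 = [d_out * hj for hj in h]
    g_b2 = d_out
    # Error at each hidden unit: output error times weight times tanh derivative.
    d_hid = [d_out * w * (1 - hj * hj) for w, hj in zip(w2, h)]
    g_W1 = [[d * xi for xi in x] for d in d_hid]
    g_b1 = d_hid
    return g_W1, g_b1, g_w2, g_b2

def sgd_step(W1, b1, w2, b2, x, y, lr):
    """One stochastic gradient descent update on a single example."""
    h, p = forward(W1, b1, w2, b2, x)
    g_W1, g_b1, g_w2, g_b2 = backward(w2, x, h, p, y)
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, g_W1)]
    b1 = [b - lr * g for b, g in zip(b1, g_b1)]
    w2 = [w - lr * g for w, g in zip(w2, g_w2)]
    return W1, b1, w2, b2 - lr * g_b2

def mean_loss(params, data):
    return sum(loss(forward(*params, x)[1], y) for x, y in data) / len(data)

# Hypothetical toy data: two made-up image features, label 1 means "tree".
data = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]
# Hand-picked starting weights (W1, b1, w2, b2), chosen only for illustration.
params = ([[0.5, -0.3], [0.2, 0.4]], [0.0, 0.0], [0.3, -0.2], 0.0)

loss_before = mean_loss(params, data)
for _ in range(500):                 # feed, compare, adjust, and repeat
    for x, y in data:
        params = sgd_step(*params, x, y, lr=0.5)
loss_after = mean_loss(params, data)
print(loss_before, loss_after)
```

The average loss printed after training should be lower than the one before it, which is the "fewer and fewer mistakes" behaviour in miniature.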

Great article to go deeper: Neural networks and back-propagation explained in a simple way

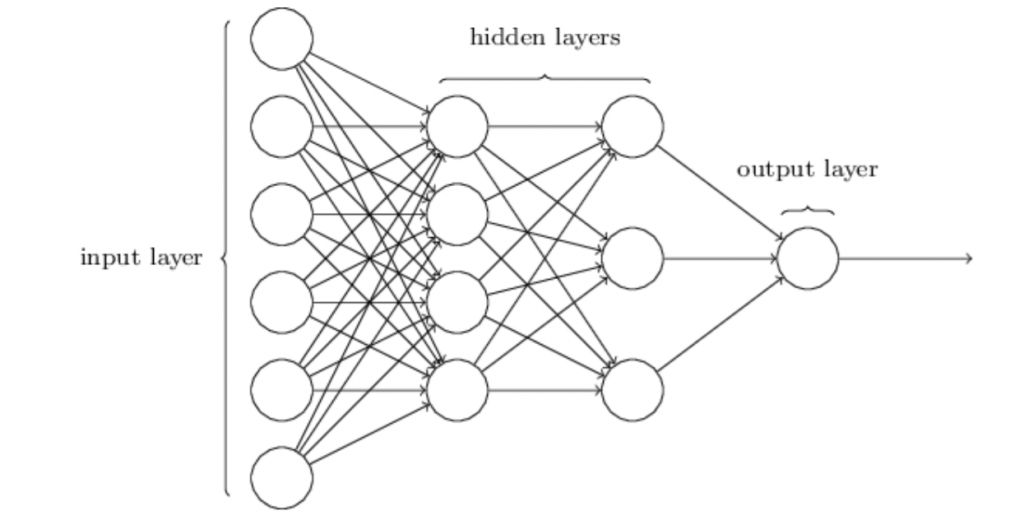

Feedforward Neural Networks (FNN)

- Feedforward neural networks are usually fully connected, which means that every neuron in a layer is connected to every neuron in the next layer. This structure is called a multilayer perceptron; the perceptron it builds on dates back to 1958.

- A single-layer perceptron can only learn linearly separable patterns, but a multilayer perceptron is able to learn non-linear relationships in the data.

- The goal of a feedforward network is to approximate some function f*. For example, for a classifier, y = f*(x) maps an input x to a category y.

- A feedforward network defines a mapping y = f(x;θ) and learns the value of the parameters θ that result in the best function approximation.

- These models are called feedforward because information flows through the function being evaluated from x, through the intermediate computations used to define f, and finally to the output y.

- There are no feedback connections in which outputs of the model are fed back into itself. When feedforward neural networks are extended to include feedback connections, they are called recurrent neural networks.
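The mapping y = f(x; θ) described above can be sketched as a chain of layers with no feedback connections. This is a minimal illustration: the ReLU hidden activation, the softmax output, the layer sizes and the parameter values in `theta` are assumptions chosen for the example.

```python
import math

def affine(W, b, x):
    """Compute W x + b for a weight matrix W (list of rows) and bias vector b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(v):
    return [max(0.0, a) for a in v]

def softmax(v):
    m = max(v)                                  # subtract the max for numerical stability
    e = [math.exp(a - m) for a in v]
    s = sum(e)
    return [a / s for a in e]

def feedforward(theta, x):
    """Evaluate f(x; theta): information flows from x through each layer to y."""
    hidden, (W_out, b_out) = theta[:-1], theta[-1]
    a = x
    for W, b in hidden:
        a = relu(affine(W, b, a))               # intermediate computations
    return softmax(affine(W_out, b_out, a))     # probabilities over categories

# Hypothetical parameters: one hidden layer with 2 units and 2 output categories.
theta = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),   # hidden layer (W, b)
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),     # output layer (W, b)
]
probs = feedforward(theta, [2.0, 1.0])
category = probs.index(max(probs))
print(probs, category)
```

Training would consist of adjusting the values in `theta` so that the predicted category matches the one given by f*.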

Convolutional Neural Networks (CNN)

- ConvNets have been successful in identifying faces, objects, and traffic signs apart from powering vision in robots and self-driving cars.

- From the Latin convolvere, “to convolve” means to roll together. Mathematically, a convolution is an integral that measures how much two functions overlap as one passes over the other.

- Think of convolution as a way of mixing two functions by multiplying them.

- The green curve shows the convolution of the blue and red curves as a function of t, the position indicated by the vertical green line. The gray region indicates the product g(tau)f(t-tau) as a function of tau, so its area as a function of t is precisely the convolution.

- The product of those two functions’ overlap at each point along the x-axis is their convolution. So in a sense, the two functions are being “rolled together.”

- In a way, convolutional networks regularize feedforward networks to avoid overfitting (when the model learns only the data it has already seen and can’t generalize). Sharing a small set of weights across positions also makes them very good at identifying spatial relationships in the data.

- Another great article I would certainly recommend: The best explanation of Convolutional Neural Networks on the Internet!
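The “rolling together” of two functions described above has a discrete counterpart that is easy to write down. The sketch below is a plain 1-D convolution of two short lists, where each output value is the sum of products f[k] * g[n - k]; the function name `convolve` and the sample values are chosen only for illustration.

```python
def convolve(f, g):
    """Full discrete convolution: out[n] = sum over k of f[k] * g[n - k]."""
    out = [0.0] * (len(f) + len(g) - 1)
    for k, fk in enumerate(f):
        for j, gj in enumerate(g):
            out[k + j] += fk * gj       # f[k] * g[j] contributes to position n = k + j
    return out

result = convolve([1, 2, 3], [0, 1, 0.5])
print(result)  # [0.0, 1.0, 2.5, 4.0, 1.5]
```

A convolutional layer applies the same idea in two dimensions, sliding a small learned filter over an image. Many deep learning libraries skip the flip of the filter and compute a cross-correlation instead, which makes no practical difference when the filter values are learned.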

Recurrent Neural Networks (RNN)

- Recurrent neural networks are very successful in many NLP tasks. The idea behind RNNs is to make use of sequential information. In a traditional neural network, all inputs and outputs are assumed to be independent of each other.

- But for many tasks this is not suitable. If you want to predict the next word in a sentence, it is better to consider the words preceding it.

- RNNs are called recurrent because they perform the same task for each element of the sequence, and the output depends on previous calculations.

- Another interpretation of RNN: these are networks that have a “memory” that takes into account prior information.

- The diagram above shows an RNN being unrolled (unfolded) into a full network. By unrolling, we simply mean writing out the network for the complete sequence.

- For example, if the sequence is a sentence of 5 words, the unrolled network will consist of 5 layers, one layer for each word.

The formulas that define the calculations in an RNN are as follows:

- x_t is the input at time step t. For example, x_1 may be a one-hot vector corresponding to the second word of a sentence (counting from x_0).

- s_t is the hidden state at step t. This is the “memory” of the network. s_t is computed from the previous hidden state and the current input x_t: s_t = f(U x_t + W s_{t-1}). The function f is usually non-linear, for example tanh or ReLU. s_{-1}, which is required to compute the first hidden state, is usually initialized to a zero vector.

- o_t is the output at step t. For example, if we want to predict the next word in a sentence, the output may be a vector of probabilities over our vocabulary: o_t = softmax(V s_t).
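The two formulas above, s_t = f(U x_t + W s_{t-1}) and o_t = softmax(V s_t), can be sketched directly in plain Python. This is a minimal illustration that assumes f = tanh, a vocabulary of 3 words and a hidden state of size 2; the matrix values are made up for the example.

```python
import math

def matvec(M, v):
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]

def softmax(v):
    m = max(v)
    e = [math.exp(a - m) for a in v]
    s = sum(e)
    return [a / s for a in e]

def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]

def rnn_forward(U, W, V, word_ids, vocab_size):
    """Apply the same U, W, V at every step; the state s carries the memory."""
    s = [0.0] * len(W)                          # s_{-1} is a zero vector
    outputs = []
    for w in word_ids:
        x = one_hot(w, vocab_size)              # x_t
        pre = [a + b for a, b in zip(matvec(U, x), matvec(W, s))]
        s = [math.tanh(p) for p in pre]         # s_t = tanh(U x_t + W s_{t-1})
        outputs.append(softmax(matvec(V, s)))   # o_t = softmax(V s_t)
    return outputs, s

# Hypothetical parameters: U is 2x3, W is 2x2, V is 3x2.
U = [[0.5, -0.5, 0.1], [0.3, 0.8, -0.2]]
W = [[0.1, 0.2], [-0.3, 0.4]]
V = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, final_state = rnn_forward(U, W, V, [0, 1, 2], vocab_size=3)
print(outputs[-1])
```

Each entry of `outputs` is a probability vector over the three-word vocabulary, and the same input word can produce a different output depending on the words that came before it.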

Generation of image descriptions

- Together with convolutional neural networks, RNNs have been used as part of a model that generates descriptions for unlabeled images. The combined model aligns the generated words with the features found in the images.

- It is also worth mentioning that the most commonly used type of RNN is the LSTM, which captures (stores) long-term dependencies much better than a plain RNN. But don’t worry, LSTMs are essentially the same as RNNs; they just have a different way of calculating the hidden state.

- The memory units in an LSTM are called cells, and you can think of them as black boxes that accept the previous state h_{t-1} and the current input x_t as input.

- Inside, these cells decide which memory to save and which to erase. Then they combine the previous state, current memory and input parameter.

- These types of units are very effective in capturing (storing) long-term dependencies.
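To make the “save or erase” decision above more concrete, here is a sketch of one step of an LSTM cell using the commonly used gate formulation (forget, input and output gates plus a candidate memory). The parameter layout, the gate names used as dictionary keys and the hand-picked values are assumptions for illustration, not the API of any particular library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gate(params, x, h_prev, activation):
    """activation(Wx x + Wh h_prev + b), computed one unit at a time."""
    Wx, Wh, b = params
    return [activation(sum(w * xi for w, xi in zip(rx, x))
                       + sum(w * hi for w, hi in zip(rh, h_prev)) + bi)
            for rx, rh, bi in zip(Wx, Wh, b)]

def lstm_step(params, x, h_prev, c_prev):
    f = gate(params["forget"], x, h_prev, sigmoid)        # which memory to erase
    i = gate(params["input"], x, h_prev, sigmoid)         # which new memory to save
    g = gate(params["candidate"], x, h_prev, math.tanh)   # proposed new memory
    o = gate(params["output"], x, h_prev, sigmoid)        # how much memory to expose
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c

# Hypothetical single-unit cell with a one-dimensional input. The biases are set
# by hand so the cell keeps its old memory and ignores the new input.
keep = {
    "forget":    ([[0.0]], [[0.0]], [50.0]),    # forget gate close to 1
    "input":     ([[0.0]], [[0.0]], [-50.0]),   # input gate close to 0
    "candidate": ([[1.0]], [[0.0]], [0.0]),
    "output":    ([[0.0]], [[0.0]], [0.0]),
}
h, c = lstm_step(keep, x=[3.0], h_prev=[0.0], c_prev=[0.7])
print(h, c)
```

With these values the cell state stays at roughly 0.7 whatever the input is. In a trained LSTM the gate weights are learned, so the network decides for itself when to keep and when to overwrite its memory.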

Recursive Neural Network

- Recursive Neural Networks are another form of recurrent networks with the difference that they are structured in a tree-like form. As a result, they can model hierarchical structures in the training dataset.

- They are traditionally used in NLP in applications such as audio-to-text transcription and sentiment analysis because of their ties to binary trees, contexts, and natural-language-based parsers.

- However, they tend to be much slower than recurrent networks.
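The tree-like structure can be sketched with a single composition function applied at every internal node of a binary tree. This is a minimal illustration assuming one common formulation, parent = tanh(W [left; right] + b); the tree encoding (nested tuples with vectors at the leaves) and all values are made up for the example.

```python
import math

def compose(W, b, left, right):
    """Combine two child vectors into a parent vector of the same size."""
    children = left + right                     # concatenate the two child vectors
    return [math.tanh(sum(w * c for w, c in zip(row, children)) + bi)
            for row, bi in zip(W, b)]

def encode(tree, W, b):
    """Leaves are vectors (lists); internal nodes are (left, right) tuples."""
    if isinstance(tree, tuple):
        left, right = tree
        return compose(W, b, encode(left, W, b), encode(right, W, b))
    return tree

# Hypothetical 2-dimensional word vectors and shared composition weights (2x4).
a, b_vec, c = [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]
W = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]
bias = [0.0, 0.0]

left_branching = encode(((a, b_vec), c), W, bias)
right_branching = encode((a, (b_vec, c)), W, bias)
print(left_branching, right_branching)
```

The same three leaves give different root vectors depending on how the tree is bracketed, which is how the model captures hierarchical structure. Because the tree shape changes from one input to the next, the computation is harder to batch.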