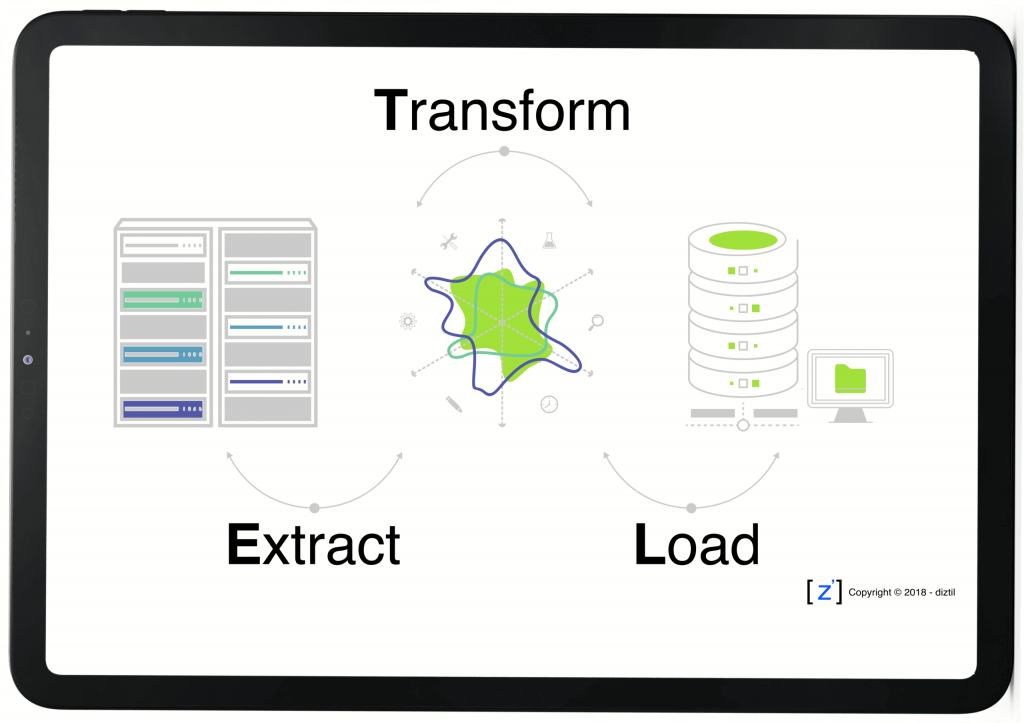

ETL, which stands for extract, transform, and load, is the process data engineers use to extract data from different sources, transform the data into a usable and trusted resource, and load it into the systems where end users can access it.

- Introduction of ETL

- What does ETL do?

- Benefits of ETL

- Different types of ETL data pipelines

- Informatica’s cloud ETL for data integration

- ETL in industry

- Who Invented ETL?

- What are other features of ETL Tool?

- The characteristics of a Good ETL Tool

- Why is Informatica ETL in a boom?

- Conclusion

- ETL stands for extract, transform, and load.

- ETL is a three-step data integration process used to synthesize raw data from a data source into a data warehouse, data lake, or relational database. Data migrations and cloud data integrations are common use cases for ETL.

- With cloud ETL, you can extract data from multiple sources, consolidate and transform that data, then load it into a centralized location where it can be accessed on demand. ETL is often used to make data readily available to analysts, engineers, and decision makers across a variety of use cases within an organization.

Introduction of ETL

- Extraction is the first phase of ETL. Data is collected from one or more data sources and held in temporary storage where the next steps of ETL will be executed.

- During extraction, validation rules are applied to test whether the data conforms to its destination's requirements. Data that fails validation is rejected and does not continue to the next steps of ETL.

- In the transformation phase, data is processed so that its values and structure conform consistently to its intended use case. The goal of transformation is to make all data fit within a uniform schema before it moves on to the final step of ETL.

- Typical transformations include formatting dates, re-sorting rows or columns of data, joining data from multiple values into one, or, conversely, splitting data from one value into several.

- Finally, the load phase moves the transformed data into a permanent target database, on-premises or in the cloud. Once all data has been loaded, the ETL process is complete.

- Many organizations regularly perform ETL to keep their data warehouse updated with the latest data.
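The three phases described above can be sketched in a few lines of Python. This is only an illustrative outline under stated assumptions, not how any particular ETL tool works: the sample CSV, the `customers` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the target warehouse.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Hypothetical raw source data; real pipelines would read files, APIs, or databases.
RAW_CSV = """id,full_name,signup_date
1,Ada Lovelace,12/10/2021
2,Alan Turing,03/02/2022
3,,07/04/2022
"""

def extract(raw_text):
    """Extract: read source rows into temporary (in-memory) storage."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def validate(rows):
    """Reject rows that do not meet the destination's requirements."""
    return [r for r in rows if r["id"].isdigit() and r["full_name"].strip()]

def transform(rows):
    """Transform: reformat dates and split one value (full name) into two."""
    records = []
    for r in rows:
        first, _, last = r["full_name"].partition(" ")
        iso_date = datetime.strptime(r["signup_date"], "%m/%d/%Y").date().isoformat()
        records.append((int(r["id"]), first, last, iso_date))
    return records

def load(records, conn):
    """Load: write the transformed records into the target database."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", records)
    conn.commit()

def run_etl(raw_text, conn):
    """Run extract, validate, transform, and load in order; return rows loaded."""
    records = transform(validate(extract(raw_text)))
    load(records, conn)
    return len(records)

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
loaded = run_etl(RAW_CSV, conn)
rows = conn.execute("SELECT * FROM customers ORDER BY id").fetchall()
```

In this sketch the third source row has an empty name, so it fails validation and never reaches the transform or load steps.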

What does ETL do?

ETL moves data from a source to a destination (e.g., a data warehouse) in three distinct steps. Here's a brief summary:

Extract:

Transform:

Load:

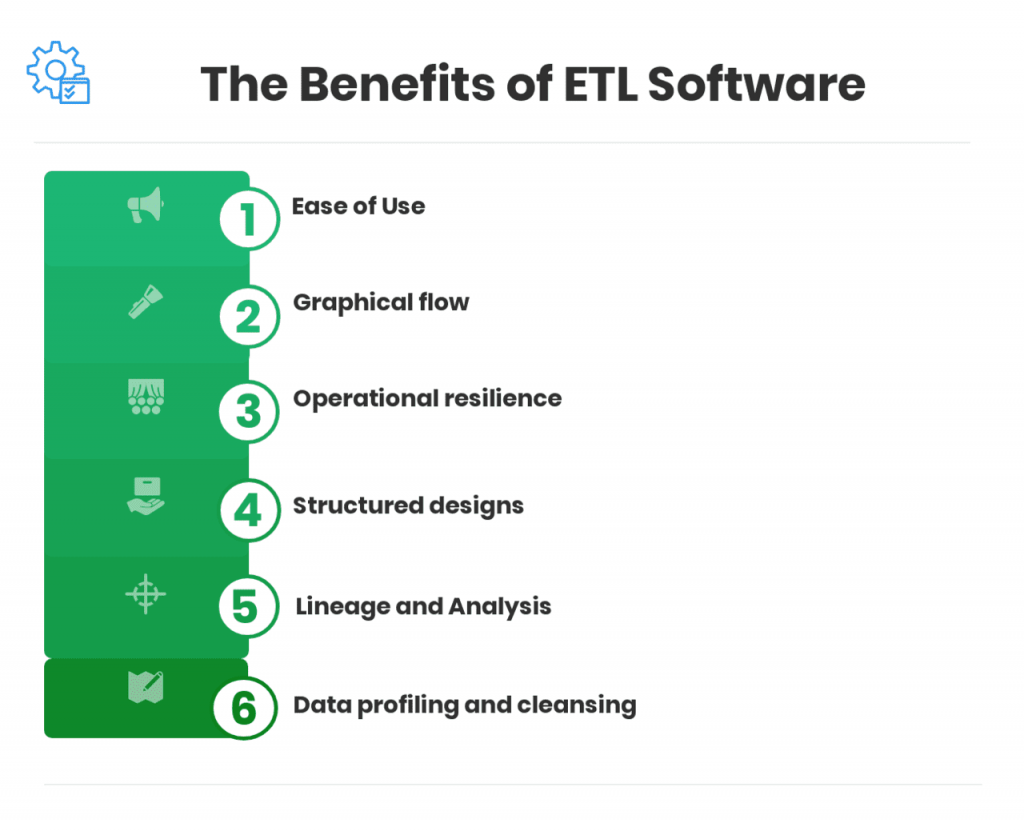

Benefits of ETL

ETL tools work in concert with data integration tools to support many data management use cases, including data quality, data governance, virtualization, and metadata. Here are the top ETL benefits:

Get deep historical context for your business:

When used with an enterprise data warehouse (data at rest), ETL provides historical context for your business by combining legacy data with data collected from new platforms and applications.

Simplify cloud data migration:

ETL helps you transfer your data to a cloud data lake or cloud data warehouse to increase data accessibility, application scalability, and security. Businesses rely on cloud integration to improve operations now more than ever.

Deliver a single, consolidated view of your business:

Ingest and synchronize data from sources such as on-premises databases or data warehouses, SaaS applications, IoT devices, and streaming applications to a cloud data lake to establish one view of your business.

Enable business intelligence from any data at any latency:

Businesses today need to analyze a range of data types, including structured, semi-structured, and unstructured data, from multiple sources, such as batch, real-time, and streaming.

ETL tools make it easier to derive actionable insights from your data, so you can identify new business opportunities and guide improved decision-making.

Deliver clean, trustworthy data for decision-making:

Use ETL tools to transform data while maintaining lineage and traceability throughout the data lifecycle. This means all data practitioners, from data scientists to data analysts to line-of-business users, can have access to reliable data, regardless of their data needs.

By automating critical data practices, ETL tools help ensure the data you receive for analysis meets the quality standard required to deliver trusted insights for decision-making. ETL can be paired with additional data quality tools to make sure data outputs meet your specific requirements.

Automate data pipelines:

ETL tools enable time-saving automation for onerous and recurring data engineering tasks. Gain improved data management effectiveness and accelerate data delivery. Automatically ingest, process, integrate, enrich, prepare, map, define, and catalog data.

Operationalize AI and machine learning (ML) models:

Data science workloads are made more robust, efficient, and easy to maintain. With cloud ETL tools you can efficiently handle the large data volumes required by data pipelines used in machine learning, DataOps, and MLOps.

- Batch processing is used for traditional analytics and business intelligence use cases where data is periodically collected, transformed, and moved to a cloud data warehouse.

- Users can quickly deploy high-volume data from siloed sources into a cloud data lake or data warehouse and schedule jobs for processing data with minimal human intervention. With ETL in batch processing, data is collected and stored during an event known as a “batch window”, to manage large amounts of data and repetitive tasks more efficiently.

- Real-time data pipelines enable users to ingest structured and unstructured data from a range of streaming sources, such as IoT, connected devices, social media feeds, sensor data, and mobile applications. A high-throughput messaging system ensures the data is captured accurately.

- Data transformation is performed using a real-time processing engine (e.g., Spark Streaming) to drive application features like real-time analytics, GPS location tracking, fraud detection, predictive maintenance, targeted marketing campaigns, or proactive customer care.
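The difference between the two pipeline styles can be illustrated with a small Python sketch. It is a simplified model under stated assumptions: the `EVENTS` list, the hourly window, and both function names are hypothetical, and a plain generator stands in for a real streaming engine and messaging system.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical timestamped events, e.g. purchases captured from an application.
EVENTS = [
    {"ts": "2024-01-01T00:15:00", "amount": 10.0},
    {"ts": "2024-01-01T00:45:00", "amount": 5.0},
    {"ts": "2024-01-01T01:10:00", "amount": 7.5},
]

def batch_totals(events):
    """Batch style: collect events per hourly batch window, then process each window at once."""
    windows = defaultdict(list)
    for event in events:
        window_start = datetime.fromisoformat(event["ts"]).replace(minute=0, second=0)
        windows[window_start.isoformat()].append(event["amount"])
    return {window: sum(amounts) for window, amounts in windows.items()}

def stream_running_total(events):
    """Real-time style: transform each event as it arrives and emit a result immediately."""
    total = 0.0
    for event in events:
        total += event["amount"]
        yield total

hourly = batch_totals(EVENTS)                  # one result per batch window
running = list(stream_running_total(EVENTS))   # one result per incoming event
```

The batch function produces nothing until a whole window has been collected, whereas the streaming generator yields an updated value after every event, which is the property that features like fraud detection rely on.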

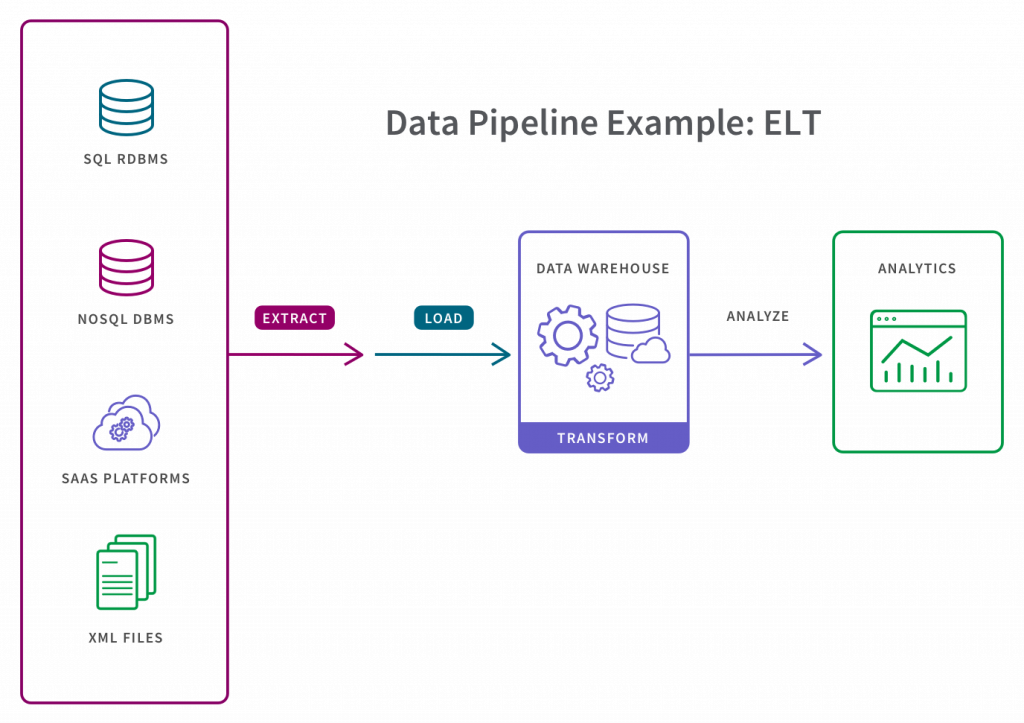

Different types of ETL data pipelines

Data pipelines are categorized based on their use case. The most common types of data pipelines employ either batch processing or real-time processing:

Batch processing pipelines:

Real-time processing pipelines:

- As organizations continue along the data-driven digital transformation journey, they're centralizing their data and analytics in cloud data warehouses and data lakes to drive advanced analytics and data science use cases. A transformation with such high impact potential requires an enterprise-scale, cloud-native data integration solution to rapidly develop and operationalize end-to-end data pipelines, and to modernize legacy applications for AI.

- Informatica's solutions provide comprehensive, codeless, AI-powered, cloud-native data integration. Create your data pipelines across a multi-cloud environment including AWS, Azure, GCP, Snowflake, Databricks, etc. Ingest, enrich, transform, prepare, scale, and share any data at any volume, velocity, and latency for your data integration or data science initiatives.

Informatica’s cloud ETL for data integration

ETL in industry

ETL is an essential component in data integration projects across a variety of industries. Organizations may use ETL to increase operational efficiencies, improve customer loyalty, deliver omnichannel experiences, and find new revenue streams or business models:

Healthcare:

Healthcare organizations use ETL in their holistic approach to data management. By synthesizing disparate data across their enterprise, healthcare organizations are accelerating clinical and business processes while improving member, patient, and provider experiences.

Public sector:

Public sector organizations use ETL to surface the insights they need to maximize their efforts while operating under strict budgets. Tight budgets mean greater efficiency is essential to delivering services with limited available resources. Data integration makes it possible for governmental departments to make the best use of both data and funding.

Manufacturing:

Manufacturing leaders transform their data to optimize operational efficiency, ensure supply chain transparency, resiliency, and responsiveness, and improve omnichannel experiences while ensuring regulatory compliance.

Financial services:

Financial institutions use ETL in their data integrations to access data that is transparent, holistic, and protected. This helps them grow revenue, deliver personalized customer experiences, detect and prevent fraudulent activity, and realize fast value from mergers and acquisitions while complying with new and existing regulations. They need to understand who their customers are and how to deliver services that fit their specific needs.

- Ab Initio, a multinational software company based in Lexington, Massachusetts, United States, developed a GUI-based parallel processing software for ETL. The other historical changes in the ETL journey are outlined here.


Who Invented ETL?

- Data parallelism: splitting a single file into smaller data files.

- Pipeline parallelism: allowing several components to run simultaneously on the same data.

- Component parallelism: running the executables (the processes involved) simultaneously on different data to do the same job.

- Advanced ETL tools like PowerCenter and Metadata Messenger help you produce structured data faster, automatically, and with high impact, according to your business needs.

- You can use ready-made database and metadata modules with a drag-and-drop mechanism in a solution that automatically configures, connects, extracts, transfers, and loads data into your target system.

What are other features of ETL Tool?

Parallel Processing:

ETL is implemented using a concept called parallel processing. Parallel processing is computation performed by multiple processes executing simultaneously. ETL can work with three types of parallelism: data, pipeline, and component parallelism.
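As a rough illustration of data parallelism, the sketch below splits one dataset into smaller chunks and transforms the chunks concurrently. It makes several assumptions: `split`, `transform_chunk`, and `parallel_transform` are hypothetical names, the transformation is a trivial clean-up, and a thread pool from Python's standard library stands in for the separate processes a real ETL engine would use (for CPU-bound work, `ProcessPoolExecutor` would be the closer match).

```python
from concurrent.futures import ThreadPoolExecutor

def split(records, parts):
    """Split one dataset into at most `parts` smaller chunks."""
    size = max(1, -(-len(records) // parts))  # ceiling division, at least 1
    return [records[i:i + size] for i in range(0, len(records), size)]

def transform_chunk(chunk):
    """The same job applied to every chunk: trim whitespace and upper-case."""
    return [value.strip().upper() for value in chunk]

def parallel_transform(records, workers=3):
    """Transform chunks concurrently and reassemble them in the original order."""
    chunks = split(records, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(transform_chunk, chunks))  # map preserves chunk order
    return [item for chunk in results for item in chunk]

cleaned = parallel_transform([" a", "b ", " c ", "d", "e"], workers=2)
```

Because `pool.map` returns results in the order the chunks were submitted, the output keeps the original record order even though the chunks are processed concurrently.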

Visual ETL:

The characteristics of a Good ETL Tool

1. It should increase data connectivity and scalability.

2. It should be capable of connecting to multiple relational databases.

3. It should support CSV data files so that end users can import these files with little or no code.

4. It should have a user-friendly GUI that lets end users easily integrate data with the visual mapper.

5. It should allow end users to customize the data modules according to their business needs.

- Accurate and automated deployments.

- Minimizing the risks involved in adopting new technologies.

- Highly secured and trackable data.

- Self-owned and customizable access permissions.

- Exclusive data disaster recovery, data monitoring, and data maintenance.

- Attractive and creative visual data delivery.

- Centralized and cloud-based server.

- Concrete firmware protection for data and organization network protocols.

Why is Informatica ETL in a boom?

Conclusion

In the above blog post we covered all the important information relating to the Informatica ETL tool. If you find anything that is not covered, please drop your query in the comments section.