Applicants for ISTQB-related jobs are often unaware of the kinds of questions they may face during the interview. Knowing the basics of ISTQB is a must, and it is also wise to prepare for interview questions specific to the organization and what it does. That way, you will not only be seen as a suitable fit for the job but will also be well prepared for the role you aspire to. ACTE has prepared a list of the top 100 ISTQB interview questions and answers to help you during your interview.

1. What is software testing?

Ans:

Software testing is the process of evaluating and verifying that a software product does what it is supposed to do, in order to improve user satisfaction and fulfill client expectations. It involves executing a software or system component to assess one or more attributes of interest. Testing is done to find bugs, gaps, or missing requirements in the program.

2. Explain the importance of software testing.

Ans:

Testing contributes to quality assurance by ensuring the dependability and quality of software, reducing the likelihood of defects in the production environment.

- Risk Mitigation: Testing helps identify and mitigate software development and deployment risks.

- Customer Satisfaction: Properly tested software is more likely to improve user happiness and fulfill client expectations.

- Cost-Effectiveness: Early detection and correction of defects are more cost-effective than addressing issues in the later stages of development or after deployment.

3. Differentiate between verification and validation.

Ans:

- Verification: The process of evaluating work products (such as requirements, design, or code) during or at the end of each development phase to ensure that they meet specified requirements.

- Validation: The process of evaluating a system or component during or at the end of development to determine whether it satisfies the specified requirements and user needs.

4. Define the term “bug” in software testing.

Ans:

In software testing, a bug is a flaw or defect in a software system that leads to inaccurate or unexpected outcomes. Bugs can appear in several ways, including coding errors, design flaws, or incorrect system behavior. Identifying, reporting, and addressing bugs is fundamental to software testing and quality assurance.

5. What is the purpose of test cases?

Ans:

Test cases are written to confirm that a software application or system functions as intended. They specify inputs, execution conditions, and expected results so that different aspects of the software are tested thoroughly. This helps identify and resolve defects and confirms that the program satisfies quality and user requirements.

6. Explain the testing life cycle.

Ans:

The testing life cycle includes phases such as:

- Requirements Analysis: Understanding testing requirements.

- Test Planning: Developing a test plan outlining testing strategy and assets.

- Test Design: Formulating test cases according to specifications.

- Test Environment Setup: Preparing the necessary infrastructure for testing.

- Test Execution: Running test cases and capturing results.

- Defect Reporting: Identifying and documenting defects.

- Test Closure: Evaluating test completion and summarizing results.

7. What is unit testing?

Ans:

Unit testing involves testing individual units or components of a software application in isolation. The goal is to verify that each unit functions as designed, so that the smallest testable parts of the code work correctly. Developers usually perform it during the development stage. Units typically refer to individual functions, methods, or procedures.
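As a minimal sketch of what a unit test can look like, the example below tests one function in isolation with Python's built-in `unittest` module. The function `apply_discount` and its rules are hypothetical and exist only for illustration.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)
```

Saved in a file, these tests can be run with `python -m unittest`. Each test checks one behavior of the unit and does not depend on any other component.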

8. Define integration testing.

Ans:

Integration testing involves testing the interactions between different parts or elements of a software program to guarantee they work together as intended. It aims to detect defects in the interfaces and interactions between integrated components. It plays a vital role in identifying defects arising from the interactions between components and contributes to building a robust and well-integrated software system.

9. Explain system testing.

Ans:

System testing is conducted on a complete, integrated system to assess if it complies with the standards as stated. It evaluates the system and ensures that it meets functional and non-functional requirements. It aims to uncover defects or issues that may not be apparent in earlier testing phases and contributes to software reliability and performance assurance.

10. Differentiate between alpha and beta testing.

Ans:

| Alpha Testing | Beta Testing |
|---|---|
| The internal development team performs it before making the program available to outside users. It helps identify defects and issues within the software. | It involves releasing the software to a limited group of external users for testing in a real-world environment. It aims to gather end-user feedback to identify potential issues before a wider release. |

11. What is acceptance testing?

Ans:

Acceptance testing is typically the last stage of testing before release. It is conducted to determine whether a software system meets the acceptance criteria set by stakeholders. Its main objective is to validate that the software fulfills business requirements and is ready for deployment. This testing phase is typically performed by end users, business representatives, or quality assurance teams closely aligned with the end users.

12. Define functional testing.

Ans:

Testing that confirms a software program operates or executes its tasks as intended is known as functional testing. It entails verifying that the application’s features, user interfaces, databases, and APIs meet the necessary specifications by testing them. It guarantees that the program produces the desired functionalities and meets user expectations and business requirements.

13. Explain non-functional testing.

Ans:

- Non-functional testing is a subset of software testing that concentrates on assessing an application’s non-functional features, which are attributes or characteristics not directly related to specific behaviors or functions.

- It assesses performance, usability, reliability, scalability, security, and maintainability, ensuring the program complies with specific criteria related to these attributes.

14. What is regression testing?

Ans:

- Regression testing is a kind of software testing that reruns previously executed test cases to ensure that changes to the code don’t break any existing features.

- It aims to detect unintentional side effects or faults introduced by new code changes, enhancements, or bug fixes, and helps ensure that the overall integrity of the software is maintained as it evolves.

15. Define performance testing.

Ans:

One kind of software testing called performance testing assesses a software application’s responsiveness, speed, scalability, reliability, and overall performance under various conditions. Performance testing’s main objective is to determine and address any performance-related issues that may impact the user experience, system stability, or the application’s ability to handle expected workloads.

16. What is usability testing?

Ans:

Usability testing is a kind of software testing that focuses on evaluating the user interface (UI), user experience (UX), and overall usability of a software application. Its main objective is to ensure that the program is intuitive, user-friendly, and fits the demands and expectations of its intended users. This testing process involves real users interacting with the software to identify usability issues, user interface flaws, and areas for improvement.

17. Explain security testing.

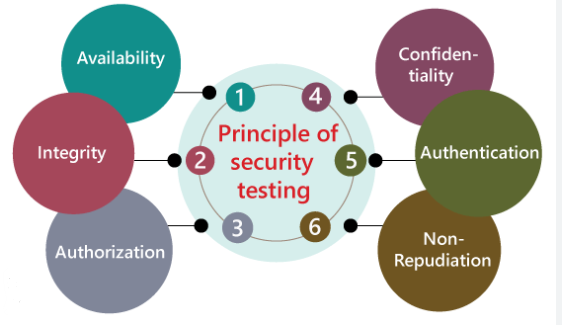

Ans:

One kind of software testing, security testing, is concerned with identifying vulnerabilities, weaknesses, and potential security risks within a software program. Security testing’s primary goal is to evaluate the robustness of a system’s security mechanisms and ensure that it can resist unauthorized access, protect sensitive data, and mitigate potential security threats.

18. Define compatibility testing.

Ans:

Compatibility testing evaluates how a software application or system behaves across different environments, configurations, platforms, devices, and browsers. Its primary goal is to verify that the program operates accurately and reliably across these conditions, providing a seamless user experience for a diverse user base.

19. What is test planning?

Ans:

Test planning is a crucial phase in the software testing process that involves defining the approach, scope, resources, schedule, and deliverables for testing a software application or system. The main objective of test planning is to outline a comprehensive strategy for testing activities, ensuring the process is well organized and aligned with project goals and requirements.

20. Explain the importance of a test strategy.

Ans:

An overview document called a test strategy outlines a software project’s testing approach and objectives. Its importance lies in:

- Providing a roadmap for testing activities.

- Defining testing scope and priorities.

- Allocating resources effectively.

- Guiding the selection of testing techniques and tools.

- Communicating the overall testing strategy to stakeholders.

- Ensuring systematic and organized testing.

21. Define test execution.

Ans:

Test execution is the process of running test cases against a software program to confirm that it operates correctly. It involves running the test cases, capturing the results, and comparing the actual outcomes with the expected outcomes. Executing tests is an essential part of the testing process to identify defects and ensure that the software meets its requirements.

22. What is test monitoring?

Ans:

Test monitoring involves tracking and overseeing the progress of testing activities. It includes collecting data on test execution, analyzing it, and ensuring that testing aligns with the defined test plan. Test monitoring helps identify deviations, supports timely decisions, and provides accurate information about the testing process.

23. How do you approach testing for different mobile devices and platforms?

Ans:

- Identify the target devices and platforms based on user demographics.

- Prioritize testing on the most popular and critical devices.

- Utilize cloud-based testing services for a diverse device and platform coverage.

- Consider device-specific functionalities and features during test design.

- Regularly update and expand the test suite to accommodate new devices and platforms.

24. Explain equivalence partitioning.

Ans:

Equivalence partitioning is a software testing method in which the application’s input data is divided into groups of equivalent or similar data. The primary objective of equivalence partitioning is to minimize the number of test cases while still achieving thorough test coverage. By selecting representative test cases from each partition, testers can efficiently validate the behavior of the software and identify potential defects.
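The idea can be sketched with a hypothetical age rule (the function name and the 18 to 65 range are assumptions made up for this example). The input domain splits into three partitions, and one representative value is tested from each:

```python
def is_eligible_age(age: int) -> bool:
    """Hypothetical rule: applicants aged 18 to 65 inclusive are accepted."""
    return 18 <= age <= 65


# One representative value per equivalence class, with the expected result.
partitions = {
    "below valid range": (10, False),
    "within valid range": (40, True),
    "above valid range": (80, False),
}

for name, (representative, expected) in partitions.items():
    actual = is_eligible_age(representative)
    assert actual == expected, f"{name}: expected {expected}, got {actual}"
```

Three test values stand in for the whole input domain, because every value inside a partition is expected to be handled the same way.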

25. Define boundary value analysis.

Ans:

Boundary value analysis is a software testing technique that concentrates on examining the data at the edges or boundaries of input domains. It aims to identify potential errors or defects at the boundaries of acceptable input ranges. This technique is closely related to equivalence partitioning, and the two are often applied together.
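A minimal sketch, assuming a hypothetical order-quantity field that accepts values from 1 to 100 inclusive: the test values sit just outside, on, and just inside each boundary.

```python
def is_valid_quantity(qty: int) -> bool:
    """Hypothetical rule: order quantity must be from 1 to 100 inclusive."""
    return 1 <= qty <= 100


# Boundary values for the range 1..100 and the result expected for each.
boundary_cases = [
    (0, False),    # just below the lower boundary
    (1, True),     # lower boundary
    (2, True),     # just above the lower boundary
    (99, True),    # just below the upper boundary
    (100, True),   # upper boundary
    (101, False),  # just above the upper boundary
]

for value, expected in boundary_cases:
    assert is_valid_quantity(value) == expected, f"unexpected result for {value}"
```

An off-by-one mistake such as writing `1 < qty` instead of `1 <= qty` would be caught by the case for the value 1, which a mid-range test value would miss.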

26. What is decision table testing?

Ans:

- Decision table testing is a systematic and structured test design technique used in software testing. It is particularly effective for handling complex business rules and conditions.

- Decision tables represent combinations of conditions and corresponding actions or outcomes. This technique helps testers create a comprehensive set of test cases to verify that a software application behaves correctly under different combinations of input conditions.
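As an illustration, the sketch below encodes a decision table for a hypothetical discount rule with two conditions (the rule, names, and percentages are invented for the example). Two boolean conditions give four combinations, and each row of the table becomes one test case:

```python
def discount_percent(is_member: bool, order_over_100: bool) -> int:
    """Hypothetical business rule under test."""
    if is_member and order_over_100:
        return 15
    if is_member:
        return 10
    if order_over_100:
        return 5
    return 0


# Decision table: each row is one rule (condition combination -> expected action).
decision_table = [
    # is_member, order_over_100, expected discount
    (True, True, 15),
    (True, False, 10),
    (False, True, 5),
    (False, False, 0),
]

for is_member, order_over_100, expected in decision_table:
    assert discount_percent(is_member, order_over_100) == expected
```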

27. Explain state transition testing.

Ans:

State transition testing is a software testing technique that focuses on the transitions between different states of a system. It particularly applies to systems whose behavior changes in response to internal and external events. State transition testing helps ensure the software behaves as expected as it moves from one state to another.
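A small sketch of the technique, using a hypothetical turnstile with two states and two events (the model and all names are assumptions for illustration). The tests drive the system through event sequences and check the resulting state, including an event that has no defined transition:

```python
# Hypothetical turnstile model: (current state, event) -> next state.
TRANSITIONS = {
    ("locked", "coin"): "unlocked",
    ("locked", "push"): "locked",
    ("unlocked", "coin"): "unlocked",
    ("unlocked", "push"): "locked",
}


def next_state(state: str, event: str) -> str:
    """Return the next state, or raise if the transition is not defined."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"invalid transition: {state!r} on {event!r}") from None


def run(events: list[str], start: str = "locked") -> str:
    """Apply a sequence of events and return the final state."""
    state = start
    for event in events:
        state = next_state(state, event)
    return state


# Valid transitions, exercised through short event sequences.
assert run(["push"]) == "locked"
assert run(["coin"]) == "unlocked"
assert run(["coin", "coin"]) == "unlocked"
assert run(["coin", "push"]) == "locked"

# An undefined event must be rejected rather than silently ignored.
try:
    run(["kick"])
except ValueError:
    rejected = True
else:
    rejected = False
assert rejected
```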

28. Define use case testing.

Ans:

Use case testing focuses on validating the functionality of a software application based on its use cases. A use case represents a specific interaction between a user (or an external system) and the software, outlining the steps and conditions under which the system must perform a particular action to achieve a specific goal.

29. What is a defect?

Ans:

In software development and testing, a defect refers to an imperfection, flaw, or issue in a software product that deviates from its intended behavior or specifications. Defects are also commonly known as bugs or software errors. These problems might appear in several ways, such as functional errors, design flaws, performance issues, and other unexpected behaviors that impact the software’s functionality, reliability, or usability.

30. Explain the defect life cycle.

Ans:

- The defect life cycle, often called the bug life cycle, is the sequence of stages a defect goes through from its identification to its closure in software development and testing.

- Each stage represents a specific state or phase of the defect, and the defect progresses through these stages as it is reported, analyzed, tested, and ultimately verified for closure.

- The defect life cycle helps teams manage and track the status of defects in the software development process.

31. Define severity and priority.

Ans:

- Severity: The degree to which a defect affects the system’s operation. It reflects how serious the defect is and how much harm it can cause. Severity levels are often categorized, for example as critical, major, minor, and trivial.

- Priority: The level of importance assigned to a flaw according to how it affects the project and business goals. Priority helps determine the order in which defects should be addressed and fixed.

32. What is a defect report?

Ans:

A defect report, also known as a bug report or an issue report, is a document that provides detailed information about a defect or issue identified during the software testing process. The defect report serves as a formal communication tool between the testing and development teams, providing essential information to facilitate the understanding, analysis, and resolution of the reported defect.

33. Explain the concept of defect clustering.

Ans:

Defect clustering is a principle in software testing, often associated with the Pareto principle, which holds that a small number of modules or functionalities in a software application tend to contain most of the defects. In other words, defects tend to concentrate or cluster around specific areas of the software rather than being evenly distributed across the entire codebase. It should not be confused with the “Pesticide Paradox,” a separate principle stating that repeating the same tests eventually stops revealing new defects.

34. What is manual testing?

Ans:

- Manual testing is the execution of test cases by hand, without the use of automated testing tools or scripts. In manual testing, a tester interacts with the software application, explores various features, and verifies its behavior by following predefined test scenarios.

- This type of testing relies on the tester’s skills, experience, and attention to detail to identify defects and ensure that the software meets specified requirements.

35. Define automated testing.

Ans:

Automated testing is a software testing method that uses specialized tools to execute pre-written test cases and compare the actual results with the expected results. The testing procedure is automated through testing tools, scripts, and frameworks, reducing the need for manual intervention in the execution of tests. Automated testing is employed to improve the efficiency, repeatability, and accuracy of the testing process.

36. Explain smoke testing.

Ans:

Smoke testing, also known as build verification testing, is a preliminary, high-level testing activity performed on a software build to determine whether it is stable enough for more in-depth testing. Smoke testing aims to quickly identify significant issues or defects in the software build before proceeding with more extensive and detailed testing.

37. Define sanity testing.

Ans:

- Sanity testing is a quick, narrow check performed to evaluate whether a specific set of functionalities or components of an application works correctly after a new build or changes to the software.

- The purpose of sanity testing is to ensure that the critical features are operational and to confirm that the application is stable enough for more detailed testing.

38. What is exploratory testing?

Ans:

Exploratory testing is a dynamic and flexible software testing methodology in which testers concurrently create and implement test cases while exploring the functionalities of an application. Unlike scripted testing, where tests are predefined and follow a script or test case document, exploratory testing relies on the tester’s creativity, domain knowledge and experience to uncover software defects, vulnerabilities, or unexpected behaviors.

39. Explain ad-hoc testing.

Ans:

- Ad-hoc testing is an informal and unstructured software testing method where testers exercise the software without predefined test plans, test cases, or documentation.

- The primary objective of ad-hoc testing is to discover defects, vulnerabilities, or unexpected behaviors in the software through spontaneous and exploratory testing efforts.

- Testers rely on their intuition, experience, and domain knowledge to identify issues during the testing process.

40. Name a few test management tools.

Ans:

- Jira: A widely used tool for issue tracking and project management that supports test case management.

- TestRail: A web-based test management tool for organizing and managing test cases, test plans, and test runs.

- qTest: A comprehensive test management platform with features for test case management, execution, and reporting.

- TestLink: An open-source tool that facilitates test case management, execution, and reporting.

41. What is the purpose of version control systems in testing?

Ans:

In testing, version control systems (VCS) are crucial for managing and tracking modifications to the source code, test scripts, and other artifacts. The primary purposes include:

- Collaboration: Several team members can work on a project simultaneously without overwriting each other’s changes.

- History Tracking: VCS maintains a history of changes, allowing teams to understand when, why, and by whom changes were made.

- Rollback: Teams can revert to previous versions in case of issues, ensuring stability.

- Branching and Merging: VCS enables the creation of branches for parallel development and the merging of changes back into the main codebase.

42. Explain the use of defect tracking tools.

Ans:

Defect-tracking tools help manage and monitor defects throughout their lifecycle. They provide a centralized repository for defect information and aid in:

- Recording Defects: Capturing detailed information about identified defects.

- Assigning and Prioritizing: Assigning defects to appropriate team members and prioritizing them based on severity and impact.

- Tracking Progress: Monitoring the status of defect resolution and testing.

- Communication: Facilitating communication between developers, testers, and other stakeholders.

43. Define performance testing tools.

Ans:

Performance testing tools are used to assess the speed, scalability, and responsiveness of a software application under different conditions. Examples include:

- Apache JMeter

- LoadRunner

- Gatling

- ApacheBench (ab)

44. What is the purpose of automation testing tools?

Ans:

Automation testing tools are designed to automate the execution of test cases, increasing efficiency and repeatability. The primary purposes include:

- Faster Execution: Automation speeds up testing, allowing quicker feedback on software modifications.

- Reusability: Test scripts may be applied to many releases and environments.

- Regression Testing: Automation facilitates the continuous execution of regression tests to identify defects introduced by code changes.

- Increased Test Coverage: Automated tests can cover more scenarios than manual testing alone.

45. What are testing metrics?

Ans:

Testing metrics are quantitative measures that provide insights into the testing process’s effectiveness and quality. Examples include:

- Defect Density

- Test Case Pass Rate

- Code Coverage

- Test Execution Time

- Open Defect Count

46. Explain the concept of test coverage.

Ans:

In software testing, test coverage is a metric that assesses how much of a program’s source code a set of test cases exercises. It provides insights into which parts of the code have been tested and which still need testing. Test coverage analysis aims to ensure that the testing effort is thorough and that critical areas of the software are adequately tested.

47. Define defect density.

Ans:

Defect density is a software quality metric that quantifies the number of defects or issues identified in a software product relative to a specific unit of measurement, often expressed per unit of size or effort. It gives insight into the quality and reliability of software by measuring the concentration of defects in a given portion of the code or project.

The formula for calculating defect density is typically:

- Defect Density = Number of Defects / Unit of Measurement
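The formula can be worked through with a short sketch. The unit here is thousands of lines of code (KLOC), which is one common choice but an assumption for this example, and the numbers are purely illustrative.

```python
def defect_density(defect_count: int, size_kloc: float) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if size_kloc <= 0:
        raise ValueError("size_kloc must be positive")
    return defect_count / size_kloc


# Illustrative numbers only: 30 defects found in a 15 KLOC module.
print(defect_density(30, 15.0))  # 2.0 defects per KLOC
```

The same function works with other units, such as function points or modules, as long as the unit is applied consistently when comparing components or releases.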

48. What is the significance of test efficiency?

Ans:

Test efficiency is a crucial aspect of software testing that measures how effectively testing activities are conducted within a given time frame and with available resources. It contributes significantly to the overall software development and testing process, impacting the quality of the software, time-to-market, and the cost-effectiveness of the testing effort.

49. Explain Agile testing methodology.

Ans:

Agile testing is a collaborative, iterative approach to software testing that aligns with Agile development principles. It emphasizes continuous testing throughout the development cycle, frequent communication, and flexibility to accommodate changing requirements. Agile methodologies in which it is practiced include Scrum, Kanban, and Extreme Programming (XP).

50. Define the role of a tester in Agile.

Ans:

In Agile, a tester plays a collaborative and integrated role throughout the development lifecycle. Responsibilities include:

- Test Planning: Collaborating with the team to plan testing activities for each iteration.

- Continuous Testing: Performing testing activities continuously during the development cycle.

- Collaboration: Engaging in daily stand-ups, sprint planning, and retrospectives with the development team.

- Automation: Contributing to and maintaining automated test scripts for regression testing.

- Feedback: Providing feedback on user stories, acceptance criteria, and potential risks.

- Adaptability: Being flexible and adapting to changing requirements and priorities.

51. What is the purpose of sprint testing in Agile?

Ans:

Sprint testing in Agile is conducted within a specific sprint or iteration. The purpose is to ensure that the increments of the product developed during the sprint meet the defined acceptance criteria and are of high quality. Sprint testing helps identify and address defects early in development, enabling continuous improvement and finishing each sprint with a potentially shippable product.

52. Explain the concept of continuous testing.

Ans:

Continuous testing is an approach where testing activities are integrated seamlessly into the software delivery pipeline. It involves the automated execution of tests throughout the development lifecycle, providing rapid feedback on the quality of code changes. Continuous testing identifies defects early, promotes faster release cycles, and ensures the software meets business requirements.

53. What is a test plan?

Ans:

- A test plan is a document that outlines the overall strategy, objectives, resources, schedule, and approach to be used for a specific software testing effort.

- It serves as a detailed guide that describes how testing will be conducted, what features or functionalities will be tested, the test environment, the scope of testing, and the roles and responsibilities of the team members participating in the testing process.

54. Define test cases.

Ans:

Test cases are detailed specifications that describe the conditions, inputs, actions, and expected outcomes for the execution of a specific test scenario. They act as the fundamental components of the testing process, providing step-by-step instructions for testers to follow when evaluating the functionality of a software application. Test cases are designed to verify whether the software behaves as intended and to find any flaws or differences between the anticipated and actual outcomes.

55. Explain the purpose of a test summary report.

Ans:

A test summary report is a document that summarizes the testing activities and results at the end of a testing phase or project. It includes information on test execution, test coverage, defects found and fixed, and any deviations from the test plan. The test summary report provides stakeholders an overview of the testing process and the software’s current state.

56. Define the traceability matrix.

Ans:

- A traceability matrix is a document that establishes the relationship between different levels of documentation throughout the software development and testing process.

- It provides a clear and systematic way to trace and track the alignment between various project artifacts, ensuring that requirements, test cases, and other deliverables are linked and in sync.

- The primary purpose of a traceability matrix is to enable better visibility, manageability, and validation of project requirements, testing efforts, and their interdependencies.
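A traceability matrix can be sketched as a simple mapping from requirements to the test cases that cover them. The requirement and test case IDs below are hypothetical; real projects usually keep this data in a test management tool or spreadsheet.

```python
# Hypothetical requirement IDs mapped to the test cases that cover them.
traceability = {
    "REQ-1": ["TC-1", "TC-2"],
    "REQ-2": ["TC-3"],
    "REQ-3": [],
}


def uncovered_requirements(matrix: dict[str, list[str]]) -> list[str]:
    """Return requirements that have no linked test case."""
    return [req for req, tests in matrix.items() if not tests]


def requirements_for(matrix: dict[str, list[str]], test_id: str) -> list[str]:
    """Trace backwards from a test case to the requirements it verifies."""
    return [req for req, tests in matrix.items() if test_id in tests]


print(uncovered_requirements(traceability))    # ['REQ-3']
print(requirements_for(traceability, "TC-3"))  # ['REQ-2']
```

The first query exposes a coverage gap (a requirement with no test), and the second shows which requirements are affected when a given test case fails.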

57. Why do you want to pursue ISTQB certification?

Ans:

The ISTQB (International Software Testing Qualifications Board) certification is an internationally recognized qualification for software testers. Pursuing ISTQB certification demonstrates a commitment to best practices in software testing, a standardized understanding of testing concepts, and proficiency in industry-accepted terminology. Practical experience, problem-solving skills, and a deep understanding of the specific context in which testing is conducted are also crucial for success in the field of software testing.

58. What are the benefits of ISTQB certification for a tester?

Ans:

- Global Recognition: ISTQB certification is recognized worldwide, enhancing career opportunities globally.

- Standardized Knowledge: Provides a standardized understanding of testing concepts and terminology.

- Professional Credibility: Enhances professional credibility and trust among employers and peers.

- Quality Assurance: Demonstrates a commitment to quality and best practices in software testing.

- Career Advancement: Creates opportunities for professional progression and higher-level positions.

59. Explain the difference between mobile app testing and web app testing.

Ans:

- Mobile app testing focuses on native mobile applications installed on devices.

- Web app testing targets applications accessed through web browsers.

- Mobile app testing considers device-specific features and capabilities.

- Web app testing addresses cross-browser compatibility and responsiveness.

60. What is continuous improvement in testing?

Ans:

Continuous improvement in testing is the ongoing process of enhancing testing processes, methodologies, and practices to achieve better efficiency, effectiveness, and quality. It involves regularly evaluating testing activities, identifying areas for improvement, implementing changes, and learning from experience. Continuous improvement is a core concept in Agile methodologies, promoting adaptation and optimization throughout the software development lifecycle.

61. Define the purpose of a test process.

Ans:

The purpose of a test process is to systematically and effectively evaluate a software application to ensure its quality and readiness for release. The test process includes planning, designing test cases, executing tests, identifying and managing defects, and providing stakeholders with relevant information about the software’s quality. The overall goal is to deliver a reliable and high-quality software product.

62. Explain the importance of lessons learned in testing.

Ans:

Lessons learned in testing provide valuable insights and knowledge gained from past testing experiences. They are important for:

- Identifying areas for improvement in testing processes and methodologies.

- Avoiding the repetition of mistakes and pitfalls.

- Enhancing the efficiency and effectiveness of future testing efforts.

- Facilitating continuous improvement and adaptation to changing project requirements.

63. How do you handle tight deadlines in testing?

Ans:

To handle tight deadlines in testing:

- Prioritize testing activities based on critical functionalities.

- Focus on high-priority test cases and critical paths.

- Work closely with the development team in order to resolve critical issues promptly.

- Automate repetitive and time-consuming tasks.

- Communicate effectively with stakeholders about potential risks and trade-offs.

64. Describe a challenging situation you faced in testing and how you resolved it.

Ans:

In one challenging situation, the project requirements changed unexpectedly. To resolve it, I:

- Collaborated with the development team to understand the changes.

- Conducted a quick impact analysis to identify affected test cases.

- Adjusted the test plan and prioritized test cases based on critical functionalities.

- Communicated effectively with stakeholders about potential delays and risks.

- Closely collaborated with the team to carry out testing efficiently and meet the adjusted deadlines.

65. How do you prioritize test cases?

Ans:

Test cases can be prioritized based on factors such as:

- Criticality of functionalities.

- Business impact of potential defects.

- Frequency of use by end-users.

- Dependencies between test cases.

- Risks associated with specific features.
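One way to turn such factors into an execution order is a simple scoring scheme. The sketch below is only one possible approach: the class, the 1 to 5 rating scale, the unweighted sum, and the sample test cases are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class PrioritizedCase:
    name: str
    criticality: int      # 1 (low) to 5 (high)
    business_impact: int  # 1 (low) to 5 (high)
    usage_frequency: int  # 1 (rare) to 5 (constant)


def priority_score(case: PrioritizedCase) -> int:
    """Hypothetical unweighted score: higher means run earlier."""
    return case.criticality + case.business_impact + case.usage_frequency


cases = [
    PrioritizedCase("export report to PDF", 2, 2, 1),
    PrioritizedCase("user login", 5, 5, 5),
    PrioritizedCase("update profile picture", 1, 1, 2),
]

# Sort so that the highest-scoring test cases come first.
ordered = sorted(cases, key=priority_score, reverse=True)
print([case.name for case in ordered])
# ['user login', 'export report to PDF', 'update profile picture']
```

A team could weight the factors differently or add others from the list, such as dependencies between test cases, without changing the overall shape of the approach.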

66. You discover a critical defect just before a software release. What would you do?

Ans:

In the event of discovering a critical defect just before a release, I would:

- Immediately report the defect to the development team and stakeholders.

- Provide detailed information about the defect’s impact and potential risks.

- Collaborate with the team to assess the severity and explore potential workarounds.

- Discuss possible mitigation strategies, such as a quick fix or delaying the release.

- Work closely with the development team to expedite the resolution process.

67. How do you approach testing in an environment with constantly changing requirements?

Ans:

In an environment with constantly changing requirements:

- Embrace Agile testing methodologies to adapt to changes efficiently.

- Collaborate closely with the development team and stakeholders.

- Prioritize flexibility and maintain open communication channels.

- Use risk-based testing to focus on critical functionalities.

- Implement continuous testing practices to catch and address changes promptly.

68. Describe a situation where automated testing was more effective than manual testing.

Ans:

In a situation where a large set of repetitive regression tests needed to be executed quickly and consistently, automated testing was more effective than manual testing. Automated tests ensured rapid and accurate execution, allowing for frequent regression testing without significant time and resource investments. This increased test coverage and helped identify defects early in the development process.

69. Explain code coverage.

Ans:

Code coverage is a metric that quantifies how much of an application’s source code is executed by a set of test cases. It reports the proportion of lines, branches, or paths exercised during testing. Code coverage helps assess the thoroughness of testing and identifies areas that lack adequate coverage.
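As a rough illustration of the idea, the sketch below records by hand which branches of a function were executed and reports the percentage. The `shipping_fee` function and the branch labels are hypothetical; in practice a coverage tool such as coverage.py gathers this data automatically instead of requiring manual instrumentation.

```python
# Branch labels recorded as the code under test runs (manual instrumentation).
hit_branches = set()
ALL_BRANCHES = {"free", "paid"}


def shipping_fee(order_total):
    """Hypothetical code under test with two branches."""
    if order_total >= 50:
        hit_branches.add("free")
        return 0
    hit_branches.add("paid")
    return 5


def branch_coverage():
    """Percentage of known branches executed so far."""
    return 100 * len(hit_branches & ALL_BRANCHES) / len(ALL_BRANCHES)


shipping_fee(80)
print(branch_coverage())  # 50.0, only the "free" branch has run

shipping_fee(20)
print(branch_coverage())  # 100.0, both branches have run
```

A single test leaves half of the branches unexercised, which is exactly the kind of gap a coverage report is meant to expose.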

70. What is control flow in white box testing?

Ans:

Control flow in white box testing refers to the path followed by the execution of the program’s instructions. It involves analyzing the logical sequence of statements, branches, loops, and conditions within the code. Understanding the control flow is crucial for designing test cases that cover different paths through the code and ensuring comprehensive testing of the software’s functionality.
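To illustrate, the sketch below shows a small hypothetical function, `grade`, with an early exit, a loop, and a final decision, followed by one check per path through it. The function and the pass mark of 60 are assumptions chosen for the example.

```python
def grade(scores):
    """Hypothetical code under test with three distinct paths."""
    if not scores:            # decision 1: empty input exits early
        return "no data"
    total = 0
    for score in scores:      # loop: runs once per score
        total += score
    average = total / len(scores)
    if average >= 60:         # decision 2: pass or fail
        return "pass"
    return "fail"


# One test per path through the control flow.
assert grade([]) == "no data"      # path 1: early exit, loop skipped
assert grade([70, 80]) == "pass"   # path 2: loop runs, decision 2 is true
assert grade([10, 20]) == "fail"   # path 3: loop runs, decision 2 is false
print("all control-flow paths exercised")
```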

71. Define data flow in white box testing.

Ans:

- Data flow in white box testing refers to the movement of data within a software application during its execution.

- White box testers analyze how data is input, processed, and output by examining the paths through which data travels within the code.

- Understanding data flow helps identify potential points of failure, uncover data-related defects, and ensure data integrity.

72. What is equivalence class partitioning in black box testing?

Ans:

Equivalence class partitioning is a black box testing technique in which input values are divided into groups, or classes, based on similar characteristics. Test cases are then designed to represent each equivalence class. This reduces the number of test cases while still ensuring that each class is adequately tested, since inputs within the same class are expected to behave similarly.
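For example, suppose a field accepts ages from 18 to 65 inclusive. That rule, and the `is_eligible` function below, are assumptions made for illustration. The input domain then splits into three classes, and one representative value per class is enough:

```python
def is_eligible(age):
    """Hypothetical rule under test: ages 18 to 65 inclusive are accepted."""
    return 18 <= age <= 65


# One representative value and expected result per equivalence class.
PARTITIONS = {
    "below valid range": (10, False),
    "within valid range": (40, True),
    "above valid range": (70, False),
}

for name, (value, expected) in PARTITIONS.items():
    assert is_eligible(value) == expected, name
    print(f"{name}: age {value} -> {expected}")
```

Three test cases stand in for every possible age, on the assumption that all values within a class are handled the same way.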

73. Explain boundary value analysis in black box testing.

Ans:

Boundary Value Analysis (BVA) is a black-box testing technique used to design test cases around the boundaries or edges of input domains. The idea behind this technique is that defects often occur at the boundaries of input ranges, where the behavior of the software is more likely to be incorrect or inconsistent. BVA is commonly applied to variables that have a range of valid input values.
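As a sketch, assume a hypothetical rule that accepts ages from 18 to 65 inclusive. Boundary value analysis then picks the values at, just below, and just above each edge of that range. The helper `boundary_values` is an illustrative name, not part of any library.

```python
def is_eligible(age):
    """Hypothetical rule under test: ages 18 to 65 inclusive are accepted."""
    return 18 <= age <= 65


def boundary_values(low, high):
    """Values just outside, on, and just inside each boundary of [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


values = boundary_values(18, 65)
print(values)  # [17, 18, 19, 64, 65, 66]

expected = [False, True, True, True, True, False]
for value, want in zip(values, expected):
    assert is_eligible(value) == want, value
```

An off-by-one mistake such as writing `18 < age` instead of `18 <= age` would be caught by the test at 18, which a mid-range value like 40 would never reveal.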

74. Define state transition testing in black box testing.

Ans:

State transition testing is used for systems that behave differently depending on their internal state. Test cases are designed to cover transitions between the system’s states and to ensure that the system behaves as expected during these transitions. This technique is commonly applied to finite state machines.
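A minimal sketch of the idea, assuming a hypothetical login session with two states and three events. The transition table and the names `TRANSITIONS`, `next_state`, and `run` are illustrative only.

```python
# (current state, event) -> next state; any pair not listed is invalid.
TRANSITIONS = {
    ("logged_out", "login_ok"): "logged_in",
    ("logged_out", "login_fail"): "logged_out",
    ("logged_in", "logout"): "logged_out",
}


def next_state(state, event):
    """Apply one event, rejecting transitions the table does not allow."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"invalid transition: {event!r} in state {state!r}") from None


def run(events, start="logged_out"):
    """Apply a sequence of events and return the final state."""
    state = start
    for event in events:
        state = next_state(state, event)
    return state


# Cover every valid transition at least once.
assert run(["login_fail"]) == "logged_out"
assert run(["login_ok"]) == "logged_in"
assert run(["login_ok", "logout"]) == "logged_out"

# Also test an invalid transition: logging out while already logged out.
try:
    next_state("logged_out", "logout")
except ValueError as err:
    print(err)
```

Covering each entry in the table once exercises every valid transition, and the negative test confirms that unlisted transitions are rejected.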

75. How do you identify and prioritize risks in testing?

Ans:

Identifying Risks:

- Review project documentation, requirements, and design.

- Conduct risk brainstorming sessions with the project team.

- Analyze past project data and lessons learned.

- Use risk checklists and templates.

Prioritizing Risks:

- Assess the likelihood and impact of each identified risk.

- Rank risks according to how they could affect the goals of the project.

- Consider the level of uncertainty associated with each risk.

- Collaborate with stakeholders to determine risk tolerance.
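A common way to rank risks is by exposure, calculated as likelihood multiplied by impact. The sketch below assumes a 1 to 5 scale for both ratings, and the sample risks are invented for illustration.

```python
# Sample risks with assumed 1-5 ratings for likelihood and impact.
risks = [
    {"name": "payment gateway timeout", "likelihood": 3, "impact": 5},
    {"name": "typo on help page", "likelihood": 4, "impact": 1},
    {"name": "data loss during migration", "likelihood": 2, "impact": 5},
]


def exposure(risk):
    """Risk exposure = likelihood x impact."""
    return risk["likelihood"] * risk["impact"]


ranked = sorted(risks, key=exposure, reverse=True)

for risk in ranked:
    print(f"{exposure(risk):>2}  {risk['name']}")
```

The help page typo is the most likely item here but ranks last, because its impact is low.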

76. Explain the concept of risk-based testing.

Ans:

Risk-based testing is a software testing methodology that prioritizes and focuses testing effort according to the risks identified for a software application. The goal of risk-based testing is to allocate testing resources efficiently by concentrating on areas of the application that are considered to be high-risk, where defects are more likely to occur and have a significant impact on the project or business.

77. What is the purpose of a risk management plan?

Ans:

A risk management plan outlines how project risks will be identified, assessed, monitored, and mitigated throughout the project lifecycle. The purposes include:

- Providing a structured approach to risk management.

- Communicating the risk management strategy to stakeholders.

- Guiding risk identification and assessment activities.

- Defining roles and responsibilities for risk management.

78. Define a test environment.

Ans:

A test environment refers to a controlled and configured setup that mimics the real-world production environment in which software applications are tested. It provides the necessary infrastructure, hardware, software, and network configurations to conduct testing activities effectively. The test environment aims to replicate the conditions under which the software will operate in production, allowing testers to assess its behavior, performance, and functionality in a controlled and predictable manner.

79. Explain the importance of test data in testing.

Ans:

Test data is crucial for testing because:

- It represents scenarios that help verify the functionality of the software.

- It uncovers defects by testing under various conditions.

- It ensures the software handles different types of inputs correctly.

- It validates that the system processes data accurately.

80. How do you set up a test environment?

Ans:

To set up a test environment:

- Identify hardware and software requirements.

- Install necessary applications, databases, and dependencies.

- Configure network settings to mimic the production environment.

- Populate the environment with appropriate test data.

- Ensure the environment is isolated and controlled.

- Validate that the test environment is replicating production conditions accurately.

81. What is a test execution plan?

Ans:

A test execution plan is a document that outlines the approach, resources, schedule, and activities for executing the test cases during a specific testing phase. It includes details such as test execution strategy, test environment setup, entry and exit criteria, test data requirements, and the allocation of responsibilities among team members. The level of detail in a test execution plan varies with the scope and complexity of the project.

82. Explain the concept of smoke testing.

Ans:

- Smoke testing, also known as a “build verification test” or “smoke test suite,” is a preliminary and minimal set of tests conducted on a software build or release.

- Smoke testing’s main objective is to quickly determine whether the most critical functionalities of the application work correctly after a new build or version is deployed.

- It is typically performed early in the testing process, often right after the software is built, to catch major defects before more in-depth testing is conducted.

83. What is regression testing, and why is it important?

Ans:

Regression testing is a software testing technique used to confirm that new features, improvements, or bug fixes have not negatively affected the program’s existing functionality. Its main objective is to ensure that modifications to the codebase have not introduced new defects or unintended side effects in previously working areas of the software.

84. How do you handle flaky tests in automated testing?

Ans:

To handle flaky tests:

- Investigate and address the root cause of flakiness.

- Improve test case design and make tests more robust.

- Retry flaky tests within the automated testing framework.

- Analyze test results over multiple test runs.

- Regularly review and update automated tests to maintain reliability.
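The retry step above can be sketched as a small decorator. The `retry` helper below is a hypothetical illustration, not the API of any particular test framework, and the flakiness is simulated with a counter so that the example is deterministic.

```python
import functools


def retry(times=3, exceptions=(AssertionError,)):
    """Re-run a check up to `times` times before reporting the failure."""
    def decorator(check):
        @functools.wraps(check)
        def wrapper(*args, **kwargs):
            last_error = None
            for attempt in range(1, times + 1):
                try:
                    return check(*args, **kwargs)
                except exceptions as err:
                    last_error = err
                    print(f"{check.__name__}: attempt {attempt} failed: {err}")
            raise last_error
        return wrapper
    return decorator


# Simulated flakiness: the check only succeeds on the third call.
attempts = {"count": 0}


@retry(times=3)
def check_eventually_consistent():
    attempts["count"] += 1
    assert attempts["count"] >= 3, "not ready yet"


check_eventually_consistent()
print(f"passed after {attempts['count']} attempts")
```

Retries keep the pipeline moving but can hide real defects, so logging each failed attempt, as this sketch does, keeps the flakiness visible for root-cause analysis.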

85. What is the purpose of test closure activities?

Ans:

Test closure activities formally wrap up a testing cycle and capture what was learned from it. They include:

- Summarizing test results and metrics.

- Preparing a test summary report.

- Evaluating the testing process against exit criteria.

- Documenting lessons learned.

- Shutting down test environments and archiving or cleaning up test data.

86. Define the test summary report.

Ans:

A test summary report provides an overview of the testing activities performed during a testing phase or the entire project. It includes details on test execution status, test coverage, defects found and fixed, and an assessment of the overall software quality. The test summary report is typically prepared during test closure activities.

87. How do you ensure that all test cases are executed before closing a testing cycle?

Ans:

To ensure all test cases are executed before closing a testing cycle:

- Use a test management tool to track test case execution.

- Monitor progress against the test plan and schedule.

- Implement traceability matrices to link test cases to requirements.

- Regularly update and review test execution status with the testing team.
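A test management tool normally provides these checks. The sketch below only illustrates the underlying logic with a hypothetical traceability matrix and execution log, where the requirement IDs, test case IDs, and status values are all invented.

```python
# Hypothetical traceability matrix: requirement -> linked test cases.
requirements_to_tests = {
    "REQ-1": ["TC-1", "TC-2"],
    "REQ-2": ["TC-3"],
    "REQ-3": [],
}

# Hypothetical execution log: test case -> latest status.
execution_status = {"TC-1": "passed", "TC-2": "failed", "TC-3": "not run"}


def not_executed(status):
    """Test cases that have not been run in this cycle."""
    return sorted(tc for tc, state in status.items() if state == "not run")


def uncovered_requirements(matrix):
    """Requirements with no test case linked to them."""
    return sorted(req for req, tests in matrix.items() if not tests)


print("Not executed:", not_executed(execution_status))
print("Requirements without tests:", uncovered_requirements(requirements_to_tests))
```

Both lists should be empty, or each remaining item explicitly waived, before the cycle is closed.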

88. Define performance testing.

Ans:

Performance testing is a type of software testing that evaluates how a system performs under specific conditions, particularly in terms of responsiveness, speed, scalability, stability, and resource usage. Its main objective is to verify that a system or application meets the performance expectations and requirements defined by stakeholders such as end users, customers, and the business.

89. Explain the types of performance testing.

Ans:

- Load Testing: Evaluates the system’s ability to handle expected loads.

- Stress Testing: Tests the system’s behavior under extreme conditions.

- Scalability Testing: Assesses the system’s performance as load increases.

- Endurance Testing: Evaluates the system’s performance over a prolonged period.

- Volume Testing: Tests the system’s ability to handle large amounts of data.

90. What is the purpose of load testing?

Ans:

The objective of load testing is to assess the performance of a software application or system under realistic, expected levels of load, traffic, and user interaction. Load testing is a subset of performance testing and involves subjecting the system to a specified amount of work to assess its behavior, responsiveness, and ability to handle concurrent user activity.

91. How do you analyze performance test results?

Ans:

Analyzing performance test results involves:

- Identifying performance metrics such as response time, throughput, and resource utilization.

- Comparing results against performance objectives and requirements.

- Investigating any deviations from expected performance.

- Determining the root cause of performance issues.

- Providing recommendations for optimization and improvement.
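The first two steps above can be sketched with a few lines of analysis code. The sample response times, the two-second test window, and the 300 ms objective below are made-up values, and the nearest-rank method is one of several accepted ways to compute a percentile.

```python
import math
import statistics

# Hypothetical samples: response times in milliseconds from a 2-second run.
response_times_ms = [120, 135, 110, 480, 125, 140, 130, 115, 150, 128]
test_duration_s = 2.0
P95_OBJECTIVE_MS = 300  # assumed performance objective


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


mean_ms = statistics.mean(response_times_ms)
p50_ms = percentile(response_times_ms, 50)
p95_ms = percentile(response_times_ms, 95)
throughput_rps = len(response_times_ms) / test_duration_s
meets_objective = p95_ms <= P95_OBJECTIVE_MS

print(f"mean={mean_ms:.1f} ms  p50={p50_ms} ms  p95={p95_ms} ms")
print(f"throughput={throughput_rps:.1f} requests/s")
print("p95 objective met" if meets_objective else "p95 objective missed")
```

The median looks healthy here, but a single slow request pushes the 95th percentile past the objective, which is why averages alone are rarely enough when judging response times.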

92. Explain the importance of security testing.

Ans:

Security testing is crucial for:

- Identifying vulnerabilities and weaknesses in the software.

- Ensuring the confidentiality, integrity, and availability of critical data.

- Protecting against unauthorized access, data breaches, and malicious attacks.

93. What are the common security vulnerabilities in web applications?

Ans:

- SQL Injection

- Cross-Site Scripting (XSS)

- Cross-Site Request Forgery (CSRF)

- Security Misconfigurations

- Insecure Direct Object References (IDOR)

- Broken Authentication and Session Management

94. How do you perform security testing in a networked environment?

Ans:

- Conduct penetration testing to identify vulnerabilities.

- Evaluate network security controls, such as firewalls and intrusion detection systems.

- Assess the security of communication channels and data transmission.

- Implement security best practices for network configurations.

- Perform security audits and code reviews.

95. Define usability testing.

Ans:

Usability testing is a type of software testing that assesses how easily and efficiently people can interact with a product, website, or software program. Its main objective is to find problems with the user interface (UI) and user experience (UX) by observing real users as they perform specific tasks within the system. The insights gained from usability testing help designers and developers enhance the overall usability and user satisfaction of the product.