- Introduction to Azure Stream Analytics

- Limitations of Azure Stream Analytics

- Resource logs

- Next steps

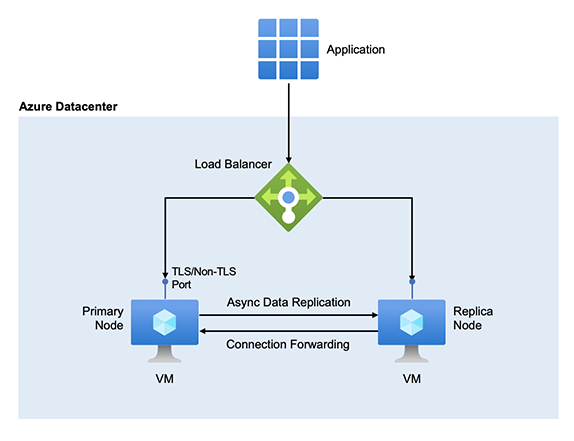

- Create an Azure Cache for Redis instance

- Error handling and retries

- Known issues

- Clean up resources

- Sign in to the Azure portal

- Use cases of Azure Stream Analytics

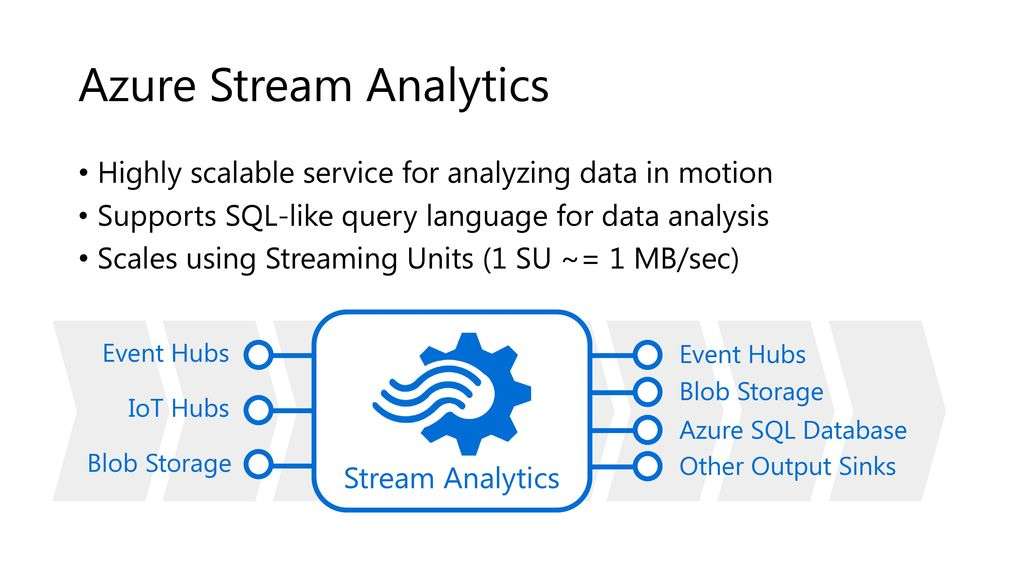

- Azure Stream Analytics

- Key capabilities and benefits

- Conclusion

- JavaScript user-defined functions

- JavaScript user-defined aggregates

- C# user-defined functions (using Visual Studio)

- Azure Machine Learning

- You can use these functions for scenarios such as real-time scoring with machine learning models, string manipulation, complex mathematical calculations, and encoding and decoding data.

- JavaScript user-defined functions in Azure Stream Analytics

- Azure Stream Analytics JavaScript user-defined aggregates

- Create .NET Standard user-defined functions for Azure Stream Analytics jobs

- Integrate Azure Stream Analytics with Azure Machine Learning

- Create and run a Stream Analytics job

- Create an Azure Cache for Redis instance

- Create an Azure Function

- Check Azure Cache for Redis for results

- If you don't have an Azure subscription, create a free account before you begin.

- Configure a Stream Analytics job to run a function

- This section demonstrates how to configure a Stream Analytics job to run a function that writes data to Azure Cache for Redis. The Stream Analytics job reads events from Azure Event Hubs and runs a query that invokes the function. The function reads data from the Stream Analytics job and writes it to Azure Cache for Redis.

- Create a Stream Analytics job with Event Hubs as input

- Follow the Real-time fraud detection tutorial to create an event hub, start the event generator application, and create a Stream Analytics job. Skip the steps to create the query and the output. Instead, see the following sections to set up an Azure Functions output.

- Create a cache in Azure Cache for Redis by using the steps described in Create a cache.

- After you create the cache, under Settings, select Access Keys. Make a note of the Primary connection string.

- Create a function in Azure Functions that can write data to Azure Cache for Redis.

- Azure Functions runtime version 4

- .NET 6.0

- StackExchange.Redis 2.2.8

- Create a default HttpTrigger function app in Visual Studio Code by following this tutorial.

- Install the Redis client library by running `dotnet add package StackExchange.Redis --version 2.2.8` in a terminal located in the project folder.

- The function can now be published to Azure.

- Open your Stream Analytics job in the Azure portal.

- Browse to your function, and select Overview > Outputs > Add. To add a new output, select Azure Function for the sink option.

- If a failure occurs while sending events to Azure Functions, Stream Analytics retries most operations. All HTTP exceptions are retried until success, except for HTTP error 413 (entity too large). An entity too large error is treated as a data error that is subject to the retry or drop policy.

- Retrying after timeouts might result in duplicate events being written to the output sink. When Stream Analytics retries a failed batch, it retries all the events in the batch. Assume that Azure Functions takes 100 seconds to process the first 10 events in a batch. After the 100 seconds pass, Stream Analytics suspends the request because it has not received a positive response from Azure Functions, and another request is sent for the same batch. The events that were already processed are then processed again, which produces duplicates.

- In the Azure portal, when you try to reset the Max Batch Size/Max Batch Count value to empty (default), the value changes back to the previously entered value upon save. In this case, manually enter the default values for these fields.

- The use of HTTP routing on your Azure Functions is currently not supported by Stream Analytics.

- Support for connecting to Azure Functions hosted in a virtual network is not enabled.

- From the left-hand menu in the Azure portal, click Resource groups and then click the name of the resource you created.

- On your resource group page, click Delete, type the name of the resource to delete in the text box, and then click Delete.

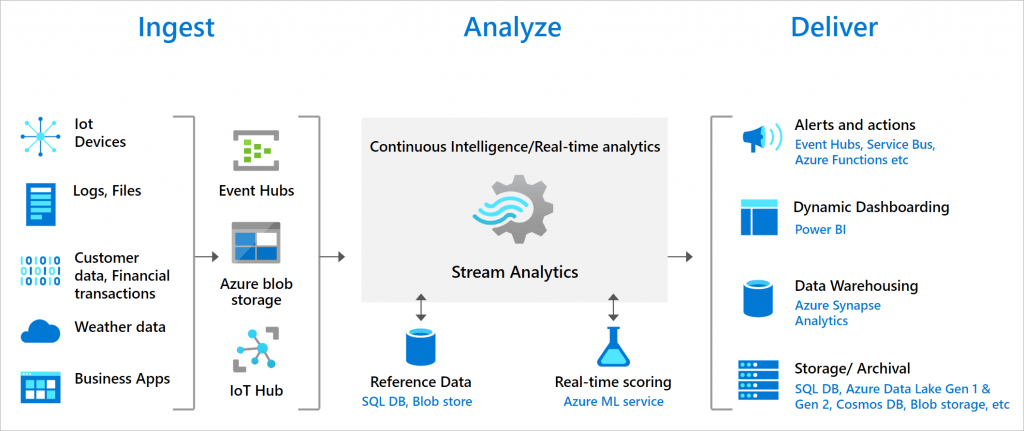

- Traditionally, analytics solutions have been based on capabilities such as ETL (extract, transform, load) and data warehousing, where data is stored before analysis. Changing requirements, including more rapidly arriving data, are pushing this existing model to its limit. The ability to analyze data within moving streams before storage is one solution, and while it is not a new capability, the approach has not been widely adopted across all industry verticals.

- Microsoft Azure provides an extensive catalog of analytics technologies that can support an array of different solution scenarios and requirements. Selecting which Azure services to deploy for an end-to-end solution can be a challenge given the breadth of offerings. This paper is designed to describe the capabilities and interoperation of the various Azure services that support an event-streaming solution. It also explains some of the scenarios in which customers can benefit from this type of approach.

- Automatically sample incoming data from input. Azure Stream Analytics automatically fetches events from your streaming inputs. You can run queries on the default sample or set a specific time frame for the sample.

- a. The serialization type for your data is automatically detected if it is JSON or CSV. You can also manually change it to JSON, CSV, or AVRO by changing the option in the dropdown menu.

- b. Use the selector to view your data in Table or Raw format.

- c. If the data shown isn't current, select Refresh to see the latest events.

- Find your existing Stream Analytics job and select it.

- On the Stream Analytics job page, under the Job Topology heading, select Query to open the Query editor window.

- To test your query with a local file, select Upload sample input on the Input preview tab.

- All testing is run with a job that has one Streaming Unit.

- The timeout size is one minute, so any query with a window size greater than one minute cannot get any data.

- Transform and analyze data in real time.

- Real-time dashboarding with Power BI (for monitoring purposes).

- Send alerts.

- Make decisions in real time.

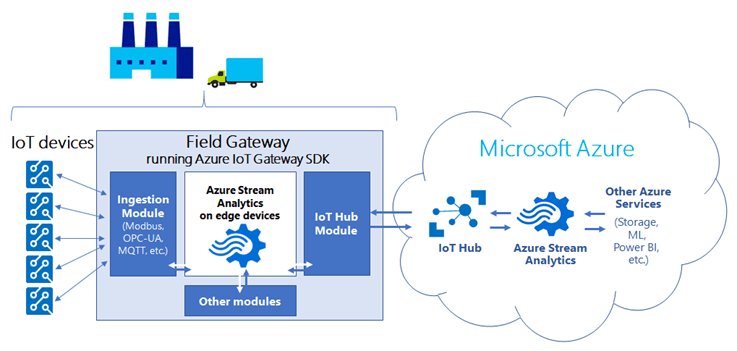

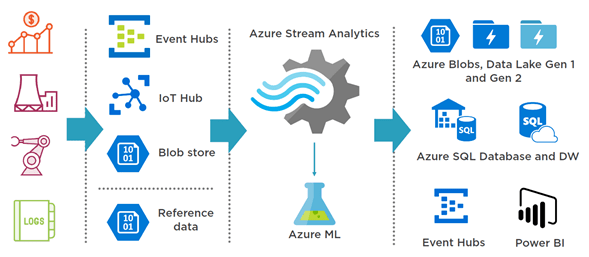

- Stream Analytics ingests data from Azure Event Hubs (including Azure Event Hubs from Apache Kafka), Azure IoT Hub, or Azure Blob Storage. The query, which is based on SQL query language, can be used to easily filter, sort, aggregate, and join streaming data over a period of time.

- Stock-trading analysis and alerts.

- Fraud detection, data, and identity protections.

- Embedded sensor and actuator analysis.

- Stream Analytics starts with a source of streaming data. The data can be ingested into Azure from a device using an Azure event hub or IoT hub. The data can also be pulled from a data store such as Azure Blob Storage.

- To examine the stream, you create a Stream Analytics job that specifies where the data comes from. The job also specifies a transformation: how to look for data, patterns, or relationships. For this task, Stream Analytics supports a SQL-like query language to filter, sort, aggregate, and join streaming data over a time period.

- Send a command to change device settings.

- Send data to a monitored queue for further action based on findings.

- Send data to a Power BI dashboard.

- Send data to storage such as Data Lake Store, Azure SQL Database, or Azure Blob storage.

- You can adjust the number of events processed per second while the job is running. You can also produce diagnostic logs for troubleshooting.

- Get started by experimenting with inputs and queries from IoT devices.

- Build an end-to-end Stream Analytics solution that examines telephone metadata to look for fraudulent calls.

- Find answers to your Stream Analytics questions in the Azure Stream Analytics forum.

Introduction to Azure Stream Analytics :-

Azure Stream Analytics does not keep a record of all function invocations and returned results. To guarantee repeatability (for example, re-running your job from an older timestamp produces the same results again), do not use functions such as Date.GetData() or Math.random(), because these functions do not return the same result for each invocation.
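
The repeatability point can be illustrated with a minimal sketch. The function names and the ISO timestamp arguments below are hypothetical; the idea is only that a function which takes its time values as arguments, rather than reading the clock, returns the same result when the same event is replayed.

```javascript
// Hypothetical function bodies, written as plain JavaScript so they run locally.

// Non-repeatable: reads the wall clock, so replaying the same event
// at a later time yields a different result.
function ageInSecondsNonRepeatable(eventTimeIso) {
  return (Date.now() - Date.parse(eventTimeIso)) / 1000;
}

// Repeatable: both timestamps are passed in, so the same inputs
// always produce the same output.
function ageInSeconds(eventTimeIso, referenceTimeIso) {
  return (Date.parse(referenceTimeIso) - Date.parse(eventTimeIso)) / 1000;
}
```

In a job, the reference time would typically be a value carried by the event or supplied by the query, which is an assumption of this sketch rather than something the text above prescribes.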

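Azure Stream Analytics supports four function types: JavaScript user-defined functions, JavaScript user-defined aggregates, C# user-defined functions (using Visual Studio), and Azure Machine Learning.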
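
To make the aggregate type more concrete, here is a minimal sketch of a JavaScript user-defined aggregate that averages numeric values. It assumes the `init` / `accumulate` / `computeResult` shape of an accumulate-only aggregate; the constructor name `AverageAggregate` and the local driver at the bottom are hypothetical and exist only so the snippet runs outside the service.

```javascript
// Sketch of an accumulate-only aggregate that averages numeric values.
function AverageAggregate() {
  // Called once per window to reset the running state.
  this.init = function () {
    this.state = { sum: 0, count: 0 };
  };

  // Called once per event that falls into the window.
  this.accumulate = function (value, timestamp) {
    this.state.sum += value;
    this.state.count += 1;
  };

  // Called when the window closes; must return a scalar.
  this.computeResult = function () {
    return this.state.count === 0 ? null : this.state.sum / this.state.count;
  };
}

// Local driver (not part of the aggregate): feed three values through it.
const aggregate = new AverageAggregate();
aggregate.init();
[10, 20, 60].forEach((value) => aggregate.accumulate(value, null));
const average = aggregate.computeResult();
```

Returning `null` for an empty window is a design choice of this sketch; it keeps the result a valid scalar instead of `NaN`.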

Limitations of Azure Stream Analytics :-

User-defined functions are stateless, and the return value can only be a scalar value. You cannot call out to external REST endpoints from these user-defined functions, because doing so would likely affect the performance of your job.

Resource logs :-

Any runtime errors are considered fatal and are surfaced through activity and resource logs. It is recommended that your function handle all exceptions and errors and return a valid result to your query. This prevents your job from going to a Failed state.

Exception handling:

Any exception during data processing is considered a catastrophic failure when consuming data in Azure Stream Analytics. User-defined functions have a higher potential to throw exceptions and cause the processing to stop. To avoid this issue, use a try-catch block in JavaScript or C# to catch exceptions during code execution. Exceptions that are caught can be logged and handled without causing a system failure. You are encouraged to always wrap your custom code in a try-catch block to avoid throwing unexpected exceptions to the processing engine.
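
The try-catch advice can be sketched as follows. The function name `safeTemperature` and the `temperature` field are hypothetical; the point is that malformed input yields a valid scalar fallback instead of an exception reaching the processing engine.

```javascript
// Hypothetical function: parse a JSON payload string and return one numeric field.
function safeTemperature(payload) {
  try {
    const parsed = JSON.parse(payload);
    const value = Number(parsed.temperature);
    // Return a scalar fallback rather than NaN for missing or non-numeric values.
    return Number.isFinite(value) ? value : null;
  } catch (err) {
    // Malformed or unexpected input: return a valid fallback instead of throwing.
    return null;
  }
}
```

For example, `safeTemperature('{"temperature": 21.5}')` returns `21.5`, while `safeTemperature('not json')` returns `null`.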

Next steps :-

In this tutorial, you learn how to:

Create an Azure Cache for Redis instance :-

See the Create a function app section of the Functions documentation. This sample was built on:

Error handling and retries :-
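
Because a retried batch can be delivered more than once, one way a function could guard against duplicates is to write each event under a key derived from the event itself, so a repeat overwrites an entry rather than adding one. The sketch below is a local illustration under stated assumptions: a `Map` stands in for Azure Cache for Redis, and the `deviceId` and `time` fields are hypothetical.

```javascript
// A Map stands in for Azure Cache for Redis in this local sketch.
const cache = new Map();

// Write every event in a batch under a deterministic key.
// Replaying the same batch overwrites the same keys instead of adding entries.
function writeBatch(events) {
  for (const event of events) {
    const key = `${event.deviceId}:${event.time}`;
    cache.set(key, JSON.stringify(event));
  }
  return events.length;
}

const batch = [
  { deviceId: "a", time: "2024-01-01T00:00:00Z", value: 1 },
  { deviceId: "b", time: "2024-01-01T00:00:00Z", value: 2 },
];
writeBatch(batch);
writeBatch(batch); // simulated retry of the same batch
```

After both calls the cache still holds two entries, one per distinct event.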

Known issues :-

Clean up resources :-

When no longer needed, delete the resource group, the streaming job, and all related resources. Deleting the job avoids billing for the streaming units consumed by the job. If you plan to use the job in the future, you can stop it and restart it later when you need it. If you are not going to continue to use this job, delete all resources created by this quickstart by using the following steps:

Sign in to the Azure portal :-

Locate and select your existing Stream Analytics job. On the Stream Analytics job page, under the Job Topology heading, select Query to open the Query editor window.

Upload sample data from a local file. Instead of using live data, you can use sample data from a local file to test your Azure Stream Analytics query.

Sign in to the Azure portal.

Limitations: Time policy is not supported in portal testing:

Out-of-order: all incoming events will be ordered.

Late arrival: there won't be late arrival events, since Stream Analytics can only use existing data for testing.

C# UDF is not supported.

Machine Learning is not supported.

The sample data API is throttled after five requests in a 15-minute window. After the end of the 15-minute window, you can make more sample data requests.

Troubleshooting

If you get the error "The request size is too big. Please reduce the input data size and try again", reduce the query size: to test a selection of the query, select a portion of the query and then click Test selected query.

Use cases of Azure Stream Analytics :-

Store streaming data and make it available to other cloud services for further analysis, reporting, and so on:

Azure Stream Analytics :-

Azure Stream Analytics is a managed event-processing engine that sets up real-time analytic computations on streaming data. The data can come from devices, sensors, websites, social media feeds, applications, infrastructure systems, and more.

Use Stream Analytics to examine high volumes of data streaming from devices or processes, extract information from that data stream, and identify patterns, trends, and relationships. Use those patterns to trigger other processes or actions, such as alerts, automation workflows, feeding information to a reporting tool, or storing it for later investigation.

Some examples:

Web clickstream analytics.

Finally, the job specifies an output for the transformed data. You control what to do in response to the information you've analyzed. For example, in response to analysis, you might:

Key capabilities and benefits :-

Stream Analytics is designed to be easy to use, flexible, and scalable to any job size.

Connect inputs and outputs

Stream Analytics connects directly to Azure Event Hubs and Azure IoT Hub for stream ingestion, and to the Azure Blob storage service to ingest historical data. Combine data from event hubs with other data sources and processing engines. Job input can also include reference data (static or slow-changing data). You can join streaming data to this reference data to perform lookup operations the same way you would with database queries.
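
The lookup described above can be sketched in plain JavaScript. This is a local illustration of what a join against reference data computes, not the service's query syntax; the `deviceId`, `reading`, and `site` fields and the sample values are made up.

```javascript
// Reference data: a slow-changing lookup keyed by device id (values are made up).
const deviceInfo = new Map([
  ["d1", { site: "north" }],
  ["d2", { site: "south" }],
]);

// Enrich each streaming event with its reference row. Events with no
// matching row are dropped, which mirrors an inner join.
function joinWithReference(events, reference) {
  const joined = [];
  for (const event of events) {
    const ref = reference.get(event.deviceId);
    if (ref !== undefined) {
      joined.push({ ...event, site: ref.site });
    }
  }
  return joined;
}

const enriched = joinWithReference(
  [
    { deviceId: "d1", reading: 7 },
    { deviceId: "d9", reading: 3 }, // no reference row, so it is dropped
  ],
  deviceInfo
);
```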

Route Stream Analytics job output in many directions. Write to storage such as Azure Blob, Azure SQL Database, Azure Data Lake Stores, or Azure Cosmos DB. From there, you could run batch analytics with Azure HDInsight. Or send the output to another service for consumption by another process, such as event hubs, Azure Service Bus, queues, or Power BI for visualization.

Easy to use

To define transformations, you use a simple, declarative Stream Analytics query language that lets you create sophisticated analyses with no programming. The query language takes streaming data as its input. You can then filter and sort the data, aggregate values, perform calculations, join data (within a stream or to reference data), and use geospatial functions. You can edit queries in the portal, using IntelliSense and syntax checking, and you can test queries using sample data that you can extract from the live stream.
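
To illustrate what aggregating values over time means, the sketch below counts events in fixed, non-overlapping windows. It is plain JavaScript for local experimentation rather than the Stream Analytics query language, the `time` field is an assumption, and the window boundaries are start-inclusive as a simplification.

```javascript
// Group events into fixed, non-overlapping windows and count events per window.
// Keys of the returned Map are window start times in epoch seconds.
function tumblingWindowCounts(events, windowSeconds) {
  const counts = new Map();
  for (const event of events) {
    const seconds = Math.floor(Date.parse(event.time) / 1000);
    const windowStart = seconds - (seconds % windowSeconds);
    counts.set(windowStart, (counts.get(windowStart) || 0) + 1);
  }
  return counts;
}

const windowCounts = tumblingWindowCounts(
  [
    { time: "2024-01-01T00:00:05Z" },
    { time: "2024-01-01T00:00:09Z" },
    { time: "2024-01-01T00:00:12Z" },
  ],
  10
);
```

With a 10-second window, the first two events share a window and the third falls into the next one.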

Extensible query language

You can extend the capabilities of the query language by defining and invoking additional functions. You can define function calls in the Azure Machine Learning service to take advantage of Azure Machine Learning solutions. You can also integrate JavaScript user-defined functions (UDFs) to perform complex calculations as part of a Stream Analytics query.

Scalable

Stream Analytics can handle up to 1 GB of incoming data per second. Integration with Azure Event Hubs and Azure IoT Hub allows jobs to ingest a very large number of events per second coming from connected devices, clickstreams, and log files, to name a few. Using the partition feature of event hubs, you can partition computations into logical steps, each with the ability to be further partitioned to increase scalability.

Low cost

As a cloud service, Stream Analytics is optimized for cost. You pay based on streaming-unit usage and the amount of data processed. Usage is derived from the volume of events processed and the amount of computing power provisioned within the job cluster.

Reliable

As a managed service, Stream Analytics prevents data loss and provides business continuity. If failures occur, the service provides built-in recovery capabilities. With the ability to internally maintain state, the service provides repeatable results, ensuring it is possible to archive events and reapply processing in the future, always getting the same results. This enables you to go back in time and investigate computations when doing root-cause analysis, what-if analysis, and so on.

Next steps

Conclusion :-

Azure Stream Analytics is designed to support flexible workflows and to scale with the volume of data. It is also economical to use. With Azure Stream Analytics, you can connect to many inputs and outputs, including a direct connection to Event Hubs for stream ingestion. It is easy to use: the service gives you a sophisticated query language with which you can filter data, use its versatile functions, and identify patterns in data. Because it is low in cost, reliable, and quick to recover from failures, it is used by a large number of professionals.