- About Data Warehousing

- Kinds of Data warehousing

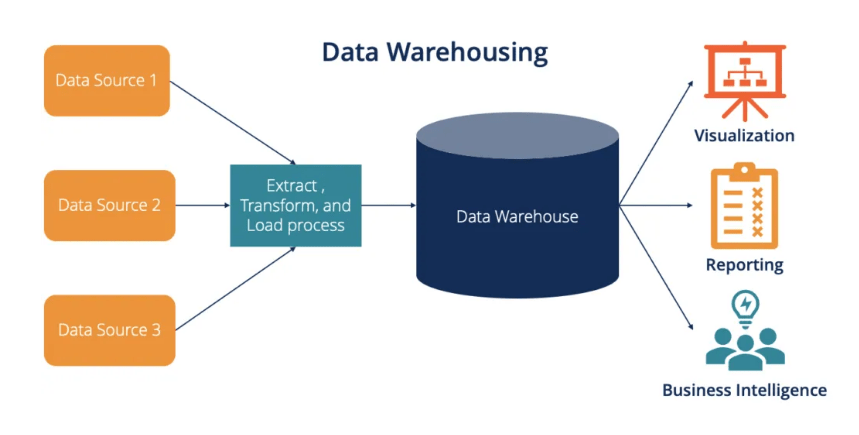

- Process of data warehouse

- Key Elements of a Data Warehouse

- Data Warehouse Architecture

- The ETL Process

- Benefits of Using a Data Warehouse

- Best Practices to Implement Data Warehouse

- Data Warehouse Challenges

- Conclusion

About Data Warehousing

Organizations that need to solve problems using vast amounts of data require a robust framework for storing and analyzing that data; this is the role of the data warehouse. A data warehouse is a centralized repository built for analytical queries and reporting. It differs from an operational database, which is optimized for transaction processing: a data warehouse prioritizes query efficiency and the integration of data from multiple sources. This tutorial covers the key concepts of data warehousing, its architecture, the ETL process, and the benefits and best practices of implementation. Tools such as Commvault can additionally support data management and protection within a data warehousing environment.


Kinds of Data warehousing

- Enterprise Data Warehouse (EDW): An Enterprise Data Warehouse is a central repository that combines data from several sources within the organization. It supports organization-wide decision-making by providing a consolidated view of all the organization’s data.

- Operational Data Store (ODS): An Operational Data Store is designed for real-time operational data. It acts as a middle layer between the transactional systems and the data warehouse, allowing fast access to current data for reporting.

- Data Mart: A Data Mart is a subset of a data warehouse restricted to a specific business line or department. It gives specific users access to the data relevant to their needs.

- Cloud Data Warehouse: A Cloud Data Warehouse uses cloud resources for storing and managing data. It is typically more scalable and adaptable, and often more economical, than traditional on-premises data warehouses.

- Hybrid Data Warehouse: A Hybrid Data Warehouse combines on-premises and cloud data storage. With this approach, an organization can keep its sensitive data on-site while using the cloud for its less sensitive data.

- Real-time Data Warehouse: A Real-time Data Warehouse is designed to support real-time processing and analytics. It enables organizations to analyze data as it is generated, giving immediate insight for decisions.

- Federated Data Warehouse: A Federated Data Warehouse provides a unified view of information from various heterogeneous sources without moving it into a central repository. It allows users to query information across different systems as if it were in a single data warehouse.

- NoSQL Data Warehouse: A NoSQL Data Warehouse manages unstructured or semi-structured data. Its flexible schema accommodates diverse data formats, which makes it suitable for big data applications.
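As a small illustration of the Data Mart idea above, the sketch below exposes a department-specific subset of a warehouse table as a SQL view. It uses Python's built-in `sqlite3` module as a stand-in for a real warehouse; the table `warehouse_sales`, the view `marketing_mart`, and the sample rows are hypothetical names and data invented for this example. In practice a data mart is often a separate physical store rather than a view.

```python
import sqlite3

# In-memory SQLite database standing in for the central warehouse (assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE warehouse_sales (
    sale_id    INTEGER PRIMARY KEY,
    department TEXT,
    region     TEXT,
    amount     REAL
);
INSERT INTO warehouse_sales (department, region, amount) VALUES
    ('Marketing', 'North', 500.0),
    ('Finance',   'North', 1200.0),
    ('Marketing', 'South', 250.0);

-- Hypothetical data mart: only the rows relevant to the Marketing department.
CREATE VIEW marketing_mart AS
    SELECT sale_id, region, amount
    FROM warehouse_sales
    WHERE department = 'Marketing';
""")

# Marketing users query the mart without seeing other departments' rows.
marketing_rows = conn.execute(
    "SELECT region, amount FROM marketing_mart ORDER BY sale_id"
).fetchall()
print(marketing_rows)
```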

Process of data warehouse

- Data Extraction: Data is extracted from source systems such as databases, transactional systems, and flat files, as well as from external sources such as APIs or social media. Full extraction pulls all the data from the source. Incremental extraction pulls only the data that is new or has changed since the last extraction.

- Data Transformation: Once data is extracted, transformation ensures consistency, quality, and usability. This stage involves cleaning steps such as removing duplicates, correcting errors, and applying normalization standards. Data aggregation summarizes information to aid analysis, for example by calculating totals or averages. For more on data management in cloud data warehousing, see a Snowflake tutorial.

- Loading Data: Transformed data is loaded into the data warehouse, where it is stored in a structured, organized form that is ready for quick querying and analysis. There are two loading methods. Full load: all data is loaded into the warehouse. Incremental load: only data changed or added since the last load is loaded.

- Storage of Data: Historical data is stored in the data warehouse, usually in a schema of fact and dimension tables. Two schemas are common. Star schema: a central fact table connected to dimension tables, which optimizes query performance. Snowflake schema: a more normalized version of the star schema, in which dimension tables are further divided into related tables.

- Data Access and Analysis: Users access the data warehouse through business intelligence (BI) tools for analysis and reporting. Tools and techniques include reporting tools such as Tableau or Power BI for standard report generation, and OLAP, which lets users view data along multiple dimensions and from various perspectives.

- Data Maintenance: Maintenance processes keep the data warehouse fresh, relevant, and accurate by monitoring data quality and performance. Activities include regular ETL updates, which ensure that the latest data is available in the warehouse, and data quality management, the continuous monitoring and improvement of data quality.

- User Insights: The data warehousing process aims to create insights that drive business decisions. Typical results are the identification of trends over time and performance measurement, that is, closely tracking the right KPIs to guide strategy and tactics.
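The star schema described in the storage step can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration, not a production design: the table names (`fact_sales`, `dim_product`, `dim_date`), their columns, and the sample rows are all assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# One central fact table referencing two dimension tables (a star schema).
conn.executescript("""
CREATE TABLE dim_product (
    product_id INTEGER PRIMARY KEY,
    name       TEXT,
    category   TEXT
);
CREATE TABLE dim_date (
    date_id  INTEGER PRIMARY KEY,
    iso_date TEXT,
    year     INTEGER,
    month    INTEGER
);
CREATE TABLE fact_sales (
    sale_id    INTEGER PRIMARY KEY,
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id    INTEGER REFERENCES dim_date(date_id),
    quantity   INTEGER,
    amount     REAL
);
""")

conn.executemany(
    "INSERT INTO dim_product VALUES (?, ?, ?)",
    [(1, "Laptop", "Electronics"), (2, "Desk", "Furniture")],
)
conn.executemany(
    "INSERT INTO dim_date VALUES (?, ?, ?, ?)",
    [(20240115, "2024-01-15", 2024, 1), (20240220, "2024-02-20", 2024, 2)],
)
conn.executemany(
    "INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?)",
    [
        (1, 1, 20240115, 2, 2000.0),
        (2, 2, 20240115, 1, 300.0),
        (3, 1, 20240220, 1, 1000.0),
    ],
)


def sales_by_category_and_month(connection):
    """Join the fact table to its dimensions and aggregate the amounts."""
    query = """
        SELECT p.category, d.year, d.month, SUM(f.amount) AS total
        FROM fact_sales AS f
        JOIN dim_product AS p ON p.product_id = f.product_id
        JOIN dim_date AS d ON d.date_id = f.date_id
        GROUP BY p.category, d.year, d.month
        ORDER BY p.category, d.year, d.month
    """
    return connection.execute(query).fetchall()


report = sales_by_category_and_month(conn)
for row in report:
    print(row)
```

A snowflake schema would go one step further, for example by moving `category` out of `dim_product` into its own table that `dim_product` references.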

In this tutorial, you’ll also explore various data warehousing models, such as star and snowflake schemas, and learn how to design a robust data warehouse that meets your organization’s needs. Additionally, the data warehouse tutorial will provide insights into modern tools and technologies that facilitate data warehousing, including cloud-based solutions, and highlight real-world use cases to demonstrate how effective data warehousing can drive business intelligence and decision-making. By the end of this tutorial, you’ll be equipped with practical strategies and best practices to implement and optimize your data warehouse for maximum impact.

Key Elements of a Data Warehouse

- Data Sources include relational databases like Oracle and MySQL, flat files such as CSV and Excel, CRM systems like Salesforce, ERP systems like SAP, web services, and APIs.

- A staging area is a temporary data storage location where input data is processed before it is put into the data warehouse. Thus, this stage allows for cleansing, transforming, and validating data.

- Data pipeline management requires the use of ETL tools. These tools help extract data from various sources, transform it into an appropriate format, and load it into the data warehouse. Effective ETL processes are essential for successful Master Data Management, ensuring that data is accurate, consistent, and ready for analysis.

- The data warehouse storage layer is the hub where the processed data resides. It is designed for fast querying and analysis, often using a star or snowflake schema.

- BI tools connect to the data warehouse and provide reporting, visualization, and analytical functionality. They include Tableau, Power BI, Looker, and QlikView, among others.


Data Warehouse Architecture

- Bottom Tier: Data Warehouse: The bottom tier is the data warehouse itself. It is usually built on top of a relational database management system (RDBMS) and handles data storage and retrieval.

- Middle Tier: OLAP Servers: The middle tier consists of OLAP servers, which perform complex queries and multidimensional analysis. OLAP servers allow users to view data from different perspectives, thus enhancing analytic capabilities.

- Top Tier: Clients or Applications: The top tier contains client applications, such as BI tools and dashboards, through which users access, view, and analyze the data in the warehouse.

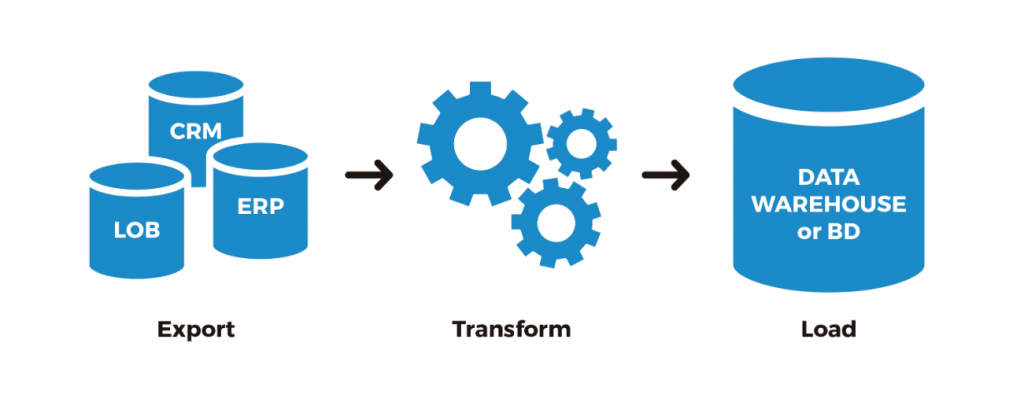
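The multidimensional analysis performed in the middle tier can be illustrated with a small pure-Python sketch. It is not how an OLAP server is implemented; it only shows the idea of rolling a measure up along chosen dimensions and slicing on one dimension value. The fact rows and the helper names `roll_up` and `slice_facts` are hypothetical.

```python
from collections import defaultdict

# Hypothetical fact rows: three dimensions (region, product, quarter), one measure.
FACTS = [
    {"region": "North", "product": "Laptop", "quarter": "Q1", "revenue": 1000},
    {"region": "North", "product": "Desk", "quarter": "Q1", "revenue": 300},
    {"region": "South", "product": "Laptop", "quarter": "Q1", "revenue": 700},
    {"region": "South", "product": "Laptop", "quarter": "Q2", "revenue": 900},
]


def roll_up(facts, dimensions, measure="revenue"):
    """Sum the measure for every combination of the given dimensions."""
    totals = defaultdict(int)
    for row in facts:
        key = tuple(row[dim] for dim in dimensions)
        totals[key] += row[measure]
    return dict(totals)


def slice_facts(facts, dimension, value):
    """Keep only the rows where one dimension has a fixed value."""
    return [row for row in facts if row[dimension] == value]


# The same facts viewed from three different perspectives.
by_region = roll_up(FACTS, ["region"])
by_product_quarter = roll_up(FACTS, ["product", "quarter"])
q1_by_region = roll_up(slice_facts(FACTS, "quarter", "Q1"), ["region"])

print(by_region)
print(by_product_quarter)
print(q1_by_region)
```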

The ETL Process

The ETL process is the method used to populate the data warehouse. It consists of three steps: Extract, Transform, and Load. Data can be extracted from databases, flat files, APIs, or other sources. The goal of extraction is to make all relevant information available for analysis, whatever its original format.

Once data is extracted, it is transformed. Transformation includes cleaning the data (removing duplicates and correcting errors), aggregating it to summarize information such as totals or averages, and formatting it so that data from all sources is consistent. This step is essential for maintaining data quality. Finally, the transformed data is loaded into the data warehouse using either a full load, which loads all data in one go, or an incremental load, which loads only data that is new or has changed since the last load. The loading frequency can vary from real-time updates to scheduled batch processes, as the business requires.
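The three steps, including the difference between a full and an incremental load, can be sketched in plain Python. This is a minimal illustration under stated assumptions: the source rows, the field names, and the use of an `updated_at` timestamp as the "last load" watermark are invented for the example, and a dictionary stands in for the warehouse table.

```python
from datetime import datetime

# Hypothetical source rows; note the duplicate and the inconsistent formatting.
SOURCE_ROWS = [
    {"id": 1, "customer": " alice ", "amount": "120.50", "updated_at": "2024-03-01T10:00:00"},
    {"id": 2, "customer": "BOB", "amount": "80", "updated_at": "2024-03-01T11:00:00"},
    {"id": 2, "customer": "BOB", "amount": "80", "updated_at": "2024-03-01T11:00:00"},
    {"id": 3, "customer": "carol", "amount": "45.25", "updated_at": "2024-03-02T09:30:00"},
]


def extract(rows, since=None):
    """Full extraction when `since` is None, otherwise only rows changed after it."""
    if since is None:
        return list(rows)
    return [r for r in rows if datetime.fromisoformat(r["updated_at"]) > since]


def transform(rows):
    """Remove duplicates by id and bring every field into a consistent format."""
    cleaned = {}
    for r in rows:
        cleaned[r["id"]] = {  # a later row with the same id replaces the earlier one
            "id": r["id"],
            "customer": r["customer"].strip().title(),
            "amount": float(r["amount"]),
            "updated_at": datetime.fromisoformat(r["updated_at"]),
        }
    return list(cleaned.values())


def load(warehouse, rows):
    """Insert or update rows by id; return the newest timestamp that was loaded."""
    for r in rows:
        warehouse[r["id"]] = r
    return max((r["updated_at"] for r in rows), default=None)


# Full load: everything in the source goes through the pipeline.
warehouse = {}
watermark = load(warehouse, transform(extract(SOURCE_ROWS)))

# A source row changes later; the incremental load picks up only that change.
SOURCE_ROWS.append(
    {"id": 1, "customer": "alice", "amount": "150.00", "updated_at": "2024-03-03T08:00:00"}
)
delta = extract(SOURCE_ROWS, since=watermark)
newest = load(warehouse, transform(delta))
if newest is not None:
    watermark = newest

print(len(delta), warehouse[1]["amount"], watermark.isoformat())
```

Tracking a watermark is only one way to detect changes; sources without a reliable modification timestamp need another mechanism, such as change data capture or row hashing.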


Benefits of Using a Data Warehouse

- Improved Decision Making: The data warehouse centralizes data from multiple sources, giving organizations an integrated view of their operations. This integrated view supports more informed decisions.

- Improved Data Quality: The ETL process helps ensure that the data flowing into the data warehouse is consistent and of good quality. By investing in data quality, organizations can have confidence in the insights gathered from their data.

- Historical Intelligence: Data warehouses hold historical data, allowing the analysis of trends over time. This makes them fundamental tools for forecasting and strategy planning.

- Performance: The data warehouse is optimized for read-intensive operations, which makes analytical queries faster and more efficient than on transactional databases. This matters for analytical workloads.

- Scalability: As organizations grow, so do their data requirements. Data warehouses can be designed to scale to larger datasets and more complex queries.

Best Practices to Implement Data Warehouse

- Clearly Define Goals: Always define your data warehousing goals before starting the process. Know what you want to gain out of the data and who will use it. A clear vision sets a guide for the design and development process.

- Invest in Data Quality: Start with quality data. Establish good practices for data cleaning and validation during ETL so that the information stored in the warehouse can be trusted and relied upon.

- Appropriate Technology Selection: An appropriate solution must be selected based on an organization’s requirements, including properties such as scalability, user-friendliness, and integration with existing systems.

- Optimize Performance: Regularly analyze and optimize query performance, including indexing, partitioning data, and archiving older data, to speed up analytics.

- Ensure Security and Compliance: Implement strong security measures to protect sensitive data, and comply with the regulations that safeguard client information in order to maintain trust.

- Train Users: Invest in training so that users can use the data warehouse effectively. Ongoing support and resources increase user adoption and maximize the return on the investment.
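The indexing advice under "Optimize Performance" can be checked with a quick experiment. The sketch below uses Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN` statement to compare how the same query is executed before and after an index is created. The table `fact_sales`, the index name `idx_sales_date`, and the sample data are assumptions for illustration; other database engines expose query plans through their own commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE fact_sales (sale_id INTEGER PRIMARY KEY, sale_date TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO fact_sales (sale_date, amount) VALUES (?, ?)",
    [(f"2024-01-{day:02d}", day * 10.0) for day in range(1, 29)],
)


def query_plan(connection, sql, params=()):
    """Return SQLite's description of how it would execute the query."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The human-readable detail is the last column of each plan row.
    return " | ".join(row[-1] for row in rows)


QUERY = "SELECT SUM(amount) FROM fact_sales WHERE sale_date = ?"

# Without an index, SQLite has to scan the whole table.
plan_before = query_plan(conn, QUERY, ("2024-01-15",))

# With an index on the filter column, it can search the index instead.
conn.execute("CREATE INDEX idx_sales_date ON fact_sales (sale_date)")
plan_after = query_plan(conn, QUERY, ("2024-01-15",))

print("before:", plan_before)
print("after: ", plan_after)
```

The exact wording of the plan text differs between SQLite versions, so it is best read by eye rather than parsed.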


Data Warehouse Challenges

- Data Quality: Data quality is probably the biggest challenge in implementing a data warehouse. Poor or incomplete data can lead to bad decisions or wrong conclusions. Common causes include human error during input, outdated records that no longer reflect current conditions, and inconsistencies between sources that use different definitions for similar information.

- Scalability: Scalability is vital in data warehousing, especially as organizations grow and their data volumes increase. Businesses accumulate vast amounts of data from various sources, including transactional systems, customer interactions, social media, and IoT devices. This growing influx of data can strain existing data warehouse architectures if they are not designed to scale effectively. Solutions like Teradata are designed to manage this growth.

- Real-time Processing of Data: Real-time processing has become increasingly important as organizations need to make timely decisions based on the latest available information. Processing data as soon as it is produced, often termed real-time or near-real-time analytics, lets companies respond quickly to changes in the market, shifts in customer behaviour, and operational challenges.
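Some of the data quality problems described above, such as incomplete and duplicated records, can be detected automatically before data reaches the warehouse. The following is a minimal sketch, assuming records arrive as dictionaries; the function name `find_quality_issues`, the field names, and the sample records are hypothetical. Inconsistent definitions between sources usually need business rules that a generic check like this cannot supply.

```python
def find_quality_issues(records, required_fields, key_field):
    """Report missing required values and repeated keys, by record position."""
    issues = {"missing": [], "duplicates": []}
    seen_keys = set()
    for index, record in enumerate(records):
        for field in required_fields:
            # Treat both absent values and empty strings as missing.
            if record.get(field) in (None, ""):
                issues["missing"].append((index, field))
        key = record.get(key_field)
        if key in seen_keys:
            issues["duplicates"].append((index, key))
        seen_keys.add(key)
    return issues


# Hypothetical customer records with one empty email, one duplicate, one missing country.
RECORDS = [
    {"customer_id": 1, "email": "a@example.com", "country": "US"},
    {"customer_id": 2, "email": "", "country": "DE"},
    {"customer_id": 1, "email": "a@example.com", "country": "US"},
    {"customer_id": 3, "email": "c@example.com", "country": None},
]

issues = find_quality_issues(RECORDS, ["email", "country"], "customer_id")
print(issues)
```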

Conclusion

A data warehouse is a vital component of modern business intelligence strategies. By centralizing data and providing tools for analysis, it helps organizations gain valuable insights, improve operational efficiency, and make informed decisions. By following best practices in implementation and management, you can unlock the full potential of your data warehouse and drive business success.

This data warehouse tutorial will delve into various data warehousing models, such as star and snowflake schemas, and explore how to choose the right model for your organization’s needs. We will also highlight the latest trends and technologies in data warehousing, including cloud solutions, data virtualization, and SQL Server Integration Services, to help you stay ahead in a rapidly evolving landscape. Whether you’re a beginner or looking to enhance your existing knowledge, this guide aims to equip you with the skills necessary to effectively manage and leverage your data warehouse for strategic advantage.