What is Apache Cassandra?

Apache Cassandra is a distributed open source database that can be referred to as a “NoSQL database” or a “wide column store.” Cassandra was originally developed at Facebook to power its “Inbox” feature and was released as an open source project in 2008. Cassandra is designed to handle “big data” workloads by distributing data, reads and writes (eventually) across multiple nodes with no single point of failure.

How does Cassandra work?

Cassandra is a peer-to-peer distributed system made up of a cluster of nodes in which any node can accept a read or write request. Similar to Amazon’s Dynamo, every node in the cluster communicates state information about itself and other nodes using the peer-to-peer gossip communication protocol.

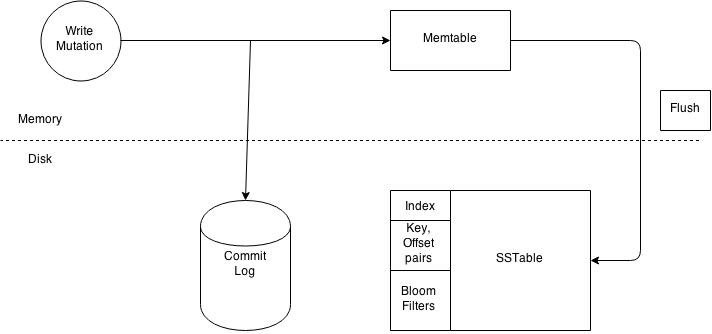

At its core, Cassandra uses a Log Structured Merge (LSM) storage engine. The following are the key elements of the Cassandra storage engine…

Commit log

Each node in a Cassandra cluster also maintains a sequential commit log of write activity on disk to ensure data integrity. These writes are indexed and written to an in-memory structure called a memtable.

Memtable

A memtable can be thought of as a write-back cache, where write I/O is directed to the cache and its completion is immediately confirmed by the host. This has the advantage of low latency and high throughput. The memtable structure is kept in Java heap memory by default. However, as of Cassandra 2.1, there is the option to store memtables outside the Java heap to alleviate garbage collection (GC) pressure.

SSTables

When the commit log gets full, a flush is triggered and the contents of the memtable are written to disk into an SSTable data file. At the completion of this process the memtable is cleared and the commit log is recycled. Cassandra automatically partitions these writes and replicates them throughout the cluster.
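The interplay of commit log, memtable and SSTables described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Cassandra's implementation: the class `ToyNode`, its `flush_threshold` parameter and the in-memory lists standing in for on-disk files are all hypothetical.

```python
class ToyNode:
    """Toy single-node storage engine: commit log + memtable + SSTables."""

    def __init__(self, flush_threshold=3):
        self.commit_log = []   # stands in for the sequential log on disk
        self.memtable = {}     # in-memory writes that are not flushed yet
        self.sstables = []     # immutable, sorted tuples of (key, value)
        self.flush_threshold = flush_threshold  # hypothetical "log is full" limit

    def write(self, key, value):
        self.commit_log.append((key, value))  # 1. append to the commit log
        self.memtable[key] = value            # 2. update the memtable
        if len(self.commit_log) >= self.flush_threshold:
            self.flush()

    def flush(self):
        # Write the memtable out as one sorted, immutable SSTable.
        self.sstables.append(tuple(sorted(self.memtable.items())))
        self.memtable.clear()    # the memtable is cleared
        self.commit_log.clear()  # and the commit log is recycled

    def read(self, key):
        if key in self.memtable:
            return self.memtable[key]
        for table in reversed(self.sstables):  # newest SSTable first
            for k, v in table:
                if k == key:
                    return v
        return None
```

Writes never touch an existing SSTable; they only append to the log and update memory, which is what keeps the write path cheap.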

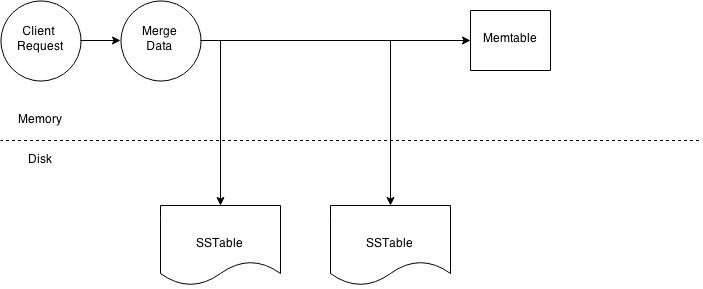

Compaction

Cassandra periodically consolidates SSTables using a process called “compaction.” The frequency of these “compactions” is dictated by several parameters set in Cassandra’s yaml configuration file or through commands using the Cassandra Query Language (CQL). In a compaction operation, Cassandra merges keys, combines columns, evicts tombstones (data that has been marked as obsolete), consolidates SSTables and creates new indexes.
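As a rough illustration of the merge step, the sketch below combines several SSTables into one and evicts tombstones. It is deliberately simplified: the `TOMBSTONE` marker and the `compact` function are hypothetical names, SSTables are modelled as lists of `(key, value)` pairs ordered oldest to newest, and real compaction also takes write timestamps and configuration into account.

```python
TOMBSTONE = object()  # hypothetical marker for data flagged as obsolete


def compact(sstables):
    """Merge SSTables (oldest first) into one sorted table without tombstones."""
    merged = {}
    for table in sstables:          # newer tables overwrite older entries
        for key, value in table:
            merged[key] = value
    # Emit keys in sorted order and drop anything marked as deleted.
    return [(k, merged[k]) for k in sorted(merged) if merged[k] is not TOMBSTONE]


old = [("a", 1), ("b", 2)]
new = [("b", TOMBSTONE), ("c", 3)]
print(compact([old, new]))
```

In this example the key `b` disappears from the output because its newest entry is a tombstone.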

YugaByte DB has an LSM storage engine design similar to Cassandra’s, but with additional benefits to performance and durability:

Commit log

Cassandra uses majority writes to update the commit logs of the replicas. This results in dirty reads, deletes resurfacing and lower performing quorum reads. YugaByte DB uses the Raft protocol to update commit logs while maintaining strong consistency and avoiding these issues.

Memtable

Java is notorious for GC pauses when running on large memory machines. Cassandra’s off-heap storage is an attempt to alleviate the issue, but Java GC still needs to be tuned carefully in order to run Cassandra on large memory machines. YugaByte DB is written in C++ so it avoids Java’s GC problems altogether.

Compaction

YugaByte DB schedules multi-threaded compactions based on size thresholds, resulting in more predictable performance in terms of both the ingest rate and p99 read latencies.

Key Concepts, Data Structures and Algorithms

In order to understand Cassandra’s architecture it is important to understand some key concepts, data structures and algorithms frequently used by Cassandra.

- Data Partitioning – Apache Cassandra is a distributed database system using a shared nothing architecture. A single logical database is spread across a cluster of nodes, so data must be spread evenly amongst all participating nodes. At a 10,000 foot level, Cassandra stores data by dividing it evenly around its cluster of nodes. Each node is responsible for part of the data. The act of distributing data across nodes is referred to as data partitioning.

- Consistent Hashing – Two main problems crop up when trying to distribute data efficiently: one, determining the node on which a specific piece of data should reside; two, minimising data movement when adding or removing nodes. Consistent hashing enables us to achieve both goals. A consistent hashing algorithm maps Cassandra row keys to physical nodes. The range of values from a consistent hashing algorithm is a fixed circular space which can be visualised as a ring. Consistent hashing also minimises key movement when nodes join or leave the cluster: on average only k/n keys need to be remapped, where k is the number of keys and n is the number of slots (nodes). This is in stark contrast to most hashing algorithms, where a change in the number of slots results in the need to remap a large number of keys.

- Data Replication – Partitioning of data on a shared nothing system results in a single point of failure, i.e. if one of the nodes goes down, part of your data is unavailable. This limitation is overcome by creating copies of the data, known as replicas, thus avoiding a single point of failure. Storing copies of data on multiple nodes is referred to as replication. Replication of data ensures fault tolerance and reliability.

- Eventual Consistency – Since data is replicated across nodes we need to ensure that data is synchronized across replicas. This is referred to as data consistency. Eventual consistency is a consistency model used in distributed computing. It theoretically guarantees that, provided there are no new updates, all nodes/replicas will eventually return the last updated value. The Domain Name System (DNS) is a good example of an eventually consistent system.

- Tunable Consistency – Cassandra provides tunable consistency, i.e. users can choose the consistency level for each read and write operation. Eventual consistency often conjures up fear and doubt in the minds of application developers. The key thing to keep in mind is that reaching a consistent state usually takes very little time, often on the order of milliseconds.

- Consistency Level – Cassandra enables users to configure the number of replicas in a cluster that must acknowledge a read or write operation before considering the operation successful. The consistency level is a required parameter in any read and write operation and determines the exact number of nodes that must successfully complete the operation before considering the operation successful.

- Data Centre, Racks, Nodes – A Data Centre (DC) is a centralised place to house computer and networking systems to help meet an organisation’s information technology needs. A rack is a unit that contains multiple servers all stacked one on top of another. A rack enables data centres to conserve floor space and consolidates networked resources. A node is a single server in a rack. Why do we care? Often Cassandra is deployed in a DC environment and one must replicate data intelligently to ensure no single point of failure. Data must be replicated to servers in different racks to ensure continued availability in the case of rack failure. Cassandra can be easily configured to work in a multi DC environment to facilitate fail over and disaster recovery.

- Snitches and Replication Strategies – As mentioned above it is important to intelligently distribute data across DCs and racks. In Cassandra the distribution of data across nodes is configurable. Cassandra uses snitches and replication strategies to determine how data is replicated across DCs, racks and nodes. Snitches determine proximity of nodes within a ring. Replication strategies use proximity information provided by snitches to determine locality of a particular copy.

- Gossip Protocol – Cassandra uses a gossip protocol to discover node state for all nodes in a cluster. Nodes discover information about other nodes by exchanging state information about themselves and other nodes they know about. This is done with a maximum of 3 other nodes. Nodes do not exchange information with every other node in the cluster in order to reduce network load. They just exchange information with a few nodes and over a period of time state information about every node propagates throughout the cluster. The gossip protocol facilitates failure detection.

- Bloom Filters – A bloom filter is an extremely fast way to test whether an element is a member of a set. A bloom filter can tell if an item might exist in a set or definitely does not exist in the set. False positives are possible but false negatives are not. Bloom filters are a good way of avoiding expensive I/O operations.

- Merkle Tree – A Merkle tree is a hash tree which provides an efficient way to find differences in data blocks. Leaves contain hashes of individual data blocks and parent nodes contain hashes of their respective children. This enables an efficient way of finding differences between nodes.

- SSTable – A Sorted String Table (SSTable) is an ordered, immutable key value map. It is basically an efficient way of storing large sorted data segments in a file.

- Write Back Cache – A write back cache is where the write operation is only directed to the cache and completion is immediately confirmed. This is different from Write-through cache where the write operation is directed at the cache but is only confirmed once the data is written to both the cache and the underlying storage structure.

- Memtable – A memtable is a write back cache residing in memory which has not been flushed to disk yet.

- Cassandra Keyspace – A keyspace is similar to a schema in the RDBMS world. A keyspace is a container for all your application data. When defining a keyspace, you need to specify a replication strategy and a replication factor, i.e. the number of nodes that the data must be replicated to.

- Column Family – A column family is analogous to the concept of a table in an RDBMS. But that is where the similarity ends. Instead of thinking of a column family as an RDBMS table, think of a column family as a map of sorted maps. A row in the map provides access to a set of columns which is represented by a sorted map: `Map<RowKey, SortedMap<ColumnKey, ColumnValue>>`. Please note that in CQL (Cassandra Query Language) lingo a Column Family is referred to as a table.

- Row Key – A row key is also known as the partition key and has a number of columns associated with it i.e. a sorted map as shown above. The row key is responsible for determining data distribution across a cluster.
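To make the consistent hashing concept from the list above more concrete, here is a minimal sketch of a hash ring. It rests on several assumptions: MD5 is used only as a convenient stable hash, each node gets a single position on the ring, and the names `token`, `Ring` and `owner` are hypothetical. Cassandra's own partitioner and token assignment are not modelled.

```python
import bisect
import hashlib


def token(key: str) -> int:
    # Map any string to a position on the ring (MD5 chosen for illustration).
    return int(hashlib.md5(key.encode()).hexdigest(), 16)


class Ring:
    def __init__(self, nodes):
        # Each node sits at the ring position given by the hash of its name.
        self.tokens = sorted((token(n), n) for n in nodes)

    def owner(self, key: str) -> str:
        # Walk clockwise: the first node at or after the key's token owns it,
        # wrapping around to the start of the ring if necessary.
        i = bisect.bisect_left(self.tokens, (token(key), ""))
        return self.tokens[i % len(self.tokens)][1]


keys = [f"row{i}" for i in range(1000)]
before = Ring(["node1", "node2", "node3"])
after = Ring(["node1", "node2", "node3", "node4"])
moved = [k for k in keys if before.owner(k) != after.owner(k)]
print(f"{len(moved)} of {len(keys)} keys moved, all to the new node")
```

Adding `node4` only takes over part of one existing range, so every key that moves lands on the new node and all other keys stay where they were.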

Cluster Topology and Design

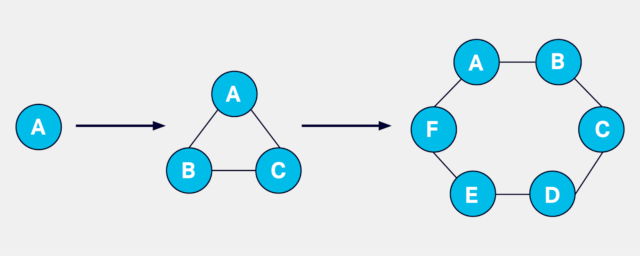

Cassandra is based on a distributed system architecture. In its simplest form, Cassandra can be installed on a single machine or in a docker container, and it works well for basic testing. A single Cassandra instance is called a node. Cassandra supports horizontal scalability, achieved by adding more nodes to a Cassandra cluster. Performance scales roughly linearly with the number of nodes if the resources are configured optimally.

Cassandra works with peer to peer architecture, with each node connected to all other nodes. Each Cassandra node performs all database operations and can serve client requests without the need for a master node. A Cassandra cluster does not have a single point of failure as a result of the peer-to-peer distributed architecture.

Nodes in a cluster communicate with each other for various purposes. There are various components used in this process:

- Seeds: Each node configures a list of seeds which is simply a list of other nodes. A seed node is used to bootstrap a node when it is first joining a cluster. A seed does not have any other specific purpose, and it is not a single point of failure. A node does not require a seed on subsequent restarts after bootstrap. It is recommended to use two to three seed nodes per Cassandra data center (data centers are explained below), and keep the seeds list uniform across all the nodes.

- Gossip: Gossip is the protocol used by Cassandra nodes for peer-to-peer communication. Gossip informs a node about the state of all other nodes. A node performs gossip with up to three other nodes every second. Gossip messages follow a specific format and carry version numbers to keep communication efficient.
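The gossip exchange described above can be simulated with a short sketch. It is only an approximation under stated assumptions: each node's view is a dictionary of `node name -> version`, the function name `gossip_round` and its `fanout` parameter are hypothetical, and the real message format and failure detection are left out.

```python
import random


def gossip_round(views, fanout=3, rng=random):
    """One round: every node swaps state with up to `fanout` random peers."""
    nodes = list(views)
    for node in nodes:
        others = [n for n in nodes if n != node]
        for peer in rng.sample(others, min(fanout, len(others))):
            # Both sides keep the highest version seen for every known node.
            merged = dict(views[node])
            for name, version in views[peer].items():
                merged[name] = max(merged.get(name, 0), version)
            views[node] = merged
            views[peer] = dict(merged)


# Ten nodes that initially only know about themselves.
views = {f"n{i}": {f"n{i}": 1} for i in range(10)}
rounds = 0
while any(len(view) < len(views) for view in views.values()):
    gossip_round(views)
    rounds += 1
print("every node knows every other node after", rounds, "round(s)")
```

Even though each node talks to at most three peers per round, state about every node spreads through the whole cluster within a few rounds.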

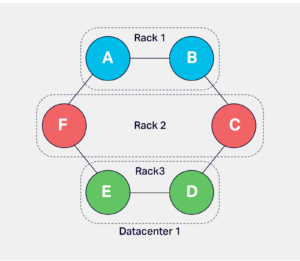

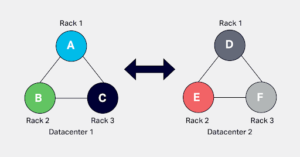

A cluster is subdivided into racks and data centers. These terms are Cassandra’s representation of a real-world rack and data center. A physical rack is a group of bare-metal servers sharing resources like a network switch, power supply etc. In Cassandra, the nodes can be grouped in racks and data centers with snitch configuration. Ideally, the node placement should follow the node placement in actual data centers and racks. Data replication and placement depends on the rack and data center configuration.

When the configuration uses Network Topology Strategy (explained later) and there are enough replicas, each rack in Cassandra holds a complete replica of the data. This configuration allows Cassandra to survive a rack failure without losing so many replicas that it can no longer perform well.

There are various scenarios to use multiple data centers in Cassandra. A few common scenarios are:

- Build a Cassandra cluster with geographically distinct data centers which cater to clients from distinct locations, e.g. a cluster with three data centers in US, EU, and APAC serving local clients with low latency.

- Separate Cassandra data centers which cater to distinct workloads using the same data, e.g. separate data centers to serve client requests and to run analytics jobs.

- Active disaster recovery by creating geographically distinct data centers, e.g. a cluster with data centers in each US AWS region to support disaster recovery.

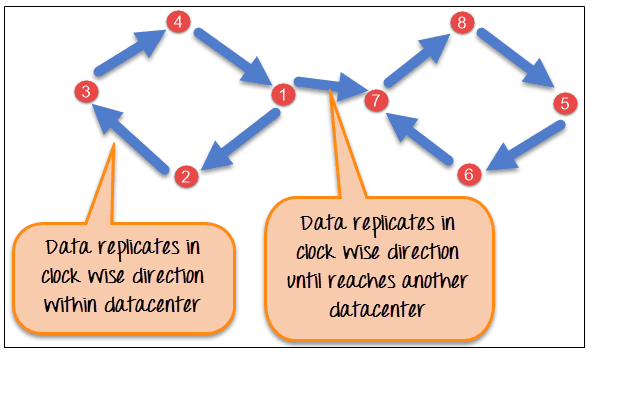

Data Replication in Cassandra

In Cassandra, one or more of the nodes in a cluster act as replicas for a given piece of data. If it is detected that some of the nodes responded with an out-of-date value, Cassandra will return the most recent value to the client. After returning the most recent value, Cassandra performs a read repair in the background to update the stale values.

The following figure shows a schematic view of how Cassandra uses data replication among the nodes in a cluster to ensure no single point of failure.

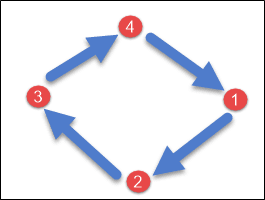

In Apache Cassandra, there are two main replication strategies that can be used to determine how replicas of data are placed across the nodes in the cluster:

SimpleStrategy:

SimpleStrategy is used for single data center deployments or when you want to have a single replication strategy for the entire cluster. In this strategy, all replicas are placed in a clockwise manner starting from the node responsible for the data according to the partitioner.

For example, if you have a replication factor of 3 and the following nodes in your cluster: Node A, Node B, Node C, and Node D, the replicas for a given piece of data would be placed as follows:

- Data written to Node A will have replicas on Node A, Node B, and Node C.

- Data written to Node B will have replicas on Node B, Node C, and Node D.

- Data written to Node C will have replicas on Node C, Node D, and Node A.

- Data written to Node D will have replicas on Node D, Node A, and Node B.

This strategy is suitable for single data center deployments or when you don’t require different replication configurations for different data centers.
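The clockwise placement in the example above can be reproduced with a small sketch. The function name `simple_strategy_replicas` is hypothetical, and the ring is modelled as a plain list of node names in ring order rather than as token ranges.

```python
def simple_strategy_replicas(ring, primary, replication_factor):
    """Return the primary node plus the next nodes clockwise on the ring."""
    start = ring.index(primary)
    count = min(replication_factor, len(ring))  # cannot exceed the node count
    return [ring[(start + i) % len(ring)] for i in range(count)]


ring = ["A", "B", "C", "D"]
for node in ring:
    print(node, "->", simple_strategy_replicas(ring, node, 3))
```

The modulo operation is what makes the walk wrap around, so data owned by Node D is also stored on Node A and Node B.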

Here is a picture of how the SimpleStrategy is represented.

NetworkTopologyStrategy:

NetworkTopologyStrategy is used for multi-data center deployments. This strategy allows you to define how many replicas are stored in each data center separately. It is especially useful when you have a Cassandra cluster spanning multiple geographical regions or data centers.

With NetworkTopologyStrategy, you can set different replication factors for each data center to ensure data availability and fault tolerance even if one or more data centers experience failures or network partitions.

For example, if you have two data centers, DC1 and DC2, and you set a replication factor of 3 for each of them with NetworkTopologyStrategy, the replicas for a given piece of data would be placed as follows:

- Three replicas are stored on nodes in DC1 (e.g., Node A, Node B, and Node C).

- Three replicas are stored on nodes in DC2 (e.g., Node X, Node Y, and Node Z).

NetworkTopologyStrategy allows you to tailor the replication configuration based on your specific requirements for each data center.

The network topology technique is shown visually in the following image.

Components of Cassandra

The key components of Cassandra are as follows −

- Node − It is the place where data is stored.

- Data center − It is a collection of related nodes.

- Cluster − A cluster is a component that contains one or more data centers.

- Commit log − The commit log is a crash-recovery mechanism in Cassandra. Every write operation is written to the commit log.

- Mem-table − A mem-table is a memory-resident data structure. After the commit log, the data will be written to the mem-table. Sometimes, for a single column family, there will be multiple mem-tables.

- SSTable − It is a disk file to which the data is flushed from the mem-table when its contents reach a threshold value.

- Bloom filter − A Bloom filter is a quick, probabilistic data structure for testing whether an element is a member of a set. It acts as a special kind of cache. Bloom filters are consulted on every read query.
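Of the components listed above, the Bloom filter is the easiest to illustrate in isolation. The sketch below is a generic Bloom filter, not Cassandra's implementation; the class name, the bit-array size, the number of hash functions and the use of SHA-256 are all assumptions made for the example.

```python
import hashlib


class BloomFilter:
    def __init__(self, size_bits=1024, num_hashes=3):
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = 0  # a Python int used as a bit array

    def _positions(self, item: str):
        # Derive several bit positions by salting the hash with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits |= 1 << pos

    def might_contain(self, item: str) -> bool:
        # False means "definitely absent"; True means "possibly present".
        return all((self.bits >> pos) & 1 for pos in self._positions(item))


bf = BloomFilter()
bf.add("row-42")
print(bf.might_contain("row-42"))
```

A negative answer lets a read skip an SSTable entirely, while a positive answer still has to be confirmed by looking at the data.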

Cassandra Query Language

Users can access Cassandra through its nodes using Cassandra Query Language (CQL). CQL treats the database (Keyspace) as a container of tables. Programmers work with CQL either through cqlsh, an interactive prompt, or through separate application language drivers.

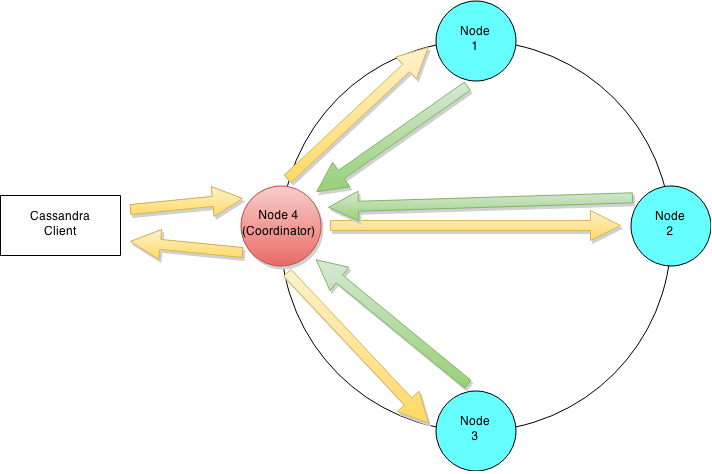

Clients approach any of the nodes for their read-write operations. That node (the coordinator) acts as a proxy between the client and the nodes holding the data.

Cassandra Cluster/Ring

Every Cassandra cluster must be assigned a name. All nodes participating in a cluster have the same cluster name. Seed nodes are used during start up to help discover all participating nodes. Seed nodes have no special purpose other than helping bootstrap the cluster using the gossip protocol. When a node starts up it looks to its seed list to obtain information about the other nodes in the cluster. Cassandra uses the gossip protocol for intra-cluster communication and failure detection. A node exchanges state information with a maximum of three other nodes. State information is exchanged every second and contains information about itself and all other known nodes. This enables each node to learn about every other node in the cluster even though it is communicating with a small subset of nodes.

Cassandra Write Path

Let’s try to understand Cassandra’s architecture by walking through an example write mutation. Let’s assume that a client wishes to write a piece of data to the database. The diagram below illustrates the cluster level interaction that takes place.

Cluster level interaction for a write and read operation.

Since Cassandra is masterless, a client can connect to any node in a cluster. Clients can interface with a Cassandra node using either the Thrift protocol or CQL. In the picture above the client has connected to Node 4. The node that a client connects to is designated as the coordinator, also illustrated in the diagram. The coordinator is responsible for satisfying the client’s request. The consistency level determines the number of nodes that the coordinator needs to hear from in order to notify the client of a successful mutation. All inter-node requests are sent asynchronously through a messaging service.

Based on the partition key and the replication strategy used, the coordinator forwards the mutation to all applicable nodes. In our example it is assumed that nodes 1, 2 and 3 are the applicable nodes, where node 1 is the first replica and nodes 2 and 3 are subsequent replicas. The coordinator will wait for a response from the number of nodes required to satisfy the consistency level.

QUORUM is a commonly used consistency level which refers to a majority of the replicas. QUORUM can be calculated using the formula (n/2 + 1), with the division rounded down, where n is the replication factor. In our example let’s assume that we have a consistency level of QUORUM and a replication factor of three. Thus the coordinator will wait for at most 10 seconds (default setting) to hear from at least two nodes before informing the client of a successful mutation.
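The QUORUM arithmetic and the coordinator's decision can be written down as a short sketch. The function names `quorum` and `write_succeeded` are hypothetical, and the acknowledgements are supplied as a ready-made dictionary instead of arriving over the network within a timeout.

```python
def quorum(replication_factor: int) -> int:
    # Integer division rounds down, so RF 3 gives 2 and RF 5 gives 3.
    return replication_factor // 2 + 1


def write_succeeded(replica_acks: dict, required: int) -> bool:
    """replica_acks maps a replica name to True if it acknowledged in time."""
    acked = sum(1 for ok in replica_acks.values() if ok)
    return acked >= required


# The example from the text: replicas on nodes 1, 2 and 3, RF 3, QUORUM.
acks = {"node1": True, "node2": True, "node3": False}
print(quorum(3), write_succeeded(acks, quorum(3)))
```

With a replication factor of three, the write is reported as successful even though one replica did not answer, because two acknowledgements already form a majority.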

Write Operation Overview:

Client Application:

A client application initiates a write request to store data in the Cassandra cluster. The client communicates with a Cassandra driver or API to interact with the database.

Coordinating Node Selection:

The client selects a coordinating node from the list of available nodes. The coordinating node will handle the write request and coordinate the replication process.

Replication Factor:

The coordinating node determines the replication factor (RF) for the keyspace where the data should be stored. The RF specifies the number of replicas of each piece of data that should be maintained across the cluster.

Consistency Level:

The client can specify a consistency level for the write request, which determines how many replicas must acknowledge the write for it to be considered successful. Consistency levels balance data consistency and system performance.

Write Path:

Each replica that receives the write (which may include the coordinating node itself) writes the data locally to its commit log and memtable. The commit log ensures data durability, and the memtable is an in-memory data structure for fast write operations.

Replication:

The coordinating node asynchronously sends the data to all replicas determined by the replication factor. The write is considered successful when the number of replicas required by the consistency level have acknowledged the write.

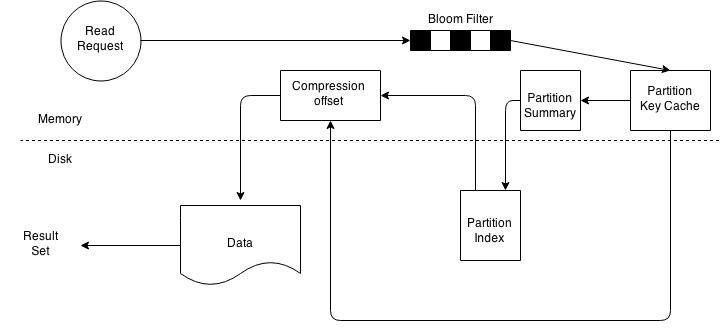

Cassandra Read Path

At the cluster level a read operation is similar to a write operation. As with the write path, the client can connect to any node in the cluster. The chosen node is called the coordinator and is responsible for returning the requested data. A row key must be supplied for every read operation. The coordinator uses the row key to determine the first replica. The replication strategy in conjunction with the replication factor is used to determine all other applicable replicas. As with the write path, the consistency level determines the number of replicas that must respond before successfully returning data. Let’s assume that the request has a consistency level of QUORUM and a replication factor of three, thus requiring the coordinator to wait for successful replies from at least two nodes. If the contacted replicas have different versions of the data, the coordinator returns the latest version to the client and issues a read repair command to the node or nodes with the older version of the data. The read repair operation pushes the newer version of the data to nodes with the older version.
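A minimal sketch of this coordinator logic is shown below. It assumes each replica is a dictionary mapping a row key to a `(write_timestamp, value)` pair and that the coordinator simply contacts the first `required` replicas; the function name `read_with_repair` and this data layout are hypothetical, and the repair is done inline rather than in the background.

```python
def read_with_repair(replicas, key, required):
    """Read `key` from `required` replicas and repair any stale copies."""
    contacted = list(replicas)[:required]
    responses = {name: replicas[name].get(key) for name in contacted}
    found = [r for r in responses.values() if r is not None]
    if not found:
        return None
    latest = max(found, key=lambda r: r[0])  # newest write timestamp wins
    for name, response in responses.items():
        if response != latest:
            replicas[name][key] = latest     # push the newer version (read repair)
    return latest[1]


replicas = {
    "node1": {"k": (1, "old")},
    "node2": {"k": (2, "new")},
    "node3": {"k": (1, "old")},
}
print(read_with_repair(replicas, "k", required=2))
print(replicas["node1"]["k"])
```

After the read, `node1` holds the newer version, while `node3` was not contacted and still has the old one.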

Node level read operation.

The illustration above outlines key steps when reading data on a particular node. Every Column Family stores data in a number of SSTables. Thus data for a particular row can be located in a number of SSTables and the memtable. For every read request Cassandra therefore needs to read data from all applicable SSTables (all SSTables for a column family) and scan the memtable for applicable data fragments. This data is then merged and returned to the coordinator.

Read Operation Overview:

Client Application:

A client application initiates a read request to retrieve data from the Cassandra cluster.

Coordinating Node Selection:

The client selects a coordinating node to handle the read request. The coordinating node will coordinate the read process across the cluster.

Consistency Level:

The client can specify a consistency level for the read request, which determines how many replicas must respond to the read for it to be considered successful.

Data Retrieval:

The coordinating node determines which nodes hold replicas of the requested data based on the partition key. It contacts the necessary replicas and gathers the data based on the consistency level specified.

Read Repair:

If the read consistency level requires data from multiple replicas and the data from different replicas does not match (some of it is stale), Cassandra performs a read repair. Read repair ensures that inconsistencies in the data are resolved, maintaining data consistency across the cluster.

Hinted Handoff and Repair:

If a replica node is temporarily unavailable during a write operation, the coordinating node may store a hint locally until the replica node becomes available again. Once the replica node is back online, the hinted handoff data is delivered. Additionally, Cassandra’s built-in repair mechanism periodically checks and synchronizes data between replicas to ensure data consistency and resolve any discrepancies due to node outages or network issues.
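Hinted handoff can be sketched as a coordinator that keeps a list of undelivered writes. Everything here is a simplification under stated assumptions: the class `HintingCoordinator`, its `down` set and the in-memory `hints` list are hypothetical, and replicas are plain dictionaries.

```python
class HintingCoordinator:
    def __init__(self, replicas):
        self.replicas = replicas  # replica name -> dict acting as its store
        self.down = set()         # replicas currently considered unavailable
        self.hints = []           # (replica name, key, value) awaiting delivery

    def write(self, key, value):
        for name, store in self.replicas.items():
            if name in self.down:
                # Replica unreachable: remember the write as a hint.
                self.hints.append((name, key, value))
            else:
                store[key] = value

    def replica_recovered(self, name):
        self.down.discard(name)
        remaining = []
        for target, key, value in self.hints:
            if target == name:
                self.replicas[name][key] = value  # deliver the hinted write
            else:
                remaining.append((target, key, value))
        self.hints = remaining


coord = HintingCoordinator({"node1": {}, "node2": {}})
coord.down.add("node2")
coord.write("k", "v")
coord.replica_recovered("node2")
print(coord.replicas["node2"])
```

The write is replayed to `node2` as soon as it is reported as recovered, so it catches up without waiting for a full repair.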

SSTable read path.

On a per SSTable basis the operation becomes a bit more complicated. The illustration above outlines key steps that take place when reading data from an SSTable. Every SSTable has an associated bloom filter which enables it to quickly ascertain if data for the requested row key exists on the corresponding SSTable. This reduces IO when performing a row key lookup. A bloom filter is always held in memory since the whole purpose is to save disk IO. Cassandra also keeps a copy of the bloom filter on disk, which enables it to recreate the bloom filter in memory quickly. Cassandra does not store the bloom filter in the Java heap; instead it makes a separate allocation for it in memory. If the bloom filter returns a negative response, no data is returned from the particular SSTable. This is a common case, as the compaction operation tries to group all row key related data into as few SSTables as possible.

If the bloom filter provides a positive response, the partition key cache is scanned to ascertain the compression offset for the requested row key. Cassandra then proceeds to fetch the compressed data on disk and returns the result set. If the partition key cache does not contain a corresponding entry, the partition summary is scanned. The partition summary is a subset of the partition index and helps determine the approximate location of the index entry in the partition index. The partition index is then scanned to locate the compression offset, which is then used to find the appropriate data on disk.

There are three types of read requests that a coordinator sends to replicas.

- Direct request

- Digest request

- Read repair request

The coordinator sends a direct request to one of the replicas. After that, the coordinator sends digest requests to the number of replicas specified by the consistency level and checks whether the returned data is up to date.

After that, the coordinator sends digest requests to all the remaining replicas. If any node returns an out-of-date value, a background read repair request will update that data. This process is called the read repair mechanism.
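The idea behind a digest request is that a replica returns only a hash of its data, which is much smaller than the data itself. The sketch below illustrates the comparison; the use of MD5 via `hashlib` and the function names `digest` and `digests_match` are assumptions made for the example, not a description of Cassandra's wire format.

```python
import hashlib


def digest(value: bytes) -> str:
    # A replica answering a digest request returns only this hash.
    return hashlib.md5(value).hexdigest()


def digests_match(full_response: bytes, digest_responses: list) -> bool:
    """Compare the hash of the direct response with the replicas' digests."""
    expected = digest(full_response)
    return all(d == expected for d in digest_responses)


direct = b"value-v2"                    # full data from the direct request
others = [digest(b"value-v2"), digest(b"value-v1")]
print(digests_match(direct, others))    # a mismatch signals a stale replica
```

When the digests disagree, the coordinator knows that at least one replica is out of date and can trigger a read repair.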

Consistency and Availability

Every distributed system is subject to the CAP theorem. The CAP theorem states that a distributed system can strongly deliver only two out of the three properties: Consistency, Availability and Partition-tolerance. Cassandra provides flexibility for choosing between consistency and availability while querying data. In other words, data can be highly available with a low consistency guarantee, or it can be highly consistent with lower availability.

For example, if there are three data replicas, a query reading or writing data can ask for acknowledgments from one, two, or all three replicas to mark the completion of the request. For a read request, Cassandra requests the data from the required number of replicas and compares their write-timestamps. The replica with the latest write-timestamp is considered to hold the correct version of the data. Hence, the more replicas are involved in a read operation, the stronger the data consistency guarantee. For write requests, the requested number refers to the replicas that must acknowledge the write.

Naturally, the time required to get the acknowledgements from replicas grows with the number of replicas asked for an acknowledgement. Hence, consistency and availability can be traded against each other. The concept of requesting a certain number of acknowledgements is called tunable consistency and it can be applied at the individual query level.

There are a few considerations related to data availability and consistency:

- The replication factor should ideally be an odd number. The common replication factor used is three, which provides a balance between replication overhead, data distribution, and consistency for most workloads.

- The number of racks in a data center should be a multiple of the replication factor. Likewise, the number of nodes is commonly a multiple of three.

- There are various terms used to refer to the consistency levels:

- One, two, three: Specified number of replicas must acknowledge the operation.

- Quorum: The strict majority of replicas is called a quorum. The majority is one more than half of the replicas, with the half rounded down. This consistency level ensures that most of the replicas confirm the operation without having to wait for all replicas. It balances operation efficiency and good consistency, e.g. quorum for a replication factor of three is (3/2)+1=2; for a replication factor of five it is (5/2)+1=3.

- Local_*: This is a consistency level for a local data center in a multi-data center cluster. A local data center is where the client is connected to a coordinator node. The * takes the value one or quorum, e.g. local_one, local_quorum.

- Each_*: This level is also related to multi data center setup. It denotes the consistency to be achieved in each of the data centers independently, e.g. each_quorum means quorum consistency in each data center.
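The consistency levels listed above can be summarised as a mapping from level to the number of acknowledgements required. The sketch below is illustrative only: the function `required_acks`, its return format (a dictionary keyed by `"cluster"` or by data center name) and the example replication factors are assumptions, and only the levels named in the list are covered.

```python
def quorum(n: int) -> int:
    return n // 2 + 1  # strict majority, using integer division


def required_acks(level: str, rf_by_dc: dict, local_dc: str) -> dict:
    """Map a consistency level to the acknowledgements it needs.

    rf_by_dc maps each data center name to its replication factor.
    """
    fixed = {"ONE": 1, "TWO": 2, "THREE": 3}
    if level in fixed:
        return {"cluster": fixed[level]}
    if level == "QUORUM":
        # Majority of all replicas across every data center.
        return {"cluster": quorum(sum(rf_by_dc.values()))}
    if level == "LOCAL_QUORUM":
        # Majority of the replicas in the coordinator's data center only.
        return {local_dc: quorum(rf_by_dc[local_dc])}
    if level == "EACH_QUORUM":
        # Majority in every data center independently.
        return {dc: quorum(rf) for dc, rf in rf_by_dc.items()}
    raise ValueError(f"unsupported consistency level: {level}")


rf = {"DC1": 3, "DC2": 3}
for level in ["ONE", "QUORUM", "LOCAL_QUORUM", "EACH_QUORUM"]:
    print(level, required_acks(level, rf, local_dc="DC1"))
```

With three replicas in each of two data centers, QUORUM needs four acknowledgements from anywhere in the cluster, whereas LOCAL_QUORUM needs only two from the local data center.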