Deep learning is a subset of machine learning in which artificial neural networks are trained to learn from data and make decisions. It is loosely modeled on the structure and function of the human brain’s interconnected neurons, which allows these networks to extract features and patterns from data automatically. Deep learning has made breakthroughs in tasks such as image and speech recognition, natural language processing, and strategic game play, contributing to advancements in a variety of industries.

1. Explain deep learning.

Ans:

Deep learning is a subfield of machine learning that models and solves complicated problems using artificial neural networks. It is based on the structure and function of biological neurons in the human brain and is capable of learning complex patterns and correlations within data.

2. What are neural networks?

Ans:

Neural networks are a subset of machine learning that are inspired by the form and function of neurons that exist in the human brain. They can recognize complicated patterns and relationships in data. A neural network is made up of node layers that include an input layer, one or more hidden layers, and an output layer.

3. What are advantages and disadvantages of neural networks?

Ans:

Neural networks offer a number of advantages as well as disadvantages. Among the advantages are their adaptability, their capacity to identify patterns in incoming data, and their capacity to improve as they learn over time. The disadvantages include the need for substantial training data sets, poor generalization from restricted training sets, and a tendency to overfit on smaller datasets.

4. What is a deep neural network?

Ans:

A deep neural network is an artificial neural network with multiple hidden layers between the input and output layers, loosely inspired by the functioning of the human brain. Through layered mathematical modeling, deep neural networks analyse data in complex ways and can be used to understand and solve difficult problems. They can recognize complex relationships and patterns in data.

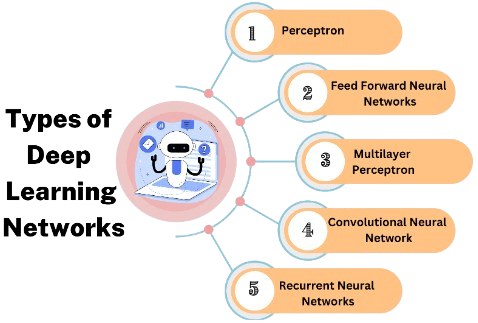

5. What are the types of deep neural networks?

Ans:

Deep neural networks fall into different groups, the most common of which are:

Multi-Layer Perceptrons (MLPs): MLPs are a class of feedforward artificial neural networks.

Convolutional Neural Networks (CNNs): used for image recognition.

Recurrent Neural Networks (RNNs): used for speech recognition tasks.

Other networks used in deep learning include Artificial Neural Networks (ANNs), Perceptrons, Long Short-Term Memory networks (LSTMs), and deep Boltzmann machines.

6. What is a perceptron?

Ans:

The perceptron is a linear machine learning algorithm used for supervised learning of binary classification tasks. It is also regarded as one of the simplest types of artificial neural networks. A perceptron is a single-layer neural network with four primary components: input values, weights, bias, and activation function.
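As a rough illustration of those four components, here is a minimal sketch of a perceptron trained on the logical AND function. The function names, the step activation, the learning rate, and the toy dataset are all assumptions chosen for the example, not part of any particular library.

```python
def perceptron_predict(inputs, weights, bias):
    """Weighted sum of the inputs plus bias, passed through a step activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Perceptron learning rule: nudge weights by (target - prediction) * input."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in zip(samples, labels):
            error = target - perceptron_predict(inputs, weights, bias)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Logical AND is linearly separable, so a single perceptron can learn it.
AND_INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_LABELS = [0, 0, 0, 1]
and_weights, and_bias = train_perceptron(AND_INPUTS, AND_LABELS)
and_predictions = [perceptron_predict(x, and_weights, and_bias) for x in AND_INPUTS]
```

A single perceptron can only separate classes with a straight line (or hyperplane), which is why a problem such as XOR needs additional layers.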

7. What are some popular tools used in deep learning?

Ans:

- TensorFlow

- PyTorch

- Theano

- DL4J (Deeplearning4j)

- Chainer

- Caffe.

8. What exactly is backpropagation?

Ans:

Backpropagation is a supervised learning algorithm for training neural networks. It computes the gradient of the loss function with respect to the network weights by applying the chain rule backward through the layers; the weights are then adjusted in the direction opposite to the gradient to minimize the loss.

9. Explain the concept of deep learning overfitting.

Ans:

Overfitting occurs when a model learns too well from the training data, catching noise and outliers rather than the underlying patterns. This can result in poor generalization on fresh, previously unknown data.

10. What is the difference between deep learning and machine learning?

Ans:

Machine Learning:

- Machine learning is the broader field; deep learning is one particular type of it.

- Machine learning data is typically structured and can be numerical or categorical.

- Machine learning is an evolution of AI.

- Machine learning comprises numerous algorithms.

Deep Learning:

- Deep learning is a specific type of machine learning.

- Deep learning works with unstructured data representations such as photos, videos, text, or audio.

- Deep learning is an evolution of machine learning.

- Deep Learning is made up of numerous other

11. What is a convolutional neural network (CNN), and how is it used?

Ans:

A CNN is a type of neural network built for image processing. It employs convolutional layers to automatically and adaptively learn spatial hierarchies of features. CNNs are extensively used in image and video recognition tasks.

12. In deep learning, what is the vanishing gradient problem?

Ans:

The vanishing gradient problem happens when gradients become very small during backpropagation, resulting in negligible weight updates in the early layers of a deep neural network. This can impede the training of deep networks.

13. What is transfer learning and how is it useful in deep learning?

Ans:

Transfer learning is the process of starting a new task from a model that was pre-trained on a similar task. It is useful in deep learning because knowledge acquired on one task can be leveraged to improve performance on another, frequently with less data.

14. What is the vanishing gradient problem, and how does it relate to activation functions?

Ans:

The vanishing gradient problem arises when gradients become extremely small during backpropagation, which makes it hard for early layers to learn effectively. Saturating activation functions such as sigmoid and tanh make this worse because their derivatives are small over much of their range. ReLU and similar activation functions have a derivative of 1 for positive inputs, which facilitates gradient flow during backpropagation and helps to alleviate the issue.

15. What is the difference between L1 regularization and L2 regularization in the context of neural networks?

Ans:

- L1 regularization adds a penalty term proportional to the absolute values of the weights, which promotes sparsity by driving some weights to exactly zero.

- L2 regularization adds a penalty term proportional to the squared values of the weights, which keeps the weights from growing excessively large. Both are used for weight regularization.
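The two penalty terms can be sketched in a few lines. This is a minimal illustration; the function names and the strength parameter `lam` are assumptions for the example, and in practice the penalty is added to the data loss before computing gradients.

```python
def l1_penalty(weights, lam):
    """L1 term: lam times the sum of absolute weight values."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 term: lam times the sum of squared weight values."""
    return lam * sum(w * w for w in weights)


example_weights = [1.0, -2.0, 0.0]
l1_value = l1_penalty(example_weights, lam=0.5)  # 0.5 * (1 + 2 + 0)
l2_value = l2_penalty(example_weights, lam=0.5)  # 0.5 * (1 + 4 + 0)
```

Note how the weight of -2.0 contributes twice as much as the weight of 1.0 under L1 but four times as much under L2, which is why L2 penalizes large weights more heavily.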

16. Name some applications of deep learning.

Ans:

Deep learning is used across many industries, in applications such as self-driving cars, facial recognition, healthcare, finance, natural language processing, gaming, and robotics.

17. What is the distinction between supervised and unsupervised learning?

Ans:

Supervised learning entails training a model on a labeled dataset, where each input is paired with a corresponding output. Unsupervised learning uses unlabeled data and attempts to uncover patterns or structures in the data without explicit supervision.

18. Explain the dropout idea in neural networks.

Ans:

Dropout is a regularization strategy that ignores randomly picked neurons during training. This prevents overfitting by ensuring that no single neuron becomes unduly reliant on the presence of other neurons.
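A minimal sketch of the idea follows. It assumes the common "inverted dropout" variant, in which surviving activations are scaled up during training so that nothing needs to change at inference time; the function name and parameters are illustrative, not a specific framework's API.

```python
import random


def dropout(activations, drop_prob, rng, training=True):
    """Zero each activation with probability drop_prob during training.

    Survivors are scaled by 1 / (1 - drop_prob) (inverted dropout, an assumption
    of this sketch) so the expected activation stays the same.
    """
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


rng = random.Random(0)  # seeded only to make the example repeatable
train_out = dropout([1.0] * 1000, drop_prob=0.5, rng=rng)
eval_out = dropout([1.0, 2.0], drop_prob=0.5, rng=rng, training=False)
```

At evaluation time (`training=False`) the activations pass through unchanged, so the full network is used for prediction.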

19. What are prerequisites to become a Data Analyst?

Ans:

- Strong Foundation in Mathematics

- Machine Learning Fundamentals

- Deep Learning Knowledge

- Neural Network Architectures

- Data Handling and Preprocessing Skills

- Understanding of Loss Functions and Optimization

- Problem-Solving and Critical Thinking

- Networking and Collaboration.

20. Describe the idea of neural network weight initialization. Why does it matter?

Ans:

The process of choosing the initial values of the weights in a neural network is known as weight initialization. For training to be effective, initialization must be done correctly because it helps avoid problems like vanishing or exploding gradients. Common methods are He initialization for ReLU activations and Xavier/Glorot initialization for sigmoid and tanh activations.
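The two schemes differ mainly in how the spread of the random weights depends on the layer sizes. The sketch below assumes the normal form of He initialization (standard deviation `sqrt(2 / fan_in)`) and the uniform form of Xavier/Glorot initialization (limit `sqrt(6 / (fan_in + fan_out))`); the function names are hypothetical.

```python
import math
import random


def he_normal(fan_in, fan_out, rng):
    """He initialization: zero-mean Gaussian with std = sqrt(2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_out)] for _ in range(fan_in)]


def xavier_uniform(fan_in, fan_out, rng):
    """Xavier/Glorot initialization: uniform in [-limit, limit]."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)] for _ in range(fan_in)]


rng = random.Random(0)  # seeded only to make the example repeatable
relu_layer_weights = he_normal(fan_in=8, fan_out=4, rng=rng)
tanh_layer_weights = xavier_uniform(fan_in=4, fan_out=2, rng=rng)
```

In both cases the scale shrinks as the layer gets wider, which keeps the variance of activations roughly stable from layer to layer.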

21. Explain the term batch normalization in the context of deep learning.

Ans:

Batch normalization is a technique that improves the training of deep neural networks by normalizing the inputs of each layer. By reducing internal covariate shift, it speeds up training and helps alleviate problems such as vanishing or exploding gradients.
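For a single feature, the training-time computation can be sketched as follows. The learnable scale `gamma`, shift `beta`, and the small constant `eps` are standard parts of the technique, but the function itself is a simplified illustration that ignores the running statistics used at inference time.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(variance + eps) + beta for v in values]


normalized = batch_norm([1.0, 2.0, 3.0, 4.0])
```

After normalization the batch has approximately zero mean and unit variance, regardless of the scale of the original values.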

22. What is the role of activation functions in neural networks?

Ans:

By adding non-linearities to the neural network, activation functions enable the network to learn intricate patterns. The sigmoid and ReLU (Rectified Linear Unit) are examples of common activation functions.

23. What is the role of a learning rate in training deep learning models?

Ans:

The size of the steps executed during optimization depends on the learning rate. It is a hyperparameter that affects the training process’s convergence and stability. Overshooting can occur from a learning rate that is too high, while sluggish convergence can occur from a learning rate that is too low.

24. List a few methods in which you will demonstrate the core concept of machine learning.

Ans:

Key machine learning principles can be demonstrated using a variety of approaches, ranging from simple visualizations to hands-on coding exercises. Here are a few approaches for illustrating the fundamental concepts of machine learning:

- Interactive Visualizations

- Toy Datasets

- Code Walkthroughs

- Kaggle Kernels or Colab Notebooks

- Model Playground Tools

- Demonstrate Hyperparameter Tuning.

25. Explain what a recurrent neural network (RNN) is. Where is it commonly utilized?

Ans:

An RNN is a type of neural network built for sequential data. Its connections form directed cycles, so information from earlier steps is carried forward, which lets it capture temporal dependencies in the data. Time-series analysis, speech recognition, and natural language processing are three common applications for RNNs.

26. What is the difference between a generative model and a discriminative model?

Ans:

Generative models learn the joint probability distribution of the input and output, allowing them to generate new samples. Discriminative models learn the conditional probability of the output given the input and are used for classification tasks.

27. Explain the gradient descent optimization algorithm’s basic idea.

Ans:

The optimization technique known as gradient descent is employed in training to reduce the loss function. It entails iteratively modifying the model’s parameters in an opposite direction to the loss function’s gradient with regard to those parameters. In deep learning, gradient descent variants like Adam, RMSprop, and Stochastic Gradient Descent (SGD) are frequently employed.
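The update rule can be illustrated on a one-parameter problem. This sketch minimizes the toy loss `(w - 3)^2`, whose gradient is `2 * (w - 3)`; the loss, the learning rate, and the step count are assumptions chosen so the behaviour is easy to follow.

```python
def gradient_descent(grad, start, lr=0.1, steps=100):
    """Repeatedly step against the gradient: w <- w - lr * grad(w)."""
    w = start
    for _ in range(steps):
        w -= lr * grad(w)
    return w


# Toy loss (w - 3)^2 has its minimum at w = 3.
minimum = gradient_descent(lambda w: 2 * (w - 3), start=0.0)
```

Each step here shrinks the distance to the minimum by a constant factor, so the result lands very close to 3. Stochastic and adaptive variants keep the same basic loop but change how the gradient is estimated or scaled.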

28. State the critical segments of affiliated analyzing strategies in deep learning.

Ans:

Analysis strategies in deep learning are techniques and methodologies for evaluating and interpreting the performance, behavior, and features of deep learning models. Here are a few such strategies:

- Performance Metrics and Evaluation

- Confusion Matrix Analysis

- Learning Curves

- ROC and Precision-Recall Curves

- Feature Importance Analysis

29. What distinguishes a convolutional neural network from a feedforward neural network?

Ans:

A feedforward neural network passes input data through successive fully connected layers until an output is generated, treating the input as a flat vector. A CNN, on the other hand, is designed for grid-like input such as images, and it uses convolutional layers to automatically discover hierarchical representations of spatial information.

30. Explain the concept of hyperparameter tuning in deep learning.

Ans:

Finding the ideal values for hyperparameters including learning rate, batch size, and architecture parameters is known as hyperparameter tuning. Typically, methods like grid search, random search, or more sophisticated approaches like Bayesian optimization are used to do this.

31. What is the difference between a shallow neural network and a deep neural network?

Ans:

A shallow neural network has only one (or very few) hidden layers between its input and output, whereas a deep neural network stacks many hidden layers. With more layers, each layer can extract progressively more abstract features from the data.

32. Explain the softmax function’s concept and how deep learning uses it.

Ans:

In the output layer of a neural network, the softmax function is utilized for multi-class classification. It can be used to assign class probabilities since it transforms a vector of raw scores into a probability distribution.
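A minimal sketch of the function is shown below. Subtracting the maximum score before exponentiating is a common numerical-stability trick and does not change the result; the function name is illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class_probs = softmax([1.0, 2.0, 3.0])
```

The largest score always receives the largest probability, and the ordering of the scores is preserved in the output.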

33. List some of the most basic strategies to avoid overfitting.

Ans:

Overfitting happens when a machine learning model learns the training data too well, capturing noise or random fluctuations that do not represent the underlying patterns in the data. Here are some simple methods for reducing overfitting:

- Cross-Validation: Use cross-validation techniques, such as k-fold cross-validation, to evaluate the performance of your model on multiple subsets of data.

- Feature Selection: Choose relevant characteristics carefully and avoid utilizing features that may be noise or have little impact on the target variable.

- Regularization: To punish big coefficients in the model, use regularization techniques such as L1 or L2 regularization.

- Reduce Model Complexity: Select simpler models that are less likely to overfit. When possible, adopt a linear model rather than a complex nonlinear model.

34. What is the role of the loss function in training a neural network?

Ans:

The loss function measures the difference between the predicted and actual values. Common loss functions include Mean Squared Error (MSE) for regression and Cross-Entropy Loss for classification.
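Both losses are short enough to write out directly. In this sketch the cross-entropy is computed for a single example given the index of the true class; the function names and the `eps` guard against `log(0)` are assumptions for the example.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average of squared differences, used for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def cross_entropy(true_index, probs, eps=1e-12):
    """Negative log-probability assigned to the correct class."""
    return -math.log(max(probs[true_index], eps))


regression_loss = mean_squared_error([1.0, 2.0], [1.0, 4.0])
confident_loss = cross_entropy(0, [0.9, 0.1])
unsure_loss = cross_entropy(0, [0.5, 0.5])
```

The cross-entropy is small when the model puts high probability on the correct class and grows as that probability falls.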

35. Explain the concept of attention mechanisms in deep learning.

Ans:

Attention mechanisms allow models to focus on specific parts of input sequences when making predictions. They are often used in sequence-to-sequence tasks, such as machine translation, to selectively attend to relevant information.

36. What is the “curse of dimensionality” and how does it affect deep learning?

Ans:

The phrase “curse of dimensionality” describes how data becomes more sparse and complex as feature counts rise. High-dimensional data can cause overfitting, higher processing costs, and difficulties identifying significant patterns in deep learning.

37. How does AdaBoost work?

Ans:

This is a simplified description of how AdaBoost operates:

- Initialize Weights: Give every data point in the training set the same weight.

- Create Weak Learner: Using the current weights on the training data, train a decision tree or other weak learner.

- Compute Error: Determine the weighted error by assessing the weak learner’s performance.

- Calculate Weak Learner’s Weight: Based on the learner’s error rate, assign the learner a weight; lower error means a higher weight.

- Update Weights: Change the training sample weights by assigning samples that were incorrectly classified a higher weight.

- Repeat: Perform the steps from creating a weak learner through updating the weights again until a predetermined number of iterations is reached or a stopping requirement is satisfied.

- Final Prediction: Combine the predictions made by all of the weak learners, weighting each one according to its performance.
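The steps above can be sketched with one-dimensional decision stumps as the weak learners. This is a simplified illustration under several assumptions: labels are +1 and -1, the learner weight uses the classic formula `0.5 * ln((1 - error) / error)`, and the toy dataset and all function names are invented for the example.

```python
import math


def stump_predict(x, threshold, polarity):
    """Decision stump: predict `polarity` left of the threshold, else the opposite."""
    return polarity if x < threshold else -polarity


def fit_adaboost(xs, ys, rounds):
    n = len(xs)
    weights = [1.0 / n] * n  # initialize: every sample gets the same weight
    values = sorted(set(xs))
    thresholds = [(a + b) / 2 for a, b in zip(values, values[1:])]
    ensemble = []
    for _ in range(rounds):
        # Create the weak learner: the stump with the lowest weighted error.
        best = None
        for threshold in thresholds:
            for polarity in (1, -1):
                error = sum(w for x, y, w in zip(xs, ys, weights)
                            if stump_predict(x, threshold, polarity) != y)
                if best is None or error < best[0]:
                    best = (error, threshold, polarity)
        error, threshold, polarity = best
        error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0)
        # Learner weight: low error gives a large alpha.
        alpha = 0.5 * math.log((1 - error) / error)
        ensemble.append((alpha, threshold, polarity))
        # Update sample weights: misclassified samples grow, then normalize.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def adaboost_predict(ensemble, x):
    """Final prediction: sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1


TOY_X = list(range(10))
TOY_Y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
toy_model = fit_adaboost(TOY_X, TOY_Y, rounds=3)
toy_predictions = [adaboost_predict(toy_model, x) for x in TOY_X]
```

No single stump can separate this toy dataset, but the weighted vote of three stumps classifies every training point correctly.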

38. Explain the difference between online learning and batch learning.

Ans:

Online learning updates the model’s parameters after each training example, while batch learning updates the model after processing the entire dataset. Online learning is often faster but can be more sensitive to noise.

39. How do you choose an appropriate learning rate?

Ans:

The step size during optimization is determined by the learning rate. Divergence can occur from an excessively high learning rate, and sluggish convergence can arise from an excessively low learning rate. Grid search and adaptive learning rate techniques are popular approaches for determining the learning rate.

40. Give an example of when you might choose one activation function over another.

Ans:

The activation function adds non-linearity to the network, enabling it to learn intricate patterns. ReLU, for example, is often chosen for hidden layers because of its simplicity and efficiency, whereas softmax is used in the output layer for multi-class classification.

41. Distinguish between deep learning and artificial intelligence.

Ans:

| Aspect | Deep learning | Artificial intelligence |
| --- | --- | --- |
| Definition | A subfield of machine learning that uses neural networks with many layers (deep neural networks). It is highly adept at learning hierarchical representations from data and is inspired by the structure and function of the human brain. | A broad discipline of computer science whose goal is to create computers or systems capable of carrying out tasks that normally require human intelligence. It uses a range of methods, including machine learning and deep learning, to produce intelligent behavior. |
| Key aspects | Involves deep neural networks with many layers (deep architectures). Capable of automatically deriving hierarchical features from raw data. Frequently employed for a variety of tasks, including natural language processing, image and audio recognition, and more. | Seeks to develop systems with the ability to perceive, think through problems, reason, and comprehend natural language. Incorporates a number of subfields, such as machine learning, robotics, natural language processing, and expert systems. |

42. Explain the convolutional neural network (CNN) weight sharing idea.

Ans:

In CNNs, weight sharing means applying the same set of filter weights at every position of the input. This lets the network recognize the same features, such as edges or textures, at many spatial locations while using far fewer parameters.

43. What does padding in CNN do, and how does it affect the feature map’s output size?

Ans:

Padding adds extra pixels (typically zeros) around the border of the input so that the output feature map does not shrink after convolution. It also helps preserve spatial information near the edges of the input and is frequently used so that a layer’s output keeps the same spatial size as its input.
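The effect on output size follows the standard formula `(input + 2 * padding - kernel) // stride + 1` along each spatial dimension. The helper below is a small sketch of that arithmetic; the function name is an assumption for the example.

```python
def conv_output_size(input_size, kernel_size, padding=0, stride=1):
    """Output length along one spatial dimension of a convolution."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


no_padding = conv_output_size(32, kernel_size=3)               # shrinks to 30
same_padding = conv_output_size(32, kernel_size=3, padding=1)  # stays at 32
strided = conv_output_size(32, kernel_size=3, padding=1, stride=2)
```

With a 3x3 kernel and stride 1, one pixel of padding on each side is exactly enough to keep a 32-pixel input at 32 pixels.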

44. What is the vanishing gradient problem in RNNs, and how does a Recurrent Neural Network (RNN) handle sequential data?

Ans:

The purpose of RNNs is to extract temporal dependencies from sequential data. The network finds it difficult to acquire long-term dependencies when gradients get very small during backpropagation through time, a phenomenon known as the vanishing gradient problem in RNNs.

45. What is machine learning with inductive reasoning?

Ans:

Making generalizations or predictions based on particular examples or observations is known as inductive reasoning in the domain of machine learning. In order to accurately forecast future, unknown instances, this sort of reasoning entails drawing general principles or patterns from particular cases. The typical application of inductive reasoning in machine learning is as follows:

- Generalization: After being trained on a dataset, the machine learning model seeks to generalize its knowledge to produce accurate predictions on fresh, unseen data.

- Inductive bias: The assumptions or constraints that a machine learning model applies while it is learning.

- Evaluation: The model’s performance on a different test dataset is frequently used to gauge how well inductive reasoning works in machine learning.

46. Explain gradient clipping and its function in deep learning model training.

Ans:

One method to stop gradients from blowing up during training is to use gradient clipping. To provide steady optimization, it entails scaling the gradients if they rise above a predetermined threshold.
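One common form is clipping by norm: if the overall gradient norm exceeds the threshold, every component is rescaled by the same factor so the direction is preserved. The sketch below assumes that variant; the function name and the flat list of gradients are simplifications for the example.

```python
import math


def clip_by_norm(grads, max_norm):
    """Rescale the gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


unchanged = clip_by_norm([3.0, 4.0], max_norm=10.0)  # norm 5 is below 10
clipped = clip_by_norm([3.0, 4.0], max_norm=1.0)     # norm 5 is scaled to 1
```

Because all components share one scale factor, the clipped gradient still points in the same direction; only the step length is limited.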

47. How does the learning rate scheduler work, and how does it differ from a fixed learning rate?

Ans:

During training, a learning rate scheduler adjusts the learning rate, usually decreasing it gradually. Unlike a fixed learning rate, this dynamic adjustment can improve convergence and prevent overshooting.
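Step decay is one simple schedule: the learning rate is multiplied by a fixed factor every few epochs. The sketch below is illustrative only; the function name, the halving factor, and the ten-epoch interval are assumptions, and many other schedules (exponential, cosine, warm-up) exist.

```python
def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the learning rate by `drop` once every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


schedule = [step_decay(0.1, epoch) for epoch in (0, 9, 10, 25)]
```

Epochs 0 and 9 share the initial rate, epoch 10 uses half of it, and epoch 25 uses a quarter, whereas a fixed learning rate would stay at the initial value throughout.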

48. What are the advantages and disadvantages of PAC learning procedure?

Ans:

Advantages:

Theoretical Foundation: PAC learning provides a good theoretical foundation for understanding learning algorithms’ generalization capacity.

Bounding Error Probabilities: PAC learning defines “probably” in terms of bounding the chance of error, providing a probabilistic assurance that the learnt hypothesis is, with high confidence, near to the genuine underlying concept.

Disadvantages:

Assumptions: PAC learning is based on specific assumptions, such as the realizability assumption (the true concept lies within the chosen hypothesis class).

Computational difficulty: While PAC learning provides insights into theoretical aspects of learning, it may not directly address the computational difficulty of specific learning algorithms.

49. Can you explain the concept of an autoencoder in deep learning?

Ans:

An autoencoder is a kind of neural network used for unsupervised learning. It is made up of two parts: an encoder that compresses the input into a lower-dimensional representation (the encoding), and a decoder that reconstructs the original input from that encoding.

50. How do tasks like natural language processing involve attention mechanisms?

Ans:

When making predictions, attention mechanisms enable models to concentrate on particular segments of the input sequence. In natural language processing they improve model performance by selectively attending to the relevant words in the source sequence when generating the target sequence, as in machine translation tasks.

51. Explain the advantages of Long Short-Term Memory (LSTM) networks over conventional RNNs.

Ans:

LSTMs are a type of RNN created to address the vanishing gradient problem. Compared to conventional RNNs, they are better able to capture long-term dependencies because they include memory cells and gates.

52. What distinguishes data augmentation from dropout, and how do they help with deep learning regularization?

Ans:

Data augmentation creates new training examples by applying transformations to the existing data, whereas dropout randomly deactivates neurons in the network during training. Both methods add variety to training, which lessens the risk of overfitting and improves generalization.

53. Explain the idea behind generative adversarial networks (GANs) and give an example.

Ans:

A GAN consists of a generator and a discriminator that are trained adversarially. The discriminator attempts to distinguish between genuine and generated data, while the generator seeks to produce data realistic enough to fool it. GANs are employed in data synthesis, style transfer, and image generation, among other applications.

54. What are the key concepts related to tensors?

Ans:

- Rank of a Tensor: A tensor’s rank is determined by how many dimensions it contains.

- Tensor Shape: A tensor’s shape indicates how many elements are present in each dimension.

- Data Types: Tensors can include a variety of data kinds, including int32, int64, float32, and so forth.

- Tensor operations: Tensors support addition, multiplication, and other operations used in deep learning.
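Rank and shape can be illustrated with plain nested lists standing in for tensors. This is a sketch under the assumption that the nesting is regular (every sub-list at a given depth has the same length); real frameworks expose the same information through attributes on their tensor types.

```python
def tensor_shape(tensor):
    """Shape of a regular nested list: number of elements along each dimension."""
    shape = []
    while isinstance(tensor, list) and tensor:
        shape.append(len(tensor))
        tensor = tensor[0]
    return tuple(shape)


def tensor_rank(tensor):
    """Rank is the number of dimensions, i.e. the length of the shape."""
    return len(tensor_shape(tensor))


scalar_shape = tensor_shape(5.0)
vector_shape = tensor_shape([1, 2, 3])
matrix_shape = tensor_shape([[1, 2, 3], [4, 5, 6]])
```

A scalar has an empty shape and rank 0, a three-element vector has shape `(3,)` and rank 1, and a 2-by-3 matrix has shape `(2, 3)` and rank 2.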

55. Describe the three phases involved in creating the deep learning assumption framework.

Ans:

- Define the issue and set goals: Clearly state the issue that you want to use deep learning to address. This entails defining the task type (such as classification, regression, etc.), understanding the characteristics of the input data, and determining the model’s objectives.

- Data preparation and preprocessing: Gather and prepare the data needed for training and evaluation. Data cleaning, handling missing values, scaling or normalizing features, and dividing the data into training, validation, and test sets are all part of this process.

- Model Selection and Architectural Design: Select a suitable deep learning architecture according to the data and nature of the challenge. Choosing the kind of neural network (feedforward, convolutional, recurrent, etc.) and creating the architecture, which includes figuring out how many layers there are, how many units are in each layer, activation functions, and other architectural decisions are required for this.

56. Explain the concept of PCA.

Ans:

Principal Component Analysis (PCA) is a technique for reducing dimensionality that is widely used in machine learning and statistics. PCA’s purpose is to convert a high-dimensional dataset into a new coordinate system (the principal components) in which the data variance is maximized along the axes: the first principal component captures the most variance, the second the next most, and so on. Keeping only the leading components reduces the dimensionality of the dataset while retaining as much information as possible.

57. Explain the concept of an autonomous form of deep learning.

Ans:

The ability of a system, often an artificial intelligence model or agent, to independently acquire knowledge, make decisions, and improve its performance over time without ongoing human guidance is referred to as autonomous learning in deep learning.

58. What are the steps of forward propagation?

Ans:

- Input layer: The input layer is where the neural network receives its input data at the start of the operation. Every input feature has a corresponding node in the input layer.

- Weighted sum and activation function (hidden layers): For every node in the hidden layers, the input values are multiplied by the weights (parameters) attached to the connections and added up, typically together with a bias term. An activation function is then applied to this weighted sum.

- Output layer: The procedure proceeds through the hidden layers until it reaches the output layer, where the final prediction is generated by applying one more weighted sum and activation function.
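The steps above can be sketched for a tiny network with one hidden layer. The layer sizes, the weight values, and the choice of ReLU for the hidden layer and sigmoid for the output are all assumptions made for the example.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One layer: weighted sum plus bias per node, then the activation."""
    return [activation(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]


def forward(inputs, hidden_w, hidden_b, out_w, out_b):
    """Input layer -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = dense(inputs, hidden_w, hidden_b, relu)
    return dense(hidden, out_w, out_b, sigmoid)


prediction = forward(
    inputs=[1.0, 2.0],
    hidden_w=[[1.0, -1.0], [0.5, 0.5]],  # two hidden nodes, two inputs each
    hidden_b=[0.0, 0.0],
    out_w=[[1.0, -1.0]],                 # one output node
    out_b=[1.5],
)
```

Tracing the numbers by hand: the hidden nodes produce 0.0 and 1.5, the output node's weighted sum is 0.0, and the sigmoid of 0.0 gives a prediction of 0.5.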

59. Explain forward propagation.

Ans:

A crucial phase in the training of a neural network, particularly in the setting of supervised learning, is forward propagation. It entails calculating the predictions made by the model for a certain input. The input data is propagated across the network layer by layer during forward propagation, with each layer executing a series of calculations to produce an output or prediction.

60. What is the Fourier transform?

Ans:

A mathematical method called the Fourier Transform breaks down a signal or function into its individual frequencies. The Fourier Transform and similar techniques have applications and linkages to numerous areas of deep learning, even though they are not directly related to it. This is especially true in the context of signal processing and image analysis.

61. How does the Fourier Transform fit into Deep Learning?

Ans:

Deep learning and the Fourier Transform interact in the following ways:

- Signal Processing for Speech and Audio Recognition: The Fourier Transform is frequently employed in activities involving audio and voice processing to transform time-domain information into the frequency domain.

- Style Transfer and Generative Models: The Fourier Transform can help generative models like Generative Adversarial Networks (GANs) and autoencoders with tasks like style transfer.

- Removing noise from an image: Fourier analysis is one classical technique used in image denoising, while deep learning techniques such as denoising autoencoders have advanced the field further.

62. Explain the Adam optimization algorithm and what distinguishes it from conventional gradient descent.

Ans:

An optimization approach called Adam (Adaptive Moment Estimation) combines the advantages of momentum and RMSprop. It maintains a running average of both gradients and squared gradients and modifies the learning rates for each parameter separately. It can manage noisy or sparse gradients and converge more quickly as a result.
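A single Adam update for one parameter can be sketched as follows. The default values for `beta1`, `beta2`, and `eps` are the commonly quoted ones; the function name and the scalar-parameter simplification are assumptions for the example.

```python
import math


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step count used for bias correction."""
    m = beta1 * m + (1 - beta1) * grad          # running average of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running average of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)                # bias-corrected second moment
    param -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# First step from param = 1.0 with gradient 4.0 and lr = 0.1.
new_param, m_state, v_state = adam_step(1.0, 4.0, m=0.0, v=0.0, t=1, lr=0.1)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly `lr` regardless of the gradient's magnitude. Plain gradient descent would instead move by `lr * grad`.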

63. Describe one of the best approaches that is frequently used to overcome the problem of overfitting.

Ans:

L2 Regularization (weight decay): In L2 regularization, a penalty term proportional to the sum of the squared weights is added to the loss function during training. The training procedure then minimizes this modified loss function. The extra term helps manage the model’s complexity and reduce overfitting by discouraging the model from giving features unnecessarily high weights.

64. Explain the concept of an additive learning algorithm.

Ans:

Additive learning algorithms are a type of machine learning algorithm that constructs a large model by aggregating the predictions of multiple simpler models. The word “additive” refers to the idea that the final prediction is formed by adding together, usually with weights, the individual predictions of simpler base models.

65. What are the benefits of utilizing TensorFlow?

Ans:

Here are some of the advantages of using TensorFlow:

- Versatility

- Flexible Architecture

- Scalability

- TensorBoard Visualization

- Integration with Other Technologies.

66. What is an example of an additive learning algorithm?

Ans:

Adaptive Boosting, or AdaBoost, is a well-known illustration of an additive learning algorithm. AdaBoost is an ensemble learning technique that builds a strong learner by combining the predictions of weak learners. It is a member of the boosting algorithm family, which concentrates on the shortcomings of the ensemble’s earlier models to gradually construct a robust model.

67. Explain tensors.

Ans:

A tensor is a multi-dimensional array that generalizes vectors and matrices. Tensors cover a range of dimensionalities: scalars (0-dimensional tensors), vectors (1-dimensional tensors), matrices (2-dimensional tensors), and higher-dimensional arrays. Tensors are a basic data structure that neural networks and other machine learning models use to store and process information.

68. What does Deep Learning’s model capacity mean?

Ans:

“Model capacity” in the context of deep learning describes a neural network’s power to discover and convey intricate patterns and correlations found in the data. In essence, it’s a gauge of a model’s ability to fit a broad range of functions, taking into account both the noise or random fluctuations seen in the training set and the underlying patterns in the data.

69. What is a computational graph?

Ans:

A computational graph is frequently used in the context of deep learning to describe the forward and backward passes made during training. The nodes in the graph stand for the operations, and the edges, or tensors, represent the data flow between these operations. Generally, each node in the graph represents a mathematical operation, like applying an activation function or multiplying a matrix.

70. What are key components of a computational graph?

Ans:

- Nodes: The operations or computations are represented by nodes in the computational graph. Multiplication, addition, activation functions (like ReLU), and other mathematical operations are a few examples of nodes.

- Edges: The graph’s edges show how data moves between nodes. They carry tensors, which are multidimensional arrays holding the input and output values of the operations.

- Forward pass: Input data is supplied to the input nodes during the forward pass, and calculations are carried out layer by layer as they propagate through the graph to produce the result.

71. Why does Deep Learning use the Leaky ReLU function?

Ans:

Leaky ReLU is primarily motivated by the need to address the “dying ReLU” issue seen in networks that use standard ReLU activations. When a ReLU unit receives a large gradient update during training, its weights can shift so that the unit outputs zero for all inputs. Once a unit is inactive in this way, its gradient is also zero, so it stops contributing to learning and its weights cease updating.

72. Explain the reasons for leaky ReLU function use in deep learning.

Ans:

Leaky ReLU is employed in deep learning for the following reasons:

- Reducing the Dying ReLU Issue: Leaky ReLU’s non-zero slope for negative inputs keeps units from becoming permanently inactive during training.

- Negative Inputs with Non-Zero Output: Leaky ReLU generates a non-zero output for negative inputs, in contrast to normal ReLU, which outputs zero for all negative inputs.

- Prevent Dead Neurons: Leaky ReLU’s non-zero slope keeps neurons from going totally dormant, which is crucial for the network’s expressive capacity.
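The difference between the two activations is easiest to see in their gradients. The sketch below is a scalar illustration; the slope `alpha=0.01` is a commonly used default and is an assumption here, not a value taken from the text.

```python
def relu(x):
    return max(0.0, x)


def relu_grad(x):
    # Zero gradient for all negative inputs: a unit stuck here cannot recover
    return 1.0 if x > 0 else 0.0


def leaky_relu(x, alpha=0.01):
    # Small non-zero slope (alpha) for negative inputs
    return x if x > 0 else alpha * x


def leaky_relu_grad(x, alpha=0.01):
    # Gradient is alpha instead of zero, so weights keep updating
    return 1.0 if x > 0 else alpha


for x in (-2.0, 3.0):
    print(x, relu(x), relu_grad(x), leaky_relu(x), leaky_relu_grad(x))
```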

73. How does an LSTM network work?

Ans:

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) developed to address the vanishing gradient problem, which is a major challenge in training regular RNNs. LSTMs are very effective at handling data sequences, making them useful for tasks such as natural language processing, speech recognition, and time series prediction. Each LSTM unit maintains a memory cell controlled by gates: a forget gate decides what to discard from the cell state, an input gate decides what new information to store, and an output gate decides what to expose as the hidden state. These memory cells and gating mechanisms allow LSTMs to capture and retain long-term dependencies in sequential data.
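One time step of an LSTM cell can be sketched as follows. This is a scalar toy version for illustration only: real LSTMs use weight matrices and vectors, and the `lstm_step` function, the parameter dictionary layout, and the numeric values are all assumptions made for this example.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell.

    params maps a gate name to a (w_x, w_h, bias) tuple.
    """

    def gate(name, activation):
        w_x, w_h, bias = params[name]
        return activation(w_x * x + w_h * h_prev + bias)

    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    i = gate("input", sigmoid)         # how much new information to write
    g = gate("candidate", math.tanh)   # the new information itself
    o = gate("output", sigmoid)        # how much of the cell state to expose

    c = f * c_prev + i * g             # updated cell state (the "memory")
    h = o * math.tanh(c)               # updated hidden state
    return h, c


# Hypothetical parameters, identical for every gate to keep the example short
params = {name: (0.5, 0.5, 0.0)
          for name in ("forget", "input", "candidate", "output")}

h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

Because the cell state is updated additively (`f * c_prev + i * g`), a forget gate close to 1 lets information pass through many steps largely unchanged, which is what helps gradients survive over long sequences.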

74. What are a few of Deep Learning’s drawbacks?

Ans:

Deep learning has several difficulties and disadvantages even though it has shown amazing results in many different fields. Some noteworthy disadvantages of deep learning are as follows:

- Data Dependency

- Computational Resources

- Interpretability and Explainability

- Need for Large Datasets

- Training Time

75. What do bagging and boosting mean in Deep Learning?

Ans:

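Ensemble learning techniques like bagging and boosting are widely employed in machine learning, including deep learning. By combining the predictions of multiple models, these strategies aim to improve model performance and robustness. While both aim to create a powerful ensemble model, their methodologies differ. Bagging trains models independently on bootstrap samples of the training data and averages their predictions, which mainly reduces variance. Boosting trains models sequentially, with each new model focusing on the errors of the ones before it, which mainly reduces bias.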
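The contrast can be sketched with a deliberately simple base learner. In the example below a one-split regression stump stands in for the individual models (an assumption made to keep the code short; in deep learning the base models would be neural networks), and the function names and toy dataset are hypothetical.

```python
import random


def fit_stump(xs, ys):
    """Fit a one-split regression stump and return it as a predict function."""
    best = None
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        if not left or not right:
            continue
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or err < best[0]:
            best = (err, threshold, lm, rm)
    if best is None:  # no valid split: fall back to predicting the mean
        mean = sum(ys) / len(ys)
        return lambda x: mean
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def bagging(xs, ys, n_models=10, seed=0):
    """Train each model independently on a bootstrap sample, then average."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(len(xs)) for _ in xs]  # sample with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: sum(m(x) for m in models) / len(models)


def boosting(xs, ys, n_models=10, lr=0.5):
    """Train models sequentially, each one fitting the remaining residuals."""
    models, residuals = [], list(ys)
    for _ in range(n_models):
        model = fit_stump(xs, residuals)
        models.append(model)
        residuals = [r - lr * model(x) for x, r in zip(xs, residuals)]
    return lambda x: sum(lr * m(x) for m in models)


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [i / 10 for i in range(20)]
ys = [x * x for x in xs]

single = fit_stump(xs, ys)
print("single stump:", mse(single, xs, ys))
print("bagging:     ", mse(bagging(xs, ys), xs, ys))
print("boosting:    ", mse(boosting(xs, ys), xs, ys))
```

The boosting variant shown here fits residuals of a squared-error loss, which is one common form of boosting rather than the only one.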

76. Why is gradient descent with mini-batch so popular?

Ans:

Mini-batch gradient descent is a popular deep learning optimization approach for several reasons:

- Computational Efficiency: Processing the entire training dataset for every update (batch gradient descent) is computationally expensive. Mini-batches give more frequent updates at a much lower cost per step.

- Memory Efficiency: For large datasets, batch gradient descent may not be practical because it requires keeping the whole dataset in memory.

- Parallelization: Modern computing architectures such as GPUs can process the examples in a mini-batch in parallel.
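A minimal sketch of the update loop, assuming a one-variable linear model and a squared-error loss. The function name `minibatch_gd`, the hyperparameters, and the synthetic data are illustrative choices, not values from the text.

```python
import random


def minibatch_gd(xs, ys, batch_size=8, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w * x + b with mini-batch gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # new random batches every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = grad_b = 0.0
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                grad_w += 2 * error * xs[i]
                grad_b += 2 * error
            # One parameter update per mini-batch, using the batch average
            w -= lr * grad_w / len(batch)
            b -= lr * grad_b / len(batch)
    return w, b


# Noise-free synthetic data generated from y = 2x + 1
xs = [i / 20 for i in range(40)]
ys = [2.0 * x + 1.0 for x in xs]

w, b = minibatch_gd(xs, ys)
print(w, b)
```

Setting `batch_size=len(xs)` turns this into batch gradient descent, and `batch_size=1` gives stochastic gradient descent; mini-batching sits between the two.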

77. What are the benefits of using cross-entropy loss over mean squared error in classification tasks?

Ans:

Cross-entropy loss, also referred to as log loss, is a common loss function for classification problems, especially when neural networks are involved. Even though mean squared error is a legitimate loss function, cross-entropy loss offers several benefits in classification applications. Here are a few noteworthy advantages:

- Appropriateness for Probability Distributions

- Promotes Confidence Adjustment

- Prevents Vanishing Gradients

- Probabilistic Error Sensitivity

- Robust Management of Unbalanced Classes
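The vanishing-gradient point can be checked numerically. The sketch below assumes a single sigmoid output unit and compares the gradient of each loss with respect to the logit `z`; the function names are introduced here for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cross_entropy_grad(z, y):
    """dL/dz for L = -[y log p + (1 - y) log(1 - p)] with p = sigmoid(z)."""
    return sigmoid(z) - y


def mse_grad(z, y):
    """dL/dz for L = (p - y)^2 with p = sigmoid(z)."""
    p = sigmoid(z)
    return 2.0 * (p - y) * p * (1.0 - p)


# A confidently wrong prediction: the true label is 1 but the logit is very negative
z, y = -8.0, 1.0
print("cross-entropy gradient:", cross_entropy_grad(z, y))
print("MSE gradient:          ", mse_grad(z, y))
```

With cross-entropy the gradient stays close to -1, so a badly wrong prediction is corrected quickly. With mean squared error the extra factor `p * (1 - p)` shrinks the gradient toward zero exactly when the sigmoid saturates.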

78. What makes generative adversarial networks (GANs) so popular?

Ans:

Generative Adversarial Networks (GANs) have gained enormous popularity in the field of deep learning because they can generate realistic synthetic data, such as images, by training a generator network against a discriminator network. While GANs have achieved tremendous success, they also pose challenges, such as mode collapse, training instability, and the generation of biased or inappropriate content. Ongoing research seeks to overcome these issues and improve the capabilities of generative adversarial networks.

79. How is the transformer architecture better than RNNs?

Ans:

In deep learning, the transformer architecture has significant advantages over recurrent neural networks (RNNs):

- Parallelization: RNNs process a sequence one step at a time, whereas transformers process all positions at once, which allows far more efficient parallelization during training.

- Long-Range Dependencies: Transformers are designed to capture long-range dependencies in sequences more effectively than regular RNNs, because self-attention connects any two positions directly.

- Reduced Vanishing and Exploding Gradient Problems: During backpropagation through time, RNNs can suffer from vanishing or exploding gradients, which impede learning of long-range dependencies. Transformers avoid this recurrence.

80. Describe a scenario where you would use a custom loss function and explain the implementation process.

Ans:

When the standard loss functions are unable to adequately represent the unique needs or constraints of a given task, using a custom loss function may be advantageous. Dealing with imbalanced datasets, where some classes have noticeably fewer examples than others, is one such use case. In these situations, developing a custom loss function that emphasizes the minority classes may be helpful.
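One possible implementation for the imbalanced-dataset scenario is a class-weighted cross-entropy. The sketch below is framework-free and makes several assumptions: the weighting scheme (inverse class frequency), the function names, and the toy predictions are all illustrative.

```python
import math


def inverse_frequency_weights(labels, num_classes):
    """Give rarer classes larger weights: n_samples / (n_classes * class_count)."""
    counts = [labels.count(c) for c in range(num_classes)]
    return [len(labels) / (num_classes * n) if n else 0.0 for n in counts]


def weighted_cross_entropy(probs, labels, class_weights):
    """Mean of -w[y] * log(p[y]) over all examples.

    probs[i] is the predicted probability distribution for example i.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        total += -class_weights[y] * math.log(max(p[y], 1e-12))  # clamp avoids log(0)
    return total / len(labels)


labels = [0, 0, 0, 1]  # class 1 is the minority
weights = inverse_frequency_weights(labels, num_classes=2)

# Same kind of mistake, made once on the minority and once on a majority example
wrong_on_minority = [[0.9, 0.1], [0.9, 0.1], [0.9, 0.1], [0.9, 0.1]]
wrong_on_majority = [[0.1, 0.9], [0.9, 0.1], [0.9, 0.1], [0.1, 0.9]]

print(weights)
print(weighted_cross_entropy(wrong_on_minority, labels, weights))
print(weighted_cross_entropy(wrong_on_majority, labels, weights))
```

The general process is the same in any framework: define the loss as a function of predictions and targets, make sure it is differentiable, and pass it to the training loop in place of the standard loss.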

81. Discuss the concept of bias-variance trade-off in terms of neural network weights and model complexity.

Ans:

A fundamental notion in machine learning, including neural networks, is the bias-variance trade-off. It relates to the balance of bias and variance in a model’s performance and how it affects the model’s capacity to generalise to unseen data. A network with too few weights is too simple: it has high bias and underfits. A network with many or very large weights is highly flexible: it has high variance and tends to overfit the training set. The trade-off therefore entails choosing a level of complexity that balances model simplicity against the capacity to capture underlying patterns in the data. In neural network training, regularisation and accurate model evaluation are critical components of attaining this equilibrium.

82. List a few examples of unsupervised learning algorithms.

Ans:

In order to find patterns and structures in data without explicit labels, unsupervised learning algorithms are utilized. A few instances of unsupervised learning algorithms are as follows:

- K-Means Clustering

- Hierarchical Clustering

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

- PCA (Principal Component Analysis)

- t-SNE (t-Distributed Stochastic Neighbor Embedding)

- Autoencoders

83. How does the concept of feature map concatenation affect model performance in networks like DenseNet?

Ans:

Feature map concatenation is a key concept in DenseNet (Densely Connected Convolutional Networks), and it has a significant impact on the model’s performance. DenseNet introduces the idea of densely connecting layers, where each layer receives as input the concatenated feature maps of all previous layers, not just the preceding one. This concatenation improves information flow, gradient propagation, and feature reuse, resulting in better model performance. While it increases memory and computational cost, DenseNet’s advantages in accuracy and feature learning make it a powerful architecture for a variety of computer vision tasks.

84. Is it possible to reset the weights of a network to zero?

Ans:

The weights of a neural network can be reset to zero. It is crucial to note, however, that setting all weights to zero is not a recommended practice in neural network training. When all weights start at the same value, even zero, every neuron in a layer computes the same output and receives the same gradient, so the neurons stay identical and the symmetry is never broken during training. This can lead to poor convergence and poor performance.
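The symmetry problem can be demonstrated on a tiny network. The sketch below assumes one scalar input, two tanh hidden units, a linear output, and a squared-error loss; the function name and all numeric values are hypothetical.

```python
import math


def hidden_weight_grads(w_hidden, w_out, x, y):
    """Gradient of (pred - y)^2 with respect to each hidden weight.

    Network: h_j = tanh(w_hidden[j] * x), pred = sum_j w_out[j] * h_j
    """
    h = [math.tanh(w * x) for w in w_hidden]
    pred = sum(v * hj for v, hj in zip(w_out, h))
    d_pred = 2.0 * (pred - y)
    # Chain rule through the output weight and the tanh derivative (1 - h^2)
    return [d_pred * v * (1.0 - hj ** 2) * x for v, hj in zip(w_out, h)]


same = hidden_weight_grads([0.5, 0.5], [0.5, 0.5], x=1.0, y=2.0)
zeros = hidden_weight_grads([0.0, 0.0], [0.0, 0.0], x=1.0, y=2.0)
varied = hidden_weight_grads([0.5, -0.3], [0.2, 0.7], x=1.0, y=2.0)

print("identical init:", same)    # both units get the same gradient
print("zero init:     ", zeros)   # hidden weights get no gradient at all
print("random-like:   ", varied)  # units receive different gradients
```

With identical starting values the two hidden units receive identical updates and can never diverge from each other, which is why small random initial values are used instead.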

85. Explain how you would create a CNN to handle photos of varied sizes as input.

Ans:

Using photographs of varying sizes as input to a Convolutional Neural Network (CNN) requires addressing the issue of variable input size. Here are some approaches for building a CNN that can handle photos of various sizes:

- Resize Images

- Padding

- Dynamic Input Shape

- Global Average Pooling (GAP)

- Multiple Input Sizes

These strategies can be used individually or in combination based on the specific requirements of your task and dataset.
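Of the strategies listed, global average pooling is the easiest to show in isolation. The sketch below uses nested Python lists in place of tensors, and the function name and sample feature maps are assumptions made for illustration.

```python
def global_average_pool(feature_maps):
    """Reduce each channel (a 2D grid of any height and width) to one number.

    feature_maps is a list of channels; each channel is a list of rows.
    """
    pooled = []
    for channel in feature_maps:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# Two channels of size 2x2
small = [[[1.0, 3.0], [5.0, 7.0]],
         [[0.0, 0.0], [2.0, 2.0]]]

# Two channels of size 4x5
large = [[[1.0] * 5 for _ in range(4)],
         [[2.0] * 5 for _ in range(4)]]

print(global_average_pool(small))
print(global_average_pool(large))
```

Both inputs produce a vector with one entry per channel, so the fully connected layers that follow always see the same input length regardless of the image size.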

86. Distinguish between a single-layer and a multi-layer perceptron.

Ans:

| Aspect | Single-Layer Perceptron (SLP) | Multi-Layer Perceptron (MLP) |
| --- | --- | --- |
| Architecture | A single-layer perceptron is made up of only one layer of artificial neurons (sometimes referred to as perceptrons or nodes). | A multi-layer perceptron is made up of several layers of artificial neurons, which include an input layer, one or more hidden layers, and an output layer. |
| Functionality | SLPs are typically used to solve binary classification problems. Based on the input attributes, they can make simple linear decisions. | MLPs are adaptable and can be utilized for a variety of applications such as classification, regression, and pattern recognition. |
| Activation Function | As the activation function in each neuron of the output layer, a step function (or a comparable threshold function) is typically utilized. | Non-linear activation functions such as the sigmoid, hyperbolic tangent (tanh), and rectified linear unit (ReLU) are often utilized in hidden layer neurons. |

87. Explain autoencoders.

Ans:

Autoencoders are neural network architectures that are important for unsupervised learning, especially in fields like data compression and feature learning. Their main goal is to learn a compact and effective representation of the input data: an encoder compresses the input into a lower-dimensional code, and a decoder reconstructs the input from that code. Autoencoders have demonstrated efficacy in reducing dimensionality, preserving significant features, and supporting diverse tasks such as generative modeling, noise reduction, and data compression.

88. List a few examples of supervised learning algorithms.

Ans:

A few popular deep learning architectures used for supervised learning are as follows:

- Gated Recurrent Unit (GRU) networks

- Autoencoders (typically unsupervised, but usable as feature extractors within supervised pipelines)

- Feedforward Neural Networks (FNN)

- Convolutional Neural Networks (CNNs)

- Siamese Networks

89. What is the swish function in deep learning?

Ans:

Swish is an activation function used in deep neural networks, defined as swish(x) = x · sigmoid(βx), where β is a constant or a learnable parameter. It was proposed by Google researchers. In a number of deep learning applications, such as image classification and natural language processing, the Swish activation has demonstrated encouraging performance. However, its effectiveness can depend on the particulars of the task and the dataset, so it is worthwhile to test several activation functions to see which works best in a given situation.
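A scalar sketch of the function, assuming the common form x · sigmoid(βx) with β = 1 as the default. The helper names are introduced here for illustration.

```python
import math


def sigmoid(z):
    # Numerically stable form that avoids overflow for large negative z
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(x, beta=1.0):
    """Swish activation: x * sigmoid(beta * x)."""
    return x * sigmoid(beta * x)


for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(x, swish(x))
```

Unlike ReLU, the function is smooth and dips slightly below zero for negative inputs before approaching zero, while for large positive inputs it behaves almost like the identity.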

90. Name some of the weight initialization methods.

Ans:

- Random Initialization: To disrupt symmetry, initialize weights with small random values. This is a typical strategy that helps neurons avoid learning the same characteristics throughout training.

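- Xavier/Glorot Initialization: This method draws weights from a distribution with a mean of zero and a variance scaled to the layer size, commonly 2 / (fan_in + fan_out), where fan_in and fan_out are the numbers of input and output units of the layer. The scaling keeps the variance of activations and gradients roughly constant across layers.

- He Initialization: This is similar to the Xavier/Glorot initialization but uses a variance of 2 / fan_in, which suits ReLU-style activations.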
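The two scaled schemes can be sketched with the standard library alone. The example assumes the normal-distribution variants with variances 2 / (fan_in + fan_out) for Xavier/Glorot and 2 / fan_in for He; the function names and layer sizes are illustrative.

```python
import math
import random


def xavier_normal(fan_in, fan_out, rng):
    """Zero-mean normal weights with variance 2 / (fan_in + fan_out)."""
    std = math.sqrt(2.0 / (fan_in + fan_out))
    return [[rng.gauss(0.0, std) for _ in range(fan_out)] for _ in range(fan_in)]


def he_normal(fan_in, fan_out, rng):
    """Zero-mean normal weights with variance 2 / fan_in."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_out)] for _ in range(fan_in)]


def variance(matrix):
    values = [v for row in matrix for v in row]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


rng = random.Random(0)
fan_in, fan_out = 200, 100

w_xavier = xavier_normal(fan_in, fan_out, rng)
w_he = he_normal(fan_in, fan_out, rng)

print("Xavier target:", 2.0 / (fan_in + fan_out), "sample:", variance(w_xavier))
print("He target:    ", 2.0 / fan_in, "sample:", variance(w_he))
```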