- Develop your application and its supporting components using containers.

- The container becomes the unit for distributing and testing your application.

- When you’re ready, deploy your application to your production environment, either as a container or as an orchestrated service. This works the same whether your production environment is a local data centre, a cloud provider, or a hybrid of the two.

Docker overview

Docker is an open platform for developing, shipping and running applications. Docker enables you to isolate your applications from your infrastructure so that you can deliver software faster. With Docker, you can manage your infrastructure the same way you manage your applications. By taking advantage of Docker’s methodology for shipping, testing, and deploying code quickly, you can significantly reduce the delay between writing code and running it in production.

Docker platform: Docker provides the ability to package and run an application in a loosely isolated environment called a container. This isolation and security allow you to run many containers simultaneously on a given host. Containers are lightweight and contain everything needed to run the application, so you don’t need to rely on what is currently installed on the host. You can easily share containers as you work, and be sure that everyone you share with gets a container that works in the same way.

Docker provides tooling and a platform to manage the lifecycle of your containers.

What can I use Docker for?

Fast, consistent delivery of your applications: Docker streamlines the development lifecycle by allowing developers to work in standardised environments using local containers that provide your applications and services. Containers are well suited to continuous integration and continuous delivery (CI/CD) workflows.

Consider the following example scenario: Your developers write code locally and share their work with their colleagues using Docker containers. They use Docker to push their applications to a test environment and run automated and manual tests. When developers find bugs, they can fix them in the development environment and redeploy them to the test environment for testing and verification. When testing is complete, getting the fix to the customer is as simple as pushing the updated image to the production environment.

Responsive deployment and scaling: Docker’s container-based platform allows for highly portable workloads. Docker containers can run on a developer’s local laptop, on physical or virtual machines in a data centre, on cloud providers, or in a mix of environments. Docker’s portability and lightweight nature also make it easy to manage workloads dynamically, scaling applications and services up or tearing them down in near real time as the business requires.

Docker is lightweight and fast. It provides a viable, cost-effective alternative to hypervisor-based virtual machines, so you can use more of your server capacity to achieve your business goals. Docker suits high-density environments as well as small and medium deployments where you need to do more with fewer resources.

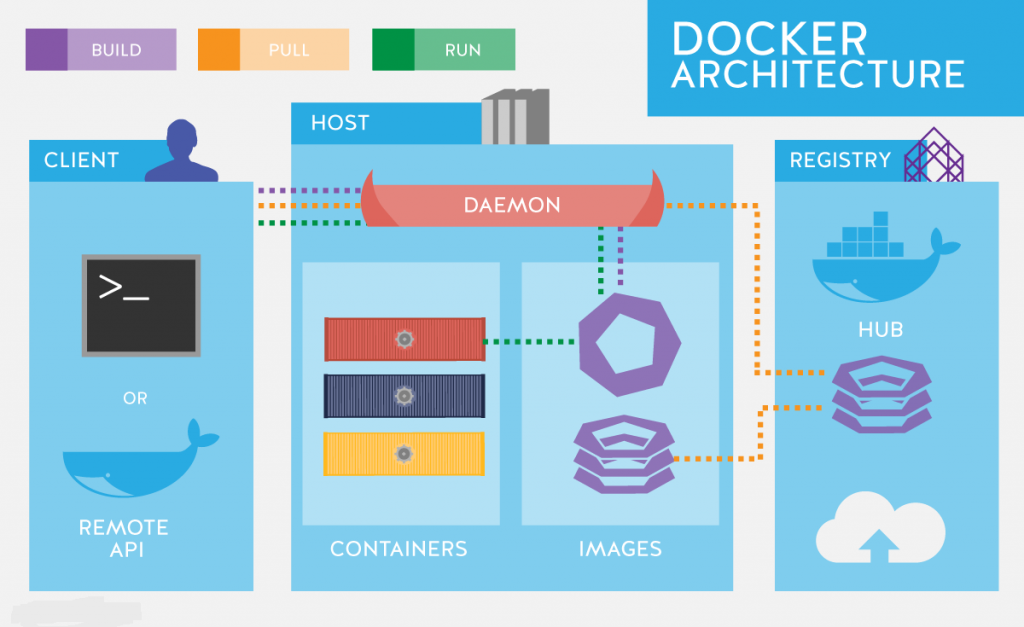

- Docker daemon: The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

- Docker client: The Docker client (docker) is the primary way many Docker users interact with Docker. When you use a command such as docker run, the client sends the command to dockerd, which carries it out. The docker command uses the Docker API. A Docker client can communicate with more than one daemon.

- Docker Desktop: Docker Desktop is an easy-to-install application for your Mac, Windows, or Linux environment that enables you to build and share containerized applications and microservices. Docker Desktop includes the Docker daemon (dockerd), the Docker client (docker), Docker Compose, Docker Content Trust, Kubernetes, and Credential Helper. See the Docker Desktop documentation for more information.

- Docker registries: A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use, and Docker looks for images on Docker Hub by default. You can also run your own private registry. When you use the docker pull or docker run commands, Docker pulls the required images from your configured registry. When you use the docker push command, Docker pushes your image to your configured registry.

- Docker objects: When you use Docker, you are creating and using images, containers, networks, volumes, plugins, and other objects. This section is a brief description of some of those items.
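The registry item above says Docker looks on Docker Hub by default. As a rough illustration of what that default means for image names, the following Python sketch expands a short name such as ubuntu into a registry, repository, and tag. It is a simplified model rather than Docker's own parser: the `normalize` function and `ImageRef` type are hypothetical names, and digest references (name@sha256:...) are deliberately not handled.

```python
from typing import NamedTuple

DEFAULT_REGISTRY = "docker.io"   # Docker Hub
DEFAULT_NAMESPACE = "library"    # namespace used for official images
DEFAULT_TAG = "latest"


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def normalize(name: str) -> ImageRef:
    """Expand a short image name into registry, repository, and tag.

    Simplified sketch: digests (name@sha256:...) are not handled.
    """
    first, sep, rest = name.partition("/")
    # The first path component names a registry only if it looks like a
    # host: it contains a dot or a port, or is exactly "localhost".
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = DEFAULT_REGISTRY, name

    # A tag is whatever follows a colon in the last path component.
    last_slash = remainder.rfind("/")
    colon = remainder.rfind(":")
    if colon > last_slash:
        repository, tag = remainder[:colon], remainder[colon + 1:]
    else:
        repository, tag = remainder, DEFAULT_TAG

    # Single-name repositories on Docker Hub live under "library/".
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = f"{DEFAULT_NAMESPACE}/{repository}"
    return ImageRef(registry, repository, tag)


print(normalize("ubuntu"))
print(normalize("localhost:5000/myapp:1.0"))
```

Under this model, pulling ubuntu and pulling docker.io/library/ubuntu:latest refer to the same image, while a name whose first component looks like a host is fetched from that private registry instead.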

Docker architecture

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers. The Docker client and the daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The client and the daemon communicate using a REST API, over UNIX sockets or a network interface. Another Docker client is Docker Compose, which lets you work with applications consisting of a set of containers.
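To make the client-server split concrete, here is a minimal Python sketch of a client that speaks HTTP to the daemon over a UNIX socket, using only the standard library. It assumes a Linux host where the daemon listens on /var/run/docker.sock and calls the Engine API's /_ping endpoint; the `UnixHTTPConnection` class and `ping_daemon` function are illustrative names, not part of any Docker SDK.

```python
import http.client
import socket
from typing import Optional

# Assumption: the daemon listens on the usual Linux socket path.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" is a placeholder; it only fills the Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def ping_daemon(socket_path: str = DOCKER_SOCKET) -> Optional[str]:
    """Return the body of GET /_ping, or None if the daemon is unreachable."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/_ping")
        return conn.getresponse().read().decode()
    except (OSError, http.client.HTTPException):
        return None
    finally:
        conn.close()


if __name__ == "__main__":
    print(ping_daemon())
```

If a daemon is running and the current user may access the socket, the call returns the daemon's short health reply; otherwise it returns None. The docker CLI does conceptually the same thing for every command, only with richer endpoints.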

Docker Images

An image is a read-only template with instructions for creating a Docker container. Often, an image is based on another image, with some additional customization. For example, you can build an image that is based on the Ubuntu image but also installs the Apache web server and your application, along with the configuration details needed to run your application.

You can create your own images, or you can use images created by others and published in a registry. To build your own image, you create a Dockerfile, which uses a simple syntax to define the steps needed to create the image and run it. Each instruction in a Dockerfile creates a layer in the image. When you change the Dockerfile and rebuild the image, only the layers that have changed are rebuilt. This is part of what makes images so lightweight, small, and fast compared with other virtualization technologies.
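The layer-reuse behaviour described above can be sketched with a toy model in Python. This is not how Docker's builder is implemented: here a layer's identity is simply a hash of its parent layer and the instruction text (real builds also account for the contents of copied files), and the `layer_id` and `build` helpers and the Dockerfile lines are made up for illustration.

```python
import hashlib
from typing import Dict, List, Tuple


def layer_id(parent_id: str, instruction: str) -> str:
    """Derive a layer ID from the parent layer and the instruction text."""
    digest = hashlib.sha256(f"{parent_id}\n{instruction}".encode())
    return digest.hexdigest()[:12]


def build(instructions: List[str],
          cache: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Build an image; return (layer IDs, instructions that were rebuilt)."""
    layers: List[str] = []
    rebuilt: List[str] = []
    parent = ""
    for instruction in instructions:
        current = layer_id(parent, instruction)
        if current not in cache:
            cache[current] = instruction  # stand-in for doing the real work
            rebuilt.append(instruction)
        layers.append(current)
        parent = current
    return layers, rebuilt


dockerfile_v1 = [
    "FROM ubuntu:22.04",
    "RUN apt-get update && apt-get install -y apache2",
    "COPY ./site /var/www/html",
]
# Only the last instruction differs in the second version.
dockerfile_v2 = dockerfile_v1[:2] + ["COPY ./new-site /var/www/html"]

cache: Dict[str, str] = {}
_, first_build = build(dockerfile_v1, cache)
_, second_build = build(dockerfile_v2, cache)
print(first_build)   # every instruction is built the first time
print(second_build)  # only the changed COPY instruction is rebuilt
```

Because each layer ID depends on its parent, changing an early instruction also invalidates every layer after it, which is why frequently changing steps are usually placed near the end of a Dockerfile.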

Containers: A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state. By default, a container is relatively well isolated from other containers and from its host machine. You can control how isolated a container’s network, storage, or other underlying subsystems are from other containers or from the host machine. A container is defined by its image as well as by any configuration options you provide when you create or start it. When a container is removed, any changes to its state that are not stored in persistent storage disappear.

- Standard: Docker created the industry standard for containers, so they can be portable anywhere.

- Lightweight: Containers share the machine’s OS kernel and therefore do not require an OS per application, which drives higher server efficiency and reduces server and licensing costs.

- Secure: Applications are safer in containers, and Docker states that it provides the strongest default isolation capabilities in the industry.

Package Software into Standardised Units for Development, Shipment and Deployment :-

A container is a standard unit of software that packages code and all its dependencies so that applications run quickly and reliably from one computing environment to another. A Docker container image is a lightweight, standalone, executable package of software that contains everything needed to run an application: code, runtime, system tools, system libraries, and settings.

Container images become containers at runtime; in the case of Docker containers, images become containers when they run on Docker Engine. Available for both Linux- and Windows-based applications, containerized software will always run the same, regardless of the infrastructure. Containers isolate software from its environment and ensure that it works uniformly despite differences between environments, for instance between development and staging.

Docker containers running on Docker Engine:

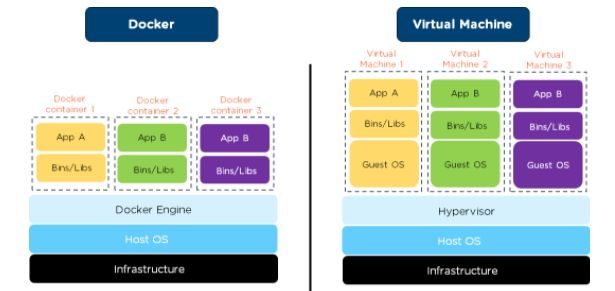

Comparing Containers and Virtual Machines :-

Containers and virtual machines have similar resource isolation and allocation benefits, but function differently because containers virtualize the operating system rather than the hardware. Containers are more portable and efficient.

Containers: Containers are an abstraction at the app layer that packages code and dependencies together. Multiple containers can run on the same machine and share the OS kernel with other containers, each running as an isolated process in user space. Containers take up less space than VMs (container images are typically tens of MB in size), can handle more applications, and require fewer VMs and operating systems.

Virtual machines: Virtual machines (VMs) are an abstraction of physical hardware that turns one server into many servers. The hypervisor allows multiple VMs to run on a single machine. Each VM includes a full copy of an operating system, the application, and the necessary binaries and libraries, which together take up tens of GB. VMs can also be slow to boot.

Container Standards and Industry Leadership :-

The launch of Docker in 2013 jump-started a revolution in application development by democratising software containers. Docker developed a Linux container technology that is portable, flexible, and easy to deploy. Docker open sourced libcontainer and partnered with a worldwide community of contributors to further its development. In June 2015, Docker donated the container image specification and runtime code, now known as runc, to the Open Container Initiative (OCI) to help establish standardisation as the container ecosystem grows and matures.

Following this development, Docker continues to give back with the containerd project, which Docker donated to the Cloud Native Computing Foundation (CNCF) in 2017. containerd is an industry-standard container runtime that leverages runc and was built with an emphasis on simplicity, robustness, and portability. containerd is the core container runtime of Docker Engine.

Conclusion :-

A Docker image is an immutable (unchangeable) file that contains the source code, libraries, dependencies, tools, and other files needed to run an application. Since images are, in a way, just templates, you cannot start or run them directly. What you can do is use that template as a base to build a container. A container is, ultimately, just a running image. When you create a container, Docker adds a writable layer on top of the immutable image, which means you can now modify it.

The base image on which you create a container exists separately and cannot be changed. When you run a containerized environment, you essentially create a read-write copy of that filesystem (the Docker image) inside the container. The added container layer is what allows modifications to this copy while the image itself stays unchanged.

You can build an unlimited number of Docker images from a single base image. Each time you change the initial state of an image and save the resulting state, you create a new template with an additional layer on top of it. Docker images can therefore consist of a series of layers, each differing from, but also originating from, the previous one. Image layers are read-only files, to which a container layer is added once you use the image to start a virtual environment.
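The relationship between read-only image layers and a container's writable layer can be modelled in a few lines of Python with `collections.ChainMap`, which looks keys up through a stack of dictionaries but writes only to the first one. This is only an analogy under simplifying assumptions: real storage drivers work on files and directories rather than dictionaries, and the paths, contents, and the `start_container` helper below are invented for illustration.

```python
from collections import ChainMap

# Read-only image layers. Paths and contents are illustrative only.
base_layer = {"/etc/os-release": "Ubuntu", "/bin/sh": "shell binary"}
app_layer = {"/app/server.py": "print('hello')"}


def start_container(*image_layers: dict) -> ChainMap:
    """Stack an empty writable layer on top of the shared image layers."""
    return ChainMap({}, *image_layers)


# Two containers started from the same image share its layers.
container_a = start_container(app_layer, base_layer)
container_b = start_container(app_layer, base_layer)

# Reads fall through to the image layers.
print(container_a["/etc/os-release"])   # Ubuntu

# Writes land only in the container's own top layer.
container_a["/etc/os-release"] = "patched"
print(container_a["/etc/os-release"])   # patched
print(container_b["/etc/os-release"])   # Ubuntu
print(base_layer["/etc/os-release"])    # Ubuntu
```

Discarding `container_a` throws away its top dictionary and nothing else, which mirrors why changes made inside a container vanish when the container is removed unless they are saved as a new image or written to persistent storage.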

A Docker container is a virtualized run-time environment in which users can isolate applications from the underlying system. These containers are compact, portable units in which you can start an application quickly and easily. A valuable feature is the standardisation of the computing environment running inside the container. Not only does this ensure that your application runs under the same conditions everywhere, but it also makes it easy to share with teammates.

Because containers are self-contained, they provide strong isolation, so a container does not disrupt other running containers or the server that hosts them. Docker claims that these units “offer the strongest isolation capabilities in the industry”. This isolation reduces, though it does not remove, the need to worry about the security of your machine while you develop an application.

Unlike virtual machines (VMs), where virtualization occurs at the hardware level, containers virtualize at the app layer. They can use a single machine, share its kernel, and virtualize the operating system to run isolated processes. This makes containers extremely lightweight, allowing you to conserve valuable resources.