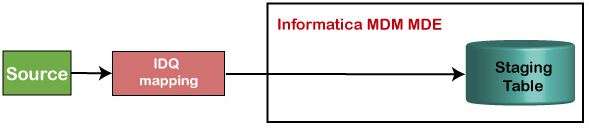

Informatica Data Quality (IDQ) is a robust solution for ensuring the accuracy and reliability of organizational data. With data profiling, cleansing, and deduplication features, IDQ enables organizations to identify and resolve data quality issues effectively. It provides real-time monitoring and reporting capabilities, empowering stakeholders to make informed decisions based on reliable data. IDQ supports data governance initiatives by facilitating the definition of data quality standards and policies. Seamless integration with Informatica’s data integration tools allows efficient data movement and management.

1. What is meant by IDQ?

Ans:

Informatica Data Quality (IDQ) is a comprehensive suite of tools designed to manage and enhance the quality of data within an organization. It encompasses various modules such as profiling, parsing, standardization, matching, and consolidation. These tools empower enterprises to analyze, cleanse, and ensure the accuracy and consistency of their data, ultimately leading to more reliable and effective business insights.

2. What does an independent command task accomplish?

Ans:

- An independent command task within ETL tools like Informatica refers to a task that can be executed autonomously.

- This type of task is often used for administrative or utility purposes, allowing for the execution of system commands or scripts independently of the main workflow.

3. Describe the main elements of IDQ.

Ans:

Informatica Data Quality (IDQ) comprises several integral elements for effective data management. These elements include data profiling, where data characteristics and quality are analyzed comprehensively. Data cleansing is another critical component, ensuring that inconsistencies and errors within the data are rectified. Additionally, data matching is crucial for identifying duplicate or similar records and enhancing data accuracy.

4. What understanding do you have of enterprise data warehouses?

Ans:

Enterprise Data Warehouses serve as centralized repositories that aggregate and integrate data from diverse sources within an organization. They play a pivotal role in supporting reporting, analytics, and decision-making processes. By providing a unified view of data, enterprise data warehouses facilitate a more holistic understanding of an organization’s operations, enabling informed and strategic decision-making.

5. What is the stored procedure transformation used for primarily?

Ans:

- In the realm of ETL tools such as Informatica, a Stored Procedure Transformation is a powerful mechanism used to execute stored procedures directly within a database.

- This transformation enables seamless integration of database operations into ETL workflows, enhancing the efficiency and flexibility of data processing tasks.

6. How can the IDQ SSR findings be posted online or on the Internet?

Ans:

IDQ SSR (Scorecard and Strategy Review) findings can be published online in several ways. One approach is to export SSR reports or dashboards in formats suitable for online platforms, such as PDF, Excel, or HTML. Alternatively, organizations may use data visualization tools or business intelligence platforms to publish and share IDQ SSR findings. These platforms let stakeholders access and review the findings conveniently, supporting informed decision-making and collaboration across the organization.

7. Is it possible to export an IDQ object to the PowerCenter tool?

Ans:

It is possible to export IDQ objects, such as mappings, rules, and profiles, to the PowerCenter tool. This capability facilitates seamless integration between data quality processes in IDQ and data integration workflows in PowerCenter. By exporting IDQ objects to PowerCenter, organizations can leverage both tools synergistically, enhancing data management capabilities and ensuring consistency across data quality and integration initiatives.

8. What does the word “Command Task” mean to you?

Ans:

In the Informatica Data Quality (IDQ) context, a “Command Task” refers to a workflow task that executes specific commands or scripts. These commands or scripts can perform custom actions, such as running shell scripts or invoking system commands, as part of a larger data quality or integration process. Command tasks enable automation and the execution of custom actions within IDQ workflows, enhancing efficiency and flexibility in data management operations.

9. How well-versed in the Surrogate Key are you?

Ans:

A Surrogate Key is a system-generated unique identifier assigned to records in a database table, primarily serving as the primary key. Widely utilized in data warehousing environments, surrogate keys offer a stable reference for records and streamline data management tasks, especially in dynamic or intricate relationships between tables. They alleviate challenges associated with altering natural keys or handling complex data structures, ensuring data integrity and facilitating efficient database operations.
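A minimal Python sketch of the idea, not Informatica code: each distinct natural key value is mapped to a system-generated integer. In PowerCenter this job is commonly done with a Sequence Generator transformation. The function name `assign_surrogate_keys`, the column name `customer_sk`, and the sample rows are assumptions made for illustration.

```python
from itertools import count


def assign_surrogate_keys(rows, natural_key, start=1):
    """Return copies of rows with an integer surrogate key added.

    Rows that share a natural key value receive the same surrogate key.
    """
    next_key = count(start)
    key_map = {}  # natural key value -> surrogate key
    keyed_rows = []
    for row in rows:
        natural_value = row[natural_key]
        if natural_value not in key_map:
            key_map[natural_value] = next(next_key)
        keyed_rows.append({"customer_sk": key_map[natural_value], **row})
    return keyed_rows


# Hypothetical sample data: the email acts as the natural key.
customers = [
    {"email": "ann@example.com", "city": "Pune"},
    {"email": "bob@example.com", "city": "Delhi"},
    {"email": "ann@example.com", "city": "Mumbai"},
]
keyed = assign_surrogate_keys(customers, natural_key="email")
```

Because the surrogate key does not depend on the email itself, the email can later change without breaking references from other tables.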

10. What is the doctor’s IDQ address?

Ans:

The term “doctor’s IDQ address” lacks specific context within Informatica Data Quality (IDQ) terminology. Without further clarification and context, it is difficult to provide a precise explanation or interpretation of this term. It may be a garbled reference to AddressDoctor, the address validation engine behind the Address Validator transformation in IDQ, or it may refer to a specific data quality issue, data governance aspect, or an entity within a healthcare-related data quality initiative. Clarification or additional information is necessary to ascertain the term’s meaning in the given context.

11. What does the phrase “Worklet” mean?

Ans:

- In Informatica, a “worklet” is an object that groups a set of workflow tasks into a modular, reusable unit of work within a broader workflow.

- Analogous to a subroutine or a smaller functional component, worklets are designed to perform specific tasks or operations.

12. What is the primary function of Informatica’s target designers?

Ans:

Informatica’s Target Designers are instrumental components within the Informatica suite, facilitating the design of target data structures for ETL processes. These designers provide intuitive interfaces and tools that empower developers to define the layout, schema, and characteristics of the target data, ensuring alignment with business requirements and compatibility with downstream systems.

13. What distinguishes dynamic cache from static cache, please?

Ans:

| Aspect | Dynamic Cache | Static Cache |
| --- | --- | --- |
| Adaptability | Adapts to changes in data during the session | Remains constant throughout the session |
| Update Mechanism | Dynamically updates its content as data evolves | Maintains a fixed set of data, no updates during session |
| Use Cases | Suitable for scenarios with changing data | Appropriate when data remains constant during session |
| Flexibility | Provides flexibility for evolving data scenarios | Offers stability for consistent data requirements |

14. Define the term “transformation” in IDQ.

Ans:

- In Informatica Data Quality (IDQ), a transformation refers to a process or operation applied to data during the ETL (Extract, Transform, Load) process.

- Transformations in IDQ can include tasks such as data cleansing, standardization, enrichment, and validation, aiming to improve the quality and integrity of the data.

15. What are the key distinctions between static and dynamic lookup caches?

Ans:

Static Lookup Cache: Contains a fixed set of data loaded at the beginning of the session and remains unchanged throughout.

Dynamic Lookup Cache: Allows updates during the session, adapting to changes in the data source. It dynamically reflects modifications, providing flexibility in handling evolving data.
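The difference can be sketched in plain Python, with a dictionary standing in for the lookup cache. This is an illustration under assumed names (`load_with_static_cache`, `load_with_dynamic_cache`, and the sample rows are hypothetical), not how the Integration Service is implemented.

```python
def load_with_static_cache(target_rows, incoming, key):
    """Static cache: built once from the target and never modified."""
    cache = {row[key]: row for row in target_rows}
    decisions = []
    for row in incoming:
        decisions.append("update" if row[key] in cache else "insert")
    return decisions


def load_with_dynamic_cache(target_rows, incoming, key):
    """Dynamic cache: each processed row is written back into the cache."""
    cache = {row[key]: row for row in target_rows}
    decisions = []
    for row in incoming:
        decisions.append("update" if row[key] in cache else "insert")
        cache[row[key]] = row  # keep the cache in step with the target
    return decisions


target = [{"id": 1, "name": "Ann"}]
# The same new key (id 2) arrives twice within one run.
incoming = [{"id": 2, "name": "Bob"}, {"id": 2, "name": "Robert"}]

static_result = load_with_static_cache(target, incoming, "id")
dynamic_result = load_with_dynamic_cache(target, incoming, "id")
```

With the static cache, both incoming rows look new, so the second one would be inserted as a duplicate. With the dynamic cache, the second row is recognized as an update to the row inserted a moment earlier.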

16. What is the update strategy?

Ans:

The update strategy is the approach or methodology to modify existing data in a database or warehouse. It outlines the process for updating records, including how changes are identified, validated, and applied to the database. Common update strategies include incremental updates, where only new or modified data is processed, and complete updates, which involve refreshing the entire dataset. The choice of update strategy depends on factors such as data volume, frequency of updates, and business requirements.

17. Briefly outline the various alternatives in the updating approach.

Ans:

Insert Else Update: Inserts new records; if they already exist, update the existing records.

Update Else Insert: Updates existing records; if not found, inserts new records.

Update Only: Updates existing records, doesn’t insert new records.

Insert Only: Inserts new records, doesn’t update existing ones.

18. What does the Informatica word “mapping parameter file” mean?

Ans:

- The “mapping parameter file” in Informatica refers to an external file containing parameter values that can be used to configure mappings at runtime dynamically.

- This file allows developers to define parameterized values outside the mapping definition, such as connection strings, file paths, or variable values.
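As a rough illustration, the sketch below reads a small parameter file with Python's standard `configparser`. The section heading follows the PowerCenter `[folder.WF:workflow.ST:session]` convention, but the folder, workflow, session, and parameter names are all made up for this example, and Informatica itself does not use Python to read these files.

```python
import configparser

# Hypothetical parameter file contents; every name below is an assumption.
PARAM_FILE_TEXT = """
[Sales.WF:wf_daily_load.ST:s_m_load_orders]
$$LoadDate=2024-01-31
$$Region=EMEA
$InputFile_Orders=/data/in/orders.csv
"""

# Only "=" separates names from values, because section names contain ":".
parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
parser.optionxform = str  # keep parameter names case-sensitive
parser.read_string(PARAM_FILE_TEXT)

section = "Sales.WF:wf_daily_load.ST:s_m_load_orders"
params = dict(parser[section])
```

Changing `$$LoadDate` in the file changes what the mapping processes on the next run, without editing the mapping itself.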

19. List the many sorts of loadings possible in Informatica.

Ans:

Full Load: Loads all records from source to target.

Incremental Load: Loads only new or changed records.

Bulk Load: Transfers data in large volumes optimized for performance.
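An incremental load is often driven by a "watermark", the timestamp of the last successful load. The following Python sketch shows the idea; the function name `incremental_extract`, the `updated_at` column, and the sample rows are assumptions for illustration only.

```python
from datetime import datetime


def incremental_extract(source_rows, last_loaded_at):
    """Return rows modified after the watermark, plus the new watermark."""
    changed = [row for row in source_rows if row["updated_at"] > last_loaded_at]
    # If nothing changed, the watermark stays where it was.
    new_watermark = max(
        (row["updated_at"] for row in changed), default=last_loaded_at
    )
    return changed, new_watermark


source = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 5)},
    {"id": 3, "updated_at": datetime(2024, 1, 9)},
]
delta, watermark = incremental_extract(source, datetime(2024, 1, 3))
```

A full load would simply process every row in `source`; the incremental version touches only rows 2 and 3 and records the new watermark for the next run.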

20. Define the functionality of the workflow monitor’s STOP and ABORT options.

Ans:

The STOP option in the workflow monitor facilitates the graceful termination of ongoing tasks, allowing them to complete before halting further execution. This ensures data integrity and minimizes disruptions. On the other hand, the ABORT option forcefully terminates workflow execution immediately, disregarding ongoing tasks, which may lead to data inconsistencies if not carefully managed. These options provide flexibility in managing workflow execution based on the urgency of interruptions and the importance of completing ongoing tasks.

21. List the tools accessible in workflow management.

Ans:

Workflow management tools may vary depending on the software or platform used. However, standard tools accessible in workflow management include:

- Task schedulers (e.g., cron, Windows Task Scheduler)

- Workflow automation platforms (e.g., Apache Airflow, IBM DataStage)

- Enterprise job scheduling software (e.g., Control-M, Autosys)

- Business process management (BPM) software (e.g., IBM BPM, Pega)

- ETL (Extract, Transform, Load) tools with workflow capabilities

22. What is a predefined event?

Ans:

A predefined event refers to an event that is already established within a system or software application. These events are defined in advance by the system developers and are typically triggered by specific conditions or actions without user intervention. Predefined events often serve common purposes within the system, such as notifying users of task completion, detecting errors, or responding to scheduled time-based triggers. They follow a predetermined set of rules or criteria set by the system and are standardized across all software instances.

23. List the types of dimensions available in Informatica IDQ.

Ans:

In Informatica IDQ, the types of dimensions available are:

- Junk Dimensions

- Conformed Dimensions

- Slowly Changing Dimensions (SCD)

- Degenerate Dimensions

- Role-Playing Dimensions

24. Define what you mean by “workflow”.

Ans:

A workflow refers to a structured sequence of interconnected tasks or activities executed in a predetermined order to achieve a specific objective. In the context of data integration or business processes, workflows define the logical flow of operations, including data extraction, transformation, and loading, orchestrating the entire process from start to finish. Workflows play a pivotal role in automating and streamlining complex processes, ensuring efficiency and consistency in task execution.

25. Where can we locate the Throughput option in Informatica?

Ans:

In Informatica, throughput is a run-time statistic rather than a session setting, so it is found in the Workflow Monitor, not the Workflow Manager. Right-click the session in the Workflow Monitor, choose Get Run Properties, and open the Source/Target Statistics section, which reports throughput for each source and target in rows per second and bytes per second. These figures help identify whether the source side or the target side of a session is the bottleneck.

26. Describe the user-defined event.

Ans:

The user or application developer creates a user-defined event to meet specific business requirements or custom workflows. Unlike predefined events, users can define the conditions or actions that trigger these events based on their unique needs. User-defined events can be tailored to respond to specific data inputs, system states, or user interactions. They offer greater customization and adaptability, enabling users to design event-driven processes that align precisely with their organization’s goals and objectives.

27. Explain how to update a record in the table without using the Update technique.

Ans:

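- To update a record in a table without using the Update Strategy transformation, define the key column on the target and set the session property “Treat source rows as” to Update, with the target option “Update as Update” (or “Update else Insert” when missing rows should also be inserted).

- With “Update else Insert”, an existing record is changed and a new one is inserted if necessary. As an alternative, use a Lookup transformation to find existing records and pass only the new ones through to be inserted.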

28. What are Informatica sessions and batches?

Ans:

Session: In Informatica, a session is a set of instructions for the ETL server to move data from source to target. It includes information on mapping, workflow, and session properties.

Batch: A batch is a collection of sessions that are grouped for execution. It allows multiple ETL processes to be executed as a single unit.

29. Give an overview of Join Analysis profiling.

Ans:

Join Analysis profiling is a data profiling technique used to analyze relationships between tables in a database by examining join conditions and cardinality. It provides insights into how tables are connected and the potential impact of join operations on data quality and performance. Join Analysis profiling helps identify common join keys, assess join selectivity, and detect possible issues such as missing or incorrect join conditions. By understanding the join relationships within a database, organizations can optimize query performance, improve data integration processes, and ensure data accuracy.

30. How can we enhance the performance of Informatica’s aggregator transformation?

Ans:

- Use sorted input data to improve aggregator performance.

- Group data at the source, reducing the amount of data processed by the aggregator.

- Use incremental aggregation for scenarios where data changes are small.
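The benefit of sorted input can be illustrated in Python: when rows arrive already ordered by the group key, each group can be finished and emitted as soon as the key changes, so only one group needs to be held in memory. This is a sketch of the principle with assumed names (`aggregate_sorted`, the `sales` rows), not the Aggregator transformation itself.

```python
from itertools import groupby
from operator import itemgetter


def aggregate_sorted(rows, group_key, value_key):
    """Sum value_key per group, assuming rows are sorted by group_key.

    groupby only merges adjacent rows, so unsorted input would yield
    the same key more than once.
    """
    for key, group in groupby(rows, key=itemgetter(group_key)):
        yield key, sum(row[value_key] for row in group)


# Rows are already sorted by region, as they would be after a sorter.
sales = [
    {"region": "East", "amount": 100},
    {"region": "East", "amount": 50},
    {"region": "West", "amount": 70},
]
totals = list(aggregate_sorted(sales, "region", "amount"))
```

Without the sort guarantee, an aggregator has to cache every group until the last row has been read, which is where the memory and disk cost comes from.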

31. Explain the distinction between data integration and power center integration services.

Ans:

Data Integration Services (DIS): Part of the Informatica Intelligent Data Platform, DIS includes various services like metadata management, data profiling, and data quality. It provides a platform-independent environment for data integration tasks.

PowerCenter Integration Services: This is part of Informatica PowerCenter, a comprehensive ETL tool. PowerCenter Integration Services manages the execution of workflows designed in PowerCenter, handling tasks like data extraction, transformation, and loading.

32. What exactly is meant by the target load order in Informatica IDQ?

Ans:

- Target Load Order in Informatica Data Quality (IDQ) refers to the sequence in which data is loaded into the targets of a mapping.

- It specifies the order in which records should be processed and loaded based on certain criteria defined by the user or business requirements.

33. Explain about a Data Transformation Manager.

Ans:

A Data Transformation Manager (DTM) is a vital component of data integration and ETL (Extract, Transform, Load) processes, responsible for orchestrating data transformations. It executes predefined rules and logic to modify data extracted from source systems, preparing it for loading into target destinations. The DTM performs diverse transformations like cleansing, aggregating, and enriching data, adhering to specified transformation rules. Furthermore, it manages errors by logging them and applying appropriate error-handling mechanisms.

34. Name four output files that the Informatica server generates while the session is active.

Ans:

Session Log File: Contains detailed information about the session run.

Output Data File: Contains the transformed or loaded data.

Reject Data File: Stores records that do not meet transformation criteria.

Session Statistics File: Provides statistical information about session performance.

35. Describe reusable transformation.

Ans:

A reusable transformation is a transformation object that can be defined once and reused in multiple locations within a data integration or ETL process. It encapsulates a specific set of data manipulation or transformation logic that can be applied to different datasets or data flows without the need to recreate the logic each time. Reusable transformations promote code reusability, maintainability, and consistency across ETL processes, reducing development time and effort.

36. Can you explain the difference between live data and staged data?

Ans:

Live Data: Live data refers to data that is directly accessed from the source system in real-time. It reflects the most current state of the data as it exists in the source system at the time of access. Live data is dynamic and can change frequently as updates occur in the source system.

Staged Data: Staged data, on the other hand, is data that has been extracted from the source system and loaded into a staging area or temporary storage location before further processing. Staging data allows for data cleansing, transformation, and integration processes to be performed on a consistent and controlled dataset.

37. What do you mean by “slowly changing dimensions?”

Ans:

- Slowly Changing Dimensions (SCD) refer to dimensions in a data warehouse whose attribute values change over time, but slowly and irregularly rather than on a fixed schedule.

- SCD types include Type 1 (overwrite existing data), Type 2 (maintain full history by adding new rows), and Type 3 (keep limited history in an extra column, such as the previous value).
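The contrast between Type 1 and Type 2 can be sketched with a list of dictionaries standing in for the dimension table. The column names (`customer_id`, `start_date`, `end_date`, `is_current`) and function names are assumptions chosen for this example, not a prescribed schema.

```python
from datetime import date


def apply_type1(dimension, key, changes):
    """Type 1: overwrite attributes in place, keeping no history."""
    for row in dimension:
        if row["customer_id"] == key:
            row.update(changes)


def apply_type2(dimension, key, changes, effective):
    """Type 2: expire the current row and append a new current row."""
    for row in dimension:
        if row["customer_id"] == key and row["is_current"]:
            row["is_current"] = False
            row["end_date"] = effective
            new_row = {**row, **changes, "start_date": effective,
                       "end_date": None, "is_current": True}
            dimension.append(new_row)
            break  # stop before the loop reaches the appended row


dim_type1 = [{"customer_id": 7, "city": "Pune"}]
apply_type1(dim_type1, 7, {"city": "Delhi"})

dim_type2 = [{"customer_id": 7, "city": "Pune", "start_date": date(2020, 1, 1),
              "end_date": None, "is_current": True}]
apply_type2(dim_type2, 7, {"city": "Delhi"}, date(2024, 6, 1))
```

After the Type 1 change the old city is gone. After the Type 2 change the table holds two rows for customer 7: the expired "Pune" row and the current "Delhi" row.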

38. Define the term “session task”.

Ans:

In the context of ETL (Extract, Transform, Load) or data integration workflows, a session task represents an instance of a session within a workflow. A session is a unit of work in Informatica PowerCenter that defines the ETL process for extracting, transforming, and loading data from source to target. The session task within a workflow specifies the properties and configurations of the session, including source and target connections, mapping details, session parameters, and error-handling settings.

39. Explain the significance of “Reusable Sessions” in Informatica workflows.

Ans:

- Reusable Sessions in Informatica workflows are session tasks that can be reused across multiple workflows.

- By eliminating the need to recreate session tasks for each workflow, reusable sessions reduce redundancy and streamline workflow development.

40. What is the role of “Data Processor Transformation” in Informatica Cloud?

Ans:

The Data Processor Transformation is used to process complex, unstructured, and semi-structured data within data integration workflows. It parses inputs such as XML, JSON, PDF, and spreadsheet files into structured output, and can serialize structured data back into those formats, so that downstream transformations can work with them.

41. Explain what an applet is.

Ans:

An applet is a small application designed to perform a specific function within a larger software environment. In software development, applets provide interactive features or user interfaces within web pages or applications. They are typically written in languages such as Java or JavaScript and can be embedded into web browsers or other applications to enhance functionality. Applets are commonly used for interactive forms, multimedia playback, or dynamic content generation tasks.

42. What sorts of loadings are possible in Informatica?

Ans:

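Normal Load: Rows are written to the target one at a time and recorded in the database log, which is slower but allows recovery if the session fails.

Bulk Load: Utilizes bulk loading techniques that bypass the database log to load data quickly, at the cost of recoverability.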

Incremental Load: Loads only the changed or new data since the last load.

Constraint-Based Load: Uses constraints defined in the mapping to determine how data should be loaded.

43. Define aggregate cache in terms of aggregator transformation.

Ans:

In the context of an aggregator transformation in Informatica PowerCenter, the aggregate cache is a temporary storage area used to store intermediate results during aggregation operations. When the aggregator transformation processes data, it groups rows based on the specified group-by ports and performs aggregate functions such as sum, count, and average on each group. The aggregate cache holds the grouped data and intermediate results until the aggregation is complete, so that each group's values can be accumulated in memory as rows arrive.

44. What sorts of transformations are accessible in Informatica?

Ans:

- Source Qualifier

- Expression

- Filter

- Joiner

- Aggregator

- Router

45. Define the Informatica repository.

Ans:

- The Informatica repository is a metadata repository that stores and manages metadata used by Informatica PowerCenter.

- It includes information about mappings, sessions, workflows, transformations, and connections.

- The repository facilitates version control, sharing of metadata, and centralized management of objects.

46. What is the distinction between a mapplet and a rule?

Ans:

Mapplet: A mapplet is a reusable object in Informatica PowerCenter that contains a set of transformation logic designed to perform specific data manipulation tasks.

Rule: In the context of data quality or data validation, the rule represents a specific condition or criterion used to evaluate data records. Rules define data quality standards or business logic that data must adhere to for validity.

47. What duties does the source qualifier perform?

Ans:

The source qualifier transformation is used in Informatica PowerCenter to represent the source data extracted from a relational database or flat file. It performs various tasks, such as joining data from multiple sources, filtering rows based on specified conditions, and defining the source data types. The source qualifier also generates SQL queries to efficiently retrieve data from the source database. Additionally, it allows developers to specify sorting orders and override default query settings for optimization purposes.

48. What are the different types of lookup caches?

Ans:

Static Cache: The cache is populated at the beginning of the lookup and remains constant throughout the session.

Dynamic Cache: Cache is updated dynamically during the session.

Shared Cache: Multiple transformations share a common cache.

Recache: Clears and repopulates the cache during the session.

49. When is Union Transformation used?

Ans:

The Union transformation in Informatica PowerCenter combines data from multiple pipelines or sources into a single pipeline. It merges rows from two or more source pipelines that share the same port structure, much like a SQL UNION ALL, so duplicate rows are not removed. The Union transformation is typically used when consolidating data from different sources or recombining datasets that were processed in parallel. It does not perform any data transformation or aggregation; its primary purpose is to concatenate rows from various sources.

50. What is the purpose of the “Dynamic Lookup Cache” in Informatica?

Ans:

The Dynamic Lookup Cache in Informatica PowerCenter is a feature of the Lookup transformation that keeps the cache synchronized with the target while the session runs. A static cache is built once and never changes during the session, whereas with a dynamic cache the Integration Service inserts or updates rows in the cache as it processes them. This matters when the lookup source is also the target: a row inserted earlier in the run is found by later lookups, which prevents duplicate inserts and lets the mapping decide correctly between insert and update.

51. Define the term “metadata-driven ETL”.

Ans:

Metadata-driven ETL is an approach where the ETL processes are designed and configured based on metadata specifications. In Informatica, this involves using metadata to define the structure, relationships, and processing logic of data objects. The advantages of metadata-driven ETL in Informatica include improved maintainability, reusability, and adaptability to changes, as modifications can be made at the metadata level without altering the underlying code.

52. Explain the purpose of the “External Transformation” in Informatica.

Ans:

- The External Transformation in Informatica allows the integration of custom code or external programs within a mapping.

- This transformation is particularly useful when specialized or complex logic needs to be applied to the data.

53. What are “lookup transformations” in Informatica?

Ans:

Lookup transformations in Informatica are used to search for and retrieve data from a relational table, view, or flat file. They enhance data integration processes by allowing the mapping to incorporate data from multiple sources based on key relationships. This facilitates the enrichment of data and the integration of information from different tables or systems.

54. Explain the concept of “Pushdown Optimization” in Informatica.

Ans:

Pushdown Optimization in Informatica involves pushing data transformation logic down to the source or target database, leveraging the processing capabilities of the database engine. This optimization can significantly improve performance by reducing the amount of data transferred between Informatica and the database, minimizing network overhead, and taking advantage of database-specific optimizations.
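The effect can be illustrated with Python's built-in `sqlite3` module standing in for the database. This is only an analogy for the principle: the table, its rows, and the variable names are assumptions, and Informatica generates its own SQL when pushdown is enabled.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("East", 100.0), ("East", 50.0), ("West", 70.0)],
)

# Without pushdown: fetch every row, then filter and aggregate in the client.
all_rows = conn.execute("SELECT region, amount FROM orders").fetchall()
client_total = sum(amount for region, amount in all_rows if region == "East")

# With pushdown: the database filters and aggregates, returning one row.
(db_total,) = conn.execute(
    "SELECT SUM(amount) FROM orders WHERE region = ?", ("East",)
).fetchone()

conn.close()
```

Both approaches give the same total, but the first moves three rows out of the database and the second moves one. On large tables that difference in transferred data is where the performance gain comes from.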

55. What are “source qualifiers” in Informatica?

Ans:

- Source qualifiers in Informatica are transformations that define the properties of the source data, such as the source table or query, filter conditions, and sorting order.

- They play a crucial role in data integration processes by allowing the mapping to retrieve and process data from source systems.

56. Explain the concept of “incremental aggregation”.

Ans:

Incremental aggregation is a technique used in data warehousing to update aggregated data efficiently without reprocessing the entire dataset. Instead of aggregating all the data from the source each time, incremental aggregation identifies and processes only the new or changed data since the last aggregation run. This is achieved by maintaining state information, such as the previous aggregation timestamp or the maximum key value processed, and using it to filter and process only the delta (changed) data.
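A minimal Python sketch of the idea: totals saved from the previous run are combined with only the newly arrived rows, rather than recomputed from the full history. The function name `incremental_aggregate` and the sample data are assumptions for illustration.

```python
def incremental_aggregate(saved_totals, new_rows, group_key, value_key):
    """Fold only the new rows into the totals saved by the previous run."""
    totals = dict(saved_totals)  # copy so the saved state is not mutated
    for row in new_rows:
        group = row[group_key]
        totals[group] = totals.get(group, 0) + row[value_key]
    return totals


# State kept from the previous run.
saved = {"East": 150, "West": 70}
# Only the rows that arrived since then.
new_rows = [
    {"region": "East", "amount": 25},
    {"region": "North", "amount": 40},
]
updated = incremental_aggregate(saved, new_rows, "region", "amount")
```

This works directly for additive measures such as sums and counts. Measures like averages need the underlying sum and count stored separately so they can be recombined.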

57. Explain the term “data drift” and how it impacts data integration in Informatica.

Ans:

- Data drift refers to the gradual divergence or inconsistency between data in source systems and their corresponding representations in target systems or data warehouses over time.

- It occurs due to various factors such as data updates, schema changes, data quality issues, or system upgrades. In the context of data integration in Informatica, data drift poses challenges as it can lead to discrepancies, data quality issues, or incorrect analysis results.

58. What is “partitioning” in Informatica?

Ans:

Partitioning in Informatica refers to dividing data into smaller subsets or partitions to improve the performance and scalability of data processing tasks such as sorting, aggregation, and joining. Informatica allows users to partition data at various stages of the data integration process, including source partitioning, transformation partitioning, and target partitioning. Partitioning enables parallel processing by distributing data processing tasks across multiple nodes or resources, reducing processing time and resource contention.
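One common scheme is hash partitioning, where a hash of the key decides which partition a row goes to, so rows with the same key always land together. The sketch below shows the idea in Python; `hash_partition` and the sample rows are hypothetical, and `zlib.crc32` is used here simply because it gives the same result on every run.

```python
import zlib


def hash_partition(rows, key, num_partitions):
    """Distribute rows across partitions by a stable hash of the key."""
    partitions = [[] for _ in range(num_partitions)]
    for row in rows:
        digest = zlib.crc32(str(row[key]).encode("utf-8"))
        partitions[digest % num_partitions].append(row)
    return partitions


rows = [{"customer_id": cid} for cid in (101, 102, 103, 101, 104)]
parts = hash_partition(rows, "customer_id", 3)
```

Each partition can then be processed by a separate thread or node. Keeping equal keys in one partition is what makes per-key operations such as aggregation or joining safe to run in parallel.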

59. How does “workflow dependency” work in Informatica?

Ans:

Workflow dependency in Informatica refers to the relationship between workflow tasks or sessions, where the execution of one task depends on the successful completion of another task. Dependencies define the execution order and ensure that tasks are executed sequentially or in a specific sequence according to their dependencies.
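Dependencies of this kind form a directed graph, and a valid execution order is a topological sort of that graph. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the session names are made up for the example and nothing here is Informatica's own scheduler.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must succeed before it runs.
dependencies = {
    "s_load_fact_sales": {"s_load_dim_customer", "s_load_dim_product"},
    "s_load_dim_customer": {"s_stage_source"},
    "s_load_dim_product": {"s_stage_source"},
}

# static_order() yields every task after all of its predecessors.
execution_order = list(TopologicalSorter(dependencies).static_order())
```

Here staging runs first and the fact load runs last. The two dimension loads have no dependency on each other, so they could run in either order or concurrently.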

60. Explain the use of the “Persistent Cache”.

Ans:

- Persistent cache in Informatica is a caching mechanism that stores and reuses lookup data across multiple sessions or workflows.

- Persistent cache improves performance by reducing database queries and lookup overhead, especially for large lookup tables or frequently accessed data.

61. Discuss the role of “session logs” in Informatica.

Ans:

Informatica session logs play a crucial role in monitoring and troubleshooting data integration processes. These logs provide detailed information about the execution of sessions, including start and end times, source and target data statistics, transformation logic applied, error messages, and performance metrics. Session logs help developers and administrators track the progress of data integration tasks, identify errors or issues, analyze performance bottlenecks, and ensure data quality and accuracy.

62. Explain the concept of “conformed dimensions” in data warehousing.

Ans:

Conformed dimensions are shared and consistent across multiple data marts or data warehouses. They represent standardized attributes and hierarchies that provide a common reference point for analyzing data across different analytical systems. Conformed dimensions ensure data consistency, integrity, and interoperability by allowing users to perform integrated analysis and reporting across disparate data sources.

63. Define the term “data masking” in the context of Informatica.

Ans:

Data masking in Informatica is a technique used to protect sensitive or confidential information by replacing accurate data with fictitious, anonymized, or scrambled values in non-production environments. Data masking helps organizations comply with data privacy regulations, mitigate the risk of data breaches, and maintain data confidentiality while allowing developers and testers to work with realistic datasets.
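One masking technique is deterministic substitution, where the same input always produces the same masked value so that joins across tables still work. The Python sketch below uses a keyed hash for this; the function names, the secret, and the sample email are assumptions, and it is not a description of how Informatica's masking products work internally.

```python
import hashlib
import hmac


def mask_value(value, secret, length=12):
    """Replace a value with a keyed, repeatable hexadecimal token."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def mask_email(email, secret):
    """Mask the local part of an email but keep the domain realistic."""
    local, _, domain = email.partition("@")
    return f"{mask_value(local, secret)}@{domain}"


SECRET = b"demo-only-secret"  # hypothetical key; store real keys securely
masked = mask_email("ann@example.com", SECRET)
masked_again = mask_email("ann@example.com", SECRET)
```

The masked address still looks like an email and is stable across runs, but the original local part cannot be read from it without the secret.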

64. How can you implement complex business rules in Informatica mappings?

Ans:

Complex business rules in Informatica mappings can be implemented using various techniques such as:

Conditional logic: Using conditional transformations like Filter, Router, or Expression to apply different logic based on specific conditions.

Custom transformations: Developing custom transformation logic using Java Transformation to implement complex calculations or data manipulation.

65. Explain the concept of “data lineage” in the context of Informatica.

Ans:

Data lineage in Informatica refers to the ability to trace and visualize the data flow from its source to its destination throughout the data integration process. It provides a comprehensive view of how data is transformed, aggregated, and loaded across various stages of ETL (Extract, Transform, Load) processes. Data lineage helps users understand the origins of data, track data transformations and derivations, identify dependencies between data elements, and ensure data quality and compliance.

66. Define the term “normalization” in the context of data warehousing.

Ans:

- Normalization in data warehousing involves organizing data structures to reduce redundancy and improve data integrity.

- It typically refers to the process of decomposing tables into smaller, related tables to eliminate data duplication.

67. How can you implement error handling in Informatica sessions?

Ans:

Informatica provides various mechanisms for error handling, including:

Error logging: Configuring options in session properties to capture error details such as row counts, error codes, and error messages, and to write rejected rows to reject files or error tables.

Error routing: Using transformations such as Router or Filter to redirect invalid rows to error tables or files for further analysis and resolution.

Exception handling: Implementing exception-handling logic in mappings or workflows using conditional expressions, for example the ERROR() and ABORT() functions in an Expression transformation, to handle specific error scenarios gracefully.

68. Define the term “change data capture” (CDC) in Informatica.

Ans:

Change Data Capture (CDC) in Informatica identifies and captures changes made to source data since the last extraction. It enables incremental data extraction by tracking modifications such as inserts, updates, and deletes in the source database tables. CDC mechanisms in Informatica compare the current state of the source data with the previously captured state to identify changed records. CDC can be implemented using various methods such as database triggers, log-based replication, or query-based CDC.
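The comparison of the current state with the previously captured state can be sketched as a snapshot diff. This is a simplified Python illustration of the comparison idea only, not of log-based or trigger-based CDC; `detect_changes` and the sample data are hypothetical.

```python
def detect_changes(previous, current):
    """Compare two snapshots keyed by primary key and classify the differences."""
    inserts = {k: v for k, v in current.items() if k not in previous}
    deletes = {k: v for k, v in previous.items() if k not in current}
    updates = {
        k: v for k, v in current.items()
        if k in previous and previous[k] != v
    }
    return inserts, updates, deletes


# Hypothetical snapshots of a source table, keyed by primary key.
previous_snapshot = {1: {"name": "Ann"}, 2: {"name": "Bob"}, 3: {"name": "Cy"}}
current_snapshot = {1: {"name": "Ann"}, 2: {"name": "Robert"}, 4: {"name": "Di"}}

inserts, updates, deletes = detect_changes(previous_snapshot, current_snapshot)
```

Only the changed rows then need to be sent downstream, which is what makes the extraction incremental.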

69. Explain the purpose of the “Performance Tuning Wizard” in Informatica.

Ans:

The Performance Tuning Wizard in Informatica is a tool designed to optimize the performance of data integration processes. It analyzes the components of mappings, sessions, and workflows to identify potential bottlenecks and inefficiencies. The wizard provides recommendations and suggestions for tuning options such as partitioning strategies, indexing, caching, pushdown optimization, and session configuration settings. By following the recommendations provided by the Performance Tuning Wizard, developers and administrators can improve efficiency and scalability.

70. What is the purpose of the “Router Transformation” in Informatica?

Ans:

- The Router Transformation in Informatica is used to conditionally direct rows of data to different targets based on specified conditions.

- It acts as a router or distributor of data, allowing you to define multiple output groups with associated filter conditions.
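The routing behaviour can be sketched in Python. This is an illustration of the concept, assuming that a row is sent to every group whose condition it meets and that unmatched rows fall into a default group; the `route` function, group names, and columns are hypothetical.

```python
def route(rows, groups):
    """Send each row to every group whose condition it meets.

    Rows that match no condition go to the DEFAULT group.
    """
    outputs = {name: [] for name in groups}
    outputs["DEFAULT"] = []
    for row in rows:
        matched = False
        for name, condition in groups.items():
            if condition(row):
                outputs[name].append(row)
                matched = True
        if not matched:
            outputs["DEFAULT"].append(row)
    return outputs


# Hypothetical output groups and their filter conditions.
output_groups = {
    "HIGH_VALUE": lambda r: r["amount"] >= 1000,
    "DOMESTIC": lambda r: r["country"] == "IN",
}
order_rows = [
    {"order_id": 1, "amount": 1500, "country": "IN"},
    {"order_id": 2, "amount": 200, "country": "US"},
    {"order_id": 3, "amount": 50, "country": "IN"},
]
routed = route(order_rows, output_groups)
```

Unlike a chain of separate filters, the input is read once and each condition is evaluated against the same row.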

71. Explain the concept of “PowerCenter Domain” in Informatica.

Ans:

In Informatica PowerCenter, a PowerCenter Domain is a centralized administrative unit that manages and governs PowerCenter services, resources, and components within an organization. It serves as a control centre for administering various elements of the PowerCenter architecture, including Repository Service, Integration Service, Reporting Service, and others. The PowerCenter Domain provides user authentication, authorization, security management, and resource allocation functionalities.

72. Explain the purpose of the “Rank Index” in the Rank Transformation in Informatica.

Ans:

- In Informatica’s Rank Transformation, the Rank Index is a calculated output port that represents each row’s ranking position inside a group using a preset sorting criterion.

- The Rank Transformation assigns a unique index value to each row based on its relative rank in the sorted group.

- This index helps distinguish the top- and bottom-ranked rows within each group, making it easier to rank and filter data in data integration operations.
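A rough Python sketch of ranking within groups follows. It is a simplification: ties are broken by input order rather than handled specially, and the `rank_top_n` helper, the `RANKINDEX` column name as used here, and the sample data are illustrative assumptions.

```python
from collections import defaultdict


def rank_top_n(rows, group_key, rank_key, top_n):
    """Keep the top_n rows per group and attach a 1-based rank index."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row)

    ranked = []
    for group_rows in groups.values():
        # Highest value first; sorted() is stable, so ties keep input order.
        ordered = sorted(group_rows, key=lambda r: r[rank_key], reverse=True)
        for index, row in enumerate(ordered[:top_n], start=1):
            ranked.append({**row, "RANKINDEX": index})
    return ranked


sales = [
    {"dept": "A", "rep": "x", "amount": 300},
    {"dept": "A", "rep": "y", "amount": 500},
    {"dept": "A", "rep": "z", "amount": 100},
    {"dept": "B", "rep": "w", "amount": 50},
]
top_two = rank_top_n(sales, group_key="dept", rank_key="amount", top_n=2)
```

Downstream logic can then filter on the index, for example keeping only rows where it equals 1 to get the best performer per department.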

73. Explain the concept of “versioning” in Informatica repositories.

Ans:

Versioning in Informatica repositories is the practice of maintaining and recording changes to metadata objects such as mappings, sessions, and workflows over time. Informatica repositories provide version control, allowing developers to create, store, and manage multiple versions of metadata objects within the repository. Versioning lets developers collaborate on development projects, compare versions, and keep track of changes.

74. What is the purpose of the “XML Parser Transformation” in Informatica?

Ans:

- The XML Parser Transformation in Informatica is used to parse and extract data from XML (Extensible Markup Language) documents or messages within data integration processes.

- It converts XML data into a structured format that can be processed and manipulated by downstream transformations or targets.
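The conversion from hierarchical XML to flat rows can be illustrated with Python's standard library. This sketch only shows the general idea; the XML layout, element names, and `parse_orders` function are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical XML message containing two orders.
xml_message = """
<orders>
  <order id="1"><customer>Acme</customer><amount>250.00</amount></order>
  <order id="2"><customer>Globex</customer><amount>40.50</amount></order>
</orders>
"""


def parse_orders(xml_text):
    """Flatten each <order> element into a row (dict) with typed columns."""
    root = ET.fromstring(xml_text.strip())
    parsed = []
    for order in root.findall("order"):
        parsed.append({
            "order_id": int(order.get("id")),
            "customer": order.findtext("customer"),
            "amount": float(order.findtext("amount")),
        })
    return parsed


order_records = parse_orders(xml_message)
```

Each resulting row has a fixed set of columns, which is the shape that downstream transformations and relational targets expect.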

75. Explain the concept of “concurrent batches” in Informatica workflows.

Ans:

Concurrent batches in Informatica workflows refer to executing multiple workflow tasks or sessions simultaneously within a workflow. Informatica Workflow Manager allows developers to configure workflows with multiple branches and task dependencies, so that independent branches or sessions run at the same time. Concurrent batches enhance workflow performance and throughput by leveraging parallel processing capabilities and optimizing resource utilization.

76. What is “Type 2 Slowly Changing Dimension”?

Ans:

Type 2 Slowly Changing Dimension (SCD Type 2) is a data warehousing concept that tracks historical changes to dimension attributes over time. In SCD Type 2, a new record is inserted into the dimension table whenever there is a change to the dimension attribute values, preserving the history of attribute changes. Each record in the dimension table is assigned a surrogate key and effective date range, indicating when the record was valid.
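The insert-on-change behaviour can be sketched in Python. This is a minimal illustration, assuming an open-ended end date marks the current record; `apply_scd2`, `HIGH_DATE`, and the column names are hypothetical.

```python
from datetime import date

HIGH_DATE = date(9999, 12, 31)  # assumed marker for "still current"


def apply_scd2(dimension, customer_id, new_attrs, change_date):
    """Expire the current record if attributes changed, then insert a new one."""
    current = next(
        (r for r in dimension
         if r["customer_id"] == customer_id and r["end_date"] == HIGH_DATE),
        None,
    )
    if current is not None:
        if all(current[k] == v for k, v in new_attrs.items()):
            return dimension  # no attribute changed, so nothing to record
        current["end_date"] = change_date  # close the old version

    next_key = max((r["customer_sk"] for r in dimension), default=0) + 1
    dimension.append({
        "customer_sk": next_key,        # surrogate key
        "customer_id": customer_id,     # natural (business) key
        **new_attrs,
        "start_date": change_date,
        "end_date": HIGH_DATE,
    })
    return dimension


customer_dim = []
apply_scd2(customer_dim, "C1", {"city": "Pune"}, date(2023, 1, 1))
apply_scd2(customer_dim, "C1", {"city": "Delhi"}, date(2024, 6, 1))
apply_scd2(customer_dim, "C1", {"city": "Delhi"}, date(2024, 7, 1))  # unchanged
```

The old row is never overwritten, so a fact dated in 2023 can still be joined to the city that was valid at that time.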

77. Explain the concept of “commit and rollback”.

Ans:

- Commit and rollback in Informatica refer to the process of either saving or discarding changes made during a session run.

- The commit interval setting determines how often the session commits data to the target database.

- A higher commit interval can improve performance but may impact recovery in case of failure.
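The trade-off can be illustrated with Python's built-in `sqlite3` module standing in for the target database. This is a sketch of the general idea, not of how the Integration Service is implemented; `load_rows` and the `target` table are hypothetical.

```python
import sqlite3


def load_rows(conn, rows, commit_interval):
    """Insert rows, committing after every `commit_interval` rows.

    On a database error, the uncommitted rows are rolled back.
    Returns the number of commits issued.
    """
    commits = 0
    pending = 0
    try:
        for row in rows:
            conn.execute("INSERT INTO target (id, name) VALUES (?, ?)", row)
            pending += 1
            if pending == commit_interval:
                conn.commit()
                commits += 1
                pending = 0
        if pending:  # commit the final partial batch
            conn.commit()
            commits += 1
    except sqlite3.Error:
        conn.rollback()  # discard only the rows since the last commit
        raise
    return commits


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE target (id INTEGER PRIMARY KEY, name TEXT)")
conn.commit()

commit_count = load_rows(conn, [(i, f"name{i}") for i in range(1, 8)], commit_interval=3)
```

With a larger interval there are fewer commits, but a failure discards more uncommitted rows, which is the recovery cost mentioned above.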

78. What is the purpose of the “Reject File” option in Informatica sessions?

Ans:

The “Reject File” option in Informatica sessions allows developers to capture rows that are rejected during data integration processes. The session writes rows that fail validation rules, data type conversions, or target database constraints to a separate file (commonly known as a reject file or bad file). The reject file records each rejected row, typically with row and column indicators that describe the rejection, while the corresponding error messages appear in the session log. Together, these allow developers to analyze and troubleshoot data quality issues effectively.

79. What is a “data transformation strategy,” and why is it important?

Ans:

- A data transformation strategy is the approach or methodology for transforming raw data into a desired format or structure to meet specific business requirements.

- A well-defined data transformation strategy ensures data accuracy, consistency, and relevance in data integration projects.

80. What is the significance of the “Sorter Transformation” in Informatica?

Ans:

The Sorter Transformation in Informatica plays a crucial role in ensuring data integrity and accuracy within data integration processes. By sorting data rows based on specified key columns, it provides a predictable order for subsequent transformations to process. This orderly arrangement is essential for operations such as aggregations, joins, and lookups to produce accurate results. Additionally, the Sorter Transformation facilitates efficient data processing by reducing the time and resources required for downstream transformations.
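Why sort order matters for aggregation can be shown with a small Python sketch. It uses `itertools.groupby`, which, like a streaming aggregation over sorted input, assumes rows with the same key are adjacent; `sum_by_key` and the data are hypothetical.

```python
from itertools import groupby
from operator import itemgetter


def sum_by_key(rows):
    """Streaming aggregation: assumes rows with the same key are adjacent."""
    return [
        (key, sum(r["amount"] for r in group))
        for key, group in groupby(rows, key=itemgetter("region"))
    ]


unsorted_rows = [
    {"region": "East", "amount": 10},
    {"region": "West", "amount": 5},
    {"region": "East", "amount": 7},
]

# Without sorting, "East" is split into two partial groups.
fragmented = sum_by_key(unsorted_rows)

# Sorting on the key first brings each region's rows together.
sorted_rows = sorted(unsorted_rows, key=itemgetter("region"))
aggregated = sum_by_key(sorted_rows)
```

Because each group is complete as soon as the key changes, the aggregation never needs to hold every group in memory at once.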

81. What are the different ways to handle slowly changing dimensions (SCD) Type 2?

Ans:

- Date Effective: Adding start and end date columns to track changes.

- Versioning: Maintaining multiple versions of the dimension.

- Flagging Changes: Using flags to indicate the current and historical records.

82. How can you implement a dynamic lookup condition in the Lookup transformation?

Ans:

To build a dynamic lookup condition in Informatica’s Lookup transformation, use a variable port to pass values dynamically. Create a variable port in the mapping and set it to the value you want to use in the lookup condition, then configure the lookup condition to use this port as one of its conditions. Ensure that the port has been assigned the required value before the Lookup transformation runs during session execution. This technique lets the Lookup transformation evaluate its condition against values derived from the incoming data.

83. Explain the purpose of the “PowerCenter Repository Manager”.

Ans:

The PowerCenter Repository Manager is the client tool used to administer repositories. Its main purposes include:

- Creating and managing repository connections to metadata repositories.

- Administering repository objects such as folders, mappings, sessions, workflows, and connections.

- Importing and exporting metadata items across repositories.

- Performing versioning, backup, and restore procedures on repository content.

84. What is the intent behind Informatica’s “Commit Interval” option?

Ans:

The “Commit Interval” option in Informatica controls how frequently data is committed to the target database during session execution. By defining the number of rows to process before committing them to the target database, this option primarily aims to optimize performance and resource usage.

85. Explain what a “metadata repository” in Informatica means.

Ans:

In Informatica, a metadata repository is a centralized database that stores metadata information related to data integration projects, including mappings, transformations, sessions, workflows, connections, and other objects. The metadata repository is a central repository for storing, organizing, and managing metadata assets, providing a unified view of data integration processes and components. It enables collaboration, versioning, lineage analysis, impact analysis, and governance of metadata objects across the organization.

86. Why is the “High Precision” option significant?

Ans:

In Informatica, “High Precision” is significant for ensuring accurate numeric calculations, especially when dealing with decimal values or large numbers. Enabling “High Precision” ensures that calculations are performed with maximum precision and accuracy, reducing the risk of rounding errors or loss of precision during data transformation processes. This option is significant in financial or scientific applications where precise numerical calculations are critical for accurate results.
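The kind of precision loss at stake can be demonstrated in Python by comparing exact decimal arithmetic with double-precision floating point. This is a general illustration, not a model of Informatica's internal datatypes; the sample values are arbitrary.

```python
from decimal import Decimal

# A large value with cents, held exactly as a decimal.
exact = Decimal("1234567890123456789.12")

# Converting to a double keeps only about 15 to 17 significant digits.
as_double = float(exact)
round_trip = Decimal(as_double)
precision_lost = round_trip != exact

# Small sums show the same effect: binary doubles cannot represent 0.1 exactly.
double_sum_matches = (0.1 + 0.2) == 0.3
decimal_sum_matches = (Decimal("0.1") + Decimal("0.2")) == Decimal("0.3")
```

In a financial load, differences like these can accumulate across millions of rows, which is why exact decimal handling matters.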

87. Define the term “parameter file.”

Ans:

A parameter file is a configuration file that contains settings, variables, and parameters used to control the behaviour and execution of a software application or process. In the context of data integration and ETL (Extract, Transform, Load) processes, a parameter file may include parameters such as database connection details, file paths, transformation rules, and job scheduling information.

88. What is the intent behind Informatica’s “Commit Interval” option?

Ans:

The “Commit Interval” option in Informatica is intended to optimize performance and resource usage during data integration. Users can control the transaction size and frequency of commits by specifying the number of rows to process before committing them to the target database. The primary intent behind this option is to balance factors such as transaction overhead, resource consumption, and recovery requirements based on the characteristics of the target database and the volume of data being processed.

89. Explain the concept of “Parameter Files” in Informatica.

Ans:

Parameter Files in Informatica are external files used to store parameter values that dynamically configure session properties during runtime. These files allow users to define values such as connection strings, file paths, or variable values outside of the mapping definition. Parameter files provide flexibility and reusability in session configurations, enabling users to change parameter values without modifying the mapping logic.
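As a rough illustration, a parameter file can be thought of as sections of `name=value` pairs, each section scoped to a workflow or session. The Python below parses a sample written in that general style. The folder, workflow, session, and parameter names are all hypothetical, and the exact heading syntax should be checked against the Informatica documentation for your version.

```python
# Hypothetical parameter file content; all names are illustrative only.
sample_parameter_file = """
[SalesFolder.WF:wf_load_sales.ST:s_m_load_sales]
$DBConnection_Source=ORA_SALES_DEV
$InputFile_Sales=/data/in/sales.csv
$$LoadDate=2024-01-31
"""


def parse_parameter_file(text):
    """Parse '[section]' headings and 'name=value' lines into nested dicts."""
    sections = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            sections[current] = {}
        elif "=" in line and current is not None:
            name, value = line.split("=", 1)
            sections[current][name.strip()] = value.strip()
        # Blank lines and anything else are ignored in this sketch.
    return sections


parameters = parse_parameter_file(sample_parameter_file)
session_params = parameters["SalesFolder.WF:wf_load_sales.ST:s_m_load_sales"]
```

Pointing the same session at a different file, for example one per environment, changes the connection and paths without touching the mapping.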

90. What is a “Lookup Cache” in Informatica, and why is it important?

Ans:

A Lookup Cache in Informatica is a temporary memory storage area used to store lookup data during session execution. It improves performance by reducing the number of database calls required to retrieve lookup data, thereby speeding up data retrieval and processing. Lookup caches are important for optimizing session performance, minimizing network overhead, and conserving system resources. By storing frequently accessed lookup data in memory, lookup caches enhance the efficiency and scalability of data integration workflows.
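The saving in database calls can be sketched in Python. The `LookupSource` class below is a hypothetical stand-in for a lookup table that simply counts queries; it is not an Informatica API.

```python
class LookupSource:
    """Stand-in for a lookup table; counts how many queries it receives."""

    def __init__(self, data):
        self._data = data
        self.query_count = 0

    def fetch_one(self, key):
        self.query_count += 1  # one round trip per key
        return self._data.get(key)

    def fetch_all(self):
        self.query_count += 1  # one round trip for the whole table
        return dict(self._data)


def lookup_uncached(keys, source):
    """Query the source once for every incoming row."""
    return [source.fetch_one(key) for key in keys]


def lookup_cached(keys, source):
    """Load the lookup data once, then resolve every row from memory."""
    cache = source.fetch_all()
    return [cache.get(key) for key in keys]


country_names = {"IN": "India", "US": "United States"}
incoming_keys = ["IN", "US", "IN", "IN", "US"]

uncached_source = LookupSource(country_names)
uncached_result = lookup_uncached(incoming_keys, uncached_source)

cached_source = LookupSource(country_names)
cached_result = lookup_cached(incoming_keys, cached_source)
```

The cached version trades memory for fewer round trips, so it pays off most when many rows are looked up against a table that fits comfortably in memory.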