What is Julia?

Julia (High-Level Programming Language)

Julia is a high-level, high-performance, dynamic programming language. While it is a general-purpose language and can be used to write any application, many of its features are well suited to numerical analysis and computational science.

Distinctive aspects of Julia’s design include a type system with parametric polymorphism in a dynamic programming language, with multiple dispatch as its core programming paradigm. Julia supports concurrent, (composable) parallel, and distributed computing (with or without MPI, or with the built-in threads that correspond to “OpenMP-style” threads), and it can call C and Fortran libraries directly without glue code. Julia uses a just-in-time compiler, which the Julia community refers to as “just-ahead-of-time”.

Julia is garbage-collected, uses eager evaluation, and includes efficient libraries for floating-point calculations, linear algebra, random number generation, and regular expression matching. Many libraries are available, including some (e.g., for fast Fourier transforms) that were previously bundled with Julia and are now separate.

Programming Language:

A programming language is a formal language comprising a set of instructions that produce various kinds of output. Programming languages are used in computer programming to implement algorithms.

Most programming languages consist of instructions for computers. There are programmable machines that use a set of specific instructions, rather than general programming languages. Early ones preceded the invention of the digital computer, the first probably being the automatic flute player described in the 9th century by the brothers Musa in Baghdad, during the Islamic Golden Age.[1] Since the early 1800s, programs have been used to direct the behavior of machines such as Jacquard looms, music boxes and player pianos.[2] The programs for these machines (such as a player piano’s scrolls) did not produce different behavior in response to different inputs or conditions.

Thousands of different programming languages have been created, and more are being created every year. Many programming languages are written in an imperative form (i.e., as a sequence of operations to perform) while other languages use the declarative form (i.e., the desired result is specified, not how to achieve it).

The description of a programming language is usually split into the two components of syntax (form) and semantics (meaning). Some languages are defined by a specification document (for example, the C programming language is specified by an ISO Standard) while other languages (such as Perl) have a dominant implementation that is treated as a reference. Some languages have both, with the basic language defined by a standard and extensions taken from the dominant implementation being common.

Parallel Computing:

Parallel computing is a type of computation in which many calculations or the execution of processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but it’s gaining broader interest due to the physical constraints preventing frequency scaling. As power consumption (and consequently heat generation) by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

Parallel computing is closely related to concurrent computing—they are frequently used together, and often conflated, though the two are distinct: it is possible to have parallelism without concurrency (such as bit-level parallelism), and concurrency without parallelism (such as multitasking by time-sharing on a single-core CPU). In parallel computing, a computational task is typically broken down into several, often many, very similar sub-tasks that can be processed independently and whose results are combined afterwards, upon completion. In contrast, in concurrent computing, the various processes often do not address related tasks; when they do, as is typical in distributed computing, the separate tasks may have a varied nature and often require some inter-process communication during execution.

Parallel computers can be roughly classified according to the level at which the hardware supports parallelism, with multi-core and multi-processor computers having multiple processing elements within a single machine, while clusters, MPPs, and grids use multiple computers to work on the same task. Specialized parallel computer architectures are sometimes used alongside traditional processors, for accelerating specific tasks.

In some cases parallelism is transparent to the programmer, such as in bit-level or instruction-level parallelism, but explicitly parallel algorithms, particularly those that use concurrency, are more difficult to write than sequential ones, because concurrency introduces several new classes of potential software bugs, of which race conditions are the most common. Communication and synchronization between the different subtasks are typically some of the greatest obstacles to getting optimal parallel program performance.

A theoretical upper bound on the speed-up of a single program as a result of parallelization is given by Amdahl’s law.
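As a worked illustration, Amdahl’s law can be written as follows. The symbols $p$ (the fraction of the run time that can be parallelized) and $N$ (the number of processors) are notation introduced here, not taken from the text above:

```latex
S(N) = \frac{1}{(1 - p) + \dfrac{p}{N}},
\qquad
\lim_{N \to \infty} S(N) = \frac{1}{1 - p}
```

For example, with $p = 0.9$ and $N = 8$, $S(8) = 1 / (0.1 + 0.1125) \approx 4.7$, and no number of processors can push the speed-up past $1 / (1 - 0.9) = 10$.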

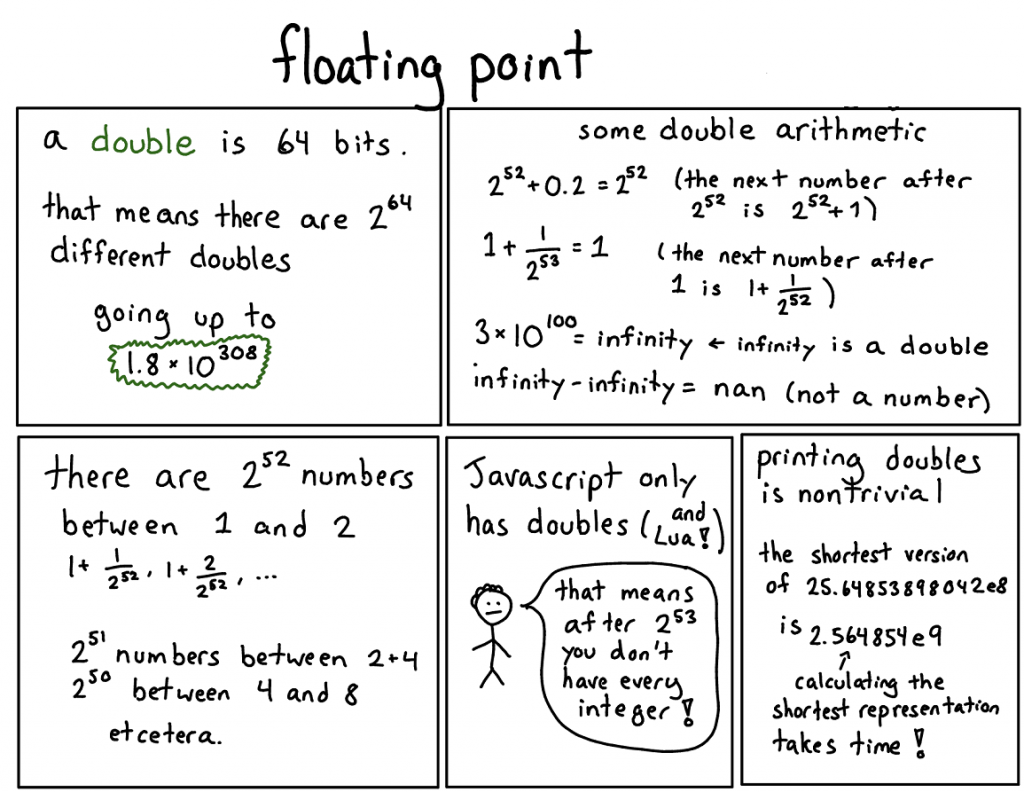

Floating Point:

In computing, floating-point arithmetic (FP) is arithmetic using formulaic representation of real numbers as an approximation to support a trade-off between range and precision. For this reason, floating-point computation is often found in systems which include very small and very large real numbers, which require fast processing times. A number is, in general, represented approximately to a fixed number of significant digits (the significand) and scaled using an exponent in some fixed base; the base for the scaling is normally two, ten, or sixteen. A number that can be represented exactly is of the following form:

$$\text{significand} \times \text{base}^{\text{exponent}},$$

where significand is an integer, base is an integer greater than or equal to two, and exponent is also an integer. For example:

$$1.2345 = \underbrace{12345}_{\text{significand}} \times \underbrace{10}_{\text{base}}{}^{\overbrace{-4}^{\text{exponent}}}.$$

The term floating point refers to the fact that a number’s radix point (decimal point, or, more commonly in computers, binary point) can “float”; that is, it can be placed anywhere relative to the significant digits of the number. This position is indicated as the exponent component, and thus the floating-point representation can be thought of as a kind of scientific notation.

A floating-point system can be used to represent, with a fixed number of digits, numbers of different orders of magnitude: e.g. the distance between galaxies or the diameter of an atomic nucleus can be expressed with the same unit of length. The result of this dynamic range is that the numbers that can be represented are not uniformly spaced; the difference between two consecutive representable numbers grows with the chosen scale.

Over the years, a variety of floating-point representations have been used in computers. In 1985, the IEEE 754 Standard for Floating-Point Arithmetic was established, and since the 1990s, the most commonly encountered representations are those defined by the IEEE.

The speed of floating-point operations, commonly measured in terms of FLOPS, is an important characteristic of a computer system, especially for applications that involve intensive mathematical calculations.

A floating-point unit (FPU, colloquially a math coprocessor) is a part of a computer system specially designed to carry out operations on floating-point numbers.

OpenMP:

The application programming interface (API) OpenMP (Open Multi-Processing) supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran, on many platforms, instruction-set architectures and operating systems, including Solaris, AIX, HP-UX, Linux, macOS, and Windows. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior.

OpenMP is managed by the nonprofit technology consortium OpenMP Architecture Review Board (or OpenMP ARB), jointly defined by a group of major computer hardware and software vendors, including AMD, IBM, Intel, Cray, HP, Fujitsu, Nvidia, NEC, Red Hat, Texas Instruments, Oracle Corporation, and more.

OpenMP uses a portable, scalable model that gives programmers a simple and flexible interface for developing parallel applications for platforms ranging from the standard desktop computer to the supercomputer.

An application built with the hybrid model of parallel programming can run on a computer cluster using both OpenMP and Message Passing Interface (MPI), such that OpenMP is used for parallelism within a (multi-core) node while MPI is used for parallelism between nodes. There have also been efforts to run OpenMP on software distributed shared memory systems,[6] to translate OpenMP into MPI, and to extend OpenMP for non-shared memory systems.

Design:

OpenMP is an implementation of multithreading, a method of parallelizing whereby a master thread (a series of instructions executed consecutively) forks a specified number of worker threads and the system divides a task among them. The threads then run concurrently, with the runtime environment allocating threads to different processors.

The section of code that is meant to run in parallel is marked accordingly, with a compiler directive that causes the threads to form before the section is executed. Each thread has an ID attached to it, which can be obtained by calling omp_get_thread_num(). The thread ID is an integer, and the master thread has an ID of 0. After the parallelized code has executed, the threads join back into the master thread, which continues onward to the end of the program.

By default, each thread executes the parallelized section of code independently. Work-sharing constructs can be used to divide a task among the threads so that each thread executes its allocated part of the code. Both task parallelism and data parallelism can be achieved using OpenMP in this way.

The runtime environment allocates threads to processors depending on usage, machine load, and other factors. The runtime environment can assign the number of threads based on environment variables, or the code can do so using functions. The OpenMP functions are declared in a header file named omp.h in C/C++.

Core Elements:

The core elements of OpenMP are the constructs for thread creation, workload distribution (work sharing), data-environment management, thread synchronization, user-level runtime routines and environment variables.

In C/C++, OpenMP uses #pragma directives. The OpenMP-specific pragmas are listed below.

Thread creation:

The pragma omp parallel is used to fork additional threads that carry out, in parallel, the work enclosed in the construct. The original thread is denoted the master thread and has thread ID 0.

Example (C program): Display “Hello, world.” using multiple threads.

    #include <stdio.h>
    #include <omp.h>

    int main(void)
    {
        /* Fork a team of threads; each thread runs the next statement. */
        #pragma omp parallel
        printf("Hello, world.\n");

        return 0;
    }

Use the -fopenmp flag to compile with GCC:

    $ gcc -fopenmp hello.c -o hello

Output on a computer with two cores, and thus two threads:

    Hello, world.
    Hello, world.

However, the output may also be garbled because of the race condition caused by the two threads sharing the standard output:

    Hello, wHello, woorld.
    rld.

Who uses Julia?

Julia is mainly used by research scientists and engineers. It is also used by financial analysts, quants, and data scientists. The developers of the Julia language have made sure that the products built around it make Julia easy to use, deploy, and scale.

Installing Julia on Windows:

Julia can be installed on various platforms, including Windows, macOS, and Linux.

- Here is a download link to install Julia: https://julialang.org/downloads/index.html

Features of Julia:

The following features make Julia a popular programming language:

- Julia uses dynamic typing, feels like a scripting language, and has good support for interactive use.

- Julia has a high-level syntax, which makes it a productive language for programmers.

- Julia offers a rich language of descriptive data types.

- Julia supports multiple dispatch, which makes it easy to express object-oriented and functional programming code patterns.

- As Julia is open source, all source code is publicly viewable on GitHub.

Julia Packages:

These are some of the favourite packages used by Julia developers:

- Interact.jl: interactive widgets such as dropdowns, sliders, and checkboxes for interacting with Julia code.

- Generic Linear Algebra: used to extend linear algebra functionality.

- Colors.jl: a color manipulation utility for Julia.

- UnicodePlots.jl: scientific plotting based on Unicode, for use in the terminal.

- Nemo: a computer algebra package.

- Revise: updates function definitions automatically in a running Julia session.

- BenchmarkTools: a benchmarking framework.

- OhMyREPL.jl: bracket highlighting, syntax highlighting, and rainbow brackets.

- StaticArrays: a framework that provides statically sized arrays.

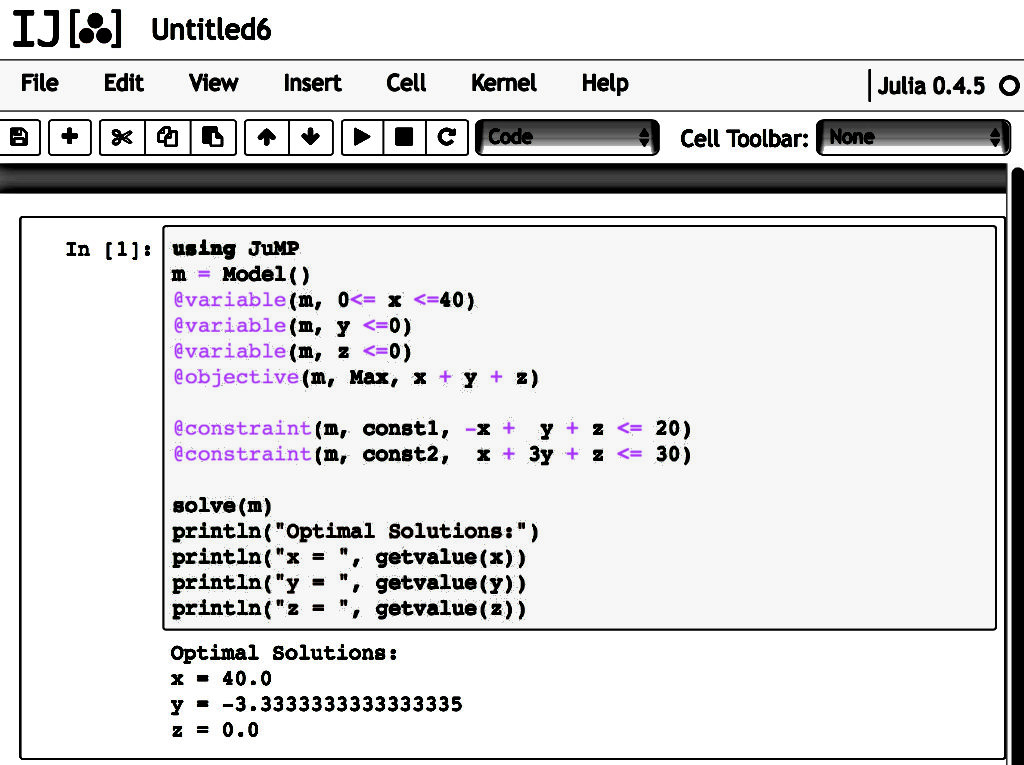

Julia parallelism:

Julia has built-in support for distributed computation and parallelism, based on two primitives: remote calls and remote references. Remote references come in two types: Future and RemoteChannel. A Future is similar to a JavaScript promise, whereas a RemoteChannel is rewritable and can be used for inter-process communication, much like a Go channel or a Unix pipe.

Advantages of Python:

Python: the most important benefits of using this programming language

- Versatile, easy to use, and fast to develop with.

- Open source with a vibrant community.

- Has a large ecosystem of libraries.

- Great for prototypes: you can do more with less code.

Disadvantages of Python are:

- Speed. Python is slower than C or C++.

- Threading. Python has problems with threading.

- Mobile Development. Python is not native to mobile environments and is not a very good language for mobile development.

- Memory Consumption. Python is not a good choice for memory-intensive tasks.

- Database Access. Python has limitations with database access. …

- Runtime Errors.

Notable uses:

Julia has attracted some high-profile users, from investment manager BlackRock, which uses it for time-series analytics, to the British insurer Aviva, which uses it for risk calculations. In 2015, the Federal Reserve Bank of New York used Julia to make models of the United States economy, noting that the language made model estimation “about 10 times faster” than its previous MATLAB implementation. Julia’s co-founders established Julia Computing in 2015 to provide paid support, training, and consulting services to clients, though Julia remains free to use. At the 2017 JuliaCon conference, Jeffrey Regier, Keno Fischer and others announced that the Celeste project used Julia to achieve “peak performance of 1.54 petaFLOPS using 1.3 million threads” on 9300 Knights Landing (KNL) nodes of the Cori II (Cray XC40) supercomputer (then 6th fastest computer in the world). Julia thus joins C, C++, and Fortran as high-level languages in which petaFLOPS computations have been achieved.

Three of the Julia co-creators are the recipients of the 2019 James H. Wilkinson Prize for Numerical Software (awarded every four years) “for the creation of Julia, an innovative environment for the creation of high-performance tools that enable the analysis and solution of computational science problems.” Also, Alan Edelman, professor of applied mathematics at MIT, has been selected to receive the 2019 IEEE Computer Society Sidney Fernbach Award “for outstanding breakthroughs in high-performance computing, linear algebra, and computational science and for contributions to the Julia programming language.”

Sponsors:

Julia has received contributions from over 870 developers worldwide. Dr. Jeremy Kepner at MIT Lincoln Laboratory was the founding sponsor of the Julia project in its early days. In addition, funds from the Gordon and Betty Moore Foundation, the Alfred P. Sloan Foundation, Intel, and agencies such as NSF, DARPA, NIH, NASA, and FAA have been essential to the development of Julia.

Mozilla, the maker of the Firefox web browser, with its research grants for H1 2019, sponsored “a member of the official Julia team” for the project “Bringing Julia to the Browser”, meaning to Firefox and other web browsers.

Conclusion:

Julia is a flexible dynamic language that is well suited to scientific and numerical computing. It combines features of many other programming languages, such as C, MATLAB, and Java, and its JIT compiler improves computing performance. We hope you have found all the details you were looking for in this article.