Prepare for your Deloitte interview with essential questions and answers designed to help you showcase your skills and knowledge. This resource covers a range of topics, including behavioral questions, technical skills, and case studies, ensuring you’re ready for various interview formats. Gain insights into Deloitte’s company culture and values to effectively align your responses.

1. Discuss the benefits and drawbacks of serverless computing.

Ans:

The benefits of serverless computing include cost savings, automatic scaling, and reduced management overhead, since the cloud provider manages the infrastructure. It lets developers focus on code instead of maintaining servers. The drawbacks include the risk of vendor lock-in, limited control over the infrastructure, and latency issues such as cold starts. Whether serverless is the right choice depends on the specific use case and workload requirements.

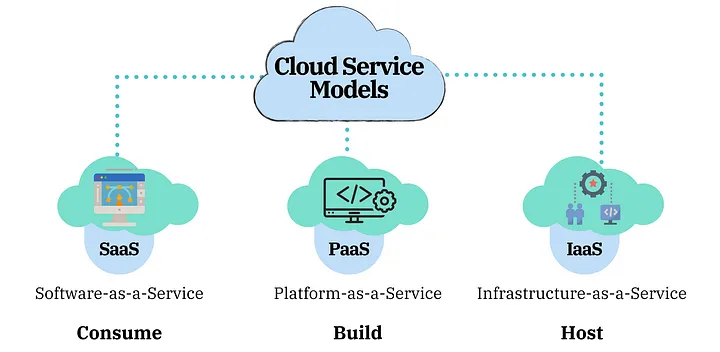

2. What are the different types of cloud service models?

Ans:

The three main cloud service models are IaaS, PaaS, and SaaS. IaaS (Infrastructure as a Service) lets consumers provision computing resources such as processing power, storage, and networking over the internet, and gives them control over those infrastructure components. PaaS (Platform as a Service) provides platforms on which developers build applications without managing the underlying infrastructure. SaaS (Software as a Service) delivers fully functional software applications over the web.

3. How to ensure data security in cloud computing environments?

Ans:

Data security in cloud computing relies on encryption, identity and access management (IAM), and multifactor authentication (MFA). Data should be encrypted both in transit and at rest. Well-defined IAM policies ensure that only authorized users can access specific resources. Routine audits against regulatory requirements such as GDPR or HIPAA help maintain the security and compliance of the cloud environment.

4. What is the difference between public cloud and private cloud?

Ans:

| Aspect | Public Cloud | Private Cloud |
|---|---|---|
| Definition | Services are offered over the internet to multiple customers. | Dedicated cloud infrastructure for a single organization. |
| Ownership | Owned and managed by third-party service providers. | Owned and operated by the organization itself or by a third party on its behalf. |
| Scalability | Highly scalable, with resources available on demand. | More limited; scaling requires adding capacity to the dedicated infrastructure. |
| Cost | Generally lower, because resources are shared and billed by usage. | Higher, due to dedicated infrastructure and maintenance. |
| Security | Less direct control; the underlying infrastructure is shared with other tenants. | Greater control and easier compliance, because resources are isolated. |
| Use Cases | Ideal for non-sensitive applications and variable workloads. | Suitable for sensitive data and critical applications. |

5. What is Microsoft Azure’s role in digital transformation?

Ans:

Microsoft Azure plays an essential role in digital transformation because of its extensive portfolio of cloud services, including AI, IoT, and data analytics. These services help organizations modernize their infrastructure, make operations more efficient, and introduce new cloud-based business models. Azure also supports hybrid cloud environments, so companies can move gradually from on-premises systems to the cloud and run workloads across both.

6. How to migrate an on-premises system to the cloud?

Ans:

- Migrating an on-premises system to the cloud involves three stages: assessment, planning, and execution.

- First, analyze the current infrastructure and determine which applications are suitable for migration.

- Then, choose a suitable cloud provider and the appropriate service model (IaaS, PaaS, or SaaS) based on business needs.

- Finally, either move the application as-is (lift and shift) or refactor it to optimize it for the cloud.

7. Define cloud elasticity.

Ans:

- Cloud elasticity is the automatic scaling of resources up and down with demand, which lets organizations use only the resources they need at a given point in time.

- For example, more servers can be provisioned during peak traffic and decommissioned during periods of low usage.

- Elasticity is one of the major advantages of cloud computing, keeping systems both responsive and cost-efficient.
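To make the scale-out/scale-in decision concrete, here is a minimal Python sketch of a proportional scaling rule, modelled on the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)). The function name, the utilization figures, and the min/max bounds are illustrative assumptions, not any provider's API.

```python
import math


def desired_replicas(current: int, observed_util: float, target_util: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional rule: scale the replica count by observed/target utilization."""
    if current <= 0 or target_util <= 0:
        raise ValueError("current replicas and target utilization must be positive")
    raw = math.ceil(current * observed_util / target_util)
    # Clamp to the configured bounds so scaling never drops to zero or runs away.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    print(desired_replicas(4, observed_util=90.0, target_util=60.0))  # 6 (peak: scale out)
    print(desired_replicas(4, observed_util=15.0, target_util=60.0))  # 1 (quiet: scale in)
```

Production autoscalers also apply tolerances and stabilization windows so that brief spikes do not make the replica count oscillate.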

8. What are the main differences between AWS, Azure, and Google Cloud Platform?

Ans:

- AWS is generally the largest cloud provider, offering a huge range of services and considered one of the most mature in terms of infrastructure.

- With a global reach, Azure is tightly integrated with Microsoft products and is often preferred by organizations already using some of Microsoft’s technologies.

- Google Cloud Platform is especially strong in data analytics and machine learning services, which makes it a strong fit for big data applications.

- Pricing, service availability, and specific toolsets also vary between the platforms, so each suits different types of businesses.

9. How does SAP BTP integrate with major cloud providers?

Ans:

SAP BTP supports multi-cloud deployments on major cloud providers, including AWS, Azure, and Google Cloud. This allows businesses to run SAP solutions in several environments while keeping them interoperable. SAP BTP provides capabilities for integrating data and applications, makes it easier to build on cloud-native services from other providers, and includes tools to develop and extend SAP applications in hybrid landscapes.

10. What is the importance of load balancing in cloud computing?

Ans:

Load balancing distributes incoming traffic across multiple servers so that no single server is overloaded. This improves system performance and availability. In a cloud environment, it smooths out variable workloads and avoids bottlenecks, which means a better overall user experience. It also provides redundancy: if one server fails, the load balancer redirects traffic to the remaining healthy servers, improving the system’s reliability.
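A minimal Python sketch of round-robin balancing with health awareness follows. The server names and the `healthy` flag are hypothetical; a real load balancer would update server health from periodic probes rather than a manually set flag.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    healthy: bool = True


class RoundRobinBalancer:
    """Cycles through servers in order, skipping any marked unhealthy."""

    def __init__(self, servers: list[Server]) -> None:
        self.servers = servers
        self._cycle = itertools.cycle(servers)

    def next_server(self) -> Server:
        # Try each server at most once per call before giving up.
        for _ in range(len(self.servers)):
            server = next(self._cycle)
            if server.healthy:
                return server
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    pool = [Server("app-1"), Server("app-2"), Server("app-3")]
    lb = RoundRobinBalancer(pool)
    print([lb.next_server().name for _ in range(3)])  # ['app-1', 'app-2', 'app-3']
    pool[1].healthy = False  # simulate a failed server
    print([lb.next_server().name for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
```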

11. Describe ETL in data management.

Ans:

ETL (extract, transform, load) in data management refers to moving data from source systems to a data warehouse or a data lake. The first step, “extract,” collects raw data from sources such as databases, APIs, or flat files. The second step, “transform,” cleans, filters, and converts the data into the proper format or structure. The final step, “load,” writes the transformed data into the target system for storage and analytics. Together, these steps give business intelligence tools integrated, structured data to work with.
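The three ETL steps can be sketched in a few lines of Python, using an in-memory SQLite database as a stand-in for the warehouse. The CSV content, table name, and cleaning rules are hypothetical examples.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (in practice: a file, API, or database).
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,not-a-number
"""


def extract(raw: str) -> list[dict[str, str]]:
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: validate types and normalize customer names."""
    clean = []
    for row in rows:
        try:
            order_id = int(row["order_id"])
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows that fail validation
        clean.append((order_id, row["customer"].strip().title(), amount))
    return clean


def load(rows: list[tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    """Load: write transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
    load(transform(extract(RAW_CSV)), conn)
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
    # [(1, 'Alice', 19.99), (2, 'Bob', 5.0)]
```

Order 3 is rejected in the transform step because its amount is not numeric, so only two clean rows reach the target table.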

12. What are the key activities in designing a data warehouse architecture?

Ans:

- Developing a data warehouse architecture involves identifying data sources, defining ETL processes, and designing the storage and reporting layers.

- The architecture typically consists of three layers: a staging area where raw data is loaded for preliminary processing, the data warehouse where cleaned and transformed data is stored, and a presentation layer where reporting and analysis tools access the data.

- Data is usually organized in star or snowflake schemas made up of fact and dimension tables. Key design considerations include consistency, scalability, and security.

13. What is OLAP, and how is it used in data analysis?

Ans:

- OLAP (Online Analytical Processing) enables fast analysis of data from multiple angles through complex queries.

- OLAP systems organize data in multidimensional cubes that can be sliced and diced along dimensions such as time, place, or product.

- Common business intelligence applications include reporting, forecasting, and decision-making.

- OLAP supports activities such as trend analysis, financial reporting, and market analysis, with rapid, interactive query responses.
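Slicing and rolling up a cube can be illustrated on a handful of in-memory fact rows. This is a pure-Python sketch with hypothetical data; an OLAP engine would pre-aggregate and index the cube, but the operations are the same in spirit.

```python
from collections import defaultdict

# Hypothetical fact rows: (year, region, product, sales)
FACTS = [
    (2023, "EU", "Laptop", 120),
    (2023, "EU", "Phone", 80),
    (2023, "US", "Laptop", 200),
    (2024, "EU", "Laptop", 150),
    (2024, "US", "Phone", 90),
]
DIMS = ("year", "region", "product")
IDX = {d: i for i, d in enumerate(DIMS)}


def slice_cube(facts, **fixed):
    """Slice/dice: keep only rows matching the fixed dimension values."""
    return [row for row in facts if all(row[IDX[d]] == v for d, v in fixed.items())]


def roll_up(facts, *group_by):
    """Roll-up: sum the sales measure over the chosen dimensions."""
    totals = defaultdict(int)
    for row in facts:
        key = tuple(row[IDX[d]] for d in group_by)
        totals[key] += row[-1]
    return dict(totals)


if __name__ == "__main__":
    print(roll_up(FACTS, "year"))                               # {(2023,): 400, (2024,): 240}
    print(roll_up(slice_cube(FACTS, region="EU"), "product"))  # {('Laptop',): 270, ('Phone',): 80}
```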

14. What are the differences between structured and unstructured data?

Ans:

- Structured data follows a predefined schema, such as rows and columns in a relational database.

- Unstructured data has no predefined model and includes varied content such as text, images, videos, and social media posts.

- Structured data can be processed efficiently with mature, purpose-built tools such as SQL.

- Unstructured data, by contrast, often requires advanced techniques such as natural language processing (NLP) and machine learning (ML).

15. How to ensure data integrity and consistency over multiple systems?

Ans:

Maintaining data integrity and consistency requires data governance policies, validation rules, and automated processes that enforce them. Data should be cleansed, standardized, and audited regularly to detect duplicates or inaccuracies. Master Data Management (MDM) systems centralize critical data so that it stays uniform across the organization. Data is then kept synchronized across systems through ETL processes or real-time integrations.
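One way to audit consistency between two systems is to compare records by a shared key and flag anything missing or different. The Python sketch below assumes both systems can export records as dictionaries keyed by a common ID (for example, an MDM identifier); the system names and fields are hypothetical. Hashing each record means the two sides could exchange compact fingerprints instead of full records.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Stable hash of a record's fields, independent of key order."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def reconcile(source: dict[str, dict], target: dict[str, dict]) -> dict[str, list[str]]:
    """Compare two systems keyed by a shared ID and report discrepancies."""
    report = {"missing_in_target": [], "unexpected_in_target": [], "mismatched": []}
    for key in sorted(source.keys() | target.keys()):
        if key not in target:
            report["missing_in_target"].append(key)
        elif key not in source:
            report["unexpected_in_target"].append(key)
        elif fingerprint(source[key]) != fingerprint(target[key]):
            report["mismatched"].append(key)
    return report


if __name__ == "__main__":
    crm = {"C1": {"name": "Alice", "city": "Paris"}, "C2": {"name": "Bob", "city": "Oslo"}}
    erp = {"C1": {"city": "Paris", "name": "Alice"}, "C2": {"name": "Bob", "city": "Bergen"},
           "C3": {"name": "Carol", "city": "Rome"}}
    print(reconcile(crm, erp))
    # {'missing_in_target': [], 'unexpected_in_target': ['C3'], 'mismatched': ['C2']}
```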

16. What are the best practices for data governance?

Ans:

Best practices for data governance include defining roles and responsibilities, establishing data stewardship, and setting data quality standards. A good governance framework should also define policies on data privacy, security, and compliance with regulations such as GDPR. Sensitive data should be classified and labelled consistently. Regular audits and reviews confirm that policies are followed, while open communication with stakeholders helps hold individuals accountable.

17. What is a data lake?

Ans:

A data lake is a centralized repository that stores raw data in its native format, whether structured, semi-structured, or unstructured. Unlike a traditional data warehouse, which requires data to be transformed before it is stored, a data lake supports flexible ingestion and applies structure only when the data is read. This makes data lakes well suited to big data analytics, since they can hold large volumes of many different types of data. Data lakes are popular for machine learning, real-time analytics, and advanced exploration.

18. How to handle big data processing and storage?

Ans:

- Big data processing requires distributed computing frameworks such as Hadoop or Apache Spark, which process data in parallel across several nodes.

- Storage typically relies on technologies like HDFS (Hadoop Distributed File System) or cloud-based solutions such as Amazon S3 or Azure Data Lake.

- Processing frameworks support batch processing, stream processing, and machine learning. Data compression, along with proper partitioning, improves storage efficiency and query performance.

19. Describe your experience with Hadoop and Spark for big data solutions.

Ans:

Hadoop is an open-source framework for distributed storage and processing of large data sets, using HDFS for storage and MapReduce for processing. Spark is a complementary framework that offers in-memory processing, which makes it much faster than disk-based MapReduce for iterative machine learning tasks and real-time analytics. My experience with these technologies includes:

- Setting up clusters.

- Writing jobs in MapReduce.

- Optimizing Spark workloads in ETL and big data analysis.
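MapReduce itself is easiest to see in the classic word-count example. This single-process Python sketch imitates the map, shuffle, and reduce phases; in Hadoop the framework runs mappers and reducers on different nodes and performs the shuffle itself, and in Spark the same job is typically written with RDD operations such as `flatMap` and `reduceByKey`.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line: str) -> list[tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs) -> dict[str, list[int]]:
    """Shuffle: group intermediate values by key (the framework does this in Hadoop)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer: sum all counts for one word."""
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    intermediate = chain.from_iterable(map(map_phase, lines))
    return dict(reduce_phase(k, v) for k, v in shuffle(intermediate).items())


if __name__ == "__main__":
    print(word_count(["Spark and Hadoop", "Hadoop stores data", "Spark caches data"]))
    # {'spark': 2, 'and': 1, 'hadoop': 2, 'stores': 1, 'data': 2, 'caches': 1}
```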

20. What is the role of SSRS in business reporting?

Ans:

- SQL Server Reporting Services (SSRS) is a reporting tool that produces reports which can be exported as PDF or Excel files or viewed through a web browser.

- It produces detailed, interactive reports from structured data sources such as SQL databases, and it can be used for both ad-hoc and enterprise-level reporting.

- It is therefore a valuable tool for distributing information throughout an organization, and its report-scheduling capability makes it even more useful for decision-making.

21. What is a microservices architecture?

Ans:

A microservices architecture breaks a software application into smaller, independently deployable services, each responsible for a specific business function. This way, each service can be developed, tested, and deployed without interfering with the others. Implementation involves building the individual services, handling inter-service communication (usually through RESTful APIs), and managing the services with tools such as Docker for containerization and Kubernetes for orchestration.

22. Describe the critical differences between monolithic and microservices architectures.

Ans:

In a monolithic architecture, all components of an application share a single codebase and deployment, which makes it difficult to update or scale individual components without affecting the whole system. With microservices, an application is broken down into loosely coupled, independent services that are easier to scale and deploy. While monoliths may be easier to start developing, microservices provide higher fault tolerance, since errors in one service do not necessarily propagate to others.

23. How to achieve fault tolerance in distributed systems?

Ans:

Fault tolerance in a distributed system is achieved through redundancy, replication, and graceful degradation. Redundancy means running duplicate components, such as database replicas and multiple service instances, so the system can still function if one piece fails. Replicating data across nodes or regions keeps it available during hardware and network failures. Monitoring and proactive error-detection mechanisms also catch potential faults before they reach users.
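Retries with exponential backoff plus a fallback are a common way to combine redundancy with graceful degradation. This is a minimal Python sketch; which exceptions count as transient (here only `ConnectionError`), the delays, and the cached fallback are assumptions to adapt to the real system. The `sleep` parameter exists so the behaviour can be tested without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(operation: Callable[[], T], fallback: Callable[[], T],
                      attempts: int = 3, base_delay: float = 0.1,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry a flaky call with exponential backoff; degrade gracefully if every attempt fails."""
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts - 1:
                break
            # Exponential backoff with jitter: roughly base, 2*base, 4*base, ...
            sleep(base_delay * (2 ** attempt) * random.uniform(0.5, 1.0))
    return fallback()  # graceful degradation, e.g. cached or default data


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky() -> str:
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("replica unavailable")
        return "live data"

    print(call_with_retries(flaky, fallback=lambda: "cached data", sleep=lambda s: None))
    # live data (succeeds on the third attempt)
```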

24. What is the role of Docker and Kubernetes in software development?

Ans:

- Docker packages an application and its dependencies into containers, so its behaviour is consistent across environments, simplifying deployment and reducing conflicts between software versions.

- Kubernetes is an orchestration system that automatically manages and coordinates containers, scaling them up and down.

- It does so through load balancing, service discovery, and self-healing, ensuring the containers are always running.

- Together, Docker and Kubernetes speed up the development cycle and improve scalability, supporting microservices architectures.

25. How to ensure scalability in a cloud-based application?

Ans:

- Scalability in a cloud-based application is the ability of the system to handle increasing loads by adding resources.

- Cloud applications usually scale horizontally, adding more instances of a service rather than enlarging a single server.

- Load balancers distribute traffic evenly among these instances so that no single one becomes a bottleneck.

- Autoscaling, as offered by AWS or Azure, adjusts resources automatically according to traffic.

26. What are design patterns? Name a few commonly used ones.

Ans:

- Design patterns represent reusable solutions to common problems in software design.

- They give best practices on structuring code to solve recurring challenges in software development.

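- Design patterns help ensure good organization, maintainability, and flexibility by providing tested solutions to problems.

- Commonly used patterns include Singleton, Factory Method, Observer, Strategy, Adapter, and Decorator.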
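As an example of one commonly used pattern, here is a minimal Python sketch of the Observer pattern, in which a subject notifies registered observers of events without knowing what they do. The `EventBus` class and the event text are hypothetical.

```python
from typing import Callable


class EventBus:
    """Subject in the Observer pattern: notifies every subscriber when an event is published."""

    def __init__(self) -> None:
        self._subscribers: list[Callable[[str], None]] = []

    def subscribe(self, callback: Callable[[str], None]) -> None:
        self._subscribers.append(callback)

    def publish(self, message: str) -> None:
        for callback in self._subscribers:
            callback(message)


if __name__ == "__main__":
    bus = EventBus()
    audit_log: list[str] = []
    bus.subscribe(audit_log.append)                     # observer 1: record events
    bus.subscribe(lambda m: print(f"email sent: {m}"))  # observer 2: notify users
    bus.publish("order 42 shipped")
    print(audit_log)  # ['order 42 shipped']
```

New observers can be added without changing `EventBus`, which is why the pattern is often used for notifications and event handling.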

27. Describe the SOLID principles as applied in object-oriented design.

Ans:

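- Single Responsibility Principle (SRP): A class should have only one reason to change.

- Open/Closed Principle (OCP): Classes should be open for extension but closed for modification.

- Liskov Substitution Principle (LSP): Subtypes must be substitutable for their base types.

- Interface Segregation Principle (ISP): Clients must not be forced to depend on interfaces they do not use.

- Dependency Inversion Principle (DIP): High-level modules should not depend on low-level modules. Both should depend on abstractions.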
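Dependency inversion is often the least intuitive of the five principles, so here is a small Python sketch of it. `OrderService`, `Notifier`, and `EmailNotifier` are hypothetical names; the email class only records messages instead of sending them.

```python
from abc import ABC, abstractmethod


class Notifier(ABC):
    """Abstraction that the high-level code depends on."""

    @abstractmethod
    def send(self, recipient: str, text: str) -> None: ...


class EmailNotifier(Notifier):
    """Low-level detail; a real version would talk to an SMTP server."""

    def __init__(self) -> None:
        self.outbox: list[tuple[str, str]] = []

    def send(self, recipient: str, text: str) -> None:
        self.outbox.append((recipient, text))


class OrderService:
    """High-level policy: knows only the Notifier interface, not the email details."""

    def __init__(self, notifier: Notifier) -> None:
        self.notifier = notifier

    def place_order(self, customer: str, item: str) -> None:
        # Business logic for creating the order would go here.
        self.notifier.send(customer, f"Order confirmed: {item}")


if __name__ == "__main__":
    email = EmailNotifier()
    OrderService(email).place_order("alice@example.com", "laptop")
    print(email.outbox)  # [('alice@example.com', 'Order confirmed: laptop')]
```

Adding an SMS channel would mean writing another `Notifier` subclass without touching `OrderService`, which also illustrates the Open/Closed Principle.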

28. What does a service-oriented architecture (SOA) mean?

Ans:

Service-oriented architecture (SOA) is a design approach in which software components are structured as independent, reusable services that communicate over a network. Each service encapsulates a single business function and is loosely coupled, which provides flexibility, scalability, and straightforward integration of new services. SOA promotes interoperability, enabling services to work together even when they are written in different languages or run on different platforms.

29. How to manage software versioning and dependencies?

Ans:

Semantic versioning (MAJOR.MINOR.PATCH) is commonly used. The MAJOR version is incremented for changes that break backward compatibility, the MINOR version for new features that remain backward compatible, and the PATCH version for backward-compatible bug fixes. Dependency management means tracking the libraries and frameworks a project relies on, keeping them updated, and ensuring they remain compatible with each other.
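The semantic versioning rules can be expressed as a small Python sketch. It handles only plain `MAJOR.MINOR.PATCH` strings, and the compatibility check is a simplified caret-style rule (same MAJOR version, equal or newer overall) that ignores pre-release tags and the special treatment of 0.x versions; the function names are hypothetical.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        """Parse a plain MAJOR.MINOR.PATCH string (pre-release/build tags not handled)."""
        major, minor, patch = (int(part) for part in text.split("."))
        return cls(major, minor, patch)


def bump(v: Version, change: str) -> Version:
    """Increment the version according to the kind of change."""
    if change == "breaking":
        return Version(v.major + 1, 0, 0)
    if change == "feature":
        return Version(v.major, v.minor + 1, 0)
    if change == "fix":
        return Version(v.major, v.minor, v.patch + 1)
    raise ValueError(f"unknown change type: {change}")


def is_compatible(required: Version, candidate: Version) -> bool:
    """Caret-style rule: same MAJOR version and not older than the requirement."""
    return candidate.major == required.major and candidate >= required


if __name__ == "__main__":
    current = Version.parse("1.4.2")
    print(bump(current, "feature"))                        # Version(major=1, minor=5, patch=0)
    print(is_compatible(current, Version.parse("1.9.0")))  # True
    print(is_compatible(current, Version.parse("2.0.0")))  # False
```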

30. What are some of the most critical factors when designing RESTful APIs?

Ans:

Designing RESTful APIs relies on a few key principles. Each API call is stateless, meaning it contains all the information needed to process it, and the design is resource-based, so every resource, such as a user or an order, has a unique URL. Proper use of HTTP methods (POST, GET, PUT, DELETE) is vital for performing CRUD operations. API security, including authentication and authorization mechanisms such as OAuth 2.0, is crucial for protecting sensitive data.
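The mapping from HTTP methods to CRUD operations on resource URLs can be sketched without a web framework. The class below is a hypothetical in-memory `/users` resource that returns `(status code, body)` pairs and handles each request using only its method, path, and body, with no session state; a real API would sit behind an HTTP server and add authentication such as OAuth 2.0.

```python
from itertools import count
from typing import Optional


class UserResource:
    """Hypothetical in-memory /users resource mapping HTTP methods to CRUD operations."""

    def __init__(self) -> None:
        self._users: dict[int, dict] = {}
        self._ids = count(1)

    def handle(self, method: str, path: str,
               body: Optional[dict] = None) -> tuple[int, Optional[dict]]:
        parts = [p for p in path.split("/") if p]  # "/users/1" -> ["users", "1"]
        if not parts or parts[0] != "users" or len(parts) > 2:
            return 404, None
        if len(parts) == 1:                         # collection URL: /users
            if method == "POST":                    # Create
                new_id = next(self._ids)
                self._users[new_id] = {**(body or {}), "id": new_id}
                return 201, self._users[new_id]
            return 405, None
        if not parts[1].isdigit() or int(parts[1]) not in self._users:
            return 404, None
        user_id = int(parts[1])                     # item URL: /users/<id>
        if method == "GET":                         # Read
            return 200, self._users[user_id]
        if method == "PUT":                         # Update (full replacement)
            self._users[user_id] = {**(body or {}), "id": user_id}
            return 200, self._users[user_id]
        if method == "DELETE":                      # Delete
            del self._users[user_id]
            return 204, None
        return 405, None


if __name__ == "__main__":
    api = UserResource()
    print(api.handle("POST", "/users", {"name": "Alice"}))  # (201, {'name': 'Alice', 'id': 1})
    print(api.handle("GET", "/users/1"))                    # (200, {'name': 'Alice', 'id': 1})
    print(api.handle("DELETE", "/users/1"))                 # (204, None)
    print(api.handle("GET", "/users/1"))                    # (404, None)
```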

31. What are the basic building blocks of a good cyber security strategy?

Ans:

A robust cyber security strategy combines layered defences with both preventive and reactive measures. Important components include strong access controls, such as multifactor authentication and role-based access, so that only authorized users can reach restricted data. Security audits, vulnerability assessments, and penetration testing identify weaknesses that must be addressed promptly.

32. How to prevent and respond to a data breach?

Ans:

- Organizations use a multilayered defence approach to prevent data breaches, including firewalls, encryption, intrusion detection systems, and access controls.

- Employees must be trained to recognize phishing attacks and follow security best practices. Regularly updating and patching software eliminates known vulnerabilities.

- After a breach, acting quickly is crucial: first contain the damage, for example by disconnecting affected systems, and then investigate the cause.

33. What role does encryption play in data security?

Ans:

- Encryption converts readable data into ciphertext that is unintelligible without the correct key.

- This preserves confidentiality. Encryption applies to data at rest (stored) and in transit (transmitted over networks), and is especially important for sensitive information.

- Even if an unauthorized party accesses encrypted data, they cannot read it without the decryption key, which significantly reduces the impact of a breach.

34. What is the difference between symmetric and asymmetric encryption?

Ans:

- Symmetric encryption uses the same key for both encryption and decryption.

- Asymmetric encryption, by contrast, uses two keys: a public key to encrypt data and a private key to decrypt it.

- Data stays protected because only the public key is shared with others; the private key never leaves its owner.

- Asymmetric encryption is widely used to secure communication channels such as SSL/TLS.
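To show the public/private key split concretely, here is textbook RSA with the tiny primes often used in teaching examples, in Python. This is a toy under loud assumptions: the numbers are far too small, there is no padding, and real systems should use a vetted cryptography library rather than code like this.

```python
# Toy textbook RSA with tiny primes: for illustration only, NOT secure.
P, Q = 61, 53             # secret primes (real keys use primes hundreds of digits long)
N = P * Q                 # modulus, part of both keys (3233)
PHI = (P - 1) * (Q - 1)   # Euler's totient of N (3120)
E = 17                    # public exponent, coprime with PHI
D = pow(E, -1, PHI)       # private exponent: modular inverse of E (Python 3.8+)


def encrypt(message: int, public_key: tuple[int, int] = (E, N)) -> int:
    """Anyone holding the public key (E, N) can encrypt."""
    e, n = public_key
    return pow(message, e, n)


def decrypt(ciphertext: int, private_key: tuple[int, int] = (D, N)) -> int:
    """Only the holder of the private exponent D can decrypt."""
    d, n = private_key
    return pow(ciphertext, d, n)


if __name__ == "__main__":
    m = 65                # the message must be an integer smaller than N
    c = encrypt(m)
    print(c, decrypt(c))  # 2790 65
```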

35. How to achieve secure access to cloud resources?

Ans:

Secure access to cloud resources starts with IAM policies that enforce the principle of least privilege, so that no one has more access than is strictly necessary. Multifactor authentication adds another layer of security, making unauthorized access much harder. Role-based access control (RBAC) and encryption of data at rest and in transit further enhance security, and secure API gateways control access to cloud services.
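A least-privilege, default-deny access check that combines roles with an MFA requirement can be sketched in Python. The role names, users, and `service:action` permission strings are hypothetical, loosely modelled on how cloud IAM policies name actions; real providers evaluate much richer policy documents.

```python
# Hypothetical role definitions: each role lists only the actions it strictly needs.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "analyst": {"storage:read"},
    "developer": {"storage:read", "compute:deploy"},
    "admin": {"storage:read", "storage:write", "compute:deploy", "iam:manage"},
}

# Hypothetical user-to-role assignments.
USER_ROLES: dict[str, set[str]] = {
    "alice": {"analyst"},
    "bob": {"developer"},
}


def is_allowed(user: str, action: str, mfa_verified: bool) -> bool:
    """Default deny: allow only if MFA passed and at least one role grants the action."""
    if not mfa_verified:
        return False
    roles = USER_ROLES.get(user, set())  # unknown users get no roles
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


if __name__ == "__main__":
    print(is_allowed("alice", "storage:read", mfa_verified=True))   # True
    print(is_allowed("alice", "storage:write", mfa_verified=True))  # False
```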

36. What is MFA? Why is it significant?

Ans:

Multifactor authentication (MFA) is a security control that requires users to present two or more verification factors from different categories when accessing a system or resource. The categories are something the user knows (such as a password), something they have (such as a one-time code on a mobile device), and something they are (such as a fingerprint). This reduces the risk of unauthorized access even if a password has been compromised.
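A common "something you have" factor is a time-based one-time password (TOTP) from an authenticator app. Below is a minimal standard-library Python sketch of HOTP (RFC 4226) and TOTP (RFC 6238) with the usual defaults of SHA-1, 30-second steps, and 6 digits. The secret is the RFC test secret; real secrets must be random and stored securely, and the one-step tolerance in `verify` is an assumption to allow for clock drift.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if for_time is None else for_time
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, code: str, for_time: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step or up to `window` neighbouring steps."""
    now = time.time() if for_time is None else for_time
    counter = int(now // step)
    return any(
        hmac.compare_digest(hotp(secret, counter + drift, len(code)), code)
        for drift in range(-window, window + 1)
        if counter + drift >= 0
    )


if __name__ == "__main__":
    secret = b"12345678901234567890"     # RFC test secret; never hard-code real secrets
    print(totp(secret))                  # changes every 30 seconds
    print(verify(secret, totp(secret)))  # True
```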

37. How to validate the security of third-party software or vendors?

Ans:

Before engaging third-party software or vendors, perform security due diligence on them. This includes reviewing their security policies and their compliance with standards and regulations such as ISO 27001, SOC 2, and GDPR. It also means confirming that the vendor handles data safely, using encryption and access controls. Vulnerability assessments, penetration testing, and a review of past incidents or breaches complete the evaluation.

38. What is the importance of penetration testing?

Ans:

- A penetration test is a proactive approach to identifying security vulnerabilities before attackers can exploit them.

- It simulates real-world attacks on a system or network to help organizations understand how their defences will hold up under actual threat conditions.

- The results give valuable insights into weaknesses in infrastructure, applications, or policies and help organizations strengthen their security posture.

- Regular penetration testing keeps security measures effective and up to date with evolving threats.

39. Describe zero-trust security models.

Ans:

- A zero-trust security model is one based on the “never trust, always verify” principle, where no user or system inside or outside the network should be trusted automatically.

- All access requests must be authenticated, authorized, and continually validated based on user identity, device, location, and other risk factors.

- This approach reduces the potential for internal threats, lateral movement within the network, and unauthorized access.

40. How to implement security in a DevOps pipeline?

Ans:

Implementing security in a DevOps pipeline is generally known as DevSecOps, which integrates security practices into every stage of the software development lifecycle. Automated security testing becomes part of CI/CD pipelines, so vulnerabilities are caught much earlier in the development cycle. Code scanning tools identify known security flaws, and infrastructure-as-code templates are checked for misconfigurations.

41. What are the Core Modules of SAP S/4HANA?

Ans:

- Finance (FI): Financial accounting and reporting.

- Controlling (CO): Management accounting, including cost centre accounting.

- Materials Management (MM): Inventory management, procurement, and material planning.

- Sales and Distribution (SD): Order management, pricing, and billing.

- Production Planning (PP): Manufacturing processes and capacity planning.

- Plant Maintenance (PM): Equipment maintenance and inspection.

- Human Capital Management (HCM): Employee data and payroll.

42. How does SAP Fiori improve the user experience in SAP applications?

Ans:

SAP Fiori is a modern, user-friendly user interface that works across devices. It simplifies complex transactions by breaking them into role-based apps with a consistent design and responsive performance. Its user-centric design increases productivity, simplifies navigation, and drives higher user adoption than the classic SAP GUI. By prioritizing user experience, Fiori helps organizations improve operational efficiency and employee satisfaction.

43. What is SAP HANA, and how does it differ from traditional databases?

Ans:

- SAP HANA is an in-memory database: it keeps data in memory instead of on disk, so it processes data much faster than traditional disk-based databases.

- It handles analytical queries efficiently, resulting in faster data retrieval.

- It enables real-time processing and analysis of large data sets, which supports quicker decision-making.

44. How to approach an SAP system integration?

Ans:

- Understanding the business processes and system requirements.

- Identification of necessary integration points, tools, and APIs.

- Definition of data flows from one system to another with field mapping and transformation rules.

- Utilization of tools such as SAP PI/PO, CPI, or third-party integration platforms to connect SAP with external systems.

- Verification that the integrated systems work correctly through unit, integration, and user acceptance testing.

- Continued monitoring after integration to ensure the stability and performance of the systems.

45. What are the differences in core areas of SAP ECC versus SAP S/4HANA?

Ans:

ECC can run on a variety of databases, whereas S/4HANA runs only on SAP HANA. ECC primarily uses the SAP GUI, whereas S/4HANA uses SAP Fiori. S/4HANA simplifies business processes by eliminating redundancies, provides real-time analytics, and reduces a firm’s data footprint. Its in-memory architecture enables faster data processing and transaction execution. S/4HANA also introduces innovations such as embedded analytics, predictive capabilities, and better integration with cloud services.

46. Explain how SAP BTP aids in developing enterprise applications.

Ans:

SAP Business Technology Platform (BTP) is a single platform to develop, integrate, and extend applications. SAP BTP supports enterprise applications with offerings such as:

- Scalable application hosting.

- Integration of SAP and non-SAP systems through APIs and connectors.

- Processing, storage, and analysis of large volumes of data.

- Low-code and pro-code environments for building customized apps.

47. What is the ABAP language used for in SAP systems?

Ans:

ABAP (Advanced Business Application Programming) is SAP’s programming language. It is used for:

- Developing reports, transactions, and interfaces.

- Building and extending integrations between SAP modules and external systems.

- Creating programs that handle data processing, including batch jobs, workflows, and user exits.

Together, these tasks ensure seamless data flow, optimize business processes, and support informed decision-making across the organization.

48. How to ensure the success of data migration in an SAP implementation?

Ans:

- Early determination of the source and target systems, data formats, and mapping requirements.

- Cleansing the data of duplicates and errors so that the migrated data is correct.

- Running trial migrations and validation tests to confirm the data is accurate.

- Utilization of SAP-provided tools such as SAP Data Services, the Migration Cockpit, and LSMW.

49. How to integrate SAP with non-SAP systems?

Ans:

- Use middleware such as SAP PI/PO for communication across the system landscape, or SAP Cloud Platform Integration (CPI), a cloud-based integration tool for connecting cloud and on-premises systems.

- Utilize SAP APIs, such as OData and REST, to communicate with a third-party system.

- This capability ensures seamless data flow and interoperability across diverse platforms, enhancing overall system integration.

50. What is SAP BusinessObjects, and how is it used for reporting and analytics?

Ans:

SAP BusinessObjects is a suite of front-end applications for business intelligence (BI) reporting and analytics. With these applications, users can create, view, and analyze reports themselves through:

- Web Intelligence: This application is an ad-hoc query and reporting tool.

- Crystal Reports: Advanced report design.

- Dashboards: Interactive data visualization.

- SAP Lumira: Self-service data discovery.

SAP BusinessObjects can draw insights from both SAP and non-SAP systems.

51. How to design a continuous integration/continuous delivery (CI/CD) pipeline?

Ans:

Use Git for version control. Automatically trigger builds on each code commit using a CI tool such as Jenkins or Azure Pipelines. Run automated unit, integration, and security tests on every code change to deliver high-quality code. Automate the deployment of tested builds through staging and production environments. Set up monitoring to provide continuous feedback on performance and errors.

52. What are the benefits of DevOps in software development?

Ans:

Continuous integration and delivery enable faster releases. DevOps fosters collaboration between development, operations, and other teams. Automation removes manual processes, which reduces errors and accelerates time to market. DevOps practices also help ensure that systems are built to scale and to tolerate failure. By emphasizing automation, DevOps lets teams focus on innovation and improvement, leading to more robust and reliable software.

53. How to ensure high availability and scalability using DevOps practices?

Ans:

- Autoscaling: The resources scale based on demand automatically.

- Redundancy: Run more than one instance of each service in different locations so that failover is possible.

- Monitoring and alerting: Configure tools such as Prometheus or the ELK Stack to monitor systems and alert on issues across different domains.

54. What is the purpose of infrastructure as code (IaC) in modern IT operations?

Ans:

Infrastructure as code (IaC) means provisioning and managing IT infrastructure through code, using tools such as Terraform and AWS CloudFormation. Because the infrastructure is defined as code, it can be versioned, tracked, and audited, and it is provisioned consistently across environments. It also scales easily, since changes are defined in the code. This approach improves collaboration, reduces configuration drift, and increases operational efficiency by enabling rapid deployments and rollbacks.

55. How to incorporate automated testing into a CI/CD pipeline?

Ans:

Automated testing is typically layered into the pipeline:

- Unit tests verify that individual components function correctly.

- Integration tests validate how the various parts of the system interact.

- End-to-end tests validate the entire workflow to ensure that it behaves as expected.

- Security and performance tests automatically check for security holes and performance bottlenecks.

Tools such as Selenium, JUnit, or TestNG are integrated into CI systems such as Jenkins.

56. What is the difference between blue-green and canary deployments?

Ans:

- Blue-green deployment: There are two identical environments. The new version is deployed to the green environment while the blue environment serves production; once validation is complete, traffic is switched to green.

- Canary deployment: The new version is gradually rolled out to a subset of users, and its performance is monitored before it is rolled out to everyone. This approach minimizes risk and ensures that issues can be addressed before they affect the entire user base.
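A gradual rollout to a subset of users can be driven by stable user bucketing, so each user consistently sees the same version while the percentage grows. A minimal Python sketch follows; hashing user IDs into 100 buckets is one common approach and is assumed here, not taken from any particular deployment tool.

```python
import hashlib


def bucket(user_id: str) -> int:
    """Map a user to a stable bucket 0-99 so they see the same version on every request."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_version(user_id: str, canary_percent: int) -> str:
    """Route roughly canary_percent% of users to the new version, the rest to stable."""
    return "canary" if bucket(user_id) < canary_percent else "stable"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    for pct in (5, 25, 100):  # widen the rollout while monitoring stays healthy
        share = sum(choose_version(u, pct) == "canary" for u in users) / len(users)
        print(f"{pct}% target -> {share:.1%} of users on canary")
```

Because a user's bucket never changes, raising the percentage only adds users to the canary group, and setting it back to 0 routes everyone to the stable version again.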

57. What is container orchestration, and how does Kubernetes enable it?

Ans:

- Container orchestration refers to the automated management of containerized applications, including deployment, scaling, and networking.

- It helps manage complex microservices architectures by coordinating multiple containers across various hosts. It manages the lifecycle of containers, ensuring they run reliably and efficiently.

- Kubernetes is an open-source platform that facilitates container orchestration by providing tools for automating application container deployment, scaling, and operations.
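Kubernetes controllers work by repeatedly comparing the desired state (for example, a Deployment's replica count) with the actual state and acting to close the gap. The toy Python model below imitates one pass of that reconciliation loop; it illustrates the idea only, is not the Kubernetes API, and the container names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy model: the desired replica count vs. the containers actually running."""
    desired_replicas: int
    running: list[str] = field(default_factory=list)
    _next_id: int = field(default=1, init=False, repr=False)

    def reconcile(self) -> list[str]:
        """One pass of a control loop: start or stop containers until actual matches desired."""
        actions = []
        while len(self.running) < self.desired_replicas:
            name = f"web-{self._next_id}"
            self._next_id += 1
            self.running.append(name)
            actions.append(f"start {name}")
        while len(self.running) > self.desired_replicas:
            name = self.running.pop()  # stop the most recently started container
            actions.append(f"stop {name}")
        return actions


if __name__ == "__main__":
    cluster = Cluster(desired_replicas=3)
    print(cluster.reconcile())       # ['start web-1', 'start web-2', 'start web-3']
    cluster.running.remove("web-2")  # simulate a crashed container
    print(cluster.reconcile())       # ['start web-4'] (self-healing)
    cluster.desired_replicas = 2     # scale down
    print(cluster.reconcile())       # ['stop web-4']
```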

58. What is a method for handling rollback in case of a deployment failure?

Ans:

- Implementing blue-green deployments is a common method for handling rollback in case of a deployment failure.

- During a deployment, the new version of the application is deployed to the green environment while the blue environment continues to serve traffic.

- If the deployment in the green environment is successful, traffic is switched from blue to green. In case of any issues, traffic can quickly revert to the blue environment, ensuring minimal user disruption.

59. What is the users’ experience with monitoring and logging in DevOps?

Ans:

- Users’ experience with DevOps monitoring and logging typically involves real-time visibility into system performance and application behaviour.

- They benefit from tools like Prometheus and Grafana, which provide dashboards for tracking metrics and alerts for potential issues.

- Centralized logging solutions, such as the ELK Stack, allow users to analyze logs efficiently, facilitating faster troubleshooting.

- Effective monitoring helps users identify bottlenecks and optimize resource usage, enhancing system reliability.
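Centralized logging stacks such as ELK are easiest to search when applications emit structured logs (one JSON object per line) instead of free text. Below is a minimal sketch that uses only Python's standard `logging` module. The field names and the optional `request_id` context field are assumptions for illustration, not something ELK requires.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Optional context passed via extra={"request_id": ...} (an assumed field).
        request_id = getattr(record, "request_id", None)
        if request_id is not None:
            entry["request_id"] = request_id
        return json.dumps(entry)


def make_logger(name: str, stream=sys.stdout) -> logging.Logger:
    logger = logging.getLogger(name)
    logger.handlers.clear()              # avoid duplicate handlers on re-creation
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False             # don't also emit via the root logger
    return logger


if __name__ == "__main__":
    log = make_logger("checkout")
    log.info("order placed", extra={"request_id": "abc123"})
    log.warning("payment provider slow")
```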

60. How to ensure security in a DevOps environment?

Ans:

Security within DevOps is known as DevSecOps. It is ensured in the following ways:

- Integrating security in the early lifecycle phases.
- Including vulnerability scanning in CI/CD pipelines.
- Verifying IaC scripts for secure configurations.
- Securely managing credentials, tokens, and keys with tools such as HashiCorp Vault.

DevSecOps fosters a proactive culture that minimizes risks and strengthens the overall security posture of applications and infrastructure.
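One concrete check from the list above is a pipeline step that fails the build when a credential appears to be hardcoded in application code or IaC files. The credential can then be moved into a secrets manager such as Vault. The Python sketch below is a simplified, hypothetical scanner. The three patterns are illustrative assumptions: the `AKIA` prefix matches the documented shape of AWS access key IDs, and `AKIAIOSFODNN7EXAMPLE` is AWS's own documentation example. Real teams typically use a dedicated, maintained secret-scanning tool.

```python
import re

# Illustrative patterns only; dedicated secret scanners ship larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*[:=]\s*['\"][^'\"]+['\"]"),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for each line that looks like it holds a secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


def scan_files(paths: list[str]) -> int:
    """Print findings and return an exit code: non-zero should fail the pipeline stage."""
    failed = False
    for path in paths:
        with open(path, encoding="utf-8", errors="ignore") as fh:
            for lineno, rule in find_secrets(fh.read()):
                print(f"{path}:{lineno}: possible {rule}")
                failed = True
    return 1 if failed else 0


if __name__ == "__main__":
    sample = 'region = "us-east-1"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
    print(find_secrets(sample))
```

A CI job could call `scan_files` on the changed files and fail the stage whenever it returns a non-zero value.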

61. What is robotic process automation (RPA), and how does it benefit organizations?

Ans:

Robotic process automation (RPA) is technology that enables software robots to automate repetitive tasks traditionally performed by humans. Organizations benefit from improved efficiency, reduced operating costs, and fewer errors. Employees can then focus on more strategic activities instead of tedious tasks. RPA can be scaled across departments with relative ease, which allows rapid deployment to various business processes.

62. How to implement a business process management (BPM) solution?

Ans:

Implementing a business process management (BPM) solution normally begins with understanding the existing processes by identifying and documenting their workflows. Next, collaborate with stakeholders to capture their insights and requirements before selecting the BPM tool the organization needs. The remaining steps include:

- Designing optimized processes.
- Configuring the BPM software.
- Integrating it with other systems.
- Monitoring the results to drive continuous improvement and successful adoption.

63. What role does process mining play in digital transformation?

Ans:

- Process mining is an integral part of digital transformation. It analyzes event data recorded by IT systems to discover, monitor, and improve real processes.

- It provides insights into how a process is actually executed, pinpointing bottlenecks, inefficiencies, and deviations from the intended workflow.

- This makes continuous improvement efforts easier and helps businesses respond to market changes while keeping processes aligned with strategic goals.
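Many process-mining tools begin by building a directly-follows graph from an event log. The graph records, for each case, which activity immediately follows which, and how often. The Python sketch below assumes a minimal log of `(case_id, timestamp, activity)` tuples. The function name and the order-handling sample data are invented for illustration.

```python
from collections import Counter, defaultdict


def directly_follows(event_log):
    """Count how often activity A is immediately followed by activity B within a case.

    event_log: iterable of (case_id, timestamp, activity) tuples, an assumed
    minimal schema for events exported from an IT system.
    """
    traces = defaultdict(list)
    for case_id, _ts, activity in sorted(event_log, key=lambda e: (e[0], e[1])):
        traces[case_id].append(activity)
    edges = Counter()
    for activities in traces.values():
        for a, b in zip(activities, activities[1:]):
            edges[(a, b)] += 1
    return edges


if __name__ == "__main__":
    # Invented sample log: order-2 loops back for a second credit check.
    log = [
        ("order-1", 1, "Receive order"), ("order-1", 2, "Check credit"), ("order-1", 3, "Ship"),
        ("order-2", 1, "Receive order"), ("order-2", 2, "Check credit"),
        ("order-2", 3, "Manual review"), ("order-2", 4, "Check credit"), ("order-2", 5, "Ship"),
    ]
    for (a, b), count in directly_follows(log).most_common():
        print(f"{a} -> {b}: {count}")
```

In the sample, one case loops through `Check credit -> Manual review -> Check credit`. This is the kind of rework or deviation that process mining brings to the surface.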

64. Compare process automation with workflow automation.

Ans:

- Process automation uses technology to automate a specific business process. Workflow automation, by contrast, orchestrates the multiple tasks and stakeholders involved in a workflow.

- Process automation primarily works with individual tasks, such as data entry or report generation, to improve efficiency and accuracy.

- By contrast, workflow automation controls the flow of tasks so that information moves fluidly between different stages and participants to increase collaboration and visibility of the overall process.

65. How to handle exception cases in an automated business process?

Ans:

- Handling exception cases in an automated business process requires rules and protocols, defined at the outset, that specify what happens when deviations occur.

- These may include routing an exception to a responsible person for review or diverting it to an alternative process.

- Documenting exception scenarios and their outcomes also reveals ways to improve the automated process further.

- Periodically analyzing and updating the exception handling keeps the automation robust and effective.
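The pattern above can be sketched for a hypothetical invoice-processing bot: rules are defined up front, exceptions are routed to a person, and outcomes are recorded. The field names, the 10,000 approval limit, and the `RunResult` structure are assumptions for illustration. The key design choice is that unexpected data is caught and routed for review instead of crashing the whole run.

```python
from dataclasses import dataclass, field


@dataclass
class RunResult:
    processed: list = field(default_factory=list)
    review_queue: list = field(default_factory=list)   # handed to a responsible person
    exception_log: list = field(default_factory=list)  # kept for later analysis


def exception_reason(invoice: dict, approval_limit: float):
    """Business rules defined up front; returns None when the invoice is a normal case."""
    if not invoice.get("po_number"):
        return "missing purchase order"
    if float(invoice["amount"]) > approval_limit:
        return "amount above approval limit"
    return None


def process_invoices(invoices, approval_limit: float = 10_000) -> RunResult:
    result = RunResult()
    for inv in invoices:
        try:
            reason = exception_reason(inv, approval_limit)
        except (KeyError, TypeError, ValueError) as exc:
            reason = f"unexpected data: {exc!r}"   # unforeseen case; don't crash the run
        if reason is None:
            result.processed.append(inv)
        else:
            result.review_queue.append(inv)
            result.exception_log.append((inv.get("id"), reason))
    return result


if __name__ == "__main__":
    batch = [
        {"id": 1, "po_number": "PO-9", "amount": 500},
        {"id": 2, "po_number": "", "amount": 50},
        {"id": 3, "po_number": "PO-7", "amount": 25_000},
        {"id": 4, "po_number": "PO-8"},
    ]
    run = process_invoices(batch)
    print("processed:", [i["id"] for i in run.processed])
    print("exceptions:", run.exception_log)
```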

66. What are some common tools in BPM and RPA?

Ans:

Common BPM products include Bizagi, Appian, and Pega, which make modelling, automating, and optimizing business processes easier. Popular RPA tools include UiPath, Automation Anywhere, and Blue Prism, which help organizations automate routine tasks across applications. Integration platforms such as Zapier or Microsoft Power Automate complement BPM and RPA efforts by connecting disparate systems and workflows.

67. How to implement RPA with the existing IT structure?

Ans:

Implementing RPA within an existing IT infrastructure starts with evaluating that infrastructure to determine whether it is compatible with the RPA tools. This also requires a good understanding of APIs, databases, and application interfaces. RPA bots are configured to communicate with legacy systems through screen scraping or API integration. A phased approach helps contain risks, starting with pilot projects before a large-scale implementation.

68. What are good measures for evaluating the success of an automation project?

Ans:

Key metrics for measuring the success of an automation project include cost savings, time savings, improved accuracy, and return on investment (ROI). One can also track the number of processes automated and the resulting reduction in employee effort. Finally, comparing process completion times and error rates before and after automation provides good data for gauging the overall success of the effort.
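Once savings and costs are estimated, ROI and payback period are simple arithmetic. The Python sketch below uses a common definition: ROI = (savings - cost) / cost over an evaluation period. Every input figure in the example is hypothetical.

```python
def automation_roi(hours_saved_per_month: float, hourly_cost: float, months: int,
                   implementation_cost: float, monthly_run_cost: float = 0.0) -> float:
    """ROI = (savings - cost) / cost over the evaluation period."""
    savings = hours_saved_per_month * hourly_cost * months
    total_cost = implementation_cost + monthly_run_cost * months
    return (savings - total_cost) / total_cost


def payback_months(hours_saved_per_month: float, hourly_cost: float,
                   implementation_cost: float, monthly_run_cost: float = 0.0) -> float:
    """Months until cumulative net savings cover the up-front cost."""
    net_monthly = hours_saved_per_month * hourly_cost - monthly_run_cost
    return implementation_cost / net_monthly if net_monthly > 0 else float("inf")


if __name__ == "__main__":
    # Hypothetical figures: 200 hours/month saved at $40/hour over 12 months,
    # $50,000 to build and $1,000/month for licences and support.
    roi = automation_roi(200, 40, 12, 50_000, 1_000)
    print(f"ROI over 12 months: {roi:.1%}")
    print(f"Payback period: {payback_months(200, 40, 50_000, 1_000):.1f} months")
```

With these invented inputs, savings are 200 × 40 × 12 = $96,000 against $50,000 + 12 × $1,000 = $62,000 of cost. That gives an ROI of about 54.8% and a payback period of about 7.1 months.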

69. How does one ensure adherence to business rules in automated processes?

Ans:

- Ensuring adherence to business rules in automated processes means building those rules and standards into the automation as compliance checks.

- Regular auditing and monitoring help discover any noncompliance issues as early as possible.

- Working closely with legal and compliance teams ensures that all relevant regulations are understood and built into the automation design.

- Further, training employees on the automated processes creates a culture of accountability and awareness.
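One way to make business rules auditable is to keep them as an explicit, named list and have the automation check every record against it. This also makes the rules easy to review with legal and compliance teams. The rules, field names, and thresholds below are invented for illustration.

```python
from typing import Callable

# Each rule pairs a readable name with a predicate that is True when the record complies.
Rule = tuple[str, Callable[[dict], bool]]

RULES: list[Rule] = [
    ("amount must be positive", lambda r: r["amount"] > 0),
    ("customer ID is required", lambda r: bool(r.get("customer_id"))),
    ("refunds over 1000 need manager approval",
     lambda r: r["type"] != "refund" or r["amount"] <= 1000 or bool(r.get("approved_by"))),
]


def check_compliance(record: dict, rules: list[Rule] = RULES) -> list[str]:
    """Return the names of the rules a record violates; an empty list means compliant."""
    return [name for name, complies in rules if not complies(record)]


if __name__ == "__main__":
    refund = {"type": "refund", "amount": 1500, "customer_id": "C-17"}
    print(check_compliance(refund))
    refund["approved_by"] = "M-04"
    print(check_compliance(refund))
```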

70. What is AI’s contribution to business process automation?

Ans:

- AI extends business process automation beyond pure task automation by adding intelligent decision-making capabilities.

- It can process vast amounts of data, learn from patterns, and make decisions in real time, making processes more efficient and effective.

- It enables predictive analytics, allowing businesses to anticipate needs and plan workflows ahead of time.

- AI also enables natural language processing, which improves the quality of customer interactions through chatbots and virtual assistants.

71. What is the fundamental difference between Agile and Waterfall methodologies?

Ans:

- The main difference between Agile and Waterfall is how projects are structured and managed.

- Waterfall is a linear, sequential model in which each phase must be completed before the next one begins.

- Agile is a fundamentally iterative and incremental approach that promotes flexibility.

- Agile teams foster collaboration and constantly review their project goals, changing as needed based on feedback and evolving requirements.

72. Describe the Scrum framework and the different roles involved.

Ans:

Scrum is an Agile framework in which a team delivers work in short, fixed-length iterations called sprints. It defines three roles:

- The Product Owner is responsible for establishing project requirements and prioritizing the product backlog.
- The Scrum Master facilitates the Scrum process and helps remove obstacles.
- The Development Team is responsible for delivering a product increment.

Scrum ceremonies such as daily stand-ups, sprint planning, and retrospectives encourage continuous communication and improvement among team members.

73. How to manage project scope changes in an Agile environment?

Ans:

Managing project scope changes in an Agile environment is about staying flexible and accommodating changing requirements. The team regularly reviews the product backlog, where new requests are added and prioritized alongside existing work. During sprint planning, the team analyzes how a change affects current commitments and adjusts scope accordingly. Continuous communication with stakeholders helps manage expectations and keeps everyone informed of changes.

74. What are common project management challenges?

Ans:

Scope creep, resource constraints, and communication breakdowns are the most common project management challenges. Scope creep can be controlled by defining clear project objectives and implementing a formal change control process. Resource constraints can be handled by prioritizing tasks appropriately and reallocating resources accordingly. Information flow can be improved through regular status meetings and collaboration tools.

75. How to measure success in any consulting engagement?

Ans:

- Success in a consulting engagement is measured through both quantitative and qualitative metrics.

- Quantitative indicators include whether the project was completed on time and within budget, client satisfaction scores, and whether predefined objectives were met.

- Qualitative measures include stakeholder feedback about the project and an assessment of how well the outcomes match the goals initially set.

76. What does risk management do during project planning?

Ans:

- During project planning, risk management identifies potential risks and develops strategies to minimize their impact on the project’s outcome.

- This involves a comprehensive risk assessment that analyzes the internal and external factors that might affect the project’s execution.

- Risks can be prioritized according to their likelihood and potential impact, allowing teams to focus resources on the highest-priority risks.

- Monitoring and reviewing risks at every stage of the project ensures that emerging risks are dealt with proactively, keeping the project stable and successful.
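Prioritizing by likelihood and impact is often done with a simple probability-impact score. The sketch below assumes 1-5 scales for both and a hypothetical threshold of 15 for "high" risks. The risk register entries are invented.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 = rare ... 5 = almost certain
    impact: int      # 1 = negligible ... 5 = severe

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks: list[Risk], high_threshold: int = 15) -> list[tuple[str, int, str]]:
    """Sort risks by score, highest first, and tag each as 'high' or 'monitor'."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score, "high" if r.score >= high_threshold else "monitor") for r in ranked]


if __name__ == "__main__":
    register = [
        Risk("Key staff turnover", likelihood=2, impact=3),
        Risk("Vendor delivers late", likelihood=4, impact=4),
        Risk("Data migration errors", likelihood=3, impact=5),
    ]
    for name, score, level in prioritize(register):
        print(f"{score:>2}  {level:<7}  {name}")
```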

77. What to do if the project is running behind schedule?

Ans:

- Managing a project that is behind schedule starts with a structured analysis to identify the causes of the delay.

- Next, a recovery plan is designed, which may involve reallocating resources, reprioritizing work, or renegotiating deadlines.

- Open communication with stakeholders about the situation brings transparency and sets realistic expectations.

- In addition, applying time management techniques and focusing on the critical path can help get the project back on track.
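The critical path is the longest chain of dependent tasks. Any delay on it delays the whole project, so recovery effort is best focused there. The Python sketch below computes the critical path with a forward pass over tasks in dependency order, using the standard library's `graphlib` (Python 3.9+). The task names and durations are hypothetical. The sketch does not compute latest start times or slack for every task.

```python
from graphlib import TopologicalSorter


def critical_path(tasks: dict[str, tuple[int, list[str]]]) -> tuple[int, list[str]]:
    """Return (project length, critical path) for tasks given as {name: (duration, predecessors)}."""
    graph = {name: deps for name, (_, deps) in tasks.items()}
    finish = {}   # earliest finish time of each task
    driver = {}   # predecessor that determines each task's start (None if none)
    for name in TopologicalSorter(graph).static_order():
        duration, deps = tasks[name]
        # A task can start once its latest-finishing predecessor is done.
        latest = max(deps, key=finish.__getitem__) if deps else None
        start = finish[latest] if latest is not None else 0
        finish[name] = start + duration
        driver[name] = latest
    end = max(finish, key=finish.__getitem__)
    path = [end]
    while driver[path[-1]] is not None:
        path.append(driver[path[-1]])
    return finish[end], path[::-1]


if __name__ == "__main__":
    # Hypothetical plan: durations in days, with each task's predecessors.
    plan = {
        "design": (5, []),
        "build_api": (10, ["design"]),
        "build_ui": (7, ["design"]),
        "integrate": (3, ["build_api", "build_ui"]),
        "test": (4, ["integrate"]),
    }
    length, path = critical_path(plan)
    print(length, " -> ".join(path))
```

In this plan, build_ui finishes on day 12, but integration cannot start before day 15, so build_ui has three days of slack. Adding resources there would not shorten the project, while speeding up any task on the critical path would.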

78. Why is stakeholder management significant in project delivery?

Ans:

During project delivery, stakeholder management is essential so that the interests and expectations of all parties involved are understood and addressed. Involving stakeholders throughout the project life cycle builds collaboration and buy-in, which reduces resistance to change. Good stakeholder management also enables earlier identification of risks and keeps project goals aligned with organizational goals, leading to better project results.

79. How does project management balance time, cost, and quality?

Ans:

Balancing time, cost, and quality in project management, also known as the triple constraint, entails making informed trade-offs based on project priorities. Clear project goals and stakeholder expectations can define which aspects are most critical. Project management tools and techniques, such as Gantt charts or critical path analysis, can help track progress effectively. Regular communication with stakeholders is essential to ensure alignment and make adjustments as necessary throughout the project lifecycle.

80. Explain how to approach resource allocation in a large-scale project.

Ans:

Resource allocation in a large project starts in the planning phase with an estimate of the project’s needs, timelines, and deliverables. A detailed resource plan then identifies the skills, experience, and availability required of the team. Tasks are prioritized based on importance and dependencies, so that critical activities receive resources first. Project management tools visualize resource usage and highlight bottlenecks.

81. What is the role of AI and machine learning in modern business solutions?

Ans:

- AI and machine learning transform modern business solutions by automating processes, improving decision-making, and providing personalized customer experiences.

- They enable organizations to analyze huge amounts of data quickly, uncovering patterns and insights that drive key strategic initiatives.

- Businesses apply AI to forecasting, fraud detection, and operational optimization. Over time, machine learning models improve as they are retrained on new data, continuously refining predictions and strategies.

82. Explain how blockchain technology supports enterprise applications.

Ans:

- Blockchain technology improves enterprise applications through secure, transparent, and tamper-resistant data transactions.

- Because it is decentralized, all participants in a network have access to the same information, which reduces discrepancies and promotes trust.

- Smart contracts automate processes by executing transactions only when predefined conditions are met, streamlining operations.

- Blockchain provides traceability within supply chains, thus enhancing accountability and compliance.
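Blockchain's tamper resistance comes largely from hash chaining. Each block stores the hash of the previous block, so editing an earlier record breaks every link after it. The Python sketch below shows only that mechanism on a single machine. It deliberately leaves out consensus, peer-to-peer replication, and smart contracts, and the shipment records are invented.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64


def block_hash(index: int, data: dict, prev_hash: str) -> str:
    payload = json.dumps({"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list[dict], data: dict) -> None:
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    chain.append({"index": index, "data": dict(data), "prev_hash": prev_hash,
                  "hash": block_hash(index, data, prev_hash)})


def is_valid(chain: list[dict]) -> bool:
    """Recompute every hash; any edit to an earlier block breaks the links after it."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i else GENESIS_PREV
        if block["prev_hash"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
    return True


if __name__ == "__main__":
    ledger: list[dict] = []
    append_block(ledger, {"shipment": "S-100", "status": "left warehouse"})
    append_block(ledger, {"shipment": "S-100", "status": "customs cleared"})
    print(is_valid(ledger))
    ledger[0]["data"]["status"] = "delivered"   # tamper with history
    print(is_valid(ledger))
```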

83. What does the Internet of Things (IoT) mean for the supply chain?

Ans:

- IoT transforms supply chain management by providing real-time information on operations through connected devices and sensors.

- This enables capabilities such as monitoring inventory levels, tracking shipments, and maintaining equipment proactively.

- Analyzing IoT device data helps optimize logistics, reduce costs, and improve customer satisfaction, while predictive maintenance reduces downtime.

84. How to implement a machine learning model in a cloud environment?

Ans:

Implementing a machine learning model in a cloud environment starts with preparing and exploring the data, using cloud-based tools for storage and processing. Next, choose a managed machine learning service, such as AWS SageMaker or Google AI Platform, and train the model on the prepared data. Once trained, the model can be deployed as a cloud-hosted service for real-time prediction. Cloud infrastructure provides both scalability and flexibility for variable workloads.

85. What are some ethical considerations in AI and data analytics?

Ans:

Ethical issues in AI and data analytics center on bias, privacy, and accountability. Algorithms can inherit biases present in their training data, which leads to unfair outcomes. Protecting user data and complying with regulations such as GDPR remain top concerns for building trust. Transparency in how algorithmic decisions are made reinforces accountability and helps users understand their implications.
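Bias can be checked concretely by comparing outcomes across groups. The Python sketch below computes per-group approval rates and the ratio of the lowest rate to the highest. The 0.8 cut-off follows the "four-fifths" rule of thumb from US employment-selection guidance. The data is invented. This ratio is only a screening heuristic and one of several fairness metrics; passing it does not prove a model is fair.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns the approval rate per group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(bool(ok))
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions) -> float:
    """Lowest group approval rate divided by the highest (1.0 = equal rates)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Invented model outputs for two applicant groups.
    outcomes = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
    print(selection_rates(outcomes))
    ratio = disparate_impact_ratio(outcomes)
    print(f"ratio = {ratio:.3f}", "-> investigate" if ratio < 0.8 else "-> ok")
```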

86. What is Deloitte doing with digital twins in industry solutions?

Ans:

Deloitte applies digital twins to build virtual models of physical assets, processes, or systems, enabling real-time monitoring and analysis. This technology lets businesses model scenarios, improve operations, and predict outcomes, giving decisions a stronger basis. By using digital twins, organizations can gain deeper insights that drive further performance improvements.

87. How does 5G technology transform business?

Ans:

- 5G technology drives business transformation through much faster data transfer, significantly lower latency, and far greater connectivity.

- This enhanced network capability supports far more IoT devices and allows their data to be processed and analyzed in real time.

- Manufacturing, healthcare, and logistics can leverage 5G for applications such as remote monitoring, autonomous vehicles, and smart factories.

88. How to approach an assessment of how emerging technologies may affect a business?

Ans:

- A business assesses the potential impact of emerging technologies by examining industry trends and its own specific needs.

- Identifying concrete use cases helps evaluate each technology against strategic objectives.

- Piloting new technologies in controlled environments will enable an organization to study them for their effectiveness and return on investment before widespread deployment.

89. What are some potential uses for quantum computing in enterprise IT?

Ans:

Quantum computing promises to transform enterprise IT by tackling problems that are intractable for classical computers. Potential applications include supply chain logistics optimization and resource allocation, where traditional methods may prove inefficient. Quantum algorithms may also enable more powerful machine learning models, and quantum cryptographic techniques could strengthen data protection. Financial institutions could also use quantum computing to advance risk analysis and fraud detection.

90. How to ensure new technologies properly integrate with legacy systems?

Ans:

Through careful planning, assessment, and execution, one can ensure the successful integration of new technologies into existing legacy systems. This is done by carefully analyzing existing systems and identifying the integration points. There should then be a clear strategy for migration involving incremental upgrades and phased rollouts to minimize disruption. Compatibility can be ensured through APIs or middleware solutions, which allow exchanging data between systems.
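A common way to put an API or middleware layer in front of a legacy system is the adapter pattern. New code depends on a clean interface, and only the adapter knows the legacy format. The Python sketch below assumes a hypothetical legacy system that returns fixed-width text records. The class names and record layout are invented.

```python
from dataclasses import dataclass


class LegacyCustomerSystem:
    """Stand-in for a legacy system that returns fixed-width text records."""

    def fetch(self, customer_id: str) -> str:
        # Hypothetical layout: 8-char ID, 20-char name, 3-char country code.
        return f"{customer_id:<8}{'Ada Lovelace':<20}GBR"


@dataclass
class Customer:
    id: str
    name: str
    country: str


class CustomerAdapter:
    """Integration layer: new code depends on this typed interface, not on the legacy format."""

    def __init__(self, legacy: LegacyCustomerSystem) -> None:
        self._legacy = legacy

    def get_customer(self, customer_id: str) -> Customer:
        raw = self._legacy.fetch(customer_id)
        return Customer(id=raw[0:8].strip(), name=raw[8:28].strip(), country=raw[28:31])


if __name__ == "__main__":
    adapter = CustomerAdapter(LegacyCustomerSystem())
    print(adapter.get_customer("C42"))
```

If the legacy system is later replaced, only `CustomerAdapter` has to change. This supports the phased, incremental migration described above.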