What is serverless computing?

- Last year I was talking to a company intern about different architectural patterns and mentioned serverless architecture. He was quick to note that all applications require a server, and cannot run on thin air. The intern had a point, even if he was missing mine. Serverless computing is not a magical platform for running applications.

- In fact, serverless computing simply means that you, the developer, do not have to deal with the server. A serverless computing platform like AWS Lambda allows you to build your code and deploy it without ever needing to configure or manage underlying servers. Your unit of deployment is your code; not the container that hosts the code, or the server that runs the code, but simply the code itself. From a productivity standpoint, there are obvious benefits to offloading the details of where code is stored and how the execution environment is managed. Serverless computing is also priced based on execution metrics, so there is a financial advantage, as well.

What does AWS Lambda cost?

At the time of this writing, AWS Lambda’s pricing is based on the number of executions and the execution duration:

- Your first million executions per month are free, then you pay $0.20 per million executions thereafter ($0.0000002 per request).

- Duration is computed from the time your code starts executing until it returns a result, rounded up to the nearest 100 ms. The amount charged depends on the amount of RAM allocated to the function, at $0.00001667 per GB-second.

Pricing details and free tier allocations are slightly more complicated than this overview implies. See the AWS Lambda pricing page to walk through a few pricing scenarios.
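To make the arithmetic concrete, here is a rough sketch of a monthly bill using only the two rates quoted above. It assumes that durations are rounded up to the next 100 ms increment and it ignores any free compute allowance, so treat the result as an upper-bound illustration rather than an official calculation. The class name, method names, and the sample workload are hypothetical.

```java
/** Rough monthly AWS Lambda bill using only the two rates quoted above. */
final class LambdaCostEstimator {
    static final long FREE_REQUESTS_PER_MONTH = 1_000_000L;
    static final double PRICE_PER_REQUEST = 0.0000002;   // $0.20 per million requests
    static final double PRICE_PER_GB_SECOND = 0.00001667;
    static final long BILLING_INCREMENT_MS = 100;        // assumption: durations round up

    /** Only requests beyond the first free million are charged. */
    static double requestCost(long requests) {
        long billable = Math.max(0L, requests - FREE_REQUESTS_PER_MONTH);
        return billable * PRICE_PER_REQUEST;
    }

    /** Rounds a duration up to the next 100 ms billing increment. */
    static long billedMillis(long durationMs) {
        long increments = (durationMs + BILLING_INCREMENT_MS - 1) / BILLING_INCREMENT_MS;
        return increments * BILLING_INCREMENT_MS;
    }

    /** GB-seconds consumed times the per-GB-second rate (no free compute allowance applied). */
    static double durationCost(long requests, long durationMs, int memoryMb) {
        double seconds = billedMillis(durationMs) / 1000.0;
        double gigabytes = memoryMb / 1024.0;
        return requests * seconds * gigabytes * PRICE_PER_GB_SECOND;
    }

    static double monthlyCost(long requests, long durationMs, int memoryMb) {
        return requestCost(requests) + durationCost(requests, durationMs, memoryMb);
    }

    public static void main(String[] args) {
        // Hypothetical workload: 3 million requests, 120 ms each, 512 MB of memory.
        System.out.printf("Estimated bill: $%.2f%n", monthlyCost(3_000_000L, 120, 512));
    }
}
```

For the hypothetical workload in `main`, the request charge is 2,000,000 × $0.0000002 = $0.40 and the duration charge is 300,000 GB-seconds × $0.00001667, or about $5.00, for a total of roughly $5.40.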

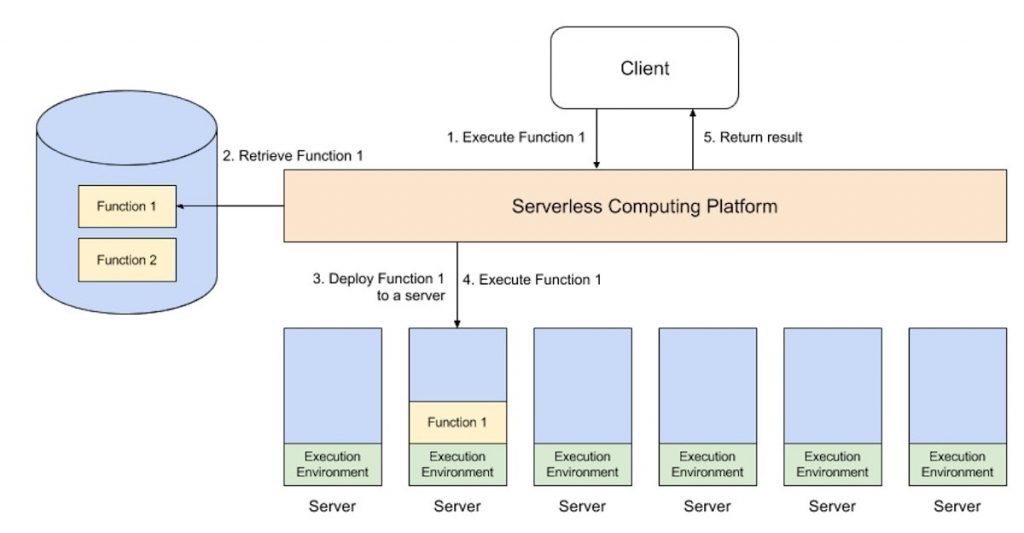

To get an idea for how serverless computing works, let’s start with the serverless computing execution model, which is illustrated in Figure 1.

Here’s the serverless execution model in a nutshell:

- A client makes a request to the serverless computing platform to execute a specific function.

- The serverless computing platform first checks to see if the function is running on any of its servers. If the function isn’t already running, then the platform loads the function from a data store.

- The platform then deploys the function to one of its servers, which are preconfigured with an execution environment that can run the function.

- It executes the function and captures the result.

- It returns the result back to the client.

Sometimes serverless computing is called Function as a Service (FaaS), because the granularity of the code that you build is a function. The platform executes your function on its own server and orchestrates the process between function requests and function responses.
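The execution model above can be mimicked in a few lines of plain Java. This is only a toy sketch of the idea, not how any real platform is built: the `ToyFaasPlatform` class, its two maps (one standing in for the data store, one for functions already loaded on a server), and the load counter are all hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Toy model of the request flow: look up, load if needed, execute, return. */
final class ToyFaasPlatform {
    // Stands in for the data store that holds uploaded function code.
    private final Map<String, Function<String, String>> dataStore = new HashMap<>();
    // Stands in for functions that are already loaded on one of the servers.
    private final Map<String, Function<String, String>> running = new HashMap<>();
    private int loads = 0;

    void upload(String name, Function<String, String> function) {
        dataStore.put(name, function);
    }

    String invoke(String name, String input) {
        Function<String, String> function = running.get(name);
        if (function == null) {
            // Not running yet: load it from the data store and deploy it.
            function = dataStore.get(name);
            if (function == null) {
                throw new IllegalArgumentException("Unknown function: " + name);
            }
            running.put(name, function);
            loads++;
        }
        // Execute the function and hand the result back to the caller.
        return function.apply(input);
    }

    /** Number of times a function had to be loaded from the data store. */
    int loadCount() {
        return loads;
    }
}
```

Invoking the same function twice loads it only once; the second call finds it already running, which is the distinction the platform checks for in the second step.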

Nanoservices, scalability, and price

Three things really matter about serverless computing: its nanoservice architecture, the fact that it’s practically infinitely scalable, and the pricing model associated with that near-infinite scalability. We’ll dig into each of those factors.

Nanoservices

- You’ve heard of microservices, and you probably know about 12-factor applications, but serverless functions take the paradigm of breaking a component down to its constituent parts to a whole new level. The term “nanoservices” is not an industry recognized term, but the idea is simple: each nanoservice should implement a single action or responsibility. For example, if you wanted to create a widget, the act of creation would be its own nanoservice; if you wanted to retrieve a widget, the act of retrieval would also be a nanoservice; and if you wanted to place an order for a widget, that order would be yet another nanoservice.

- A nanoservices architecture allows you to define your application at a very fine-grained level. Similar to test-driven development (which helps you avoid unwanted side-effects by writing your code at the level of individual tests), a nanoservices architecture encourages defining your application in terms of very fine-grained and specific functions. This approach increases clarity about what you’re building and reduces unwanted side-effects from new code.

Microservices vs nanoservices

- Microservices encourages us to break an application down into a collection of services that each accomplish a specific task. The challenge is that no one has really quantified the scope of a microservice. As a result, we end up defining microservices as a collection of related services, all interacting with the same data model. Conceptually, if you have low-level functionality interacting with a given data model, then the functionality should go into one of its related services. High-level interactions should make calls to the service rather than querying the database directly.

- There is an ongoing debate in serverless computing about whether to build Lambda functions at the level of microservices or nanoservices. The good news is that you can pretty easily build your functions at either granularity, but a microservices strategy will require a bit of extra routing logic in your request handler.

- From a design perspective, serverless applications should be very well-defined and clean. From a deployment perspective you will need to manage significantly more deployments, but you will also have the ability to deploy new versions of your functions individually, without impacting other functions. Serverless computing is especially well suited to development in large teams, where it can help make the development process easier and the code less error-prone.
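The difference in granularity discussed above can be sketched with the widget example. In the nanoservice style each action is its own function; in the microservice style one function handles several actions and needs a little routing logic. Everything here is hypothetical: the class names, the `action` strings, and the in-memory map that stands in for a real database.

```java
import java.util.Map;

/** Nanoservice style: one function, one responsibility. */
final class CreateWidgetFunction {
    static String handle(Map<String, String> store, String name) {
        store.put(name, name);
        return "created " + name;
    }
}

/** Nanoservice style: retrieval is a separate function. */
final class GetWidgetFunction {
    static String handle(Map<String, String> store, String name) {
        String widget = store.get(name);
        return widget != null ? widget : "not found";
    }
}

/** Microservice style: a single function that routes on the requested action. */
final class WidgetServiceFunction {
    static String handle(Map<String, String> store, String action, String name) {
        switch (action) {
            case "create":
                return CreateWidgetFunction.handle(store, name);
            case "get":
                return GetWidgetFunction.handle(store, name);
            default:
                throw new IllegalArgumentException("Unsupported action: " + action);
        }
    }
}
```

The `switch` statement is the "extra routing logic" a microservice-sized function carries; with nanoservices that routing moves out of your code and into the platform's configuration.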

Scalability

In addition to introducing a new architectural paradigm, serverless computing platforms provide practically infinite scalability. I say “practically” because there is no such thing as truly infinite scalability. For all practical purposes, however, serverless computing providers like Amazon can handle more load than you could possibly throw at them. If you were to manage scaling up your own servers (or cloud-based virtual machines) to meet increased demand, you would need to monitor usage, identify when to start more servers, and add more servers to your cluster at the right time. Likewise, when demand decreased you would need to manually scale down. With serverless computing, you tell your serverless computing platform the maximum number of simultaneous function requests you want to run and the platform does the scaling for you.

Pricing

Finally, the serverless computing pricing model allows you to scale your cloud bill based on usage. When you have light usage, your bill will be low (or nil if you stay in the free range). Of course, your bill will increase with usage, but hopefully you will also have new revenue to support your higher cloud bill. For contrast, if you were to manage your own servers, you would have to pay a base cost to run the minimum number of servers required. As usage increased, you would scale up in increments of entire servers, rather than increments of individual function calls. The serverless computing pricing model is directly proportional to your usage.
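The contrast between the two billing shapes can be shown with a small calculation. This is an illustrative sketch only: the per-server capacity, minimum fleet size, and prices are made-up parameters, not real quotes.

```java
/** Compares step-wise server billing with per-invocation billing. */
final class BillingComparison {
    /** Self-managed servers are paid for whole, and never drop below the minimum fleet. */
    static double serverBill(long requests, long requestsPerServer, int minServers, double costPerServer) {
        long needed = (requests + requestsPerServer - 1) / requestsPerServer; // ceiling division
        return Math.max(minServers, needed) * costPerServer;
    }

    /** Per-invocation pricing grows in direct proportion to usage. */
    static double perRequestBill(long requests, double pricePerRequest) {
        return requests * pricePerRequest;
    }
}
```

With a hypothetical minimum of two servers at $50 each, zero traffic still costs $100, and one request beyond the capacity of two servers adds a whole third server, whereas the per-request bill starts at zero and rises one invocation at a time.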

AWS Lambda for serverless computing

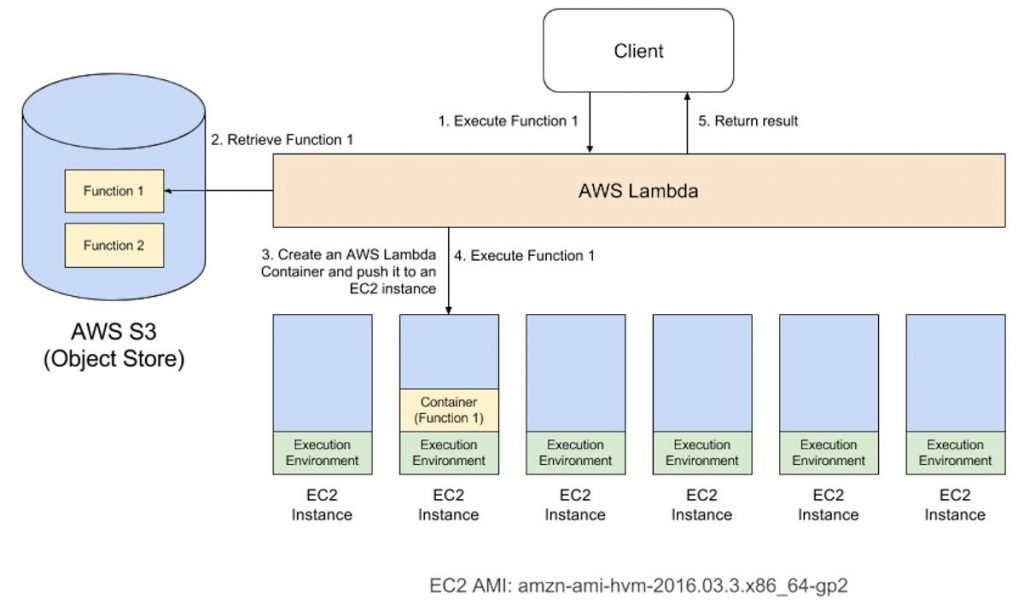

AWS Lambda is a serverless computing platform implemented on top of Amazon Web Services platforms like EC2 and S3. AWS Lambda encrypts and stores your code in S3. When a function is requested to run, it creates a “container” using your runtime specifications, deploys it to one of the EC2 instances in its compute farm, and executes that function. The process is shown in Figure 2.

- When you create a Lambda function, you configure it in AWS Lambda, specifying things like the runtime environment (we’ll use Java 8 for this article), how much memory to allocate to it, identity and access management roles, and the method to execute. AWS Lambda uses your configuration to set up a container and deploy the container to an EC2 instance. It then executes the method that you’ve specified, which is identified by its package, class, and method name.

- At the time of this writing, you can build Lambda functions in Node, Java, Python, and most recently, C#. For the purposes of this article we will use Java.

What is a Lambda function?

When you write code designed to run in AWS Lambda, you are writing functions. The term functions comes from functional programming, which originated in lambda calculus. The basic idea is to compose an application as a collection of functions, which are methods that accept arguments, compute a result, and have no unwanted side-effects. Functional programming takes a mathematical approach to writing code that can be proven to be correct. While it’s good to keep functional programming in mind when you are writing code for AWS Lambda, all you really need to understand is that the function is a single-method entry-point that accepts an input object and returns an output object.

Serverless execution modes

- While Lambda functions can run synchronously, as described above, they can also run asynchronously and in response to events. For example, you could configure a Lambda to run whenever a file was uploaded to an S3 bucket. This configuration is sometimes used for image or video processing: when a new image is uploaded to an S3 bucket, a Lambda function is invoked with a reference to the image to process it.

- I worked with a very large company that leveraged this solution for photographers covering a marathon. The photographers were on the course taking photographs. Once their memory cards were full, they loaded the images onto a laptop and uploaded the files to S3. As images were uploaded, Lambda functions were executed to resize, watermark, and add a reference for each image to its runner in the database.

- All of this would take a lot of work to accomplish manually, but in this case the work not only processed faster because of AWS Lambda’s horizontal scalability, but also seamlessly scaled up and back down, thus optimizing the company’s cloud bill.

- In addition to responding to files uploaded to S3, lambdas can be triggered by other sources, such as records being inserted into a DynamoDB database and analytic information streaming from Amazon Kinesis. We’ll look at an example featuring DynamoDB in Part 2.
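The marathon scenario above can be sketched as an event-driven function. This is a simplified illustration, not the company's actual code: the `ImageUploadedEvent` and `ProcessedImage` classes, the `bib-<number>/<file>` key convention, and the `thumbnails/` prefix are all assumptions, and the real resizing, watermarking, and database write are left out.

```java
/** Hypothetical event describing a newly uploaded object. */
final class ImageUploadedEvent {
    final String bucket;
    final String key;

    ImageUploadedEvent(String bucket, String key) {
        this.bucket = bucket;
        this.key = key;
    }
}

/** Hypothetical result: where the processed image went and which runner it belongs to. */
final class ProcessedImage {
    final String runnerBib;
    final String thumbnailKey;

    ProcessedImage(String runnerBib, String thumbnailKey) {
        this.runnerBib = runnerBib;
        this.thumbnailKey = thumbnailKey;
    }
}

final class ImageUploadFunction {
    /** Invoked once per uploaded image; assumes keys look like "bib-1234/IMG_0001.jpg". */
    static ProcessedImage handle(ImageUploadedEvent event) {
        String key = event.key;
        int slash = key.indexOf('/');
        if (!key.startsWith("bib-") || slash < 0) {
            throw new IllegalArgumentException("Unexpected key format: " + key);
        }
        String bib = key.substring("bib-".length(), slash);
        // A real function would resize and watermark the image here,
        // then record the reference against the runner in the database.
        return new ProcessedImage(bib, "thumbnails/" + key);
    }
}
```

Because each invocation handles exactly one upload and shares nothing with its siblings, the platform is free to run as many copies in parallel as there are incoming images.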

AWS Lambda functions in Java

Now that you know a little bit about serverless computing and AWS Lambda, I’ll walk you through building an AWS Lambda function in Java.

Get the code

Source code for the example application for this tutorial, “Serverless computing with AWS Lambda.” Created by Steven Haines for JavaWorld.

Implementing Lambda functions

You can write a Lambda function in one of two ways:

- The function can receive an input stream from the client and write to an output stream back to the client.

- The function can use a predefined interface, in which case AWS Lambda will automatically deserialize the input stream to an object, pass it to your function, and serialize your function’s response before returning it to the client.

The easiest way to implement an AWS Lambda function is to use a predefined interface. For Java, you first need to include the following AWS Lambda core library in your project (note that this example uses Maven):

    <dependency>
        <groupId>com.amazonaws</groupId>
        <artifactId>aws-lambda-java-core</artifactId>
        <version>1.1.0</version>
    </dependency>

Next, have your class implement the following interface:

Listing 1. RequestHandler.java

    public interface RequestHandler<I, O> {
        /**
         * Handles a Lambda function request
         * @param input The Lambda function input
         * @param context The Lambda execution environment context object.
         * @return The Lambda function output
         */
        public O handleRequest(I input, Context context);
    }

The RequestHandler interface defines a single method: handleRequest(), which is passed an input object and a Context object, and returns an output object. For example, if you were to define a Request class and a Response class, you could implement your lambda as follows:

    public class MyHandler implements RequestHandler<Request, Response> {
        public Response handleRequest(Request request, Context context) {
            …
        }
    }
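As a more concrete illustration, here is a small sketch of a handler with its own request and response classes. So that it compiles without the AWS library on the classpath, it declares local stand-ins for `RequestHandler` and `Context` that mirror the shape shown in Listing 1; in a real project you would import both from `com.amazonaws.services.lambda.runtime` instead. The `GreetingRequest`, `GreetingResponse`, and `GreetingHandler` names are hypothetical.

```java
// Stand-ins so the sketch is self-contained; the real types come from aws-lambda-java-core.
interface Context {
    int getRemainingTimeInMillis();
}

interface RequestHandler<I, O> {
    O handleRequest(I input, Context context);
}

/** Plain bean: a no-argument constructor plus getters and setters for deserialization. */
class GreetingRequest {
    private String name;

    public String getName() { return name; }
    public void setName(String name) { this.name = name; }
}

class GreetingResponse {
    private String message;

    public String getMessage() { return message; }
    public void setMessage(String message) { this.message = message; }
}

class GreetingHandler implements RequestHandler<GreetingRequest, GreetingResponse> {
    @Override
    public GreetingResponse handleRequest(GreetingRequest request, Context context) {
        // The context is unused here; it is still part of the method signature.
        GreetingResponse response = new GreetingResponse();
        response.setMessage("Hello, " + request.getName() + "!");
        return response;
    }
}
```

The handler contains only business logic: converting the incoming payload into a `GreetingRequest` and the returned `GreetingResponse` back into a payload is the platform's job when you use the predefined interface.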

Alternatively, if you wanted to bypass the predefined interface, you could manually handle the InputStream and OutputStream yourself, by implementing a method with the following signature:

    public void handleRequest(InputStream inputStream, OutputStream outputStream, Context context)
            throws IOException {
        …
    }
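A minimal sketch of what the body of such a stream-based method might do is shown here: it reads the request line by line, transforms it, and writes the result to the output stream. The class name and the uppercase transformation are hypothetical, and the `Context` parameter is left out so that the sketch compiles without the AWS library.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.io.OutputStreamWriter;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

final class UppercaseStreamFunction {
    /** Reads UTF-8 text from the input, writes it back in upper case, one line at a time. */
    static void handleRequest(InputStream inputStream, OutputStream outputStream) throws IOException {
        BufferedReader reader =
                new BufferedReader(new InputStreamReader(inputStream, StandardCharsets.UTF_8));
        Writer writer = new OutputStreamWriter(outputStream, StandardCharsets.UTF_8);
        String line;
        while ((line = reader.readLine()) != null) {
            writer.write(line.toUpperCase(Locale.ROOT));
            writer.write('\n');
        }
        // Flush so that buffered characters actually reach the caller.
        writer.flush();
    }
}
```

With this approach you are responsible for parsing and serializing the payload yourself, which is the trade-off for bypassing the predefined interface.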

The Context object provides information about your function and the environment in which it is running, such as the function name, its memory limit, its logger, and the amount of time remaining, in milliseconds, that the function has to complete before AWS Lambda kills it.
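One common use of the remaining-time value is to stop work cleanly before the platform terminates the function. The sketch below takes an `IntSupplier` in place of the real `Context` so that it runs without the AWS library; in an actual handler you could pass `context::getRemainingTimeInMillis`. The class name, the 500 ms safety margin, and the placeholder "work" are assumptions.

```java
import java.util.List;
import java.util.function.IntSupplier;

final class TimeBudgetedWorker {
    // Hypothetical margin: stop once less than this much time is left.
    static final int SAFETY_MARGIN_MS = 500;

    /** Processes items until the list is exhausted or time runs short; returns the count processed. */
    static int processWhileTimeRemains(List<String> items, IntSupplier remainingMillis) {
        int processed = 0;
        for (String item : items) {
            if (remainingMillis.getAsInt() < SAFETY_MARGIN_MS) {
                break; // leave the rest for a later invocation
            }
            // Real work on `item` would happen here.
            processed++;
        }
        return processed;
    }
}
```

Returning the number of processed items lets the caller decide how to resume, for example by invoking the function again with the remainder of the list.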

A couple of examples

UI-driven applications

- Let’s think about a traditional three-tier client-oriented system with server-side logic. A good example is a typical ecommerce app—dare I say an online pet store?

- Traditionally, the architecture will look something like the diagram below. Let’s say it’s implemented in Java or JavaScript on the server side, with an HTML + JavaScript component as the client:

- With this architecture the client can be relatively unintelligent, with much of the logic in the system—authentication, page navigation, searching, transactions—implemented by the server application.

- With a Serverless architecture this may end up looking more like this:

This is a massively simplified view, but even here we see a number of significant changes:

- We’ve deleted the authentication logic in the original application and have replaced it with a third-party BaaS service (e.g., Auth0.)

- Using another example of BaaS, we’ve allowed the client direct access to a subset of our database (for product listings), which itself is fully hosted by a third party (e.g., Google Firebase.) We likely have a different security profile for the client accessing the database in this way than for server resources that access the database.

- These previous two points imply a very important third: some logic that was in the Pet Store server is now within the client—e.g., keeping track of a user session, understanding the UX structure of the application, reading from a database and translating that into a usable view, etc. The client is well on its way to becoming a Single Page Application.

- We may want to keep some UX-related functionality in the server, if, for example, it’s compute intensive or requires access to significant amounts of data. In our pet store, an example is “search.” Instead of having an always-running server, as existed in the original architecture, we can instead implement a FaaS function that responds to HTTP requests via an API gateway (described later). Both the client and the server “search” function read from the same database for product data.

- If we choose to use AWS Lambda as our FaaS platform we can port the search code from the original Pet Store server to the new Pet Store Search function without a complete rewrite, since Lambda supports Java and JavaScript, our original implementation languages.

- Finally, we may replace our “purchase” functionality with another separate FaaS function, choosing to keep it on the server side for security reasons, rather than reimplement it in the client. It too is fronted by an API gateway. Breaking up different logical requirements into separately deployed components is a very common approach when using FaaS.

- Stepping back a little, this example demonstrates another very important point about Serverless architectures. In the original version, all flow, control, and security was managed by the central server application. In the Serverless version there is no central arbiter of these concerns. Instead we see a preference for choreography over orchestration, with each component playing a more architecturally aware role—an idea also common in a microservices approach.

- There are many benefits to such an approach. As Sam Newman notes in his Building Microservices book, systems built this way are often “more flexible and amenable to change,” both as a whole and through independent updates to components; there is better division of concerns; and there are also some fascinating cost benefits, a point that Gojko Adzic discusses in this excellent talk.

- Of course, such a design is a trade-off: it requires better distributed monitoring (more on this later), and we rely more significantly on the security capabilities of the underlying platform. More fundamentally, there are a greater number of moving pieces to get our heads around than there are with the monolithic application we had originally. Whether the benefits of flexibility and cost are worth the added complexity of multiple backend components is very context dependent.

Message-driven applications

A different example is a backend data-processing service.

- Say you’re writing a user-centric application that needs to quickly respond to UI requests, and, secondarily, it needs to capture all the different types of user activity that are occurring, for subsequent processing. Think about an online advertisement system: when a user clicks on an ad you want to very quickly redirect them to the target of that ad. At the same time, you need to collect the fact that the click has happened so that you can charge the advertiser. (This example is not hypothetical—my former team at Intent Media had exactly this need, which they implemented in a Serverless way.)

- Traditionally, the architecture may look as below. The “Ad Server” synchronously responds to the user (not shown) and also posts a “click message” to a channel. This message is then asynchronously processed by a “click processor” application that updates a database, e.g., to decrement the advertiser’s budget.

In the Serverless world this looks as follows:

- Can you see the difference? The change in architecture is much smaller here compared to our first example—this is why asynchronous message processing is a very popular use case for Serverless technologies. We’ve replaced a long-lived message-consumer application with a FaaS function. This function runs within the event-driven context the vendor provides. Note that the cloud platform vendor supplies both the message broker and the FaaS environment—the two systems are closely tied to each other.

- The FaaS environment may also process several messages in parallel by instantiating multiple copies of the function code. Depending on how we wrote the original process this may be a new concept we need to consider.
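To illustrate why parallel copies matter for how the click processor is written, here is a small simulation. Threads stand in for concurrently running copies of the function, and an `AtomicLong` stands in for the advertiser's budget in a database that supports atomic decrements; both are assumptions made for the sake of a runnable sketch, as are the class and method names.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

final class ParallelClickProcessor {
    private final AtomicLong budgetCents;

    ParallelClickProcessor(long initialBudgetCents) {
        this.budgetCents = new AtomicLong(initialBudgetCents);
    }

    /** One function invocation: charge the advertiser for a single click. */
    void handleClick(long costCents) {
        budgetCents.addAndGet(-costCents); // atomic, so concurrent copies cannot lose updates
    }

    long remainingBudgetCents() {
        return budgetCents.get();
    }

    /** Runs the given number of clicks across several parallel copies; returns the remaining budget. */
    static long simulate(int clicks, int copies, long costCents) {
        ParallelClickProcessor processor = new ParallelClickProcessor(clicks * costCents);
        ExecutorService pool = Executors.newFixedThreadPool(copies);
        for (int i = 0; i < clicks; i++) {
            pool.submit(() -> processor.handleClick(costCents));
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("click processing did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for clicks", e);
        }
        return processor.remainingBudgetCents();
    }
}
```

If the budget were updated with a plain read-modify-write instead of an atomic operation, parallel copies could overwrite each other's changes, which is exactly the kind of issue a single long-lived consumer never had to consider.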

Unpacking “Function as a Service”

- We’ve mentioned FaaS a lot already, but it’s time to dig into what it really means. To do this let’s look at the opening description for Amazon’s FaaS product: Lambda. I’ve added some tokens to it, which I’ll expand on.

- AWS Lambda lets you run code without provisioning or managing servers. (1) With Lambda, you can run code for virtually any type of application or backend service (2) - all with zero administration. Just upload your code and Lambda takes care of everything required to run (3) and scale (4) your code with high availability. You can set up your code to automatically trigger from other AWS services (5) or call it directly from any web or mobile app.

- Fundamentally, FaaS is about running backend code without managing your own server systems or your own long-lived server applications. That second clause—long-lived server applications—is a key difference when comparing with other modern architectural trends like containers and PaaS (Platform as a Service).

- If we go back to our click-processing example from earlier, FaaS replaces the click-processing server (possibly a physical machine, but definitely a specific application) with something that doesn’t need a provisioned server, nor an application that is running all the time.

- FaaS offerings do not require coding to a specific framework or library. FaaS functions are regular applications when it comes to language and environment. For instance, AWS Lambda functions can be implemented “first class” in JavaScript, Python, Go, any JVM language (Java, Clojure, Scala, etc.), or any .NET language. However, your Lambda function can also execute another process that is bundled with its deployment artifact, so you can actually use any language that can compile down to a Unix process (see Apex, later in this article).

- FaaS functions have significant architectural restrictions though, especially when it comes to state and execution duration. We’ll get to that soon.

Let’s consider our click-processing example again. The only code that needs to change when moving to FaaS is the “main method” (startup) code, in that it is deleted, and likely the specific code that is the top-level message handler (the “message listener interface” implementation), but this might only be a change in method signature. The rest of the code (e.g., the code that writes to the database) is no different in a FaaS world.

- Deployment is very different from traditional systems since we have no server applications to run ourselves. In a FaaS environment we upload the code for our function to the FaaS provider, and the provider does everything else necessary for provisioning resources, instantiating VMs, managing processes, etc.

- Horizontal scaling is completely automatic, elastic, and managed by the provider. If your system needs to be processing 100 requests in parallel the provider will handle that without any extra configuration on your part. The “compute containers” executing your functions are ephemeral, with the FaaS provider creating and destroying them purely driven by runtime need. Most importantly, with FaaS the vendor handles all underlying resource provisioning and allocation; no cluster or VM management is required by the user at all.

- Let’s return to our click processor. Say that we were having a good day and customers were clicking on ten times as many ads as usual. For the traditional architecture, would our click-processing application be able to handle this? For example, did we develop our application to be able to handle multiple messages at a time? If we did, would one running instance of the application be enough to process the load? If we are able to run multiple processes, is autoscaling automatic or do we need to reconfigure that manually? With a FaaS approach all of these questions are already answered: you need to write the function ahead of time to assume horizontal-scaled parallelism, but from that point on the FaaS provider automatically handles all scaling needs.

- Functions in FaaS are typically triggered by event types defined by the provider. With Amazon AWS such stimuli include S3 (file/object) updates, time (scheduled tasks), and messages added to a message bus (e.g., Kinesis).

- Most providers also allow functions to be triggered as a response to inbound HTTP requests; in AWS one typically enables this by way of using an API gateway. We used an API gateway in our Pet Store example for our “search” and “purchase” functions. Functions can also be invoked directly via a platform-provided API, either externally or from within the same cloud environment, but this is a comparatively uncommon use.

State

- FaaS functions have significant restrictions when it comes to local (machine/instance-bound) state—i.e., data that you store in variables in memory, or data that you write to local disk. You do have such storage available, but you have no guarantee that such state is persisted across multiple invocations, and, more strongly, you should not assume that state from one invocation of a function will be available to another invocation of the same function. FaaS functions are therefore often described as stateless, but it’s more accurate to say that any state of a FaaS function that is required to be persistent needs to be externalized outside of the FaaS function instance.

- For FaaS functions that are naturally stateless—i.e., those that provide a purely functional transformation of their input to their output—this is of no concern. But for others this can have a large impact on application architecture, albeit not a unique one—the “Twelve-Factor app” concept has precisely the same restriction. Such state-oriented functions will typically make use of a database, a cross-application cache (like Redis), or network file/object store (like S3) to store state across requests, or to provide further input necessary to handle a request.
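The difference between instance-bound state and externalized state can be sketched as follows. Each `VisitCounterFunction` object stands in for one function instance (one container), and the `CounterStore` interface with its in-memory implementation stands in for an external service such as a database or cache; all of these names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/** Stand-in for an external store shared by every function instance. */
interface CounterStore {
    long increment(String key);
}

final class InMemoryCounterStore implements CounterStore {
    private final Map<String, Long> counts = new HashMap<>();

    @Override
    public synchronized long increment(String key) {
        return counts.merge(key, 1L, Long::sum);
    }
}

/** One object models one function instance. */
final class VisitCounterFunction {
    private long localCount = 0; // instance-bound: lost when the instance is retired
    private final CounterStore store;

    VisitCounterFunction(CounterStore store) {
        this.store = store;
    }

    /** Unreliable: every instance keeps its own, independent count. */
    long handleWithLocalState() {
        return ++localCount;
    }

    /** Reliable: the count lives outside the instance. */
    long handleWithExternalState() {
        return store.increment("visits");
    }
}
```

Two instances that each handle one request both report a local count of 1, while the externalized counter correctly reaches 2, which is why persistent state has to live outside the function instance.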

Execution duration

- FaaS functions are typically limited in how long each invocation is allowed to run. At present the “timeout” for an AWS Lambda function to respond to an event is at most five minutes, before being terminated. Microsoft Azure and Google Cloud Functions have similar limits.

- This means that certain classes of long-lived tasks are not suited to FaaS functions without re-architecture—you may need to create several different coordinated FaaS functions, whereas in a traditional environment you may have one long-duration task performing both coordination and execution.

Startup latency and “cold starts”

- It takes some time for a FaaS platform to initialize an instance of a function before each event. This startup latency can vary significantly, even for one specific function, depending on a large number of factors, and may range anywhere from a few milliseconds to several seconds. That sounds bad, but let’s get a little more specific, using AWS Lambda as an example.

- Initialization of a Lambda function will either be a “warm start”—reusing an instance of a Lambda function and its host container from a previous event—or a “cold start” —creating a new container instance, starting the function host process, etc. Unsurprisingly, when considering startup latency, it’s these cold starts that bring the most concern.

- Cold-start latency depends on many variables: the language you use, how many libraries you’re using, how much code you have, the configuration of the Lambda function environment itself, whether you need to connect to VPC resources, etc. Many of these aspects are under a developer’s control, so it’s often possible to reduce the startup latency incurred as part of a cold start.

- Equally as variable as cold-start duration is cold-start frequency. For instance, if a function is processing 10 events per second, with each event taking 50 ms to process, you’ll likely only see a cold start with Lambda every 100,000–200,000 events or so. If, on the other hand, you process an event once per hour, you’ll likely see a cold start for every event, since Amazon retires inactive Lambda instances after a few minutes. Knowing this will help you understand whether cold starts will impact you on aggregate, and whether you might want to perform “keep alives” of your function instances to avoid them being put out to pasture.

- Are cold starts a concern? It depends on the style and traffic shape of your application. My former team at Intent Media has an asynchronous message-processing Lambda app implemented in Java (typically the language with the slowest startup time) which processes hundreds of millions of messages per day, and they have no concerns with startup latency for this component. That said, if you were writing a low-latency trading application you probably wouldn’t want to use cloud-hosted FaaS systems at this time, no matter the language you were using for implementation.

- Whether or not you think your app may have problems like this, you should test performance with production-like load. If your use case doesn’t work now you may want to try again in a few months, since this is a major area of continual improvement by FaaS vendors.

- For much more detail on cold starts, please see my article on the subject.
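The cold-start frequency figures quoted above can be put into perspective with some back-of-the-envelope arithmetic. This sketch uses only the numbers from the text plus one assumption: an idle timeout of 300 seconds as a stand-in for "a few minutes". The class and method names are hypothetical, and the model is deliberately simplistic.

```java
final class ColdStartEstimate {
    /** Average number of concurrent executions: arrival rate times duration. */
    static double averageConcurrency(double eventsPerSecond, double durationMs) {
        return eventsPerSecond * durationMs / 1000.0;
    }

    /** How many hours of steady traffic separate two cold starts. */
    static double hoursBetweenColdStarts(long eventsBetweenColdStarts, double eventsPerSecond) {
        return eventsBetweenColdStarts / eventsPerSecond / 3600.0;
    }

    /** If events arrive further apart than the idle timeout, every event finds a retired instance. */
    static boolean everyEventIsCold(double secondsBetweenEvents, double idleTimeoutSeconds) {
        return secondsBetweenEvents > idleTimeoutSeconds;
    }
}
```

At 10 events per second and 50 ms per event the average concurrency is 0.5, so a single warm instance can usually keep up, and a cold start every 100,000 to 200,000 events works out to roughly one every 2.8 to 5.6 hours. At one event per hour, the gap between events far exceeds the assumed timeout, so every event is a cold start.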

API gateways

- One aspect of Serverless that we brushed upon earlier is an “API gateway.” An API gateway is an HTTP server where routes and endpoints are defined in configuration, and each route is associated with a resource to handle that route. In a Serverless architecture such handlers are often FaaS functions.

- When an API gateway receives a request, it finds the routing configuration matching the request, and, in the case of a FaaS-backed route, will call the relevant FaaS function with a representation of the original request. Typically the API gateway will allow mapping from HTTP request parameters to a more concise input for the FaaS function, or will allow the entire HTTP request to be passed through, typically as a JSON object. The FaaS function will execute its logic and return a result to the API gateway, which in turn will transform this result into an HTTP response that it passes back to the original caller.

- Amazon Web Services have their own API gateway (slightly confusingly named “API Gateway”), and other vendors offer similar abilities. Amazon’s API Gateway is a BaaS (yes, BaaS!) service in its own right in that it’s an external service that you configure, but do not need to run or provision yourself.

- Beyond purely routing requests, API gateways may also perform authentication, input validation, response code mapping, and more. (If your spidey senses are tingling as you consider whether this is actually such a good idea, hold that thought! We’ll consider this further later.)

- One use case for an API gateway with FaaS functions is creating HTTP-fronted microservices in a Serverless way with all the scaling, management, and other benefits that come from FaaS functions.

- When I first wrote this article, the tooling for Amazon’s API Gateway, at least, was achingly immature. Such tools have improved significantly since then. Components like AWS API Gateway are not quite “mainstream,” but hopefully they’re a little less painful than they once were, and will only continue to improve.
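To make the routing behavior of an API gateway described earlier in this section more tangible, here is a toy sketch in plain Java. It is not how Amazon’s API Gateway is configured or implemented: the `ToyApiGateway` class, the route keys, and the `"status body"` response strings are hypothetical simplifications.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Toy gateway: routes are configuration, handlers are functions. */
final class ToyApiGateway {
    private final Map<String, Function<Map<String, String>, String>> routes = new HashMap<>();

    /** Associates an HTTP method and path with the function that handles it. */
    void route(String method, String path, Function<Map<String, String>, String> function) {
        routes.put(method + " " + path, function);
    }

    /** Maps the request to a concise input, calls the function, and wraps the result as a response. */
    String handle(String method, String path, Map<String, String> queryParameters) {
        Function<Map<String, String>, String> function = routes.get(method + " " + path);
        if (function == null) {
            return "404 Not Found";
        }
        return "200 " + function.apply(queryParameters);
    }
}
```

Registering a hypothetical search function under `GET /search` and calling `handle("GET", "/search", ...)` would invoke that function with the query parameters, while any unregistered route yields the 404 response without touching a function at all.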

Tooling

- The comment above about maturity of tooling also applies to Serverless FaaS in general. In 2016 things were pretty rough; by 2018 we’ve seen a marked improvement, and we expect tools to get better still.

- A couple of notable examples of good “developer UX” in the FaaS world are worth calling out. First of all is Auth0 Webtask which places significant priority on developer UX in its tooling. Second is Microsoft, with their Azure Functions product. Microsoft has always put Visual Studio, with its tight feedback loops, at the forefront of its developer products, and Azure Functions is no exception. The ability it offers to debug functions locally, given an input from a cloud-triggered event, is quite special.

- An area that still needs significant improvement is monitoring. I discuss that later on.

Open source

- So far I’ve mostly discussed proprietary vendor products and tools. The majority of Serverless applications make use of such services, but there are open-source projects in this world, too.

- The most common uses of open source in Serverless are for FaaS tools and frameworks, especially the popular Serverless Framework, which aims to make working with AWS API Gateway and Lambda easier than using the tools provided by AWS. It also provides an amount of cross-vendor tooling abstraction, which some users find valuable. Examples of similar tools include Claudia and Zappa. Another example is Apex, which is particularly interesting since it allows you to develop Lambda functions in languages other than those directly supported by Amazon.

- The big vendors themselves aren’t getting left behind in the open-source tool party though. AWS’s own deployment tool, SAM—the Serverless Application Model—is also open source.

- One of the main benefits of proprietary FaaS is not having to be concerned about the underlying compute infrastructure (machines, VMs, even containers). But what if you want to be concerned about such things? Perhaps you have some security needs that can’t be satisfied by a cloud vendor, or maybe you have a few racks of servers that you’ve already bought and don’t want to throw away. Can open source help in these scenarios, allowing you to run your own “Serverful” FaaS platform?

- Yes, and there’s been a good amount of activity in this area. Two of the initial leaders in open-source FaaS were IBM (with OpenWhisk, now an Apache project) and, surprisingly to me at least, Microsoft, which open sourced much of its Azure Functions platform. Many other self-hosted FaaS implementations make use of an underlying container platform, frequently Kubernetes, which makes a lot of sense for many reasons. In this arena it’s worth exploring projects like Galactic Fog, Fission, and OpenFaaS. This is a large, fast-moving world, and I recommend looking at the work that the Cloud Native Computing Foundation (CNCF) Serverless Working Group has done to track it.

What isn’t Serverless?

- So far in this article I’ve described Serverless as being the union of two ideas: Backend as a Service and Functions as a Service. I’ve also dug into the capabilities of the latter. For more precision about what I see as the key attributes of a Serverless service (and why I consider even older services like S3 to be Serverless), I refer you to another article of mine: Defining Serverless.

- Before we start looking at the very important area of benefits and drawbacks, I’d like to spend one more quick moment on definition. Let’s define what Serverless isn’t.

Comparison with PaaS

- Given that Serverless FaaS functions are very similar to Twelve-Factor applications, are they just another form of “Platform as a Service” (PaaS) like Heroku? For a brief answer I refer to Adrian Cockcroft

- In other words, most PaaS applications are not geared towards bringing entire applications up and down in response to an event, whereas FaaS platforms do exactly this.

- If I’m being a good Twelve-Factor app developer, this doesn’t necessarily impact how I program and architect my applications, but it does make a big difference in how I operate them. Since we’re all good DevOps-savvy engineers, we’re thinking about operations as much as we’re thinking about development, right?

- The key operational difference between FaaS and PaaS is scaling. Generally with a PaaS you still need to think about how to scale—for example, with Heroku, how many Dynos do you want to run? With a FaaS application this is completely transparent. Even if you set up your PaaS application to auto-scale you won’t be doing this to the level of individual requests (unless you have a very specifically shaped traffic profile), so a FaaS application is much more efficient when it comes to costs.

- Given this benefit, why would you still use a PaaS? There are several reasons, but tooling is probably the biggest. Also some people use PaaS platforms like Cloud Foundry to provide a common development experience across a hybrid public and private cloud; at time of writing there isn’t a FaaS equivalent as mature as this.
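
To make the cost argument concrete, here is a rough sketch comparing an always-on deployment with per-request billing. The per-request and GB-second rates are the AWS Lambda figures quoted earlier in this article. The hourly instance price, memory size, request duration, and the 730-hour month are hypothetical values chosen only for illustration, and the free tier is ignored.

```python
# Rates quoted earlier in this article for AWS Lambda (they may change over time).
PRICE_PER_REQUEST = 0.0000002      # USD per request
PRICE_PER_GB_SECOND = 0.00001667   # USD per GB-second

HOURS_PER_MONTH = 730  # assumed average month length


def faas_monthly_cost(requests, seconds_per_request, memory_gb):
    """Per-request billing: cost grows linearly with traffic (free tier ignored)."""
    compute = requests * seconds_per_request * memory_gb * PRICE_PER_GB_SECOND
    return requests * PRICE_PER_REQUEST + compute


def always_on_monthly_cost(instances, hourly_price):
    """Always-on billing: cost is fixed whether or not any requests arrive."""
    return instances * hourly_price * HOURS_PER_MONTH


def break_even_requests(instances, hourly_price, seconds_per_request, memory_gb):
    """Monthly request count at which both models cost the same."""
    cost_per_request = (
        PRICE_PER_REQUEST + seconds_per_request * memory_gb * PRICE_PER_GB_SECOND
    )
    return always_on_monthly_cost(instances, hourly_price) / cost_per_request
```

With one hypothetical instance at $0.05 per hour and requests that run for 0.2 seconds at 0.5 GB, `break_even_requests(1, 0.05, 0.2, 0.5)` comes out at roughly 19.5 million requests per month. Below that volume the per-request model is cheaper under these assumptions; above it, the always-on instance wins, provided a single instance can actually carry the load.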

Comparison with containers

- One of the reasons to use Serverless FaaS is to avoid having to manage application processes at the operating-system level. PaaS services, like Heroku, also provide this capability, and I’ve described above how PaaS is different from Serverless FaaS. Another popular abstraction of processes is containers, with Docker being the most visible example of such a technology. Container hosting systems such as Mesos and Kubernetes, which abstract individual applications from OS-level deployment, are increasingly popular. Even further along this path we see cloud-hosting container platforms like Amazon ECS and EKS, and Google Container Engine, which, like Serverless FaaS, let teams avoid having to manage their own server hosts at all. Given the momentum around containers, is it still worth considering Serverless FaaS?

- Principally the argument I made for PaaS still holds with containers: for Serverless FaaS, scaling is automatically managed, transparent, and fine grained, and this is tied in with the automatic resource provisioning and allocation I mentioned earlier. Container platforms have traditionally still needed you to manage the size and shape of your clusters.

- I’d also argue that container technology is still not mature and stable, although it is getting ever closer to being so. That’s not to say that Serverless FaaS is mature, of course, but picking which rough edges you’d like is still the order of the day.

- As we see the gap of management and scaling between Serverless FaaS and hosted containers narrow, the choice between them may just come down to style and type of application. For example, it may be that FaaS is seen as a better choice for an event-driven style with few event types per application component, and containers are seen as a better choice for synchronous-request–driven components with many entry points. I expect in a fairly short period of time that many applications and teams will use both architectural approaches, and it will be fascinating to see patterns of such use emerge.

#NoOps

- Serverless doesn’t mean “No Ops”—though it might mean “No sysadmin” depending on how far down the Serverless rabbit hole you go.

- “Ops” means a lot more than server administration. It also means—at least—monitoring, deployment, security, networking, support, and often some amount of production debugging and system scaling. These problems all still exist with Serverless apps, and you’re still going to need a strategy to deal with them. In some ways Ops is harder in a Serverless world because a lot of this is so new.

- The sysadmin is still happening—you’re just outsourcing it with Serverless. That’s not necessarily a bad (or good) thing—we outsource a lot, and its goodness or badness depends on what precisely you’re trying to do. Either way, at some point the abstraction will likely leak, and you’ll need to know that human sysadmins somewhere are supporting your application.

- Charity Majors gave a great talk on this subject at the first Serverlessconf. (You can also read her two write-ups on it: WTF is operations? and Operational Best Practices.)

Stored Procedures as a Service

- Another theme I’ve seen is that Serverless FaaS is “Stored Procedures as a Service.” I think that’s come from the fact that many examples of FaaS functions (including some I’ve used in this article) are small pieces of code that are tightly integrated with a database. If that’s all we could use FaaS for I think the name would be useful, but because it is really just a subset of FaaS’s capability, I don’t think it’s useful to think about FaaS in these terms.

- That being said, it’s worth considering whether FaaS comes with some of the same problems of stored procedures, including the technical debt concern Camille mentions in the above-referenced tweet. There are many lessons that come from using stored procedures that are worth reviewing in the context of FaaS and seeing whether they apply. Consider that stored procedures:

(1) Often require vendor-specific language, or at least vendor-specific frameworks / extensions to a language

(2) Are hard to test since they need to be executed in the context of a database

(3) Are tricky to version control or to treat as a first class application

- While not all of these will necessarily apply to all implementations of stored procs, they’re certainly problems one might come across. Let’s see if they might apply to FaaS:

(1) is definitely not a concern for the FaaS implementations I’ve seen so far, so we can scrub that one off the list right away.

(2) Since we’re dealing with “just code,” unit testing a function is as easy as unit testing any other code. Integration testing is a different (and legitimate) question though, and one which we’ll discuss later.

(3) Again, since FaaS functions are “just code,” version control is okay. Until recently application packaging was also a concern, but we’re starting to see maturity here, with tools like Amazon’s Serverless Application Model (SAM) and the Serverless Framework that I mentioned earlier. At the beginning of 2018 Amazon even launched a “Serverless Application Repository” (SAR) providing organizations with a way to distribute applications, and application components, built on AWS Serverless services. (Read more on SAR in my fittingly titled article Examining the AWS Serverless Application Repository.)
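
As a small illustration of the “just code” point about unit testing, the sketch below calls a function directly with a plain dictionary, with no platform or database involved. The function, its event fields, and its response shape are hypothetical; only the `(event, context)` signature follows the convention AWS Lambda uses for Python handlers.

```python
import json


def greet_handler(event, context):
    """Hypothetical function: build a greeting from the incoming event."""
    name = event.get("name") or "world"
    return {"statusCode": 200, "body": json.dumps({"message": f"Hello, {name}!"})}


def test_greets_named_caller():
    # No platform needed: the event is a dict and the context is unused here.
    response = greet_handler({"name": "Ada"}, None)
    assert response["statusCode"] == 200
    assert json.loads(response["body"]) == {"message": "Hello, Ada!"}


def test_falls_back_to_default_name():
    response = greet_handler({}, None)
    assert json.loads(response["body"])["message"] == "Hello, world!"
```

Tests written this way can run under any ordinary test runner. What they do not cover is the wiring between the function and the services that trigger it, which is the integration-testing question raised above.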

Serverless vs. FaaS

Serverless and Functions-as-a-Service (FaaS) are often conflated with one another but the truth is that FaaS is actually a subset of serverless. As mentioned above, serverless is focused on any service category, be it compute, storage, database, etc. where configuration, management, and billing of servers are invisible to the end user. FaaS, on the other hand, while perhaps the most central technology in serverless architectures, is focused on the event-driven computing paradigm wherein application code, or containers, only run in response to events or requests.

Serverless architectures pros and cons

Pros

While serverless computing has many individual technical benefits, four stand out:

- It enables developers to focus on code, not infrastructure.

- Pricing is done on a per-request basis, allowing users to pay only for what they use.

- For certain workloads, such as ones that require parallel processing, serverless can be both faster and more cost-effective than other forms of compute.

- Serverless application development platforms provide almost total visibility into system and user times and can aggregate the information systematically.

Cons

While there is much to like about serverless computing, there are some challenges and trade-offs worth considering before adopting it:

- Long-running processes: FaaS and serverless workloads are designed to scale up and down perfectly in response to workload, offering significant cost savings for spiky workloads. But for workloads characterized by long-running processes, these same cost advantages are no longer present and managing a traditional server environment might be simpler and more cost-effective.

- Vendor lock-in: Serverless architectures are designed to take advantage of an ecosystem of managed cloud services and, in terms of architectural models, go the furthest to decouple a workload from something more portable, like a VM or a container. For some companies, deeply integrating with the native managed services of cloud providers is where much of the value of cloud can be found; for other organizations, these patterns represent material lock-in risks that need to be mitigated.

- Cold starts: Because serverless architectures forgo long-running processes in favor of scaling up and down to zero, they also sometimes need to start up from zero to serve a new request. For certain applications, this delay isn’t much of an impact, but for something like a low-latency financial application, this delay wouldn’t be acceptable.

- Monitoring and debugging: These operational tasks are challenging in any distributed system, and the move to both microservices and serverless architectures (and the combination of the two) has only exacerbated the complexity associated with managing these environments carefully.
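
The cold-start trade-off can be sketched in a few lines. On platforms that reuse a function instance between invocations, state created at module level survives while the instance stays warm, so a common way to soften cold starts is to pay for expensive setup once and reuse it. The code below is a minimal sketch of that idea; the connection object and its 50 ms setup cost are stand-ins, not a real client.

```python
import time

_connection = None   # survives between invocations while the instance stays warm
_invocations = 0


def _open_connection():
    """Stand-in for expensive setup such as opening a database connection."""
    time.sleep(0.05)  # hypothetical setup cost
    return {"opened_at": time.time()}


def handler(event, context):
    global _connection, _invocations
    cold = _connection is None
    if cold:
        _connection = _open_connection()  # paid only on a cold start
    _invocations += 1
    return {"cold_start": cold, "invocations_on_this_instance": _invocations}
```

The first call on a fresh instance reports `cold_start` as true and pays the setup cost; later calls on the same instance skip it. This does not remove the delay for the first request, which is why latency-sensitive applications still need to weigh the trade-off.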

Understanding the serverless stack

Defining serverless as a set of common attributes, instead of an explicit technology, makes it easier to understand how the serverless approach can manifest in other core areas of the stack.

- Functions as a Service (FaaS): FaaS is widely understood as the originating technology in the serverless category. It represents the core compute/processing engine in serverless and sits in the center of most serverless architectures. See “What is FaaS?” for a deeper dive into the technology.

- Serverless databases and storage: Databases and storage are the foundation of the data layer. A “serverless” approach to these technologies (with object storage being the prime example within the storage category) involves transitioning away from provisioning “instances” with defined capacity, connection, and query limits and moving toward models that scale linearly with demand, in both infrastructure and pricing.

- Event streaming and messaging: Serverless architectures are well-suited for event-driven and stream-processing workloads, which involve integrating with message queues, most notably Apache Kafka.

- API gateways: API gateways act as proxies to web actions and provide HTTP method routing, client ID and secrets, rate limits, CORS, viewing API usage, viewing response logs, and API sharing policies.
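
The HTTP method routing that an API gateway performs can be pictured as a lookup from method and path to a function. The following is a toy, in-process model of that idea, not any vendor's gateway: the route table, the two functions, and the `httpMethod` and `path` event fields are all assumptions made for illustration.

```python
def list_orders(event):
    """Hypothetical function behind GET /orders."""
    return {"statusCode": 200, "body": "[]"}


def create_order(event):
    """Hypothetical function behind POST /orders; echoes the request body."""
    return {"statusCode": 201, "body": event.get("body") or ""}


# Each (method, path) pair maps to its own function.
ROUTES = {
    ("GET", "/orders"): list_orders,
    ("POST", "/orders"): create_order,
}


def route(event):
    """Dispatch one request to the function registered for its method and path."""
    method, path = event["httpMethod"], event["path"]
    target = ROUTES.get((method, path))
    if target is not None:
        return target(event)
    if any(known_path == path for (_, known_path) in ROUTES):
        # The path exists, but not for this method.
        return {"statusCode": 405, "body": "Method Not Allowed"}
    return {"statusCode": 404, "body": "Not Found"}
```

A real gateway layers the other features listed above (credentials, rate limits, CORS, logging) around this dispatch step, and each target would be a separately deployed and separately scaled function.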

Comparing FaaS to PaaS, containers, and VMs

While Functions as a Service (FaaS), Platform as a Service (PaaS), containers, and virtual machines (VMs) all play a critical role in the serverless ecosystem, FaaS is the most central and most definitional. Because of that, it’s worth exploring how FaaS differs from other common models of compute on the market today across key attributes:

- Provisioning time: Milliseconds, compared to minutes and hours for the other models.

- Ongoing administration: None, compared to a sliding scale from easy to hard for PaaS, containers, and VMs respectively.

- Elastic scaling: Each action is always instantly and inherently scaled, compared to the other models which offer automatic—but slow—scaling that requires careful tuning of auto-scaling rules.

- Capacity planning: None required, compared to the other models requiring a mix of some automatic scaling and some capacity planning.

- Persistent connections and state: FaaS has limited ability to persist connections, and state must be kept in an external service or resource. The other models can leverage HTTP, keep a socket or connection open for long periods of time, and store state in memory between calls.

- Maintenance: All maintenance is managed by the FaaS provider. This is also true for PaaS; containers and VMs require significant maintenance that includes updating/managing operating systems, container images, connections, etc.

- High availability (HA) and disaster recovery (DR): Inherent in the FaaS model with no extra effort or cost. The other models require additional cost and management effort. In the case of both VMs and containers, infrastructure can be restarted automatically.

- Resource utilization: Resources are never idle—they are invoked only upon request. All other models feature at least some degree of idle capacity.

- Resource limits: FaaS is the only model that has resource limits on code size, concurrent activations, memory, run length, etc.

- Charging granularity and billing: Billed in blocks of 100 milliseconds, compared with billing by the hour (and sometimes by the minute) for the other models.
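
The effect of billing in 100-millisecond blocks can be worked through with a short sketch. It assumes that run time is rounded up to the next whole block, which is an assumption about the rounding rule, and it uses the GB-second rate quoted earlier in this article. The per-request fee is left out.

```python
import math

PRICE_PER_GB_SECOND = 0.00001667  # rate quoted earlier in this article
BLOCK_MS = 100                    # billing granularity described above


def billed_ms(duration_ms):
    """Round a run time up to the next whole 100 ms block (assumed rounding rule)."""
    return math.ceil(duration_ms / BLOCK_MS) * BLOCK_MS


def invocation_compute_cost(duration_ms, memory_mb):
    """Compute charge in USD for one invocation, excluding the per-request fee."""
    gb_seconds = (billed_ms(duration_ms) / 1000) * (memory_mb / 1024)
    return gb_seconds * PRICE_PER_GB_SECOND
```

Under this assumption a function that runs for 101 ms is billed for 200 ms, so trimming a function from just over a block boundary to just under it can halve its compute charge.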

What are the most common use cases for AWS Lambda?

Due to Lambda’s architecture, it can deliver great benefits over traditional cloud computing setups for applications where:

- individual tasks run for a short time;

- each task is generally self-contained;

- there is a large difference between the lowest and highest levels in the workload of the application.

Some of the most common use cases for AWS Lambda that fit these criteria are:

- Scalable APIs. When building APIs using AWS Lambda, one execution of a Lambda function can serve a single HTTP request. Different parts of the API can be routed to different Lambda functions via Amazon API Gateway. AWS Lambda automatically scales individual functions according to the demand for them, so different parts of your API can scale differently according to current usage levels. This allows for cost-effective and flexible API setups.

- Data processing. Lambda functions are optimized for event-based data processing. It is easy to integrate AWS Lambda with datasources like Amazon DynamoDB and trigger a Lambda function for specific kinds of data events. For example, you could employ Lambda to do some work every time an item in DynamoDB is created or updated, thus making it a good fit for things like notifications, counters and analytics.

- Task automation. With its event-driven model and flexibility, AWS Lambda is a great fit for automating various business tasks that don’t require an entire server at all times. This might include running scheduled jobs that perform cleanup in your infrastructure, processing data from forms submitted on your website, or moving data around between different datastores on demand.
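
As an illustration of the data-processing case, here is a minimal sketch of a function that counts created and updated items in a batch of DynamoDB stream records. The `Records` list and the `eventName` values `INSERT`, `MODIFY`, and `REMOVE` follow the DynamoDB Streams record format as generally documented, but treat the exact event shape as an assumption and check it against the AWS documentation before relying on it.

```python
from collections import Counter


def handler(event, context):
    """Count created and updated items in one batch of stream records."""
    counts = Counter()
    for record in event.get("Records", []):
        kind = record.get("eventName")
        if kind == "INSERT":
            counts["created"] += 1
        elif kind == "MODIFY":
            counts["updated"] += 1
        # REMOVE events are ignored in this sketch.
    return dict(counts)
```

A real counter or notification function would write the result somewhere durable, since nothing held in the function's memory is guaranteed to survive between invocations.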

Supported languages and runtimes

As of now, AWS Lambda doesn’t support all programming languages, but it does support a number of the most popular languages and runtimes. This is the full list of what’s supported:

- Node.js 8.10

- Node.js 10.x (normally the latest LTS version from the 10.x series)

- Node.js 12.x (normally the latest LTS version from the 12.x series)

- Python 2.7

- Python 3.6

- Python 3.7

- Python 3.8

- Ruby 2.5

- Java 8

- This includes JVM-based languages that can run on Java 8’s JVM: the latest Clojure 1.10 and Scala 2.12 both run on Java 8, so they can be used with AWS Lambda

- Java 11

- Go 1.x (latest release)

- C# — .NET Core 1.0

- C# — .NET Core 2.1

- PowerShell Core 6.0

All these runtimes are maintained by AWS and are provided in an Amazon Linux or Amazon Linux 2 environment. For each of the supported languages, AWS provides an SDK that makes it easier for you to write your Lambda functions and integrate them with other AWS services.

A few additional runtimes are still in the pre-release stage. These runtimes are being developed as a part of AWS Labs and are not mentioned in the official documentation:

- Rust 1.31

- C++

The C++ runtime also serves as an example for creating custom runtimes for AWS Lambda. See the AWS docs for the details of how to create a custom runtime if your language isn’t supported by default.
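
To give a feel for what a custom runtime does, here is a minimal sketch of its core loop: ask the platform for the next event, run the handler, and post the result back. It assumes the Lambda runtime HTTP interface as it is commonly documented (the `AWS_LAMBDA_RUNTIME_API` environment variable, the `2018-06-01` path prefix, and the `Lambda-Runtime-Aws-Request-Id` header). The helper names are hypothetical, and error reporting and initialization are deliberately left out, so treat it as an outline rather than a working runtime.

```python
import json
import os
import urllib.request

API_VERSION = "2018-06-01"  # assumed runtime API version prefix


def invocation_url(host, suffix):
    """Build a runtime API URL such as .../runtime/invocation/next."""
    return f"http://{host}/{API_VERSION}/runtime/invocation/{suffix}"


def fetch_next(host):
    """Block until the platform hands over the next event."""
    with urllib.request.urlopen(invocation_url(host, "next")) as resp:
        request_id = resp.headers["Lambda-Runtime-Aws-Request-Id"]
        return request_id, json.loads(resp.read())


def post_result(host, request_id, result):
    """Send the handler's result back for the given request."""
    body = json.dumps(result).encode("utf-8")
    url = invocation_url(host, f"{request_id}/response")
    req = urllib.request.Request(url, data=body, method="POST")
    urllib.request.urlopen(req).close()


def process_one(host, handler, fetch=fetch_next, post=post_result):
    """Handle a single invocation; a real runtime repeats this forever."""
    request_id, event = fetch(host)
    post(host, request_id, handler(event))


def main(handler):
    host = os.environ["AWS_LAMBDA_RUNTIME_API"]
    while True:
        process_one(host, handler)
```

The `fetch` and `post` parameters exist only so the loop can be exercised with stand-ins outside the platform. A production runtime would also report handler exceptions to the platform instead of letting them end the process.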