TCS (Tata Consultancy Services) interview questions often focus on assessing technical skills, problem-solving abilities, and cultural fit. Candidates can expect questions related to programming languages, data structures, and algorithms, as well as situational and behavioral questions. It’s important to demonstrate not only technical knowledge but also effective communication and teamwork skills.

1. What is the distinction between procedural and object-oriented programming?

Ans:

Procedural programming is a paradigm that organizes a program as a sequence of procedures (functions) that operate on data, emphasizing a step-by-step series of instructions. Structuring code into functions and procedures helps organize the program logic. In contrast, object-oriented programming (OOP) focuses on objects that encapsulate data and behaviour, enabling more modular and reusable code. Core OOP principles include inheritance, polymorphism, encapsulation, and abstraction.
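The following minimal Python sketch contrasts the two styles. Python and the shape names are assumptions chosen only for illustration. In the procedural version, standalone functions operate on the values passed to them. In the object-oriented version, classes bundle data with behaviour.

```python
import math


# Procedural style: plain functions that act on the data passed in.
def circle_area(radius):
    return math.pi * radius ** 2


def rectangle_area(width, height):
    return width * height


# Object-oriented style: each class bundles its data with its behaviour.
class Circle:
    def __init__(self, radius):
        self.radius = radius

    def area(self):
        return math.pi * self.radius ** 2


class Rectangle:
    def __init__(self, width, height):
        self.width = width
        self.height = height

    def area(self):
        return self.width * self.height


def total_area(shapes):
    # Polymorphism: works for any object that exposes an area() method.
    return sum(shape.area() for shape in shapes)
```

`total_area([Rectangle(2, 3), Rectangle(1, 4)])` returns `10`. The function also accepts `Circle` objects without any change to its code.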

2. What is the difference between an abstract class and an interface?

Ans:

An abstract class is a class that cannot be instantiated on its own and can contain both abstract and concrete methods. It allows shared code and defines a common interface for derived classes, providing a form of partial implementation. An interface, on the other hand, is a contract that specifies a set of methods that implementing classes must provide, typically without implementation details. A class can implement multiple interfaces, which enables a more flexible design.

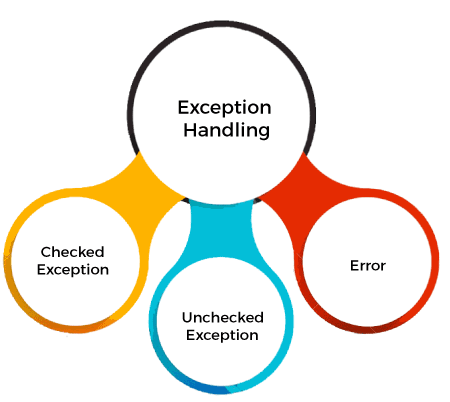
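Python has no separate `interface` keyword. This sketch therefore uses `abc.ABC` for the abstract class and `typing.Protocol` as a stand-in for an interface. Java or C# would use `abstract class` and `interface` directly. The `Employee`, `SalariedEmployee`, and `Payable` names are hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Iterable, Protocol


class Employee(ABC):
    """Abstract class: cannot be instantiated; mixes abstract and concrete methods."""

    def __init__(self, name):
        self.name = name

    @abstractmethod
    def monthly_pay(self):
        """Every concrete subclass must implement this."""

    def describe(self):
        # Concrete method shared by all subclasses (partial implementation).
        return f"{self.name} earns {self.monthly_pay()}"


class SalariedEmployee(Employee):
    def __init__(self, name, annual_salary):
        super().__init__(name)
        self.annual_salary = annual_salary

    def monthly_pay(self):
        return self.annual_salary // 12


class Payable(Protocol):
    """Interface-like contract: method signatures only, no implementation."""

    def monthly_pay(self) -> int: ...


def payroll_total(items: Iterable[Payable]) -> int:
    # Any object with a monthly_pay() method satisfies Payable; no inheritance needed.
    return sum(item.monthly_pay() for item in items)
```

Calling `Employee("x")` raises `TypeError` because the abstract method is not implemented. A single Python class can satisfy several such protocols at once, much as a Java class can implement multiple interfaces.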

3. Explain the concept of exception handling in programming.

Ans:

- Exception handling is a programming construct that lets developers manage errors or unexpected events that occur during program execution.

- This mechanism improves code robustness by separating error handling from the main logic and allowing programs to handle errors gracefully instead of crashing.
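Here is a small illustrative sketch in Python. `parse_age` and `safe_parse_age` are hypothetical helpers. The normal logic sits in the `try` block and the recovery path sits in `except`, so bad input produces a fallback value instead of a crash.

```python
def parse_age(text):
    """Convert user input to an age, raising ValueError for bad input."""
    age = int(text)  # int() raises ValueError on non-numeric text
    if age < 0:
        raise ValueError("age cannot be negative")
    return age


def safe_parse_age(text, default=None):
    # Main logic in try; error handling kept separate in except.
    try:
        return parse_age(text)
    except ValueError as exc:
        print(f"Invalid age {text!r}: {exc}")
        return default
```

A `finally` clause could be added for cleanup that must run whether or not an exception occurred.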

4. How important is unit testing in software development?

Ans:

- Unit testing is a software testing method that involves testing individual components or functions of software in isolation to ensure they work as intended.

- This practice is important for identifying bugs early in the development process, which can considerably reduce the cost and time of fixing problems later.

- By verifying that each unit of code performs correctly, developers can improve code quality and make changes or refactor without fear of introducing new errors.
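Below is a minimal sketch that uses Python's built-in `unittest` module. `apply_discount` is a hypothetical function under test. Each test method checks one behaviour of the unit in isolation.

```python
import unittest


def apply_discount(price, percent):
    """Unit under test: returns the price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(80, 0), 80.0)

    def test_invalid_percent_raises(self):
        with self.assertRaises(ValueError):
            apply_discount(100, 150)
```

If this code is saved to a file, the tests can be run with `python -m unittest <file>`.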

5. How does a database index improve query performance?

Ans:

A database index is a data structure that speeds up data retrieval on a database table, at the cost of extra storage and maintenance overhead. It works like the index in a book: the database engine can quickly locate specific rows without scanning the entire table. Queries that filter or sort on indexed columns can run noticeably faster when an index exists on one or more of those columns. Proper indexing strategies are vital for optimizing database performance while managing resource trade-offs.
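This sketch uses SQLite through Python's standard `sqlite3` module. That choice is an assumption; other databases offer similar `EXPLAIN` output. The code compares the query plan for the same lookup before and after an index is created on `email`. The table and index names are hypothetical, and the exact plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO users (email, name) VALUES (?, ?)",
    [(f"user{i}@example.com", f"User {i}") for i in range(10_000)],
)


def query_plan(sql, params=()):
    # The last column of each EXPLAIN QUERY PLAN row is a human-readable detail string.
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


lookup = "SELECT name FROM users WHERE email = ?"
before = query_plan(lookup, ("user42@example.com",))
conn.execute("CREATE INDEX idx_users_email ON users (email)")
after = query_plan(lookup, ("user42@example.com",))
print(before)  # a full SCAN of the users table
print(after)   # a SEARCH that uses idx_users_email
```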

6. Explain the differences between CSS and HTML.

Ans:

| Aspect | HTML | CSS |
|---|---|---|
| Definition | HyperText Markup Language, the markup language for web content | Cascading Style Sheets, the style-sheet language for presentation |
| Purpose | Structure and content of web pages | Presentation and styling of web pages |
| Syntax | Uses tags and attributes to define elements | Uses selectors and properties for styling |
| Functionality | Creates elements like headings, paragraphs, links | Controls layout, colors, fonts, spacing, etc. |
| Document Structure | Defines the overall structure of the webpage | Enhances the appearance of the structured content |
| File Extension | Typically uses .html or .htm | Typically uses .css |

7. What are the benefits of using responsive web design?

Ans:

Responsive web design (RWD) ensures that a website’s layout adapts seamlessly to different screen sizes and orientations. It relies on flexible grids, flexible layouts, and CSS media queries to tailor the user experience across devices, from desktops to smartphones. The primary benefit of RWD is a better user experience: visitors can easily navigate and interact with the website regardless of their device.

8. How do you optimize a website’s performance and loading speed?

Ans:

Optimizing a website’s performance and loading speed involves several techniques to reduce load times and improve the user experience. Key strategies include:

- Minimizing HTTP requests by consolidating files.

- Compressing images to reduce their size.

- Using asynchronous loading for JavaScript and CSS.

A CDN can also distribute content closer to users, reducing latency. Regularly auditing and optimizing code, along with tracking performance metrics, keeps the website running efficiently.

9. Explain the concept of AJAX in web development.

Ans:

- AJAX (Asynchronous JavaScript and XML) is a web development technique that lets web applications communicate with a server asynchronously without requiring a full page reload.

- This enables dynamic content updates and improves the user experience by allowing parts of a page to be updated independently, resulting in faster interactions.

- AJAX typically uses JavaScript to send data to and receive data from the server, often in JSON format, which is easy to handle and parse.

10. What are the different types of HTTP requests used in web development?

Ans:

- The main types are GET, which retrieves data from the server; POST, which sends data to the server for processing; PUT, which updates (replaces) existing resources on the server; and DELETE, which removes resources.

- Other request methods include PATCH, which applies partial modifications to a resource, and OPTIONS, which retrieves the methods supported for a resource.

- Understanding these request types is crucial for designing and implementing RESTful APIs and for ensuring correct communication between clients and servers.
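To make these method semantics concrete, here is a hypothetical in-memory `UserResource` class. No real HTTP server or framework is involved. The class maps each method to the operation described above and returns a status code and payload, as a REST endpoint typically would.

```python
class UserResource:
    """Hypothetical in-memory resource mapping HTTP methods to CRUD operations."""

    def __init__(self):
        self.users = {}
        self.next_id = 1

    def handle(self, method, user_id=None, body=None):
        if method == "GET":  # read
            user = self.users.get(user_id)
            return (200, user) if user is not None else (404, None)
        if method == "POST":  # create; the server assigns the id
            new_id = self.next_id
            self.users[new_id] = dict(body)
            self.next_id += 1
            return 201, {"id": new_id}
        if method == "PUT":  # replace the whole representation
            self.users[user_id] = dict(body)
            return 200, self.users[user_id]
        if method == "PATCH":  # modify only the supplied fields
            if user_id not in self.users:
                return 404, None
            self.users[user_id].update(body)
            return 200, self.users[user_id]
        if method == "DELETE":  # remove
            removed = self.users.pop(user_id, None)
            return (204, None) if removed is not None else (404, None)
        if method == "OPTIONS":  # advertise the supported methods
            return 200, {"Allow": "GET, POST, PUT, PATCH, DELETE, OPTIONS"}
        return 405, None  # Method Not Allowed
```

After a POST creates user 1, a PATCH of `{"city": "Mumbai"}` changes only that field. A PUT, by contrast, replaces the whole record with the new body.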

11. Explain the difference between supervised and unsupervised learning.

Ans:

Supervised learning trains a model on labeled data, where the output or target variable is known, so the model can learn the relationship between inputs and outputs. Common supervised learning tasks include classification and regression. In contrast, unsupervised learning works with unlabeled data, and the model tries to identify patterns or groupings in the data without predefined labels.

12. Explain the concept of overfitting in machine learning.

Ans:

Overfitting occurs when a machine learning model learns not only the underlying patterns in the training data but also its noise and outliers. The result is a model that performs well on training data but poorly on unseen data. A common cause is excessive model complexity, such as too many features or a very deep neural network. Practitioners monitor performance metrics on both the training and validation datasets to detect overfitting.

13. What is the purpose of cross-validation in machine learning?

Ans:

Cross-validation evaluates how well a machine learning model generalizes by splitting the dataset into multiple training and validation sets. This way, the model’s performance estimate does not depend on a single subset of the data. A common technique is k-fold cross-validation: the dataset is split into k equal parts, and the model is trained k times, each time using a different part for validation. This procedure helps in selecting the best model and tuning hyperparameters effectively.
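Here is a dependency-free sketch of k-fold splitting in Python. It does not shuffle the data. `train_and_score` is a hypothetical callback that stands in for whatever model is being evaluated. Libraries such as scikit-learn provide ready-made equivalents.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation (no shuffling)."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


def cross_validate(train_and_score, n_samples, k=5):
    # train_and_score (hypothetical) fits on train_idx and returns a score on val_idx.
    scores = [train_and_score(tr, va) for tr, va in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)
```

With 10 samples and k = 3, the validation folds have sizes 4, 3, and 3. Every sample appears in exactly one validation fold.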

14. What are the different evaluation metrics used for assessing classification models?

Ans:

- Several evaluation metrics are commonly used to assess the performance of classification models. Accuracy measures the proportion of correctly predicted instances out of the total, but it can be misleading on imbalanced datasets.

- Precision is the ratio of true positives to the sum of true and false positives, reflecting the quality of positive predictions. Recall is the ratio of true positives to the sum of true positives and false negatives, indicating the model’s ability to capture relevant instances.

- The F1 score combines precision and recall into a single metric (their harmonic mean), providing a balance between the two. Confusion matrices help visualize performance across multiple classes.
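The sketch below computes these metrics for a binary classifier from plain Python lists. By default, it treats label `1` as the positive class. The function name is hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}
```

For `y_true = [0]*9 + [1]` and a model that always predicts `0`, accuracy is 0.9, but recall is 0.0. This shows how accuracy can mislead on imbalanced data.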

15. How do feature selection and dimensionality reduction benefit machine learning models?

Ans:

- Feature selection and dimensionality reduction are techniques that improve the performance and interpretability of machine learning models by reducing the number of input features.

- Feature selection involves identifying and keeping only the most relevant features, which can improve model accuracy, reduce overfitting, and decrease training time.

- Dimensionality reduction techniques, such as Principal Component Analysis (PCA), transform high-dimensional data into a lower-dimensional space while retaining important information.

16. What distinguishes elasticity from scalability in cloud computing?

Ans:

- Scalability refers to the ability of a system to handle increased load by adding resources, either vertically or horizontally. It ensures that applications can grow.

- Elasticity, on the other hand, is the ability of a system to automatically adjust resources based on current demand, scaling up or down dynamically as the workload fluctuates.

- Scalability is a more static concept focused on capacity. Elasticity emphasizes allocating resources responsively in real time to optimize costs and performance in cloud environments.
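Elasticity is usually driven by a scaling rule. This sketch assumes the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). It is simplified by omitting the tolerance and stabilization windows that the real autoscaler applies. The CPU figures and replica bounds are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_cpu, target_cpu,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule (simplified HPA style), clamped to [min, max]."""
    desired = math.ceil(current_replicas * current_cpu / target_cpu)
    return max(min_replicas, min(max_replicas, desired))
```

With 4 replicas at 90% CPU against a 60% target, the rule asks for 6 replicas. When CPU usage drops to 20%, it shrinks the deployment to 2.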

17. What is Infrastructure as Code (IaC) in DevOps?

Ans:

One important DevOps practice is Infrastructure as Code (IaC): managing and provisioning computing infrastructure through code and automation rather than manual procedures. When teams treat infrastructure configuration files as code, they can automate deployment procedures, maintain consistent setups, and version control their infrastructure. This technique fosters collaboration and improves the agility of development and operations teams.

18. What do Continuous Deployment (CD) and Continuous Integration (CI) mean in DevOps?

Ans:

Continuous Integration (CI) is a DevOps practice in which code changes from multiple team members are automatically tested and integrated into a shared repository several times a day. The goal is to identify and address integration problems early, so that new code does not break existing functionality. Continuous Deployment (CD) extends CI by automating the release process: code changes are deployed to production environments automatically after passing tests. CI/CD pipelines improve software quality and accelerate the development lifecycle.

19. How does containerization benefit the DevOps process?

Ans:

Containerization, exemplified by tools like Docker, encapsulates applications and their dependencies into lightweight, portable containers. Applications then run consistently across different environments, from development to production, which eliminates the “it works on my machine” problem. Containers are isolated from one another, which provides security and allows efficient resource usage on a single host. Because containers can be spun up and torn down quickly, scaling is easier and deployment processes are simpler.

20. What is the role of configuration management tools in DevOps?

Ans:

- Configuration management tools like Ansible and Puppet are essential for automating the deployment, configuration, and management of software and systems in a DevOps environment.

- These tools let teams define the desired state of their infrastructure and ensure that all systems remain in sync with that configuration, minimizing discrepancies.

- They enable consistent, repeatable deployment processes, which allows faster releases. Configuration management tools also reduce the complexity of managing large-scale environments.

21. What is the distinction between relational and NoSQL databases?

Ans:

- Relational databases use a structured schema defined by tables with rows and columns, and they express relationships between entities through foreign keys.

- They use SQL for data manipulation and support ACID properties to ensure reliable transactions. NoSQL databases, by contrast, typically offer flexible or schema-less data models, which allow for scalability and fast development, especially in applications with variable data requirements.

- Relational databases are well suited to structured data and complex queries. NoSQL databases handle large volumes of data with varied structures, which makes them ideal for big data and real-time applications.

22. Describe the concept of ACID properties in database transactions.

Ans:

- ACID properties are principles that guarantee reliable processing of database transactions.

- Atomicity treats a transaction as a single unit: either all of its operations complete successfully or none are performed, preventing partial updates.

- Consistency ensures that a transaction brings the database from one valid state to another, preserving data integrity.

- Isolation ensures that concurrently executing transactions do not interfere with each other, so data stays correct during simultaneous operations.

- Durability ensures that once a transaction is committed, its changes persist even after a system failure.
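Here is a sketch of atomicity using SQLite through Python's `sqlite3` module. The database choice, account names, and amounts are assumptions for illustration. When the connection is used as a context manager, it commits the block if the block succeeds and rolls it back if an exception escapes. As a result, a transfer that would overdraw an account leaves both balances untouched.

```python
import sqlite3


def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, "
                 "balance INTEGER NOT NULL CHECK (balance >= 0))")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
    conn.commit()
    return conn


def transfer(conn, src, dst, amount):
    """Credit dst and debit src as one transaction; return False if it was rolled back."""
    try:
        with conn:  # commits on success, rolls back on exception
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
            # Violates the CHECK constraint if src would go negative.
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False


def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts"))
```

A failed transfer of 500 from `alice` also undoes the credit that had already been applied to `bob`.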

23. What are the common forms of database normalization, and what is its purpose?

Ans:

Database normalization is the process of organizing data to reduce redundancy and improve data integrity by dividing a database into related tables. Its primary purpose is to eliminate data anomalies that can arise during data insertion, update, or deletion. Normalization involves several normal forms (NF), each with specific rules. First normal form (1NF) requires every column to hold atomic values and every row to be unique. Second normal form (2NF) builds on 1NF by ensuring that every non-key attribute depends on the entire primary key, with no partial dependencies.

24. What are indexes in a database, and how do they improve query performance?

Ans:

Indexes are data structures that speed up data retrieval in a database by providing a quick lookup mechanism for rows in a table. They work much like the index in a book, allowing the database management system (DBMS) to find specific data without scanning every row.

Creating indexes on frequently queried columns can significantly reduce query execution time, particularly for large datasets. Careful indexing strategies are vital to balance the performance gains for read operations against the potential cost to write operations.

25. Explain the difference between primary and foreign keys in a database.

Ans:

A primary key is a unique identifier for a record in a database table, ensuring that every entry can be uniquely identified and accessed. It cannot contain null values and must remain unique across all records, which provides data integrity within the table. A foreign key, on the other hand, is a field in one table that references the primary key of another table, establishing a link between the two tables. Primary keys are vital for uniquely identifying records, while foreign keys are essential for maintaining connections between related data in different tables.
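The following sketch uses SQLite through Python's `sqlite3` module. The database choice and table names are assumptions. Note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`, whereas many other databases enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("""
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers (id),
        amount INTEGER NOT NULL
    )
""")
conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Asha')")
conn.execute("INSERT INTO orders (customer_id, amount) VALUES (1, 500)")  # valid reference

try:
    # Rejected: there is no customer with id 99.
    conn.execute("INSERT INTO orders (customer_id, amount) VALUES (99, 700)")
except sqlite3.IntegrityError as exc:
    print("Rejected:", exc)
```

Inserting a second customer with `id = 1` would also fail, because the primary key must stay unique.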

26. Explain dependency injection and its benefits in software development.

Ans:

- Dependency injection (DI) is a design pattern that lets a class receive its dependencies from an external source instead of creating them internally.

- This approach promotes loose coupling between components, making the codebase more modular and easier to maintain, because the creation of dependent objects is decoupled from their usage.

- Dependency injection leads to more flexible and reusable code, aligning well with the principles of modern software development.
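Here is a minimal Python sketch of constructor injection. `OrderService`, `EmailSender`, and `FakeSender` are hypothetical. Because the sender is passed in, a test can supply a fake that records messages instead of sending real email.

```python
class EmailSender:
    """Real dependency (hypothetical): would talk to an SMTP server."""

    def send(self, to, message):
        raise NotImplementedError("network access omitted in this sketch")


class FakeSender:
    """Test double that records messages instead of sending them."""

    def __init__(self):
        self.sent = []

    def send(self, to, message):
        self.sent.append((to, message))


class OrderService:
    # The sender is injected through the constructor rather than created here.
    def __init__(self, sender):
        self.sender = sender

    def place_order(self, customer_email, item):
        self.sender.send(customer_email, f"Order confirmed: {item}")
        return True
```

In production, the code would call `OrderService(EmailSender())`. In tests, it would call `OrderService(FakeSender())`. `OrderService` itself never changes.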

27. How do you handle software bugs and debugging during the development process?

Ans:

- Handling software bugs starts with a systematic approach to identifying, documenting, and prioritizing issues. When a bug is reported, I reproduce it to understand its context and impact.

- Debugging tools and techniques, such as breakpoints and logging, let me trace the source of the problem. Once the root cause is identified, I implement and test a fix.

- After deploying the fix, I document the bug and any lessons learned to improve future practices. This systematic approach fosters a culture of continuous improvement and enhances software quality.

28. Explain the concept of design patterns and give an example.

Ans:

- Design patterns are reusable solutions to common software design problems, providing a template for solving a particular issue in a given context.

- They encapsulate best practices and can be adapted to various programming languages and architectures.

- Common design patterns include the Singleton pattern, which restricts a class to a single instance while providing a global access point, and the Observer pattern, which establishes a one-to-many dependency between objects.
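Below is a minimal sketch of the Observer pattern in Python. It uses plain callables as observers. `Subject` and `StockTicker` are hypothetical names.

```python
class Subject:
    """Keeps a list of observers and notifies all of them when its state changes."""

    def __init__(self):
        self._observers = []

    def subscribe(self, callback):
        self._observers.append(callback)

    def unsubscribe(self, callback):
        self._observers.remove(callback)

    def notify(self, event):
        # Iterate over a copy so observers may unsubscribe during notification.
        for callback in list(self._observers):
            callback(event)


class StockTicker(Subject):
    def __init__(self):
        super().__init__()
        self.price = None

    def update_price(self, price):
        self.price = price
        self.notify(price)  # one change, many dependents informed
```

The ticker knows nothing about its observers except that they are callable. This is the one-to-many dependency described above.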

29. How do you ensure code quality and maintainability in projects?

Ans:

Ensuring code quality and maintainability means adopting best practices throughout the development lifecycle. This includes writing clean, modular code with meaningful names, following coding standards, and keeping formatting consistent. Code reviews and pair programming foster collaboration and knowledge sharing, allowing team members to catch potential problems early. Automated testing, including unit and integration tests, is essential for validating functionality and preventing regressions.

30. How do you manage database backups and disaster recovery?

Ans:

Handling database backups and disaster recovery requires a comprehensive strategy to protect data from loss or corruption. Regular automated backups are scheduled so that data is captured frequently and stored securely. Backups are tested periodically to verify their integrity and confirm that they can be restored correctly. A disaster recovery plan outlines the steps to restore database functionality after a failure, along with the recovery timelines. This proactive approach minimizes downtime and data loss, ensuring business continuity in adverse situations.

31. How do you manage database scalability in high-demand environments?

Ans:

Managing database scalability in high-demand environments calls for both vertical and horizontal scaling techniques. Vertical scaling means upgrading the existing hardware, for example adding CPU and memory, to handle more load. Horizontal scaling, on the other hand, distributes the load across multiple database instances, often using strategies like sharding to partition data. Caching mechanisms such as Redis or Memcached can reduce database load by storing frequently accessed data in memory.
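This sketch shows how an application might route rows to shards by key. The shard names are hypothetical, and simple hash-modulo routing is an assumed strategy. `zlib.crc32` is used because Python's built-in `hash()` for strings is randomized per process, so it would not route keys consistently across servers.

```python
import zlib

# Hypothetical shard identifiers.
SHARDS = ["db-shard-0", "db-shard-1", "db-shard-2", "db-shard-3"]


def shard_for(key: str, shards=SHARDS) -> str:
    """Route a key to a shard using a stable hash (crc32) modulo the shard count."""
    return shards[zlib.crc32(key.encode("utf-8")) % len(shards)]
```

Plain modulo routing moves most keys when the number of shards changes. Consistent hashing is a common alternative that limits this reshuffling.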

32. What are the main differences between front-end and back-end web development?

Ans:

- Front-end web development focuses on a website’s visual components and user experience, using technologies like HTML, CSS, and JavaScript to create interactive user interfaces.

- Front-end developers make websites responsive, accessible, and visually appealing across different devices and screen sizes. Back-end development, in contrast, deals with server-side logic, databases, and application functionality.

- Back-end developers create APIs, manage data storage, and handle server configuration, while front-end development emphasizes user experience and design.

33. How do you optimize a website’s performance and improve loading times?

Ans:

- Combining files reduces the number of HTTP requests and decreases load time. Image optimization techniques, such as compression and suitable formats, can drastically improve performance.

- Browser caching stores frequently accessed assets locally, reducing server load and speeding up subsequent visits.

- A Content Delivery Network (CDN) can distribute content closer to users, improving access speed.

- Optimizing server response times and eliminating render-blocking resources are crucial for improving overall website performance.

34. Explain the concept of responsive web design and its importance.

Ans:

- Responsive web design is an approach that ensures web applications provide an optimal viewing experience across a wide range of devices, from desktop computers to mobile phones.

- It uses fluid grids, flexible images, and CSS media queries to adapt the layout and content to different screen sizes and orientations.

- This design philosophy is crucial because it enhances the user experience, improves accessibility, and increases engagement by offering a consistent interface on any device.

35. How do you handle cross-browser compatibility issues in web development?

Ans:

Handling cross-browser compatibility means ensuring that web applications function correctly across various browsers and versions. Use widely supported HTML, CSS, and JavaScript features to maximize compatibility, and avoid browser-specific features whenever possible. Testing the application in multiple browsers is crucial for discovering discrepancies. When compatibility issues arise, use polyfills or fallback solutions to provide alternative implementations for unsupported features.

36. How do you ensure web security and defend against common vulnerabilities?

Ans:

Ensuring web security means implementing multiple layers of protection against common vulnerabilities. I start by conducting a thorough security assessment and regularly updating software dependencies to patch known vulnerabilities. Input validation is essential to prevent SQL injection, cross-site scripting (XSS), and other injection attacks. HTTPS encrypts data in transit, protecting sensitive information from interception.
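Input validation works best alongside parameterized queries, which keep user data out of the SQL text altogether. The sketch below uses SQLite through Python's `sqlite3` with a hypothetical `users` table. It contrasts string-built SQL with a bound parameter.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("asha", "admin"), ("ravi", "viewer")])


def find_user_unsafe(name):
    # VULNERABLE: user input is pasted directly into the SQL text.
    return conn.execute(
        f"SELECT username, role FROM users WHERE username = '{name}'").fetchall()


def find_user_safe(name):
    # Safe: the value is passed separately as a bound parameter.
    return conn.execute(
        "SELECT username, role FROM users WHERE username = ?", (name,)).fetchall()


attack = "x' OR '1'='1"
```

With the `attack` input, the unsafe version returns every row in the table. The safe version treats the whole string as a literal username and returns nothing.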

37. What are the different types of database indexes, and how do they work?

Ans:

Database indexes are vital for improving query performance, and several types exist to serve different purposes. B-tree indexes are the most common: their balanced tree structures allow efficient searching, insertion, and deletion. Hash indexes provide fast equality lookups but aren’t suitable for range queries, so they are best for exact-match lookups. Bitmap indexes suit low-cardinality columns and enable efficient queries on large datasets, especially in data warehousing scenarios.

38. Explain the concept of regularization in machine learning.

Ans:

- Regularization is a machine learning technique that prevents overfitting by adding a penalty term to the loss function during model training.

- By introducing this penalty, regularization discourages the model from learning overly complex patterns that don’t generalize well to unseen data.

- Regularization not only improves model generalization but can also enhance interpretability by reducing the number of features that contribute significantly to predictions.
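Here is a worked sketch of L2 (ridge) regularization. It assumes a simplified one-feature linear model with no intercept, y ≈ w·x. Minimizing Σ(y − w·x)² + λw² gives w = Σxy / (Σx² + λ), so a larger penalty λ shrinks the weight toward zero.

```python
def ridge_loss(w, xs, ys, lam):
    """Squared-error loss plus an L2 penalty lam * w**2 for the model y ≈ w * x."""
    data_loss = sum((y - w * x) ** 2 for x, y in zip(xs, ys))
    return data_loss + lam * w ** 2


def ridge_weight(xs, ys, lam):
    # Setting d(ridge_loss)/dw = 0 gives w = sum(x*y) / (sum(x*x) + lam).
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)
```

For xs = [1, 2, 3] and ys = [2, 4, 6], λ = 0 gives the unregularized w = 2.0. Setting λ = 14 halves the weight to 1.0.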

39. How do you handle overfitting in machine learning models?

Ans:

- I make sure that the training dataset is sufficiently large and representative of the real-world situation.

- Regularization techniques, such as L1 or L2 regularization, keep the model from learning noise and overly complex patterns.

- Simplifying the model by reducing the number of features or using a less complex algorithm can help.

- Expanding the dataset through techniques like data augmentation can improve model robustness and mitigate overfitting.

40. Explain the bias-variance trade-off in machine learning.

Ans:

- The bias-variance trade-off is a fundamental concept in machine learning that describes the trade-off between two types of error that affect model performance: bias and variance.

- Bias is the error introduced by approximating a real-world problem with a simplified model. It can cause underfitting when the model is too simplistic.

- Variance is the error introduced by a model’s sensitivity to fluctuations in the training dataset. It can result in overfitting, where the model captures noise rather than the underlying pattern.

41. How do you evaluate the performance of a machine learning model?

Ans:

Evaluating a machine learning model involves metrics tailored to the specific problem at hand. Common metrics for classification tasks include accuracy, precision, recall, F1 score, and the area under the ROC curve. Regression tasks often use metrics like mean absolute error, mean squared error, and R-squared. Cross-validation techniques, such as k-fold cross-validation, provide a more robust evaluation by splitting the dataset into training and validation sets multiple times.

42. How do you handle imbalanced datasets in machine learning?

Ans:

Handling imbalanced datasets in machine learning requires specific techniques to ensure the model learns effectively from each class. One common approach is resampling: oversampling the minority class or undersampling the majority class to balance the dataset. Synthetic data generation techniques like SMOTE can also create synthetic examples of the minority class. Ensemble methods such as balanced random forests or boosting can further improve performance on imbalanced data.
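This is a sketch of plain random oversampling in Python. It is not SMOTE, which creates new interpolated points rather than duplicating existing rows. The function name and data shapes are assumptions for illustration. In practice, resampling is applied only to the training split, so that duplicated rows do not leak into the validation data.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen rows of each smaller class until every class
    matches the size of the largest class."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_x, out_y = list(samples), list(labels)
    for cls, count in counts.items():
        members = [x for x, y in zip(samples, labels) if y == cls]
        for _ in range(target - count):
            out_x.append(rng.choice(members))
            out_y.append(cls)
    return out_x, out_y
```

With eight samples of class 0 and two of class 1, the output contains eight of each. The original rows come first, followed by the duplicated minority rows.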

43. How do you ensure high availability and fault tolerance in cloud-based systems?

Ans:

Ensuring high availability and fault tolerance in cloud-based systems requires redundancy and failover mechanisms. Distributing resources across multiple availability zones or regions minimizes the impact of localized failures. Load balancing spreads traffic across multiple instances so that no single point of failure disrupts the service. Automated monitoring and alerting systems help detect and resolve problems before they affect users.

44. How do you manage containerization and orchestration in cloud environments?

Ans:

- Containerization in cloud environments relies on technologies like Docker to create and manage containers that package applications and their dependencies for consistent deployment.

- Orchestration tools, such as Kubernetes or Docker Swarm, automate the deployment, scaling, and management of containerized applications.

- These tools provide load balancing, service discovery, and health monitoring, so that containers run smoothly and can scale with demand.

- By combining containerization and orchestration effectively, companies can achieve greater agility, scalability, and resource efficiency.

45. How do you monitor and troubleshoot performance issues in cloud-based systems?

Ans:

- Monitoring and troubleshooting performance issues in cloud-based systems calls for a proactive approach that uses a range of tools and metrics.

- Comprehensive monitoring solutions, such as AWS CloudWatch, Azure Monitor, or Prometheus, enable real-time tracking of system health, resource utilization, and application performance.

- Alerts on critical thresholds help detect potential problems before they escalate into significant issues. Logs offer insights into application behaviour and help pinpoint errors.

46. What is reinforcement learning, and how does it differ from supervised learning?

Ans:

- Reinforcement learning (RL) is a type of machine learning in which an agent learns to make decisions by interacting with an environment to maximize cumulative reward.

- Unlike supervised learning, where the model is trained on labeled data, RL learns from the outcomes of the actions it takes.

- This approach is especially effective in dynamic environments that involve complex decision-making, such as robotics, game playing, and autonomous systems.

47. How do you interpret the results of a machine learning model?

Ans:

Interpreting the results of a machine learning model involves analyzing its predictions and understanding the underlying patterns that led to them. For classification tasks, confusion matrices, precision-recall curves, and ROC curves provide insight into the model’s performance, revealing metrics like accuracy, false positives, and true positives. For regression models, metrics like R-squared and residual plots help assess prediction quality.

48. What is the role of hyperparameter tuning in machine learning?

Ans:

Hyperparameter tuning is the process of optimizing the parameters that govern the behaviour of a machine learning algorithm but are not learned from the training data. These hyperparameters include settings related to the model architecture, the learning rate, and the regularization strength, and they can considerably affect the model’s performance. Techniques such as grid search and random search systematically evaluate different combinations of hyperparameters to select the best one.
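Here is a minimal grid-search sketch in Python. `score_fn` is a hypothetical callback that would train a model with the given hyperparameters and return a validation score, where higher is better. The `toy_score` function below is a made-up stand-in, so the example runs without any model.

```python
from itertools import product


def grid_search(score_fn, param_grid):
    """Try every combination in param_grid and return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(learning_rate, reg_strength):
    # Made-up objective that peaks at learning_rate=0.1, reg_strength=1.0.
    return -(learning_rate - 0.1) ** 2 - (reg_strength - 1.0) ** 2


best, score = grid_search(toy_score, {"learning_rate": [0.01, 0.1, 1.0],
                                      "reg_strength": [0.1, 1.0, 10.0]})
```

Random search would instead sample a fixed number of combinations from the same space. This is often cheaper when only a few hyperparameters matter.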

49. What is the purpose of version control systems like Git?

Ans:

Version control systems like Git track changes in code over time, allowing developers to collaborate on projects without overwriting each other’s work. Git also keeps a detailed history of changes, making it easier to find bugs, revert to previous versions, and manage different branches of a project. With Git, teams can work on separate features or bug fixes concurrently and still integrate their code smoothly.

50. What is the distinction between localStorage and sessionStorage in JavaScript?

Ans:

- Both localStorage and sessionStorage are part of the Web Storage API in JavaScript and are used to store key-value pairs in the browser.

- localStorage persists data even after the browser is closed, whereas sessionStorage lasts only for the duration of the page session.

- This difference makes localStorage suitable for long-term user preferences and sessionStorage for temporary data like form inputs.

51. What is the container version in CSS?

Ans:

- The CSS box model is a fundamental concept that defines how elements are structured and rendered on a webpage.

- It consists of four areas: the content, padding, border, and margin. The content is the actual text or media of the element, and the padding surrounds the content.

- The border encloses the padding, and the margin separates the element from other elements.

- Understanding the box model is essential for accurate layout design and spacing in web development.
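The box-model arithmetic can be sketched in a few lines of Python. This assumes the CSS default `box-sizing: content-box` and equal values on the left and right sides; the `Box` class and function names are illustrative only, not part of any CSS API.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Horizontal box-model values in pixels (assumed equal on left and right)."""
    content: float
    padding: float
    border: float
    margin: float


def rendered_width(box: Box) -> float:
    """Visible width under content-box sizing: content plus padding and border on both sides."""
    return box.content + 2 * (box.padding + box.border)


def occupied_width(box: Box) -> float:
    """Horizontal space the element takes up in the layout, including margins."""
    return rendered_width(box) + 2 * box.margin


card = Box(content=200, padding=10, border=2, margin=16)
print(rendered_width(card))  # 224
print(occupied_width(card))  # 256
```

With `box-sizing: border-box`, the declared width already includes padding and border, so only the margins would be added on top.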

52. What are CSS Flexbox and CSS Grid?

Ans:

- CSS Flexbox and CSS Grid are layout models used for designing responsive websites.

- Flexbox is ideal for one-dimensional layouts, arranging items in a row or a column. It works well for aligning content such as navigation bars.

- CSS Grid, on the other hand, is a two-dimensional layout system that lets developers control rows and columns simultaneously.

- Grid is useful for complex layouts such as full-page designs, whereas Flexbox is better suited to simpler alignment tasks.

53. What are RESTful APIs, and how do they work?

Ans:

REST (Representational State Transfer) is an architectural style for communication between clients and servers through stateless HTTP requests. RESTful APIs use standard HTTP methods such as GET, POST, PUT, and DELETE to perform operations on data, usually formatted as JSON or XML. These APIs follow the principles of resource-based architecture, in which each URL corresponds to a specific resource. RESTful APIs are widely used for web services because of their scalability and simplicity.
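A minimal sketch of how HTTP methods map onto operations on a resource, using an in-memory dictionary instead of a real server. The `handle` function, the `/users` resource, and the status codes chosen are hypothetical illustrations, not the API of any framework.

```python
import json
from typing import Optional

# Hypothetical in-memory "users" resource; a real API would run behind an HTTP server.
users: dict = {}
next_id = 1


def handle(method: str, path: str, body: Optional[str] = None) -> tuple:
    """Map an HTTP method and resource URL to a CRUD operation; returns (status, JSON body)."""
    global next_id
    parts = path.strip("/").split("/")
    if parts[0] != "users" or len(parts) > 2:
        return 404, json.dumps({"error": "unknown resource"})
    user_id = int(parts[1]) if len(parts) == 2 else None

    if method == "GET" and user_id is None:   # list the collection
        return 200, json.dumps(list(users.values()))
    if method == "POST" and user_id is None:  # create a new resource
        user = {"id": next_id, **json.loads(body)}
        users[next_id] = user
        next_id += 1
        return 201, json.dumps(user)
    if user_id is None:                       # PUT/DELETE need a specific resource URL
        return 405, json.dumps({"error": "method not allowed"})
    if user_id not in users:
        return 404, json.dumps({"error": "not found"})
    if method == "GET":                       # read one resource
        return 200, json.dumps(users[user_id])
    if method == "PUT":                       # replace the resource
        users[user_id] = {"id": user_id, **json.loads(body)}
        return 200, json.dumps(users[user_id])
    if method == "DELETE":                    # remove the resource
        del users[user_id]
        return 204, ""
    return 405, json.dumps({"error": "method not allowed"})
```

For example, `handle("POST", "/users", '{"name": "Asha"}')` creates user 1 with status 201, after which `handle("GET", "/users/1")` returns `(200, '{"id": 1, "name": "Asha"}')`. Every call carries all the information needed to serve it, which is the statelessness REST asks for.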

54. What is Cross-Origin Resource Sharing (CORS), and why is it important?

Ans:

CORS is a security mechanism implemented by browsers that controls how resources can be requested from another origin (domain). Browsers enforce the same-origin policy, which blocks unauthorized access to resources from different origins, and CORS lets a server relax that policy in a controlled way. Because APIs and websites often need to share resources, servers use CORS headers to specify which origins are allowed to access them. Without the right CORS configuration, users may encounter errors when their pages make cross-origin requests.
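A minimal server-side sketch of an origin allowlist. The header names (`Access-Control-Allow-Origin`, `Access-Control-Allow-Methods`, `Access-Control-Allow-Headers`, `Vary`) are real CORS/HTTP headers; the `ALLOWED_ORIGINS` set and `cors_headers` function are hypothetical. In a real server the methods and headers lines matter mainly for preflight `OPTIONS` responses.

```python
from typing import Optional

# Hypothetical allowlist; real servers usually read this from configuration.
ALLOWED_ORIGINS = {"https://app.example.com", "https://admin.example.com"}


def cors_headers(request_origin: Optional[str]) -> dict:
    """Return CORS response headers for the request's Origin header.

    If the origin is not allowed, no CORS headers are sent, and the browser
    blocks the page from reading the cross-origin response.
    """
    if request_origin is None or request_origin not in ALLOWED_ORIGINS:
        return {}
    return {
        "Access-Control-Allow-Origin": request_origin,
        "Access-Control-Allow-Methods": "GET, POST, PUT, DELETE",
        "Access-Control-Allow-Headers": "Content-Type, Authorization",
        # Tells caches that the response varies by requesting origin.
        "Vary": "Origin",
    }
```

Echoing back a specific allowed origin, rather than sending `*`, keeps access limited to known front ends.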

55. What is event delegation in JavaScript, and why is it useful?

Ans:

Event delegation is a JavaScript technique of attaching a single event listener to a parent element instead of adding listeners to individual child elements. It works by taking advantage of event bubbling, in which an event propagates up the DOM tree. This technique improves performance by reducing the number of event listeners. It is especially useful when child elements are added or removed dynamically.

56. What is the purpose of using preprocessors like SASS or LESS in CSS?

Ans:

- CSS preprocessors like SASS (Syntactically Awesome Style Sheets) and LESS add functionality to regular CSS, allowing developers to use features like variables, nesting, and mixins.

- These features make CSS more maintainable and modular, reducing code duplication and simplifying complex stylesheets. Preprocessors compile to standard CSS, so the output works in all browsers.

- By using SASS or LESS, developers can write more organized and efficient styles, improving the overall development workflow.

57. What are promises in JavaScript, and how do they work?

Ans:

- Promises in JavaScript are used to handle asynchronous operations, providing a cleaner way to work with asynchronous code than traditional callback functions.

- A promise can be in one of three states: pending, fulfilled, or rejected. It resolves with a result when the operation succeeds or rejects with an error if something goes wrong.

- Promises allow operations to be chained using the .then() and .catch() methods, making the code more readable and easier to manage.

58. What is the Document Object Model (DOM) in web development?

Ans:

- The DOM is a programming interface that represents the structure of a web page as a tree of objects, in which every element is a node.

- JavaScript can interact with the DOM to dynamically modify the content, structure, or style of a web page.

- Understanding the DOM is essential for building dynamic, interactive websites because it provides the foundation for manipulating page content.

59. What are microservices, and how do they relate to DevOps?

Ans:

Microservices are an architectural style in which applications are built as a collection of loosely coupled, independently deployable services. Each service performs a specific function and can be developed, deployed, and scaled independently. In DevOps, microservices align with CI/CD practices, enabling faster releases and more agile development. Tools like Docker and Kubernetes make microservices easier to manage by enabling containerization and orchestration.

60. What is the role of monitoring in DevOps?

Ans:

Monitoring is essential in DevOps for ensuring the health, performance, and availability of applications and infrastructure. Tools like Prometheus, Grafana, and the ELK stack allow teams to collect, analyze, and visualize data in real time. Effective monitoring helps identify problems early, optimize resource usage, and maintain service-level agreements (SLAs). In a DevOps culture, monitoring is continuous, enabling fast feedback and proactive system maintenance.

61. Why use a service mesh in a microservices architecture?

Ans:

A service mesh is an infrastructure layer that controls communication between services in a microservices architecture. It manages service discovery, load balancing, encryption, monitoring, and retries, ensuring reliable and secure communication. Tools like Istio or Linkerd are commonly used to implement service meshes. By offloading these concerns from individual services, a service mesh lets developers focus on business logic while improving the observability, security, and resilience of the microservices.

62. What are the differences between AWS, Azure, and Google Cloud?

Ans:

- AWS, Azure, and Google Cloud are the three major cloud service providers. They offer similar services, such as compute, storage, and networking, but differ in pricing, tools, and ecosystems.

- AWS has the largest market share and a very broad range of services. Azure is closely integrated with Microsoft products like Windows Server and Active Directory and is popular among enterprises.

- Google Cloud focuses heavily on data and machine-learning services, leveraging Google's expertise in AI and big data. The right choice depends on the organization's needs and existing infrastructure.

63. What is a blue-green deployment strategy in DevOps?

Ans:

- Blue-green deployment is a strategy that reduces downtime and risk by running two identical production environments, one live and one idle.

- When a new application version is ready, it is deployed to the green environment while the blue environment keeps serving users.

- Once the green environment is validated, traffic is switched over, making it the live environment.

- If any problems arise, traffic can be rolled back to the blue environment. This approach minimizes disruption and ensures smooth releases.

64. What is the distinction between horizontal and vertical scaling in cloud environments?

Ans:

- Horizontal scaling involves adding more machines or instances to distribute the load, whereas vertical scaling involves increasing the resources (CPU, memory) of an existing machine.

- Horizontal scaling is commonly used in cloud environments because it provides better fault tolerance and redundancy, as the load is spread across several instances.

- Vertical scaling is limited by the maximum capacity of a single machine. Horizontal scaling offers better long-term scalability and resilience, while vertical scaling is simpler to implement.

65. What is the shared responsibility model in cloud computing?

Ans:

The shared responsibility model outlines the division of security responsibilities between the cloud provider and the customer. In this model, the cloud provider is responsible for security of the cloud (infrastructure, physical security, and networking), while the customer is responsible for security in the cloud (data, applications, and access management). For example, AWS manages the hardware and hypervisors, while customers manage identity and access management (IAM) and data encryption.

66. What is the function of Configuration Management in DevOps?

Ans:

Configuration management ensures that all systems and software are configured consistently across environments, reducing configuration drift and enabling smooth operations. Tools like Ansible, Puppet, or Chef automate this process by defining configurations as code. This approach makes infrastructure reproducible and scalable while reducing human error. In a DevOps context, configuration management helps manage infrastructure in a way that supports CI/CD practices, leading to faster, more reliable deployments.

67. What is canary deployment, and how does it differ from blue-green deployment?

Ans:

Canary deployment is a strategy in which a new application version is rolled out to a small subset of users before gradually expanding to the full user base. This lets teams test the new version in a live environment and detect problems early. In contrast, blue-green deployment switches between two identical environments, deploying the new version to the green environment before directing all traffic to it. Canary deployment is more gradual and limits the impact of a faulty release, whereas blue-green focuses on minimizing downtime and enabling instant rollbacks.
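One common way to pick the canary subset is hash-based bucketing, sketched below under that assumption. `in_canary` and `route` are hypothetical helpers; in practice the split is usually configured as traffic weights in a load balancer or service mesh. Hashing the user ID keeps each user on the same version across requests, and users placed in the canary at a low percentage stay in it as the percentage rises.

```python
import hashlib


def in_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically place a user into one of 100 buckets; low buckets get the canary."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return bucket < canary_percent


def route(user_id: str, canary_percent: int) -> str:
    """Return which (hypothetical) deployment should serve this user."""
    return "v2-canary" if in_canary(user_id, canary_percent) else "v1-stable"
```

A rollout might then step `canary_percent` from 5 to 25 to 100 while error rates are watched; setting it back to 0 sends everyone to the stable version.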

68. What is a disaster recovery plan in cloud environments?

Ans:

- A disaster recovery (DR) plan outlines the procedures required to restore essential business functions after a system failure or outage.

- In cloud environments, this can include data backups, replication across regions, and failover mechanisms.

- Cloud providers like AWS and Azure offer backup and disaster-recovery tools to automate and simplify this process.

- A solid DR plan ensures business continuity by minimizing downtime and data loss in emergencies.

69. What is immutable infrastructure, and what are its benefits?

Ans:

- Immutable infrastructure is a practice in which servers or virtual machines are not modified after deployment.

- Instead of patching or updating a running machine, a new, updated image is built and deployed to replace the existing instance.

- This approach ensures consistency, reduces configuration drift, and simplifies deployment, as every change results in a fresh, clean environment.

- Tools like Docker and Terraform are often used to implement immutable infrastructure. The main benefits are increased reliability, easier debugging, and improved security.

70. What are the main differences between stateful and stateless applications in the cloud?

Ans:

- Stateful applications retain data about user sessions or operations between requests, requiring persistent storage.

- Stateless applications are usually easier to scale, as they don't depend on any specific server for user data.

- In a cloud environment, stateless architectures are preferred because they are more resilient and handle load distribution better.

- Stateful applications require careful management of session data, often relying on databases or distributed storage.

71. How do security groups and network ACLs differ in cloud environments?

Ans:

Security groups and network ACLs are both used to control inbound and outbound traffic in cloud environments. Security groups act as virtual firewalls for individual instances, controlling traffic at the instance level by allowing specific IPs, ports, or protocols. They are stateful, which means return traffic is automatically allowed. Network ACLs, on the other hand, operate at the subnet level, controlling traffic to and from entire subnets. ACLs are stateless, so inbound and outbound rules must be defined explicitly.

72. What is the difference between OLTP and OLAP systems?

Ans:

OLTP (online transaction processing) systems are designed to handle real-time, transactional workloads, focusing on insert, update, and delete operations with many concurrent users. OLAP (online analytical processing) systems are optimized for complex query processing and data analysis and are typically used for decision-making and reporting. OLTP systems prioritize speed and efficiency for routine transactions, whereas OLAP systems are built for high-performance querying across large datasets.

73. What is database partitioning, and how does it enhance overall performance?

Ans:

Database partitioning divides a large table into smaller, more manageable pieces called partitions, each of which can be stored and queried independently. Partitioning can be done by range, list, hash, or composite methods, depending on the data and query patterns. It improves performance by reducing the amount of data that queries need to scan, and it can also simplify maintenance tasks such as backups and archiving.
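A minimal sketch of the routing logic behind range and hash partitioning, plus the "scan less data" effect (partition pruning). Database engines do this internally; the `orders_YYYY` and `orders_pN` partition names and the helper functions are hypothetical.

```python
import zlib
from datetime import date


def range_partition(order_date: date) -> str:
    """Range partitioning: one partition per calendar year."""
    return f"orders_{order_date.year}"


def hash_partition(customer_id: str, num_partitions: int = 4) -> str:
    """Hash partitioning: spread rows evenly across a fixed number of partitions."""
    # crc32 is stable across runs, unlike Python's built-in hash() for strings.
    return f"orders_p{zlib.crc32(customer_id.encode('utf-8')) % num_partitions}"


def partitions_to_scan(start: date, end: date) -> list:
    """Partition pruning: a date-range query touches only the matching yearly partitions."""
    return [f"orders_{year}" for year in range(start.year, end.year + 1)]


print(range_partition(date(2023, 7, 14)))                      # orders_2023
print(partitions_to_scan(date(2023, 11, 1), date(2024, 2, 1)))  # ['orders_2023', 'orders_2024']
```

Range partitioning suits time-based queries and archiving old data, while hash partitioning avoids hot spots when there is no natural range key.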

74. What is the CAP theorem, and how does it apply to distributed databases?

Ans:

- The CAP theorem states that a distributed database system can guarantee at most two of the following three properties: Consistency, Availability, and Partition Tolerance.

- Consistency means all nodes see the same data at the same time; Availability means every request receives a response and the system stays operational; Partition Tolerance means the system continues to operate despite network partitions.

- Because network partitions cannot be ruled out in practice, systems must compromise by choosing CP or AP, depending on the use case. CAP helps in designing distributed databases by guiding the trade-offs among these properties.

75. What are CTEs, and how are they used?

Ans:

- CTEs (common table expressions) are temporary, named SQL result sets that can be referenced within a SELECT, INSERT, UPDATE, or DELETE statement.

- They are defined using the WITH clause and help break down complex queries by organizing code into more readable blocks.

- CTEs are particularly useful for recursive queries, such as retrieving hierarchical data, and for performing multiple calculations in stages.

- Compared with subqueries, CTEs improve readability and maintainability, usually without a performance penalty, as they exist only for the duration of the query's execution.
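A recursive CTE can be tried out with Python's built-in `sqlite3` module, since SQLite supports `WITH RECURSIVE`. The `employees` table and its rows are made-up sample data for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, manager_id INTEGER);
    INSERT INTO employees VALUES
        (1, 'Asha', NULL),
        (2, 'Ravi', 1),
        (3, 'Meera', 1),
        (4, 'Kiran', 2),
        (5, 'Dev', 4);
""")

# The anchor member selects the top of the hierarchy; the recursive member
# repeatedly joins back to the CTE to find each next level of reports.
query = """
WITH RECURSIVE org_chart(id, name, depth) AS (
    SELECT id, name, 0 FROM employees WHERE manager_id IS NULL
    UNION ALL
    SELECT e.id, e.name, oc.depth + 1
    FROM employees AS e
    JOIN org_chart AS oc ON e.manager_id = oc.id
)
SELECT name, depth FROM org_chart ORDER BY depth, name;
"""
for name, depth in conn.execute(query):
    print("  " * depth + name)  # indent each name by its level in the hierarchy
```

The output lists Asha first, then Meera and Ravi one level in, then Kiran and Dev further down, which would take several self-joins to express without recursion.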

76. What is database replication, and what are its different types?

Ans:

- Database replication is the process of copying and maintaining database objects (such as tables or entire databases) across multiple database servers.

- It provides data redundancy and improves availability and fault tolerance. The main types are master-slave (primary-replica) and master-master (multi-primary) replication, and either can run synchronously or asynchronously.

- With synchronous replication, changes are confirmed on the replicas before a write completes; with asynchronous replication, they are propagated with some delay. Both support high availability and load distribution in large, distributed systems.

77. What is an index scan vs. an index seek, and how do they affect performance?

Ans:

An index scan occurs when the database engine reads the entire index to satisfy a query; it is less efficient because it processes every index entry. An index seek, on the other hand, navigates the index tree to locate the relevant rows directly, making it faster and more efficient. Index seeks are best for queries that filter on highly selective criteria, whereas index scans are often used when the filter criteria match a large portion of the data.
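SQLite can show the difference through `EXPLAIN QUERY PLAN`, though it does not use the terms "seek" and "scan" the way SQL Server does: it reports `SEARCH` when it navigates an index to matching entries and `SCAN` when it walks a whole table or index. The table, index name, and data below are illustrative, and the exact plan wording varies between SQLite versions, so treat the printed text as indicative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.execute("CREATE INDEX idx_orders_customer ON orders(customer_id)")
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)


def plan(sql: str) -> str:
    """Join the 'detail' column (index 3) of EXPLAIN QUERY PLAN into one string."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


# Selective equality filter on the indexed column: reported as SEARCH ... USING INDEX.
seek_plan = plan("SELECT * FROM orders WHERE customer_id = 42")
# Wrapping the column in an expression prevents the index lookup, so SQLite reports a SCAN.
scan_plan = plan("SELECT customer_id FROM orders WHERE customer_id + 0 = 42")
print(seek_plan)
print(scan_plan)
```

The second query shows why predicates that wrap an indexed column in a function or expression often defeat index seeks.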

78. What are database isolation levels, and how do they affect transaction behavior?

Ans:

Database isolation levels define the degree to which transactions are isolated from each other, preventing problems like dirty reads, non-repeatable reads, and phantom reads. The standard levels, in order of increasing isolation, are Read Uncommitted, Read Committed, Repeatable Read, and Serializable. Higher isolation levels provide greater consistency but come with performance trade-offs, increasing the chance of lock contention or delays in concurrent transactions.

79. What are surrogate keys, and how do they differ from natural keys?

Ans:

Surrogate keys are artificial identifiers assigned to database records, often implemented as an auto-incremented number, and have no business meaning. Natural keys, on the other hand, are fields with inherent business meaning that are used to identify records uniquely. Surrogate keys simplify database design and avoid complications when business values change, while natural keys can enforce meaningful constraints. Surrogate keys are generally preferred in large databases because they are stable and perform better in indexing and joins.
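A small `sqlite3` sketch of a common pattern: an auto-incremented surrogate primary key, with the natural key (here an email address, an assumed example) still enforced through a `UNIQUE` constraint. The tables and data are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY AUTOINCREMENT,  -- surrogate key: no business meaning
        email TEXT NOT NULL UNIQUE,                     -- natural key, still enforced as unique
        name TEXT
    );
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY AUTOINCREMENT,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        amount REAL
    );
""")
conn.execute("INSERT INTO customers (email, name) VALUES ('asha@example.com', 'Asha')")
conn.execute("INSERT INTO orders (customer_id, amount) VALUES (1, 99.5)")

# The business value changes, but orders reference the stable surrogate key,
# so no rows in the orders table need updating.
conn.execute("UPDATE customers SET email = 'asha.k@example.com' WHERE customer_id = 1")
row = conn.execute(
    "SELECT c.email, o.amount FROM orders AS o JOIN customers AS c USING (customer_id)"
).fetchone()
print(row)  # ('asha.k@example.com', 99.5)
```

Had `email` been the primary key, the same change would have required cascading updates to every order row.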

80. What are the advantages and disadvantages of using triggers in a database?

Ans:

- Triggers are automatic actions executed in response to specific events, such as INSERT, UPDATE, or DELETE, in a database.

- They can enforce business rules, maintain audit logs, or synchronize data across tables automatically.

- Triggers can introduce performance overhead if overused, especially when several triggers are placed on complex tables.

- While they offer power and flexibility, it is essential to apply triggers judiciously and document their behavior clearly.
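A minimal `sqlite3` sketch of two typical trigger uses mentioned above: an audit log and a business-rule check. The table names, trigger names, and the "no negative balance" rule are illustrative assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL NOT NULL);
    CREATE TABLE audit_log (
        account_id INTEGER,
        old_balance REAL,
        new_balance REAL,
        changed_at TEXT DEFAULT CURRENT_TIMESTAMP
    );

    -- Audit log: fires after every balance update and records the change.
    CREATE TRIGGER log_balance_change
    AFTER UPDATE OF balance ON accounts
    BEGIN
        INSERT INTO audit_log (account_id, old_balance, new_balance)
        VALUES (OLD.id, OLD.balance, NEW.balance);
    END;

    -- Business rule: reject updates that would make a balance negative.
    CREATE TRIGGER prevent_negative_balance
    BEFORE UPDATE OF balance ON accounts
    WHEN NEW.balance < 0
    BEGIN
        SELECT RAISE(ABORT, 'balance cannot be negative');
    END;
""")
conn.execute("INSERT INTO accounts VALUES (1, 100.0)")
conn.execute("UPDATE accounts SET balance = 75.0 WHERE id = 1")
print(conn.execute("SELECT account_id, old_balance, new_balance FROM audit_log").fetchall())
# [(1, 100.0, 75.0)]
```

Both triggers run on every matching row change, which is where their overhead comes from, and the logic lives outside application code, which is why documenting it matters.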

81. What are SQL window functions, and how do they differ from aggregate functions?

Ans:

- Window functions perform calculations across a set of table rows related to the current row, providing a more flexible way to compute cumulative or moving values.

- Unlike aggregate functions, which collapse multiple rows into a single result, window functions keep row-level detail while adding calculated values such as running totals, ranks, or averages.

- They are useful for complex analytical queries, such as reporting or data analysis. Window functions enable more advanced calculations without losing the original row context.
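The contrast can be seen side by side with `sqlite3`, assuming the bundled SQLite is version 3.25 or newer (the first release with window functions). The `sales` table is made-up sample data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, month INTEGER, amount REAL);
    INSERT INTO sales VALUES
        ('North', 1, 100), ('North', 2, 150), ('North', 3, 120),
        ('South', 1, 80),  ('South', 2, 90);
""")

# Aggregate function: GROUP BY collapses each region into a single row.
totals = conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()

# Window functions: every row is kept, with a running total and a rank added.
running = conn.execute("""
    SELECT region, month, amount,
           SUM(amount) OVER (PARTITION BY region ORDER BY month) AS running_total,
           RANK() OVER (PARTITION BY region ORDER BY amount DESC) AS amount_rank
    FROM sales
    ORDER BY region, month
""").fetchall()

print(totals)  # [('North', 370.0), ('South', 170.0)]
for row in running:
    print(row)  # first row: ('North', 1, 100.0, 100.0, 3)
```

The aggregate query returns two rows, while the window query returns all five, each annotated with its region's running total and rank.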

82. What are the benefits of using columnar storage in databases like Amazon Redshift or Google BigQuery?

Ans:

- Columnar storage organizes data by columns instead of rows, which allows for efficient reads and better query performance, especially in analytical workloads.

- Since only the required columns are read from disk, queries that involve aggregations or filters on a small subset of columns run faster.

- Columnar databases also use advanced compression techniques, significantly reducing storage costs.

- This approach is particularly useful for data warehousing and business intelligence systems, where large datasets are queried with a focus on specific columns.

83. What are precision and recall, and how do they differ?

Ans:

Precision and recall are performance metrics used in classification tasks, especially for imbalanced datasets. Precision is the ratio of true positives to the sum of true and false positives, measuring the accuracy of positive predictions. Recall is the ratio of true positives to the sum of true positives and false negatives, measuring how many of the actual positives the model finds. High precision means fewer false positives, while high recall means fewer false negatives. The F1 score combines both metrics into a single number (their harmonic mean), balancing precision and recall.
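The formulas translate directly into Python: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2PR / (P + R). This sketch assumes binary labels with 1 as the positive class and returns 0.0 when a denominator would be zero; the sample labels are invented.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]  # 2 true positives, 1 false positive, 2 false negatives
p, r, f1 = precision_recall_f1(y_true, y_pred)
print(round(p, 3), round(r, 3), round(f1, 3))  # 0.667 0.5 0.571
```

Here the model is right two times out of three when it predicts positive, but finds only half of the real positives, and F1 sits between the two.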

84. What is the difference between a generative and a discriminative model?

Ans:

Generative models learn the joint probability distribution of the inputs and labels and can generate new data points by modeling how the data is distributed. Discriminative models, on the other hand, focus on learning the boundary between classes or the conditional probability of the output given the input, as logistic regression and support vector machines (SVM) do. Generative models are useful for data generation, while discriminative models are usually more accurate for classification tasks.

85. What is cross-validation, and why is it important?

Ans:

Cross-validation is a technique for evaluating how well a machine learning model generalizes to unseen data by splitting the dataset into training and validation sets multiple times. The most common approach is k-fold cross-validation, in which the data is divided into k subsets and the model is trained on k-1 subsets while being tested on the remaining subset, rotating until every subset has served as the validation set once. Cross-validation helps detect overfitting, gives a more reliable estimate of the model's performance, and checks that the model generalizes across different splits of the data.
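A minimal k-fold sketch in plain Python. `train_and_score` is a hypothetical callback standing in for "fit a model on the training fold and score it on the validation fold", and `majority_class_model` is a deliberately trivial toy model. Real code would usually shuffle the data first (or use a library splitter); this version keeps the original order so the folds are easy to inspect.

```python
def k_fold_indices(n_samples, k):
    """Split indices 0..n_samples-1 into k folds; each fold is the validation set once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # The first n_samples % k folds get one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    splits, start = [], 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        splits.append((train_idx, val_idx))
        start += size
    return splits


def cross_validate(X, y, k, train_and_score):
    """Average the validation score over k folds."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(X), k):
        scores.append(train_and_score(
            [X[i] for i in train_idx], [y[i] for i in train_idx],
            [X[i] for i in val_idx], [y[i] for i in val_idx],
        ))
    return sum(scores) / len(scores)


def majority_class_model(X_train, y_train, X_val, y_val):
    """Toy model: predict the most common training label; the score is accuracy."""
    guess = max(set(y_train), key=y_train.count)
    return sum(1 for label in y_val if label == guess) / len(y_val)
```

Averaging over folds means every sample is used for validation exactly once, so the estimate depends less on one lucky or unlucky split.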

86. What is the difference between bagging and boosting?

Ans:

- Bagging involves training multiple models independently on different random subsets of the data and averaging their predictions, which reduces variance and helps prevent overfitting.

- Random forests are a popular bagging method. Boosting, by contrast, builds models sequentially, with each model correcting the errors of the previous one.

- It focuses on reducing bias and building strong learners from weak ones. Boosting algorithms include AdaBoost and Gradient Boosting.

87. What is the curse of dimensionality, and how can it be addressed?

Ans:

- The curse of dimensionality refers to the challenges that arise when working with high-dimensional data.

- As the number of features increases, the volume of the feature space grows exponentially, so the data becomes sparse and it is harder for models to generalize.

- Strategies like dimensionality reduction (PCA, t-SNE), feature selection, or regularization techniques like Lasso are used to address this.

- Another approach is to increase the dataset size or use models that can handle high-dimensional data effectively.

88. What are activation functions in neural networks, and why are they important?

Ans:

- Activation functions introduce non-linearity into neural networks, allowing them to learn complex patterns and relationships in the data.

- Without activation functions, the network would behave like a linear regression model and could not capture non-linear relationships.

- Common activation functions include ReLU (Rectified Linear Unit), Sigmoid, and Tanh. ReLU is widely used for its simplicity and efficiency in training deep networks.

- Activation functions play a vital role in determining how neurons in a neural network activate and how gradients flow during training.
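The claim that a network without activation functions stays linear can be checked with scalar "layers". This sketch uses arbitrary, made-up weights: two stacked linear layers collapse into a single linear function, while inserting ReLU between them breaks that collapse.

```python
import math


def relu(x: float) -> float:
    return max(0.0, x)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x: float) -> float:
    return math.tanh(x)


def two_linear_layers(x: float) -> float:
    """Two scalar layers with no activation: -3 * (2x + 1) + 0.5."""
    h = 2.0 * x + 1.0
    return -3.0 * h + 0.5


def one_linear_layer(x: float) -> float:
    """The same function as a single layer: (-3 * 2)x + (-3 * 1 + 0.5) = -6x - 2.5."""
    return -6.0 * x - 2.5


def two_layers_with_relu(x: float) -> float:
    """Same weights, but ReLU between the layers makes the function non-linear."""
    h = relu(2.0 * x + 1.0)
    return -3.0 * h + 0.5


print(two_linear_layers(-2.0), one_linear_layer(-2.0))  # 9.5 9.5
print(two_layers_with_relu(-2.0))                        # 0.5
```

The same algebra holds for weight matrices, which is why depth alone adds no expressive power unless a non-linearity sits between the layers.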

89. What is transfer learning, and how is it applied in machine learning?

Ans:

Transfer learning is a technique in which a pre-trained model, usually trained on a large dataset, is used as the starting point for a new, related task with limited data. Instead of training a model from scratch, transfer learning leverages the pre-trained model's knowledge, enabling faster convergence and often better performance, especially on tasks with smaller datasets. Transfer learning is valuable when training data is scarce or expensive to obtain.

90. What are the differences between the softmax and sigmoid functions?

Ans:

The softmax and sigmoid functions are both activation functions used in neural networks, particularly in the output layer for classification tasks. The sigmoid function outputs a probability between 0 and 1 for binary classification, making it suitable for problems with two classes. In contrast, the softmax function generalizes the sigmoid to multi-class classification, outputting a probability distribution over multiple classes in which all outputs sum to 1.
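Both functions fit in a few lines of Python. The sketch subtracts the largest logit before exponentiating, a standard numerical-stability trick that does not change the result, and checks that softmax over two logits `[z, 0]` reduces to `sigmoid(z)`. The example logits are arbitrary.

```python
import math


def sigmoid(z: float) -> float:
    """Binary classification: probability of the positive class."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits: list) -> list:
    """Multi-class classification: a probability distribution over the classes."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]

# With two classes and logits [z, 0], softmax gives the same value as sigmoid(z).
print(math.isclose(softmax([1.3, 0.0])[0], sigmoid(1.3)))  # True
```

For multi-label problems, where several classes can be true at once, an independent sigmoid per class is used instead of a single softmax.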