Amazon Redshift is a petabyte-scale, fully managed data warehouse service provided by Amazon Web Services (AWS). It allows users to quickly analyze data using SQL and Business Intelligence (BI) tools. Redshift is optimized for fast querying and can handle petabyte-sized data warehouses, making it a popular choice for organizations with large amounts of data to analyze. It is designed to be cost-effective and easy to use, with features such as automatic data compression and columnar storage to help reduce storage requirements and improve query performance. Redshift is also highly scalable, with the ability to add or remove nodes as needed to accommodate changes in data volume or query workloads.

1. What is Amazon’s red shift?

Ans:

Amazon Redshift is used to analyze all of our data using standard SQL and our existing business intelligence tools, and is used to allow us to run complex analytical queries. Organized and semi-organized can turn terabytes into petabytes, optimize complex queries, and scale up performance Columnar Storage – Perform storage and parallel query execution at scale.

2. What is the purpose of using the AWS Redshift database?

Ans:

Amazon Redshift is a petabyte-scale managed data warehouse service in the cloud that can start with just a few gigabytes of data and scale to petabytes or more. They are used to enable us to use our data to gain new insights into our business and our customers.

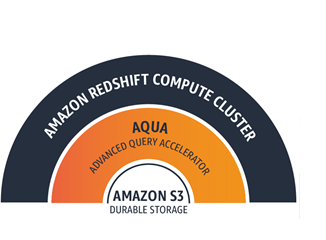

3. What is AQUA for Amazon Redshift?

Ans:

Advanced Query Accelerator (AQUA) is a hardware-accelerated cache, enabling redshifting up to 10 times faster than any other enterprise cloud data warehouse. All data in a storage structure with central storage requiring data is transferred to compute clusters for processing. It is used in moving computation to storage by performing a large share of data processing in place on the innovative cache.

4. What are the features of Amazon Redshift?

Ans:

- Supports VPC: Redshifting allows users to run within a VPC and also control access to the cluster through the virtual network environment.

- Encryption: All data stored in Redshift can be encrypted and configured while creating the tables.

- SSL: Redshift allows communications between clients and Redshift to be encrypted.

- Scalable: It allows us to expand storage capacity without any loss in performance.

5. Explain Concurrency Scaling in Amazon Redshift.

Ans:

Concurrency Scaling is a feature in Amazon Redshift that automatically adds additional computing resources to a cluster to handle an increase in query load. This feature is designed to improve performance and responsiveness during periods of high concurrency or when there is a sudden surge in query demand.

6. What is the redshift spectrum?

Ans:

Redshift Spectrum is used to enable us to run queries against exabytes of unstructured data in Amazon S3, without the need for load or ETL. It creates and optimises a query plan. Spectrum also scales to thousands of instances if needed, so queries run quickly regardless of the size of the data. We can also use the same SQL for Amazon S3 data as you do for our Amazon Redshift queries, as well as connect to the same Amazon Redshift endpoint using your same BI tools.

7. How does Redshift outperform other data warehouse technologies?

Ans:

Amazon Redshift is the easiest and fastest cloud data warehouse that facilitates three times better price performance than other data warehouses. Redshift delivers fast query performance at a relatively modest cost for businesses with datasets ranging in size from gigabytes to exabytes.

8. What are the most important features of red shift?

Ans:

- Redshift uses columnar storage, data compression, and region maps to reduce the amount of I/O needed to execute queries.

- The parallel processing data warehouse architecture is widely used to parallelize and distribute SQL operations.

- Redshift uses machine learning to deliver high productivity based on your workloads.

- Redshift uses result caching to provide sub-second response times for recurring queries.

9. How can we upload data to Redshift?

Ans:

There are several ways to load data into Redshift, but the three most commonly used methods are:

- The copy command is used to upload data to AWS Redshift.

- Use AWS services to load data into Redshift.

- Use the Insert command to load data into Redshift.

10. How to connect a private Redshift cluster?

Ans:

By selecting the No option, you can access your own IP address within the VPC. By doing this, you can implement the public IP address. Now, it is accessed via VPC.

11. What are the limitations of Amazon Redshift?

Ans:

It cannot be used as a direct database for applications due to the slow processing speed of web applications. There is no way to enforce uniqueness in AWS Redshift on imported data. It supports parallel loading only for Amazon EMR, relational DynamoDB, and Amazon S3.

12. How will Amazon Redshift pricing differ?

Ans:

Amazon Redshift pricing depends on the node type the customer has chosen to build their cluster. It basically offers two types of nodes that differ in terms of storage and computation.

Computation dense nodes:

- These enhanced compute nodes offer up to 244 GB of RAM and up to 2.5TB SSDs. The lowest spec price for dc2.largest ranges from $0.25 to $0.37 per hour.

Dense storage nodes:

- These nodes offer high-capacity storage in two versions – a basic version (ds2.xlarge) with up to 2TB hard drives and a top-end version (ds2.8xlarge) with up to 16TB hard drives.

13. Can you provide a list of system catalogue tables?

Ans:

- PG_ATTRIBUTE_INFO PG_CLASS_INFO PG_DATABASE_INFO

- PG_DEFAULT_ACL PG_EXTERNAL_SCHEMA PG_LIBRARY PG_PROC_INFO

- PG_STATISTIC_INDICATOR PG_TABLE_DEF

14. Can RDS instances be upgraded or downgraded as needed?

Ans:

Yes, can upgrade RDS instances with the help of the following command: modify-db-instance. If you can’t figure out how much CPU is needed for the upgrade, start with the db.m1.small database instance class and monitor CPU usage with the help of the Amazon CloudWatch Service tool.

15. Describe the difference between batch gradient descent and stochastic gradient descent.

Ans:

| Aspect | Batch Gradient Descent | Stochastic Gradient Descent | |

| Processing Data |

Uses every iteration to process the entire training dataset. |

Handles one randomly chosen data point each time around. | |

| Update Frequency | Before altering the model’s parameters, computes the cost function’s gradient using the complete dataset. | Updates parameters and computes the gradient for every single data point. | |

| Computational Efficiency | Usually slower because it needs the complete dataset for every iteration, especially for large datasets. | Generally quicker because it analyzes data points one at a time, especially for large datasets. | |

| Convergence | Tends to converge more slowly to the minimum but might offer a more precise solution. | More quickly converges but may bob around the minimum, yielding a less precise answer. | |

| Memory Usage | Requires more memory as it processes the entire dataset. | Requires less memory, making it suitable for online learning and large datasets. |

16. Whether Redshift is a columnar storage database?

Ans:

Redshift is a columnar storage database, optimised for large and redundant data types. Using columnar storage dramatically reduces disk I/O, improving performance as a result. Redshift gives you an option to select column-based encoding to compress data. Columnar data stores can be much more compact than row-based data stores because similar data is stored sequentially on disk.

17. What occurs if a table with the same name as an external table exists in my local storage?

Ans:

In the event that an external table and a table in your local storage share the same name, there may be conflicts or ambiguities when querying the table without providing the schema. The database management system must ascertain whether you are referring to an external or local table when you query one in a database. It is easier to distinguish between the local and external tables when you use the schema name in conjunction with the table name in your queries (e.g., schema_name.table_name).

18. Is redshift a massively parallel process? What is it all about?

Ans:

Amazon Redshift automatically distributes data and loads queries across all nodes. Amazon Redshift makes it easy to add nodes to your data warehouse and enables you to maintain fast query performance as your data warehouse grows.

19. How does Amazon Redshift handle concurrency?

Ans:

Amazon Redshift uses a combination of Workload Management (WLM) and queuing to handle concurrency in a multi-user environment. Here’s how it works:

- Workload Management (WLM): WLM in Amazon Redshift allows you to define queues and allocate memory and processing power to different queues based on workload priorities.

- Queues: WLM employs a concept of queues to manage concurrent queries. Queries are assigned to different queues based on the query group or by explicitly setting the service class.

- Query Prioritisation: WLM allows you to prioritise queries within queues based on importance.

20. What’s the difference between Amazon RDS, DynamoDB, and Redshift?

Ans:

- Amazon RDS: The RDS storage limit depends on the drive you’re running, but is up to 64TB with Amazon Aurora .SQL accommodates 16TB, all other engines allow 32TB.

- Redshift: The maximum capacity of Redshift is much higher at 2PB.

- DynamoDB: DynamoDB has unlimited storage capacity.

21. What happens to my backups if I delete a data warehouse group?

Ans:

When you delete a data warehouse group, you have the ability to specify whether a final snapshot is created upon deletion. This allows the deleted data warehouse group to be restored at a later time. All previously created manual snapshots of your data warehouse cluster will be retained and billed at standard Amazon S3 rates, unless you choose to delete them.

22.What are the most important functions of red shift?

Ans:

Redshift uses columnar garage, statistics compression, and sector maps to reduce the amount of I/O needed to execute queries. It uses a massively parallel processing statistics warehouse architecture to parallelize and distribute SQL operations. Redshift uses system recognition to deliver high productivity based on your workloads. Redshift uses result caching to provide second child react instances for recurring queries.

23. What are the most important functions of red shift?

Ans:

- Redshift uses columnar garage, statistics compression, and sector maps to reduce the amount of I/O needed to execute queries.

- It uses a massively parallel processing statistics warehouse architecture to parallelize and distribute SQL operations.

- Redshift uses system recognition to deliver high productivity based on your workloads.

- Redshift uses result caching to provide second child react instances for recurring queries.

- Redshift automatically and continuously backs up your statistics to S3.

- It can asynchronously mirror your footage to S3 in every other adjacent disaster recovery region.

24. What is the purpose of the ANALYZE COMPRESSION command in Amazon Redshift?

Ans:

- Compression Analysis: The command analyzes the data in each column of the specified table to understand patterns and characteristics.

- Recommendations: Based on the analysis, Redshift provides recommendations for the most efficient compression encodings for each column.

- Space Optimization: By implementing the suggested compression settings, you can reduce the amount of storage required for the table.

- Increased Query Performance: Efficient compression can enhance query performance by reducing the amount of data that needs to be read from disk and transferred across the network during query execution.

25. How can you control security in Amazon Redshift?

Ans:

By default, an Amazon Redshift cluster is most actively available to the AWS account that creates the cluster. Use IAM to create personal debts and control individual debt permissions to control the group process. If you are using the EC2-VPC platform in your Redshift cluster, you will need to use VPC security organisations. If you are using the EC2-Classic platform in your Redshift cluster, you will need to use Redshift security organisations.

26. What is the premium alternative to tracking in Amazon Redshift?

Ans:

Use the database audit logging function to record music data related to authentication attempts, connections and disconnections, modifications to database user definitions, and queries run within the database. Logs are saved in S3 buckets. Redshift tracks activities and keeps data about them for several weeks in your AWS account. Redshift provides overall performance metrics and statistics so you can listen to the overall fitness and performance of your clusters and databasesIt uses CloudWatch metrics to view cluster metrics, which include CPU usage, latency, and throughput.

27. What is batch snapshots in Amazon Redshift?

Ans:

Just-in-time backup of the cluster. There are different types of shots: computerized and directed. Snapshots are saved to S3 to use SSL. Redshift periodically takes incremental snapshots of your stats every eight hours or every 5GB as stats nodes change. Redshift provides a free snapshot garage that is the same storage capacity as your collection until you delete the collection. After the bulk photo garage limit is reached, you will be charged for any additional garage at the regular rate. Automatic snapshots are enabled by default while creating a batch.

28. Why should I use Amazon Redshift over an on-premises statistics warehouse?

Ans:

Local statistics repositories require a significant amount of time and resources to manage, especially for large data sets. In addition, the financial costs of creating, maintaining, and growing self-controlled website statistics repositories on the Internet are extremely high. As your statistics expand, you need to constantly switch between which statistics to load into your statistics repository and which statistics to keep so that one can handle the charges, keep ETL complexity low, and provide the appropriate results.

29. How does amazon redshift secure data in both at rest and in transit?

Ans:

Amazon Redshift supports data encryption at rest and in transit to enhance the security of your data.

- Data at Rest Encryption: Redshift uses Amazon S3 server-side encryption (SSE) to encrypt data at rest in the underlying storage. Alternatively, you can use AWS Key Management Service (KMS) to manage and control the keys used for encrypting data at rest.

- Data in Transit Encryption: Redshift uses SSL (Secure Sockets Layer) to encrypt data in transit between your client application and the Redshift cluster.

30. Discuss the impact of the distribution key choice on query performance.

Ans:

- The distribution key choice in a distributed database significantly influences query performance. Selecting an appropriate distribution key is crucial for achieving optimal data distribution among nodes.

- If the distribution key aligns with the access patterns of your queries, it helps distribute data evenly across nodes.

- Common distribution strategies include hash-based, range-based, or even a replicated distribution. Hash-based distribution is often versatile, but it relies on the evenness of the hash function to prevent skew.

31. What is a materialised view and how does it differ from a regular view?

Ans:

- The key difference lies in performance optimization: materialised views improve query response times by storing computed results, whereas regular views dynamically generate results when queried, potentially incurring more processing overhead.

- Materialised views are suitable for scenarios where there are complex and resource-intensive queries that can benefit from precomputed results, but they come with the trade-off of managing and refreshing the materialised data as needed.

32. What is an Amazon Redshift controlled garage?

Ans:

Amazon Redshift Controlled Storage is available with RA3 node types that allow you to scale out pay-for-compute and store one at a time so you can configure your cluster based on your compute needs. It automatically uses the high-performance SSD-based local garage as a Level 1 cache and takes advantage of optimizations including statistics block temperature, statistics blocking, and workload patterns to deliver high performance while automatically scaling the garage to Amazon S3 as required without any action required.

33. How to restore collections from a backup in redshift?

Ans:

Amazon Redshift copies all your data within your data warehouse cluster when it is mounted, and also continuously backs up your data to S3. Amazon Redshift always tries to keep at least three copies of your data (the original, the replica on the compute nodes, and the backup in Amazon S3). Redshift can also asynchronously copy your snapshots to S3 in another disaster recovery region.

34. What database query options are available in Amazon Redshift?

Ans:

Database Query Options: Connect to your cluster via a SQL recipient machine using common ODBC and JDBC connections. Connect to your cluster and run queries in the AWS Management Console using the Query Editor.

35. What is Amazon Redshift ODBC?

Ans:

The Amazon Redshift ODBC Driver lets you connect to live Amazon Redshift data, directly from applications that support ODBC connectivity. It is also useful for reading, writing, and updating Amazon Redshift data through the standard ODBC driver interface.

36. What are the limits for each region in Amazon Redshift?

Ans:

The maximum number of tables is 9,900 for 1xlarge cluster types and 20,000 for 8xlarge cluster types. The number of user-defined databases you can create for each group is 60. The number of simultaneous user connections that can be made to the group is 500. The number of AWS accounts you can licence to restore a snapshot is 20 per snapshot and 100 per AWS KMS key.

37. What are the functionalities of amazon redshift spectrum?

Ans:

Amazon Redshift Spectrum extends the functionality of Amazon Redshift to enable querying data stored in Amazon S3. Here’s a discussion on its role:

- External Tables: Spectrum allows you to define external tables in Amazon Redshift that reference data stored in Amazon S3.

- Separation of Storage and Compute: Redshift Spectrum separates storage and compute resources. The data remains in Amazon S3, and compute resources are used only when queries are executed.

- Cost Efficiency: By storing large volumes of data in Amazon S3 and utilising Redshift Spectrum for querying, you can manage costs more efficiently.

- Unified Querying: Redshift Spectrum seamlessly integrates with the Redshift query engine, providing a unified experience for querying data stored in both Redshift tables and external tables in S3.

38. Explain how Redshift manages and optimises queries with complex filters.

Ans:

Amazon Redshift optimises queries with complex filters through a combination of query optimization techniques, parallel processing, and distribution strategies.

- Query Parsing and Rewriting: When a complex query is submitted, Redshift’s query planner parses and rewrites the SQL to generate an initial query plan.

- Query Optimization: Redshift’s query optimizer evaluates various execution plans and selects the most efficient one.

- Distribution and Sorting: Redshift uses the distribution key and sort key settings to organise data across nodes. If the filters involve conditions on the distribution key or sort keys.

39. What are the limitations of Amazon Redshift?

Ans:

Amazon Redshift has some limitations that users should be aware of: Redshift is a data storage service and is not designed for real-time, low-latency workloads. It may not be suitable for applications that require fast typing or reading.

40. What is the role of the Redshift COPY options such as COMPUPDATE and STATUPDATE?

Ans:

In Amazon Redshift, the COPY command is used to load data from Amazon S3 or other supported sources into a Redshift table. The COMPUPDATE and STATUPDATE options are parameters you can include with the COPY command to control how compression and statistics are updated during the data load process.

41. Explain the impact of the COPY command’s ‘COMPUPDATE’ option on performance.

Ans:

The impact of the COMPUPDATE option on performance in Amazon Redshift is a trade-off between faster data loading times (with potential suboptimal compression) and more accurate compression decisions (with potentially slower data loading). The appropriate setting depends on specific use cases and priorities.

42. What is the purpose of the ANALYZE COMPRESSION command in Amazon Redshift?

Ans:

- The ANALYZE COMPRESSION command in Amazon Redshift is used to analyse and optimise the compression settings applied to tables. Compression is a technique used to reduce the storage space required for data.

- To use the ANALYZE COMPRESSION command, you typically run it on a specific table, and it provides insights and recommendations for that table’s compression settings.

43. Explain the COPY and ‘IGNORE HEADER’ option and when it might be used.

Ans:

- The IGNORE HEADER option in the COPY command of Amazon Redshift is used to specify the number of header rows to skip at the beginning of the data file during the data loading process.

- Using the IGNORE HEADER option is helpful in scenarios where the first few rows of your data file are not part of the actual dataset and should be excluded during the loading process into Amazon Redshift.

44. Explain the concept of zone maps in Amazon Redshift.

Ans:

In Amazon Redshift, zone maps are metadata structures that store information about the minimum and maximum values within a block of data in a column. They help optimise query performance by allowing the query planner to skip reading irrelevant blocks when processing a query, leading to faster data retrieval. Zone maps are particularly beneficial for range-restricted queries, where the query filters data based on a range of values within a column.

45. When you choose a distribution key for a compound sort key?

Ans:

When choosing a distribution key for a compound sort key in Amazon Redshift, it’s important to consider several factors to ensure optimal performance. The distribution key determines how data is distributed across nodes in the Redshift cluster, and the compound sort key defines the physical order of the data on disk within each node.

46. Can you elaborate on the importance of choosing a proper column encoding for performance

Ans:

Selecting the right column encoding in Amazon Redshift is a critical step in achieving optimal query performance and efficient use of storage resources. It requires consideration of data characteristics, workload patterns, and ongoing maintenance practices to ensure sustained performance gains over time.

47. Explain distribution styles in Amazon Redshift.

Ans:

- Key distribution: Data is distributed based on a chosen key.

- Even distribution: Data is distributed evenly across all nodes.

- All distribution: A single copy of the entire table is distributed to each node.

48.Can you use Redshift with other AWS services?

Ans:

Yes, you can integrate Amazon Redshift with various AWS services. Some common integrations include:

- Amazon S3: You can load data into Redshift from S3 using the COPY command, and Redshift Spectrum allows querying data directly from S3.

- Amazon DynamoDB: Redshift integrates with DynamoDB, enabling you to analyse and combine data from DynamoDB tables with Redshift data.

- Amazon EMR (Elastic MapReduce): Redshift can be used in conjunction with EMR for processing and analysing large datasets using Hadoop or other frameworks.

49. What is the significance of the Redshift Advisor, and how can it be utilised?

Ans:

The Redshift Advisor is a valuable tool in Amazon Redshift that provides recommendations and insights to help users optimise their Redshift clusters for better performance, cost efficiency, and overall resource utilisation.

50. Discuss the role of Redshift’s automatic workload management in query optimization.

Ans:

Redshift’s Automatic Workload Management is instrumental in query optimization by providing a flexible and adaptive framework for managing concurrent queries, prioritising resources, and ensuring efficient allocation based on the workload characteristics. It plays a vital role in maintaining consistent performance and responsiveness in dynamic analytical environments.

51. Discuss the use of Redshift’s COPY command with the ‘MAXERROR’ option.

Ans:

The MAXERROR option in the Redshift COPY command is used to specify the maximum number of allowed errors during the data loading process. When loading data into a Redshift table using the COPY command, it’s possible that some records may not conform to the expected format or constraints. The MAXERROR option allows you to control how many such errors are tolerated before the COPY operation is considered to have failed.

52. What is the role of the COPY command’s ‘manifest’ option in Amazon Redshift?

Ans:

The manifest option in the COPY command of Amazon Redshift is used when loading data from Amazon S3. When you use the manifest option, you provide a JSON-formatted manifest file that contains a list of the data files to be loaded into Redshift. This manifest file helps manage the loading process by specifying the files to be loaded and their corresponding S3 locations.

53. How does Amazon Redshift achieve high performance?

Ans:

- Redshift achieves high performance through a combination of columnar storage, data compression, and parallel processing across multiple nodes.

- These features collectively contribute to Amazon Redshift’s ability to handle complex analytical queries on large datasets with high performance

54. Can you list best practices for optimising Amazon Redshift performance?

Ans:

- Best practices include choosing appropriate sort and distribution keys, utilising compression, managing table statistics, and keeping data distribution even.

- These best practices cover various aspects of Amazon Redshift performance optimization, ranging from query tuning to cluster configuration and data loading strategies.

55. What is the role of the leader node in Amazon Redshift?

Ans:

The leader node acts as the orchestrator of query execution, managing the distribution of tasks, communication with compute nodes, and aggregation of results. It plays a pivotal role in ensuring efficient parallel processing and coordination within an Amazon Redshift cluster.

56. What is the significance of the sort key in Amazon Redshift tables?

Ans:

The sort key in Amazon Redshift is a critical element in optimising storage, compression, and query performance. By choosing an appropriate sort key strategy based on the types of queries and workload patterns, you can significantly enhance the efficiency of your data warehouse.

57. How does Amazon Redshift optimise query performance using execution plans?

Ans:

- Amazon Redshift optimises query performance using execution plans, which are detailed strategies outlining how a query will be executed. These plans are created by the query planner, a component of the Redshift query processing engine.

- By utilising execution plans and cost-based optimization, Amazon Redshift dynamically adapts to the characteristics of the data and queries, resulting in efficient and performant execution of analytical workloads.

58. What are some considerations for optimising data loading performance in Redshift?

Ans:

- Optimising data loading performance in Amazon Redshift is crucial for efficient and timely data processing.

- By carefully considering these optimization strategies, you can significantly enhance the efficiency and performance of data loading operations in Amazon Redshift.

59. How does Amazon Redshift handle backups?

Ans:

By using automated and manual snapshots with customizable retention periods, Redshift provides a reliable and flexible backup solution. This approach ensures that you have a point-in-time recovery option and can restore your data in case of unexpected events or data loss.

60. Can you use Redshift for real-time analytics?

Ans:

While Redshift is designed for high-performance analytics, it may not be the best choice for real-time analytics due to its batch processing nature. For real-time use cases, other AWS services like Amazon Aurora or Amazon DynamoDB might be more suitable.

61. What is Zone Maps and how does it contribute to performance?

Ans:

Zone Maps store metadata about the minimum and maximum values of a column in a block, improving performance by skipping irrelevant blocks during query execution.

62. What is the purpose of the UNLOAD command in Amazon Redshift?

Ans:

The UNLOAD command is used to export data from Redshift tables to Amazon S3, providing a convenient way to create backups or move data to other systems.

63. What is the role of Amazon Redshift Spectrum in improving analytics capabilities?

Ans:

- Amazon Redshift Spectrum is a feature that extends the analytics capabilities of Amazon Redshift by allowing you to query and analyse data stored in Amazon S3 directly from your Redshift cluster.

- Amazon Redshift Spectrum extends the reach of your data analytics by allowing you to seamlessly analyse and query data stored in Amazon S3 alongside your Redshift data.

64. How does data distribution across nodes contribute to parallel processing efficiency?

Ans:

- Amazon Redshift, data distribution across nodes is a key aspect of its Massively Parallel Processing (MPP) architecture, and it plays a crucial role in achieving efficient parallel processing.

- Data distribution across nodes in Amazon Redshift allows for efficient parallel processing, minimising data movement, optimising query performance, and enabling the system to scale seamlessly as data volumes increase.

65. Explain the role of the COMMIT command in Amazon Redshift.

Ans:

The COMMIT command in Amazon Redshift plays a crucial role in finalising transactions and ensuring data consistency. When a transaction is initiated in Redshift, it may involve multiple SQL statements that are executed as a single unit of work. The COMMIT command is responsible for making the changes performed during the transaction permanent.

66. What is the purpose of the Staging area in Amazon Redshift data loading?

Ans:

The staging area in Amazon Redshift data loading serves as an intermediate storage space where data is temporarily stored before being loaded into Redshift tables. This staging process is particularly important for efficient and parallelized data loading operations In this example, data is initially loaded into the staging_table where transformations and validations can be applied. Once the data is prepared, it is then loaded into the final target_table.

67. What is the significance of the NO LOAD option in the COPY command?

Ans:

The NO LOAD option is added to the COPY command, indicating that the data in the specified file (data_file.csv in this case) will not be loaded into the target_table. This can be especially helpful as a pre-check step before actual data loading, ensuring that the data conforms to the expected format and can be successfully loaded into the target table without errors.

68. What is data distribution in Amazon Redshift, and why is it important?

Ans:

- Data distribution in Amazon Redshift refers to the way data is distributed across the nodes of a Redshift cluster in a Massively Parallel Processing (MPP) architecture.

- Data distribution is a critical factor in optimising the performance and scalability of Amazon Redshift. By distributing data effectively, Redshift ensures that queries can be processed efficiently in parallel, leading to faster and more responsive analytical queries in a data warehouse environment.

69. What is the significance of the VACUUM operation in Amazon Redshift?

Ans:

- The VACUUM operation in Amazon Redshift is significant for managing storage and optimising query performance. When rows are updated or deleted in Redshift, the space occupied by these changes is not immediately released.

- The VACUUM operation is responsible for reclaiming this unused space, compacting the data, and resorting rows.

- It’s important to note that running VACUUM is a maintenance task and should be performed regularly, especially in environments with frequent data modifications, to keep the cluster’s storage and performance in good shape.

70. Explain the use of Materialised Views in Amazon Redshift.

Ans:

Materialised views in Amazon Redshift are database objects that store the results of a precomputed query. Unlike standard views that execute the underlying query at the time of access, materialised views store the computed result, offering improved query performance by eliminating the need to repeatedly execute complex or resource-intensive queries.

71. Explain the importance of compression in Amazon Redshift.

Ans:

Compression in Amazon Redshift is a fundamental optimization technique that offers a balance between storage efficiency, cost savings, and improved query performance. Utilising Redshift’s automatic compression algorithms and adjusting compression settings based on your data characteristics are key strategies for achieving these benefits.

72. Explain the WLM (Workload Management) in Amazon Redshift.

Ans:

WLM (Workload Management) in Amazon Redshift refers to a set of features and controls designed to manage and prioritise query workloads within a Redshift cluster. This is particularly important in a multi-user environment where multiple queries from different users or applications are contending for resources.

73. Explain the architecture of Amazon Redshift. How does it handle large-scale data processing?

Ans:

- Amazon Redshift employs a distributed and columnar storage architecture to handle large-scale data processing efficiently.

- Amazon Redshift’s architecture leverages distributed computing, columnar storage, and effective data distribution to handle large-scale data processing.

74. Explain the concept of Sort and Merge key in Amazon Redshift.

Ans:

- Sort Key: The Sort Key determines the order in which data is physically stored on disk within a table. Redshift uses a columnar storage model, and sorting data based on a specific column can significantly enhance query performance.

- Merge Key: The Merge Key is used during the loading process when new data is added to an existing table. It helps efficiently merge the new data with the existing data. By specifying a Merge Key, Redshift can avoid the need to re-sort the entire table when new data is added.

75. What are the benefits and use cases of columnar storage in Amazon Redshift?

Ans:

- Analytical Reporting: Columnar storage is ideal for reporting and analytical workloads where queries involve aggregations, filtering, and selection of specific columns.

- Data Warehousing: Redshift’s columnar storage makes it well-suited for data warehousing applications, especially when dealing with large datasets and complex analytics.

- Business Intelligence (BI): BI tools often generate queries that benefit from the efficiency of columnar storage, leading to faster insights and reporting.

- Data Exploration: For ad-hoc queries and data exploration, columnar storage allows users to quickly analyse specific subsets of data without unnecessary I/O operations.

76. How do compute nodes function in Amazon Redshift?

Ans:

Compute nodes in Amazon Redshift enable parallel and distributed processing of queries, allowing the system to efficiently handle large-scale data analytics. Their autonomy, combined with parallelism, plays a crucial role in achieving high-performance query processing in a data warehousing environment.

77. How do you troubleshoot and optimise slow-running queries in Amazon Redshift?

Ans:

Troubleshooting and optimising slow-running queries in Amazon Redshift involves a combination of analysing query performance, identifying bottlenecks, and applying optimization techniques. BH

78. What is the role of the leader node in Amazon Redshift?

Ans:

The leader node in Amazon Redshift is the central coordinating entity that receives, optimises, and distributes queries across compute nodes for parallel processing. Its efficient management of resources and coordination with compute nodes contribute to the overall performance of the data warehouse.

79. How does Amazon Redshift support schema changes, and what should be taken into account when making schema modifications?

Ans:

Amazon Redshift supports schema changes through the use of SQL statements, particularly the ALTER command. When making schema modifications, it’s essential to consider the potential impact on existing data, queries, and overall performance.

- Data Migration and Validation: Before making schema changes, it’s crucial to plan for the migration of existing data. Validate that the data in the affected tables can be transformed or migrated without loss of integrity.

- Query Impact: Analyse the impact of schema changes on existing queries. Modifications may require updates to SQL queries and applications that rely on the database schema. Evaluate how queries will be affected and ensure compatibility.

80. How can you optimise query performance in Amazon Redshift, considering table design and keys?

Ans:

Optimising query performance involves selecting appropriate distribution and sort keys, using compression effectively, choosing optimal table designs, and leveraging features like materialised views. Regular monitoring and tuning of queries contribute to ongoing performance optimization.

81. How does Amazon Redshift handle backups?

Ans:

- Automated Snapshots: Amazon Redshift automatically takes regular snapshots of the entire data warehouse cluster. These automated snapshots are incremental, capturing changes to the cluster since the last snapshot.

- Manual Snapshots: In addition to automated snapshots, users can create manual snapshots of the cluster at any time. Manual snapshots are useful before making significant changes to the database, such as schema modifications or data loading.

82. How does Amazon Redshift handle schema changes?

Ans:

Amazon Redshift supports schema changes, but they involve considerations such as the potential impact on existing queries, the need for table maintenance, and the use of the ALTER command. Proper planning and communication are essential to minimise disruption during schema changes.

83. How can you monitor and optimise query performance in Amazon Redshift?

Ans:

Monitoring and optimising query performance in Amazon Redshift involve using tools like query monitoring, reviewing query execution plans, and making adjustments based on insights gained. Regular maintenance tasks like vacuuming and analysing tables also contribute to ongoing optimization.

84. Can you explain the process of concurrency scaling?

Ans:

Concurrency Scaling in Amazon Redshift dynamically adds extra computing resources, known as clusters, during periods of high query demand. This ensures that the system can handle concurrent queries effectively, optimising performance without manual intervention.

85. Compare Amazon Redshift with other cloud-based data warehousing solutions.

Ans:

- Amazon Redshift is well-suited for users already on the AWS platform, offering tight integration with other AWS services.

- Google BigQuery is known for its serverless architecture and ease of use, particularly for users on the Google Cloud Platform.

- Snowflake provides a cloud-native data warehousing solution with separation of storage and compute resources, offering flexibility and scalability.

- Ultimately, the choice between these solutions depends on specific business requirements, existing cloud provider preferences, and the desired balance between performance, ease of use, and cost.

86. What are the advantages of using columnar storage in Amazon Redshift?

Ans:

Using columnar storage in Amazon Redshift offers several advantages, particularly in the context of analytics and data warehousing. Here are the key advantages and the impact on query efficiency:

- The advantages of columnar storage in Amazon Redshift, such as compression efficiency, minimised I/O operations, improved query performance, and efficient data retrieval, collectively contribute to a high-performance analytics platform.

- The design choices of columnar storage align with the patterns of analytical workloads, making Amazon Redshift well-suited for large-scale data warehousing and analytics tasks.

87. What is Columnar storage?

Ans:

Columnar storage is a database storage format where data is stored in columns rather than rows. In a traditional row-based storage system, each record or row is stored together, including all the columns associated with that row. However, in a columnar storage system, all values from a single column are stored together.

88. How does WLM contribute to efficient resource utilisation?

Ans:

By utilising Workload Management in Amazon Redshift, users can effectively manage and prioritise queries, allocate resources based on their importance, and prevent resource contention. This helps optimise the performance of the data warehouse in a multi-user environment, ensuring that queries are executed efficiently and meeting the specific needs of different workloads.

89. What are the benefits of using AWS Redshift?

Ans:

- Built-in security with end-to-end encryption.

- Multiple query support which provides significant upgrades in query speed.

- It provides an easy-to-use platform similar to MySQL and provides the use of PostgreSQL, ODBC, and JDBC.

- It provides automated backup and fast scaling with less complexity.

- It is a cost-effective storage technology.

90. How does Amazon Redshift handle data encryption during data loading and unloading

Ans:

Data Loading:

- SSL/TLS Encryption: Amazon Redshift supports Secure Sockets Layer (SSL) and Transport Layer Security (TLS) encryption for data in transit. This ensures that data transmitted between client applications and the Redshift cluster is encrypted.

- AWS Key Management Service (KMS): Redshift allows the use of AWS Key Management Service (KMS) for encryption of data during the loading process.

UNLOAD Command Encryption:

- The UNLOAD command in Amazon Redshift is used to export data from Redshift tables to Amazon S3. During this process, you can specify options for encrypting the data in the Amazon S3 bucket.

- S3 Server-Side Encryption: If your Amazon S3 bucket is configured for server-side encryption, the data unloaded to S3 can be automatically encrypted by S3. Redshift supports server-side encryption using AWS-managed keys (SSE-S3), AWS Key Management Service (SSE-KMS), or customer-provided keys (SSE).

91. What is the purpose of the SVV_TABLE_INFO system view in Amazon Redshift?

Ans:

The SVV_TABLE_INFO system view in Amazon Redshift provides information about the structure and characteristics of tables in a Redshift database. It serves as a valuable resource for users and administrators to gather metadata and statistics related to tables, helping in performance optimization, query planning, and general database management.

92. How does Amazon Redshift handle transactions and isolation levels?

Ans:

Amazon Redshift provides robust support for transactions following the ACID properties and offers standard SQL isolation levels. Users can control the isolation level for their sessions, balancing the trade-off between consistency and concurrency based on their specific application requirements.

93. Explain the differences between COPY and INSERT operations in Amazon Redshift.

Ans:

- COPY: The COPY command is the preferred method for bulk data loading into Amazon Redshift. It efficiently loads large volumes of data from Amazon S3, Amazon DynamoDB, or other supported sources. COPY is designed for high-performance parallel loading and minimises data movement between nodes in the Redshift cluster.

- INSERT: The INSERT command is typically used for inserting a smaller amount of data or for loading data row by row. While INSERT supports bulk loading through multiple value lists, it is generally less efficient than COPY for large-scale data loading.

94. What are the benefits Amazon Redshift support materialised?

Ans:

- Query Speed: Materialised views enhance query performance, especially for analytical queries that involve aggregations, by avoiding the need to recalculate results on the fly.

- Reduced Complexity: Users can simplify complex queries by using materialised views, leading to more readable and maintainable SQL code.

- Better Resource Utilisation: By precomputing aggregations and storing them in materialised views, resources are used more efficiently when executing queries.

95. Explain the concept of skew in Amazon Redshift.

Ans:

In Amazon Redshift, skew refers to an uneven distribution of data across slices or nodes within a cluster. When certain slices or nodes contain significantly more data than others, it results in data skew. Skew can have a notable impact on query performance, particularly in a massively parallel processing (MPP) architecture like Redshift. Here’s an explanation of the concept of skew and its implications on query performance.