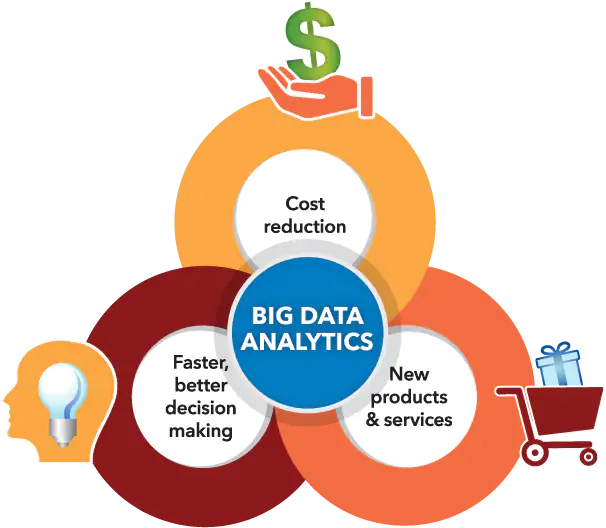

Overview of Big Data Analytics :-

Big Data Analytics offers an almost endless source of business and informational insight, which can lead to operational improvements and new opportunities for companies to generate untapped revenue in almost every industry. Use cases include customer personalization, risk mitigation, fraud detection, internal operations analytics, and the many new ones emerging almost daily. The value hidden in company data has businesses looking to build a cutting-edge analytics operation.

Extracting value from raw data presents many challenges for IT teams. Every company has different needs and different data assets. Business initiatives change quickly in an ever-accelerating marketplace, and keeping up with new directives can require agility and scalability. On top of that, a successful Big Data Analytics operation requires substantial computing resources, technological infrastructure, and highly skilled personnel.

These challenges can cause many operations to fail before they deliver value. In the past, a lack of computing power and access to automation put a true production-scale analytics operation beyond the reach of most companies: Big Data was too expensive, too much hassle, and offered no clear ROI. With the rise of cloud computing and new advances in compute resource management, Big Data tools are more accessible than ever before.

Benefits of Big Data Analytics :-

1. Risk Management:

Banco de Oro, a Philippine banking company, uses Big Data analytics to identify fraudulent activities and discrepancies. The organization uses it to narrow down a list of suspects or the root causes of problems.

2. Product Development and Innovations:

Rolls-Royce, one of the largest manufacturers of jet engines for airlines and armed forces across the globe, uses Big Data analytics to analyze how efficient its engine designs are and whether they need any improvements.

3. Faster and Better Decision Making Within Organizations:

Starbucks uses Big Data analytics to make strategic decisions. For example, the company uses it to decide whether a particular location would be suitable for a new outlet. It analyzes several different factors, such as population, demographics, and the accessibility of the location.

4. Improve Customer Experience:

Delta Air Lines uses Big Data analytics to improve customer experiences. It monitors tweets to find out how its customers experienced their journeys, delays, and so on. The airline identifies negative tweets and does what is necessary to remedy the situation. Publicly resolving these issues and offering solutions helps the airline build good customer relations.

The Lifecycle Phases of Big Data Analytics :-

Now, let's review how Big Data analytics works:

Step 1 – Business case evaluation – The Big Data analytics lifecycle begins with a business case, which defines the reason and goal behind the analysis.

Step 2 – Identification of data – Here, a broad variety of data sources are identified.

Step 3 – Data filtering – All of the data identified in the previous step is filtered here to remove corrupt data.

Step 4 – Data extraction – Data that is not compatible with the tool is extracted and then transformed into a compatible form.

Step 5 – Data aggregation – In this step, data with the same fields across different datasets is integrated.

Step 6 – Data analysis – Data is evaluated using analytical and statistical tools to discover useful information.

Step 7 – Visualization of data – With tools like Tableau, Power BI, and QlikView, Big Data analysts can produce graphic visualizations of the analysis.

Step 8 – Final analysis result – This is the last step of the Big Data analytics lifecycle, where the final results of the analysis are made available to the business stakeholders who will act on them.
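Steps 3 to 6 can be sketched as a small in-memory pipeline. This is a minimal illustration only. It assumes two hypothetical datasets (`sales` and `stores`) and hypothetical helper names (`is_valid`, `transform`, `aggregate`, `analyze`). A production lifecycle would run these stages on a distributed engine rather than on Python lists, and Step 7 (visualization) is left out.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical raw records from two identified sources (Step 2).
sales = [
    {"store_id": "S1", "amount": "120.50"},
    {"store_id": "S2", "amount": "80"},
    {"store_id": "S1", "amount": None},   # corrupt: missing amount
    {"store_id": "S3", "amount": "n/a"},  # corrupt: not a number
    {"store_id": "S2", "amount": "45.25"},
]
stores = [
    {"store_id": "S1", "city": "Seattle"},
    {"store_id": "S2", "city": "Manila"},
]

def is_valid(record):
    """Step 3: keep only records whose amount parses as a number."""
    try:
        float(record["amount"])
        return True
    except (TypeError, ValueError):
        return False

def transform(record):
    """Step 4: convert the amount string into a float the analysis can use."""
    return {"store_id": record["store_id"], "amount": float(record["amount"])}

def aggregate(sales_rows, store_rows):
    """Step 5: integrate the two datasets on their shared store_id field."""
    city_by_store = {s["store_id"]: s["city"] for s in store_rows}
    return [
        {**row, "city": city_by_store[row["store_id"]]}
        for row in sales_rows
        if row["store_id"] in city_by_store
    ]

def analyze(rows):
    """Step 6: compute the total and average sale amount per city."""
    by_city = defaultdict(list)
    for row in rows:
        by_city[row["city"]].append(row["amount"])
    return {city: {"total": sum(v), "average": mean(v)} for city, v in by_city.items()}

clean = [transform(r) for r in sales if is_valid(r)]
result = analyze(aggregate(clean, stores))
print(result)
# prints {'Seattle': {'total': 120.5, 'average': 120.5}, 'Manila': {'total': 125.25, 'average': 62.625}}
```

Each stage is a separate function so it can be tested or replaced on its own. This mirrors how the lifecycle treats filtering, extraction, aggregation, and analysis as distinct steps.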

Top Tools of Big Data Analytics and Their Features :-

1. Apache Storm: Apache Storm is a free, open-source big data computation system. It is an Apache project that provides a real-time framework for data stream processing and supports any programming language. It offers a distributed, real-time, fault-tolerant processing system. The Storm scheduler manages workload across multiple nodes according to the topology configuration and works well with the Hadoop Distributed File System (HDFS).

Features:

- It is benchmarked as processing one million 100-byte messages per second per node

- Storm guarantees that each unit of data will be processed at least once

- Great horizontal scalability

- Built-in fault tolerance

- Auto-restart on crashes

- Written in Clojure

- Works with Directed Acyclic Graph (DAG) topology

- Output files are in JSON format

- It has multiple use cases: real-time analytics, log processing, ETL, continuous computation, distributed RPC, and machine learning

2. Talend: Talend is a big data tool that simplifies and automates big data integration. Its graphical wizard generates native code. It also supports big data integration and master data management, and it checks data quality.

Features:

- Streamlines ETL and ELT for big data

- Achieves the speed and scale of Spark

- Accelerates your move to real time

- Handles multiple data sources

- Provides numerous connectors under one roof, which lets you customize the solution to your needs

- Talend Big Data Platform simplifies the use of MapReduce and Spark by generating native code

- Smarter data quality with machine learning and natural language processing

- Agile DevOps to speed up big data projects

- Streamlines all DevOps processes

3. Apache CouchDB: It is an open-source, cross-platform, document-oriented NoSQL database that aims at ease of use and a scalable architecture. It is written in the concurrency-oriented language Erlang. CouchDB stores data in JSON documents that can be accessed over the web or queried using JavaScript. It offers distributed scaling with fault-tolerant storage. It allows access to data by defining the Couch Replication Protocol.

Features:

- CouchDB is a single-node database that works like any other database

- It allows running a single logical database server on any number of servers

- It uses the ubiquitous HTTP protocol and the JSON data format

- Document insertion, updates, retrieval, and deletion are quite easy

- The JavaScript Object Notation (JSON) format is translatable across different languages

4. Apache Spark: Spark is another very popular open-source big data analytics tool. Spark has over 80 high-level operators that make it easy to build parallel applications. It is used by a wide range of organizations to process large datasets.

Features:

- It helps run an application in a Hadoop cluster up to 100 times faster in memory and 10 times faster on disk

- It offers lightning-fast processing

- Support for sophisticated analytics

- Ability to integrate with Hadoop and existing Hadoop data

- It provides built-in APIs in Java, Scala, and Python

- Spark provides in-memory data processing, which is much faster than the disk processing used by MapReduce

- In addition, Spark works with HDFS, OpenStack, and Apache Cassandra, both in the cloud and on-premises, adding another layer of flexibility to your business's big data operations

5. Splice Machine: It is a big data analytics tool. Its architecture is portable across public clouds such as AWS, Azure, and Google Cloud.

Features:

- It can dynamically scale from a few to thousands of nodes to enable applications at every scale

- The Splice Machine optimizer automatically evaluates every query against the distributed HBase regions

- Reduce management, deploy faster, and reduce risk

- Consume fast streaming data; develop, test, and deploy machine learning models

6. Plotly: Plotly is an analytics tool that lets users create charts and dashboards to share online.

Features:

- Easily turn any data into eye-catching and informative graphics

- It provides audited industries with fine-grained information on data provenance

- Plotly offers unlimited public file hosting through its free community plan

7. Azure HDInsight: It is a Spark and Hadoop service in the cloud. It provides big data cloud offerings in two categories, Standard and Premium. It provides an enterprise-scale cluster for an organization to run its big data workloads.

Features:

- Reliable analytics with an industry-leading SLA

- It offers enterprise-grade security and monitoring

- Protect data assets and extend on-premises security and governance controls to the cloud

- A high-productivity platform for developers and scientists

- Integration with leading productivity applications

- Deploy Hadoop in the cloud without purchasing new hardware or paying other up-front costs

8. R: R is a programming language and free software environment for statistical computing and graphics. The R language is popular among statisticians and data miners for developing statistical software and performing data analysis. R provides a large number of statistical tests.

Features:

- Effective data handling and storage facility

- It provides a suite of operators for calculations on arrays, in particular matrices

- It provides a coherent, integrated collection of big data tools for data analysis

- It provides graphical facilities for data analysis that display either on-screen or on hard copy

9. Skytree: Skytree is a big data analytics tool that empowers data scientists to build more accurate models faster. It offers accurate predictive machine learning models that are easy to use.

Features:

- Highly scalable algorithms

- Artificial intelligence for data scientists

- It allows data scientists to visualize and understand the logic behind ML decisions

- Easy to adopt, either through the GUI or programmatically in Java

- Model interpretability

- It is designed to solve robust predictive problems with data preparation capabilities

- Programmatic and GUI access

10. Lumify: Lumify is considered a visualization platform as well as a big data fusion and analysis tool. It helps users discover connections and explore relationships in their data via a suite of analytic options.

Features:

- It provides both 2D and 3D graph visualizations with a variety of automatic layouts

- Link analysis between graph entities, integration with mapping systems, geospatial analysis, multimedia analysis, and real-time collaboration through a set of projects or workspaces

- It comes with specific ingest processing and interface elements for textual content, images, and videos

- Its spaces feature allows you to organize work into a set of projects, or workspaces

- It is built on proven, scalable big data technologies

- Supports cloud-based environments and works well with Amazon's AWS

11. Hadoop: Hadoop is the long-standing champion in the field of Big Data processing and is well known for its capacity for huge-scale data processing. It has low hardware requirements, and as an open-source Big Data framework it can run on-premises or in the cloud.

Features:

- Authentication improvements when using an HTTP proxy server

- Specification for the Hadoop Compatible Filesystem effort

- Support for POSIX-style filesystem extended attributes

- It offers a robust ecosystem that is well suited to the analytical needs of developers

- It brings flexibility to data processing

- It allows for faster data processing

12. Qubole: Qubole Data Service is an independent, all-inclusive big data platform that manages, learns, and optimizes itself based on your usage. This lets the data team concentrate on business outcomes instead of managing the platform. Well-known companies that use Qubole include Warner Music Group, Adobe, and Gannett. Qubole's closest competitor is Revulytics.
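Hadoop's MapReduce engine, and the MapReduce and Spark code that Talend generates, rely on a map, shuffle, and reduce pattern. The sketch below simulates that pattern on a single machine with a word count. It is not the Hadoop or Spark API, and the function names (`map_phase`, `shuffle_phase`, `reduce_phase`) are hypothetical.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle_phase(pairs):
    """Shuffle: group emitted values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reduce: combine each key's values into a single count."""
    return {key: sum(values) for key, values in groups.items()}

lines = [
    "big data needs big tools",
    "spark and hadoop process big data",
]
# On a real cluster, each input split would be mapped on a different node.
mapped = chain.from_iterable(map_phase(line) for line in lines)
counts = reduce_phase(shuffle_phase(mapped))
print(counts["big"], counts["data"])  # prints 3 2
```

In Hadoop MapReduce, intermediate results between stages are written to disk. Spark's in-memory processing, listed among its features, avoids much of that disk I/O, which is the main source of its speed advantage for multi-stage jobs.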

Conclusion :-

Big data has become an essential requirement for enterprises looking to harness their business potential. Today, both large and small businesses gain greater profitability and a competitive edge through the capture, management, and analysis of vast volumes of unstructured data. However, many organizations have realized that they need a modern data architecture to reach the next level. This need has led to the emergence of data lakes.