Renowned for its high-performance, scalable database technology, Teradata is a leading supplier of analytics and data warehousing solutions. Organizations use it to manage massive amounts of data efficiently, run complex queries, and obtain useful insights. Its architecture is built for parallel processing and advanced analytics, which makes it a common choice for businesses that rely on data-driven decision-making.

1. What are the four ACID properties of database transactions?

Ans:

The ACID properties (Atomicity, Consistency, Isolation, and Durability) are the basis of database transactions. A DBMS guarantees that transactions are atomic (all or nothing), consistent (they leave the data satisfying its predefined rules), isolated (concurrent transactions do not interfere with one another), and durable (committed changes survive system failures). Separately, Teradata Studio’s Hadoop View, with its intelligent loading feature, gives you easier access to Hadoop data.
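Teradata enforces these properties inside its own engine. The example below is a self-contained illustration of atomicity and consistency only. It uses Python's built-in sqlite3 module as a stand-in database, and the `accounts` table and `transfer` function are hypothetical. A transfer that would break the "no negative balance" rule is rolled back as a whole, so no partial debit is left behind.

```python
import sqlite3

def transfer(conn, src, dst, amount):
    """Move `amount` between two accounts as one atomic transaction (hypothetical helper)."""
    # The connection context manager commits on success and rolls back on an exception.
    with conn:
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        (balance,) = conn.execute("SELECT balance FROM accounts WHERE id = ?", (src,)).fetchone()
        if balance < 0:
            # Consistency rule violated: abort, which undoes the debit above.
            raise ValueError("insufficient funds")
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()

transfer(conn, 1, 2, 30)       # succeeds: both updates are committed together
try:
    transfer(conn, 1, 2, 500)  # fails: the partial debit is rolled back
except ValueError:
    pass

balances = dict(conn.execute("SELECT id, balance FROM accounts"))
print(balances)
```

Only the first transfer takes effect. Account 1 ends at 70 and account 2 at 80.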

2. What do you know about Teradata?

Ans:

In essence, Teradata is a relational database management system that has earned recognition for a wide range of data-related jobs because of its extensive, practical features. It is commonly used to run a company’s data marts, OLAP, and data warehouse applications, and it is particularly well suited to workloads that need data to be processed in parallel.

3. What are data warehouse tools?

Ans:

- Tools for data warehouses include platforms like Snowflake, Amazon Redshift, Google BigQuery, Microsoft Azure Synapse Analytics, and Apache Hive.

- These tools enable organizations to store, manage, and analyze large volumes of structured and unstructured data efficiently.

- They provide features such as data transformation, query optimization, and scalability to support complex analytics and reporting tasks.

4. Can you name two popular ETL tools that you are able to use with Teradata?

Ans:

Two popular ETL tools used with Teradata are IBM DataStage and Informatica. Other well-known ETL and data integration technologies include Oracle Data Integrator, Microsoft SQL Server Integration Services (SSIS), Talend Open Studio, Pentaho Data Integration (PDI), Hadoop, AWS Glue, and AWS Data Pipeline.

5. Can Teradata jobs be submitted and run in a simple UNIX environment?

Ans:

Yes. As long as certain prerequisites are met, jobs can be run from UNIX in the usual way. The connection needs the following details:

- User: Enter the Teradata user’s username here.

- Password: Enter the Teradata user’s password here.

- DataSource: Enter the TDPID, DBC name, or Teradata server name.

- Port: Indicate which port the server is using.

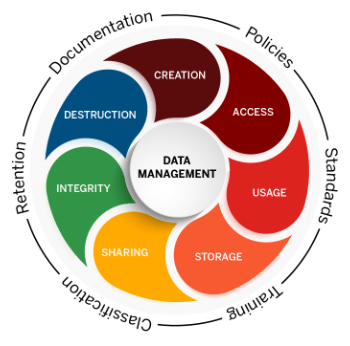

6. Explain the significance of data management.

Ans:

Data is the source of meaningful information. When it is managed well, many crucial jobs become easier to complete, including problem-solving, company management, and decision-making, and users can find what they need quickly and save time. When data is not properly maintained, users are likely to run into problems with each of these tasks.

7. What do you know about Teradata caching?

Ans:

Caching is essentially a Teradata add-on feature that lets the cache be shared with any application. It works closely with the data source, and users can tailor the results to suit their needs. This approach saves time when dealing with complex data that has many errors associated with it.

8. What characteristics does Teradata offer?

Ans:

- Teradata is highly scalable and adaptable. Tasks are divided among parallel processing units so that results are delivered quickly.

- It handles bulk data well and can process very large volumes without problems.

- Very few factors affect its key characteristics, such as performance.

- Data can be managed even when most of it is unstructured.

- It improves relational database administration in many respects.

9. What is a Teradata Channel Driver, and how does it differ from the Gateway?

Ans:

- Applications must communicate efficiently with the Parsing Engines (PEs) that manage the data and perform the related operations. For channel-attached (mainframe) clients, a program called the Channel Driver handles this communication.

- In effect, it acts as a direct conduit between the client and the PEs, making it simple to exchange information.

- The Gateway plays the same role for network-attached clients: it connects applications on clients linked over the network to the PEs. The type of client connection is the main distinction between the two.

10. What distinguishes database management from data management?

Ans:

| Aspect | Database Management | Data Management |
|---|---|---|
| Scope | Administration and maintenance of databases | Broader activities related to data, including governance, quality, and analytics |
| Purpose | Ensures databases are functional, efficient, and secure | Ensures data is accurate, consistent, accessible, and used effectively |
| Activities | Database design, creation, performance tuning, backups, and security | Data governance, quality, integration, modeling, warehousing, and analytics |
| Tools | Database Management Systems (DBMS) like Oracle, MySQL, SQL Server, etc. | Data integration platforms, data quality tools, data governance tools, analytics platforms |

11. What should you do if a FastLoad script fails during a system restart?

Ans:

There are two practical ways to handle this. The first is to keep the existing error tables and rerun the same script against the same input file. FastLoad then restarts from its last checkpoint instead of starting over, so the errors do not have to be fixed first. The second is to drop the target table and its error tables and run the script again from the beginning. This usually clears every obstacle in the tables, so the full script can run cleanly.

12. What significant tasks does the Access Module Processor perform in Teradata?

Ans:

- The Database Manager subsystem runs on each Access Module Processor.

- Because of this, the AMP can readily perform operations such as DDL and DML, joining and aggregating data, and applying and releasing locks.

- Access Module Processors (AMPs) are parallel database processors, and each one is in charge of its own portion of disk storage.

- Because data is partitioned across these disk units, records from the same table are spread over many of them.
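The distribution described above can be illustrated with a simplified model. This is not Teradata's actual hashing algorithm or hash map. SHA-256 stands in for the row-hash function, and a round-robin bucket-to-AMP map stands in for the system hash map. The AMP count, bucket count, and `customer_id` values are all hypothetical. The model shows two things: a given primary index value always lands on the same AMP, and a high-cardinality primary index spreads rows roughly evenly.

```python
import hashlib
from collections import Counter

NUM_AMPS = 4         # hypothetical system with 4 AMPs
NUM_BUCKETS = 65536  # hypothetical number of hash buckets

def row_hash(pi_value: str) -> int:
    """Stand-in for Teradata's row-hash function (NOT the real algorithm)."""
    digest = hashlib.sha256(pi_value.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")

# Simplified hash map: buckets are assigned to AMPs round-robin.
HASH_MAP = [bucket % NUM_AMPS for bucket in range(NUM_BUCKETS)]

def amp_for(pi_value: str) -> int:
    """Row hash -> hash bucket -> owning AMP."""
    bucket = row_hash(pi_value) % NUM_BUCKETS
    return HASH_MAP[bucket]

# Hypothetical table with a unique primary index on customer_id.
customer_ids = [f"CUST{n:05d}" for n in range(10_000)]
rows_per_amp = Counter(amp_for(cid) for cid in customer_ids)

for amp, rows in sorted(rows_per_amp.items()):
    print(f"AMP {amp}: {rows} rows")
```

A low-cardinality primary index behaves very differently. If the primary index were a column with only a few distinct values, every row sharing a value would land on one AMP, which produces skew.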

13. What is the simplest way to generate a sequence in Teradata at display time?

Ans:

In Teradata, the simplest way to generate a sequence at display time is to number the rows as they are returned. One option is the ROW_NUMBER() window function, for example SELECT ROW_NUMBER() OVER (ORDER BY emp_id) AS seq_no, emp_id FROM employee; (employee and emp_id are placeholder names). Another is the older Teradata-specific CSUM function, for example CSUM(1, emp_id). Either approach produces sequential numbers without storing them in a table or needing complex logic.

14. What is the maximum number of nodes in Teradata that a gateway can be assigned to?

Ans:

There is no restriction on the number of gateways that can be assigned to each node, even in a complex application while it is in use.

15. How can you check the Teradata version? Is there a command for it?

Ans:

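- In BTEQ, the .SHOW VERSIONS command displays version information. The database version can also be read with the query SELECT * FROM DBC.DBCInfoV.

- BTEQ can be used in two modes. In interactive mode, you log on with your TDPID and user ID and enter the password when prompted.

- In batch mode, you put the commands, including the logon with TDPID, user ID, and password, into a file and run it. A typical batch script logs on to the Teradata system, sets the default database (step 1), and then runs its statements, for example creating a table such as Emp_bkup (step 2).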

16. Why does Teradata’s MultiLoad support NUSI but not USI?

Ans:

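- MultiLoad lets every AMP work independently on its own rows. A USI subtable row is distributed by the hash of the index value, so it usually sits on a different AMP from the base row it points to. Maintaining a USI would therefore require AMPs to coordinate, which conflicts with this design, especially when many operations run concurrently.

- NUSI avoids this problem. Each NUSI subtable row is stored on the same AMP as the data rows it indexes, so every AMP can maintain its part of the index independently, which saves a lot of time.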
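The placement rule behind this answer can be shown with a toy model. It uses a simplified hash-to-AMP function (not Teradata's) and a hypothetical employee table. The primary index is `emp_id`, the USI is on `email`, and the NUSI is on `dept`. For each insert, the model counts how many AMPs must be touched to write both the base row and its index row.

```python
import hashlib

NUM_AMPS = 4  # hypothetical system size

def amp_for(value: str) -> int:
    """Simplified stand-in for row hash -> hash bucket -> AMP (not Teradata's algorithm)."""
    digest = hashlib.sha256(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % NUM_AMPS

# Hypothetical rows: (emp_id = primary index, email = USI column, dept = NUSI column)
employees = [(f"E{i}", f"user{i}@example.com", f"D{i % 5}") for i in range(1000)]

def amps_touched_by_insert_usi(emp_id: str, email: str) -> set:
    # Base row goes to the AMP owning hash(emp_id);
    # the USI subtable row goes to the AMP owning hash(email).
    return {amp_for(emp_id), amp_for(email)}

def amps_touched_by_insert_nusi(emp_id: str, dept: str) -> set:
    # A NUSI subtable row stays on the same AMP as the base row it indexes,
    # so the index value (dept) does not influence placement.
    return {amp_for(emp_id)}

cross_amp_usi = sum(len(amps_touched_by_insert_usi(e, m)) > 1 for e, m, _ in employees)
cross_amp_nusi = sum(len(amps_touched_by_insert_nusi(e, d)) > 1 for e, _, d in employees)
print(f"inserts needing more than one AMP: USI={cross_amp_usi}, NUSI={cross_amp_nusi}")
```

With 4 AMPs, roughly three out of four USI inserts need a second AMP. No NUSI insert does, which is the AMP-local property that MultiLoad relies on.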

17. Could you briefly describe Teradata’s Parallel Database Extensions?

Ans:

Teradata’s Parallel Database Extensions (PDE) is a software layer that sits between the operating system and the Teradata Database. It lets the database run in a parallel environment by managing the virtual processors (such as PEs and AMPs), scheduling their work, and handling communication among them across multiple nodes. This parallel execution allows data-intensive tasks to scale, which makes the system suitable for large-scale analytical workloads and complex queries.

18. How can data be protected if a node fails?

Ans:

Data can be saved in the event of a node failure through redundancy mechanisms such as replication or backups. Replication involves maintaining duplicate copies of data across multiple nodes, ensuring data availability even if one node fails. Backups involve regularly saving data to external storage, allowing restoration in case of node failure.

19. Which kinds of data warehouses are there?

Ans:

Data warehouses come in three primary varieties:

- Enterprise Data Warehouse (EDW): Acts as a central, enterprise-wide database that supports decision-support services.

- Operational Data Store (ODS): A data store that is updated in real time.

- Data Mart: A subset of the warehouse focused on a single business area or department.

20. Could you elaborate on the Virtual Disk?

Ans:

- A virtual disk (vdisk) is a logical grouping of disk cylinders, drawn from the physical disks in a disk array, that together form one large storage area assigned to an AMP.

- Because the underlying disk array divides and protects the data across several physical drives, data loss in an emergency is kept to a minimum.

- Backups can also be made, so the data can be restored and used as needed.

21. Is it possible to evaluate string symbols that are present at multiple locations in Teradata?

Ans:

Yes, users can do this, and parsing is typically used for it. The parser checks the request for different kinds of errors, including syntactic and semantic ones, and it can also verify that the referenced objects actually exist.

22. What is an Access Module Processor in Teradata exactly?

Ans:

An Access Module Processor (AMP) in Teradata is a virtual processor that executes the steps of query plans against the data it owns. Each AMP handles a portion of the data stored in the database and performs tasks such as data retrieval, insertion, and modification. AMPs work in parallel, so a query's workload is distributed across the system’s nodes and processed efficiently.

23. In Teradata, what is a parsing engine?

Ans:

- The Parsing Engine (PE) manages user sessions and handles every SQL request. It parses the request, checks its syntax and the user’s access rights, optimizes it, and returns the answers to the client.

- It consists of several software components and breaks each SQL request into a series of execution steps.

- These steps are then dispatched over the BYNET to the AMPs, either to all AMPs or only to those that hold the relevant rows.

24. Can you provide a method for managing Teradata traffic that is simple to implement?

Ans:

One option is TPump, a utility that loads data continuously in small batches and whose rate can be throttled so that it does not flood the system. For monitoring and tracking traffic, Teradata Viewpoint is the tool of choice because it provides a wide range of capabilities and integrates with other Teradata tools.

25. What does Teradata’s PPI mean?

Ans:

PPI stands for Partitioned Primary Index, and it is essentially a physical storage option. Because rows are grouped into partitions, a query that restricts the partitioning column can read only the relevant partitions instead of performing a full-table scan. The conventional primary index, which is used only to assign rows to AMPs, is called a nonpartitioned primary index (NPPI). With a PPI, rows are still distributed to AMPs by the hash of the primary index columns. On each AMP they are then divided into partitions based on the partitioning expression, and within each partition they are ordered by row hash.
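Partition elimination, the benefit described above, can be sketched in a toy model of the rows stored on a single AMP. The `partition_number` function stands in for a monthly partitioning expression. In Teradata DDL that expression would be written with something like `PARTITION BY RANGE_N(sale_date BETWEEN DATE '2024-01-01' AND DATE '2024-12-31' EACH INTERVAL '1' MONTH)`. The sales data is hypothetical.

```python
from collections import defaultdict
from datetime import date

# Hypothetical sales rows on ONE AMP: (sale_id, sale_date, amount)
sales = [(i, date(2024, i % 12 + 1, i % 28 + 1), 10.0 * i) for i in range(1200)]

def partition_number(sale_date: date) -> int:
    """Stand-in for a monthly RANGE_N partitioning expression."""
    return sale_date.month

# Group the AMP's rows by partition, the way a PPI table stores them.
partitions = defaultdict(list)
for row in sales:
    partitions[partition_number(row[1])].append(row)

def rows_for_month(month: int):
    """Partition elimination: read only the partition that can contain matching rows."""
    scanned = partitions.get(month, [])
    matches = [row for row in scanned if row[1].month == month]
    return matches, len(scanned)

matches, scanned = rows_for_month(3)
total_rows = sum(len(rows) for rows in partitions.values())
print(f"scanned {scanned} of {total_rows} rows, found {len(matches)}")
```

The March query reads 100 of the 1,200 rows. Without partitioning, every row on the AMP would have to be examined.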

26. Which Teradata components are you familiar with?

Ans:

Teradata has many helpful components that let users get the results they want quickly. A few that are used frequently and make tasks significantly simpler are the Parsing Engine (PE), the Access Module Processor (AMP), the BYNET, the Virtual Disk, Teradata Virtual Storage, and the Meta Manager.

27. Which Teradata locks are the most significant ones you have worked with?

Ans:

- Access Locks: The least restrictive lock. An access lock lets a query read data even while other users are modifying it, so the reader is not blocked but may see uncommitted changes.

- Row Hash Locks: Control access to individual rows (by row hash) in tables, managing concurrency at the row level.

- Table Locks: Regulate access to entire tables, preventing conflicting operations on the same table.

28. In Teradata, what is a data warehouse?

Ans:

A data warehouse is a system used for reporting and data analysis. It is often referred to as an Enterprise Data Warehouse (EDW), DW, or DWH. Data warehouses can create reports for senior management, including quarterly and annual comparisons, and they can contain both current and historical data.

29. What do you think is the main problem with Teradata, and how can it be easily avoided?

Ans:

One of the main issues with Teradata is node failure. It is unlikely, but when it does happen it causes a range of problems. Teradata’s dedicated feature for handling this situation is the clique, a group of nodes that share access to the same disk arrays. If one node fails, its AMPs migrate to the other nodes in the clique, so the failure has minimal effect.

30. When is Teradata’s spool space used?

Ans:

- Spool space is used during query execution. It is a temporary workspace where Teradata stores intermediate and final result sets.

- The space is held only briefly. It is released when the query finishes or when it is no longer needed, and spool held by volatile tables is released at the end of the session.

31. What are Teradata journals?

Ans:

Teradata Journals are records of changes made to a Teradata database. They capture all transactions and modifications, providing a comprehensive history of data alterations. Journals enable organizations to track data changes for auditing, recovery, and compliance purposes. They play a crucial role in ensuring data integrity and facilitating disaster recovery processes. Access to journals allows administrators to monitor database activity and troubleshoot issues effectively.

32. What are the differences between Teradata’s SET and MULTISET tables?

Ans:

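- SET table: Does not allow duplicate rows.

- MULTISET table: Allows duplicate rows.

- In other words, duplicate records cannot be stored in a table defined as SET, but they can be stored in a MULTISET table. Both kinds are created with the CREATE TABLE command (CREATE SET TABLE or CREATE MULTISET TABLE). If neither keyword is given, Teradata session mode creates a SET table and ANSI session mode creates a MULTISET table.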
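The behavioral difference can be modeled in a few lines of Python. The classes below are hypothetical stand-ins, not a Teradata API. A SET table checks each incoming row against existing rows using full-row comparison and rejects exact duplicates, while a MULTISET table stores every row. In real Teradata, a duplicate in a single-row INSERT raises an error and an INSERT ... SELECT silently discards it. The model collapses both cases into a `False` return value.

```python
class SetTable:
    """Models a SET table: an exact duplicate of an existing row is not stored."""

    def __init__(self):
        self._rows = []
        self._seen = set()

    def insert(self, row: tuple) -> bool:
        # Full-row comparison, like Teradata's duplicate row check.
        if row in self._seen:
            return False  # rejected
        self._seen.add(row)
        self._rows.append(row)
        return True

    def rows(self):
        return list(self._rows)


class MultisetTable:
    """Models a MULTISET table: duplicate rows are allowed."""

    def __init__(self):
        self._rows = []

    def insert(self, row: tuple) -> bool:
        self._rows.append(row)
        return True

    def rows(self):
        return list(self._rows)


data = [(1, "Alice"), (2, "Bob"), (1, "Alice")]
set_table = SetTable()
multiset_table = MultisetTable()
for r in data:
    set_table.insert(r)
    multiset_table.insert(r)

print(len(set_table.rows()), len(multiset_table.rows()))
```

The duplicate-row check is also why inserts into large SET tables can be slower than inserts into MULTISET tables, unless a unique index already guarantees uniqueness.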

33. How many different kinds of BTEQ Exports does Teradata offer?

Ans:

BTEQ’s .EXPORT command supports four output modes:

- DATA (record mode): Writes the data in a format intended for machine processing, without formatting or column headers.

- INDICDATA: Like DATA, but also includes indicator bytes that mark NULL values.

- REPORT (field mode): Produces human-readable, formatted output with column headers, suitable for reports and presentation.

- DIF: Writes the data in Data Interchange Format for use by spreadsheet programs.

34. Describe the Teradata table creation process.

Ans:

Tables can be built with a CREATE TABLE statement that defines the columns, with a CREATE TABLE statement that copies an existing table, or with a CREATE TABLE statement that uses a SELECT statement.

A CREATE TABLE statement has several parts. One of them, Table Options, specifies the table’s physical characteristics, such as journaling and fallback.

35. Write a statement to identify duplicate records in a table.

Ans:

Duplicate records can be examined with the DISTINCT keyword or the GROUP BY clause.

Using DISTINCT, which returns one copy of each distinct row. Comparing its row count with the table’s total row count shows whether duplicates exist:

SELECT DISTINCT column1, column2 FROM table_name;

Using GROUP BY to list the duplicated records and how many copies of each exist:

SELECT column1, column2, COUNT(*) FROM table_name GROUP BY column1, column2 HAVING COUNT(*) > 1;

36. Create a query to find out how many AMPs are present in the system.

Ans:

- Use the query SELECT HASHAMP() + 1; HASHAMP() with no argument returns the highest AMP number. Because AMPs are numbered from 0, adding 1 gives the total number of AMPs.

37. Could you list a few commonly used BTEQ commands?

Ans:

- LOGON: Logs you on to the Teradata system.

- ERRORCODE: Returns the status code of the most recent query.

- ACTIVITYCOUNT: Indicates how many rows were affected by the most recently run query.

- DATABASE: Sets the default database.

- RUN FILE: Executes the queries contained in a file.

- LOGOFF: Logs you out of the database and ends all sessions.

- EXPORT: Starts exporting to an output file and specifies the path to it.

38. What does Teradata’s FAST EXPORT mean?

Ans:

- FastExport is a Teradata utility that exports large volumes of data from Teradata tables to flat files.

39. Regarding PPI (Partitioned Primary Index), what do you mean?

Ans:

Teradata’s Partitioned Primary Index (PPI) is a powerful feature that lets queries access only a portion of a table instead of the entire table. PPI is an indexing mechanism designed to improve query efficiency. For data distribution, a PPI works just like a primary index: rows are assigned to AMPs by the hash of the primary index columns. On each AMP, the rows are then divided into partitions based on the range (RANGE_N) or case (CASE_N) expression defined for the table.

40. How may duplicate records be located within a table?

Ans:

To find duplicate records in a table, we can use DISTINCT or GROUP BY. We have two options:

SELECT DISTINCT column1, column2 FROM table_name;

Alternatively, list each duplicated combination along with its number of copies: SELECT column1, column2, COUNT(*) FROM table_name GROUP BY column1, column2 HAVING COUNT(*) > 1;
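The runnable demonstration below uses Python's built-in sqlite3 module as a stand-in database. The `employee` table and its data are hypothetical. The GROUP BY ... HAVING query is standard SQL, so the same pattern applies in Teradata.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employee (emp_id INTEGER, dept TEXT)")
conn.executemany(
    "INSERT INTO employee VALUES (?, ?)",
    [(1, "HR"), (2, "IT"), (1, "HR"), (3, "IT"), (1, "HR")],
)

# GROUP BY + HAVING lists each duplicated combination and its number of copies.
duplicates = conn.execute(
    """
    SELECT emp_id, dept, COUNT(*) AS copies
    FROM employee
    GROUP BY emp_id, dept
    HAVING COUNT(*) > 1
    """
).fetchall()

# DISTINCT collapses the copies; a smaller count than the total means duplicates exist.
total_rows = conn.execute("SELECT COUNT(*) FROM employee").fetchone()[0]
distinct_rows = conn.execute(
    "SELECT COUNT(*) FROM (SELECT DISTINCT emp_id, dept FROM employee) AS d"
).fetchone()[0]

print(duplicates, total_rows, distinct_rows)
```

Here the GROUP BY query reports that (1, "HR") appears three times. The DISTINCT comparison shows 3 distinct rows out of 5 total rows.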

41. How does Teradata’s CASE expression work?

Ans:

The Teradata CASE expression works much like a switch statement in programming languages. It evaluates a set of conditions in order and returns the result of the first condition that is true. If no condition matches, it returns the ELSE value, or NULL when there is no ELSE clause. Because each condition can specify its own outcome, CASE is a flexible way to build conditional logic into SQL queries and generate results dynamically from the data.
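The example below is a small, runnable illustration of first-match evaluation. It uses Python's built-in sqlite3 module as a stand-in database, and the `orders` table is hypothetical. The searched CASE syntax shown is standard SQL and is also accepted by Teradata.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, 50.0), (2, 250.0), (3, 1200.0), (4, None)],
)

rows = conn.execute(
    """
    SELECT order_id,
           CASE
               WHEN amount >= 1000 THEN 'large'
               WHEN amount >= 100  THEN 'medium'      -- reached only if the first test failed
               WHEN amount IS NOT NULL THEN 'small'
               ELSE 'unknown'                          -- no WHEN matched (here: NULL amount)
           END AS size_band
    FROM orders
    ORDER BY order_id
    """
).fetchall()

print(rows)
```

Order 3 also satisfies `amount >= 100`, but it is labeled 'large' because the first matching condition wins.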

42. What are the advantages of Teradata’s UPSERT command?

Ans:

- Teradata’s UPSERT combines INSERT and UPDATE into one operation. In Teradata SQL it is written as an atomic UPDATE ... ELSE INSERT statement, and the MERGE statement serves a similar purpose.

- It inserts new records and updates existing ones in a single statement.

- This reduces complexity and improves performance, because the application does not need separate requests to check for a row, update it, or insert it.

- Because the update-or-insert decision is made within a single atomic operation, UPSERT avoids the conflicts that can arise when the two steps run separately. This keeps data consistent and up to date.
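The update-else-insert logic can be sketched in Python using the built-in sqlite3 module as a stand-in database. The `inventory` table and `upsert_inventory` helper are hypothetical. This is not Teradata syntax. It reproduces the same semantics as Teradata's UPDATE ... ELSE INSERT: try the UPDATE first, and INSERT only when no row matched, with both steps inside one transaction.

```python
import sqlite3

def upsert_inventory(conn, item_id, qty):
    """Update the row if it exists, otherwise insert it, in one transaction (hypothetical helper)."""
    with conn:  # commit on success, roll back on error
        cur = conn.execute("UPDATE inventory SET qty = ? WHERE item_id = ?", (qty, item_id))
        if cur.rowcount == 0:
            # No existing row matched the key, so fall through to INSERT.
            conn.execute("INSERT INTO inventory (item_id, qty) VALUES (?, ?)", (item_id, qty))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE inventory (item_id INTEGER PRIMARY KEY, qty INTEGER)")

upsert_inventory(conn, 1, 10)  # inserts a new row
upsert_inventory(conn, 1, 25)  # updates the existing row
upsert_inventory(conn, 2, 5)   # inserts a new row

result = conn.execute("SELECT item_id, qty FROM inventory ORDER BY item_id").fetchall()
print(result)
```

Teradata's single-statement form does the same check inside the database, which saves the extra round trip that this client-side version makes.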

43. What Does Teradata’s Transient Journaling Mean?

Ans:

The transient journal is a dictionary table maintained by the system. It protects transactions against several types of system failure and against deadlock (for more information on deadlock, see SQL Request and Transaction Processing). For every transaction it processes, the Teradata Database saves a before-image of the rows that the transaction changes. If the transaction fails, these images are used to roll the rows back. Once the transaction commits, they are discarded.
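The before-image mechanism can be shown with a toy model. The `JournaledTable` class is hypothetical and only mimics the idea: save each row's before-image the first time the transaction changes it, restore those images on rollback, and throw them away on commit. It is not how Teradata implements the transient journal internally.

```python
_MISSING = object()  # marker for "row did not exist before the transaction"


class JournaledTable:
    """Toy key/value table that records before-images, like a transient journal."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self._journal = None  # before-images for the open transaction

    def begin(self):
        self._journal = {}

    def update(self, key, value):
        # Record the before-image only the first time a row changes in this transaction.
        if key not in self._journal:
            self._journal[key] = self.rows.get(key, _MISSING)
        self.rows[key] = value

    def commit(self):
        # Once the transaction commits, its before-images are no longer needed.
        self._journal = None

    def rollback(self):
        # Restore every changed row from its before-image.
        for key, before in self._journal.items():
            if before is _MISSING:
                del self.rows[key]
            else:
                self.rows[key] = before
        self._journal = None


table = JournaledTable({"A": 1, "B": 2})
table.begin()
table.update("A", 10)
table.update("A", 11)
table.update("C", 3)
table.rollback()  # simulated failure: rows return to their before-images
after_rollback = dict(table.rows)

table.begin()
table.update("B", 20)
table.commit()
after_commit = dict(table.rows)
print(after_rollback, after_commit)
```

Only the first before-image per row is kept. That is enough to undo any number of later changes to the same row.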

44. What Do Teradata Database Privileges Entail?

Ans:

A database privilege is permission to access or modify database objects or data. Almost anything you do in the Teradata Database requires specific privileges.

Privileges are used to control who can:

- Access, modify, or drop particular database objects and data.

- Run particular macros, stored procedures, and UDFs.

- Monitor system activity.

- Grant privileges to other users.

45. Which Teradata Privilege Levels are available?

Ans:

- System-level privileges grant permissions at the system level, allowing users to perform administrative tasks across the entire Teradata system.

- Database-level privileges apply to specific databases within the Teradata system, permitting users to perform actions such as creating, modifying, or dropping databases.

- Object-level privileges are granted on individual database objects like tables, views, or procedures, allowing users to perform operations specific to those objects, such as selecting, inserting, updating, or deleting data.

46. What does Teradata’s GIVE statement mean?

Ans:

A GIVE statement transfers ownership of a database or user space to another user or database. All users and databases owned by the transferred user or database move along with it.

Syntax: GIVE database_name TO recipient_name;

For a user: GIVE user_name TO recipient_name;

47. What does Teradata’s GRANT statement mean?

Ans:

GRANT gives explicit privileges to roles, databases, proxy users, and individual users. System administrators use the GRANT statement to assign privileges and roles to user accounts and to other roles. One syntax rule applies: a single GRANT statement cannot grant both roles and privileges, so each GRANT statement must grant either roles or privileges.

48. Which GRANT forms are accessible from Teradata?

Ans:

There are various GRANT forms in Teradata, including:

- GRANT (MONITOR Form): Grants privileges for tracking Teradata Database performance.

- GRANT (Role Form): Grants roles to users and to other roles.

- GRANT (SQL Form): Grants privileges to create, access, and manipulate various Teradata database objects.

- GRANT ZONE: Allows users or roles that do not belong to a zone to be treated as guests of that zone. Guests are not automatically given access to the database objects in the zone. Zone users must grant privileges to guests before they can access those objects.

49. How are GRANT (MONITOR Form) and GRANT (SQL Form) used?

Ans:

The GRANT (MONITOR Form) privilege set relates to tracking system performance, whereas GRANT (SQL Form) controls access to and manipulation of database objects. To give a user full access, including the MONITOR privileges, you need two requests:

GRANT ALL ON object_name TO user_name WITH GRANT OPTION;

GRANT MONITOR TO user_name WITH GRANT OPTION;

50. What does Teradata’s REVOKE statement mean?

Ans:

REVOKE removes explicit privileges from one or more users, a role, a database, or a proxy user. The privileges may have been granted by a prior GRANT statement or automatically. REVOKE removes the specified privileges from the designated grantee, and when ALL is specified, privileges such as DELETE, INSERT, and SELECT are all revoked. Privileges granted to PUBLIC are revoked by specifying PUBLIC as the grantee. Privileges that users hold through their own direct or indirect grants remain in place.

51. What does the SHOW statement aim to achieve?

Ans:

- A Teradata SHOW statement returns the result of the most recent data definition statement run against the specified database object, in the form of a CREATE or ALTER statement for that object. For example, SHOW TABLE table_name returns the table’s current CREATE TABLE text.

- The similarly named SHOW command in Oracle’s SQL*Plus serves a different purpose: it displays the current state of the SQL*Plus environment.

- There, it can show the current value of any parameter controlled by the SET command.

- It can also display the current page titles, footers, and other settings.

52. What do Teradata Hot Standby Nodes (HSN) mean?

Ans:

A Hot Standby Node (HSN) is a node in a clique that is not initially configured to run any Teradata processes. HSNs are spare, operational nodes that increase availability and quickly restore performance levels when a node is lost. If one or more nodes fail, the system replaces them with the same number of available HSNs.

53. Describe Teradata’s volatile tables.

Ans:

- Teradata’s volatile tables are temporary tables used for session-specific data.

- They are created on the fly and exist only for the duration of the session, which makes them useful for holding intermediate results or other temporary data.

- They are dropped automatically at the end of the session, which frees up system resources.

- Their definitions are not stored in the data dictionary, so they avoid dictionary overhead and can make multi-step query processing more efficient.
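The session scoping described above has a close analogue in SQLite's TEMP tables. The sketch uses Python's built-in sqlite3 module, with one database file standing in for the system and each connection acting as a "session". This is only an analogy: SQLite does not use spool space. In Teradata itself, the equivalent table would be declared with `CREATE VOLATILE TABLE ... ON COMMIT PRESERVE ROWS`. The table name `vt_dept_totals` is hypothetical.

```python
import os
import sqlite3
import tempfile

# One on-disk database stands in for the Teradata system; each connection plays a "session".
db_path = os.path.join(tempfile.mkdtemp(), "demo.db")
session_a = sqlite3.connect(db_path)
session_b = sqlite3.connect(db_path)

# SQLite TEMP table: its definition and rows are private to the connection that created it.
session_a.execute("CREATE TEMP TABLE vt_dept_totals (dept TEXT, total INTEGER)")
session_a.execute("INSERT INTO vt_dept_totals VALUES ('IT', 42)")
session_a.commit()

rows_in_a = session_a.execute("SELECT COUNT(*) FROM vt_dept_totals").fetchone()[0]

try:
    session_b.execute("SELECT COUNT(*) FROM vt_dept_totals")
    visible_in_b = True
except sqlite3.OperationalError:
    visible_in_b = False  # "no such table": other sessions cannot see it

session_a.close()  # closing the session drops its temp table
session_b.close()
print(rows_in_a, visible_in_b)
```

As with a volatile table, only the creating session can see the data, and no cleanup step is needed when that session ends.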

54. What does Teradata mean by LOG and NO LOG?

Ans:

For global temporary and volatile tables, you can specify whether their activity is logged to the transient journal. The NO LOG option reduces logging overhead, but if an SQL request aborts, the changes made to the table are lost and cannot be restored.

55. What Does Permanent Journaling Mean?

Ans:

A permanent journal is a user-specified table that can store before-images and after-images of rows changed by Teradata Database transactions. During a recovery operation, the Archive/Recovery utility can use the journal entries in this table to roll transactions back or forward.

56. What does Teradata’s MERGEBLOCKRATIO mean?

Ans:

- The MERGEBLOCKRATIO option provides a way to merge multiple small data blocks into a single larger data block during full-table modification operations on permanent tables and permanent journal tables.

- The option is not available for global temporary tables or volatile tables. The file system uses the merge block ratio you define to reduce the number of data blocks in a table that would otherwise consist mainly of small data blocks.

57. What does Teradata’s DATABLOCKSIZE mean?

Ans:

- DATABLOCKSIZE sets the maximum data block size for blocks that contain multiple rows. A data block is the physical input/output unit of the Teradata file system.

- Larger block sizes let full-table scans read more rows in a single I/O. Smaller block sizes suit transaction-oriented tables, because they minimise overhead by reading only what is required.
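The trade-off can be made concrete with some rough arithmetic. The function below is a back-of-the-envelope sketch. It ignores block headers, free space, and compression, and the table size, row size, and block sizes are hypothetical inputs rather than Teradata defaults or limits.

```python
import math

def blocks_for_full_scan(row_count: int, row_bytes: int, block_bytes: int) -> int:
    """Rough number of block reads for a full-table scan.

    Simplification: ignores block headers, free space, and compression.
    """
    rows_per_block = block_bytes // row_bytes
    return math.ceil(row_count / rows_per_block)

ROW_COUNT = 1_000_000  # hypothetical table size
ROW_BYTES = 200        # hypothetical average row size

small_block_ios = blocks_for_full_scan(ROW_COUNT, ROW_BYTES, 64 * 1024)    # 64 KB blocks
large_block_ios = blocks_for_full_scan(ROW_COUNT, ROW_BYTES, 1024 * 1024)  # 1 MB blocks

# A single-row lookup still reads one whole block, so bigger blocks move more bytes per lookup.
print(small_block_ios, large_block_ios)
```

With these inputs, a full scan needs about 3,059 reads with 64 KB blocks and 191 reads with 1 MB blocks. The cost is that a single-row lookup with 1 MB blocks reads 16 times as many bytes, which is why smaller blocks suit transaction-oriented tables.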

58. What does BLOCK COMPRESSION mean?

Ans:

This option lets you configure a table’s temperature-based block compression state. The file system gets its temperature information from Teradata Virtual Storage, which records data temperatures at the cylinder level rather than the table level. For that reason, the file system also manages temperature-based compression at the cylinder level.

59. What Do Teradata Surrogate Keys Mean?

Ans:

- Sometimes it is difficult, if not impossible, to identify and select a straightforward primary key.

- No single column in a table may uniquely identify its rows, or a composite key may be discouraged for performance reasons. Surrogate keys are a good solution in these cases.

- A surrogate key is an artificial key: a system-generated value with no business meaning that uniquely identifies each row.
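The core idea of a surrogate key can be seen in a minimal sketch. A hypothetical `SurrogateKeyGenerator` maps each distinct natural key (here a composite tuple) to a stable, meaningless integer. In Teradata, the same role is often filled by an identity column. Identity values are not guaranteed to be consecutive, so code should not rely on gap-free numbering.

```python
from itertools import count


class SurrogateKeyGenerator:
    """Assigns a stable integer key to each distinct natural key (hypothetical helper)."""

    def __init__(self, start: int = 1):
        self._next = count(start)
        self._keys = {}

    def key_for(self, natural_key: tuple) -> int:
        # Reuse the existing key for a natural key we have already seen.
        if natural_key not in self._keys:
            self._keys[natural_key] = next(self._next)
        return self._keys[natural_key]


gen = SurrogateKeyGenerator()
k1 = gen.key_for(("ACME Corp", "Berlin", "2024-01-15"))
k2 = gen.key_for(("Globex", "Paris", "2024-02-01"))
k3 = gen.key_for(("ACME Corp", "Berlin", "2024-01-15"))  # same natural key as k1
print(k1, k2, k3)
```

Joins can then use a single integer column instead of the three-part composite key.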

60. Describe Teradata’s CHECK Constraints.

Ans:

CHECK is the most general type of SQL constraint specification. Depending on where it appears in the SQL text of a CREATE TABLE or ALTER TABLE statement, a CHECK constraint applies either to a single column or to the entire table.
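The example below shows both placements of CHECK: a column-level constraint and a table-level constraint. It runs against Python's built-in sqlite3 module as a stand-in database, and the `employee` table and its rules are hypothetical. The CHECK syntax is standard SQL. Rows that violate either constraint are rejected with an integrity error.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE employee (
        emp_id    INTEGER NOT NULL,
        salary    DECIMAL(10, 2) CHECK (salary > 0),            -- column-level CHECK
        hire_date TEXT,
        term_date TEXT,
        CHECK (term_date IS NULL OR term_date >= hire_date)     -- table-level CHECK
    )
    """
)
conn.execute("INSERT INTO employee VALUES (1, 5000, '2020-01-01', NULL)")

rejected = []
for row in [(2, -10, "2021-01-01", None), (3, 4000, "2022-05-01", "2021-01-01")]:
    try:
        conn.execute("INSERT INTO employee VALUES (?, ?, ?, ?)", row)
    except sqlite3.IntegrityError:
        rejected.append(row[0])  # constraint violated, row not stored

stored = conn.execute("SELECT COUNT(*) FROM employee").fetchone()[0]
print(rejected, stored)
```

Employee 2 fails the column-level salary rule, and employee 3 fails the table-level date rule, so only employee 1 is stored.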

61. What does Teradata’s Referential Constraint mean?

Ans:

When referential relationships have been defined between the tables named in a request, the Optimizer can sometimes produce noticeably better query plans. The Referential Constraint feature, also known as soft referential integrity (declared with the REFERENCES WITH NO CHECK OPTION clause), gives you these benefits without the cost of enforcing the declared referential constraints.

62. How can data be migrated from Teradata to Snowflake?

Ans:

To migrate from Teradata to Snowflake manually, follow these steps:

- Move your data model.

- Create a Snowflake account.

- Convert the existing DDL scripts.

- Develop new DDL scripts.

- Transfer the Teradata data to Snowflake.

- Connect your data sources to Snowflake.

63. What does Teradata’s ADD Option mean?

Ans:

- Teradata’s ADD Option is a feature used in the ALTER TABLE statement to add columns to an existing table.

- It allows users to modify the table structure by adding new columns without impacting existing data.

- This option enables seamless schema evolution, facilitating changes to accommodate new requirements or data types.

- By using the ADD Option, users can enhance the flexibility and scalability of their Teradata databases without the need for complex data migration processes.

64. Define Tera Database.

Ans:

The Teradata Database (the "Tera database") is a high-performance, distributed database system developed by Teradata Corporation. It is designed to handle large volumes of data and complex queries in parallel processing environments, and it uses a massively parallel processing (MPP) architecture to achieve scalability and high availability. Because of its robustness and efficiency, it is commonly used in enterprise settings for data warehousing, analytics, and decision support applications.

65. Describe the Keyword QUEUE.

Ans:

Each queue table you define must include the keyword QUEUE as one of the CREATE TABLE options after the table name. This gives the table the properties associated with queue tables and lets you use consume mode when you select from it (see SQL Data Manipulation Language).

66. What does Teradata’s QITS Column mean?

Ans:

- Every queue table must declare a Queue Insertion Time Stamp (QITS) column as its first column.

- Each queue table has exactly one QITS column, and it must be defined exactly as follows.

- QITS_column_name TIMESTAMP(6) NOT NULL DEFAULT CURRENT_TIMESTAMP(6), where QITS_column_name is the name you choose for the column.
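The QITS column makes consume mode behave like a first-in, first-out queue. A toy model of that behavior follows. The `QueueTable` class is hypothetical. Its `consume_top1` method mimics what a consume-mode select of the top row does: return the row with the oldest QITS value and remove it. One simplification: real Teradata waits for a row to arrive when the queue is empty, whereas this model returns `None`.

```python
import heapq
from datetime import datetime, timedelta


class QueueTable:
    """Toy FIFO ordered by an insertion timestamp, like a queue table's QITS column."""

    def __init__(self):
        self._heap = []
        self._seq = 0  # tie-breaker so rows with equal timestamps keep insertion order

    def insert(self, qits: datetime, payload):
        heapq.heappush(self._heap, (qits, self._seq, payload))
        self._seq += 1

    def consume_top1(self):
        """Return and remove the oldest row (None if empty; Teradata would wait instead)."""
        if not self._heap:
            return None
        qits, _, payload = heapq.heappop(self._heap)
        return qits, payload


q = QueueTable()
t0 = datetime(2024, 1, 1, 9, 0, 0)
q.insert(t0 + timedelta(seconds=5), "order-2")
q.insert(t0, "order-1")
q.insert(t0 + timedelta(seconds=10), "order-3")

consumed = [q.consume_top1()[1] for _ in range(3)]
print(consumed, q.consume_top1())
```

Rows are consumed in timestamp order regardless of the order in which they were inserted.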

67. What are the differences between REPLACE and TRANSFORM?

Ans:

- The REPLACE command in SQL is used to update existing records in a table with new values, replacing the old values entirely.

- On the other hand, the TRANSFORM function in SQL is used to perform calculations or apply operations on data within a query result, without altering the original data in the table.

- While REPLACE modifies existing records, TRANSFORM manipulates the result set of a query.

68. What distinguishes ANSI session mode from BTET session mode in Teradata?

Ans:

ANSI session mode pertains to a state where transaction processing semantics conform to the rules specified by the ANSI SQL:2011 specification. On the other hand, Teradata (or BTET) session mode is a state where transaction processing adheres to a set of rules defined by Teradata.

69. In what ways is Snowflake superior to Teradata?

Ans:

Snowflake’s cloud-based architecture provides excellent elasticity and flexibility, whereas Teradata is a well-tuned, traditional system with more robust workload management features. Your organization’s particular needs and requirements should determine which to choose. Teradata, for its part, has been a mainstay of the on-premises data warehousing industry for more than 40 years, providing excellent performance and scalability for complex queries.

70. What kinds of Teradata utilities are there?

Ans:

- Teradata offers various utilities for database management tasks such as data loading, backup, and administration.

- These utilities include FastLoad for fast data loading, MultiLoad for high-volume data maintenance, TPump for continuous data updates, FastExport for efficient data extraction, and BTEQ (Basic Teradata Query) for scripting and interactive querying.

- Each utility serves specific purposes, enabling efficient data processing and management within Teradata environments.

71. How well do you believe this query accomplishes its goal?

Ans:

This question asks for your own assessment of a particular approach or method. Briefly evaluate how effectively the query fulfills its objective and what outcome it produces, in five lines or fewer.

72. Could you list a few Teradata benefits?

Ans:

- Bigger Warehouses: Teradata is built to support very large data warehouses.

- Scalability: A Teradata system can grow from about 100 GB on a single node to more than 100 petabytes across many nodes, and this growth can be absorbed without performance problems.

- Parallel Aware Optimizer: The optimizer takes parallelism into account when it builds execution plans, which streamlines query execution and reduces the need for manual query tuning.

- Automatic Data Distribution: Teradata distributes rows across AMPs automatically by hashing each row’s primary index value, which keeps data evenly spread and improves application performance. Because placement is automatic, it avoids complex indexing schemes and cuts down on time-consuming reorganizations.
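The idea behind automatic data distribution can be sketched in Python: each row’s AMP is computed from a hash of its primary-index value, so nobody has to place rows by hand. This is a simplified model under stated assumptions: MD5 stands in for Teradata’s real row-hash algorithm, a plain modulo stands in for its hash maps, and the names `row_hash`, `amp_for`, and `distribute` are hypothetical.

```python
import hashlib
from collections import Counter


def row_hash(pi_value) -> int:
    """Stand-in row hash: MD5 of the primary-index value (NOT Teradata's real algorithm)."""
    digest = hashlib.md5(str(pi_value).encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def amp_for(pi_value, num_amps: int) -> int:
    """Pick the owning AMP from the row hash (simplified: hash modulo AMP count)."""
    return row_hash(pi_value) % num_amps


def distribute(pi_values, num_amps: int) -> Counter:
    """Count how many rows each AMP would receive."""
    return Counter(amp_for(value, num_amps) for value in pi_values)


if __name__ == "__main__":
    # 10,000 rows with a unique primary index, e.g. a customer id.
    counts = distribute(range(10_000), num_amps=4)
    for amp in sorted(counts):
        print(f"AMP {amp}: {counts[amp]} rows")
```

With a unique, high-cardinality primary index the four AMPs end up with roughly 2,500 rows each; the same value always hashes to the same AMP, which is what lets a primary-index lookup go straight to one AMP.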

73. What are the main uses of a Teradata database?

Ans:

Teradata databases are primarily utilized for data warehousing and analytics purposes. They serve as a centralized repository for storing and analyzing large volumes of structured and unstructured data. Common applications include business intelligence, reporting, and advanced analytics. Teradata databases support complex SQL queries and are often employed in industries such as finance, retail, and telecommunications to drive data-driven decision-making.

74. What constitutes the Teradata Architecture’s core elements?

Ans:

Teradata’s architecture has four core elements: Parsing Engines (PEs), Access Module Processors (AMPs), the BYNET, and disks. An AMP is a virtual processor connected to the BYNET that receives execution plan steps and data from a PE. Each AMP has its own disk storage. It carries out its assigned work, such as data type conversion, filtering, aggregation, and sorting, and reads and writes rows on its disks. When a query is issued, all the AMPs involved work together to return the result.

75. Describe the architecture of Teradata.

Ans:

Teradata’s architecture is based on massively parallel processing (MPP). It is usually described in two parts: the storage architecture, which covers how rows are written, and the retrieval architecture, which covers how rows are read. Both involve the Parsing Engine, the BYNET, and the AMPs with their disks. When rows are inserted, the PE sends them through the BYNET to the target AMPs, which write them to their disks. When rows are queried, the PE sends the request through the BYNET to the relevant AMPs, which search their disks in parallel and return the results.

Parsing Engine (PE): Receives queries from clients and creates execution plans for them. When a user submits a SQL query, the PE:

- Checks the query for syntax errors.

- Verifies that the objects referenced in the query exist.

- Creates the execution plan and sends its steps to the AMPs through the BYNET.

- Collects the results from the AMPs and returns them to the client.
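A toy Python model can make the storage and retrieval paths concrete: on insert, the PE routes each row to the single AMP that owns its primary-index hash, and on retrieval either one AMP (primary-index lookup) or every AMP (other predicates) scans its own rows. Everything here is an assumption for illustration: the `ParsingEngine` and `Amp` classes are hypothetical, the BYNET is reduced to a direct method call, and MD5 modulo the AMP count stands in for Teradata’s hashing.

```python
import hashlib
from dataclasses import dataclass, field


def amp_index(pi_value, num_amps: int) -> int:
    """Stand-in row hash (MD5 modulo AMP count), not Teradata's real hashing."""
    digest = hashlib.md5(str(pi_value).encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_amps


@dataclass
class Amp:
    """One AMP together with the rows stored on its disk."""
    rows: list = field(default_factory=list)

    def scan(self, predicate):
        return [row for row in self.rows if predicate(row)]


class ParsingEngine:
    """Hypothetical PE; the BYNET is modeled as a direct method call."""

    def __init__(self, num_amps: int, pi_column: str):
        self.amps = [Amp() for _ in range(num_amps)]
        self.pi_column = pi_column

    def insert(self, row: dict) -> int:
        # Storage path: hash the primary index and route the row to one AMP.
        target = amp_index(row[self.pi_column], len(self.amps))
        self.amps[target].rows.append(row)
        return target

    def select_by_pi(self, pi_value):
        # Retrieval by primary index: only the owning AMP is asked.
        target = amp_index(pi_value, len(self.amps))
        rows = self.amps[target].scan(lambda row: row[self.pi_column] == pi_value)
        return rows, 1  # (matching rows, number of AMPs involved)

    def select_where(self, predicate):
        # All-AMP retrieval: every AMP scans its own rows and the PE merges
        # the results. Real AMPs work in parallel; this loop is sequential.
        results = []
        for amp in self.amps:
            results.extend(amp.scan(predicate))
        return results, len(self.amps)


if __name__ == "__main__":
    pe = ParsingEngine(num_amps=4, pi_column="id")
    for i in range(100):
        pe.insert({"id": i, "city": "Austin" if i % 10 == 0 else "Boston"})
    print(pe.select_by_pi(42))
    print(len(pe.select_where(lambda row: row["city"] == "Austin")[0]))
```

The design choice worth noticing is the returned AMP count: a primary-index lookup touches one AMP, while any other predicate has to involve all of them.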

76. Which Teradata operators and functions are available for string manipulation?

Ans:

- SUBSTRING: Extracts part of a string, using ANSI-standard syntax. For example, with the string 'Simplilearn', `SELECT SUBSTRING('Simplilearn' FROM 1 FOR 5);` returns Simpl.

- POSITION: Returns the position of the first occurrence of a substring within a string. For example, `SELECT POSITION('e' IN 'Simplilearn');` returns 8.

- TRIM: Removes leading and trailing blank spaces from a string. For example, `SELECT TRIM('  Simplilearn  ');` returns Simplilearn.

- UPPER: Converts the string to uppercase. For example, `SELECT UPPER('Simplilearn');` returns SIMPLILEARN.
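A small Python emulation of these four functions makes Teradata’s 1-based positions explicit, for example that SUBSTRING of 'Simplilearn' from 1 for 5 yields 'Simpl'. The `td_*` names are hypothetical helpers, not a Teradata API, and the sketch ignores character sets, NULLs, and error cases such as a negative length.

```python
def td_substring(s: str, start: int, length: int) -> str:
    """Emulates SUBSTRING(s FROM start FOR length) with 1-based positions.

    Positions before 1 count toward the length but contribute no characters,
    as in the ANSI definition.
    """
    first = max(start, 1)            # first real character position
    last = start + length - 1        # last requested position (inclusive)
    if last < first:
        return ""
    return s[first - 1 : last]


def td_position(sub: str, s: str) -> int:
    """Emulates POSITION(sub IN s): 1-based index of the first match, 0 if none."""
    return s.find(sub) + 1


def td_trim(s: str) -> str:
    """Emulates TRIM(s): removes leading and trailing blanks (spaces only)."""
    return s.strip(" ")


def td_upper(s: str) -> str:
    """Emulates UPPER(s): converts every character to uppercase."""
    return s.upper()


if __name__ == "__main__":
    word = "Simplilearn"
    print(td_substring(word, 1, 5))    # Simpl
    print(td_position("e", word))      # 8
    print(td_trim("  Simplilearn  "))  # Simplilearn
    print(td_upper(word))              # SIMPLILEARN
```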

77. Why does MultiLoad support NUSIs but not USIs?

Ans:

MultiLoad lets each AMP work independently. A USI subtable row is hashed on the USI value, so it usually lives on a different AMP from the base row it points to; maintaining a USI would therefore require AMPs to communicate with one another. With a NUSI, the index subtable row and the base row are on the same AMP, so each AMP can maintain the index on its own. Consequently, MultiLoad supports NUSIs but not USIs.
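The placement rule behind this answer can be illustrated with a simplified Python model that counts how many index subtable rows sit on a different AMP than their base row. It assumes MD5 modulo the AMP count as a stand-in for the row hash, models a USI subtable row as hashed on the index value and a NUSI subtable row as kept on the base row’s AMP; `amp_of` and `cross_amp_index_rows` are hypothetical names.

```python
import hashlib


def amp_of(value, num_amps: int) -> int:
    """Stand-in row hash (MD5 modulo AMP count), not Teradata's real hashing."""
    digest = hashlib.md5(str(value).encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_amps


def cross_amp_index_rows(rows, num_amps: int, kind: str) -> int:
    """Count index subtable rows stored on a different AMP than their base row.

    rows: iterable of (primary_index_value, index_column_value) pairs.
    kind: "USI"  -> subtable row hashed on the index value (simplified model)
          "NUSI" -> subtable row kept on the same AMP as the base row
    """
    cross = 0
    for pi_value, index_value in rows:
        base_amp = amp_of(pi_value, num_amps)
        if kind == "USI":
            index_amp = amp_of(index_value, num_amps)
        elif kind == "NUSI":
            index_amp = base_amp
        else:
            raise ValueError(f"unknown index kind: {kind}")
        if index_amp != base_amp:
            cross += 1  # maintaining this entry would need AMP-to-AMP messages
    return cross


if __name__ == "__main__":
    employees = [(emp_id, f"EMP{emp_id:05d}") for emp_id in range(1000)]
    print("USI rows on another AMP: ", cross_amp_index_rows(employees, 4, "USI"))
    print("NUSI rows on another AMP:", cross_amp_index_rows(employees, 4, "NUSI"))
```

Every cross-AMP USI entry is a point where one AMP would have to coordinate with another, which is exactly what MultiLoad’s independent per-AMP processing avoids; the NUSI count is always zero.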

78. What makes using Teradata so important?

Ans:

- The system can store and process very large volumes of data, more than 50 petabytes.

- Teradata can be integrated with other business intelligence (BI) software programs.

- Teradata facilitates Online Analytical Processing, or OLAP, which enables users to carry out intricate analyses of their data.

- Teradata also provides a full range of data warehousing services, including on-premises (hardware-based) and cloud-based data warehouses and business analytics.

79. How many populated tables can Teradata MultiLoad handle?

Ans:

Teradata MultiLoad can load up to five populated (non-empty) target tables at once. Besides loading multiple tables simultaneously, it supports INSERT, UPDATE, DELETE, and UPSERT operations, with up to 20 DML statements in a single script.

80. What does “skewness in Teradata” mean? How is it used?

Ans:

Skewness in Teradata describes how unevenly a table’s rows are distributed across AMPs (Access Module Processors), and the Skew Factor quantifies it. A skew factor of zero means the data is spread equally across the AMPs. A high skew factor means the distribution is uneven: some AMPs hold comparatively few rows while others hold many more. Because an all-AMP operation finishes only when the busiest AMP finishes, a large skew factor hurts Teradata’s performance and parallelism. It is used to judge how well a table’s primary index distributes its rows.
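One commonly used way to compute the skew factor is 100 × (1 − average / maximum) over per-AMP sizes, for example the per-AMP CurrentPerm values in the DBC.TableSizeV view. The Python helper below applies that formula to a list of per-AMP row counts; `skew_factor` is a hypothetical name and the counts are illustrative, not measured data.

```python
def skew_factor(per_amp_sizes) -> float:
    """Skew factor in percent: 100 * (1 - average / maximum).

    0 means a perfectly even distribution; values near 100 mean most of
    the data sits on a few AMPs.
    """
    sizes = list(per_amp_sizes)
    if not sizes:
        raise ValueError("need at least one AMP")
    largest = max(sizes)
    if largest == 0:
        return 0.0  # empty table: treat as unskewed
    average = sum(sizes) / len(sizes)
    return 100.0 * (1.0 - average / largest)


if __name__ == "__main__":
    print(skew_factor([2500, 2500, 2500, 2500]))             # 0.0
    print(round(skew_factor([1000, 1000, 1000, 7000]), 2))   # 64.29
```

In the skewed case the average is only 2,500 rows per AMP, yet an all-AMP step has to wait for the AMP holding 7,000 rows.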

81. What does Teradata’s spool space mean? Why is it used?

Ans:

- Spool space is unused permanent space in the system that holds the intermediate results of SQL queries.

- A query can run only if there is enough spool space for it. A user’s spool limit is divided evenly across all AMPs, so each AMP has access only to its share (the limit divided by the number of AMPs).

- If any AMP exceeds its per-AMP share, the query fails with an out-of-spool-space error.
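The per-AMP arithmetic is easy to get wrong, so here is a small Python sketch of it. The function names are hypothetical, and the spool limit, AMP count, and per-AMP usage figures are made-up numbers for illustration.

```python
def per_amp_spool_limit(user_spool_bytes: int, num_amps: int) -> float:
    """Each AMP receives an equal share of the user's spool limit."""
    return user_spool_bytes / num_amps


def amps_out_of_spool(per_amp_usage, user_spool_bytes: int):
    """Return the AMPs whose intermediate results exceed their per-AMP share."""
    limit = per_amp_spool_limit(user_spool_bytes, len(per_amp_usage))
    return [amp for amp, used in enumerate(per_amp_usage) if used > limit]


if __name__ == "__main__":
    GB = 1024 ** 3
    limit = 100 * GB                        # hypothetical spool limit for one user
    usage = [0.5 * GB] * 99 + [1.5 * GB]    # 100 AMPs, one gets a skewed share
    print(per_amp_spool_limit(limit, 100) / GB)  # 1.0
    print(amps_out_of_spool(usage, limit))       # [99]
```

Total usage here is about 51 GB, roughly half the limit, yet the query still runs out of spool because one AMP exceeds its 1 GB share. This is also why skewed data (question 80) tends to cause spool errors.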

82. In Teradata, what do you mean by nodes?

Ans:

- In Teradata, nodes refer to individual servers or machines within a Teradata system.

- Each node typically hosts one or more components of the Teradata Database, such as parsing engines or AMPs (Access Module Processors). Nodes collaborate to distribute and process queries across the system, enabling parallelism and scalability.

- The number of nodes in a Teradata system can vary based on the system’s configuration and scalability requirements.

83. What are a handful of ETL (Extract, Transform, and Load) tools used with Teradata?

Ans:

A variety of ETL (Extract, Transform, and Load) tools are commonly used with Teradata, including the following:

- Informatica

- DataStage

- SQL Server Integration Services (SSIS)

84. Is Teradata a database or an ETL (Extract, Transform, and Load) tool?

Ans:

Teradata is not an ETL (Extract, Transform, and Load) tool; it is a relational database management system (RDBMS). It is a commercial product rather than open-source software, and it has been available on Linux, Windows, and other operating systems. Teradata can handle terabyte-scale data loads and supports large-scale data warehouse applications.

85. What are some advantages of ETL tools over Teradata?

Ans:

- They support many heterogeneous data sources and destinations.

- ETL tools provide a comprehensive GUI that makes development and debugging simpler.

- ETL tools also let you reuse components: when a shared component is updated, every job that relies on it picks up the change automatically.

- ETL tools can pivot and unpivot data, converting rows into columns and columns into rows.
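Pivoting and unpivoting are easy to picture with a small example. The Python functions below are a hypothetical, dependency-free sketch of what an ETL tool’s pivot and unpivot steps do; they are not the API of any particular tool, and the region/quarter/sales fields are invented sample data.

```python
from collections import defaultdict


def pivot(rows, key, column, value):
    """Rows -> columns: one entry per key, with a field per distinct column value.

    Example: (region, quarter, sales) rows become {region: {quarter: sales}}.
    """
    table = defaultdict(dict)
    for row in rows:
        table[row[key]][row[column]] = row[value]
    return {k: dict(v) for k, v in table.items()}


def unpivot(wide, key_name, column_name, value_name):
    """Columns -> rows: the reverse of pivot, one output row per cell."""
    return [
        {key_name: k, column_name: col, value_name: val}
        for k, cols in wide.items()
        for col, val in cols.items()
    ]


if __name__ == "__main__":
    sales = [
        {"region": "East", "quarter": "Q1", "sales": 10},
        {"region": "East", "quarter": "Q2", "sales": 12},
        {"region": "West", "quarter": "Q1", "sales": 7},
    ]
    wide = pivot(sales, "region", "quarter", "sales")
    print(wide)
    print(unpivot(wide, "region", "quarter", "sales"))
```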

86. What does caching in Teradata entail?

Ans:

Caching in Teradata means keeping frequently used data and information in cache memory so it can be retrieved quickly when it is needed again, instead of the application having to rebuild it from scratch. Caching is effective because cached items are reused in the same form and change rarely. Caches are often shared by multiple applications.
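The general caching idea (compute once, then serve repeats from memory) can be illustrated with Python’s `functools.lru_cache`. This is a generic sketch, not Teradata’s internal cache: `compute_report` is a hypothetical stand-in for any expensive computation, and the `CALLS` counter exists only to show when the real work runs.

```python
from functools import lru_cache

CALLS = {"computed": 0}  # counts how often the expensive work really runs


@lru_cache(maxsize=128)
def compute_report(key: str) -> str:
    """Hypothetical expensive computation whose result is cached per key."""
    CALLS["computed"] += 1
    return key.upper()  # placeholder for the real work


if __name__ == "__main__":
    compute_report("daily_sales")
    compute_report("daily_sales")  # second call is served from the cache
    print(CALLS["computed"], compute_report.cache_info().hits)  # 1 1
```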

87. What does “Channel Driver” signify to you?

Ans:

The channel driver is the software that handles communication between the PEs and application programs running on channel-attached clients (mainframes). The Teradata Gateway plays the equivalent role for network-attached clients, acting as the bridge between the Parsing Engine and applications on those clients.

88. What kinds of tables can you create with Teradata?

Ans:

- Permanent Table: The default table type. Its data persists until it is explicitly deleted, and its content can be shared across users and sessions.

- Volatile Table: Data in a volatile table is kept at most for the duration of the user session; when the session ends, the table and its data are dropped. Volatile tables are typically used to hold intermediate data while data is being transformed.

- Global Temporary Table: Its definition is stored permanently in the data dictionary, but the data it holds is private to each session and is discarded no later than the end of the session. The table definition remains for reuse in later sessions.

89. When you refer to Parallel Data Extension, what do you mean?

Ans:

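- The term “Parallel Data Extension” typically refers to a feature or module in software systems that enables parallel processing of data. In Teradata, this is the Parallel Database Extensions (PDE) layer, which sits between the operating system and the database software and lets the database run in a parallel environment.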

- It allows tasks to be divided and executed concurrently across multiple processing units, such as CPU cores or nodes in a distributed system.

- This extension enhances performance by leveraging parallelism to handle large datasets more efficiently.

- It’s commonly utilized in computing environments where speed and scalability are crucial, such as big data analytics or high-performance computing applications.

90. Could you describe Teradata’s fallback feature?

Ans:

The fallback feature protects a table’s data in Teradata. It stores a second copy of each of the table’s rows on a different AMP, called the fallback AMP. If an AMP fails, the fallback rows are used instead, so the data remains accessible.
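A simplified Python model shows why fallback keeps data available after an AMP failure. It assumes each row’s home AMP is `key % num_amps` and its fallback copy goes to the next AMP in a ring; real Teradata places fallback rows on a different AMP within the same cluster using its own hashing, so the `FallbackTable` class is hypothetical.

```python
class FallbackTable:
    """Toy table that keeps a primary and a fallback copy of every row."""

    def __init__(self, num_amps: int):
        if num_amps < 2:
            raise ValueError("fallback needs at least two AMPs")
        self.num_amps = num_amps
        self.primary = [dict() for _ in range(num_amps)]
        self.fallback = [dict() for _ in range(num_amps)]
        self.failed = set()

    def _home(self, key: int) -> int:
        return key % self.num_amps  # simplified placement (assumption)

    def _backup(self, key: int) -> int:
        return (self._home(key) + 1) % self.num_amps  # always a different AMP

    def insert(self, key: int, row) -> None:
        self.primary[self._home(key)][key] = row
        self.fallback[self._backup(key)][key] = row

    def fail_amp(self, amp: int) -> None:
        self.failed.add(amp)

    def read(self, key: int):
        home, backup = self._home(key), self._backup(key)
        if home not in self.failed:
            return self.primary[home][key]
        if backup not in self.failed:
            return self.fallback[backup][key]  # served from the fallback copy
        raise RuntimeError("both copies of the row are unavailable")


if __name__ == "__main__":
    table = FallbackTable(num_amps=4)
    for k in range(8):
        table.insert(k, f"row{k}")
    table.fail_amp(2)
    print([table.read(k) for k in range(8)])
```

The trade-off is storage: every row is written twice, in exchange for surviving the loss of any single AMP.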

91. If a FastLoad script fails partway through, what steps will you take?

Ans:

- Restarting the existing load: Do not drop the error tables. Correct the problems in the script or input file, then rerun the script.

- Starting over: Alternatively, run a script containing only the BEGIN LOADING and END LOADING statements to release the lock on the target table, and delete the corresponding record from the fastlog table if there is one. You can then execute the entire script again. Another option is to drop and recreate the target table and error tables before rerunning.

92. What is Teradata’s ETL process?

Ans:

Using the ETL process, you can extract data from numerous sources, transform it, and load it into a central data warehouse or analytics platform. Doing this without ETL tools can be quite challenging and time-consuming, particularly when you are working with many different data sources and types.
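The three stages can be sketched end to end in Python. This is a minimal sketch under stated assumptions: a CSV string plays the role of the source system and an in-memory SQLite database stands in for the warehouse; with Teradata, the load step would go through a driver or a load utility instead. The function names, table, and sample data are hypothetical.

```python
import csv
import io
import sqlite3

SOURCE_CSV = """id,name,country
1, Alice ,us
2,Bob,US
3,Chandra , in
"""


def extract(text: str):
    """Extract: read raw records from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(records):
    """Transform: trim whitespace and standardize country codes."""
    return [
        (int(r["id"]), r["name"].strip(), r["country"].strip().upper())
        for r in records
    ]


def load(rows, conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer (id INTEGER, name TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO customer VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")  # stand-in for the warehouse
    load(transform(extract(SOURCE_CSV)), warehouse)
    print(warehouse.execute("SELECT * FROM customer ORDER BY id").fetchall())
```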

93. Which transformations occur most frequently in ETL processes?

Ans:

- Cleaning: Making values consistent, for example using a uniform date format, mapping NULL to 0, or mapping “Male” to “M” and “Female” to “F”.

- Deduplication: Locating and eliminating redundant data.

- Format revision: Converting character sets, units of measurement, dates and times, etc.
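Each of these transformations can be illustrated on a few records in Python. The sample records, field names, and the DD/MM/YYYY source date format are assumptions made for the example, and the function names are hypothetical.

```python
from datetime import datetime

GENDER_CODES = {"Male": "M", "Female": "F"}


def clean(record: dict) -> dict:
    """Cleaning: map NULL (None) amounts to 0 and full gender names to codes."""
    out = dict(record)
    out["amount"] = 0 if out.get("amount") is None else out["amount"]
    out["gender"] = GENDER_CODES.get(out.get("gender"), out.get("gender"))
    return out


def revise_format(record: dict) -> dict:
    """Format revision: convert DD/MM/YYYY dates to ISO 8601 (YYYY-MM-DD)."""
    out = dict(record)
    out["signup"] = datetime.strptime(out["signup"], "%d/%m/%Y").date().isoformat()
    return out


def deduplicate(records):
    """Deduplication: keep the first occurrence of each identical record."""
    seen, result = set(), []
    for record in records:
        fingerprint = tuple(sorted(record.items()))
        if fingerprint not in seen:
            seen.add(fingerprint)
            result.append(record)
    return result


if __name__ == "__main__":
    raw = [
        {"id": 1, "gender": "Male", "amount": None, "signup": "05/03/2024"},
        {"id": 1, "gender": "Male", "amount": None, "signup": "05/03/2024"},
        {"id": 2, "gender": "Female", "amount": 40, "signup": "31/12/2023"},
    ]
    print(deduplicate([revise_format(clean(r)) for r in raw]))
```

Deduplication runs last here so that records which only differ in formatting (for example "Male" versus "M") are recognized as duplicates once they have been standardized.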

94. How may Teradata data be extracted?

Ans:

- Double-click the tTeradataInput component (in Talend) to open its Basic settings.

- Within the Table Name field, type the table name that you want to read data from.

- To read data from the person table, type the following SQL statement into the Query field.

- Press the []

95. How can a Python script connect to Teradata?

Ans:

To connect to Teradata using Python, you can utilize the `teradatasql` library. First, install the library using pip (`pip install teradatasql`). Then, import the library in your Python script and establish a connection by providing the Teradata database details such as host, username, password, and database name. After establishing the connection, you can execute SQL queries and interact with the Teradata database within your Python script.
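A minimal sketch with `teradatasql` might look like the following. It uses `teradatasql.connect` with keyword connection parameters and the connection and cursor as context managers; it assumes the driver’s `database` connection parameter for the initial database. The `run_query` name and its `connect` argument are hypothetical additions so the function can be exercised without a live server; in normal use `connect` is left unset and `teradatasql.connect` is used.

```python
from typing import Optional


def run_query(sql: str, *, host: str, user: str, password: str,
              database: Optional[str] = None, connect=None):
    """Connect to Teradata, run one SQL statement, and return all result rows.

    `connect` is an injection point added for testing (an assumption of this
    sketch, not part of teradatasql); by default teradatasql.connect is used.
    """
    if connect is None:
        import teradatasql  # installed with: pip install teradatasql
        connect = teradatasql.connect
    params = {"host": host, "user": user, "password": password}
    if database:
        params["database"] = database  # initial database to use after logon
    # Both the connection and the cursor are closed when the blocks exit.
    with connect(**params) as con:
        with con.cursor() as cur:
            cur.execute(sql)
            return cur.fetchall()
```

For example, `run_query("SELECT CURRENT_DATE", host="tdhost.example.com", user="analyst", password="secret", database="sales")` would return the fetched rows; the host, credentials, and database shown are placeholders.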