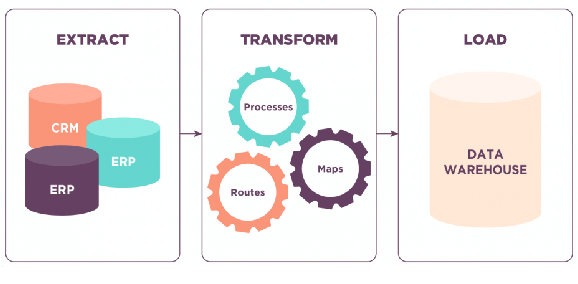

ETL stands for Extract, Transform, Load. It’s a process used in data integration where data is extracted from various sources, transformed or cleansed to fit specific business needs or a target database, and then loaded into the target system or data warehouse. This process involves extracting data from diverse sources, manipulating it into a consistent format, and loading it into a central repository for analysis and business intelligence purposes. ETL enables organizations to consolidate, refine, and organize large volumes of data from different sources to make it suitable for analysis and reporting.

1. What is ETL?

Ans:

ETL stands for Extract Transform Load and is widely regarded as one of essential Testing tools in the data warehousing architecture. Its main task is to handle the data management for the business process which is complex and is useful to business in many ways.

2. What about ETL testing and operations that are part of the same?

Ans:

It simply means verifying a data in terms of its transformation in correct or the right manner as per the needs of a business. It also includes verification of the projected data. The users can also check whether a data is successfully loaded in warehouse or not without worrying about the loss of data.

3. How is Power Center different from Power Mart?

Ans:

Power Mart is a good to be considered only when data processing requirements are low. On other side, the Power Center can simply the process bulk time in a short span of time. Power Center can easily support an ERP such as SAP while no support of same is available on the ERP.

4. What is partitioning in ETL?

Ans:

The transactions are always needed to be divided for a better performance. The same processes are known as a Partitioning. It simply makes sure that server can directly access the sources through the multiple connections.

- — Example of date-based partitioning in SQL

- CREATE TABLE my_table (

- id INT,

- data_column VARCHAR(255),

- date_column DATE

- ) PARTITION BY RANGE (YEAR(date_column));

- — Add a new partition

- ALTER TABLE my_table

- ADD PARTITION (PARTITION new_partition VALUES LESS THAN (2023));

5. Name few tools that can easily use with the ETL?

Ans:

There are many tools can be considered. However, it is not always necessary that user needs all of them at the same time. Also, which tool is used simply depends on preference and the task that needs to accomplished.

6. What is the difference between Connected Lookup and Unconnected ones?

Ans:

| Aspect | Connected Lookup | Unconnected Lookup | |

| Integration |

Defines permPart of the data flow; directly connected to the pipeline. |

Not directly integrated into the data flow; called as needed. | |

| Complexity | Can handle complex logic and conditions within the transformation. | Simpler, suitable for straightforward lookups without complex logic. | |

| Performance Impact | May have a higher performance impact due to its continuous integration in the data flow. | Generally has a lower performance impact as it is called on-demand. |

7. What is the tracing level and types of the same?

Ans:

There are file logs and there is limit on them when it comes to storing data in them. The Tracing level is nothing but amount of data that can be easily stored on the same. These levels clearly explain tracing levels and in manner that provides all the necessary information regarding the same. There are the two types are:

- Verbose

- Normal

8. How are data warehousing and data mining distinct, and their association with warehousing applications?

Ans:

The warehousing applications are important generally include analytical process, Information processing, as well as a Data mining. There are actually very large number of predictive that needs to extracted from the database with the lot of data. The warehousing sometimes depends on the mining for operations involved.

9. What is the Grain of Fact?

Ans:

The fact information can be stored at level that is known as grain fact. The other name of this Fact Granularity. It is possible for users to change the name of the same when the need for a same is realized.

10. Does possible to load data and use it as a source?

Ans:

Yes, ETL it is possible. This task can be accomplished simply by using Cache. The users must make sure that Cache is free and is generally optimized before it is used for this task. At the same users simply make sure that the desired outcomes can simply be assured without making the lot of effort.

11. What is a Factless fact table in ETL?

Ans:

It is defined as a table without measures in the ETL. There are number of events that can be managed directly with the same. It can also record events that are related to an employees or with the management and this task can be accomplished in the very reliable manner.

12. What is Transformation? What does types of same?

Ans:

It is basically regarded as a repository object which is capable to produce data and can even modify and pass it in the reliable manner. The two commonly used transformations are the Active and Passive.

13. Explain the concept of data wrangling and its importance in ETL processes?

Ans:

Data wrangling is a pivotal aspect of ETL processes, involving the cleaning and transformation of raw data for effective analysis. It addresses data quality issues by handling missing values, errors, and inconsistencies. Through tasks such as restructuring, enrichment, and filtering, data wrangling ensures that information is standardized and relevant.

14. When will make use of Lookup Transformation?

Ans:

It is one of the finest and in fact one of very useful approaches in the ETL. It simply makes sure that users can get a related value from a table and with help of a column value that seems useless. In addition to this, it simply makes the sure of boosting the performance of dimensions table which is changing at a very slow rate.

15. What is Data Purging?

Ans:

There are needs and situations when a data needs to be deleted from a data warehouse. It is a very daunting task to delete data in bulk. The Purging is an approach that can delete the multiple files at same time and enable users to maintain speed as well as efficiency. A lot of extra space can created simply with this.

16. How does one implement data security and access control in ETL processes?

Ans:

Securing ETL involves encryption, strong authentication, and authorization. Regular audits, data classification, and user training ensure ongoing protection against unauthorized access, maintaining data integrity.

17. What is Dynamic and the Static Cache?

Ans:

When it comes to updating a master table, the dynamic cache can opt. Also, users are free to use it for changing dimensions. On the other side, the users can simply manage flat files through the Static Cache.

18. What is the staging area?

Ans:

It is an area that is used when it comes to the holding the information or data temporary on the server that controls a data warehouse. There are certain steps that are included and prime one among them is Surrogate assignments.

19. What are the types of Partitioning ?

Ans:

- Hash

- Round-robin

20. What does use of Data Reader Destination Adapter?

Ans:

There are ADO recordsets that generally consist of the columns and records. When it comes to populating them in the simple manner, the Data Reader Destination Adapter is more useful. It simply exposes a data flow and let the users impose the various restrictions on the data which is required in many cases.

21. Does it possible to extract t SAP data with help of Informatica?

Ans:

There is the power connect option that simply lets the users perform this task and in very reliable manner. It is necessary to import a source code in the analyzer before can accomplish this task.

22. Explain Mappet?

Ans:

This is actually an approach that is useful for creating or arranging different sets in transformation. It simply let user accomplish the other tasks also that largely matters and are related to data warehouse.

23. What are commercial ETL tools?

Ans:

- Ab Initio

- Adeptia ETL

- Business Objects Data Services

- Informatica PowerCenter

24. How does one handle data archiving and retention in ETL processes?

Ans:

In ETL processes, set clear retention policies, automate archiving based on business needs, and optimize storage. Regular reviews, secure storage practices, and integration into disaster recovery plans ensure efficient data management and compliance.

25. What are the types of measures?

Ans:

Additive Measures – Can be joined across any dimensions of a fact table.

Semi Additive Measures – Can be joined across the only some of dimensions of fact table.

Non-Additive Measures – Cannot be joined across to any dimensions of fact table.

26. How does one handle data synchronization between different systems in ETL processes?

Ans:

Effective data synchronisation in ETL involves mapping and transforming data between source and target systems, adopting incremental loading strategies to handle only the changed data, and implementing Change Data Capture (CDC) techniques for efficient updates.

27. Define Transformation?

Ans:

In ETL, Transformation involves, data cleansing, Sorting data, Combining or merging, and applying business rules to the data for improving data for quality and accuracy in ETL process.

28. Does possible to update a table using SQL service?

Ans:

Yes, it is actually possible and users can perform this task simply and in fact without worrying about anything. The users are generally have several options to accomplish this task easily.

29.How does one handle large-scale data migration using ETL?

Ans:

Handling large-scale data migration with ETL involves strategic planning, such as leveraging parallel processing, adopting incremental loading, and implementing compression and encryption for efficient and secure data transfer. Thorough analysis of source and target systems, rigorous data validation, and the establishment of backup and rollback procedures are essential.

30. What does understand by term fact in the ETL and what does types of the same?

Ans:

Basically, it is regarded as the federal component that generally belongs to the model that has multiple dimensions. The same can also be used when it comes to considering measures that belong to analyzation. The facts are generally useful for providing dimensions that largely maters in ETL.

31. What does the Data source view and how does significant?

Ans:

There are several analysis services databases that largely depend on relational schema and prime task of the Data source is to define the same. They are also helpful in creating cubes and dimensions with the help of which users can set the dimensions in more easy manner.

32. What are objects in Schema?

Ans:

These are basically considered a logical structures that are related to data. They generally contain a tables, views, synonyms, clusters as well as function packages. In addition to this, there are the several database links that are present in them.

33. What are Cubes in ETL and how does different from OLAP?

Ans:

There are things on which data processing depends largely and cubes are one among them, they are generally regarded as units of the same that provide useful information regarding dimensions and fact tables. When it comes to the multi-dimensions analysis, the same can simply be assured from this.

34. Define Bus Schema?

Ans:

In data warehouse, BUs Schema is used for an identifying the most common dimensions in business process. In one word its is definite dimension and a standardized equivalent of the facts.

35. How does handle data compression in ETL processes?

Ans:

In ETL processes, handle data compression by using compression algorithms (e.g., gzip), employing columnar storage formats like Parquet, applying binary encoding for repetitive or categorical data, and adjusting compression levels based on the trade-off between ratio and processing speed. Additionally, leverage in-database compression features, choose optimal data types, and consider deduplication, archiving, and purging to further optimize storage and processing efficiency.

36. Explain terms Mapplet, Session, Workflow, and Worklet?

Ans:

Mapplet: Reusable object that contains the set of transformations.

Worklet: Represents a set of workflow tasks

Workflow: Customs tasks for each record that need to be execute.

Session: a set of instructions that instructs how to flow data to the target.

37. What is the importance of ETL testing?

Ans:

- Ensure data is a transformed efficiently and quickly from a one system to another.

- Data quality issues during the ETL processes, such as duplicate data or data loss, can also be identified and prevented by an ETL testing.

- Assures that ETL process itself is running smoothly and is not hampered.

38. Explain the process of ETL testing.

Ans:

ETL testing is a systematic process crucial for ensuring the accuracy and reliability of data throughout the Extract, Transform, Load workflow. It begins with a thorough analysis of ETL requirements, encompassing data extraction, transformation, and loading rules.

39. What are the different types of ETL testing?

Ans:

- Production Validation Testing

- Source to Target Count Testing

- Metadata Testing

- Performance Testing

- Source to Target Data Testing

40. What are the roles and responsibilities of ETL tester?

Ans:

- In-depth knowledge of ETL tools and processes.

- Performs thorough the testing of ETL software.

- Check data warehouse test component.

- Perform a backend data-driven test.

41. What are the different challenges of ETL testing?

Ans:

- An incomplete or corrupt a data source.

- Changing the customer requirements result in re-running test cases.

- Uncertainty about the business requirements or employees who are not aware of them.

42. Explain data mart.

Ans:

An enterprise data warehouse can be divided into the subsets, also called data marts, which are focused on particular business unit or department. Data marts allow the selected groups of users to easily access the specific data without having to search through entire data warehouse.

43. Explain how data warehouse differs from data mining.

Ans:

- An incomplete or corrupt a data source.

- Changing the customer requirements result in re-running test cases.

- Uncertainty about the business requirements or employees who are not aware of them.

44. Explain how data warehouse differs from data mining.

Ans:

A data warehouse is a centralized repository that stores structured, historical data from various sources, facilitating reporting and analysis. On the other hand, data mining is a process within the field of data analysis that involves discovering patterns, trends, and insights from large datasets. It utilizes statistical algorithms and machine learning techniques to identify relationships and hidden knowledge within the data.

45. What is the difference between power mart and power center.

Ans:

PowerMart and PowerCenter are data integration tools from Informatica. PowerMart is a scaled-down version, designed for small to medium-sized enterprises with basic data integration needs. It offers a subset of features compared to PowerCenter. In contrast, PowerCenter is the enterprise-level, full-featured version suitable for large organizations with complex data integration requirements. PowerCenter provides advanced functionalities, scalability, and broader connectivity options, making it suitable for handling extensive ETL (Extract, Transform, Load) tasks in enterprise environments.

46. What is the difference between power mart and power center.

Ans:

ETL testing focuses on validating the end-to-end data integration process, ensuring accuracy and integrity. Database testing is a broader concept covering various aspects of database systems beyond ETL workflows, including data integrity, transactions, and stored procedures. ETL testing specifically targets the ETL pipeline, while database testing encompasses a wider range of database functionalities. Both are crucial for maintaining data quality and system reliability in the context of data management.

47. What is BI (Business Intelligence)?

Ans:

Business Intelligence (BI) involves the acquiring, cleaning, analyzing, integrating, and sharing data as means of identifying actionable insights and enhancing business growth. An effective BI test verifies a staging data, ETL process, BI reports, and ensures the implementation is more reliable.

48. What is the ETL Pipeline?

Ans:

ETL pipelines are mechanisms to perform the ETL processes. This involves the series of processes or activities required for a transferring data from one or more sources into data warehouse for analysis, reporting and data synchronization.

49. Explain data cleaning process.

Ans:

There is always possibility of duplicate or mislabeled data when combining the multiple data sources. Incorrect data leads to unreliable outcomes and algorithms, even when they appear to correct.

50.Difference between ETL testing and manual testing.

Ans:

ETL testing is a specialized process validating the accuracy and integrity of the Extract, Transform, Load data integration flow, often involving automation. Manual testing, on the other hand, is a broader testing approach where tests are executed manually without automated tools. While ETL testing focuses on end-to-end data integration, manual testing involves a more general assessment of software functionalities. ETL testing is particularly relevant in data-centric environments, ensuring the reliability of the entire data workflow, while manual testing is a versatile method applicable across various software testing scenarios.

51. Mention some of ETL bugs.?

Ans:

- User Interface Bug

- Input/Output Bug

- Boundary Value Analysis Bug

- Calculation bugs

52. What is ODS (Operational data store)?

Ans:

Between staging area and the Data Warehouse, ODS serves as repository for data. Upon inserting data into ODS, ODS will load all data into the EDW (Enterprise data warehouse).

53. What is the staging area and write main purpose?

Ans:

During extract, transform, and load (ETL) process, a staging area or landing zone is used as intermediate storage area. It serves as temporary storage area between the data sources and data warehouses.

54. Explain Snowflake schema.

Ans:

Adding additional dimension tables to the Star Schema makes it a Snowflake Schema. In Snowflake schema model, multiple hierarchies of dimension tables surround the central fact table. Alternatively, dimension table is called snowflake if its low-cardinality attribute has been segmented into the separate normalized tables.

55. What are the Advantages of Snowflake schema.

Ans:

- Data integrity is more reduced because of structured data.

- Data are highly structured, so it requires a little disk space.

- Updating or maintaining a Snowflaking tables is easy.

56. What are the Disadvantages of Snowflake schema ?

Ans:

Snowflake reduces the space consumed by the dimension tables, but the space saved is usually insignificant compared with an entire data warehouse. Due to number of tables added, may need complex joins to perform the query, which will reduce a query performance.

57. Explain Bus Schema.

Ans:

An important part of the ETL is dimension identification, and this is largely done by Bus Schema. A BUS schema is actually comprised of the suite of verified dimensions and uniform definitions and can be used for the handling dimension identification across all the businesses.

58. What is a factless table?

Ans:

Factless tables do not contain the any facts or measures. It contains only dimensional keys and deals with an event occurrences at informational level but not at the calculational level. As name implies, factless fact tables capture the relationships between dimensions but lack any numerical or a textual data.

59. Explain SCD (Slowly Change Dimension).

Ans:

SCD (Slowly Changing Dimensions) basically keep and manage both the current and historical data in the data warehouse over time. Rather than changing regularly on the time-based schedule, SCD changes slowly over a time. SCD is considered one of the most critical aspects of an ETL.

60. What are the different ways of updating table when SSIS is being used.?

Ans:

- Use SQL command.

- For storing stage data, use the staging tables.

- Scripts can be used for a scheduling tasks.

61. List some ETL test cases?

Ans:

- Mapping Doc Validation

- Data Quality

- Correctness Issues

- Constraint Validation

62. Explain ETL mapping sheets.

Ans:

ETL mapping sheets include the full information about a source and destination table, including each column as well as their lookup in the reference tables. As part of ETL testing process, ETL testers may need to write a big queries with multiple joins to validate data at any point in testing process.

63. How does ETL testing is used in third party data management?

Ans:

Different kinds of the vendors develop different kinds of applications for the big companies. Consequently, no single vendor manages everything. Consider the Telecommunication project in which billing is handled by a one company and CRM by another.

64. How is ETL used in data migration projects.

Ans:

Data migration projects commonly use the ETL tools. As an example, if the organization managed data in Oracle 10g earlier and now they need to move to SQL Server cloud database, the data will need to be migrated from a Source to Target.

65. What does machine learning in ETL processes?

Ans:

Machine learning can be used in the ETL processes to improve data quality, to automate a data preparation, and to optimize data processing. Machine learning algorithms can be used to identify and correct data quality issues, to learn from the historical data to improve ETL processes, and to predict and prevent data errors.

66. How does one ensure data accuracy in ETL testing?

Ans:

- Data Profiling

- Data Validation

- Data Transformation Testing

67. What does test plan in ETL testing?

Ans:

A Test Plan in ETL (Extract, Transform, Load) testing is the comprehensive document that outlines testing approach, objectives, scope, resources, schedule, and criteria for determining a success of the testing process. It serves as the roadmap for the testing team, guiding them on how to perform an ETL testing effectively.

68. Explain ‘Surrogate Key.’

Ans:

A surrogate key is unique identifier for a record in the database table. Unlike natural keys, which are based on existing data attributes surrogate keys are specifically created and maintained solely for purpose of identifying the records in the database.

69. What is the Transaction Fact Table?

Ans:

A transaction fact table is one of the three types of fact table and the most basic one. In this type of a fact table, each event is stored only once, and it contains a data of the smallest level. Also, the number of rows in this fact table is similar to number of rows in a source table.

70. What is the dimension table?

Ans:

A dimension table is one of the two types of tables used in the dimensional modeling, other being fact table. A dimension table describes dimensions or the descriptive criteria of objects in fact table.

71. What is data integrity in ETL testing?

Ans:

Data integrity ensures that a data remains accurate, consistent, and reliable throughout ETL process and in the target database. Data integrity in ETL (Extract, Transform, Load) testing refers to assurance that data maintains its accuracy, consistency, and reliability as it undergoes extraction, transformation, and loading processes within ETL pipeline.

72. Explain data lineage in ETL.

Ans:

Data lineage tracks movement of data from a source to target, including transformations, showing how data changes along the way. Data lineage in ETL (Extract, Transform, Load) refers to visual representation and tracking of the flow of data from its source through the various transformations and processes to final destination.

73. How does handle data validation in ETL testing?

Ans:

Data validation involves the checking data accuracy, completeness, and adherence to the business rules. It can be done through the SQL queries, scripts, or data profiling tools.

74. How does one ensure referential integrity in ETL testing?

Ans:

Referential integrity can be ensured by performing a key matching between related tables, validating primary and foreign keys, and resolving the any mismatches.

75. What is metadata in ETL testing?

Ans:

Metadata is data that describes the other data. ETL includes the information about data sources, transformations, and target structures.

76. What is data duplication, and how does performed in ETL testing?

Ans:

Data duplication involves the removing duplicate records. In ETL testing it’s done by identifying a duplicate entries in source data and preventing their insertion into the target.

77. What does QA analyst in ETL testing?

Ans:

A QA analyst is responsible for a test specifications, designing test scenarios, creating test cases, executing tests, identifying defects, and ensuring the quality of ETL processes.

78. What is data reconciliation in ETL testing?

Ans:

Data reconciliation in ETL (Extract, Transform, Load) testing refers to a process of comparing and validating data at a different stages of the ETL pipeline to ensure consistency, accuracy, and completeness.

79. How does one handle testing of ETL workflows with dependencies?

Ans:

- Understanding order of execution.

- Simulating dependencies.

- Verifying a data integrity throughout the process.

80. What does ETL process in QA?

Ans:

ETL testing is the process that verifies a data coming from source systems has been extracted completely, transferred correctly, and loaded in an appropriate format — effectively letting know if have high data quality. It will identify a duplicate data or data loss and any missing or incorrect data.

81. What is the difference between ETL and ETL 2.0?

Ans:

ETL (Extract, Transform, Load) is a traditional data integration process that involves extracting data from source systems, transforming it into a suitable format, and loading it into a target data warehouse for analysis. ETL 2.0 represents an evolution in this approach, incorporating modern technologies and methodologies to address the challenges of big data, real-time processing, and scalability. ETL 2.0 emphasises a more flexible and agile architecture, often leveraging cloud-based solutions, microservices, and streaming data processing. It aims to provide faster, more real-time data integration capabilities to meet the dynamic needs of today’s data-driven organisations, in contrast to the batch-oriented nature of traditional ETL.

82. Difference between ETL and data integration tools?

Ans:

ETL is a specific process for data integration, involving extraction, transformation, and loading. ETL tools are specialized for structured batch processing. Data integration encompasses broader techniques beyond ETL, including real-time integration and replication. ETL is part of data integration, which addresses varied data source management needs.

83. How does one optimize ETL processes for scalability?

Ans:

- Parallelize data processing

- Use cloud-based storage and processing

- Optimize data formats

84. Explain ETL orchestration and how it is used in ETL processes?

Ans:

ETL orchestration is a process of coordinating and managing various stages of an ETL process. This involves defining a data sources, specifying the transformations to be applied, and determining a the target destination for the transformed data.

85. Explain data virtualization and its use in ETL processes?

Ans:

Data virtualization is a process of abstracting the underlying physical data sources and presenting them as single virtual data source. This allows for the easier access and manipulation of the data, without having to deal with complexity of the underlying the physical data sources.

86. How does one design an ETL process for a distributed system?

Ans:

- Partition a source data into smaller chunks that can be processed independently.

- Distribute processing tasks across the multiple nodes in the system.

- Merge results from processing tasks into the single output.

87. What is the difference between push and pull ETL architectures?

Ans:

The difference between push and pull ETL architectures lies in how data is transferred between systems. In a push architecture, the data integration process actively pushes data from source systems to the target system. It typically involves a centralized server initiating and controlling the data transfer. In contrast, a pull architecture has the target system actively pulling data from source systems when needed. This approach often relies on distributed systems and allows for more on-demand and flexible data retrieval. Push architectures are more proactive, while pull architectures offer greater adaptability to varying data processing requirements.

88. How does one handle big data in ETL processes?

Ans:

Handling big data in ETL involves using distributed computing frameworks (e.g., Apache Spark), scalable storage (e.g., HDFS), and parallel processing for efficiency. Leveraging cloud-based ETL services and employing hybrid approaches with both batch and real-time processing helps manage the velocity of big data streams. Optimizing algorithms and using partitioning strategies are crucial for effective big data ETL workflows.

89. What does data profiling in ETL testing and how is it done?

Ans:

Data profiling in ETL testing involves analyzing and assessing the quality of data sources to ensure accuracy, completeness, and consistency. It includes examining data types, patterns, and identifying anomalies or outliers. ETL testing tools are employed to automate the profiling process, generating statistical summaries and data quality reports. Profiling helps discover issues early, improving data integrity and the overall success of the ETL process. Continuous monitoring and profiling are essential for maintaining data quality throughout the ETL lifecycle.

90. How does one handle real-time data feeds in ETL processes?

Ans:

Handle real-time data in ETL by using stream processing (e.g., Apache Kafka), microservices, change data capture, and in-memory databases for efficient, near real-time ingestion, transformation, and loading.