Performance testing is a crucial part of software development aimed at assessing how a system performs under various conditions. It involves testing factors like response time, scalability, reliability, and resource usage to ensure that an application meets performance benchmarks. By simulating different user loads and stress levels, performance testing identifies performance bottlenecks and helps optimize software for better speed, stability, and responsiveness.

1. What is performance testing?

Ans:

Performance testing is a type of software testing that ensures a system performs well under expected workloads. It assesses the speed, responsiveness, and stability of a system. The goal is to identify and eliminate performance bottlenecks. Performance testing encompasses various testing methods like load, stress, and endurance testing. It ensures the system meets performance criteria. It helps in optimizing system performance. Effective performance testing enhances user experience.

2. Why is performance testing necessary?

Ans:

Performance testing is necessary to ensure that applications function efficiently under various conditions. It helps identify performance issues before the system goes live, ensures that the application can handle peak loads, and prevents system crashes and downtimes. Performance testing improves user satisfaction by ensuring fast and reliable applications. It also helps validate the system’s scalability. Overall, it supports business continuity and reliability.

3. What are the critical objectives of performance testing?

Ans:

- Performance testing’s critical objectives include evaluating system performance under load, identifying performance bottlenecks, and optimizing resource usage.

- The testing ensures the system meets specified performance criteria. It validates the scalability and reliability of the application.

- Performance testing assesses the response time and throughput. It helps in understanding the system’s behavior under stress. Ultimately, it ensures a smooth user experience.

4. Differentiate between performance testing, load testing, and stress testing.

Ans:

- Performance testing evaluates the overall performance of a system under various conditions. Load testing focuses on assessing how the system behaves under expected peak load conditions.

- Stress testing, on the other hand, pushes the system beyond its limits to see how it handles extreme conditions.

- Performance testing encompasses both load and stress testing. Load testing aims to ensure the system can handle expected traffic.

- Stress testing identifies the system’s breaking point. Each serves a distinct purpose in assessing system performance.

5. What is scalability testing?

Ans:

Scalability testing evaluates a system’s ability to handle increased load by adding resources. It ensures the application can scale up or down based on demand. This testing helps understand the system’s capacity limits. Scalability testing involves adding more users or data to assess performance. The goal is to ensure the system maintains performance standards. It helps in planning for future growth and resource allocation. Effective scalability testing ensures business applications can grow with demand.

6. Explain the concept of throughput in performance testing.

Ans:

Throughput in performance testing refers to the number of transactions a system can process within a given time frame. It measures the system’s capacity to handle workload efficiently. High throughput indicates a well-performing system. A common way to measure throughput is in transactions per second (TPS) or requests per second (RPS). It helps assess the system’s efficiency and performance under load. Throughput is critical for understanding the system’s handling of concurrent users. It directly impacts user experience and system reliability.
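
As a rough illustration of the TPS calculation described above, the sketch below divides a count of completed transactions by the length of the measurement window. The function name and the sample figures are hypothetical and are not taken from any particular tool.

```python
def throughput_per_second(completed_transactions: int, duration_seconds: float) -> float:
    """Return transactions per second (TPS) for one measurement window."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return completed_transactions / duration_seconds


# Hypothetical run: 1,200 transactions completed in a 60-second window.
print(throughput_per_second(1200, 60.0))  # 20.0
```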

7. What is latency in the context of performance testing?

Ans:

- Latency in performance testing is the time taken for a data packet to travel from the source to the destination. It measures the delay before the transfer of data begins following an instruction.

- Latency impacts a system’s responsiveness. High latency indicates a delay in processing requests, affecting user experience.

- It is measured in milliseconds (ms). Latency is a critical parameter in network performance testing.

- Reducing latency improves the overall performance of the application.

8. Define response time and how it is measured.

Ans:

- Response time is the total time taken from sending a request to receiving a response. It includes the time the system takes to process the request and generate a response.

- Response time is an important measure of user experience and is reported in milliseconds (ms) or seconds (s).

- A lower response time indicates a faster system. Response time can be measured using performance testing tools. It helps identify areas for performance improvement.

9. What is a performance bottleneck?

Ans:

A performance bottleneck is a point in the system where the performance is significantly hindered. It occurs when a component or resource limits the overall system performance. Bottlenecks can be caused by hardware, software, or network issues. Identifying bottlenecks is crucial for optimizing system performance. Bottlenecks lead to slow response times and poor user experience. Performance testing helps detect and resolve bottlenecks.

10. What is the difference between client-side and server-side performance testing?

Ans:

| Aspect | Client-Side Performance Testing | Server-Side Performance Testing |
|---|---|---|
| Focus | Tests the performance of the client-side components, such as web browsers, mobile apps, or desktop applications. | Tests the performance of server-side components, including application servers, databases, APIs, and backend services. |
| Key Metrics | Response time, rendering speed, memory usage on client devices. | Response time, throughput, CPU utilization, database query performance. |
| Tools Used | Browser-based tools like Chrome DevTools, YSlow, WebPageTest. | Performance testing tools like JMeter, LoadRunner, Apache Bench. |
| Testing Environment | Typically conducted on various client devices with different configurations (browsers, operating systems). | Conducted on dedicated servers or cloud environments to simulate user interactions. |

11. What is the purpose of performance testing in software development?

Ans:

- Performance testing is done to make sure that a software application performs well under expected workload conditions.

- It involves evaluating the system’s responsiveness, speed, scalability, and stability. Performance testing helps identify performance bottlenecks and areas that need improvement.

- It provides insights into how the system behaves under various scenarios, ensuring reliability.

- This type of testing ensures that the application meets performance criteria and user expectations. Ultimately, it helps improve user satisfaction by providing a smooth and efficient user experience.

12. Explain the concept of peak load in performance testing.

Ans:

- Peak load in performance testing refers to the maximum load, typically the highest number of concurrent users, that the system must handle at a specific point in time.

- It is the highest level of demand the application is expected to experience. Testing for peak load helps determine if the system can sustain high usage without performance degradation.

- This ensures that the application remains functional and responsive during traffic spikes.

- Identifying the peak load capacity helps in optimizing system resources and planning for scalability.

13. What is the difference between synchronous and asynchronous performance testing?

Ans:

Synchronous performance testing involves executing tasks sequentially, with each task waiting for the previous one to complete before starting. It is easier to manage and monitor but may not accurately reflect real-world scenarios. Asynchronous performance testing, on the other hand, executes tasks concurrently without waiting for each to finish. This method better simulates real-world environments by mimicking simultaneous user interactions.

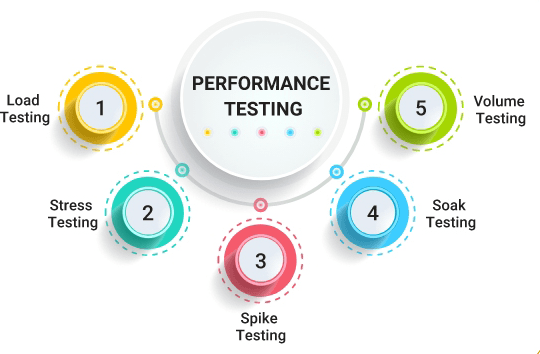
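
The difference between the two styles can be sketched with Python's `asyncio`. In this minimal example, `simulated_request` is a hypothetical stand-in for a real request (it only sleeps), and the delays are made-up values chosen to make the timing gap visible.

```python
import asyncio
import time
from typing import List


async def simulated_request(delay_s: float) -> float:
    """Stand-in for a real request; it only waits for delay_s seconds."""
    await asyncio.sleep(delay_s)
    return delay_s


async def run_sequential(delays: List[float]) -> List[float]:
    # Synchronous style: each request waits for the previous one to finish.
    return [await simulated_request(d) for d in delays]


async def run_concurrent(delays: List[float]) -> List[float]:
    # Asynchronous style: all requests are in flight at the same time.
    return list(await asyncio.gather(*(simulated_request(d) for d in delays)))


def timed(scenario, delays: List[float]) -> float:
    """Run a scenario to completion and return its wall-clock duration in seconds."""
    start = time.perf_counter()
    asyncio.run(scenario(delays))
    return time.perf_counter() - start


if __name__ == "__main__":
    delays = [0.05] * 4
    print(f"sequential: {timed(run_sequential, delays):.2f}s")
    print(f"concurrent: {timed(run_concurrent, delays):.2f}s")
```

The sequential run takes roughly the sum of the delays, while the concurrent run takes roughly the longest single delay.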

14. What does load testing entail?

Ans:

Load testing is a type of performance testing that evaluates how a system performs under a specific expected load. It involves simulating multiple users accessing the application simultaneously to assess its behavior. The goal is to identify performance bottlenecks, response times, and system capacity. Load testing helps ensure that the application can handle the anticipated user load efficiently. It provides insights into how the system scales and performs during normal and peak conditions.

15. Explain stress testing with an example.

Ans:

- Stress testing is a type of performance testing that evaluates how a system behaves under extreme conditions beyond its expected operational capacity.

- For example, an e-commerce website might undergo stress testing by simulating a sudden surge in traffic during a flash sale.

- The test helps identify the system’s breaking point and its ability to recover from failures.

- Stress testing reveals weaknesses such as memory leaks, slow response times, and potential crash points. It ensures that the system can handle unexpected spikes in load.

16. What is soak testing (endurance testing)?

Ans:

- Also known as endurance testing, soak testing assesses how a system performs under a sustained load over an extended period.

- It aims to identify issues such as memory leaks, resource depletion, and performance degradation over time.

- For example, an application might be tested continuously for 24 hours to observe its stability.

- Soak testing verifies the system’s ability to sustain performance levels during prolonged usage. It helps in identifying potential long-term issues that might not surface in shorter tests.

17. What is spike testing?

Ans:

Spike testing is a type of performance testing that evaluates how a system handles sudden, extreme increases in load. It involves simulating rapid spikes in user activity to observe the system’s response, such as testing how a website handles a sudden influx of visitors during a major event. Spike testing helps identify potential performance issues and the system’s ability to recover quickly. It helps verify that the application stays stable and responsive under sudden load changes.

18. Define volume testing.

Ans:

Volume testing is a type of performance testing that evaluates how a system handles a large volume of data. It involves testing the system’s capacity to process and manage substantial amounts of data within a specified time frame. For example, a database application can be loaded with a large dataset to observe how its performance changes. Volume testing helps identify performance bottlenecks, database issues, and potential data handling problems. It ensures that the system can efficiently manage high volumes of data without degradation.

19. What is configuration testing?

Ans:

- Configuration testing evaluates how a system performs under different hardware, software, and network configurations.

- It involves testing the application in various environments to ensure compatibility and performance.

- For example, an application may be tested on different operating systems, browsers, and network conditions.

- Configuration testing helps identify compatibility issues and performance variations across different setups.

20. Explain the purpose of isolation testing.

Ans:

- Isolation testing involves testing individual components or modules of a system separately to identify issues within specific areas.

- The purpose is to isolate and identify defects in individual components without interference from other parts of the system.

- For example, a single microservice can be tested independently in a microservices architecture.

- Isolation testing helps pinpoint the source of defects and understand component behavior. It ensures that each part of the system functions correctly before integrating it.

21. What is the difference between performance tuning and performance testing?

Ans:

Performance testing involves evaluating an application’s current performance through various tests to identify bottlenecks and areas for improvement. It aims to measure specific metrics like response time and throughput under different conditions. Performance tuning, on the other hand, focuses on optimizing based on the findings of performance testing. It involves adjusting configurations, code optimizations, and infrastructure changes to enhance overall system performance, aiming for better efficiency and responsiveness.

22. How does performance testing contribute to software scalability?

Ans:

Performance testing assesses how an application handles increasing workloads, helping to identify scalability issues early in the development lifecycle. By simulating multiple users and varying loads, performance testing measures metrics such as response time and throughput under different conditions. This information allows developers to optimize the application’s architecture, database schema, and codebase for scalability, ensuring it can effectively handle growing user bases and workload demands.

23. What are the key metrics used to measure performance testing results?

Ans:

Key metrics in performance testing include response time, which measures the time taken for the system to respond to a user request, and throughput, which measures the rate of transactions processed per unit of time. Other metrics include error rates, which indicate the frequency of failed transactions or errors encountered, and resource utilization metrics like CPU usage and memory usage.

24. Explain the term “ramp-up” in the context of load testing.

Ans:

- Ramp-up refers to the gradual increase in the number of virtual users or concurrent connections during a load test.

- It allows testers to observe how the system performs as the load increases incrementally, simulating real-world scenarios where user traffic grows gradually over time.

- This approach helps identify performance thresholds, scalability limits, and potential bottlenecks that may occur under increasing loads.

- Ramp-up also ensures that the system’s performance is tested under conditions that mimic actual usage patterns.
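
A linear ramp-up can be sketched as a schedule of (elapsed seconds, active users) steps. The function name, the step count, and the sample numbers below are illustrative assumptions; real tools expose ramp-up through their own configuration.

```python
from typing import List, Tuple


def ramp_up_schedule(target_users: int, ramp_up_seconds: int, steps: int) -> List[Tuple[int, int]]:
    """Return (elapsed_seconds, active_users) pairs for a linear ramp-up."""
    if steps <= 0:
        raise ValueError("steps must be positive")
    schedule = []
    for step in range(1, steps + 1):
        elapsed = ramp_up_seconds * step // steps
        users = target_users * step // steps
        schedule.append((elapsed, users))
    return schedule


# Hypothetical test: reach 100 virtual users over 60 seconds in 4 increments.
print(ramp_up_schedule(100, 60, 4))  # [(15, 25), (30, 50), (45, 75), (60, 100)]
```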

25. What is the ideal method to determine the number of virtual users required for a load test?

Ans:

The ideal number of virtual users for a load test depends on factors such as the application’s expected peak usage, the system’s capacity, and performance goals. Testers typically start with a baseline number of users and gradually increase them (ramp-up) until performance metrics stabilize or degrade. Analyzing metrics like response time, throughput, and error rates during each ramp-up phase helps determine the maximum number of virtual users the system can handle without compromising performance or stability.
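
One way to read the results of such a stepped test is to take the largest load step at which the metrics still meet their targets. The sketch below assumes each step is summarized as (users, average response time in ms, error rate); the thresholds and sample data are hypothetical.

```python
from typing import List, Optional, Tuple

StepResult = Tuple[int, float, float]  # (users, avg_response_ms, error_rate)


def max_users_within_targets(
    results: List[StepResult], max_response_ms: float, max_error_rate: float
) -> Optional[int]:
    """Return the highest user count reached before any target is missed."""
    best = None
    for users, response_ms, error_rate in sorted(results):
        if response_ms <= max_response_ms and error_rate <= max_error_rate:
            best = users
        else:
            break  # performance has degraded; later steps are not counted
    return best


# Hypothetical ramp-up results.
steps = [(50, 180.0, 0.0), (100, 220.0, 0.001), (200, 480.0, 0.004), (400, 1500.0, 0.06)]
print(max_users_within_targets(steps, max_response_ms=500.0, max_error_rate=0.01))  # 200
```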

26. What are the advantages of using a performance testing tool over manual testing?

Ans:

Performance testing tools automate the process of simulating user interactions and generating load on the system, which improves efficiency and accuracy compared to manual testing. These tools provide precise metrics and detailed reports on system performance under different conditions, enabling quicker identification of bottlenecks and issues. Automation also allows for repeatable tests, facilitating regression testing and ensuring consistent performance evaluations across various environments.

27. Describe the role of APM (Application Performance Monitoring) tools in performance testing.

Ans:

- APM tools monitor and analyze the performance of applications and infrastructure components in real time during performance testing.

- They collect and display metrics related to response times, error rates, CPU usage, memory usage, and more, providing deep insights into application performance.

- APM tools help identify performance bottlenecks, pinpoint root causes of issues, and correlate performance metrics with code changes or infrastructure modifications.

28. What techniques are used to simulate real-world network conditions in performance testing?

Ans:

- Simulating real-world network conditions involves introducing factors like latency, bandwidth constraints, packet loss, and network jitter into performance testing scenarios.

- Performance testing tools can simulate these conditions by configuring network emulation settings, adjusting transmission rates, and introducing delays in network communications between clients and servers.

- By replicating typical network conditions, testers can assess how the application behaves in various network environments and ensure it meets performance expectations in the field.

29. What is the difference between horizontal and vertical scaling?

Ans:

Horizontal scaling involves increasing the number of instances or nodes in a distributed system to handle growing workloads, distributing the load across multiple machines or servers. By adding more resources horizontally, it aims to improve performance and availability. Vertical scaling, on the other hand, involves increasing the capacity of individual resources, such as growing a single server’s CPU, memory, or storage.

30. What strategies can mitigate the risks identified during performance testing?

Ans:

Mitigating risks identified during performance testing involves implementing strategies to address performance issues and ensure the application meets performance goals. This may include optimizing code for efficiency, tuning database queries, scaling infrastructure resources, caching frequently accessed data, or implementing load-balancing techniques. Continuous monitoring, using APM tools, and conducting regular performance tests help detect and mitigate risks early in the development lifecycle.

31. What is the importance of baseline testing in performance testing?

Ans:

- Baseline testing establishes a performance benchmark for an application under normal operating conditions.

- This benchmark serves as a reference point for measuring the impact of changes, such as code updates or infrastructure modifications.

- By comparing future test results to the baseline, testers can identify performance degradations or improvements.

- Baseline testing helps track performance trends over time and ensures that the application meets performance criteria before making major changes.
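
Comparing a run against the baseline can be sketched as a relative-change check. The example assumes metrics where lower is better (such as response times), and the metric names and the 10% tolerance are illustrative choices.

```python
from typing import Dict


def find_regressions(
    baseline: Dict[str, float], current: Dict[str, float], tolerance: float = 0.10
) -> Dict[str, float]:
    """Return metrics whose value grew by more than `tolerance` relative to baseline.

    Assumes lower values are better (for example, response times in ms).
    """
    regressions = {}
    for metric, base_value in baseline.items():
        new_value = current.get(metric)
        if new_value is None or base_value == 0:
            continue  # metric missing from this run, or no meaningful ratio
        change = (new_value - base_value) / base_value
        if change > tolerance:
            regressions[metric] = change
    return regressions


# Hypothetical figures: search slowed by 25%, login by only 5%.
baseline = {"login_ms": 200.0, "search_ms": 400.0}
current = {"login_ms": 210.0, "search_ms": 500.0}
print(find_regressions(baseline, current))  # {'search_ms': 0.25}
```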

32. What steps are taken to identify memory leaks during performance testing?

Ans:

- Identifying memory leaks in performance testing involves monitoring the application’s memory usage over an extended period.

- Use performance testing tools to track memory consumption while running tests that simulate typical user behavior. If memory usage continuously increases without being released, it indicates a memory leak.

- Analyzing heap dumps can help pinpoint the source of leaks. Profiling tools like JProfiler or VisualVM can provide detailed insights into memory allocations.
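
The "memory keeps growing" check can be approximated by fitting a straight line to periodic memory samples and looking at its slope. This is only a sketch: the samples are assumed to be readings in MB taken at equal intervals, and the 1 MB-per-sample threshold is an arbitrary illustrative value.

```python
from typing import Sequence


def memory_growth_per_sample(samples_mb: Sequence[float]) -> float:
    """Least-squares slope of memory usage across equally spaced samples."""
    n = len(samples_mb)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(samples_mb) / n
    numerator = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(samples_mb))
    denominator = sum((i - mean_x) ** 2 for i in range(n))
    return numerator / denominator


def looks_like_leak(samples_mb: Sequence[float], min_growth_mb: float = 1.0) -> bool:
    """Flag a steady upward trend; the threshold is an assumption to tune per system."""
    return memory_growth_per_sample(samples_mb) >= min_growth_mb


# Hypothetical readings from two soak runs.
print(looks_like_leak([100, 105, 110, 115, 120]))  # True
print(looks_like_leak([100, 102, 99, 101, 100]))   # False
```

A positive trend is only a hint. Heap dumps or a profiler are still needed to confirm the leak and find its source.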

33. Explain the concept of think time in performance testing scenarios.

Ans:

Think time refers to the simulated pause or delay that mimics a real user’s interaction with an application during a performance test. It represents the time taken by a user to read, analyze, and respond to the application’s output before proceeding to the next action. Including think time in performance scripts ensures a more realistic and accurate simulation of user behavior. It helps distribute the load more naturally across the system.
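
In a hand-written script, think time is often modeled as a randomized pause after each action. The sketch below assumes a 1 to 3 second range and a hypothetical `run_user_session` helper. The `sleep` parameter is injectable so the pauses can be inspected without actually waiting.

```python
import random
import time
from typing import Callable, Iterable


def run_user_session(
    actions: Iterable[Callable[[], None]],
    rng: random.Random,
    min_think_s: float = 1.0,
    max_think_s: float = 3.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Run each action, pausing for a random think time after it."""
    for action in actions:
        action()
        sleep(rng.uniform(min_think_s, max_think_s))


# Demo: print the pauses instead of sleeping through them.
run_user_session(
    [lambda: print("open page"), lambda: print("submit form")],
    random.Random(42),
    sleep=lambda seconds: print(f"think for {seconds:.2f}s"),
)
```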

34. What are the common challenges faced during performance testing of web applications?

Ans:

Common challenges in performance testing of web applications include handling dynamic content and session management. Managing varying network conditions to simulate real user environments can be complex. Identifying and replicating realistic user scenarios is often tricky. Ensuring data consistency and avoiding cache effects can pose challenges. Scaling the test environment to match production conditions requires significant resources.

35. Describe the process of analyzing performance test results.

Ans:

- Analyzing performance test results starts with collecting and aggregating data from various monitoring tools.

- Examine key performance metrics such as response time, throughput, and error rates. Correlate metrics with application and server logs to identify performance bottlenecks.

- Compare test results against the established baseline or performance benchmarks. Look for patterns or anomalies that indicate performance issues.

36. What is the best way to simulate concurrent users in a performance-testing scenario?

Ans:

- Simulating concurrent users involves creating virtual users that perform actions simultaneously in the testing environment.

- Use performance testing tools like LoadRunner, JMeter, or Gatling to script user interactions. Configure the test to launch multiple virtual users, each executing the same or different actions concurrently.

- Adjust the ramp-up time to control how quickly virtual users are introduced.

- Ensure the test environment can handle the simulated load to avoid skewed results. Monitor system performance and resource utilization during the test.
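
Dedicated tools handle this at scale, but the basic idea can be sketched with a thread pool in which each thread plays one virtual user. Here `virtual_user` is a hypothetical stand-in that sleeps instead of sending a real request, and start times are staggered to imitate ramp-up.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def virtual_user(user_id: int, start_delay_s: float) -> Tuple[int, float]:
    """Wait for this user's staggered start, then time one simulated request."""
    time.sleep(start_delay_s)
    start = time.perf_counter()
    time.sleep(0.01)  # stand-in for a real request to the system under test
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return user_id, elapsed_ms


def run_load(num_users: int, ramp_up_s: float) -> List[Tuple[int, float]]:
    """Launch num_users virtual users, spreading their starts over ramp_up_s."""
    spacing = ramp_up_s / num_users
    with ThreadPoolExecutor(max_workers=num_users) as pool:
        futures = [pool.submit(virtual_user, i, i * spacing) for i in range(num_users)]
        return [future.result() for future in futures]


if __name__ == "__main__":
    results = run_load(num_users=5, ramp_up_s=0.1)
    print(f"{len(results)} virtual users completed")
```

Threads are adequate for a small sketch like this. Larger loads usually call for a purpose-built tool or distributed load generators.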

37. What is the role of caching in improving application performance?

Ans:

Caching improves application performance by temporarily storing frequently accessed data in a faster storage medium. This reduces the need to fetch data from slower backend systems repeatedly. Implementing caching strategies like browser caching, server-side caching, and content delivery network (CDN) caching can significantly enhance performance. Caching reduces server load and speeds up user response times. It helps handle higher loads and improve scalability.
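
A minimal server-side illustration uses Python's `functools.lru_cache` to keep recent results in memory. The `get_product` function and its return value are hypothetical stand-ins for a slow backend lookup.

```python
from functools import lru_cache


@lru_cache(maxsize=128)
def get_product(product_id: int) -> str:
    """Stand-in for a slow backend lookup; results are cached per product_id."""
    return f"product-{product_id}"


get_product(1)  # miss: computed and stored
get_product(1)  # hit: served from the cache
get_product(2)  # miss
print(get_product.cache_info())
```

`cache_info()` reports hits and misses, which gives a quick way to check whether a cache is actually absorbing repeated requests.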

38. What are the methods to measure server-side resource utilization during performance testing?

Ans:

Measuring server-side resource utilization involves monitoring CPU, memory, disk I/O, and network usage on the server hosting the application. Use tools like PerfMon, Nagios, or built-in monitoring solutions in cloud environments to track these metrics. Set up monitoring agents to collect real-time data during performance tests. Analyze resource usage patterns to identify bottlenecks or resource constraints, and correlate resource utilization with application performance metrics like response time and throughput.

39. What is the impact of database indexing on performance testing results?

Ans:

- Database indexing can significantly impact performance testing results by improving query performance. Properly indexed databases reduce the time required to retrieve data, leading to faster response times.

- Indexes help in handling large datasets efficiently, reducing the load on the database server.

- During performance testing, evaluate the effectiveness of indexes by comparing query execution times with and without indexes.
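
The with-and-without comparison can be tried on a small scale with SQLite, which ships with Python. The table, column, and index names below are made up for the example. It inspects the query plan rather than timing the query, so the result does not depend on machine speed.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return SQLite's plan description for a query (the 'detail' column)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, float(i)) for i in range(10000)],
)

sql = "SELECT * FROM orders WHERE customer_id = ?"
plan_before = query_plan(conn, sql, (42,))  # expected to be a full table scan

conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql, (42,))  # expected to search via the new index

print("before:", plan_before)
print("after: ", plan_after)
```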

40. Describe the steps involved in setting up a performance testing environment.

Ans:

- Setting up a performance testing environment involves several key steps. First, define the performance testing objectives and identify the key metrics to be measured.

- Next, replicate the production environment as closely as possible, including hardware, software, network configurations, and data.

- Install and configure performance testing tools such as LoadRunner, JMeter, or Gatling.

- Develop test scripts that simulate realistic user interactions and scenarios.

41. What approaches are used to handle dynamic data in performance testing scripts?

Ans:

To handle dynamic data in performance testing scripts, use parameterization to replace hard-coded values with variables. This allows the script to simulate realistic user inputs and actions. Tools like LoadRunner or JMeter support parameterization by enabling data to be read from external sources such as CSV files. Implement correlation to capture and reuse dynamic values like session IDs or tokens. Regular expressions can help extract dynamic data from responses.
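
Outside a dedicated tool, the same two ideas can be sketched in plain Python: test data is read from CSV (parameterization) and a dynamic token is pulled out of a response with a regular expression (correlation). The CSV contents, the `csrf_token` field name, and the HTML snippet are all invented for illustration.

```python
import csv
import io
import re
from typing import Dict, List, Optional

# Hypothetical test data; in practice this would live in an external CSV file.
USERS_CSV = "username,password\nalice,secret1\nbob,secret2\n"

# Assumed response format: a hidden form field carrying the dynamic token.
TOKEN_PATTERN = re.compile(r'name="csrf_token" value="([^"]+)"')


def load_test_data(csv_text: str) -> List[Dict[str, str]]:
    """Parameterization: one dict of input values per virtual user or iteration."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def extract_token(response_body: str) -> Optional[str]:
    """Correlation: capture a dynamic value so the next request can reuse it."""
    match = TOKEN_PATTERN.search(response_body)
    return match.group(1) if match else None


rows = load_test_data(USERS_CSV)
token = extract_token('<input type="hidden" name="csrf_token" value="abc123">')
print(rows[0]["username"], token)  # alice abc123
```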

42. What is the difference between stress testing and endurance testing?

Ans:

Stress testing entails pushing the system beyond its normal operational capacity to identify breaking points and bottlenecks. It helps determine the system’s robustness under extreme conditions. Endurance testing, also known as soak testing, checks the system’s performance over an extended period to detect issues like memory leaks. While stress testing focuses on short bursts of intense load, endurance testing assesses long-term stability.

43. What techniques can be used to simulate peak load conditions in performance testing?

Ans:

- To simulate peak load conditions, identify the highest expected user load and create test scenarios to match. Then, use performance testing tools to generate the required number of virtual users.

- Gradually increase the load to the peak level to observe system behavior. Track essential performance indicators, including throughput, response time, and resource utilization.

- Ensure the test environment mirrors the production setup for accurate results. Make the simulation realistic by using real-world user behavior patterns.

44. What is the role of SLAs (Service Level Agreements) in performance testing?

Ans:

- SLAs define the expected performance standards for a system, including metrics like response time, throughput, and uptime.

- They serve as benchmarks for performance testing, providing clear criteria for success.

- During performance testing, results are compared against SLA requirements to ensure compliance.

- SLAs help prioritize performance testing efforts based on business-critical metrics.
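
Checking results against an SLA can be reduced to a handful of threshold comparisons. The dictionary keys and the threshold values in this sketch are hypothetical; a real SLA defines its own metrics and limits.

```python
from typing import Dict, List


def sla_violations(measured: Dict[str, float], sla: Dict[str, float]) -> List[str]:
    """Return the names of SLA terms that the measured results fail to meet."""
    violations = []
    if measured["avg_response_ms"] > sla["max_response_ms"]:
        violations.append("response time")
    if measured["throughput_tps"] < sla["min_throughput_tps"]:
        violations.append("throughput")
    if measured["uptime_pct"] < sla["min_uptime_pct"]:
        violations.append("uptime")
    return violations


# Hypothetical SLA and test results.
sla = {"max_response_ms": 500.0, "min_throughput_tps": 100.0, "min_uptime_pct": 99.9}
measured = {"avg_response_ms": 650.0, "throughput_tps": 120.0, "uptime_pct": 99.95}
print(sla_violations(measured, sla))  # ['response time']
```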

45. What is the process for calculating transaction response time in performance testing?

Ans:

Transaction response time is calculated by measuring the time taken to complete a transaction from the user’s perspective. Start the timer when the user initiates the transaction and stop it when the transaction is completed. Use performance testing tools to automate this process and collect precise timings. Average the response times over multiple iterations to get a reliable measure. Ensure the test environment closely resembles the production environment for accuracy.
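
The start-timer, stop-timer, and average steps can be sketched as follows. The `transaction` callable is a hypothetical stand-in for whatever the user-level transaction is, and the clock is passed in as a parameter so that a different timer can be substituted.

```python
import time
from statistics import mean
from typing import Callable


def average_transaction_time_ms(
    transaction: Callable[[], None],
    iterations: int,
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Time `transaction` over several iterations and return the mean in ms."""
    if iterations <= 0:
        raise ValueError("iterations must be positive")
    timings_ms = []
    for _ in range(iterations):
        start = clock()        # user initiates the transaction
        transaction()
        end = clock()          # transaction completes
        timings_ms.append((end - start) * 1000.0)
    return mean(timings_ms)


if __name__ == "__main__":
    avg = average_transaction_time_ms(lambda: time.sleep(0.01), iterations=5)
    print(f"average transaction time: {avg:.1f} ms")
```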

46. What is the relationship between performance testing and CI/CD pipelines?

Ans:

Integrating performance tests into a CI/CD pipeline ensures that performance problems are found and fixed early in the development cycle. Because the tests run as part of the pipeline, performance is assessed continuously with every code change. Automated performance tests can be triggered by code commits, builds, or deployments. This approach helps maintain performance standards throughout development.

47. What measures ensure that performance testing addresses security vulnerabilities?

Ans:

- To cover security vulnerabilities in performance testing, incorporate security testing practices into the performance test plan.

- Identify and test for common security issues such as SQL injection, cross-site scripting (XSS), and denial-of-service (DoS) attacks.

- Use tools that support both performance and security testing or integrate specialized security testing tools.

- Monitor the system’s behavior under load for security breaches or vulnerabilities. Validate that security controls remain effective under peak load conditions.

48. Explain the importance of baseline performance metrics in continuous testing.

Ans:

- Baseline performance metrics provide a reference point for evaluating the performance of new builds or changes. They represent the standard performance levels expected from the application.

- Continuous testing uses these baselines to detect performance regressions and improvements.

- Comparing current test results against baselines helps identify deviations early.

- Baselines help set realistic performance goals and expectations and provide insights into the impact of code changes on performance.

49. What are the benefits of conducting performance testing in a cloud environment?

Ans:

Conducting performance testing in a cloud environment offers scalability, allowing tests to simulate large user loads. It provides flexibility to create realistic test environments without investing in physical infrastructure. Cloud-based testing tools provide on-demand resources, reducing costs and setup time. They enable testing from multiple geographic locations to assess global performance. Cloud environments support continuous integration and deployment workflows.

50. What approach is taken for performance testing of mobile applications?

Ans:

Approach performance testing for mobile applications by considering various network conditions, device types, and usage patterns. Use emulators and real devices to test application performance under different scenarios. Simulate varying network speeds, latency, and packet loss to assess performance under real-world conditions. Monitor key metrics like response time, battery usage, and memory consumption. Test the application under different user loads to evaluate scalability.

51. What are the critical considerations for performance testing of microservices architecture?

Ans:

- When performance testing microservices, consider the interdependencies between services and their communication patterns.

- Focus on testing individual microservices and the entire ecosystem. Ensure proper orchestration and monitoring to identify bottlenecks.

- To evaluate resilience, simulate realistic load and failure scenarios. Pay attention to the impact of network latency and data serialization/deserialization.

52. How does performance testing contribute to the overall user experience of an application?

Ans:

- Performance testing ensures that applications respond quickly and efficiently under various conditions, enhancing user satisfaction.

- Identifying and addressing performance bottlenecks minimizes delays and ensures smooth interactions.

- It helps maintain application reliability and stability, preventing crashes or slowdowns.

- Optimized performance leads to better user retention and engagement. Performance testing also helps meet service level agreements (SLAs) and user expectations.

53. What strategies can be employed to optimize the performance of a slow-performing application?

Ans:

- Start by identifying performance bottlenecks using profiling tools.

- Optimize database queries and ensure proper indexing.

- Implement caching strategies to reduce redundant computations and data retrievals.

- Minimize resource contention and improve concurrency handling.

- Optimize code and eliminate inefficient algorithms.

54. What methods are used to simulate different user behaviors in performance testing scripts?

Ans:

Simulating different user behaviors involves creating realistic test scenarios that mimic actual user interactions. Use a mix of user types, actions, and workflows to represent various usage patterns. Incorporate think times to simulate user delays between actions. Randomize data inputs to avoid caching and ensure diverse interactions. Utilize performance testing tools to script and automate these scenarios. Adjust load patterns to reflect peak, off-peak, and varying usage conditions.

55. What are the limitations of using synthetic transactions in performance testing?

Ans:

- Synthetic transactions, while helpful, have limitations. They may only partially replicate actual user behavior, leading to less accurate results.

- Synthetic tests often lack the variability and randomness of actual user interactions. They might miss edge cases or uncommon scenarios encountered by real users.

- Synthetic transactions can generate false positives or negatives, requiring careful interpretation.

- They also may not account for real-world network conditions and latencies. Relying solely on synthetic transactions can give an incomplete picture of performance.

56. What process is followed to validate API performance in performance testing scenarios?

Ans:

- To validate API performance, define clear performance metrics and benchmarks.

- Create and execute API tests using tools like Postman, JMeter, or LoadRunner. Simulate various loads and monitor response times, throughput, and error rates.

- Validate the API’s scalability and ability to handle concurrent requests. Check for proper handling of edge cases and large data sets.

- Monitor server resource usage during the tests to identify bottlenecks. Compare results against expected performance criteria and optimize as needed.
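
The comparison step can be sketched as follows. This is a minimal example, assuming the load tool exports one `(latency_ms, status_code)` pair per request; the sample values are made up for illustration and the nearest-rank percentile is one of several common definitions.

```python
import math

def percentile(values: list, pct: float):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize(samples: list, duration_s: float) -> dict:
    """samples: (latency_ms, status_code) tuples collected during one run."""
    latencies = [latency for latency, _ in samples]
    errors = sum(1 for _, status in samples if status >= 400)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "throughput_rps": len(samples) / duration_s,
        "error_rate": errors / len(samples),
    }

# Illustrative data, not real measurements.
samples = [(120, 200), (95, 200), (310, 200), (150, 500), (101, 200)]
report = summarize(samples, duration_s=2.0)
```

The resulting dictionary can then be checked against the benchmarks defined in the first step.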

57. What are the best practices for analyzing and reporting performance testing results?

Ans:

When analyzing and reporting performance testing results, start by ensuring data accuracy and consistency. Use visual aids such as graphs and charts to present data clearly. Highlight critical metrics such as response times, throughput, and error rates. Compare results against benchmarks and previous test runs to identify trends. Provide actionable insights and recommendations for improvement. Include detailed logs and supporting data for transparency.

58. What methods can simulate network latency and bandwidth constraints in performance testing?

Ans:

Simulating network latency and bandwidth constraints involves using network simulation tools or configuring test environments. Tools like Network Link Conditioner, WANem, or Charles Proxy can introduce artificial delays and bandwidth limits. These tools should be configured to mimic real-world network conditions, including packet loss and jitter. The settings must be incorporated into performance testing scenarios. Tests should cover various network conditions, from optimal to poor, to analyze how the application performs under these conditions.
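
Before configuring such a tool, it can help to estimate how much a given network profile should slow a request, so that measured results can be sanity-checked. The sketch below uses a deliberately simplified model (one round trip plus transmission time) and ignores packet loss, jitter, and protocol effects such as TCP slow start. The profile names and numbers are assumptions chosen for illustration.

```python
# Hypothetical network profiles: one-way latency in ms, bandwidth in Mbit/s.
PROFILES = {
    "wifi": {"latency_ms": 10, "bandwidth_mbps": 50.0},
    "4g": {"latency_ms": 50, "bandwidth_mbps": 10.0},
    "3g": {"latency_ms": 150, "bandwidth_mbps": 1.0},
}

def estimated_transfer_ms(payload_bytes: int, profile: str) -> float:
    """Rough time to fetch a payload: one round trip plus transmission time."""
    p = PROFILES[profile]
    round_trip_ms = 2 * p["latency_ms"]
    transmit_ms = payload_bytes * 8 / (p["bandwidth_mbps"] * 1_000_000) * 1000
    return round_trip_ms + transmit_ms

# A 125 kB response (1,000,000 bits) under each profile.
estimates = {name: estimated_transfer_ms(125_000, name) for name in PROFILES}
```

If a test run under the "3g" profile finishes far faster than the estimate, the throttling is probably not being applied.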

59. What is the role of profiling tools in performance testing and optimization?

Ans:

- Profiling tools play a crucial role in performance testing by identifying performance bottlenecks and resource usage.

- They provide detailed insights into CPU, memory, and I/O utilization. Profiling helps pinpoint inefficient code, memory leaks, and concurrency issues.

- It enables developers to optimize algorithms and resource allocation. Profiling tools also assist in understanding application behavior under different loads.

- They support continuous performance monitoring and tuning. Using profiling data, teams can make informed decisions to improve application performance.

60. What steps are involved in conducting performance testing for distributed systems or microservices?

Ans:

- Performance testing for distributed systems or microservices involves several key steps. First, define performance goals and benchmarks for each service.

- Use tools like JMeter, Gatling, or Locust to create load tests that simulate real-world usage.

- Monitor inter-service communication and identify bottlenecks using distributed tracing tools.

- Test each microservice individually and in combination to assess overall performance.

61. What is the difference between stress testing and spike testing?

Ans:

Stress testing entails progressively adding more load to a system until it reaches its breaking point to understand its limits. This helps identify the maximum capacity and points of failure. Spike testing, on the other hand, involves sudden and extreme increases in load to observe how the system handles abrupt changes. This helps evaluate the system’s stability and recovery capabilities. While stress testing is about finding the maximum sustainable load, spike testing tests the system’s response to unexpected traffic surges.

62. What is the importance of correlation in performance testing?

Ans:

Correlation in performance testing involves capturing dynamic values from server responses and using them in subsequent requests. This is essential for accurately simulating actual user behavior and maintaining session continuity. Without correlation, tests may fail because of incorrect or missing dynamic data, producing errors that reflect script problems rather than real application defects. Correlation helps ensure the validity of the test scripts, making them more realistic and reliable.
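
A minimal Python sketch of the idea follows. The response body, the `csrf_token` field name, and the `/orders` path are hypothetical; load testing tools provide their own extractors for this purpose.

```python
import re

# Hypothetical login response containing a server-generated token.
login_response = '<input type="hidden" name="csrf_token" value="a1b2c3d4">'

def extract_token(body: str) -> str:
    """Capture the dynamic value so later requests can replay it."""
    match = re.search(r'name="csrf_token" value="([^"]+)"', body)
    if match is None:
        raise ValueError("csrf_token not found; the extraction rule needs updating")
    return match.group(1)

def build_next_request(token: str) -> dict:
    """Inject the captured value instead of one hardcoded at recording time."""
    return {"method": "POST", "path": "/orders",
            "data": {"csrf_token": token, "item": "42"}}

request = build_next_request(extract_token(login_response))
```

Raising an error when the token is missing makes a broken extraction rule visible immediately instead of surfacing later as unexplained request failures.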

63. Describe the concept of think time and its impact on performance testing results.

Ans:

- Think time refers to the simulated delay between user actions in a performance test script.

- It mimics the actual time users take to interact with an application, such as reading a page or filling out a form.

- Including think time makes the test scenarios more realistic, reflecting actual user behavior.

- It impacts the test results by affecting transaction response times and overall load patterns.

- Properly configured think time helps avoid unrealistic load conditions and provides more accurate performance metrics.
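
The effect of think time on generated load can be estimated with the interactive response time law for a closed workload, X = N / (R + Z), where N is the number of virtual users, R the response time, and Z the think time. The sketch below applies it with made-up numbers and assumes the response time stays constant, which will not hold once the system saturates.

```python
def closed_loop_throughput(users: int, response_time_s: float,
                           think_time_s: float) -> float:
    """Requests per second generated by `users` looping request -> think."""
    return users / (response_time_s + think_time_s)

# 100 virtual users, 0.5 s response time (illustrative values).
no_think = closed_loop_throughput(100, 0.5, 0.0)    # 200.0 requests/s
with_think = closed_loop_throughput(100, 0.5, 4.5)  # 20.0 requests/s
```

With these numbers, the same 100 virtual users produce ten times more load without think time, which is why scripts that omit it tend to overstate what a given user count means.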

64. What are the benefits of using a profiler in performance testing?

Ans:

- A profiler is a tool that helps identify performance bottlenecks by providing detailed insights into application execution.

- It can pinpoint inefficient code, memory leaks, and resource utilization issues. Using a profiler allows testers to understand which parts of the application are consuming the most resources.

- This information is crucial for optimizing performance and improving application efficiency.

- Profilers help in making informed decisions about code improvements and infrastructure adjustments.

65. Explain the term “baseline” in performance testing.

Ans:

A baseline in performance testing is a set of metrics collected from an initial test run, serving as a reference point for future tests. It represents the application’s standard performance under a known set of conditions. Baselines help compare the impact of changes in code, configuration, or environment on application performance. They are essential for identifying performance regressions and improvements. By establishing a baseline, testers can track performance trends over time.
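
A baseline comparison can be sketched as follows. The metric names, values, and the 10% tolerance are assumptions for illustration; the function also assumes that lower is better for every metric and that baseline values are non-zero.

```python
def find_regressions(baseline: dict, current: dict,
                     tolerance: float = 0.10) -> dict:
    """Return metrics whose value grew by more than `tolerance` over baseline.

    Assumes lower is better for each metric and baseline values are non-zero.
    """
    regressions = {}
    for name, base_value in baseline.items():
        change = (current[name] - base_value) / base_value
        if change > tolerance:
            regressions[name] = round(change, 3)
    return regressions

# Illustrative numbers only.
baseline = {"p95_ms": 400, "avg_ms": 180, "error_rate": 0.01}
current = {"p95_ms": 520, "avg_ms": 185, "error_rate": 0.01}
regressions = find_regressions(baseline, current)
```

Here only the 95th percentile is flagged, since it rose by 30% while the other metrics stayed within tolerance.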

66. What techniques are employed to perform performance testing for a web service or API?

Ans:

Performance testing for a web service/API involves creating test scripts that simulate multiple users sending requests to the API. The process starts by defining the key performance indicators (KPIs), such as response time, throughput, and error rate. Tools like JMeter, LoadRunner, or Postman can be used to create and execute these tests. The scripts should include dynamic data handling and proper error checking. Tests are then run under different load conditions to evaluate the API’s performance.

67. What are the key challenges of conducting performance testing for IoT applications?

Ans:

- Performance testing for IoT applications involves several challenges due to the complexity and scale of IoT ecosystems.

- One key challenge is simulating a large number of heterogeneous devices with varied communication protocols.

- Ensuring realistic network conditions and latency is another critical aspect.

- The dynamic nature of IoT data and interactions makes scripting and correlation complex.

68. Describe the process of analyzing performance test results for a distributed system.

Ans:

- Analyzing performance test results for a distributed system involves several steps. First, gather and consolidate performance metrics from all system components, such as servers, databases, and network devices.

- Next, compare these metrics against the defined performance goals and baselines.

- Identify any bottlenecks or points of failure, focusing on response times, throughput, and resource utilization.

- Look for patterns indicating performance degradation under load. Correlate performance issues with specific components or interactions within the system.

69. What are the considerations for conducting performance testing in a virtualized environment?

Ans:

Performance testing in a virtualized environment requires careful planning and configuration. One key consideration is ensuring resource isolation to avoid interference from other virtual machines. Properly allocate CPU, memory, and storage resources to match production conditions. Monitor the hypervisor and host system for any performance bottlenecks. Network configurations should accurately simulate real-world conditions.

70. What is the best way to automate performance testing as part of CI/CD pipelines?

Ans:

Automating performance testing in CI/CD pipelines involves integrating performance test tools with the build and deployment process. Start by creating performance test scripts using tools like JMeter, LoadRunner, or Gatling. Configure these tools to run automatically after each build or deployment. Use CI/CD tools like Jenkins, GitLab CI, or Azure DevOps to orchestrate the tests. Set thresholds for performance metrics and configure the pipeline to fail if these thresholds are not met.
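
The threshold step might look like the sketch below. The threshold values and the shape of the `results` dictionary are assumptions; in a real pipeline the results would be parsed from the load tool's report file, and the returned code would be passed to `sys.exit()` so that a non-zero value fails the stage.

```python
# Hypothetical thresholds; real values would come from the team's SLAs.
THRESHOLDS = {"p95_ms": 500, "error_rate": 0.01}

def check_thresholds(results: dict, thresholds: dict) -> list:
    """Return a readable violation message for every metric above its limit."""
    return [f"{name}: {results[name]} > {limit}"
            for name, limit in thresholds.items() if results[name] > limit]

def gate(results: dict) -> int:
    """Return the exit code the pipeline step should finish with."""
    violations = check_thresholds(results, THRESHOLDS)
    for violation in violations:
        print("FAIL", violation)
    return 1 if violations else 0

# Illustrative run: p95 latency breaches its limit, error rate does not.
exit_code = gate({"p95_ms": 620, "error_rate": 0.004})
```

CI servers such as Jenkins or GitLab CI treat a non-zero exit code from a script step as a failure, so no tool-specific plugin is needed for this basic gate.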

71. What strategies can be used to optimize database performance during load testing?

Ans:

- To optimize database performance during load testing, start by indexing frequently queried columns to speed up data retrieval.

- Avoid complex joins and subqueries to ensure efficient query design. Use database partitioning to distribute data across multiple storage areas, improving read and write performance.

- Implement caching strategies to reduce load on the database by storing frequently accessed data in memory.

- Regularly monitor and tune database parameters, such as buffer size and cache settings.
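
The indexing step can be demonstrated with a small sketch. It uses an in-memory SQLite database purely as a stand-in, with a hypothetical `orders` table; other database engines expose their own query-plan commands and the plan wording differs between them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

def query_plan(sql: str) -> str:
    """Return SQLite's textual plan for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)

QUERY = "SELECT * FROM orders WHERE customer_id = 42"
plan_before = query_plan(QUERY)  # without an index: a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(QUERY)   # with the index: a search using the index
```

Comparing the plan before and after confirms that the query actually uses the new index, which is worth checking before rerunning a load test.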

72. What methods are used to simulate realistic user behavior in performance testing scenarios?

Ans:

- Simulating realistic user behavior involves creating detailed user personas that reflect typical usage patterns.

- Use a mix of automated scripts and manual interactions to replicate real-world scenarios.

- Incorporate think times to mimic the pauses users naturally take between actions. Randomize data inputs and user actions to avoid predictable patterns.

- Include error handling and recovery in scripts to simulate actual user responses to application issues.

73. What are the advantages of using cloud-based load generators in performance testing?

Ans:

Cloud-based load generators offer scalability, allowing simulation of large numbers of users without the need for physical hardware. They provide flexibility, enabling testers to quickly adjust the load based on testing needs. These tools are cost-effective, with costs tied to the resources used. They also offer global reach, facilitating testing from various geographic locations. Additionally, cloud-based solutions often include advanced analytics and reporting features, and they integrate seamlessly with CI/CD pipelines, supporting continuous performance testing.

74. Describe the role of bottleneck analysis in performance testing.

Ans:

Bottleneck analysis identifies the points in a system where performance is constrained, hindering overall efficiency. By pinpointing these areas, testers can focus their optimization efforts where they will yield the greatest gains. This analysis helps in understanding the system’s capacity limits and the factors that cause slowdowns. It involves monitoring resource utilization, such as CPU, memory, and I/O, during load tests. Bottleneck analysis aids in making informed decisions about hardware upgrades or software optimizations.

75. What is the approach to simulating browser caching in performance testing scripts?

Ans:

- To simulate browser caching, start by capturing real user interactions with and without caching enabled.

- Modify test scripts to include headers that mimic cache-control directives. Use HAR files exported from browser developer tools to analyze and replicate caching behavior.

- Implement think times to simulate the delay between cache checks and actual content retrieval. Run tests with different cache states: cold (empty), warm (some cached), and hot (fully cached).

76. What are the different types of performance testing tools available in the market?

Ans:

- Different kinds of performance testing tools exist, including load testing tools like Apache JMeter and LoadRunner, which simulate multiple users.

- Stress testing tools, such as NeoLoad, test system stability under extreme conditions. APM tools like New Relic and AppDynamics provide detailed performance metrics and diagnostics.

- Network performance testing tools, like Wireshark and iPerf, analyze network traffic and bandwidth issues. Tools for web speed testing, like Google Lighthouse and WebPageTest, evaluate website speed and optimization.

77. What techniques are used to measure the scalability of an application during performance testing?

Ans:

Scalability is measured by gradually increasing the load on the application while monitoring its performance. Begin with baseline tests to establish a reference point for performance metrics. Incrementally add users or transactions and record response times, throughput, and resource utilization. Identify the point at which performance degrades, indicating the scalability limit. Use load-testing tools to simulate different levels of demand and analyze how well the application scales horizontally (adding more servers) or vertically (upgrading server hardware).
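
For horizontal scaling, one simple way to quantify the result is scaling efficiency: measured throughput divided by the throughput that perfectly linear scaling would give. The sketch below assumes throughput was measured at several server counts, including a single-server run; the numbers are illustrative.

```python
def scaling_efficiency(measurements: dict) -> dict:
    """measurements: server count -> measured throughput (requests/s).

    An efficiency of 1.0 means perfectly linear scaling relative to the
    single-server run, which must be present under key 1.
    """
    base = measurements[1]
    return {n: round(tput / (n * base), 2) for n, tput in measurements.items()}

# Illustrative numbers only.
measured = {1: 500, 2: 950, 4: 1700, 8: 2600}
efficiency = scaling_efficiency(measured)
```

Efficiency that drops steadily as servers are added, as in this made-up data, usually points to a shared resource such as a database or lock becoming the limit.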

78. Explain the concept of “think time” and its impact on performance test scenarios.

Ans:

“Think time” refers to the simulated delay between user actions in a performance test scenario. It mimics the real-world pauses users take while interacting with an application, such as reading content or filling out forms. Incorporating think time into scripts provides a more realistic load on the system, as it prevents continuous, unrealistic bombardment of requests. This helps in accurately measuring response times and resource utilization under typical user conditions.

79. What practices ensure the accuracy and reliability of performance testing results?

Ans:

- To ensure accuracy and reliability, start by establishing a controlled and consistent test environment. Use realistic data sets and simulate real-world user behavior with appropriate think times and randomization.

- Perform multiple test runs to account for variability and gather a statistically significant sample of results.

- Regularly calibrate and validate test scripts to ensure they accurately replicate user actions.

- Monitor and control external factors, such as network conditions and background processes, that influence test outcomes.
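
To judge whether repeated runs agree with each other, the mean and spread of a key metric can be compared across runs. The sketch below uses the coefficient of variation (standard deviation divided by mean); the run values are made up, and any cut-off for "stable enough" is a team decision rather than a standard.

```python
import statistics

def summarize_runs(run_means_ms: list) -> dict:
    """Summarize the average response time reported by several test runs."""
    mean = statistics.mean(run_means_ms)
    stdev = statistics.stdev(run_means_ms)  # sample standard deviation
    return {"mean_ms": mean, "stdev_ms": stdev, "cv": stdev / mean}

# Average response time (ms) from five runs; illustrative values.
runs = [200, 210, 190, 205, 195]
summary = summarize_runs(runs)
```

A high coefficient of variation suggests that the environment or the scripts are introducing noise that should be investigated before drawing conclusions.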

80. What are the advantages of using containerization in performance testing?

Ans:

- Containerization offers consistent and isolated test environments, reducing discrepancies between the development, testing, and production stages.

- Containers are easily spun up or down and are lightweight, providing scalability and flexibility in performance testing.

- They allow for easy replication of test environments, ensuring tests are conducted under identical conditions.

- Containers support microservices architecture, enabling performance testing of individual components in isolation or as part of an integrated system.

81. What strategies are used for conducting performance testing on mobile applications?

Ans:

- Begin by defining the key performance metrics, such as response time, throughput, and resource utilization.

- Use tools like Appium, JMeter, or LoadRunner to simulate user interactions and load.

- Create test scenarios that reflect real-world usage, including network conditions like 3G, 4G, and Wi-Fi.

- Monitor the application’s behavior on different devices and operating systems.

82. Describe the process of setting performance testing goals and objectives.

Ans:

Setting performance testing goals and objectives involves identifying the critical performance criteria that the application must meet. Begin by consulting with stakeholders to understand their expectations and business requirements. Define specific, measurable, achievable, relevant, and time-bound (SMART) goals. Establish key performance indicators (KPIs) such as response time, throughput, and resource usage.

83. What is the best approach to performance testing for real-time systems?

Ans:

- Performance testing for real-time systems involves simulating scenarios where the system processes data with strict timing constraints.

- Identify the critical real-time operations that must be tested. Use tools that support real-time simulation, such as LoadRunner or JMeter.

- Create test scenarios that reflect peak load conditions and measure latency, jitter, and response times.

- Monitor the system’s ability to meet its timing requirements under various loads. Analyze the results to identify any timing violations or performance bottlenecks.

84. What role does user feedback play in refining performance testing scenarios?

Ans:

- User feedback is crucial for refining performance testing scenarios as it provides insights into real-world usage patterns and performance expectations.

- Collect feedback through surveys, user reviews, and direct communication.

- Analyze the feedback to identify common performance issues and user pain points. Adjust test scenarios to better reflect actual user behavior and conditions.

85. What procedures validate API performance in performance testing scenarios?

Ans:

- Define key metrics such as response time, throughput, error rate, and resource utilization.

- Use tools like Postman, JMeter, or LoadRunner to create and execute test scripts.

- Simulate different load conditions and measure how the API handles various traffic volumes.

- Monitor the API’s performance under peak loads and during stress testing.

- Analyze the results to identify any bottlenecks, failures, or performance degradation.

86. What are the key metrics to monitor during performance testing of cloud-based applications?

Ans:

Key metrics to monitor during performance testing of cloud-based applications include response time, throughput, and error rates. Additionally, track resource utilization such as CPU, memory, and disk I/O. Monitor network latency and bandwidth usage to identify potential bottlenecks. Analyze the scalability by observing how the application performs under varying loads. Check for the elasticity of the application, ensuring it can scale up and down based on demand.

87. What techniques are used to simulate gradual increases in load during a performance test?

Ans:

- Simulating gradual increases in load involves incrementally adding virtual users or transactions over a specified period.

- Use a performance testing tool such as LoadRunner or JMeter to configure a ramp-up period. Define the initial load, the maximum load, and the duration for increasing the load.

- Start with a small number of users and gradually increase them at regular intervals.

- Monitor the system’s performance as the load increases, paying attention to response times, throughput, and resource utilization.
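
A linear ramp-up can be sketched as below. It spreads virtual user starts evenly across the ramp-up period, which is similar in spirit to how a JMeter Thread Group applies its ramp-up setting; the function names are hypothetical and not part of any tool's API.

```python
def thread_start_offsets(threads: int, ramp_up_s: float) -> list:
    """Seconds after test start at which each virtual user begins."""
    return [i * ramp_up_s / threads for i in range(threads)]

def active_users_at(t_s: float, offsets: list) -> int:
    """Number of virtual users that have started by time t_s."""
    return sum(1 for start in offsets if start <= t_s)

# 10 users over a 100-second ramp-up: one new user every 10 seconds.
offsets = thread_start_offsets(threads=10, ramp_up_s=100)
users_at_45s = active_users_at(45, offsets)
```

Plotting active users against the response times recorded at the same moments makes it easier to see at which load level the system starts to slow down.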

88. Describe the impact of third-party integrations on performance testing strategies.

Ans:

- Third-party integrations can significantly impact performance testing strategies by introducing additional variables and potential bottlenecks.

- Identify all third-party services and their criticality to the application. Include these integrations in test scenarios to reflect real-world usage.

- Monitor the performance of third-party services alongside the application, focusing on response times and reliability.

- Consider the impact of network latency and data transfer rates on these integrations. Test for failure scenarios, such as service outages or slowdowns, to understand their effects.

89. What are the key differences between synthetic and real-user monitoring in performance testing?

Ans:

Synthetic monitoring involves using scripts to simulate user interactions with an application, providing consistent and controlled performance data. Real-user monitoring (RUM), on the other hand, collects data from actual users as they interact with the application, offering insights into real-world performance. Synthetic monitoring allows for proactive testing and is helpful in identifying performance issues before they affect users.

90. What method is used to determine the optimal concurrency level for a performance test?

Ans:

Determining the optimal concurrency level for a performance test involves understanding the expected user load and the application’s capacity. Start by analyzing historical data, user behavior, and peak usage times. Conduct load tests with varying levels of concurrency to identify the point at which the application starts to degrade. Track important performance indicators during these tests, including throughput, response time, and resource utilization.

91. What are the security considerations in performance testing?

Ans:

- Security considerations in performance testing include ensuring that test data does not expose sensitive information.

- Use anonymized or synthetic data to prevent data breaches. Verify that the testing environment is secure and isolated from production to avoid unintended access.

- Test for security vulnerabilities that could be exploited under load conditions, such as SQL injection or cross-site scripting. Ensure that authentication and authorization mechanisms function correctly under high load.

92. How can performance testing help in capacity planning for production environments?

Ans:

Performance testing helps in capacity planning by providing insights into the application’s behavior under various load conditions. By simulating different levels of user activity, it identifies the maximum load the application can handle before performance degrades. This data helps in determining the necessary hardware and software resources to support peak loads. Performance testing also reveals bottlenecks and areas that need optimization, guiding resource allocation.
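
As a simple illustration of turning test results into a capacity estimate, the sketch below computes how many servers are needed for a peak load, given the per-server throughput found in load tests. The 30% headroom and the load figures are assumptions, and the calculation presumes roughly linear horizontal scaling.

```python
import math

def servers_needed(peak_rps: float, per_server_rps: float,
                   headroom: float = 0.3) -> int:
    """Servers required to serve peak load while keeping spare capacity.

    `headroom` is the fraction of each server's measured capacity left unused.
    """
    usable_rps = per_server_rps * (1 - headroom)
    return math.ceil(peak_rps / usable_rps)

# Illustrative: 3000 requests/s at peak, 500 requests/s per server in tests.
count = servers_needed(peak_rps=3000, per_server_rps=500, headroom=0.3)
```

With these figures the estimate is 9 servers, compared with 6 if no headroom were kept.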