A Software Development Engineer in Test (SDET) is an expert in both software development and testing. They are responsible for designing, developing, and maintaining automated test frameworks and tools to uphold software quality. SDETs collaborate closely with developers to understand the codebase, create test scripts, and detect bugs early in the development cycle. By bridging the gap between development and testing, SDETs ensure efficient and reliable software delivery through automation and continuous integration practices.

1. What is an SDET?

Ans:

SDET stands for Software Development Engineer in Test. SDETs are responsible for both developing software and ensuring its quality through automated testing. They write and maintain code to test software functionality, performance, and reliability. By creating automated test frameworks and scripts, SDETs enable efficient and repeatable testing processes.

2. What does proficiency in ad hoc testing involve?

Ans:

Proficiency in ad hoc testing involves spontaneous and unstructured testing to uncover defects that might be missed in formal test cases. It requires quick thinking, domain knowledge, and the ability to simulate end-user behavior to identify unexpected issues and improve software reliability. This approach often reveals critical issues that automated or planned tests may overlook, enhancing overall software quality. Adaptability and creativity are essential for effectively addressing diverse and unpredictable scenarios.

3. How can a text box be tested if it doesn’t have background functionality?

Ans:

- In such cases, testing can focus on the visible aspects, such as input validation, error messages, and user experience.

- Functional tests can verify behavior in isolation, and mock data can simulate background responses.

- Boundary testing ensures input limits are respected, while usability testing checks if user expectations are met without background processes.

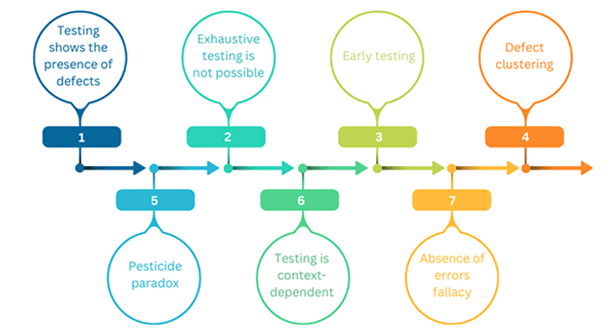

4. What are software testing’s guiding principles?

Ans:

Software testing principles encompass several key concepts. Early testing advocates for starting the testing process as early as possible to catch defects sooner. Exhaustive testing is acknowledged as impractical, so focus is placed on risk-based testing. Defect clustering highlights that defects often occur in specific areas, requiring targeted attention. The pesticide paradox states that repeating the same tests will not uncover new defects, so test cases must evolve.

5. What steps are taken to conduct exploratory testing?

Ans:

Exploratory testing combines simultaneous learning, test design, and test execution. Start by understanding the application’s objectives, workflows, and user expectations. Next, interact with the software dynamically and intuitively while staying systematic, recording findings in real time. This approach seeks to uncover unexpected behavior and enhance test coverage.

6. Explain fuzz testing.

Ans:

- Fuzz testing is a technique for discovering vulnerabilities and bugs by injecting invalid, unexpected, or random data into an application’s inputs.

- It helps uncover security flaws, stability issues, and unexpected behaviors that may not be evident during regular operation, improving overall software robustness.
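A minimal random-fuzzing sketch, assuming a hypothetical `parse_age` function as the target. It feeds thousands of random printable strings into the target and records any exception other than the `ValueError` the function is documented to raise for bad input. Production fuzzers (coverage-guided ones in particular) are far more sophisticated; this only illustrates the core loop.

```python
import random
import string


def parse_age(text: str) -> int:
    """Hypothetical function under test: parses an age between 0 and 150."""
    value = int(text.strip())  # raises ValueError for non-numeric input
    if not 0 <= value <= 150:
        raise ValueError(f"age out of range: {value}")
    return value


def random_input(rng: random.Random, max_len: int = 12) -> str:
    """Build a random string of printable ASCII characters (including whitespace)."""
    return "".join(rng.choice(string.printable) for _ in range(rng.randint(0, max_len)))


def fuzz(target, iterations: int = 10_000, seed: int = 1234) -> list:
    """Call target with random inputs; collect inputs that raise unexpected errors."""
    rng = random.Random(seed)  # fixed seed so any failure can be replayed
    failures = []
    for _ in range(iterations):
        data = random_input(rng)
        try:
            target(data)
        except ValueError:
            pass  # documented rejection of invalid input: expected behavior
        except Exception as exc:  # anything else is a potential bug
            failures.append((data, repr(exc)))
    return failures


if __name__ == "__main__":
    problems = fuzz(parse_age)
    print(f"{len(problems)} unexpected failures")
```

The fixed seed matters: when a failure is found, the same seed regenerates the same inputs, so the defect can be reproduced and turned into a regular test case.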

7. What does a typical workday look like for an SDET (Software Development Engineer in Test)?

Ans:

- Writing and maintaining automated test scripts.

- Analyzing test results.

- Collaborating with developers on bug fixes.

- Participating in code reviews.

- Refining testing strategies.

They may also engage in test planning, API testing, performance testing, and ensuring test coverage across various software components.

8. List the various classifications that are used to organize test cases.

Ans:

Test cases can be classified into various types to ensure comprehensive test coverage. Functional tests assess specific functionalities against requirements, while non-functional tests evaluate performance, usability, and other quality attributes. Automated tests are executed by tools, and manual tests are performed by testers. Positive tests verify that the system behaves as expected with valid inputs, whereas negative tests check for proper handling of invalid inputs.

9. Distinguish between manual testers and Software Development Engineers in Test (SDETs).

Ans:

| Aspect | Manual Testers | Software Development Engineers in Test (SDETs) |
|---|---|---|
| Test Execution | Conducts tests manually, following predefined test cases | Develops and executes automated test scripts |
| Tools & Frameworks | Limited use of automation tools | Proficient in various automation tools and frameworks |
| Code Understanding | Basic understanding of the application | Deep understanding of the codebase and application logic |
| Bug Detection | Identifies bugs through manual exploration and test cases | Identifies bugs early through automated tests |

10. What is alpha testing, and what are its aims?

Ans:

Alpha testing evaluates software in a controlled, in-house environment, typically by internal testers or staff, before it is released to external users. The goals are to identify bugs, gather early feedback, validate functionality, and assess overall usability. This process helps refine the product before broader beta testing and final release. Alpha testing also provides an opportunity to ensure that core features work as intended and to make necessary adjustments based on feedback.

11. What are the elements of a bug report?

Ans:

- A bug report typically includes a concise title, detailed steps to reproduce the issue, expected and actual behavior, screenshots or logs (if applicable), severity and priority assessments, and the environment in which the bug was found.

- These elements ensure that developers can understand, reproduce, and fix the issue efficiently, maintaining clear communication throughout the resolution process.
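The elements listed above map naturally onto a structured record. The sketch below is a hypothetical `BugReport` dataclass (Python 3.9+), not the schema of any particular bug tracker; the field names, severity levels, and priority scale are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Severity(Enum):
    """Assumed severity levels; real trackers define their own."""
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3
    TRIVIAL = 4


@dataclass
class BugReport:
    """Hypothetical bug-report record holding the elements listed above."""
    title: str
    steps_to_reproduce: list[str]
    expected: str
    actual: str
    severity: Severity
    priority: int  # assumed scale: 1 (highest) to 5 (lowest)
    environment: dict[str, str]
    attachments: list[str] = field(default_factory=list)  # screenshots, logs

    def missing_fields(self) -> list[str]:
        """Return the names of required elements that are empty."""
        required = {
            "title": self.title,
            "steps_to_reproduce": self.steps_to_reproduce,
            "expected": self.expected,
            "actual": self.actual,
            "environment": self.environment,
        }
        return [name for name, value in required.items() if not value]


report = BugReport(
    title="Login button unresponsive on second click",
    steps_to_reproduce=["Open /login", "Click 'Sign in' twice"],
    expected="Second click is ignored while the request is pending",
    actual="Page freezes",
    severity=Severity.MAJOR,
    priority=2,
    environment={"browser": "Firefox", "os": "Windows 11"},
)
print(report.missing_fields())  # []
```

A check like `missing_fields()` could run when a report is submitted, so incomplete reports are caught before they reach a developer.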

12. What is a bug report in the context of software testing?

Ans:

- In software testing, a bug report serves as formal documentation of issues identified during testing.

- It provides crucial information about software defects or anomalies, enabling developers to investigate and resolve them. A well-crafted bug report includes detailed descriptions, steps to replicate the issue, environment details, and sometimes suggestions for potential fixes.

- Effective bug reporting enhances collaboration between testers and developers, facilitating smoother debugging and improving software quality.

13. What is Regression Testing?

Ans:

Regression testing is the process of re-running existing tests after changes or updates to a software system to ensure that previously working functionality still behaves correctly. It aims to uncover regression defects: unintended changes or breakages in previously working features caused by new code or fixes. By systematically running regression test cases, teams verify that recent modifications haven’t introduced unintended side effects, maintaining overall software stability and reliability.

14. Explain the difference between Black Box and White Box testing.

Ans:

Black box testing evaluates a software system’s functionality without knowledge of its internal code or structure. Testers treat the software as a “black box,” deriving test cases from external specifications, requirements, and user perspectives. In contrast, white box testing examines the software’s internal workings, including code structures, paths, and logic. Testers use knowledge of the system’s internal design to create test cases that target specific code paths, conditions, and algorithms.

15. What is Load Testing?

Ans:

Load testing is a type of performance testing that evaluates how a system behaves under specific load conditions, typically by simulating concurrent user interactions or data processing loads. It aims to determine the system’s response times, resource utilization, and overall stability when subjected to expected or peak workloads. By identifying performance bottlenecks, scalability limits, and potential issues related to system resources under load, load testing helps optimize software performance and ensure it can handle anticipated user demands effectively.
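A minimal sketch of the idea, assuming a hypothetical `handle_request` function that stands in for the system under test (a real load test would send traffic to a deployed service, for example with JMeter or LoadRunner). A thread pool simulates concurrent users, and the script reports throughput and latency figures at several load levels.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(i: int) -> int:
    """Hypothetical operation under test; sleeps to mimic I/O latency."""
    time.sleep(0.01)
    return i * 2


def timed_call(i: int) -> float:
    """Run one request and return its latency in milliseconds."""
    start = time.perf_counter()
    handle_request(i)
    return (time.perf_counter() - start) * 1000


def run_load(concurrent_users: int, total_requests: int) -> dict:
    """Simulate concurrent users and summarize latency and throughput."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = sorted(pool.map(timed_call, range(total_requests)))
    elapsed = time.perf_counter() - start
    return {
        "requests": total_requests,
        "throughput_rps": total_requests / elapsed,
        "median_ms": statistics.median(latencies),
        # approximate 95th percentile using the nearest sorted index
        "p95_ms": latencies[int(0.95 * (len(latencies) - 1))],
        "max_ms": latencies[-1],
    }


if __name__ == "__main__":
    for users in (1, 10, 50):
        print(users, run_load(users, total_requests=100))
```

Comparing the summaries across load levels is the point: throughput that stops growing while latency climbs is the signature of a bottleneck.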

16. Describe the concept of End-to-End Testing.

Ans:

- End-to-end testing validates the complete flow of a software application from start to finish, ensuring all integrated components work together as expected.

- It involves testing the entire user journey, including interactions with interfaces, databases, networks, and other external dependencies.

- End-to-end testing verifies that the software functions correctly across all interconnected modules and subsystems by mimicking real-world scenarios and user behaviors. It also identifies potential integration issues or discrepancies that could affect user experience or business processes.

17. What are the advantages of Automated Testing over Manual Testing?

Ans:

Automated testing offers several advantages over manual testing, including faster execution of test cases, consistent and repeatable test results, increased test coverage across different platforms and configurations, early detection of defects, and improved productivity of testing teams. By automating repetitive and time-consuming tests, teams can allocate more resources to exploratory testing and complex scenarios, enhancing overall software quality and accelerating time-to-market without compromising accuracy or reliability.

18. What methods are used to handle performance issues identified during testing?

Ans:

- When performance issues are identified during testing, it’s essential to isolate and reproduce the problem using profiling tools or monitoring metrics.

- Once the root cause is identified, prioritize fixes based on impact analysis and potential risks to the system.

- Implement targeted optimizations such as code refactoring, caching strategies, or resource allocation adjustments to improve performance.

- Finally, validate the fixes through retesting and performance benchmarks to confirm that the system meets its expected performance criteria and user experience standards.
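The isolate, optimize, and re-validate steps above can be sketched with Python's standard `cProfile`, `pstats`, and `timeit` modules. The slow function and the memoization fix are hypothetical stand-ins for a real hot spot and a caching strategy.

```python
import cProfile
import pstats
import timeit
from functools import lru_cache


def fib_slow(n: int) -> int:
    """Hypothetical hot spot: naive recursion recomputes subproblems."""
    return n if n < 2 else fib_slow(n - 1) + fib_slow(n - 2)


@lru_cache(maxsize=None)
def fib_cached(n: int) -> int:
    """Step 2, targeted optimization: memoize results (a caching strategy)."""
    return n if n < 2 else fib_cached(n - 1) + fib_cached(n - 2)


def fib_cached_cold(n: int) -> int:
    """Measure a cold cache, so the benchmark is not just a dictionary lookup."""
    fib_cached.cache_clear()
    return fib_cached(n)


def profile(func, *args) -> None:
    """Step 1, isolate: profile one call and print the costliest entries."""
    profiler = cProfile.Profile()
    profiler.enable()
    func(*args)
    profiler.disable()
    pstats.Stats(profiler).sort_stats("cumulative").print_stats(3)


def benchmark(func, n: int, repeat: int = 5) -> float:
    """Step 3, validate: best-of-N wall-clock seconds for a single call."""
    return min(timeit.repeat(lambda: func(n), number=1, repeat=repeat))


if __name__ == "__main__":
    profile(fib_slow, 22)
    assert fib_slow(22) == fib_cached_cold(22)  # the fix must not change results
    before = benchmark(fib_slow, 22)
    after = benchmark(fib_cached_cold, 22)
    print(f"before={before:.4f}s after={after:.6f}s")
```

Checking that the optimized version returns the same results as the original is part of the retest: a faster but wrong fix is a regression.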

19. What is Integration Testing?

Ans:

- Integration testing verifies the interactions between different software modules or components to ensure they function together as intended.

- It focuses on detecting interface defects, data transfer issues, and communication failures between integrated units.

- By simulating real-world interactions and dependencies, integration testing validates that integrated components collaborate seamlessly within the broader system architecture.

- This testing phase helps uncover integration errors early in the development lifecycle, ensuring software stability, reliability, and interoperability across interconnected modules.
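A minimal integration-test sketch, assuming two hypothetical modules: a `UserRepository` backed by an in-memory SQLite database (the standard-library `sqlite3` module) and a `SignupService` that depends on it. Unlike a unit test that mocks the repository, this test exercises the real interface between the two, so mismatches such as inconsistent email normalization would surface.

```python
import sqlite3
import unittest


class UserRepository:
    """Hypothetical data-access module."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users (email TEXT PRIMARY KEY, name TEXT)"
        )

    def add(self, email: str, name: str) -> None:
        self.conn.execute("INSERT INTO users VALUES (?, ?)", (email, name))

    def find(self, email: str):
        row = self.conn.execute(
            "SELECT name FROM users WHERE email = ?", (email,)
        ).fetchone()
        return row[0] if row else None


class SignupService:
    """Hypothetical business module that depends on the repository."""

    def __init__(self, repo: UserRepository) -> None:
        self.repo = repo

    def signup(self, email: str, name: str) -> bool:
        email = email.strip().lower()  # normalization must match later lookups
        if self.repo.find(email) is not None:
            return False  # duplicate account
        self.repo.add(email, name)
        return True


class SignupIntegrationTest(unittest.TestCase):
    def setUp(self) -> None:
        self.conn = sqlite3.connect(":memory:")  # real database, isolated per test
        self.service = SignupService(UserRepository(self.conn))

    def tearDown(self) -> None:
        self.conn.close()

    def test_signup_persists_user(self) -> None:
        self.assertTrue(self.service.signup("Ada@Example.com ", "Ada"))
        self.assertEqual(self.service.repo.find("ada@example.com"), "Ada")

    def test_duplicate_signup_rejected(self) -> None:
        self.service.signup("ada@example.com", "Ada")
        self.assertFalse(self.service.signup("ADA@example.com", "Ada Again"))


if __name__ == "__main__":
    unittest.main(exit=False)
```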

20. Explain the concept of Acceptance Testing.

Ans:

Acceptance testing evaluates whether a software system meets specified business requirements and user expectations before its deployment. It involves validating the software’s functionality, usability, performance, and compliance with predefined acceptance criteria or user stories. Typically conducted by end-users, stakeholders, or quality assurance teams, acceptance testing aims to confirm that the software is ready for production release and aligns with business objectives.

21. What criteria are used to prioritize testing activities in Agile development?

Ans:

In Agile, prioritizing testing activities revolves around understanding user stories and their business impact. Prioritization is based on risk, focusing first on critical functionalities or areas prone to defects. Continuous communication with stakeholders helps align testing efforts with changing project priorities. Automated regression tests are prioritized to ensure core functionalities remain stable, while exploratory testing targets new or complex features early in the sprint.

22. What is the purpose of Smoke Testing?

Ans:

- Smoke testing aims to quickly determine whether a build’s basic functionality works before proceeding with more detailed testing.

- It verifies essential functionalities without diving deep into specific details.

- Running smoke tests at the start of each testing cycle catches major issues early, ensuring that subsequent testing efforts are focused on stable builds.

23. Describe the concept of Usability Testing.

Ans:

- Usability testing assesses how user-friendly a software product is by observing real users interacting with it.

- It focuses on intuitiveness, ease of navigation, and user satisfaction.

- Through tasks and scenarios, testers gather qualitative feedback on user interactions.

- Iterative improvements based on usability testing enhance user experience, leading to higher adoption rates and customer satisfaction.

24. What strategies ensure test coverage in a testing strategy?

Ans:

Test coverage is ensured by mapping test cases to the requirements and risk areas identified during project planning. A combination of techniques, such as equivalence partitioning, boundary value analysis, and decision tables, helps achieve comprehensive coverage. Regular reviews and traceability matrices validate coverage against project objectives and acceptance criteria.

25. What is Boundary Testing?

Ans:

Boundary testing validates a system’s behavior at the edges of allowed input ranges. It aims to uncover errors related to boundary conditions, such as off-by-one errors or unexpected behavior near limits. Testing values at, just below, and just above each boundary ensures that edge cases are handled robustly, enhancing overall system reliability.
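A boundary-value sketch using the standard `unittest` module, assuming a hypothetical rule that a quantity field accepts integers from 1 to 100 inclusive. Each boundary is tested at the limit and one step on either side, which is where off-by-one errors typically hide.

```python
import unittest

MIN_QTY, MAX_QTY = 1, 100  # assumed valid range (inclusive)


def is_valid_quantity(qty: int) -> bool:
    """Hypothetical validator under test."""
    return MIN_QTY <= qty <= MAX_QTY


class QuantityBoundaryTest(unittest.TestCase):
    # (input, expected) pairs: just below, at, and just above each boundary
    CASES = [
        (MIN_QTY - 1, False),
        (MIN_QTY, True),
        (MIN_QTY + 1, True),
        (MAX_QTY - 1, True),
        (MAX_QTY, True),
        (MAX_QTY + 1, False),
    ]

    def test_boundaries(self) -> None:
        for qty, expected in self.CASES:
            with self.subTest(qty=qty):  # report each failing value separately
                self.assertEqual(is_valid_quantity(qty), expected)


if __name__ == "__main__":
    unittest.main(exit=False)
```

An implementation that mistakenly used `<` instead of `<=` would fail exactly on the `MIN_QTY` and `MAX_QTY` cases, which a test using only mid-range values would never catch.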

26. What approach is taken to handle security testing in a test plan?

Ans:

- Security testing encompasses identifying vulnerabilities and ensuring the software can resist attacks.

- It involves testing for authentication, authorization, data integrity, and encryption measures.

- Incorporating security testing early in the SDLC and using techniques such as penetration testing and static code analysis helps identify and mitigate potential risks.

- Regular updates and compliance with security standards further strengthen the software’s resilience.

27. Explain the concept of Sanity Testing.

Ans:

Sanity testing, a subset of regression testing, quickly confirms that recent modifications haven’t negatively impacted existing features. It focuses on the critical areas affected by recent updates, ensuring core features still work as expected. Unlike exhaustive regression testing, sanity testing is brief and aims to confirm stability before deeper testing phases.

28. What processes are followed to identify and manage test dependencies?

Ans:

Test dependencies are identified by analyzing requirements and understanding interdependencies between functionalities or components. Mapping out dependencies early allows for prioritizing testing accordingly and managing resource allocation effectively. Test management tools and dependency tracking matrices help visualize and mitigate risks associated with dependencies, ensuring comprehensive test coverage.
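Once dependencies are mapped, an execution order can be derived with a topological sort, and the same map shows which tests are blocked when a prerequisite fails. This sketch uses the standard-library `graphlib` module (Python 3.9+); the suite names and their dependencies are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical map: each test suite -> suites that must pass before it runs
dependencies = {
    "checkout": {"cart", "payment"},
    "cart": {"login"},
    "payment": {"login"},
    "order_history": {"checkout"},
    "login": set(),
}


def execution_order(deps: dict) -> list:
    """Return an order in which every suite runs after its prerequisites."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as err:
        # A cycle means the dependency map itself needs to be fixed
        raise ValueError(f"circular test dependency: {err.args[1]}") from err


def blocked_by(failed: str, deps: dict) -> set:
    """Suites that cannot meaningfully run if `failed` fails (transitively)."""
    blocked, frontier = set(), {failed}
    while frontier:
        current = frontier.pop()
        for suite, prereqs in deps.items():
            if current in prereqs and suite not in blocked:
                blocked.add(suite)
                frontier.add(suite)
    return blocked


print(execution_order(dependencies))
print(sorted(blocked_by("payment", dependencies)))  # ['checkout', 'order_history']
```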

29. What is Non-Functional Testing?

Ans:

- Non-functional testing evaluates aspects of software that do not relate to specific behaviors or functions but instead focus on attributes like performance, reliability, scalability, and usability.

- It ensures the software meets quality standards beyond functional requirements.

- Performance testing, security testing, and usability testing are examples of non-functional testing techniques crucial for delivering a robust and user-friendly product.

30. Describe the difference between Verification and Validation.

Ans:

- Verification ensures that the software meets specified requirements and adheres to its intended design without necessarily focusing on user expectations.

- It involves reviews, walkthroughs, and inspections.

- Validation, on the other hand, ensures the software meets user expectations and needs, confirming it solves the right problem.

- Validation involves testing, user feedback, and acceptance criteria to ensure the product’s usability and functionality align with user needs.

31. What metrics are used to measure the success of a testing effort?

Ans:

The success of testing efforts is gauged by several factors. Primarily, it includes the number of defects identified and resolved before release, ensuring that critical functionalities meet the specified requirements. Additionally, it involves assessing the extent of test coverage achieved compared to planned objectives. Efficiency is also measured by evaluating how effectively testing processes utilize time and resources.
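A sketch of how two of these measures might be computed, assuming hypothetical counts from one test cycle: execution coverage (executed tests versus planned tests) and defect removal efficiency (DRE), a commonly used ratio of defects found before release to all defects found, including those reported after release. The field names and numbers are illustrative.

```python
from dataclasses import dataclass


@dataclass
class CycleStats:
    """Hypothetical counts collected for one test cycle."""
    planned_tests: int
    executed_tests: int
    defects_before_release: int
    defects_after_release: int  # e.g. reported by users in production


def execution_coverage(s: CycleStats) -> float:
    """Share of planned tests that were actually executed."""
    return s.executed_tests / s.planned_tests if s.planned_tests else 0.0


def defect_removal_efficiency(s: CycleStats) -> float:
    """Defects caught before release as a share of all defects found."""
    total = s.defects_before_release + s.defects_after_release
    return s.defects_before_release / total if total else 1.0  # assumption: no defects = 100%


stats = CycleStats(
    planned_tests=400,
    executed_tests=380,
    defects_before_release=57,
    defects_after_release=3,
)
print(f"execution coverage: {execution_coverage(stats):.0%}")  # 95%
print(f"DRE: {defect_removal_efficiency(stats):.0%}")          # 95%
```

DRE can only be computed after release, once production defects are known, so it is more useful for comparing cycles than for a go/no-go decision.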

32. What is the role of a Test Plan in software testing?

Ans:

A Test Plan outlines the scope, approach, resources, and schedule for testing activities. It serves as a roadmap, guiding testers through each phase of the testing lifecycle, ensuring systematic and comprehensive coverage of requirements. The Test Plan also communicates testing objectives, risks, and deliverables, aligning stakeholders on expectations and ensuring traceability between requirements and test cases.

33. What practices handle testing in a CI/CD environment?

Ans:

- In a CI/CD environment, Testing is integrated into automated pipelines, ensuring every code change undergoes automated unit tests, integration tests, and regression tests.

- This process detects issues early, maintains code quality, and enables rapid feedback loops for developers.

- Test automation scripts are crucial for ensuring that each build meets quality standards before deployment.

34. Explain the concept of Compatibility Testing.

Ans:

- Compatibility Testing ensures software functions correctly across different environments, devices, browsers, and operating systems.

- It verifies that the application behaves consistently across varying configurations, identifying compatibility issues such as UI distortions or functional discrepancies.

- This testing helps ensure a consistent user experience regardless of the platform used, enhancing usability and customer satisfaction.

35. What is the difference between System Testing and Integration Testing?

Ans:

Integration Testing validates interactions between integrated components or modules to ensure they function correctly together. It detects interface defects and communication issues early in the development cycle. System Testing, on the other hand, tests the complete, integrated system against business requirements and user expectations. It evaluates system functionalities, performance, and behavior in a simulated real-world environment.

36. What methods are used to perform risk-based testing?

Ans:

Risk-based Testing prioritizes testing efforts based on potential business impact and likelihood of occurrence. It involves identifying and assessing risks, defining testing priorities, and allocating resources accordingly. Test cases focus on high-risk areas to mitigate critical issues early in the development lifecycle, ensuring comprehensive test coverage aligned with project goals and risk management strategies.
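A common way to make this prioritization concrete is a risk score of likelihood multiplied by impact. The sketch below assumes 1 to 5 scales, hypothetical feature areas, and arbitrary thresholds for test depth; real teams calibrate scales and thresholds with stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskItem:
    area: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (cosmetic) to 5 (business-critical)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(items: list, high: int = 15, medium: int = 8) -> list:
    """Sort areas by descending risk score and tag each with a test depth.

    The `high` and `medium` thresholds are assumptions for illustration.
    """
    result = []
    for item in sorted(items, key=lambda r: r.score, reverse=True):
        if item.score >= high:
            depth = "extensive"
        elif item.score >= medium:
            depth = "standard"
        else:
            depth = "smoke only"
        result.append((item.area, item.score, depth))
    return result


areas = [
    RiskItem("payment processing", likelihood=3, impact=5),
    RiskItem("profile page layout", likelihood=2, impact=1),
    RiskItem("search filters", likelihood=4, impact=3),
    RiskItem("password reset", likelihood=2, impact=4),
]
for row in prioritize(areas):
    print(row)
```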

37. Describe the concept of Performance Testing.

Ans:

- Performance Testing evaluates how a system performs under various workload conditions, measuring responsiveness, scalability, and stability.

- It identifies performance bottlenecks, such as slow response times or memory leaks, ensuring optimal system performance under expected and peak loads.

- Performance tests include load testing, stress testing, and scalability testing. These provide insights into system behavior and ensure reliable performance in production environments.

38. What is the role of Exploratory Testing in Agile development?

Ans:

- Exploratory testing complements scripted testing by allowing testers to explore the application dynamically, uncovering defects that scripted tests might miss.

- It promotes rapid feedback and adaptability in Agile projects, emphasizing tester creativity and domain expertise to simulate real user scenarios.

- Exploratory Testing fosters collaboration between testers and developers, enhances test coverage, and identifies critical issues early in the development process.

39. What techniques ensure test repeatability in automated testing?

Ans:

Test repeatability in automated testing is ensured by maintaining consistent test environments, version-controlling test scripts, and using reliable data sets. Tests should be designed to be independent of each other and should handle setup and teardown processes robustly. Continuous integration practices help ensure that tests execute predictably and reliably with each code change, maintaining the integrity of automated testing efforts.
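A sketch of these techniques with the standard `unittest` module: a seeded random generator for reproducible test data, a fresh temporary directory created in `setUp` and removed in `tearDown`, and no state shared between tests. The `export_report` function is hypothetical.

```python
import os
import random
import shutil
import tempfile
import unittest


def export_report(values: list, directory: str) -> str:
    """Hypothetical code under test: writes values, one per line, to a file."""
    path = os.path.join(directory, "report.txt")
    with open(path, "w", encoding="utf-8") as fh:
        fh.write("\n".join(str(v) for v in values))
    return path


class ExportReportTest(unittest.TestCase):
    def setUp(self) -> None:
        # Fresh, isolated workspace: no leftovers from earlier tests or runs
        self.workdir = tempfile.mkdtemp()
        # Seeded generator: the same "random" data on every run
        self.rng = random.Random(42)

    def tearDown(self) -> None:
        shutil.rmtree(self.workdir, ignore_errors=True)

    def test_writes_all_values(self) -> None:
        values = [self.rng.randint(0, 1000) for _ in range(50)]
        path = export_report(values, self.workdir)
        with open(path, encoding="utf-8") as fh:
            lines = fh.read().splitlines()
        self.assertEqual([int(line) for line in lines], values)

    def test_file_created_in_workdir(self) -> None:
        path = export_report([1, 2, 3], self.workdir)
        self.assertTrue(path.startswith(self.workdir))
        self.assertTrue(os.path.exists(path))


if __name__ == "__main__":
    unittest.main(exit=False)
```

Because each test builds and tears down its own workspace, the tests pass or fail the same way regardless of the order in which they run.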

40. Explain the concept of Scalability Testing.

Ans:

Scalability Testing evaluates a system’s ability to handle increasing workloads and user demands without sacrificing performance. It assesses how well the system scales in terms of processing power, network bandwidth, and database capacity as user traffic grows. This testing identifies scalability bottlenecks, ensuring systems can accommodate future growth and maintain optimal performance levels under peak usage scenarios.

41. What approaches are used to handle testing for mobile applications?

Ans:

- Testing for mobile apps involves ensuring compatibility across devices, OS versions, and screen sizes.

- Using emulators and real devices helps replicate user environments.

- Focus on usability, performance, and security to provide a seamless user experience.

- Implement test automation frameworks tailored for mobile platforms to increase test coverage.

- Continuous integration provides rapid feedback on changes, which is crucial for agile mobile development.

42. What is the importance of Test Data Management?

Ans:

- Test Data Management ensures testing effectiveness by providing relevant, accurate, and secure data.

- It minimizes risks associated with privacy breaches and regulatory compliance.

- Proper management reduces test execution time and ensures comprehensive test coverage.

- Data masking and anonymization techniques protect sensitive information during testing.

- Effective management supports continuous integration and deployment by providing consistent data environments.
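A minimal masking sketch, assuming test records are plain dictionaries with hypothetical `email`, `name`, and `card_number` fields. It uses keyed hashing (HMAC-SHA256 from the standard library) so the same real value always maps to the same pseudonym, which keeps relationships between tables consistent. Strictly speaking this is pseudonymization rather than full anonymization, and the key must be kept out of the test environment.

```python
import hashlib
import hmac

MASKING_KEY = b"replace-with-a-secret-from-a-vault"  # assumption: managed secret


def pseudonym(value: str, prefix: str) -> str:
    """Deterministic token for a sensitive value; not reversible without the key."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256)
    return f"{prefix}_{digest.hexdigest()[:12]}"


def mask_card(number: str) -> str:
    """Keep only the last four digits."""
    digits = "".join(ch for ch in number if ch.isdigit())
    return "*" * (len(digits) - 4) + digits[-4:]


def mask_record(record: dict) -> dict:
    """Return a copy of the record that is safe to load into a test database."""
    masked = dict(record)  # non-sensitive fields such as "id" are kept as-is
    masked["email"] = pseudonym(record["email"].lower(), "user") + "@example.test"
    masked["name"] = pseudonym(record["name"], "name")
    masked["card_number"] = mask_card(record["card_number"])
    return masked


production_row = {
    "id": 7,
    "email": "Jane.Doe@corp.com",
    "name": "Jane Doe",
    "card_number": "4111 1111 1111 1111",  # a well-known test card number
}
print(mask_record(production_row))
```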

43. Describe the concept of Accessibility Testing.

Ans:

Accessibility Testing ensures that software is usable by people with disabilities. It checks conformance with the Web Content Accessibility Guidelines (WCAG) and other accessibility standards. Testing involves screen readers, keyboard navigation, and color contrast checks. Accessibility audits identify and resolve barriers to access for disabled users. Automated tools complement manual testing to ensure comprehensive coverage. Successful testing improves user satisfaction and broadens audience reach.
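Color-contrast checks are one part of accessibility testing that is easy to automate. The sketch below implements the WCAG 2.x contrast-ratio formula (based on the relative luminance of sRGB colors) and compares it with the 4.5:1 minimum that WCAG level AA sets for normal-size text. The function names are illustrative.

```python
def _channel(c8: int) -> float:
    """Linearize one 8-bit sRGB channel as defined in WCAG 2.x."""
    c = c8 / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio, from 1:1 (identical colors) to 21:1 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(fg: str, bg: str) -> bool:
    return contrast_ratio(fg, bg) >= 4.5


print(round(contrast_ratio("#000000", "#FFFFFF"), 2))  # 21.0
print(passes_aa_normal_text("#777777", "#FFFFFF"))     # False (about 4.48:1)
```

A check like this could run over a design system's color pairs in CI; it does not replace screen-reader or keyboard testing, which still need manual work.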

44. What procedures are followed for test environment setup and management?

Ans:

Test environment setup involves replicating production environments for testing purposes. Use configuration management tools to automate setup and ensure consistency across environments. Establish environment policies to manage access, configurations, and data privacy. Monitor environment health to prevent testing disruptions and ensure reliability. Collaborate with development and operations teams to streamline environment provisioning.

45. What is the difference between Retesting and Regression Testing?

Ans:

- Retesting verifies fixes for identified defects to ensure they are resolved correctly. It focuses on specific functionalities affected by recent changes.

- Regression Testing validates unchanged functionalities to ensure new updates don’t introduce unintended changes.

- It ensures system stability and prevents regression of previously working features.

- Automation tools help execute regression tests efficiently across different versions. These tests complement each other in maintaining software quality.

46. Explain the concept of Ad-hoc Testing.

Ans:

- Ad-hoc testing involves spontaneous, unstructured testing without predefined test cases or scripts.

- Testers explore the software to identify defects not covered by formal testing processes.

- It relies on the tester’s experience and domain knowledge to uncover critical issues quickly.

- Ad-hoc Testing is informal and often unplanned, addressing usability, functionality, and performance concerns. While not exhaustive, it complements formal testing methods by providing rapid feedback.

47. What methods are used to ensure traceability between requirements and test cases?

Ans:

Establish traceability matrices to map requirements to corresponding test cases and vice versa. Use tools and templates to document and track requirements throughout the software development lifecycle. Regularly review and update traceability links to ensure alignment with changing requirements. Conduct impact analysis to assess the coverage and dependencies between requirements and test cases. Collaboration between stakeholders facilitates clear communication and enhances traceability effectiveness.
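A traceability matrix can be as simple as a mapping between requirement IDs and the test cases that cover them. This sketch, with hypothetical IDs and test names, checks both directions: requirements with no tests (coverage gaps) and tests that reference no known requirement (orphans, often left behind when requirements change).

```python
requirements = {
    "REQ-1": "User can log in with email and password",
    "REQ-2": "Account locks after 5 failed attempts",
    "REQ-3": "User can reset password by email",
}

# Test case -> requirement IDs it verifies (forward direction)
test_links = {
    "test_login_success": {"REQ-1"},
    "test_login_wrong_password": {"REQ-1", "REQ-2"},
    "test_legacy_sms_login": {"REQ-9"},  # points at a removed requirement
}


def build_matrix(reqs: dict, links: dict) -> dict:
    """Backward direction: requirement ID -> sorted list of covering tests."""
    matrix = {req_id: [] for req_id in reqs}
    for test, req_ids in links.items():
        for req_id in req_ids:
            if req_id in matrix:
                matrix[req_id].append(test)
    return {req_id: sorted(tests) for req_id, tests in matrix.items()}


def uncovered(matrix: dict) -> list:
    """Requirements that no test case verifies."""
    return [req_id for req_id, tests in matrix.items() if not tests]


def orphan_tests(reqs: dict, links: dict) -> list:
    """Tests that do not trace back to any current requirement."""
    return sorted(t for t, ids in links.items() if ids.isdisjoint(reqs))


matrix = build_matrix(requirements, test_links)
print(matrix)
print("Uncovered:", uncovered(matrix))                     # ['REQ-3']
print("Orphans:", orphan_tests(requirements, test_links))  # ['test_legacy_sms_login']
```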

48. Describe the concept of Static Testing.

Ans:

Static Testing reviews software artifacts (code, specifications) without executing them. It identifies defects early in the development lifecycle, reducing rework costs. Techniques include walkthroughs, inspections, and code reviews to improve code quality. Static analysis tools automate defect detection and enforce coding standards. Effective static testing enhances software reliability, maintainability, and security. It complements dynamic testing by preventing defects from entering the codebase.
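As a tiny illustration of static analysis (not a substitute for established linters), this sketch uses Python's standard `ast` module to parse source code without running it and flag two patterns a code review might also catch: bare `except:` clauses and calls to `eval`. The rule set is an assumption chosen for brevity.

```python
import ast

SOURCE = '''
def load(cfg):
    try:
        return eval(cfg)
    except:
        return None
'''


def find_issues(source: str, filename: str = "<string>") -> list:
    """Parse (never execute) the source and report rule violations."""
    tree = ast.parse(source, filename=filename)
    issues = []
    for node in ast.walk(tree):
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            issues.append((node.lineno, "bare 'except:' hides unexpected errors"))
        elif (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id == "eval"
        ):
            issues.append((node.lineno, "call to eval() on possibly untrusted input"))
    return sorted(issues)


for line, message in find_issues(SOURCE):
    print(f"line {line}: {message}")
```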

49. What strategies are employed to handle performance bottlenecks identified during testing?

Ans:

- Identify bottlenecks using performance profiling tools and analysis of system metrics.

- Prioritize and replicate bottleneck scenarios in a controlled test environment. Implement performance testing methodologies, such as load and stress testing, to simulate real-world conditions.

- Optimize code, database queries, and infrastructure configurations based on bottleneck analysis.

- Monitor and measure improvements iteratively to ensure sustained performance gains. Collaboration between development and operations teams is crucial for effective resolution.

50. What is the role of Defect Triage in the testing process?

Ans:

- Defect Triage involves prioritizing and assigning severity to reported defects for timely resolution.

- It ensures alignment between defect resolution efforts and project priorities.

- Triage meetings with stakeholders prioritize defects based on impact and urgency.

- Cross-functional collaboration determines defect assignment to development teams for resolution.

- Metrics and reports track defect resolution progress and quality improvement initiatives. Efficient triage minimizes project risks and enhances software quality throughout the testing lifecycle.

51. What criteria are used to prioritize test cases for execution?

Ans:

Prioritizing test cases involves assessing criticality, impact, and dependencies. Start with high-risk and critical functionalities, ensuring core features are tested first. Use risk-based analysis and feedback from stakeholders to determine execution order. Continuous reassessment during testing helps adapt priorities based on current project needs and discovered issues.

52. Explain the concept of Exploratory Testing.

Ans:

Exploratory Testing involves simultaneous learning, test design, and execution. Testers explore the software, forming hypotheses about potential issues and validating them in real-time. It emphasizes creativity, adaptability, and domain knowledge, uncovering defects that scripted tests might miss. Results provide immediate feedback, enhancing understanding of software behavior and informing subsequent testing efforts.

53. What is the difference between Validation and Verification in software testing?

Ans:

- Validation ensures that the software meets the customer’s requirements and expectations.

- It checks if the right product is being built.

- Verification, on the other hand, ensures that the software adheres to its specifications and requirements.

- It focuses on the correctness and completeness of the software against predefined conditions and specifications.

54. Explain the concept of Equivalence Partitioning.

Ans:

- Equivalence Partitioning is a testing technique that divides input data into partitions of equivalent data.

- It simplifies testing by reducing the number of test cases needed to cover a range of scenarios.

- Each partition is tested with representative inputs, assuming all inputs in the same partition behave similarly.

- This method improves test coverage while minimizing redundancy in test cases.
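A sketch applying equivalence partitioning to a hypothetical shipping-fee rule, with amounts in cents: negative totals are invalid, totals below 5000 pay a flat fee, and totals of 5000 or more ship free. One representative value stands in for each partition.

```python
import unittest

FREE_SHIPPING_CENTS = 5000  # assumed threshold (50.00)
FLAT_FEE_CENTS = 499        # assumed fee (4.99)


def shipping_fee(order_total_cents: int) -> int:
    """Hypothetical rule under test."""
    if order_total_cents < 0:
        raise ValueError("order total cannot be negative")
    return 0 if order_total_cents >= FREE_SHIPPING_CENTS else FLAT_FEE_CENTS


class ShippingFeePartitionTest(unittest.TestCase):
    # One representative per partition instead of every possible total
    def test_invalid_partition_negative_total(self) -> None:
        with self.assertRaises(ValueError):
            shipping_fee(-100)

    def test_paid_partition(self) -> None:
        self.assertEqual(shipping_fee(2500), FLAT_FEE_CENTS)

    def test_free_partition(self) -> None:
        self.assertEqual(shipping_fee(12000), 0)


if __name__ == "__main__":
    unittest.main(exit=False)
```

Combining this with boundary testing would add the partition edges (-1, 0, 4999, 5000), where an off-by-one mistake in the `>=` comparison would surface.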

55. What approach is taken to handle testing for web services or APIs?

Ans:

Testing web services or APIs involves validating their functionality, performance, security, and interoperability. Use tools like Postman or SoapUI to send requests and verify responses. Test various inputs, edge cases, and the error scenarios defined in the API documentation. Automate repetitive tests to ensure consistency and efficiency. Validate data formats, authentication mechanisms, and compliance with API contracts.
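A self-contained API test sketch using only the Python standard library. It starts a hypothetical `/users/<id>` JSON endpoint on a free local port with `http.server`, then checks the status code, content type, response body, and a documented error scenario with `urllib.request`. In practice the requests would target a real service, and a tool such as Postman or SoapUI could play the client role.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

USERS = {"1": {"id": 1, "name": "Ada"}}  # hypothetical data


class ApiHandler(BaseHTTPRequestHandler):
    """Hypothetical API under test."""

    def do_GET(self) -> None:
        if self.path.startswith("/users/"):
            user = USERS.get(self.path.rsplit("/", 1)[-1])
            status, body = (200, user) if user else (404, {"error": "not found"})
        else:
            status, body = 404, {"error": "unknown route"}
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args) -> None:
        pass  # keep test output quiet


def get_json(url: str) -> tuple:
    """Return (status, content_type, parsed_json), including error responses."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status, resp.headers["Content-Type"], json.load(resp)
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a body
        return err.code, err.headers["Content-Type"], json.load(err)


def run_api_checks() -> None:
    server = HTTPServer(("127.0.0.1", 0), ApiHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_port}"
    try:
        status, ctype, body = get_json(f"{base}/users/1")
        assert status == 200 and ctype == "application/json"
        assert body == {"id": 1, "name": "Ada"}  # contract: fields and values

        status, _, body = get_json(f"{base}/users/999")
        assert status == 404 and "error" in body  # documented error scenario
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    run_api_checks()
    print("API checks passed")
```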

56. What is the importance of Test Automation Frameworks?

Ans:

Test Automation Frameworks provide structure and guidelines for automated testing. They streamline test script development, maintenance, and execution across different applications and environments. Frameworks built on tools like Selenium or Appium offer reusable components, reporting mechanisms, and integration capabilities, ensuring consistent test coverage and reliability. They enhance efficiency, reduce testing time, and support continuous integration and delivery practices.

57. Describe the concept of Risk-based Testing.

Ans:

- Risk-based Testing prioritizes testing efforts based on potential risks to the project’s success.

- It involves identifying and assessing risks, determining their impact on quality and business objectives, and allocating testing resources accordingly.

- High-risk areas receive more testing focus to mitigate potential failures early.

- Continuous risk assessment throughout the project lifecycle ensures testing efforts align with evolving project risks and priorities.

58. What processes are used to perform compatibility testing?

Ans:

- Compatibility Testing verifies software’s compatibility with different operating systems, browsers, devices, and networks.

- Identify target configurations based on user demographics and market trends.

- Execute tests to validate functionality, performance, and user experience across targeted environments.

- Use virtualization or cloud-based platforms to simulate diverse configurations.

- Document and prioritize compatibility issues for resolution, ensuring seamless user experience across platforms.

59. What is the role of a Test Strategy in software testing?

Ans:

A Test Strategy defines the approach, scope, resources, and schedule for testing activities. It aligns testing efforts with business goals, project requirements, and development methodologies. The strategy outlines test objectives, entry and exit criteria, test environment setup, and risk management strategies. It guides test planning, execution, and reporting phases, ensuring comprehensive test coverage and efficient resource utilization.

60. Explain the concept of Load Testing and its objectives.

Ans:

Load Testing evaluates a system’s performance under expected load conditions. Objectives include assessing response times, scalability, and reliability under specific user loads. Simulate realistic user scenarios with tools like JMeter or LoadRunner to exercise application components under load. Measure and analyze performance metrics to identify bottlenecks, resource limitations, and potential failure points. Optimize system performance based on load testing results to enhance user experience and reliability.

61. What methods are employed to handle performance testing for a distributed system?

Ans:

- Performance testing for distributed systems involves simulating realistic usage scenarios across networked components.

- It’s crucial to identify and measure key performance metrics such as response time and throughput under varying loads.

- Utilizing distributed load generation tools helps simulate real-world conditions.

- Analyzing performance bottlenecks across different nodes and ensuring scalability is essential.

62. Describe the concept of Stress Testing and its benefits.

Ans:

- Stress testing evaluates system stability by subjecting it to loads beyond its normal operational capacity.

- It identifies breaking points, potential failures, and performance degradation under extreme conditions.

- Benefits include uncovering scalability limits, improving reliability, and ensuring robustness in critical scenarios.

- It helps validate recovery mechanisms and prepares systems for unexpected spikes in usage.

- Stress testing also provides insights into resource allocation and enhances overall system resilience.

63. What is the importance of Code Coverage in Testing?

Ans:

Code coverage measures the percentage of code that is executed during testing. It shows which parts of the application code the tests actually exercise, reducing the risk of defects hiding in untested code. High code coverage suggests thorough testing and increases confidence in software quality, although executed code is not necessarily verified code: a line can run without any assertion checking its result. Coverage reports help identify untested or poorly covered areas, guiding additional test case creation. Code coverage metrics also aid in assessing testing completeness and effectiveness, supporting informed decisions about release readiness.
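
Coverage tools such as coverage.py (Python) or JaCoCo (Java) instrument code automatically. The hand-instrumented sketch below only illustrates what such a tool measures: the hypothetical `classify` function records each branch it reaches, and a test run that never passes zero leaves one of the three branches unexecuted.

```python
ALL_BRANCHES = {"negative", "zero", "positive"}
covered = set()  # branches reached so far during the test run


def classify(n: int) -> str:
    # Each branch records itself so we can see which ones the tests reached.
    if n < 0:
        covered.add("negative")
        return "negative"
    if n == 0:
        covered.add("zero")
        return "zero"
    covered.add("positive")
    return "positive"


def coverage_percent() -> float:
    return 100 * len(covered & ALL_BRANCHES) / len(ALL_BRANCHES)


# A test suite that never checks zero leaves one branch unexecuted.
assert classify(5) == "positive"
assert classify(-3) == "negative"
print(f"Branch coverage: {coverage_percent():.1f}%")  # Branch coverage: 66.7%
```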

64. What practices ensure comprehensive security testing?

Ans:

Comprehensive security testing involves identifying vulnerabilities across multiple layers: application, network, and data. It uses methods including code review, vulnerability scanning, and penetration testing. Incorporating security best practices and compliance standards ensures thorough coverage. Continuous security testing throughout the development lifecycle mitigates risks early on. Collaboration with security experts and leveraging automated tools enhance the detection and remediation of security flaws.

65. Explain the concept of Dependency Testing.

Ans:

- Dependency testing verifies interactions between components to ensure correct functionality.

- It identifies dependencies such as data flow, module interactions, and external integrations.

- By testing how changes in one component affect others, it validates system integrity and reliability.

- Dependency testing prevents cascading failures and enhances maintainability.

- Techniques include interface testing, integration testing, and stubs/mocks to isolate dependencies during testing.
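
Stubs and mocks let a component's dependencies be controlled during testing. A minimal sketch using Python's standard `unittest.mock`, where `OrderService` and its payment `gateway` are hypothetical: the gateway is replaced with a `Mock` so the test can check both the interaction itself and the component's behavior when the dependency reports a failure.

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical component that depends on an external payment gateway."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, amount: float) -> str:
        if amount <= 0:
            raise ValueError("amount must be positive")
        result = self.gateway.charge(amount)
        return "confirmed" if result["status"] == "ok" else "payment_failed"


# Stub the gateway so the test controls its responses and needs no network.
gateway = Mock()
gateway.charge.return_value = {"status": "ok"}
service = OrderService(gateway)
assert service.place_order(25.0) == "confirmed"
gateway.charge.assert_called_once_with(25.0)  # verify the interaction

# Simulate a failing dependency to check how the failure propagates.
gateway.charge.return_value = {"status": "declined"}
assert service.place_order(25.0) == "payment_failed"
```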

66. What approach is taken to handle testing for localization and internationalization?

Ans:

- Testing for localization involves adapting software for specific languages, cultural conventions, and local regulations.

- It validates language support, date/time formats, currency symbols, and legal requirements.

- Internationalization testing ensures software can be easily adapted to different languages and regions without code changes.

- It focuses on design and architecture that support global markets.

- Using localization testing tools, linguistic experts, and culturally diverse test data ensures accurate validation across target locales.
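
Part of internationalization testing can be automated by checking translation catalogs before linguists review them. The sketch below assumes hypothetical in-memory catalogs (real projects might load gettext `.po` or JSON files) and flags keys missing from a locale as well as placeholders renamed during translation, a common cause of runtime formatting errors.

```python
import re

# Hypothetical message catalogs keyed by locale.
CATALOGS = {
    "en": {"greeting": "Hello, {name}!", "cart_total": "Total: {amount}"},
    "de": {"greeting": "Hallo, {name}!", "cart_total": "Summe: {amount}"},
    "fr": {"greeting": "Bonjour, {nom} !"},
}

PLACEHOLDER = re.compile(r"\{(\w+)\}")


def find_i18n_issues(catalogs: dict, base: str = "en") -> list:
    """Compare every locale against the base locale's keys and placeholders."""
    issues = []
    for key, base_text in catalogs[base].items():
        expected = set(PLACEHOLDER.findall(base_text))
        for locale, messages in catalogs.items():
            if locale == base:
                continue
            if key not in messages:
                issues.append(f"{locale}: missing '{key}'")
            elif set(PLACEHOLDER.findall(messages[key])) != expected:
                issues.append(f"{locale}: placeholder mismatch in '{key}'")
    return issues


print(find_i18n_issues(CATALOGS))
# ["fr: placeholder mismatch in 'greeting'", "fr: missing 'cart_total'"]
```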

67. What is the role of Exploratory Testing in Agile methodologies?

Ans:

Exploratory Testing in Agile allows testers to explore the application dynamically, uncovering defects not easily detected by scripted tests. It promotes collaboration and quick feedback loops, aligning with Agile principles of flexibility and responsiveness. Testers use domain knowledge and user perspectives to guide testing efforts. It complements scripted testing by validating user workflows, edge cases, and usability aspects iteratively.

68. Describe the concept of Usability Testing and its importance.

Ans:

Usability testing evaluates how user-friendly and intuitive a software application is. It involves real users performing tasks to identify usability issues and gather feedback. The importance lies in enhancing user satisfaction, productivity, and adoption rates. Usability testing uncovers navigation issues, accessibility barriers, and design flaws early in the development lifecycle. Iterative testing and user-centered design improve overall user experience, ensuring software meets user expectations and business goals.

69. What methods are used to manage test data in automated testing?

Ans:

- Managing test data in automated testing involves creating, maintaining, and controlling data used in test scenarios.

- It includes data generation, manipulation, and cleanup to ensure consistency and reliability.

- Techniques like data masking and anonymization protect sensitive information during testing.

- Using data management tools and version control systems helps maintain data integrity across testing environments.
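
One common masking approach is sketched below, assuming emails and card numbers are the sensitive fields. Emails are replaced with a salted SHA-256 pseudonym, which is deterministic so the same person maps to the same test identity across tables, and card numbers keep only their last four digits. The salt value and output formats are illustrative assumptions; a real scheme should follow the organization's data-protection policy and keep the salt secret.

```python
import hashlib


def mask_email(email: str, salt: str) -> str:
    """Replace an email with a deterministic pseudonym that still looks like an email."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()[:12]
    return f"user_{digest}@example.test"


def mask_card(card_number: str) -> str:
    """Keep only the last four digits, a common masking convention."""
    digits = [c for c in card_number if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


record = {"email": "Jane.Doe@corp.com", "card": "4111 1111 1111 1234"}
masked = {
    "email": mask_email(record["email"], salt="test-env"),  # hypothetical salt
    "card": mask_card(record["card"]),
}
print(masked)
```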

70. Explain the concept of Continuous Testing in DevOps.

Ans:

- Continuous Testing integrates automated testing into the DevOps pipeline, ensuring quality across rapid development cycles.

- It validates every code change through automated tests, from unit tests to end-to-end scenarios.

- Continuous Testing accelerates feedback loops, enabling early detection of defects and reducing time-to-market.

- It supports continuous integration and deployment by verifying functionality, performance, and security.

71. What strategies are used to approach testing for a microservices architecture?

Ans:

In a microservices architecture, testing each service independently is crucial to ensure it functions correctly in isolation. Emphasis is placed on contract testing to validate interactions between services, and tools like Docker are used for containerized testing environments. Continuous integration and deployment (CI/CD) pipelines are essential for automating testing across microservices. Performance testing is also conducted to verify scalability and resilience under varying loads, ensuring each service can handle its expected workload.
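
Contract testing is usually done with dedicated tools such as Pact. As a simplified illustration, the sketch below assumes a hypothetical consumer that reads `id`, `email`, and `active` from a user service and checks a provider response against those expectations. Extra fields are tolerated, since the consumer should only depend on what it actually reads.

```python
# Hypothetical consumer-side contract: fields the consumer reads and their expected types.
USER_CONTRACT = {"id": int, "email": str, "active": bool}


def verify_contract(response: dict, contract: dict) -> list:
    """Return contract violations; an empty list means the provider honors the contract."""
    violations = []
    for field, expected_type in contract.items():
        if field not in response:
            violations.append(f"missing field '{field}'")
        elif not isinstance(response[field], expected_type):
            violations.append(
                f"'{field}' should be {expected_type.__name__}, "
                f"got {type(response[field]).__name__}"
            )
    return violations


# The provider returns a string where the consumer expects a boolean.
provider_response = {"id": 42, "email": "a@example.test", "active": "yes", "plan": "pro"}
print(verify_contract(provider_response, USER_CONTRACT))
# ["'active' should be bool, got str"]
```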

72. What are the key metrics used to measure test effectiveness?

Ans:

Test effectiveness is often measured by metrics such as test coverage, defect detection rate, and test execution efficiency. I track code coverage to ensure critical paths are tested, aiming for a balanced approach between statement, branch, and path coverage. The defect detection rate helps gauge how early and efficiently defects are identified in the testing process. Test execution efficiency measures how quickly tests run and provide feedback.
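
The defect detection rate is often expressed as the defect detection percentage (DDP): defects found during testing divided by all known defects, including those reported after release. A minimal sketch, with illustrative counts rather than real project data:

```python
def defect_detection_percentage(found_in_testing: int, found_after_release: int) -> float:
    """Share of all known defects that testing caught before release."""
    total = found_in_testing + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return 100 * found_in_testing / total


print(defect_detection_percentage(found_in_testing=90, found_after_release=10))  # 90.0
```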

73. Describe the concept of Static and Dynamic Analysis in Testing.

Ans:

- Static analysis involves reviewing code and documentation without executing it, using tools to detect issues like coding standards violations or potential bugs.

- It helps identify problems early in the development process.

- Dynamic analysis, on the other hand, involves executing code to observe its behavior under different conditions.

- This includes testing functionalities, performance, and security vulnerabilities.

- Both static and dynamic analysis are integral to comprehensive software testing, providing complementary insights into code quality and behavior.
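
Python's standard `ast` module makes the static side of this distinction concrete: the sketch below parses source code and flags two illustrative issues (bare `except` clauses and calls to `eval`) without ever executing it. The checks are examples chosen for this sketch; linters such as pylint, flake8, or Bandit cover far more rules.

```python
import ast

# Code under review; it is only parsed, never run.
SOURCE = '''
def load(path):
    try:
        return eval(open(path).read())
    except:
        return None
'''


def find_issues(source: str) -> list:
    """Inspect the syntax tree for risky patterns without running the code."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            issues.append(f"line {node.lineno}: bare 'except' hides errors")
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name) and node.func.id == "eval":
            issues.append(f"line {node.lineno}: call to eval() is a security risk")
    return issues


print(find_issues(SOURCE))
```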

74. What techniques are employed to perform usability testing for a mobile application?

Ans:

- Usability testing for mobile apps involves evaluating the app’s user interface (UI) design, navigation, and overall user experience (UX).

- Conduct tests with target users to gather feedback on ease of use, intuitiveness, and task efficiency.

- The tasks are designed to cover typical user interactions, focusing on navigation flow, responsiveness to gestures, and accessibility features.

- Use usability testing tools to record sessions and analyze user behavior and preferences.

75. Explain the concept of Cross-browser Testing and its challenges.

Ans:

Cross-browser testing verifies that web applications perform consistently across different web browsers and versions. Challenges include handling browser-specific behaviors, CSS compatibility issues, and differences in JavaScript execution. Use automated testing tools to run test scripts across multiple browsers in parallel, identifying and resolving visual discrepancies or functional inconsistencies.

76. What is the role of Test Metrics in software testing?

Ans:

Test metrics provide quantitative insights into various aspects of the testing process, helping assess quality, progress, and efficiency. They track key performance indicators such as test coverage, defect density, and test execution time. These metrics facilitate data-driven decisions, enabling teams to prioritize testing efforts, allocate resources effectively, and identify areas needing improvement. By monitoring trends over time, test metrics also support benchmarking and setting realistic quality goals.

77. What strategies ensure test coverage in a complex software system?

Ans:

- Ensuring comprehensive test coverage in a complex system involves a systematic approach to identifying critical paths, functionalities, and integration points.

- Begin by mapping requirements to test cases and prioritizing high-risk areas and business-critical functionalities.

- Test coverage matrices help visualize gaps and ensure all scenarios, including edge cases and error conditions, are tested.

- Techniques such as equivalence partitioning and boundary value analysis are employed to maximize coverage without duplicating efforts.
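
Boundary value analysis and equivalence partitioning can be generated mechanically. The sketch assumes a hypothetical requirement that an age field accepts 18 to 65 inclusive; it produces values on and around each edge plus one representative per equivalence class, so nine inputs stand in for the whole integer range.

```python
def boundary_values(low: int, high: int) -> list:
    """Values just below, on, and just above each edge of an inclusive range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def equivalence_representatives(low: int, high: int) -> list:
    """One value from each class: below the range, inside it, above it."""
    return [low - 10, (low + high) // 2, high + 10]


def is_valid_age(age: int) -> bool:
    """Hypothetical validator under test: accepts ages 18 to 65 inclusive."""
    return 18 <= age <= 65


inputs = boundary_values(18, 65) + equivalence_representatives(18, 65)
print(inputs)  # [17, 18, 19, 64, 65, 66, 8, 41, 75]
print([is_valid_age(v) for v in inputs])
# [False, True, True, True, True, False, False, True, False]
```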

78. Describe the concept of Gorilla Testing.

Ans:

- Gorilla testing focuses on repeatedly and intensively testing specific components or functionalities within a system to evaluate their robustness and performance under extreme conditions.

- It involves exercising the same components over and over, often with unusually high loads or inputs beyond normal operational limits.

- The goal is to identify potential weaknesses, such as memory leaks or performance bottlenecks, that may not surface during regular testing.

- Gorilla testing complements other testing methods by targeting specific areas of concern, providing insights into system resilience and scalability under challenging scenarios.

79. What approach is taken to handle testing for a real-time system?

Ans:

Testing real-time systems requires validating response times, data accuracy, and system reliability under time-critical conditions. Test scenarios are designed to simulate real-time events and interactions, focusing on latency and data synchronization across distributed components. Performance testing ensures the system meets its response-time requirements while handling concurrent transactions and data processing in real time.

80. Explain the concept of Recovery Testing and its objectives.

Ans:

Recovery testing verifies how well a system can recover from hardware failures, software crashes, or unexpected interruptions. It aims to ensure data integrity, minimize downtime, and maintain system availability. I simulate failure scenarios, such as power outages or network disruptions, to observe how the system handles these events. Recovery procedures are tested to validate backup and restoration mechanisms, ensuring data consistency and operational continuity.

81. What is the importance of the Traceability Matrix in software testing?

Ans:

- A Traceability Matrix links requirements to test cases, ensuring each requirement is validated through tests.

- It helps maintain test coverage and ensures all requirements are addressed in the testing phases.

- This matrix also facilitates impact analysis, allowing teams to understand the scope of changes and their testing implications.

- Moreover, it serves as a documentation artifact, aiding in audits and compliance checks. Overall, a Traceability Matrix enhances transparency, traceability, and the reliability of the testing process.
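
A traceability matrix can be as simple as a mapping from requirements to the tests that cover them. The sketch below uses hypothetical requirement and test case IDs, flags requirements with no tests, and shows the impact-analysis lookup: which tests to rerun when a requirement changes.

```python
# Hypothetical requirement IDs and the requirements each test case covers.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
test_cases = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-1", "REQ-2"],
    "TC-103": ["REQ-2"],
}


def build_matrix(requirements: list, test_cases: dict) -> dict:
    """Map each requirement to the test cases that cover it."""
    matrix = {req: [] for req in requirements}
    for tc, covered in test_cases.items():
        for req in covered:
            matrix.setdefault(req, []).append(tc)
    return matrix


matrix = build_matrix(requirements, test_cases)
uncovered = [req for req, tcs in matrix.items() if not tcs]
print(uncovered)        # ['REQ-3']
print(matrix["REQ-2"])  # tests to rerun if REQ-2 changes: ['TC-102', 'TC-103']
```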

82. What methods are used to handle performance bottlenecks during testing?

Ans:

- Identifying performance bottlenecks involves profiling the application to pinpoint resource-intensive areas.

- Utilizing tools like profilers and performance monitors helps gather data on CPU, memory, and I/O usage.

- Once identified, bottlenecks are addressed through optimization techniques such as code refactoring, caching, or scaling resources.

- Re-running load or stress tests under simulated conditions verifies the improvements. Continuous monitoring post-deployment ensures sustained performance.
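
Python's built-in `cProfile` and `pstats` modules are one way to locate a bottleneck. In this sketch, `slow_lookup` is a deliberately inefficient hypothetical function (list membership inside a loop) and `fast_lookup` is the refactored version; the profile report points at the slow code, and the final check confirms the optimization does not change the result.

```python
import cProfile
import io
import pstats


def slow_lookup(items, targets):
    # Deliberate bottleneck: membership on a list is O(n) per lookup.
    return sum(1 for t in targets if t in items)


def fast_lookup(items, targets):
    # Refactored version: a set gives average O(1) membership tests.
    item_set = set(items)
    return sum(1 for t in targets if t in item_set)


def profile(func, *args):
    """Run func under the profiler and return (result, top-5 report by cumulative time)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    stream = io.StringIO()
    pstats.Stats(profiler, stream=stream).sort_stats("cumulative").print_stats(5)
    return result, stream.getvalue()


items = list(range(5_000))
targets = list(range(0, 10_000, 7))
slow_result, report = profile(slow_lookup, items, targets)
print(report)
assert fast_lookup(items, targets) == slow_result  # same answer after optimization
```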

83. Describe the concept of Mutation Testing.

Ans:

Mutation Testing involves making small, deliberate modifications (mutations) to code and running the existing tests to check whether they detect these changes. It evaluates the effectiveness of the test suite by measuring its ability to catch introduced faults. Mutants that cause a test to fail are "killed", indicating strong tests, while surviving mutants highlight weaknesses in the suite. Although resource-intensive, Mutation Testing provides valuable insights into test suite quality and overall software reliability.
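
Mutation tools such as PIT (Java) or mutmut (Python) generate mutants automatically. The hand-written sketch below shows the idea on a hypothetical `is_adult` function: two mutants each change one comparison operator, and the mutation score is the fraction of mutants a test suite kills. The weak suite never checks the boundary, so the `>` mutant survives.

```python
def is_adult(age: int) -> bool:
    return age >= 18


# Hand-made mutants: each changes one operator, as a mutation tool would.
MUTANTS = {
    ">= changed to >": lambda age: age > 18,
    ">= changed to <=": lambda age: age <= 18,
}


def weak_suite(fn) -> bool:
    """Passes if fn gives the expected answers for the inputs this suite checks."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn) -> bool:
    # Adds the boundary case, which the '>' mutant gets wrong.
    return weak_suite(fn) and fn(18) is True


for name, suite in [("weak", weak_suite), ("strong", strong_suite)]:
    assert suite(is_adult)  # the original code must pass
    killed = [m for m, mutant in MUTANTS.items() if not suite(mutant)]
    print(f"{name} suite: mutation score {len(killed)}/{len(MUTANTS)}")
# weak suite: mutation score 1/2
# strong suite: mutation score 2/2
```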

84. What practices are followed to manage regression testing in an Agile environment?

Ans:

In Agile, regression testing is integrated into every sprint to verify new features haven’t caused unintended issues. Test automation is pivotal, ensuring quick execution of regression tests across iterative builds. Prioritizing tests based on risk and impact helps manage time constraints effectively. Continuous integration and frequent minor releases aid in early defect detection, minimizing regression scope. Collaboration between developers and testers ensures comprehensive coverage and faster feedback loops.

85. Explain the concept of Test Driven Development (TDD).

Ans:

- Test Driven Development (TDD) involves writing automated tests before writing the actual code.

- Tests initially fail because the behavior they define has not been implemented yet. Developers then write the minimum code required to pass these tests, subsequently refactoring to improve code quality.

- TDD promotes better design, reduces defects early in the development cycle, and provides clear criteria for code completion.

- It encourages modular and testable code, fostering agility and improving overall software maintainability.
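
The red-green-refactor cycle can be illustrated with Python's standard `unittest`. The `slugify` function is a hypothetical example: the two tests are written first (and fail, since `slugify` does not exist yet), then the simplest implementation that passes them is added.

```python
import unittest


# Step 1 (red): the tests come first and describe the expected behavior.
class TestSlugify(unittest.TestCase):
    def test_lowercases_and_joins_words(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_strips_surrounding_whitespace(self):
        self.assertEqual(slugify("  Agile Testing  "), "agile-testing")


# Step 2 (green): the minimum implementation that makes both tests pass.
# Step 3 (refactor): improve the code while keeping the tests green.
def slugify(text: str) -> str:
    return "-".join(text.lower().split())
```

Saved as a module, the tests can be run with `python -m unittest`.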

86. What is the role of Exploratory Testing in uncovering hidden defects?

Ans:

- Exploratory Testing involves simultaneous test design and execution, focusing on discovery rather than validation.

- Testers dynamically investigate the application, modifying their strategy in response to observations made in real-time.

- This method uncovers defects missed by scripted tests, leveraging tester intuition and domain knowledge.

- Exploratory Testing is effective in complex or rapidly changing environments where scripted tests may be insufficient.

87. What methods are used to perform backward compatibility testing?

Ans:

Backward Compatibility Testing ensures that current software remains functional and interoperable with older versions. It involves testing newer versions against previous configurations, data formats, and APIs. Automated and manual tests validate functionality, data integrity, and system performance across backward-compatible scenarios. Compatibility matrices and version control systems guide testing scope and coverage.
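
A common backward-compatibility check keeps data fixtures written by each released version and verifies that the current code still reads them. The sketch assumes a hypothetical profile format whose version 1 stored a single `name` field and whose version 2 split it into `first_name` and `last_name`.

```python
import json


def load_profile(raw: str) -> dict:
    """Current reader that must still accept data written by older versions."""
    data = json.loads(raw)
    version = data.get("schema_version", 1)  # v1 files predate the version field
    if version == 1:
        # v1 stored the full name in a single field.
        first, _, last = data["name"].partition(" ")
        return {"first_name": first, "last_name": last}
    if version == 2:
        return {"first_name": data["first_name"], "last_name": data["last_name"]}
    raise ValueError(f"unsupported schema_version {version}")


# Fixtures captured from each released version act as regression data.
FIXTURES = {
    "v1": '{"name": "Ada Lovelace"}',
    "v2": '{"schema_version": 2, "first_name": "Ada", "last_name": "Lovelace"}',
}
for version, raw in FIXTURES.items():
    assert load_profile(raw) == {"first_name": "Ada", "last_name": "Lovelace"}, version
print("all legacy formats load correctly")
```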

88. Describe the concept of Benchmark Testing and its benefits.

Ans:

Benchmark Testing evaluates system performance by comparing it against established standards or competitors. It involves measuring key performance indicators (KPIs) like response times, throughput, and scalability under controlled conditions. Benchmarks establish performance baselines, aiding in performance tuning, capacity planning, and SLA compliance. They validate architectural decisions and highlight areas for improvement.
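
Python's standard `timeit` module supports simple benchmark comparisons against a baseline. The two string-building functions below are illustrative stand-ins for a baseline and a candidate implementation; taking the minimum of several repeats, as the `timeit` documentation suggests, reduces noise from other processes. Absolute numbers depend on the machine, so results are only meaningful when compared under the same controlled conditions.

```python
import timeit


def join_with_plus(n: int) -> str:
    # Baseline: repeated string concatenation.
    s = ""
    for i in range(n):
        s += str(i)
    return s


def join_with_join(n: int) -> str:
    # Candidate: build the string in one join.
    return "".join(str(i) for i in range(n))


def benchmark(func, n=1_000, repeat=5, number=200) -> float:
    """Best-of-`repeat` time per call, in microseconds."""
    timings = timeit.repeat(lambda: func(n), repeat=repeat, number=number)
    return min(timings) / number * 1e6


baseline = benchmark(join_with_plus)
candidate = benchmark(join_with_join)
print(f"baseline: {baseline:.1f} us/call, candidate: {candidate:.1f} us/call")
```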

89. What strategies are employed to maintain test cases over time?

Ans:

- Ensuring test case maintainability involves using clear, standardized naming conventions and documentation.

- Regular reviews and refactoring of test cases keep them relevant and efficient.

- Test automation frameworks that support modularization and parameterization make updates easier to manage.

- Version control systems track changes and provide historical context. Collaboration between testers and developers promotes shared ownership and alignment with evolving requirements.

90. Explain the concept of Compliance Testing.

Ans:

Compliance Testing verifies software adherence to regulatory standards, industry guidelines, or internal policies. It ensures legal and security requirements are met throughout the development lifecycle. Test cases are designed based on specific compliance criteria, covering areas like data protection, accessibility, and privacy. Compliance audits and certifications validate adherence, ensuring software reliability and user trust.

91. What is the role of the Test Environment in software testing?

Ans:

A Test Environment replicates production conditions for testing software changes without impacting live systems. It provides controlled settings for functional, performance, and integration testing. Configuration management ensures environment consistency across testing phases. Test data management ensures realistic scenarios are simulated. Efficient environment provisioning and maintenance support agile development cycles. Monitoring tools track resource usage and identify bottlenecks.

92. What approach is taken to handle testing for a highly scalable system?

Ans:

Testing for scalability involves simulating varying loads and stress conditions to assess system performance under increasing demands. Performance testing validates response times, throughput, and resource usage at peak loads. Horizontal and vertical scaling scenarios are tested to ensure seamless expansion. Auto-scaling mechanisms and load balancers are validated for effectiveness. Realistic production-like environments and data volumes ensure accurate results.