These Manual Testing Interview Questions and Answers, offered by our ACTE institute, are a resource for candidates preparing for interviews in manual testing. The questions cover fundamental concepts and practical scenarios, and the answers explain the key principles and methodologies behind them. The material is intended for both entry-level candidates and experienced professionals who want to strengthen their knowledge of manual testing processes before an interview.

1. What is manual testing, and why is it important?

Ans:

Manual testing is a process where testers execute test cases without the use of automated testing tools. It is important because it brings human intuition to bear, uncovers usability issues, and supports exploratory testing, all of which automated testing may miss.

2. What benefits does manual testing offer?

Ans:

- Exploratory Testing: Testers can creatively explore the software, uncovering unexpected defects.

- User Perspective: Testers can think like end-users, ensuring the software meets user expectations.

- Cost-Effective: Manual testing is often more cost-effective for small projects or short-term testing needs.

- Adaptability: Testers can easily adapt to changes in requirements or the software itself.

3. What is the software testing life cycle?

Ans:

STLC, or Software Testing Life Cycle, unfolds as a structured sequence encompassing distinct phases such as requirements analysis, test planning, test design, test execution, defect reporting, and test closure. This methodical approach ensures a comprehensive and systematic testing process for software applications, emphasizing the importance of thorough testing at each stage.

4. What is a test case, and how do you write one?

Ans:

A test case is a set of conditions or steps designed to verify the functionality of a software component. To write one, define the test objective, prerequisites, steps to execute, expected results, and any additional information needed for the tester to perform the test.
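
As a rough illustration of the parts listed above, a test case can be sketched as a small record. The class name `ManualTestCase`, its field names, and the login scenario are assumptions made for this example, not a standard template.

```python
from dataclasses import dataclass


@dataclass
class ManualTestCase:
    """A manual test case record; the field names are illustrative only."""
    case_id: str
    objective: str
    prerequisites: list[str]
    steps: list[str]
    expected_result: str
    notes: str = ""

    def render(self) -> str:
        """Format the test case as plain text a tester can follow."""
        lines = [f"{self.case_id}: {self.objective}", "Prerequisites:"]
        lines += [f"  - {item}" for item in self.prerequisites]
        lines.append("Steps:")
        lines += [f"  {number}. {step}" for number, step in enumerate(self.steps, start=1)]
        lines.append(f"Expected result: {self.expected_result}")
        if self.notes:
            lines.append(f"Notes: {self.notes}")
        return "\n".join(lines)


# Hypothetical login scenario used only to show the structure.
login_case = ManualTestCase(
    case_id="TC-001",
    objective="Verify login with valid credentials",
    prerequisites=["A registered user account exists"],
    steps=[
        "Open the login page",
        "Enter a valid username and password",
        "Click Log in",
    ],
    expected_result="The user lands on the dashboard",
)
print(login_case.render())
```

Keeping the expected result as its own field makes the pass/fail decision explicit when the tester executes the steps.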

5. Describe the process of regression testing.

Ans:

Regression testing is the practice of rerunning existing test cases to verify that recent code modifications have not introduced new defects and have not adversely affected previously functional features. This systematic process plays a crucial role in preserving software quality by ensuring that updates or changes do not compromise the integrity of the existing codebase.

6. What is boundary testing, and why is it useful?

Ans:

Boundary testing is a technique that assesses a software application at the extremes of its input domain. By focusing on the minimum and maximum input values, this testing method is valuable in uncovering potential issues and vulnerabilities that might exist at the edges of the application’s capabilities. The aim is to identify and address errors that may arise when the software operates at the boundaries of its specified input range.

7. Explain the concept of black-box testing.

Ans:

Black-box testing is a methodology that evaluates a software application without knowledge of its internal code or logic. Testers focus solely on inputs and the corresponding outputs, emphasizing the software’s user experience and overall behavior.

8. What is the purpose of exploratory testing?

Ans:

Exploratory testing is an unscripted and intuitive testing approach where testers explore the software without predefined test cases. The primary purpose of this method is to uncover unexpected defects, identify usability issues, and assess the overall user experience. By encouraging testers to navigate through the application dynamically and use their domain knowledge, exploratory testing aims to simulate real-world user interactions.

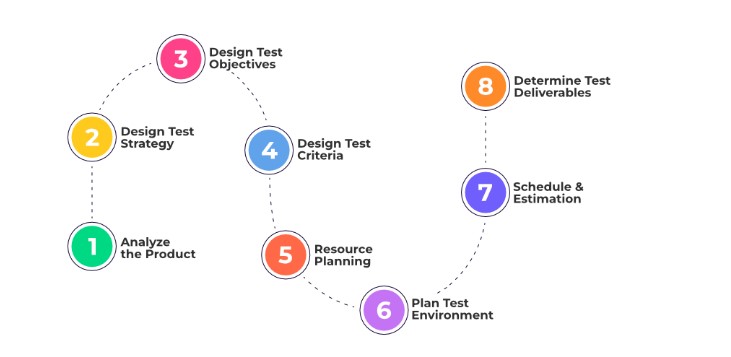

9. What are the important elements of a test plan?

Ans:

A test plan encompasses critical components such as test objectives, scope, test strategies, resource allocation, schedule, test environment details, entry/exit criteria, and anticipated deliverables. Functioning as a comprehensive roadmap, the test plan outlines the entire testing process, providing a structured guide for executing and managing testing activities throughout the software development lifecycle.

10. What does “functional testing” mean?

Ans:

Functional testing is a type of software testing that validates if a system’s functions operate as intended. It involves checking whether the software adheres to its specified requirements and assessing its performance in real-world scenarios. This testing method focuses on verifying the functionality of the software components to ensure that they meet the expected outcomes and deliver the intended user experience.

11. What is the importance of test data in manual testing?

Ans:

- Test data simulates real-world scenarios.

- Validates how the software behaves under different conditions.

- Ensures thorough testing of functionality.

- Helps uncover defects and issues.

- Enhances the quality and reliability of testing.

12. How do you handle defect reporting and tracking?

Ans:

Defect reporting involves documenting and describing any issues discovered during testing, while defect tracking ensures issues are monitored, prioritized, and resolved. Effective defect management is essential for delivering a high-quality product.

13. Describe the difference between positive and negative testing.

Ans:

Positive testing validates the system under valid input conditions, ensuring it functions as expected. Negative testing assesses the system’s handling of invalid or unexpected inputs and error scenarios. Both types of testing contribute to a comprehensive strategy, ensuring software reliability and robustness. Positive testing verifies correct behavior, while negative testing uncovers potential vulnerabilities.
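
The difference can be sketched with a small example. The function `parse_age` and its 0 to 120 rule are hypothetical stand-ins for a feature under test; the point is only that positive checks feed valid input and negative checks feed invalid input.

```python
def parse_age(text: str) -> int:
    """Hypothetical function under test: accepts whole numbers from 0 to 120."""
    value = int(text)  # raises ValueError for non-numeric text
    if not 0 <= value <= 120:
        raise ValueError(f"age out of range: {value}")
    return value


def rejects(text: str) -> bool:
    """Return True if parse_age refuses the input with a ValueError."""
    try:
        parse_age(text)
    except ValueError:
        return True
    return False


# Positive tests: valid input should produce the expected value.
positive_results = [
    parse_age("0") == 0,
    parse_age("35") == 35,
    parse_age("120") == 120,
]

# Negative tests: invalid input should be rejected, not silently accepted.
negative_results = [rejects(bad_input) for bad_input in ["-1", "121", "abc", ""]]

print("positive checks passed:", all(positive_results))
print("negative checks passed:", all(negative_results))
```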

14. Distinguish between smoke testing and sanity testing.

Ans:

| Aspect | Smoke Testing | Sanity Testing |
| --- | --- | --- |
| Objective | To check if the essential functionalities work. | To verify specific features after changes or fixes. |
| Scope | Broad and covers major components of the system. | Narrow and focuses on specific functionalities. |
| Depth | Shallow testing, ensuring basic functionalities. | Deeper testing to verify specific features. |
| Extensiveness | Limited in terms of test cases and scenarios. | More extensive, covering specific use cases. |
| Dependency | Performed before detailed testing phases. | Performed after major changes or bug fixes. |
| Example | Verifying basic functions like login and navigation. | Checking if a specific feature like payment processing works after a bug fix. |

15. Describe the concept of end-to-end testing.

Ans:

End-to-end testing is a comprehensive software testing approach that evaluates the entire software system from start to finish. It simulates real-world scenarios to ensure that all components and subsystems of the software work together as expected.

16. What’s the difference between validation and verification in software testing?

Ans:

Verification:

This process assesses whether the software product meets specified design and development requirements. It involves activities such as code reviews, static analysis, and unit testing.

Validation:

Validation determines whether the program satisfies the requirements and expectations of the user. To make sure the program serves the intended purpose, it uses dynamic testing approaches such as functional testing, system testing, and acceptance testing.

17. What is usability testing, and why is it necessary?

Ans:

Usability testing is a method of assessing a system’s user-friendliness by observing real users interact with it. This testing approach is crucial to guarantee that the software aligns with user expectations, is easy to navigate, and delivers an optimal user experience. By directly observing users engaging with the system, usability testing provides valuable insights into areas for improvement.

18. What are the advantages and disadvantages of exploratory testing compared to scripted testing?

Ans:

Advantages:

- Flexibility: Exploratory testing allows testers to adapt and improvise, responding to issues as they discover them.

- Realistic Scenarios: Testers can replicate real user experiences and identify unforeseen issues.

- Quick Start: Minimal preparation is required, making it suitable for rapid testing cycles.

Disadvantages:

- Lack of Reproducibility: It can be challenging to reproduce test cases consistently.

- Limited Documentation: Less formal documentation compared to scripted testing.

- Varied Coverage: The coverage may not be as comprehensive as scripted tests.

19. How do you perform compatibility testing?

Ans:

- Test software on different devices, browsers, and OS versions.

- Ensure the application functions across various platforms.

- Verify proper rendering and functionality.

- Identify compatibility issues and browser-specific bugs.

- Ensure a consistent user experience.

20. What is ad-hoc testing, and when is it used?

Ans:

Ad-hoc testing is an informal and unstructured testing approach where testers explore the software without predefined test cases. It’s typically used when there’s limited time or documentation, and testers need to identify critical issues quickly.

21. Explain the concept of risk-based testing.

Ans:

Risk-based testing is a testing approach that prioritizes testing efforts based on the perceived risks within the software. In this approach, high-risk areas are subjected to more rigorous testing to ensure that critical issues are identified and addressed early in the development process.

22. What is the role of a test environment in manual testing?

Ans:

A test environment is a controlled setup designed for conducting manual testing. It is configured to mimic the production environment, ensuring that testing accurately reflects how the software will perform under real-world conditions. This controlled environment allows testers to interact with the software, assess its functionality, and validate its behavior in a manner that closely resembles the actual operational environment.

23. Describe the importance of test coverage in testing.

Ans:

Test coverage is a metric that gauges the extent to which testing has exercised various aspects of the software. It helps ensure that different parts of the software have been tested, providing a quantitative measure of the thoroughness of the testing process. The goal of test coverage is to increase confidence by verifying that critical functionalities, code segments, and scenarios have been tested, reducing the likelihood that defects will go unnoticed.

24. Describe the process of root cause analysis for defects identified during testing.

Ans:

- Defect Identification: Log and document defects as they are discovered during testing.

- Data Collection: Gather information about the defect, its symptoms, and the circumstances under which it occurred.

- Isolation: Isolate the defect to determine the specific component or process where it originates.

- Analysis: Investigate the root cause by examining the code, system behavior, and relevant data.

- Resolution: Once the root cause is identified, implement necessary corrective actions or code fixes.

25. What is the significance of test prioritization?

Ans:

Test prioritization is a testing strategy that involves ensuring that critical and high-risk test cases are executed first in the testing process. This approach aims to uncover important defects early in the testing lifecycle. By prioritizing critical and high-risk scenarios, testing teams focus on areas of the software that are more likely to harbor severe issues.

26. How do you ensure the security of an application during testing?

Ans:

Security testing employs various techniques, such as penetration testing and code analysis, to identify vulnerabilities in an application and enhance its defenses against potential threats. The goal of security testing is to identify and rectify vulnerabilities, ensuring that the application is resilient to potential security threats and adheres to established security standards.

27. Can you describe the different types of performance testing?

Ans:

- Load Testing

- Stress Testing

- Scalability Testing

- Endurance Testing

- Spike Testing

- Volume Testing

28. What is the purpose of a test case design technique like equivalence partitioning?

Ans:

Equivalence partitioning is a testing technique that aids in the creation of efficient test cases by dividing input data into classes with similar characteristics. The goal is to ensure comprehensive test coverage while minimizing redundancy. By grouping inputs that are expected to behave similarly, equivalence partitioning allows testers to select representative test cases from each partition, rather than testing every possible input individually.
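
A minimal sketch of the idea, assuming a hypothetical input field that accepts ages from 18 to 60 inclusive. The partition names, the 0 to 120 overall range, and the choice of the middle value as representative are all assumptions for illustration.

```python
# Hypothetical rule: the field accepts ages from 18 to 60 inclusive.
PARTITIONS = {
    "below_range (invalid)": range(0, 18),
    "in_range (valid)": range(18, 61),
    "above_range (invalid)": range(61, 121),
}


def is_accepted(age: int) -> bool:
    """Stand-in for the behavior under test."""
    return 18 <= age <= 60


def representative(values: range) -> int:
    """Pick the middle value of a partition as its single representative."""
    return values[len(values) // 2]


# One test input per partition instead of all 121 possible ages.
representatives = {name: representative(values) for name, values in PARTITIONS.items()}

for name, age in representatives.items():
    print(f"{name}: test with {age}, accepted={is_accepted(age)}")
```

Three inputs stand in for the whole input domain here; boundary value analysis would then add the values at the edges of each partition.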

29. What problems can you encounter when doing manual testing?

Ans:

- Human errors.

- Slower execution.

- Difficulty replicating scenarios.

- Limited scalability.

30. How do you ensure test traceability and coverage in manual testing?

Ans:

In manual testing, ensuring traceability and coverage is necessary to maintain the quality of the software being tested. This involves linking test cases to requirements and tracking the extent to which the software has been tested. Here are some strategies and techniques:

- Requirements Traceability Matrix (RTM)

- Test Case Management Tools

- Test Coverage Metrics

- Test Case Prioritization

31. What is the significance of the Test Execution phase in manual testing?

Ans:

The Test Execution phase is a critical step in manual testing where test cases are executed, defects are identified, and the application’s functionality is verified against expected outcomes. During this phase, testers actively engage with the software to assess its behavior and ensure that it aligns with the specified requirements. Test Execution plays a pivotal role in validating the application’s overall functionality and performance in real-world scenarios.

32. What is the PDCA cycle, and what does it do?

Ans:

The PDCA (Plan-Do-Check-Act) cycle is a continuous improvement framework used in testing to systematically plan, execute, evaluate, and adjust testing processes. In the plan phase, testing objectives and strategies are established. The do phase involves carrying out the planned testing activities. The check phase entails evaluating the outcomes and comparing them against the established criteria. The act phase applies corrective adjustments based on that evaluation before the cycle repeats. The PDCA cycle promotes a proactive approach to refining testing practices and achieving better outcomes over time.

33. What are the important tests to do when checking a website?

Ans:

- Functionality testing.

- Usability assessment.

- Performance evaluation.

- Security analysis.

- Compatibility checks.

34. How do you handle test dependencies and test case prioritization?

Ans:

Managing test dependencies involves arranging test cases in a sequence based on their interdependencies. This ensures that tests are executed in a logical order, considering any dependencies between them. On the other hand, test case prioritization is a strategy that involves ensuring critical tests are executed first. This prioritization allows testing teams to identify major issues early in the testing process, addressing high-priority test cases before proceeding to others.
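
One way to combine both ideas is to order tests so that prerequisites always run first and, among the tests that are ready, the most critical one goes next. The sketch below uses Python's standard `graphlib.TopologicalSorter`; the test names, priorities, and the convention that a lower number means more critical are assumptions for this example.

```python
import heapq
from graphlib import TopologicalSorter

# Hypothetical test cases: name -> (priority, prerequisites).
# A lower priority number means a more critical test.
TESTS = {
    "login": (1, []),
    "add_to_cart": (2, ["login"]),
    "checkout": (1, ["add_to_cart"]),
    "update_profile": (3, ["login"]),
    "view_help_page": (3, []),
}


def execution_order(tests):
    """Order tests so prerequisites run first, most critical ready test next."""
    sorter = TopologicalSorter({name: deps for name, (_, deps) in tests.items()})
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    ready = []  # min-heap of (priority, name) for tests whose prerequisites are done
    order = []
    while sorter.is_active():
        for name in sorter.get_ready():
            heapq.heappush(ready, (tests[name][0], name))
        _, name = heapq.heappop(ready)
        order.append(name)
        sorter.done(name)  # unlocks tests that depend on this one
    return order


print(execution_order(TESTS))
```

In this sketch `checkout` runs before the lower-priority `update_profile` and `view_help_page`, but never before its prerequisite `add_to_cart`.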

35. Describe the difference between manual testing and automated testing.

Ans:

Manual testing relies on human testers to execute test cases, where testers interact with the software, providing inputs and verifying outputs to validate its functionality. In contrast, automated testing utilizes scripts and testing tools to perform tests. Automated testing is faster, repeatable, and can execute a large number of test cases in a shorter time frame. While manual testing offers the advantage of human intuition and exploratory testing, automated testing enhances efficiency and is well-suited for repetitive tasks in the software testing process.

36. What is the role of a test plan in the testing process?

Ans:

A test plan is a comprehensive document that outlines the testing strategy, objectives, scope, and resources for a software testing project. It serves as a roadmap for the entire testing process, providing a structured and organized approach to testing activities. The test plan defines the goals and priorities of testing, the scope of testing efforts, the resources required, and the schedule for execution.

37. What is the importance of boundary value analysis in testing?

Ans:

Boundary value analysis is crucial in testing as it helps identify potential issues at the edges or boundaries of input domains. This technique focuses on testing values at or near the boundaries of valid input ranges, as these are more likely to cause defects. By testing boundary values, testers can uncover issues like off-by-one errors, overflows, and other boundary-related problems, leading to more robust and effective test coverage and, ultimately, better software quality.

38. How do you perform data-driven testing?

Ans:

Data-driven testing is a technique that involves executing the same test script with multiple sets of data. To perform data-driven testing:

- Prepare a set of test data with different input values and expected outcomes.

- Modify your test script to accept input data from an external source, like a spreadsheet or database.

- Automate the execution of the test script with various data sets.

- Compare the actual results with expected outcomes and report any discrepancies.
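
The steps above can be sketched as follows. The inline CSV text stands in for an external spreadsheet or database, and `order_total` is a hypothetical function under test; both are assumptions made to keep the example self-contained.

```python
import csv
import io

# Inline stand-in for an external data source (hypothetical values).
TEST_DATA = """price,quantity,expected_total
10.00,3,30.00
2.50,4,10.00
0.99,0,0.00
"""


def order_total(price: float, quantity: int) -> float:
    """Hypothetical function under test."""
    return round(price * quantity, 2)


def run_data_driven(csv_text: str):
    """Run the same check once per data row and record PASS or FAIL."""
    results = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        actual = order_total(float(row["price"]), int(row["quantity"]))
        expected = float(row["expected_total"])
        status = "PASS" if actual == expected else "FAIL"
        results.append((row["price"], row["quantity"], actual, status))
    return results


for price, quantity, actual, status in run_data_driven(TEST_DATA):
    print(f"price={price} quantity={quantity} actual={actual} -> {status}")
```

Adding a new scenario then means adding a row of data rather than writing a new test script.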

39. What is the purpose of a test summary report?

Ans:

A test summary report is a document that offers a concise overview of the testing process, results, and significant findings. Its purpose is to provide stakeholders with a clear understanding of the testing status, the overall quality of the software, and any potential issues or concerns that emerged during the testing phase.

40. How do you validate user interfaces during manual testing?

Ans:

To validate user interfaces during manual testing, testers review and interact with the UI to ensure it meets design specifications and user expectations. They verify elements like layout, functionality, responsiveness, and usability, reporting any deviations from the expected behavior.

41. Describe the role of a test environment and test data in test execution.

Ans:

A test environment provides the necessary infrastructure, hardware, and software for test execution, while test data includes input values and conditions for testing. They both ensure that tests are conducted in a controlled setting and with relevant data.

42. What is the significance of system integration testing in the testing process?

Ans:

- System Integration Testing ensures that individual components or modules work together seamlessly as a complete system.

- It identifies and rectifies interfacing issues, data flow problems, and integration-related defects early in the testing process.

43. How do you conduct recovery testing, and why is it essential?

Ans:

Recovery testing assesses how well a system can recover from failures or disruptions. It involves deliberately causing failures to test system resilience, which is vital to ensure data integrity and uninterrupted services in real-world scenarios.

44. How do you handle boundary cases in test scenarios?

Ans:

Handling boundary cases involves testing input values at the edges of acceptable ranges to uncover issues. Testers ensure the system behaves correctly when inputs are at their minimum, maximum, and just beyond these boundaries.
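
As a small sketch, the boundary inputs for a numeric range can be generated mechanically. The rule that an order quantity must be between 1 and 100 is a hypothetical example, and including the values just inside each boundary is a common extension rather than something required by the answer above.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Values just outside, on, and just inside each end of the valid range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def accepts_quantity(quantity: int) -> bool:
    """Hypothetical rule under test: order quantity must be 1 to 100."""
    return 1 <= quantity <= 100


# Map each boundary input to whether the system accepted it.
checks = {value: accepts_quantity(value) for value in boundary_values(1, 100)}

for value, accepted in checks.items():
    print(f"quantity={value}: accepted={accepted}")
```

An off-by-one mistake in the rule, such as writing `<` instead of `<=`, would show up as a wrong result for 1 or 100.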

45. What are the different methods used in white box testing?

Ans:

White box testing methods include code coverage techniques like statement coverage, branch coverage, and path coverage. White box testing involves reviewing and testing the internal structure, logic, and code of the software to uncover defects and ensure code quality.

46. Can you explain the concept of pairwise testing and its applications?

Ans:

Pairwise testing is a technique that covers every possible pair of input parameter values at least once, rather than every full combination, which greatly reduces the number of test cases required. It’s particularly useful in situations with numerous input parameters and limited testing resources.
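
To make the saving concrete, the sketch below checks that a hand-picked suite of four tests covers every pair of values across three parameters, where exhaustive testing would need eight. The parameter names, values, and the suite itself are assumptions for illustration; real projects usually generate such suites with a dedicated tool.

```python
from itertools import combinations, product

# Hypothetical configuration parameters, two values each.
PARAMETERS = {
    "browser": ["Chrome", "Firefox"],
    "os": ["Windows", "macOS"],
    "language": ["en", "fr"],
}

# Hand-picked suite: 4 tests instead of the 2 * 2 * 2 = 8 full combinations.
SUITE = [
    {"browser": "Chrome", "os": "Windows", "language": "en"},
    {"browser": "Chrome", "os": "macOS", "language": "fr"},
    {"browser": "Firefox", "os": "Windows", "language": "fr"},
    {"browser": "Firefox", "os": "macOS", "language": "en"},
]


def required_pairs(parameters):
    """Every pair of values drawn from two different parameters."""
    pairs = set()
    for (name_a, values_a), (name_b, values_b) in combinations(parameters.items(), 2):
        for value_a, value_b in product(values_a, values_b):
            pairs.add(((name_a, value_a), (name_b, value_b)))
    return pairs


def covered_pairs(parameters, test_cases):
    """Pairs of parameter values that appear together in at least one test."""
    pairs = set()
    for case in test_cases:
        ordered = [(name, case[name]) for name in parameters]
        pairs.update(combinations(ordered, 2))
    return pairs


missing = required_pairs(PARAMETERS) - covered_pairs(PARAMETERS, SUITE)
print("pairs required:", len(required_pairs(PARAMETERS)))
print("pairs missing:", len(missing))
```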

47. Describe the process of test data migration and its potential challenges.

Ans:

Test data migration involves transferring data from one system to another. Challenges may include data loss, transformation errors, and data security concerns. Proper planning and validation are crucial to ensure a smooth migration.

48. What is a test condition, and how does it relate to test cases?

Ans:

- A test condition is a particular aspect of the software that needs to be tested, such as a feature, function, or behavior.

- Test cases are detailed instructions and inputs used to validate a particular test condition, including the expected results and criteria for pass/fail. Test cases are derived from test conditions.

49. How do you perform smoke testing?

Ans:

Smoke testing involves running a minimal set of test cases to quickly verify if the software build is stable. Testers execute basic functionality tests to check for critical issues that might block further testing. If the software passes the smoke test, it is considered suitable for more comprehensive testing.

50. What is the role of test estimation in the planning phase, and what factors influence test estimation?

Ans:

- Test estimation in the planning phase helps in assessing the time, effort, and resources required for testing.

- Factors influencing test estimation include project complexity, available resources, testing scope, and historical data on similar projects.

51. What is the role of a test log in the testing process?

Ans:

A test log records detailed information about test activities, including test execution, defects found, and system configurations. It serves as a valuable reference for tracking progress, debugging, and ensuring transparency in testing.

52. How do you assess the readiness of a software product for release?

Ans:

Assessing readiness involves evaluating whether the software meets predefined quality criteria, such as functionality, performance, and security. This includes regression testing, reviewing test reports, and obtaining stakeholder approval before releasing the product.

53. Distinguish between alpha and beta testing.

Ans:

- Alpha testing is an internal testing phase conducted by the development team within the organization. Its primary goal is to identify defects, assess functionality, and ensure the software meets internal requirements and specifications.

- Beta testing involves external users who are not part of the development team. These users test the software in a real-world environment, providing feedback on usability, user experience, and any issues encountered during their interactions with the software.

54. What are the key attributes of a good test engineer?

Ans:

Key attributes for a successful software tester include strong analytical skills, attention to detail, effective communication skills, adaptability, and a solid understanding of testing principles and methodologies. Analytical skills are crucial for dissecting complex systems and identifying potential issues. Attention to detail ensures thorough testing coverage, while effective communication skills facilitate collaboration with development teams and clear reporting of test results.

55. Explain the concept of test effectiveness and test efficiency.

Ans:

- Test effectiveness measures how well tests identify defects, focusing on finding the right defects.

- Test efficiency gauges how well testing is conducted within resource constraints, emphasizing timely and cost-effective testing.
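
Teams quantify these two ideas in different ways. One commonly used effectiveness measure is the defect detection percentage (defects found in testing as a share of all defects found, including those reported after release); defects found per hour of effort is used here as one possible efficiency measure. The function names and the sample figures are assumptions for this sketch.

```python
def defect_detection_percentage(found_in_testing: int, found_after_release: int) -> float:
    """Share of all known defects that testing caught, as a percentage."""
    total = found_in_testing + found_after_release
    return 100.0 * found_in_testing / total if total else 0.0


def defects_per_hour(found_in_testing: int, effort_hours: float) -> float:
    """Defects found per hour of testing effort (one possible efficiency measure)."""
    return found_in_testing / effort_hours if effort_hours else 0.0


# Hypothetical release: 45 defects found in testing, 5 reported by users, 60 hours spent.
effectiveness = defect_detection_percentage(45, 5)
efficiency = defects_per_hour(45, 60)
print(f"effectiveness: {effectiveness:.1f}%")
print(f"efficiency: {efficiency:.2f} defects per hour")
```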

56. List a few of the tools used in manual testing.

Ans:

- Test management tools (e.g., TestRail)

- Defect tracking tools (e.g., JIRA)

- Screen capture tools (e.g., Snagit)

57. Explain the procedure for manual testing.

Ans:

Manual testing is a testing approach where human testers create and execute test cases without the use of automation tools. Testers manually interact with the software, providing inputs and verifying outputs to validate its functionality and behavior. This method involves a hands-on, step-by-step process of exploring the software’s features, ensuring that it meets specified requirements, and identifying any potential issues or defects.

58. What is a Latent Defect?

Ans:

A latent defect, sometimes called a hidden defect, is a flaw in the software that does not cause problems immediately but can surface later, potentially leading to system failures or other issues. These defects are not immediately apparent and may remain dormant until specific conditions or scenarios trigger them.

59. What does soak testing mean?

Ans:

Soak testing, also known as endurance testing, entails subjecting the software to a continuous and sustained load for an extended period. The primary purpose is to assess the stability, performance, and behavior of the software over time. This testing approach is valuable in uncovering potential issues related to long-term usage, such as memory leaks and other resource-related issues.

60. What is the significance of acceptance testing in the testing life cycle?

Ans:

- Acceptance testing is vital as it validates whether the software meets end users’ requirements and expectations.

- It ensures that the software is ready for production and customer use, reducing the risk of post-release issues and improving customer satisfaction.

61. How do you handle test execution in parallel for multiple platforms or browsers?

Ans:

Parallel test execution involves using automation tools to run tests simultaneously on multiple platforms or browsers. This approach reduces testing time, accelerates feedback, and helps identify cross-platform or browser-specific issues efficiently.

62. What is the purpose of the Traceability Matrix in manual testing?

Ans:

- A Traceability Matrix in manual testing links test cases to requirements, ensuring comprehensive test coverage.

- It helps track the progress of testing activities, identifies gaps in testing, and supports change management by showing the impact of requirement changes on test coverage.
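
A traceability matrix can be sketched as a mapping from each requirement to the test cases that cover it. The requirement and test case IDs below are hypothetical; the example only shows how gaps and a simple requirement coverage figure fall out of the mapping.

```python
# Hypothetical requirements and the requirements each test case claims to cover.
REQUIREMENTS = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
TEST_CASES = {
    "TC-01": ["REQ-1"],
    "TC-02": ["REQ-1", "REQ-2"],
    "TC-03": ["REQ-4"],
}


def build_matrix(requirements, test_cases):
    """Map every requirement to the list of test cases that reference it."""
    matrix = {requirement: [] for requirement in requirements}
    for case_id, covered in test_cases.items():
        for requirement in covered:
            matrix.setdefault(requirement, []).append(case_id)
    return matrix


matrix = build_matrix(REQUIREMENTS, TEST_CASES)

# Requirements with no linked test case are gaps in coverage.
gaps = [requirement for requirement, cases in matrix.items() if not cases]
coverage_percent = 100.0 * (len(matrix) - len(gaps)) / len(matrix)

for requirement, cases in matrix.items():
    print(requirement, "->", ", ".join(cases) or "NOT COVERED")
print(f"requirement coverage: {coverage_percent:.0f}%")
```

When a requirement changes, the same mapping shows which test cases need to be reviewed.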

63. Can you explain the concept of test coverage metrics?

Ans:

Test coverage metrics measure the extent to which testing exercises different aspects of the software, such as code statements, branches, or requirements. These metrics provide insights into the thoroughness and effectiveness of testing, helping to identify areas with low coverage and potential quality risks.

64. Explain Non-Functional Testing.

Ans:

- Evaluates non-functional aspects like performance, scalability, reliability, and usability.

- Ensures the system meets quality attributes beyond functional requirements.

- Examples include load testing, stress testing, and usability testing.

65. Describe the various levels of testing and their objectives.

Ans:

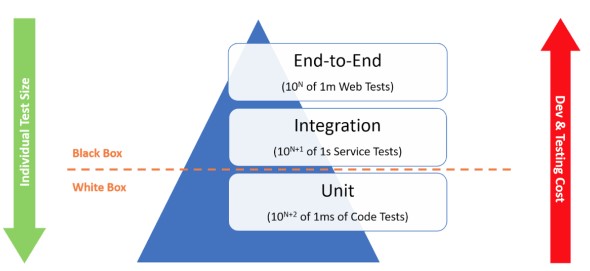

Various testing levels include Unit Testing (verifying individual components), Integration Testing (testing component interactions), System Testing (testing the entire system), and Acceptance Testing (validating against user requirements). Each level aims to uncover specific types of defects and ensure the software functions correctly in its intended environment.

66. How do you perform risk analysis in manual testing?

Ans:

- Risk analysis involves identifying potential risks, assessing their impact and likelihood, and prioritizing them for testing efforts.

- Testers use this analysis to allocate resources effectively and focus on high-risk areas, enhancing test coverage.
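
A simple way to turn this into a ranking is to score each area as impact multiplied by likelihood and test the highest scores first. The 1 to 5 rating scale, the feature areas, and their ratings are assumptions for this sketch; teams often use their own scales and weightings.

```python
# Hypothetical feature areas rated on a 1-5 scale: (area, impact, likelihood).
RISKS = [
    ("report export", 2, 3),
    ("payment processing", 5, 4),
    ("help page", 1, 1),
    ("user login", 5, 2),
]


def prioritize(risks):
    """Return (score, area) pairs sorted from highest to lowest risk score."""
    scored = [(impact * likelihood, area) for area, impact, likelihood in risks]
    return sorted(scored, key=lambda item: item[0], reverse=True)


for score, area in prioritize(RISKS):
    print(f"{area}: risk score {score}")
```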

67. What is the role of a test management tool in manual testing?

Ans:

Test management tools help organize and streamline manual testing processes by managing test cases, test execution, and defect tracking. They provide visibility into testing progress, traceability, and reporting, improving overall testing efficiency and effectiveness.

68. How do you determine when a test case should be considered as “pass” or “fail”?

Ans:

- A test case is considered a “pass” if it meets all the specified criteria and produces the expected results.

- It is marked as a “fail” if it deviates from expected behavior, indicating the presence of defects or issues.

69. What is GUI testing?

Ans:

- GUI (Graphical User Interface) testing assesses the visual and functional aspects of a software application’s user interface.

- It ensures that the interface is user-friendly, functions correctly, and adheres to design and usability standards.

70. How do you ensure the security of test data during manual testing, especially in the context of sensitive information?

Ans:

To ensure the security of test data, especially sensitive information, testers must use anonymized or dummy data that doesn’t compromise privacy. Access controls, encryption, and data masking techniques can be employed to protect confidential data during manual testing.
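
As a minimal sketch of data masking, the functions below replace an email address with a stable pseudonym and hide all but the last four digits of a card number. The function names, the salt value, and the sample data are assumptions for illustration; a salted hash is pseudonymization rather than full anonymization, so real projects should follow their own data protection requirements.

```python
import hashlib


def mask_email(email: str, salt: str = "test-env-salt") -> str:
    """Replace an email with a stable pseudonym on a reserved example domain."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@example.com"


def mask_card(card_number: str) -> str:
    """Keep only the last four digits of a card number."""
    digits = [char for char in card_number if char.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


# Hypothetical production-like record, masked before it reaches the test environment.
record = {"email": "Alice.Smith@company.test", "card": "4111 1111 1111 1111"}
masked = {"email": mask_email(record["email"]), "card": mask_card(record["card"])}
print(masked)
```

Because the same input always maps to the same pseudonym, relationships between records survive masking while the original values do not appear in the test data.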

71. Explain the Test-Driven Development (TDD) concept and its relevance in manual testing.

Ans:

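Developers who use the test-driven development (TDD) methodology write tests before they write the code. This helps ensure that the code satisfies the expected requirements. Although TDD tests are automated, they complement manual testing: basic defects are caught early by the automated tests, which lets manual testers concentrate on exploratory, usability, and other checks that need human judgment.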

72. How do you assess the impact of changes in the software code on existing test cases during regression testing?

Ans:

Impact assessment during regression testing involves analyzing how code changes affect existing test cases. It ensures that previously working features remain functional and identifies areas where adjustments in test cases are needed.

73. Can you describe the various test design techniques for usability testing and their application?

Ans:

Usability testing design techniques encompass various approaches, including heuristic evaluation, user personas, and think-aloud protocols. These techniques are employed to assess the user-friendliness of software interfaces. Heuristic evaluation involves expert evaluations based on established usability principles, user personas represent archetypal user profiles to guide testing, and think-aloud protocols encourage users to vocalize their thoughts during interactions.

74. What is the purpose of a test closure report?

Ans:

A test closure report is a formal document that provides a summary of testing activities, their outcomes, and any recommendations. This report serves as a conclusive document to formally conclude the testing phase. It encapsulates key information such as the scope of testing, executed test cases, identified defects, overall test results, and any suggestions or improvements for future testing efforts.

75. Describe a critical bug.

Ans:

A critical bug is a severe software defect that has the potential to cause system failure or significant functionality issues. These types of bugs are typically categorized as showstoppers, as they have a critical impact on the software’s performance and may hinder its ability to function properly.

76. Describe the role of test execution dependencies in a test plan.

Ans:

- Identifies the order and relationships of test cases.

- Ensures that prerequisite tests are executed before dependent tests.

- Manages resource allocation and scheduling.

- Minimizes test execution conflicts and optimizes testing efficiency.
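
A dependency list like this can be turned into an execution order with a topological sort. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the test names and their dependencies are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical test plan: each test maps to the tests that must run before it.
DEPENDS_ON = {
    "create_account": set(),
    "login": {"create_account"},
    "add_to_cart": {"login"},
    "checkout": {"add_to_cart"},
    "view_profile": {"login"},
}

# static_order() yields every test after all of its prerequisites.
execution_order = list(TopologicalSorter(DEPENDS_ON).static_order())
print(execution_order)
```

Tests with no dependency between them (here `add_to_cart` and `view_profile`) may appear in either order, which is also where parallel execution becomes possible.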

77. Define baseline testing.

Ans:

Baseline testing is a crucial process that involves establishing a stable and reliable set of software or system characteristics. This set serves as a reference point for future testing and evaluations. By conducting baseline testing, organizations can define a solid foundation that represents the expected behavior and performance of the software or system under specific conditions.
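
As an illustration, a recorded baseline can later be compared against new measurements. In the sketch below, the metric names, the baseline values, and the 10% tolerance are all assumptions chosen for the example.

```python
# Hypothetical baseline recorded from a known-good build (milliseconds).
BASELINE = {"login_ms": 200.0, "search_ms": 350.0}


def deviations(current, baseline=BASELINE, tolerance=0.10):
    """Return metrics whose current value exceeds the baseline by more than tolerance."""
    return {
        name: value
        for name, value in current.items()
        if value > baseline[name] * (1 + tolerance)
    }


# login is within 10% of its baseline; search is not.
print(deviations({"login_ms": 210.0, "search_ms": 420.0}))
```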

78. What exactly is the pesticide paradox? How can I overcome it?

Ans:

The pesticide paradox describes how running the same set of test cases repeatedly becomes less effective over time: once the defects those tests can detect have been fixed, the tests stop finding new ones, much as pests become resistant to a pesticide. To overcome this paradox, it is essential to review, update, and diversify test cases regularly. By refreshing existing tests and introducing new scenarios, testing teams can keep pace with changes in the software and find defects that repetitive testing would miss.

79. What does API testing mean?

Ans:

- API testing focuses on verifying the functionality of Application Programming Interfaces.

- It checks the interactions between software components, ensuring they work correctly.

- API testing involves sending requests to APIs and validating responses to confirm they meet the expected requirements.

- API testing is essential for ensuring that software components, including web services, libraries, and third-party integrations, function as intended.
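
The validation step can be sketched without a live service by checking a status code and response body that have already been received. The expected fields (`id`, `email`) and the function name below are hypothetical, not part of any real API.

```python
import json


def check_user_response(status_code: int, body: str) -> list[str]:
    """Return a list of problems found in a (hypothetical) user API response."""
    problems = []
    if status_code != 200:
        problems.append(f"expected status 200, got {status_code}")
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return problems + ["body is not valid JSON"]
    if not isinstance(payload, dict):
        return problems + ["body is not a JSON object"]
    # Each required field must be present and have the expected type.
    for field, expected_type in (("id", int), ("email", str)):
        if not isinstance(payload.get(field), expected_type):
            problems.append(f"field '{field}' missing or not {expected_type.__name__}")
    return problems


print(check_user_response(200, '{"id": 7, "email": "a@example.com"}'))
print(check_user_response(404, "oops"))
```

In practice, the status code and body would come from an HTTP client or a tool such as Postman; the checks themselves stay the same.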

80. What do top-down and bottom-up approaches in testing mean?

Ans:

Top-down and bottom-up are integration testing approaches. Top-down testing starts with the highest-level modules and progressively integrates lower-level modules, using stubs to stand in for lower-level modules that are not yet available. Bottom-up testing starts with the lowest-level modules and incrementally incorporates higher-level modules, using drivers to call the modules under test until the real callers are ready.
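
The sketch below contrasts the two approaches on a pair of hypothetical modules. In top-down integration, a stub temporarily replaces the lower-level module; in bottom-up integration, a driver calls the lower-level module directly. The function names and the 20% tax rate are assumptions made for the example.

```python
def calculate_tax(amount: float) -> float:
    """Low-level module (hypothetical 20% tax rate)."""
    return round(amount * 0.2, 2)


def place_order(amount: float, tax_fn) -> float:
    """High-level module that depends on a tax calculation."""
    return amount + tax_fn(amount)


# Top-down: test place_order first, with a stub standing in for calculate_tax.
def tax_stub(amount: float) -> float:
    return 5.0  # canned answer, no real logic


assert place_order(100.0, tax_stub) == 105.0


# Bottom-up: test calculate_tax first, called by a throwaway driver.
def tax_driver():
    assert calculate_tax(100.0) == 20.0


tax_driver()

# Either way, the final step integrates the real modules.
assert place_order(100.0, calculate_tax) == 120.0
```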

81. Define Security Testing.

Ans:

- Identifies vulnerabilities and weaknesses in an application’s security.

- Aims to protect data and prevent unauthorized access.

- Techniques include penetration testing, code reviews, and vulnerability scanning.

82. Can manual testing be replaced by automation testing?

Ans:

Not entirely. Manual testing remains important for scenarios that depend on human judgment, such as exploratory and usability testing, while automation testing is progressively taking over repetitive, time-consuming, and regression testing tasks. Automation offers greater efficiency and consistency, allowing repetitive test cases to be executed quickly with the same results across multiple iterations. The shift towards automation is driven by its ability to speed up testing, reduce human error, and improve overall testing productivity.

83. Explain the concept of test coverage analysis.

Ans:

- Test coverage analysis assesses the extent to which the software’s code, requirements, or functionalities have been tested.

- It helps identify untested or under-tested areas, reducing the risk of undiscovered defects.

- Test coverage metrics include statement coverage, branch coverage, and path coverage, among others.

- It aids in determining the quality and completeness of the testing effort, enabling better decision-making in the testing process.
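
The difference between statement coverage and branch coverage can be seen on a small function. The `shipping_fee` function and its threshold below are hypothetical.

```python
def shipping_fee(total: float) -> float:
    """Hypothetical rule: orders of 50 or more ship for free."""
    fee = 5.0
    if total >= 50:
        fee = 0.0
    return fee


# This single test executes every statement (100% statement coverage),
# but it only follows the "condition is true" branch.
assert shipping_fee(60) == 0.0

# A second test is needed to exercise the "condition is false" branch
# and reach 100% branch coverage.
assert shipping_fee(20) == 5.0
```

A coverage tool would report the first test alone as complete statement coverage, which is why branch coverage is usually treated as the stronger metric.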

84. What are entry criteria in the context of test planning?

Ans:

Entry criteria in test planning refer to the conditions or prerequisites that need to be fulfilled before testing activities can commence. These conditions typically include having the test environment properly set up and ensuring that relevant test data is available. Meeting these entry criteria ensures a conducive testing environment and sets the foundation for effective and accurate testing processes.

85. How do you manage test data variation in manual testing?

Ans:

- Create multiple test datasets with varying input values.

- Use data-driven testing approaches to execute test cases with different data sets.

- Document and organize test data sets for easy reference.
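
A data-driven approach keeps the test logic in one place and the input variations in a table. In the sketch below, the `is_valid_age` function, its 18 to 120 range, and the data rows are hypothetical.

```python
def is_valid_age(value: str) -> bool:
    """Hypothetical rule under test: a whole number from 18 to 120."""
    return value.isdecimal() and 18 <= int(value) <= 120


# Each row is one data variation: (input, expected result).
TEST_DATA = [
    ("18", True),    # lower boundary
    ("120", True),   # upper boundary
    ("17", False),   # just below the range
    ("121", False),  # just above the range
    ("", False),     # empty input
    ("abc", False),  # non-numeric input
    ("-5", False),   # negative number
]

# The same check runs once per row; failing inputs are collected for reporting.
failures = [raw for raw, expected in TEST_DATA if is_valid_age(raw) != expected]
print("failures:", failures)
```

In manual testing, the same table can live in a spreadsheet, with the tester recording the actual result next to each row.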

86. How do you mitigate the testing paradox in software testing?

Ans:

To address the testing paradox in software testing, it is crucial to prioritize and concentrate testing efforts on high-risk areas. Implementing risk-based testing strategies allows for a more targeted approach, ensuring that critical aspects are thoroughly examined. Additionally, the strategic use of test automation proves beneficial in efficiently covering essential scenarios, contributing to comprehensive test coverage while optimizing resources.

87. How do you determine the priority of test cases?

Ans:

The priority of test cases is established by considering factors such as business impact, critical functionality, and customer requirements. Test cases addressing more crucial aspects of the application, which are essential for business success or align closely with customer needs, are assigned a higher priority. This prioritization ensures that testing efforts focus on the most critical areas first, optimizing resource allocation and allowing for the identification of high-impact issues early in the testing process.

88. Describe the challenges and best practices for testing mobile applications.

Ans:

Challenges:

- Device and OS fragmentation

- Varying screen sizes and resolutions

- Network connectivity issues

- Frequent updates and version compatibility

Best Practices:

- Prioritize testing on popular devices and OS versions

- Verify responsive design and adaptive layouts across screen sizes

- Test under different network conditions

- Implement automated testing for regression testing and continuous integration

89. What is the primary goal of user acceptance testing (UAT)?

Ans:

- Ensure that the application functions as intended in the user’s real-world environment.

- Identify and resolve any usability issues or user experience problems.

- Gain user confidence and gather feedback for potential improvements.

- Minimize the risk of critical issues or user dissatisfaction post-release.

90. What are release notes, and how are they related to testing?

Ans:

Release notes are documents that provide information about the changes and updates in a software release. They are related to testing as they help testers understand what areas to focus on and what defects have been fixed in a release.

91. How do you manage and track test case changes and updates?

Ans:

Changes and updates to test cases can be effectively managed and tracked through the utilization of version control systems or dedicated test management tools. These tools maintain a comprehensive history of alterations, facilitating easy review and analysis of modifications over time.

92. What does accessibility testing involve?

Ans:

Accessibility testing is a process that assesses how usable a software application is for people with disabilities, including those who rely on assistive technologies such as screen readers. The goal is to ensure that the application conforms to accessibility standards such as WCAG (Web Content Accessibility Guidelines), thereby making it inclusive and accessible to all users.

93. How is Cause-Effect Graphing used in test case design?

Ans:

- Cause-effect graphing is a technique for designing test cases.

- It identifies input conditions and their corresponding effects on the system.

- It simplifies complex combinations of inputs by representing them graphically.

- It helps create test cases to cover various scenarios efficiently.

- It uncovers dependencies and relationships between input variables.
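
The logic that a cause-effect graph captures can be sketched as boolean expressions over the causes, from which test cases are then enumerated. The login causes and effects below are hypothetical.

```python
from itertools import product


def effects(valid_user: bool, valid_password: bool, account_locked: bool) -> dict:
    """Map three causes to two effects (hypothetical login rules)."""
    grant = valid_user and valid_password and not account_locked
    return {"grant_access": grant, "show_error": not grant}


# Enumerate every combination of causes and the effects it should produce.
test_cases = [(causes, effects(*causes)) for causes in product([False, True], repeat=3)]

for causes, expected in test_cases:
    print(causes, expected)
```

With three causes there are eight combinations; in a real graph, constraints between causes are typically used to prune combinations that cannot occur.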

94. What is the objective of exit criteria in testing?

Ans:

The purpose of exit criteria in testing is to establish the conditions that must be fulfilled to conclude the testing phase. These conditions typically include reaching a specified level of test coverage, successfully passing critical test cases, and meeting predetermined quality standards. Exit criteria serve as a set of benchmarks that indicate when the testing process has achieved the desired objectives and is ready to transition to the next phase of the software development lifecycle.

95. Why do defects exist in an application?

Ans:

Defects can manifest in an application for various reasons, encompassing coding errors, misinterpretation of requirements, insufficient testing, and the evolving needs of users or environmental factors. The presence of defects underscores the importance of thorough testing, clear communication between development and testing teams, and an adaptive approach to accommodate changing project dynamics and user expectations.

96. Can you define Load Testing and its significance in manual testing?

Ans:

- Load testing assesses an application’s performance under expected or peak loads.

- Significance: It helps determine system scalability and stability.

- Manual load testing is limited in scale because it is hard to simulate many concurrent users by hand, but it can still reveal obvious bottlenecks and issues before automated load tests are run.

97. What are Decision Tables in test design?

Ans:

Decision Tables in test design provide a systematic approach to represent intricate business rules or combinations of conditions and corresponding actions. By structuring inputs and expected outcomes, these tables aid in the identification of various test scenarios. This methodical representation enhances the efficiency of testing processes, allowing testers to systematically analyze and validate the software’s behavior under diverse conditions and rule combinations.
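
As a minimal sketch, a decision table can be written as a mapping from condition combinations to the expected action, with one test per rule. The discount rules and the `discount_percent` function below are hypothetical.

```python
# Decision table: (is_member, order_over_100) -> expected discount percent.
DECISION_TABLE = {
    (True, True): 15,
    (True, False): 10,
    (False, True): 5,
    (False, False): 0,
}


def discount_percent(is_member: bool, order_total: float) -> int:
    """Hypothetical implementation under test."""
    percent = 0
    if is_member:
        percent += 10
    if order_total > 100:
        percent += 5
    return percent


# One test per rule (column) of the decision table.
for (is_member, over_100), expected in DECISION_TABLE.items():
    total = 150 if over_100 else 50
    assert discount_percent(is_member, total) == expected
```

Two conditions give four rules; each additional condition doubles the number of columns, which is why decision tables are often reduced by merging rules that lead to the same action.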

98. What is test closure, and how is it conducted?

Ans:

- Test closure involves gathering and documenting test-related information, such as test summary reports and defect reports.

- Test closure also includes evaluating the test process’s effectiveness and ensuring that all exit criteria have been met.

- The process concludes with a formal meeting to discuss results, lessons learned, and future improvements.

99. Describe State Transition Testing.

Ans:

State Transition Testing is a testing technique used to verify the behavior of a system as it transitions between different states. It’s often applied to finite-state machines and is used to ensure proper system behavior during state changes.
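
A state transition table makes both the valid and the invalid transitions explicit, and each entry becomes a test case. The states and events in the sketch below are hypothetical.

```python
# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    ("logged_out", "login_ok"): "logged_in",
    ("logged_out", "login_fail"): "logged_out",
    ("logged_in", "logout"): "logged_out",
    ("logged_in", "timeout"): "logged_out",
}


def next_state(state: str, event: str) -> str:
    """Return the next state, or raise ValueError for an invalid transition."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"invalid transition: {event!r} in state {state!r}") from None


# Valid transitions: one test per row of the table.
assert next_state("logged_out", "login_ok") == "logged_in"
assert next_state("logged_in", "timeout") == "logged_out"

# Invalid transition: logging out while already logged out must be rejected.
try:
    next_state("logged_out", "logout")
except ValueError as error:
    print(error)
```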