Automation Anywhere is a popular automation platform known for its adaptability and effectiveness. It allows companies to free up time and resources by automating repetitive tasks and streamlining operations. With its intuitive UI and advanced features, Automation Anywhere improves productivity and scalability while reducing errors. Its extensive toolkit makes it a popular choice for businesses aiming to improve competitiveness in the digital landscape.

1. Describe test automation and explain its significance.

Ans:

Test automation utilizes software tools to execute pre-scripted tests on applications. It is essential because it speeds up the testing process, increases test coverage, and enhances accuracy. By minimizing manual intervention, automation reduces human errors and allows testers to concentrate on more strategic tasks. Furthermore, it supports continuous integration and delivery by enabling consistent and frequent testing throughout the development cycle.

2. What are the differences between manual testing and automated testing?

Ans:

Manual testing involves human testers executing test cases by hand, relying on their judgment and observation rather than automation tools. Automated testing, by contrast, uses tools and scripts to execute tests, which makes it faster, more scalable, and more repeatable. Automated testing works well for repetitive tasks, load testing, and regression testing, while manual testing is better suited to exploratory testing and user experience review.

3. What is the software development lifecycle (SDLC) and how does automation fit into it?

Ans:

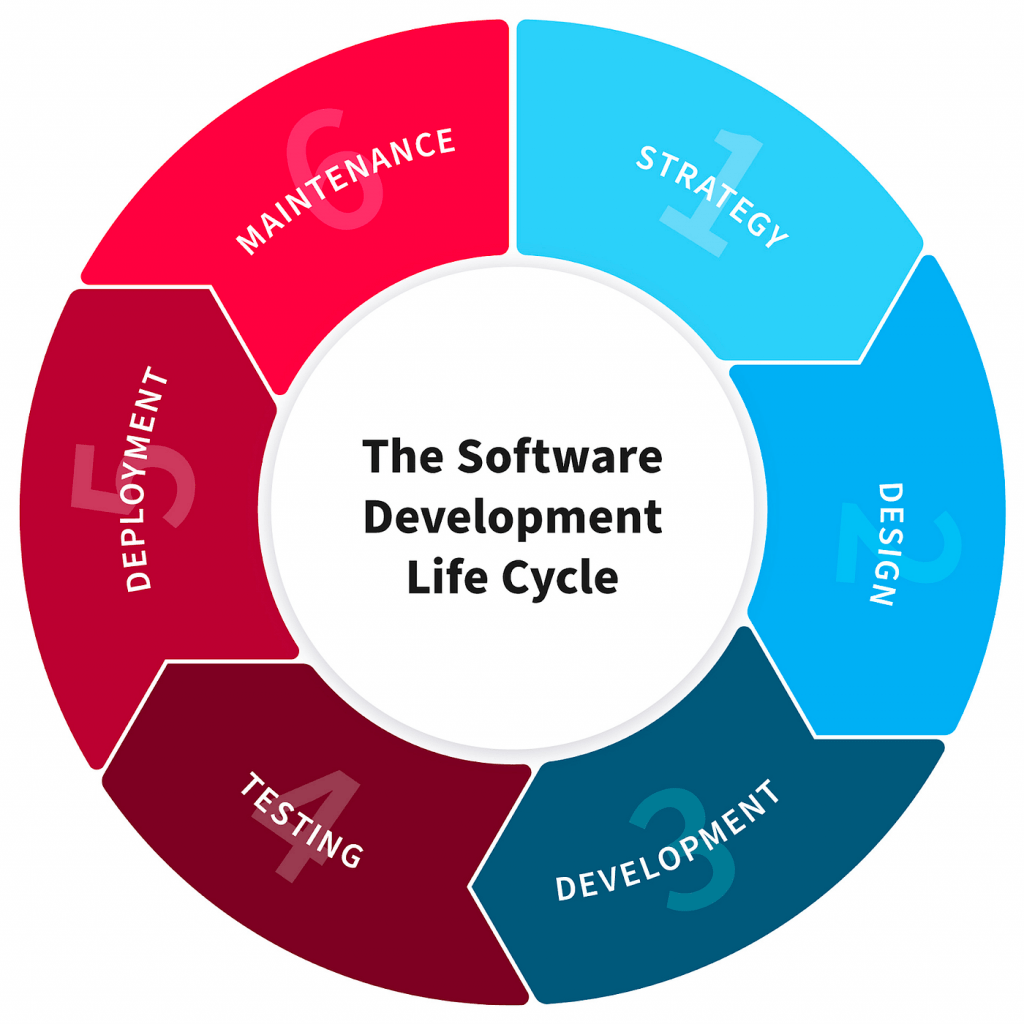

- The software development lifecycle (SDLC) encompasses every phase of the software development process, from requirements collection and planning to maintenance and retirement.

- Automation fits into various stages of SDLC, such as unit testing during development, regression testing in the testing phase, and deployment testing in the release phase.

- It streamlines processes, reduces time-to-market, and ensures software quality.

4. What are the main advantages of utilizing automation in testing?

Ans:

Automation in testing provides efficiency, accuracy, and cost savings by executing repetitive tasks faster and more consistently. It broadens test coverage, helps detect defects early, and makes frequent regression testing practical. Automated tests are scalable and reusable, and they produce detailed reports that improve traceability. Overall, automation enhances software quality, reduces time-to-market, and optimizes resource allocation.

5. What are some common challenges you might face when automating tests?

Ans:

- Selecting the appropriate tools for automation can be daunting, given the multitude of options available, each with its strengths and weaknesses.

- Maintaining test scripts can become cumbersome as the application evolves, requiring constant updates to ensure accurate testing.

- Dealing with dynamic UI elements poses another challenge, as automation scripts may need frequent adjustments to accommodate changes in the interface.

- Managing test data, including generating and maintaining relevant datasets for testing, is another hurdle that automation teams must overcome.

6. What factors should be considered when determining which test cases to automate?

Ans:

- Determining which test cases to automate involves prioritizing based on several factors.

- Firstly, identify test cases that are repetitive and time-consuming when executed manually.

- These are prime candidates for automation, as automating them can save significant time and effort.

- Additionally, focus on test cases that cover core features and functionalities of the application, as these are critical for ensuring overall quality.

7. What is continuous integration (CI), and how does it relate to automation?

Ans:

Continuous integration (CI) is a software development practice in which developers frequently merge code changes into a shared repository. Each integration triggers an automated build and automated tests that verify the integrity of the codebase. By automating tests as part of the CI process, teams can quickly identify and address issues introduced by new code changes, ensuring the overall stability and quality of the software.

8. How do smoke testing and regression testing differ from one another?

Ans:

Smoke testing, also called build verification testing, is a preliminary test that checks whether an application’s basic functionalities work as expected. It is typically performed after a new build or deployment to confirm that the application is stable enough for further testing. Regression testing, by contrast, involves retesting previously tested features after code changes to detect and prevent regressions, so it is broader and more thorough than a smoke test.

9. What is the concept of “shift-left” in testing?

Ans:

- In testing, “shift-left” refers to the methodology of advancing testing tasks earlier in the software development lifecycle.

- Traditionally, testing is done after the code has been written, toward the end of the development process.

- But with shift-left testing, testing is done early, frequently concurrently with development, and includes tasks like unit, integration, and even acceptance testing.

- By shifting testing to the left, teams detect and fix problems earlier, which reduces the cost and effort of fixing them later in the development process.

10. What are the differences between functional and non-functional testing?

Ans:

| Aspect | Functional Testing | Non-Functional Testing |
|---|---|---|
| Focus | Verifying specific functions/features of the software | Evaluating quality attributes/characteristics of the software beyond its basic functionality |
| Objective | Ensure the software behaves according to requirements | Assess the performance, reliability, usability, security, etc. of the software |
| Examples | Testing individual features, UI, APIs, and integrations | Performance testing, usability testing, security testing, compatibility testing, scalability testing, reliability testing, etc. |
| Scope | Verifying expected outcomes of functions/features | Evaluating how well the system performs under various conditions and its adherence to quality attributes |

11. What is test-driven development (TDD)?

Ans:

Test-driven development (TDD) is a software development methodology in which tests are written before the code. Developers first write automated tests that specify the intended behaviour of a product or component. Because the corresponding code has not been written yet, these tests fail at first. Developers then write just enough code to make the tests pass and refactor it, repeating this cycle until the intended functionality is fully implemented.
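A minimal sketch of one TDD cycle using Python's built-in unittest module. The `apply_discount` function and its rules are hypothetical, chosen only for illustration. The test class comes first and would fail on its own; the function is then written to make both tests pass.

```python
import unittest


# Step 1 (red): write the tests first. They describe the intended
# behaviour of a hypothetical apply_discount() function and fail
# until that function exists and behaves correctly.
class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_discount(self):
        self.assertAlmostEqual(apply_discount(100.0, 10), 90.0)

    def test_rejects_negative_percentage(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, -5)


# Step 2 (green): write just enough code to make the tests pass.
def apply_discount(price: float, percent: float) -> float:
    """Return price reduced by the given percentage (0-100)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (1 - percent / 100)


# Step 3 (refactor): tidy the code while keeping both tests green.
```

In practice the tests and the code usually live in separate files, and a command such as `python -m unittest` reruns the tests after every change.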

12. What methods are used to balance manual and automated testing in a project?

Ans:

- Balancing manual and automated testing in a project requires careful consideration of several factors.

- Critical and complex scenarios may require manual testing to ensure thorough coverage and accuracy, especially in areas where automation may be impractical or infeasible.

- Additionally, consider the availability of resources, time constraints, and the nature of the application when deciding how to balance manual and automated testing efforts.

- Ultimately, aim to strike a balance that maximizes test coverage, efficiency, and effectiveness while minimizing costs and risks.

13. What is the role of a test plan in automation?

Ans:

- A test plan plays a crucial role in automation by providing a roadmap for the testing effort.

- The test plan outlines the objectives, scope, approach, resources, schedule, and deliverables of the testing process.

- In the context of automation, the test plan also specifies details such as the automation tools, frameworks, and methodologies to be used, as well as the criteria for selecting test cases for automation.

- By documenting these details in a test plan, teams can ensure that automation efforts are organized, structured, and executed effectively to achieve the desired testing outcomes.

14. What criteria help in deciding between using in-house versus third-party automation tools?

Ans:

When deciding between in-house and third-party automation tools, teams should weigh factors such as cost, the degree of customization required, the team’s skills, long-term maintenance effort, and the availability of support. In-house automation tools offer full customization and control over features and functionality, but the team must build and maintain them. Third-party automation tools are commercially available solutions developed by external vendors. They typically offer ready-to-use features and vendor support, allowing teams to get started with automation quickly.

15. What is acceptance test-driven development (ATDD)?

Ans:

Acceptance Test-Driven Development (ATDD) is an agile development approach that emphasizes collaboration between stakeholders to define and validate acceptance criteria for user stories. In ATDD, acceptance criteria are specified upfront, often in the form of executable tests or scenarios, before any code is written. The tests are automated to ensure that the software meets the specified acceptance criteria and behaves as expected from the user’s perspective.

16. What automation tools are commonly used and what experiences have been had with them?

Ans:

Practical experience with automation commonly spans several tools: Selenium WebDriver for browser automation, Appium for mobile testing, and Postman for API testing. JMeter is widely used for performance and load testing, and Jenkins simplifies continuous integration and delivery. Supporting tools include Git for version control, Ansible for configuration management, and Docker for containerization.

17. What are the differences between Selenium and QTP/UFT?

Ans:

- Selenium, as an open-source tool, is predominantly used for testing web applications.

- It empowers testers to automate browser interactions across multiple platforms and browsers, leveraging its compatibility with various programming languages.

- On the other hand, QTP/UFT, a commercial tool, offers a broader spectrum by supporting testing for web, desktop, and mobile applications.

- However, it requires VBScript proficiency and is limited in terms of platform support compared to Selenium.

18. What are some advantages of using open-source automation tools?

Ans:

- The utilization of open-source automation tools confers several advantages.

- Firstly, they are cost-effective, eliminating licensing fees associated with proprietary tools.

- Moreover, open-source tools often benefit from active community support, which can speed up issue resolution and drive continuous improvement through collaborative effort.

- Their inherent flexibility allows for customization to suit specific project requirements, fostering transparency in the codebase and facilitating seamless integration with other tools and frameworks.

19. How does Selenium WebDriver work?

Ans:

Selenium WebDriver works by sending commands from the test script to a browser-specific driver (for example, ChromeDriver for Chrome or GeckoDriver for Firefox) using the W3C WebDriver protocol. The driver then controls the browser through its native automation support, replicating user actions such as clicking buttons, entering text, and navigating between pages. Because it drives real browsers directly, WebDriver enables efficient and accurate testing across various web applications.

20. What is Appium, and when would you use it?

Ans:

Appium serves as a cross-platform automation tool specifically designed for testing mobile applications across diverse operating systems, including iOS and Android. It provides a unified API for testing, enabling testers to write and execute tests using a single codebase across multiple platforms, thereby streamlining the testing process and enhancing overall efficiency.

21. What is Jenkins, and how is it used in automation?

Ans:

- Jenkins, an open-source automation server, is widely used to run continuous integration and continuous delivery (CI/CD) pipelines.

- It automates the build, test, and deployment processes by orchestrating pipelines and sequences of tasks executed based on predefined triggers like code commits.

- Jenkins fosters rapid feedback loops and ensures the swift delivery of high-quality software by automating repetitive tasks and facilitating collaboration among development and operations teams.

22. What is the role of Docker in automation testing?

Ans:

Docker, a containerization platform, plays an important role in automation testing. It enables the creation of lightweight, portable containers that encapsulate applications and their dependencies. Testers use Docker to set up isolated testing environments with consistent configurations, which promotes reproducibility and scalability across different testing scenarios and environments.

23. What is a headless browser, and why might it be used?

Ans:

A headless browser operates without a graphical user interface (GUI), enabling automated testing and web scraping without displaying the browser window. Testers employ headless browsers to expedite testing processes, conserve system resources, and execute tests in environments lacking graphical interfaces, thereby enhancing efficiency and scalability in automation testing.

24. What steps are involved in integrating automated tests with CI/CD pipelines?

Ans:

Integrating automated tests with CI/CD pipelines typically involves these steps: store the test code in version control alongside the application, configure the pipeline to trigger on code changes such as commits or pull requests, install dependencies and build the application, execute the automated test suites, publish the test reports, and fail the pipeline (blocking deployment) when tests fail. This integration ensures continuous validation of software quality throughout the development lifecycle, enables early defect detection, shortens feedback loops, and supports rapid iterations.

25. What is Postman, and how is it used for API testing?

Ans:

- Postman, a versatile API testing tool, facilitates the design, testing, and documentation of APIs through its intuitive interface.

- Testers leverage Postman to send requests to APIs, analyze responses, and validate API behaviour using a plethora of assertions.

- Furthermore, Postman supports the automation of API tests through collections and environments, enhancing efficiency and scalability in API testing endeavours.

26. What is Cucumber, and how is it used in BDD?

Ans:

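- Cucumber, a behaviour-driven development (BDD) tool, enables stakeholders to collaboratively define test scenarios in a human-readable format using Gherkin syntax (Given/When/Then steps). Each step is linked to a step definition written in a programming language, so the scenarios run as automated tests.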

- Cucumber fosters communication and alignment between technical and non-technical stakeholders, promoting a shared understanding of project requirements and facilitating the creation of comprehensive test suites.

27. How does JMeter support performance testing?

Ans:

JMeter, an open-source performance testing tool, facilitates the evaluation of web application performance under various load conditions. It simulates heavy loads on servers, networks, or objects, enabling testers to measure performance metrics such as response time, throughput, and resource utilization. JMeter supports multiple protocols, including HTTP, JDBC, FTP, and SOAP, thereby catering to diverse performance testing requirements and scenarios.

28. What is the role of Ansible in automation?

Ans:

Ansible is an open-source automation tool that specializes in task automation, application deployment, and configuration management. In automation testing, it is used to provision test environments, set up test infrastructure, and install applications. By ensuring consistency and reproducibility across testing environments, Ansible streamlines testing procedures and improves collaboration among cross-functional teams.

29. What is the significance of Git in automation testing?

Ans:

- Git, a distributed version control system, facilitates collaborative software development by tracking changes in source code over time.

- In automation testing, Git serves as a foundational tool for managing test scripts, configuration files, and test data.

- Its versioning capabilities enable testers to track changes, manage dependencies, and facilitate seamless integration with CI/CD pipelines, thereby fostering efficiency and reliability in automation testing endeavours.

30. What are some cloud-based automation tools you have used?

Ans:

Cloud-based automation tools such as Sauce Labs, BrowserStack, AWS Device Farm, and Firebase Test Lab (formerly Google Cloud Test Lab) have been used to streamline testing processes and improve scalability. Running tests in the cloud lets testers speed up test execution, reduce infrastructure overhead, and achieve broad test coverage across multiple environments, browsers, devices, and configurations.

31. What programming languages are commonly used in automation testing?

Ans:

Commonly used programming languages in automation testing include Python, Java, C#, and JavaScript. Python is favored for its simplicity and readability, making it accessible to both beginners and experienced testers. Its testing ecosystem includes Selenium’s Python bindings for browser automation, pytest as a testing framework, and Robot Framework for keyword-driven testing. JavaScript has its own tools, such as WebdriverIO for WebDriver-based browser automation. These tools make each language versatile and effective in various testing scenarios.

32. What does a simple Python script for opening a webpage using Selenium look like?

Ans:

A simple Python script for opening a webpage using Selenium might look like this:

```python
from selenium import webdriver

# Create a new instance of the Chrome driver
driver = webdriver.Chrome()
try:
    # Open a webpage
    driver.get("https://www.example.com")
finally:
    # Close the browser, even if an error occurred above
    driver.quit()
```

33. What approaches are used to handle exceptions in automation scripts?

Ans:

- Exceptions in automation scripts are handled with try-except blocks in Python (try-catch blocks in Java or C#).

- Exception handling is crucial in automation testing to gracefully manage unexpected situations that may arise during test execution, such as element not found, timeout, or unpredictable behaviour of the application under test.

- By catching and handling exceptions, testers can retry transient failures, capture diagnostics such as screenshots and logs, and clean up resources, which makes automation scripts more robust. Genuine failures should still be reported rather than silently swallowed.
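A minimal sketch of targeted exception handling: retrying only known, transient errors and re-raising once attempts run out, so real failures still fail the test. `ElementNotFoundError` and `retry` are hypothetical names; in a real Selenium suite you would catch the library's own exceptions, such as `NoSuchElementException` or `TimeoutException` from `selenium.common.exceptions`.

```python
import logging
import time

logger = logging.getLogger("automation")


class ElementNotFoundError(Exception):
    """Hypothetical stand-in for a tool-specific error such as
    Selenium's NoSuchElementException."""


def retry(action, attempts=3, delay=0.5,
          retry_on=(ElementNotFoundError, TimeoutError)):
    """Call action() up to `attempts` times, retrying only on the
    transient exception types listed in retry_on."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on as exc:
            logger.warning("Attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise  # out of attempts: let the test fail with the real cause
            time.sleep(delay)


# Usage: an action that only succeeds on its third call.
calls = {"count": 0}


def flaky_click():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ElementNotFoundError("submit button not rendered yet")
    return "clicked"


print(retry(flaky_click, delay=0))  # succeeds on the third attempt
```

Any exception type not listed in `retry_on`, such as a failed assertion, propagates immediately instead of being retried.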

34. What is a framework in the context of automation testing?

Ans:

In automation testing, a framework is a structured set of guidelines, coding standards, concepts, processes, practices, project hierarchies, modularity, reporting mechanisms, and tools that help in automation testing activities. A framework provides a systematic approach to test automation, enabling testers to efficiently create, execute, and maintain automated tests while ensuring scalability, maintainability, and reusability of test artefacts.

35. What is the Page Object Model (POM)?

Ans:

The Page Object Model (POM) is a design pattern in test automation where each web page is represented as a class, and the operations that can be performed on the page are implemented as methods within the class. POM promotes modularity, reusability, and maintainability in automation scripts by encapsulating the interaction with web elements and page functionalities within page objects. This abstraction facilitates easier test maintenance and reduces duplication of code across test scripts.
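A minimal sketch of the pattern. To keep it runnable without a browser, `FakeDriver` is a hypothetical in-memory stand-in for WebDriver, and `LoginPage`, `DashboardPage`, and their locators are invented for illustration. The locator tuples mirror Selenium's `(By.ID, "username")` form, since `By.ID` is the string `"id"`; with a real driver, the page methods would call `driver.find_element(*locator)` and act on the returned element.

```python
class FakeDriver:
    """Hypothetical in-memory stand-in for a WebDriver, so the sketch
    runs without a browser. It records typed text and clicks."""

    def __init__(self):
        self.values = {}
        self.clicked = []
        self.title = "Login"

    def type_text(self, locator, text):
        self.values[locator] = text

    def click(self, locator):
        self.clicked.append(locator)
        # Simulate the app: a correct password leads to the dashboard.
        if locator == LoginPage.SUBMIT and self.values.get(LoginPage.PASSWORD) == "secret":
            self.title = "Dashboard"


class LoginPage:
    """Page object: locators and actions for the login page live here,
    not in the tests."""

    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    SUBMIT = ("css selector", "button[type='submit']")

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        self.driver.type_text(self.USERNAME, username)
        self.driver.type_text(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)
        return DashboardPage(self.driver)


class DashboardPage:
    def __init__(self, driver):
        self.driver = driver

    def is_loaded(self):
        return self.driver.title == "Dashboard"


# A test now reads as a sequence of page-level actions.
def test_valid_login():
    dashboard = LoginPage(FakeDriver()).log_in("alice", "secret")
    assert dashboard.is_loaded()
```

If the login form changes, only `LoginPage` needs updating; every test that calls `log_in()` stays the same.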

36. What is a test suite, and how do you organize your test cases?

Ans:

A test suite serves as a structured compilation of test cases designed for execution within a testing environment. These test cases are methodically organized based on various criteria such as functionality, priority, or specific testing objectives. This systematic arrangement ensures comprehensive coverage during the testing process. Proper organization of test suites is crucial for facilitating efficient test execution, accurate reporting, and insightful analysis of testing outcomes.

37. What methods are used to manage test data in automation scripts?

Ans:

- Storing data in external data sources such as Excel sheets, CSV files, and databases.

- Using data-driven testing frameworks that separate test scripts from test data.

- Additionally, test data can be defined directly within the automation scripts using variables, constants, or configuration files.

38. What is the purpose of using assertions in test scripts?

Ans:

- Assertions in test scripts are used to validate expected outcomes against actual outcomes.

- They help in verifying whether the application under test behaves as expected and whether the test conditions are met.

- Assertions are critical for validating the correctness and reliability of automated tests by comparing the actual results of test execution with the expected results.

- Common types of assertions include verifying text, element presence, element visibility, element attributes, and page titles.

39. What is a test harness and its purpose?

Ans:

A test harness is a set of test data and software that is intended to execute a program unit under various conditions and observe its outputs and behaviour. A test harness provides an environment for executing automated tests and collecting test results, enabling testers to assess the quality and functionality of the software under test. Test harnesses may include test scripts, test data, test fixtures, test runners, and reporting tools, depending on the testing requirements and the complexity of the application under test.

40. What techniques are used to handle dynamic elements in Selenium?

Ans:

Dynamic elements, whose attributes change based on user actions or page updates, require specific handling techniques for robust Selenium test automation. Waiting strategies such as implicit, explicit, or fluent waits synchronize test execution with element availability. Locators built on stable attributes, or on partial matches such as XPath’s contains(), help find elements whose IDs or classes change. When standard interactions fail, JavaScriptExecutor can interact with elements directly. Finally, stale element references should be handled gracefully by re-locating the element (or refreshing the page) as necessary during test execution.
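Explicit and fluent waits boil down to polling a condition until it holds or a timeout expires. The sketch below shows that idea with the standard library only; `wait_until` and `WaitTimeoutError` are hypothetical names. In Selenium itself you would use `WebDriverWait(driver, 10).until(EC.visibility_of_element_located((By.ID, "status")))`, with `WebDriverWait` from `selenium.webdriver.support.ui` and `expected_conditions` imported as `EC`.

```python
import time


class WaitTimeoutError(Exception):
    """Raised when the condition is not met before the timeout."""


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Poll condition() until it returns a truthy value or timeout expires.

    Returns the truthy value, mirroring how an explicit wait hands back
    the located element once it is available."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise WaitTimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll_interval)


# Usage: simulate an element that only appears after about 0.2 s.
appears_at = time.monotonic() + 0.2
element = wait_until(
    lambda: "status-element" if time.monotonic() >= appears_at else None,
    timeout=2.0,
    poll_interval=0.05,
)
print(element)
```

Polling at a short interval keeps tests fast when the element appears early, while the timeout bounds how long a genuinely missing element can stall the run.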

41. What is the role of data-driven testing?

Ans:

- Parameterization: Data-driven testing enables running the same test script with various input data sets, enhancing test coverage.

- Reusability: Test scripts can be reused with different data sets, minimizing redundancy and improving maintainability.

- Flexibility: It facilitates testing across a wide range of scenarios by varying input data, helping to uncover edge cases and ensure robust application performance.

- Separation of Logic and Data: By separating test logic from test data, it makes it easier to update data without modifying the test scripts.
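A minimal data-driven sketch with Python's standard csv and unittest modules. The CSV content is inlined so the example is self-contained, and `is_valid_login` is a hypothetical stand-in for the application under test. `subTest` reports each data row as a separate result, so one failing row does not hide the others. Frameworks offer the same idea natively, for example TestNG's `@DataProvider` or pytest's `@pytest.mark.parametrize`.

```python
import csv
import io
import unittest

# Test data kept separate from test logic. In a real suite this would
# live in an external file (a hypothetical login_cases.csv).
LOGIN_CASES_CSV = """username,password,expected
alice,secret,True
alice,wrong,False
,secret,False
"""


def is_valid_login(username, password):
    """Hypothetical system under test."""
    return username == "alice" and password == "secret"


def load_cases(text):
    """Parse CSV text into a list of dicts, one per data row."""
    return list(csv.DictReader(io.StringIO(text)))


class LoginDataDrivenTest(unittest.TestCase):
    def test_login_cases(self):
        # One test method, many data rows; each row is reported separately.
        for row in load_cases(LOGIN_CASES_CSV):
            with self.subTest(**row):
                expected = row["expected"] == "True"
                actual = is_valid_login(row["username"], row["password"])
                self.assertEqual(actual, expected)
```

Adding a new scenario means adding a CSV row; the test method itself does not change.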

42. What role do loops and conditions play in automation scripts?

Ans:

- Loops (like for, while) and conditions (if, else) are fundamental constructs in automation scripts used to execute repetitive tasks and make decisions based on certain conditions.

- Loops are used to iterate over collections, perform batch operations, or execute a set of statements repeatedly until a specified condition is met.

- Conditions are used to implement branching logic, execute different code paths based on runtime conditions, and handle decision-making scenarios in automation scripts.

43. What strategies are employed to handle alerts and pop-ups in Selenium?

Ans:

Alerts and pop-ups in Selenium are handled with dedicated WebDriver methods. For browser and JavaScript alerts, the driver first switches to the alert (driver.switchTo().alert() in Java), and the resulting Alert object exposes methods such as accept(), dismiss(), getText(), and sendKeys() to interact with the alert dialog. Pop-up windows and new tabs are handled differently: the test retrieves the window handles with getWindowHandles() and switches between them with driver.switchTo().window(handle).

44. What are some best practices for writing clean and maintainable code in automation?

Ans:

- Following established coding standards and conventions.

- Applying design patterns and architectural principles to improve code organization and readability.

- Writing self-documenting code, supplemented by clear comments and documentation where needed.

- Adopting coding best practices and guidelines from the testing community and industry standards.

45. What is the process for utilizing RESTful APIs in automation scripts?

Ans:

To use RESTful APIs in automation scripts, start by identifying the endpoints to interact with. A scripting language like Python or JavaScript, along with libraries such as ‘requests’ (Python) or ‘Axios’ (JavaScript), can be used to send HTTP requests to these endpoints. Responses are handled, data is extracted, and validations are performed as needed. Authentication may also be necessary, managed through methods such as API keys or OAuth tokens.
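A self-contained sketch of the request, response, and validation cycle. To avoid depending on a live service, it starts a tiny local stub API with Python's standard `http.server` and calls it with `urllib.request`; the `/users/1` endpoint, its JSON payload, and the bearer token are hypothetical. With the `requests` library, the call itself would shrink to `requests.get(url, headers=headers, timeout=5)`.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

TOKEN = "test-token"  # hypothetical API token


class StubApi(BaseHTTPRequestHandler):
    """Hypothetical stub API: GET /users/1 returns a JSON user when the
    request carries the expected bearer token."""

    def do_GET(self):
        if self.headers.get("Authorization") != f"Bearer {TOKEN}":
            self.send_response(401)
            self.end_headers()
            return
        if self.path != "/users/1":
            self.send_response(404)
            self.end_headers()
            return
        body = json.dumps({"id": 1, "name": "Alice"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def get_json(url, token):
    """Send an authenticated GET request; return (status code, parsed body)."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status, json.loads(response.read())


# Start the stub on a free local port in a background thread.
server = HTTPServer(("127.0.0.1", 0), StubApi)
threading.Thread(target=server.serve_forever, daemon=True).start()
base_url = f"http://127.0.0.1:{server.server_address[1]}"

try:
    status, user = get_json(f"{base_url}/users/1", TOKEN)
    # Validate the response as an API test would.
    assert status == 200, status
    assert user == {"id": 1, "name": "Alice"}, user
    print("API checks passed:", user)
finally:
    server.shutdown()
    server.server_close()
```

Against a real API, only `base_url` and the token source change; the request and validation steps stay the same.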

46. What are a few well-known test automation frameworks?

Ans:

- Selenium: Primarily for web testing, accommodating multiple browsers and languages.

- Appium: Specializing in mobile testing on Android and iOS platforms.

- JUnit: Designed for Java unit testing purposes.

- TestNG: Offering advanced Java testing functionalities, including parallel execution capabilities.

- Cucumber: A popular BDD framework that expresses test cases in natural language.

47. What distinguishes a keyword-driven framework from a data-driven framework?

Ans:

- In a keyword-driven framework, the primary focus is on defining a comprehensive set of actions or keywords that accurately represent the functionalities of the application under test.

- Test scripts are then constructed utilizing these predefined keywords, resulting in scripts that are not only more readable but also easier to maintain over time.

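- A data-driven framework, by contrast, separates test logic from test data: the data is stored externally (for example, in CSV or Excel files), so the same test script can be executed with varying sets of data, enhancing the overall reusability and scalability of the testing process.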
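The keyword-driven idea can be sketched in a few lines of Python. The keywords (`OpenPage`, `EnterText`, `Click`, `VerifyTitle`) and their actions are hypothetical and only record what they would do; a real framework would back each keyword with Selenium or API calls. The test case itself is just a table of keyword rows, and a small engine looks up and executes each keyword.

```python
# Keyword library: each keyword maps to an action. These actions only
# record what they would do against a hypothetical application.
log = []


def open_page(url):
    log.append(f"open {url}")


def enter_text(field, value):
    log.append(f"type {value!r} into {field}")


def click(element):
    log.append(f"click {element}")


def verify_title(expected):
    # A real keyword would compare against the browser's page title.
    log.append(f"verify title == {expected!r}")


KEYWORDS = {
    "OpenPage": open_page,
    "EnterText": enter_text,
    "Click": click,
    "VerifyTitle": verify_title,
}

# A test case is a table of (keyword, arguments) rows, which
# non-programmers can maintain, for example in a spreadsheet.
LOGIN_TEST = [
    ("OpenPage", ["https://www.example.com/login"]),  # hypothetical URL
    ("EnterText", ["username", "alice"]),
    ("EnterText", ["password", "secret"]),
    ("Click", ["submit"]),
    ("VerifyTitle", ["Dashboard"]),
]


def run_keyword_test(steps):
    """Execute each row by looking up its keyword in the library."""
    for keyword, args in steps:
        if keyword not in KEYWORDS:
            raise KeyError(f"unknown keyword: {keyword}")
        KEYWORDS[keyword](*args)


run_keyword_test(LOGIN_TEST)
```

In a data-driven framework the steps would instead stay fixed in code while the values come from rows of external data; hybrid frameworks combine both.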

48. What is a hybrid framework?

Ans:

A hybrid framework in test automation integrates various testing approaches to optimize testing processes. It combines elements from keyword-driven, data-driven, and modular frameworks, harnessing their strengths to create a more versatile and robust testing strategy. This approach allows for better test script flexibility, reusability, and maintainability. By leveraging the advantages of each framework, a hybrid framework can address different testing needs and improve overall efficiency.

49. What practices are followed to implement logging in a test framework?

Ans:

Logging in a test framework involves capturing detailed information during test execution, including test steps, errors, warnings, and debug data. This can be achieved using logging libraries such as Log4j or Logback in Java or the logging module in Python. Proper logging allows for tracking the flow of test execution and identifying issues. It provides crucial insights for debugging and analyzing test results, helping to improve test reliability and performance.
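A minimal sketch with Python's standard logging module, showing test steps, debug data, and an error with its traceback. Output goes to an in-memory stream so the example is self-contained; the logger name `tests.login` and the steps are hypothetical, and a real framework would usually attach a `FileHandler` as well.

```python
import io
import logging

# Collect log output in memory so the sketch is self-contained; a real
# framework would usually add a FileHandler (e.g. "test_run.log").
log_stream = io.StringIO()
handler = logging.StreamHandler(log_stream)
handler.setFormatter(
    logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
)

logger = logging.getLogger("tests.login")
logger.setLevel(logging.DEBUG)
logger.addHandler(handler)
logger.propagate = False  # avoid duplicate output through the root logger


def run_login_steps():
    """Hypothetical test steps that log progress, debug data, and errors."""
    logger.info("Step 1: open login page")
    logger.debug("Using username=%s", "alice")
    try:
        raise TimeoutError("submit button not clickable")  # simulated failure
    except TimeoutError:
        # exception() logs at ERROR level and appends the traceback.
        # A real test would re-raise here so the failure is still reported.
        logger.exception("Step 2 failed")


run_login_steps()
print(log_stream.getvalue())
```

Using one named logger per module or page (for example `tests.login`) makes it easy to filter the log by feature when analysing a failed run.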

50. What are some best practices for maintaining an automation framework?

Ans:

Best practices for maintaining an automation framework involve several key actions. Regular code reviews and adherence to coding standards ensure high-quality, consistent code. Utilizing version control systems like Git helps track changes and manage collaboration. Writing reusable and modular code improves maintainability and efficiency. Implementing robust error handling and continuously updating tests and frameworks enhance reliability and performance.

51. What techniques ensure reusability in test scripts?

Ans:

- Modular Design: Break test scripts down into smaller, independent modules that can be reused across tests.

- Parameterized Tests: Use parameterization to run the same test with different inputs, reducing redundancy.

- Libraries and Utilities: Create shared libraries and utility functions for repetitive tasks.

- Page Object Model (POM): Implement POM to separate test logic from the UI elements, making it easier to maintain and reuse.

52. What is the role of a test runner in an automation framework?

Ans:

- Execute Tests: Run the automated test scripts and manage the test execution process.

- Report Results: Collect and report test results, providing insights into passed and failed tests.

- Manage Test Lifecycle: Handle setup and teardown processes, ensuring the environment is prepared before tests and cleaned up afterwards.

- Parallel Execution: Support parallel test execution to optimize testing time and resource usage.

53. What methods are used to implement parallel testing?

Ans:

Parallel testing involves running multiple test cases simultaneously across various environments or devices, significantly reducing overall test execution time. This approach can be implemented using testing frameworks such as TestNG or JUnit, which offer built-in support for parallel execution. Tools like Selenium Grid also facilitate running tests concurrently on different machines and browsers. Leveraging these tools and frameworks helps achieve more efficient and faster testing cycles.
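Frameworks such as TestNG and tools such as Selenium Grid handle parallelism for you, but the underlying idea can be sketched with Python's standard concurrent.futures module. `check_homepage` is a hypothetical, independent test whose `time.sleep` stands in for real work such as a browser session; the pattern is only safe when tests share no mutable state.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def check_homepage(browser):
    """Hypothetical independent test; the sleep stands in for real work
    such as a Selenium session on a Grid node."""
    time.sleep(0.2)
    return browser, "passed"


BROWSERS = ["chrome", "firefox", "edge", "safari"]

start = time.monotonic()
results = {}
with ThreadPoolExecutor(max_workers=len(BROWSERS)) as pool:
    futures = [pool.submit(check_homepage, b) for b in BROWSERS]
    for future in as_completed(futures):
        browser, outcome = future.result()
        results[browser] = outcome
elapsed = time.monotonic() - start

# Four 0.2 s tests finish in roughly 0.2 s instead of 0.8 s serially.
print(results, f"{elapsed:.2f}s")
```

Threads suit browser and API tests because most of the time is spent waiting on I/O rather than computing.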

54. What approaches are used to handle dependencies in test scripts?

Ans:

Dependencies in test scripts refer to the order or prerequisites that must be met for tests to execute correctly. They ensure that certain tests run only after other tests have completed or specific conditions are met. They can be managed using annotation attributes, such as the ‘dependsOnMethods’ attribute of TestNG’s ‘@Test’ annotation, or through configuration files that define explicit execution sequences. Handling these dependencies properly maintains the correct test flow and avoids failures caused by unmet prerequisites.

55. What is a configuration file, and how is it used in automation frameworks?

Ans:

- In automation frameworks, a configuration file stores environment-specific settings, such as URLs, database connections, browser configurations, or API endpoints.

- These settings can be loaded dynamically during test execution, allowing tests to be run across different environments without modifying the test scripts.
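A minimal sketch of environment-specific configuration using Python's standard configparser. The INI content is inlined for self-containment, and the section names, staging URL, and `TEST_ENV` environment variable are hypothetical. Values in `[DEFAULT]` apply to every environment unless a section overrides them.

```python
import configparser
import os

# Contents of a hypothetical config.ini, inlined so the sketch is self-contained.
CONFIG_TEXT = """
[DEFAULT]
browser = chrome
timeout = 10

[staging]
base_url = https://staging.example.com

[production]
base_url = https://www.example.com
timeout = 20
"""


def load_settings(env):
    """Return settings for one environment; DEFAULT values fill any gaps."""
    parser = configparser.ConfigParser()
    parser.read_string(CONFIG_TEXT)  # with a real file: parser.read("config.ini")
    section = parser[env]
    return {
        "base_url": section["base_url"],
        "browser": section["browser"],
        "timeout": section.getint("timeout"),
    }


# Pick the environment at run time, e.g. by setting TEST_ENV=production.
settings = load_settings(os.environ.get("TEST_ENV", "staging"))
print(settings)
```

The tests read `settings["base_url"]` instead of hard-coding URLs, so switching environments requires no code changes.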

56. What strategies are employed for reporting in an automation framework?

Ans:

- Reporting in an automation framework involves generating thorough test reports that offer information about how the test was executed, such as pass/fail status, execution time, error messages, and screenshots.

- This can be implemented using reporting libraries like ExtentReports and Allure or built-in reporting features provided by testing frameworks like TestNG or JUnit.

57. What is the role of annotations in TestNG?

Ans:

Annotations in TestNG are essential for controlling test execution flow and configuration. The ‘@Test’ annotation marks a method as a test case. ‘@BeforeMethod’ and ‘@AfterMethod’ are used to set up and tear down test environments before and after each test method. ‘@DataProvider’ supplies data for parameterized tests, allowing the same test method to run with multiple data sets. ‘@Parameters’ enables passing parameters (for example, from the testng.xml file) to test methods, facilitating configuration-driven testing.

58. What steps are involved in setting up a Maven project for automation?

Ans:

To set up a Maven project for automation, create the standard Maven project structure with ‘src/main/java’ and ‘src/test/java’ directories for production and test code, respectively. Add dependencies for automation libraries such as Selenium WebDriver, TestNG, or JUnit to the ‘pom.xml’ file. Then write the test scripts, configure test execution, and run the tests with Maven commands such as ‘mvn test’, which also downloads the declared dependencies.

59. What role does Jenkins play in running automation tests?

Ans:

- Jenkins can be used to run automation tests by creating a Jenkins job that triggers test execution.

- You can configure the job to pull the automation code from a version control system like Git, set up build steps to install dependencies and execute tests, and define post-build actions to generate reports or send notifications based on test results.

60. What challenges are encountered with automation frameworks and how are they addressed?

Ans:

Challenges with automation frameworks often involve maintaining tests as applications evolve, dealing with flaky tests that produce inconsistent results, and managing test data effectively. Additional issues include ensuring proper synchronization between test scripts and application behavior, handling cross-browser and cross-device compatibility, and addressing scalability as test suites grow. To overcome these challenges, it is essential to employ strategic planning, select robust tools, and follow best practices in test automation.

61. What practices ensure the reliability of automated tests?

Ans:

To ensure automated tests are reliable, use stable test data, mock dependencies, isolate tests, and ensure idempotency. Regularly review and refactor tests, run them in various environments to detect environment-specific issues, and integrate them into CI/CD pipelines to catch regressions early. Consistently monitor test results to identify and address flaky tests. It’s also crucial to write clear, maintainable test cases, use assertions effectively, and ensure tests are self-checking and deterministic.

62. What is the difference between unit tests, integration tests, and end-to-end tests?

Ans:

- Unit tests verify individual components, typically functions or methods, in isolation. They are fast, reliable, and easy to write.

- Integration tests check interactions between multiple components or systems to ensure they work together as expected. They are broader in scope and may involve databases or APIs.

- End-to-end tests validate the entire system workflow from start to finish, simulating real user scenarios and verifying that the application meets business requirements.
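The difference between the first two levels can be shown in a few lines. The sketch below uses a hypothetical order module and Python's built-in `sqlite3` as the "real" dependency. The unit test checks pure logic with no I/O. The integration test exercises the logic together with a repository and an actual (in-memory) database. An end-to-end test would instead drive the whole deployed application, for example through a browser, and is not shown.

```python
import sqlite3


def order_total(prices: list[float], discount: float = 0.0) -> float:
    """Pure business logic (hypothetical): an ideal target for a unit test."""
    return round(sum(prices) * (1 - discount), 2)


class OrderRepository:
    """Hypothetical persistence layer; testing it against a real DB is integration testing."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        conn.execute("CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, total REAL)")

    def save(self, total: float) -> int:
        cur = self.conn.execute("INSERT INTO orders (total) VALUES (?)", (total,))
        self.conn.commit()
        return cur.lastrowid

    def load(self, order_id: int) -> float:
        row = self.conn.execute("SELECT total FROM orders WHERE id = ?", (order_id,)).fetchone()
        return row[0]


# Unit test: no I/O, runs in microseconds.
assert order_total([10.0, 20.0], discount=0.1) == 27.0

# Integration test: logic + repository + a real (in-memory) SQLite database.
repo = OrderRepository(sqlite3.connect(":memory:"))
order_id = repo.save(order_total([10.0, 20.0], discount=0.1))
assert repo.load(order_id) == 27.0
```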

63. What factors determine the prioritization of test cases for automation?

Ans:

- Prioritize test cases that are high-risk, high-impact, and frequently executed.

- Focus on time-consuming or repetitive tests to reduce manual testing effort.

- Consider automating critical path functionalities, areas with frequent code changes, and tests that can benefit from faster feedback. Additionally, prioritize tests that are stable and have clear, repeatable outcomes.

- Balance coverage against maintenance cost: avoid automating tests for features whose requirements or UI are still changing frequently, and tests that are not cost-effective to automate.
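The criteria above can be turned into a simple scoring rule for ranking candidates. The sketch below is hypothetical: the 1 to 5 scales, the multiplication of risk by impact, and the frequency boost are illustrative assumptions, not a standard formula. Unstable candidates score zero so they are deferred.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    risk: int            # 1 (low) .. 5 (high): likelihood of a defect
    impact: int          # 1 .. 5: business cost if the feature breaks
    runs_per_month: int  # how often the test is executed manually today
    stable: bool         # requirements/UI settled enough to automate


def priority(c: Candidate) -> float:
    """Hypothetical score: risk x impact, boosted by execution frequency."""
    if not c.stable:
        return 0.0  # defer tests whose expected behaviour keeps changing
    return c.risk * c.impact * (1 + c.runs_per_month / 10)


def rank(candidates: list[Candidate]) -> list[str]:
    """Names ordered from highest to lowest automation priority."""
    return [c.name for c in sorted(candidates, key=priority, reverse=True)]
```

In practice the weights would be agreed with the team and revisited as the product changes.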

64. What is the purpose of code reviews in automation?

Ans:

Code reviews ensure that test scripts are correct, efficient, and follow best practices. They help catch errors early, improve code quality, and spread knowledge across the team. Reviews also enforce coding standards and keep the test suite consistent. Finally, they are an opportunity to discuss better testing strategies and to make sure every team member understands the codebase, which leads to more robust and effective test scripts.

65. What methods are used to handle flaky tests?

Ans:

Identify and isolate flaky tests, then investigate and fix the underlying issues. Retry logic and explicit waits (rather than fixed sleeps) can serve as temporary mitigations, but aim to resolve the root cause rather than mask the problem. Monitor flaky tests and prioritize their stabilization to keep the test suite reliable. Logging, debugging, and thorough analysis help determine why a test is flaky. Common sources include network instability, timing issues, and state shared between tests, so work to eliminate these.
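Retry logic is easy to add and just as easy to abuse. The sketch below is a hypothetical `retry` decorator, not a feature of any specific framework. It re-runs a test on `AssertionError` but logs every failed attempt, so the flakiness stays visible for root-cause analysis instead of being silently absorbed.

```python
import functools
import logging

logger = logging.getLogger("flaky")


def retry(times: int = 3, exceptions: tuple = (AssertionError,)):
    """Re-run the decorated test up to `times` attempts, logging each failure.

    The final failure is re-raised, so a consistently broken test still fails.
    """
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, times + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions as exc:
                    logger.warning("%s failed on attempt %d/%d: %s",
                                   func.__name__, attempt, times, exc)
                    if attempt == times:
                        raise
        return wrapper
    return decorator
```

Counting these log entries per test gives a direct list of candidates for stabilization.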

66. What are some strategies for maintaining your test scripts?

Ans:

- Regularly update test scripts to align with application changes. Refactor code to remove redundancies and enhance readability.

- Use version control for tracking modifications and reverting when necessary.

- Implement modular and reusable test components to simplify maintenance and reduce duplication.

- Document test cases clearly and ensure that all team members are familiar with the structure and purpose of the test suite.

67. What metrics are used to measure the success of automated tests?

Ans:

- Measure success through metrics like test coverage, pass/fail rates, defect detection rate, and the time taken to execute tests.

- Evaluate the reduction in manual testing effort, the speed of feedback, and the overall improvement in software quality.

- Continuous improvement in these areas indicates the effectiveness of your automated testing strategy.

- Additionally, consider metrics like the stability of the test suite, the rate of false positives/negatives, and the ease of maintaining and updating test scripts.
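Several of these metrics can be computed directly from raw run history. The sketch below assumes a hypothetical record format of `(test_name, commit, passed, seconds)`. It treats a test as flaky when it both passed and failed on the same commit, meaning the outcome changed without any code change. Real reporting tools use their own formats and heuristics.

```python
from collections import defaultdict


def suite_metrics(runs: list[tuple[str, str, bool, float]]) -> dict:
    """Compute pass rate, average duration and flaky tests from run records."""
    total = len(runs)
    passed = sum(1 for _, _, ok, _ in runs if ok)
    # Collect the set of outcomes seen for each (test, commit) pair.
    outcomes = defaultdict(set)
    for name, commit, ok, _ in runs:
        outcomes[(name, commit)].add(ok)
    # Both True and False on one commit means the result is not deterministic.
    flaky = sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})
    return {
        "pass_rate": passed / total if total else 0.0,
        "avg_seconds": sum(s for *_, s in runs) / total if total else 0.0,
        "flaky": flaky,
    }
```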

68. What is the role of a version control system in automation?

Ans:

Version control systems track changes to test scripts, facilitate collaboration, and ensure consistent codebases. They allow rollback to previous versions in case of issues and support branching for parallel development. Version control also enables integration with CI/CD pipelines, ensuring automated tests are up-to-date and consistently executed. They provide a history of changes, making it easier to understand the evolution of the test suite and troubleshoot issues.

69. What strategies are implemented to deal with test script failures?

Ans:

Investigate and diagnose the cause of failures. Prioritize fixing critical issues. Update scripts or application code as needed, and ensure thorough re-testing before deployment. Implement logging and detailed reporting to facilitate troubleshooting and ensure that problems are addressed promptly. Categorize failures to identify common issues and trends. Ensure that test failures are communicated to relevant team members and tracked until resolved.
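Categorizing failures can start as simply as grouping messages by exception type. The sketch assumes, hypothetically, that each failure message begins with `SomethingException:` or `SomethingError:`, as many runners print. Anything else is counted as `Unknown`.

```python
import re
from collections import Counter


def categorize(failures: list[str]) -> Counter:
    """Count failure messages by their leading exception/error type."""
    counts = Counter()
    for message in failures:
        match = re.match(r"(\w+(?:Exception|Error))\b", message)
        counts[match.group(1) if match else "Unknown"] += 1
    return counts
```

A spike in one category, such as timeouts, usually points to a shared cause like an unstable environment rather than many independent bugs.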

70. What are some common mistakes to avoid in automation testing?

Ans:

- Avoid hard-coding values, neglecting maintenance, over-relying on UI tests, ignoring flaky tests, and not reviewing or refactoring code.

- Ensure tests are well-documented, modular, and aligned with the application’s development cycle.

- Additionally, avoid creating overly complex tests that are difficult to maintain or debug, and keep test environments consistent with production. Avoid the “test everything” approach; focus on high-value tests.

71. What techniques are used to ensure comprehensive coverage in automated tests?

Ans:

- To ensure comprehensive coverage, combine unit, integration, and end-to-end tests, and use risk-based testing to decide where to focus.

- Regularly review and update test cases based on feedback from stakeholders and real-world usage scenarios.

- Employ techniques such as equivalence partitioning, boundary value analysis, and pairwise testing to maximize test coverage while minimizing redundancy.
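Boundary value analysis and equivalence partitioning are mechanical enough to generate in code. The sketch below handles an inclusive integer range. The 18 to 65 age field used in the example is hypothetical.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Boundary value analysis for an inclusive integer range [low, high]:
    just outside, on, and just inside each edge."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def equivalence_partitions(low: int, high: int) -> dict[str, int]:
    """One representative value per partition: below, inside, above the range.
    Any member of a partition is assumed to behave like the others."""
    return {"below": low - 10, "valid": (low + high) // 2, "above": high + 10}


def accepts_age(age: int) -> bool:
    """Hypothetical validator under test: accepts ages 18-65 inclusive."""
    return 18 <= age <= 65
```

Together the two techniques give nine inputs for this field instead of testing every possible value. Pairwise testing applies a similar reduction when several such fields interact.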

72. What are some key metrics you track for test automation?

Ans:

Key metrics include test coverage, pass/fail rates, test execution time, defect density, mean time to detect/repair defects, test case reliability, and regression test suite effectiveness. These metrics provide insights into the quality of the software, the efficiency of the testing process, and areas for improvement. To gauge the effectiveness and ROI of the automation effort specifically, also track the code coverage achieved by automated tests, how frequently the suite is executed, and the stability of the test environments.

73. What methods are used to ensure cross-browser compatibility in tests?

Ans:

To ensure cross-browser compatibility, test across the browsers, versions, and devices your users actually use. Selenium WebDriver automates real browsers. Cloud platforms such as BrowserStack or Sauce Labs provide a wide range of browser, operating system, and device combinations without the need to maintain that infrastructure in-house. Additionally, checking CSS and JavaScript feature compatibility helps identify and resolve rendering issues or behavioral discrepancies between browsers.

74. What is the role of continuous feedback in test automation?

Ans:

- Continuous feedback ensures rapid identification and resolution of issues, facilitating a faster and more reliable development cycle.

- Incorporate feedback loops through automated test reporting, regular code reviews, and collaboration between developers, testers, and stakeholders to improve the testing process and deliver high-quality software iteratively.

- Additionally, establish mechanisms for gathering feedback from end-users and incorporating their insights into future development and testing efforts to align with user needs and expectations.

75. What practices are followed to handle test data security in automation scripts?

Ans:

- Handle test data security by anonymizing sensitive data, using environment variables for secrets, and following data protection regulations such as GDPR and HIPAA.

- Implement encryption techniques for data transmission and storage and restrict access to test environments to authorized personnel only.

- Consider using data masking and obfuscation techniques to anonymize production data while retaining its integrity and relevance for testing purposes.
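The points above (secrets from environment variables, masking that preserves integrity) can be combined in a small masking helper. The sketch pseudonymizes email addresses with an HMAC. The same input always yields the same output, so joins between masked tables still line up. Without the key, the original cannot be recovered from the masked value. The variable name `TEST_DATA_MASKING_KEY` and the `example.test` output domain are hypothetical.

```python
import hashlib
import hmac
import os

# Hypothetical variable name. In CI the key should come from the pipeline's
# secret store; the fallback exists only for local development.
MASKING_KEY = os.environ.get("TEST_DATA_MASKING_KEY", "local-dev-only-key").encode()


def mask_email(email: str) -> str:
    """Deterministically pseudonymize an email address (case-insensitive)."""
    digest = hmac.new(MASKING_KEY, email.lower().encode(), hashlib.sha256).hexdigest()[:12]
    return f"user_{digest}@example.test"
```

Deterministic masking keeps relationships in the data intact. If even that linkability is unacceptable, use random replacement values instead.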

76. What is the purpose of Selenium Grid and how is it used?

Ans:

Selenium Grid runs tests in parallel across multiple machines and browsers, reducing execution time and scaling to large test suites and diverse testing environments. A typical setup consists of a hub, which receives test requests, and multiple nodes, which run the browsers. Tests connect to the hub through RemoteWebDriver, and the hub routes each session to a node that matches the requested browser and platform. Cloud-based infrastructure can add nodes on demand for scalability and better resource use.

77. What is the role of an automation architect?

Ans:

An automation architect designs and maintains the test automation framework, ensuring it meets the project’s needs and integrates well with CI/CD pipelines. They establish coding standards, define best practices, and provide guidance on test automation tools and techniques to maximize efficiency and maintainability. They also collaborate with development and operations teams to align automation efforts with overall project goals and to anticipate future scalability and maintainability requirements.

78. What approaches are used to handle security testing in automation?

Ans:

- Security testing in automation involves incorporating security scanners, conducting vulnerability assessments, and integrating security tests into the CI/CD pipeline to detect and remediate security vulnerabilities early in the development process.

- Tools such as OWASP ZAP, Burp Suite, and Nessus identify common security vulnerabilities such as SQL injection, cross-site scripting (XSS), and insecure authentication mechanisms.

- Security tests are also automated to ensure consistent coverage and timely feedback on security risks.

79. What is the concept of mocking in testing and its applications?

Ans:

- Mocking simulates the behaviour of real objects, allowing components to be tested in isolation by providing controlled responses from the systems they depend on.

- Mocking frameworks such as Mockito, PowerMock, and EasyMock facilitate the creation of mock objects and stubs to emulate complex dependencies and interactions.

- Use mocking to isolate the unit of code being tested from its external dependencies, such as databases, web services, and external APIs, and to simulate the scenarios and edge cases needed to validate the code's behaviour under different conditions.
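Mockito, PowerMock, and EasyMock are Java libraries. The sketch below shows the same idea with Python's standard `unittest.mock`. The `PaymentService` class and its `gateway.charge` dependency are hypothetical. The mock replaces the external gateway, so the test controls every response, including edge cases and outages that are hard to trigger against a real system.

```python
from unittest.mock import Mock


class PaymentService:
    """Hypothetical unit under test that depends on an external payment gateway."""

    def __init__(self, gateway):
        self.gateway = gateway

    def pay(self, amount: float) -> str:
        if amount <= 0:
            raise ValueError("amount must be positive")
        response = self.gateway.charge(amount)
        return "paid" if response["status"] == "ok" else "declined"


# Test double: no network call is made, and the test controls the response.
gateway = Mock()
gateway.charge.return_value = {"status": "ok"}
service = PaymentService(gateway)
assert service.pay(25.0) == "paid"
gateway.charge.assert_called_once_with(25.0)

# Edge case that is hard to trigger against the real gateway.
gateway.charge.return_value = {"status": "declined"}
assert service.pay(10.0) == "declined"

# Outage: the mock raises instead of returning; the service lets it propagate.
gateway.charge.side_effect = TimeoutError("gateway unreachable")
try:
    service.pay(5.0)
except TimeoutError:
    pass
else:
    raise AssertionError("expected TimeoutError")
```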

80. What is behaviour-driven development (BDD)?

Ans:

BDD (Behavior-Driven Development) is a collaborative methodology in which developers, testers, and business analysts define the application’s behavior together in natural language. This approach improves communication and clarity among all stakeholders by turning business requirements into executable specifications. The specifications are written in a human-readable format, most commonly Gherkin’s Given/When/Then syntax, and tools such as Cucumber execute them as tests.

81. What methods are used to implement BDD with Cucumber?

Ans:

Implement BDD with Cucumber by writing Gherkin scenarios, implementing the matching step definitions in code, and running them through a test runner such as JUnit or TestNG. Regular expressions (or Cucumber Expressions) bind each Gherkin step to its step definition. Organize step definitions into reusable modules so they can be shared across scenarios and features, which keeps them maintainable.
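The binding between Gherkin steps and step definitions is essentially a registry of regular expressions. The toy runner below only illustrates that mechanism and is not Cucumber's API. The `step` decorator, `run_scenario`, and the shopping-cart steps are hypothetical. It strips the Given/When/Then keyword, finds the first pattern that matches the step text, and calls the matching function with the captured groups.

```python
import re
from typing import Callable

# Toy registry: (compiled pattern, step-definition function).
STEPS: list[tuple[re.Pattern, Callable]] = []


def step(pattern: str):
    """Register a step definition for a regular expression."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r"^the cart contains (\d+) items?$")
def given_cart(ctx, count):
    ctx["cart"] = int(count)


@step(r"^the user adds (\d+) items?$")
def when_add(ctx, count):
    ctx["cart"] += int(count)


@step(r"^the cart should contain (\d+) items?$")
def then_cart(ctx, count):
    assert ctx["cart"] == int(count), f"expected {count}, got {ctx['cart']}"


def run_scenario(text: str) -> dict:
    """Execute each Given/When/Then/And/But line against the registry."""
    ctx: dict = {}
    for line in text.strip().splitlines():
        keyword, _, body = line.strip().partition(" ")
        if keyword not in {"Given", "When", "Then", "And", "But"}:
            continue  # e.g. the "Scenario:" header line
        for pattern, func in STEPS:
            match = pattern.match(body)
            if match:
                func(ctx, *match.groups())
                break
        else:
            raise LookupError(f"undefined step: {body!r}")
    return ctx
```

Because each step maps to one small function, steps like "the cart contains N items" can be reused by any scenario that needs them.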

82. What are the advantages of using microservices architecture in testing?

Ans:

- Testing a microservices architecture allows modular testing, faster deployments, and isolation of services, leading to more reliable and scalable tests.

- It enables independent testing of microservices, promotes parallel development and testing, and facilitates easier debugging and maintenance of tests.

- Additionally, consider using service virtualization tools such as Hoverfly and WireMock to simulate dependent services and test microservices in isolation, reducing test flakiness and dependencies on external systems.
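WireMock and Hoverfly provide rich service virtualization. The core idea can be sketched with only Python's standard library: start a local HTTP stub that returns canned responses, and point the code under test at it. The inventory service, its `/stock/<sku>` path, and `is_in_stock` are hypothetical.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen


class InventoryStub(BaseHTTPRequestHandler):
    """Canned responses standing in for a hypothetical inventory microservice."""

    STOCK = {"/stock/sku-1": {"sku": "sku-1", "available": 5}}

    def do_GET(self):
        body = self.STOCK.get(self.path)
        self.send_response(200 if body else 404)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(json.dumps(body or {"error": "not found"}).encode())

    def log_message(self, *args):
        pass  # keep test output quiet


def start_stub() -> HTTPServer:
    """Serve the stub on a free local port (port 0) in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), InventoryStub)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def is_in_stock(base_url: str, sku: str) -> bool:
    """Code under test: the order service's call to the inventory service."""
    with urlopen(f"{base_url}/stock/{sku}") as resp:
        return json.load(resp)["available"] > 0
```

Because the stub answers instantly and deterministically, tests of the calling service no longer fail when the real inventory service is slow or down.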

83. What techniques are used for load testing and which tools are preferred?

Ans:

Perform load testing with tools such as JMeter, LoadRunner, or Gatling, which simulate many concurrent users, transactions, and requests so you can analyze system performance under stress. Use the results for capacity planning: determine how far the system scales and identify performance bottlenecks before deploying to production. Cloud-based services such as Azure Load Testing or the Distributed Load Testing on AWS solution can scale load tests dynamically and simulate realistic user traffic from different geographic regions and network conditions.
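At its core, a load test runs many concurrent "users" against a target and summarizes the latency distribution. The sketch below is a toy illustration of that loop with a thread pool, not a substitute for JMeter or Gatling. `run_load` and its parameters are hypothetical, and `target` is any callable, such as a function that issues one HTTP request.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(target, users: int, requests_per_user: int) -> dict:
    """Call `target` from `users` concurrent workers and summarize latencies (seconds).

    Needs at least two requests in total for the percentile calculation.
    """
    def user_session(user_id: int) -> list[float]:
        timings = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            target()
            timings.append(time.perf_counter() - start)
        return timings

    with ThreadPoolExecutor(max_workers=users) as pool:
        latencies = [t for session in pool.map(user_session, range(users)) for t in session]
    # "inclusive" keeps percentiles within the observed min..max range.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "requests": len(latencies),
        "p50": statistics.median(latencies),
        "p95": cuts[94],
        "max": max(latencies),
    }
```

Percentiles such as p95 matter more than averages here, because a small share of very slow requests is exactly what users notice under load.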

84. What is continuous testing and what are its benefits?

Ans:

- In continuous testing, tests run throughout the entire development cycle rather than in a separate phase at the end.

- Automated tests are integrated into the CI/CD pipeline, so every code change is validated automatically and developers get instant feedback. Practices such as shift-left testing, test-driven development (TDD), and behaviour-driven development (BDD) build quality in throughout the software delivery lifecycle.

- The main benefits are early detection of regressions, a lower risk of introducing defects, and a faster time to market.

85. What strategies are used for test data generation in large datasets?

Ans:

For large datasets, use data generation tools like Faker, anonymize production data, or use database snapshots to create diverse and realistic test data. Implement data masking and obfuscation techniques to safeguard confidential data and guarantee adherence to data privacy regulations. Additionally, consider using data profiling tools to analyze and understand the structure and distribution of test data and produce artificial data that closely mimics production data while avoiding privacy and security risks.
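Faker is a third-party library. The same idea can be sketched with Python's standard `random` module. The generator below yields synthetic user records one at a time, so very large datasets can be streamed to a file (for example with `csv.DictWriter`) without holding everything in memory. A fixed seed makes the dataset reproducible, so a failing test can be re-run on exactly the same data. The record fields and value ranges are hypothetical.

```python
import random
import string
from typing import Iterator


def generate_users(count: int, seed: int = 42) -> Iterator[dict]:
    """Yield `count` synthetic user records; same seed -> same records."""
    rng = random.Random(seed)  # private generator: does not touch global random state
    countries = ["DE", "IN", "US", "BR", "JP"]
    for user_id in range(1, count + 1):
        name = "".join(rng.choices(string.ascii_lowercase, k=8))
        yield {
            "id": user_id,
            "username": name,
            "email": f"{name}@example.test",  # reserved test domain, never a real address
            "country": rng.choice(countries),
            "age": rng.randint(18, 90),
        }
```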

86. What is the role of AI and machine learning in test automation?

Ans:

AI and ML enhance test automation by improving test case generation, predicting potential failures, optimizing test execution, and identifying patterns and anomalies in test results. They enable intelligent test automation, reduce manual effort, and increase test coverage and effectiveness. Additionally, leverage AI and ML algorithms to analyze historical test data, identify recurring issues, and prioritize test cases for execution based on their likelihood of failure and impact on application functionality.

87. What role does artificial intelligence play in test automation?

Ans:

- Implement AI in test automation by using ML algorithms for test suite optimization, defect prediction, and intelligent test case generation.

- Apply machine learning models, pattern recognition, and natural language processing (NLP) to analyze historical test data, identify recurring issues, and prioritize test cases for execution.

- Additionally, explore AI-driven testing tools and platforms that automate test case creation, execution, and maintenance and incorporate machine learning capabilities to adapt test strategies dynamically based on changing requirements and environmental conditions.

88. What are some techniques for performance tuning in automated tests?

Ans:

Performance tuning in automated tests involves optimizing test scripts, reducing execution time, parallelizing tests, and using efficient test data management techniques. Use performance profiling tools, code instrumentation, and performance monitoring to identify and address bottlenecks in both the application and the test infrastructure. Additionally, replace fixed sleeps with explicit waits, and reuse expensive setup, such as database fixtures or browser sessions, where test isolation allows.
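Parallelizing independent tests is often the single biggest reduction in execution time. The sketch below is a hypothetical `run_parallel` helper built on a thread pool. It runs test callables concurrently and reports pass/fail per test. It is only safe when tests share no state such as data, accounts, or files; otherwise parallelism becomes a new source of flakiness. Real runners (pytest-xdist, TestNG's `parallel` attribute, Selenium Grid) offer the same idea with proper reporting.

```python
from concurrent.futures import ThreadPoolExecutor


def run_parallel(tests: dict, workers: int = 4) -> dict:
    """Run independent test callables concurrently; return {name: passed}.

    An AssertionError marks a test as failed. Any other exception propagates
    as an error, mirroring the usual failure/error distinction.
    """
    def run_one(item):
        name, test = item
        try:
            test()
            return name, True
        except AssertionError:
            return name, False

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(run_one, tests.items()))
```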

89. What approaches are used for testing IoT (Internet of Things) devices?

Ans:

Testing for IoT devices involves considering their unique characteristics, such as connectivity, interoperability, and security. Start with requirement analysis, then design test cases covering functionalities, data handling, and edge cases. Utilize IoT simulators for scalability and realism. Conduct compatibility tests across various devices and platforms. Prioritize security testing for data encryption, authentication, and device access control.

90. What methods ensure that an automation framework can scale with the application?

Ans:

- Design the framework to be modular and flexible.

- Use frameworks supporting parallel execution and distributed testing for scalability.

- Employ design patterns like the Page Object Model for maintainability.

- Regularly review and refactor code for optimization.

- Implement dynamic data-driven approaches to handle evolving application changes.
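The Page Object Model keeps each page's locators and actions in one class. When the UI changes, only that class needs updating, not every test. The sketch below uses a hypothetical `FakeDriver` instead of Selenium so it runs on its own. The CSS selectors are placeholders. With Selenium, `LoginPage` would wrap real `find_element` calls in the same way.

```python
class FakeDriver:
    """Hypothetical stand-in for a browser driver; records interactions."""

    def __init__(self):
        self.fields: dict[str, str] = {}
        self.clicked: list[str] = []

    def fill(self, locator: str, text: str) -> None:
        self.fields[locator] = text

    def click(self, locator: str) -> None:
        self.clicked.append(locator)


class LoginPage:
    """Page object: locators and actions for the login screen live in one place."""

    USERNAME = "#username"  # hypothetical CSS selectors
    PASSWORD = "#password"
    SUBMIT = "button[type=submit]"

    def __init__(self, driver):
        self.driver = driver

    def login(self, user: str, password: str) -> None:
        # Tests call this high-level action instead of repeating raw locators.
        self.driver.fill(self.USERNAME, user)
        self.driver.fill(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)
```

If the submit button's selector changes, the fix is one line in `LoginPage`, however many tests log in.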