NLP’s evolution includes breakthroughs in contextual embeddings, attention mechanisms, and transfer learning. Open-source libraries like NLTK and spaCy facilitate NLP development, fostering innovation. As the field progresses, addressing multilingual and low-resource language challenges remains a focus, aiming to make NLP technologies accessible and impactful worldwide.

1. What is NLP?

Ans:

- NLP stands for Natural Language Processing, a field at the intersection of computer science, artificial intelligence, and linguistics.

- Its goal is to enable smooth communication between computers and humans using natural language.

- In practice, this means building systems that can analyze, understand, and generate text or speech, for tasks such as sentiment analysis and machine translation.

2. Explain Tokenization?

Ans:

- Tokenization involves dissecting text into distinct units referred to as tokens.

- Common units include words, subwords, or characters.

- Facilitates analysis and comprehension of text structure.

- Fundamental step in natural language processing (NLP).

- Crucial for tasks like text analysis, sentiment analysis, and language modeling.

- Tokens serve as building blocks for linguistic analysis and machine learning applications.
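As a rough illustration of word-level tokenization, the sketch below splits text into word and punctuation tokens with a regular expression. The function name `tokenize` and the pattern are assumptions chosen for this example; production tokenizers handle contractions, URLs, and subwords with far more care.

```python
import re

def tokenize(text):
    # \w+ matches a run of letters, digits, or underscores (a "word");
    # [^\w\s] matches a single punctuation character.
    # Whitespace matches neither alternative, so it is dropped.
    return re.findall(r"\w+|[^\w\s]", text)

print(tokenize("The quick brown fox jumps."))
# ['The', 'quick', 'brown', 'fox', 'jumps', '.']
```

A pattern this simple splits a contraction such as "Don't" into three tokens, which is one reason real tokenizers use more elaborate rules.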

3. What is POS tagging?

Ans:

- Part-of-Speech (POS) tagging assigns each word in a text its grammatical category, such as noun, verb, adjective, or adverb.

- The correct tag depends on context: “book” is a noun in “read a book” but a verb in “book a flight.”

- POS tags feed downstream tasks such as chunking and Named Entity Recognition.

4. Define Named Entity Recognition (NER)?

Ans:

Named Entity Recognition (NER) is a natural language processing (NLP) task that involves identifying and categorizing entities, which are specific objects, individuals, or locations, in a given text.

The goal is to extract meaningful information and understand the context by recognizing entities like names of people, organizations, locations, dates, and other predefined categories. NER plays a crucial role in various applications, including information retrieval, question answering systems, and language understanding, contributing to the overall improvement of automated text analysis and comprehension.

5. What is Stemming?

Ans:

- Definition: Stemming is a text normalization technique used in natural language processing.

- Objective: It aims to simplify words by reducing them to their base or root form.

- Method: Achieved by removing suffixes from words.

- Example: “Running” and “runs” both reduce to the stem “run.”

- Purpose: Unify variations of a word for enhanced text analysis.

- Application: Commonly used in information retrieval, search algorithms, and language processing tasks.

- Caution: Stemming may not always result in valid words, as it prioritizes linguistic normalization over lexical accuracy.
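The suffix-stripping idea can be sketched in a few lines of Python. This is a deliberately naive illustration, not the Porter algorithm: the `SUFFIXES` tuple and the `naive_stem` function are hypothetical names invented here, and real stemmers apply many more rules.

```python
# Hypothetical rule set for illustration; a real stemmer uses many more rules.
SUFFIXES = ("ing", "ed", "es", "s")

def naive_stem(word):
    word = word.lower()
    for suffix in SUFFIXES:
        # Only strip if at least three characters remain, so short
        # words such as "is" are left untouched.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

for w in ["jumping", "jumped", "jumps", "running", "studies"]:
    print(w, "->", naive_stem(w))
```

With these toy rules, "running" becomes "runn" and "studies" becomes "studi", which shows why stems are not always valid words. A fuller algorithm such as Porter's adds rules that, for example, undo the doubled consonant.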

6. Explain Lemmatization?

Ans:

Lemmatization is a linguistic procedure applied in natural language processing (NLP) and computational linguistics. Its primary goal is to reduce words to their base or lemma form, taking into consideration the word’s intended meaning and context within a sentence or document. For example, “better” is reduced to the lemma “good,” which suffix stripping alone could not produce.

7. Difference between Tokenization and Text Segmentation?

Ans:

| Aspect | Tokenization | Text Segmentation |
|---|---|---|
| Definition | Tokenization is the process of breaking down a text into smaller units, known as tokens. Tokens can be words, subwords, or even characters, depending on the level of granularity. | Text segmentation is the process of dividing a text into larger meaningful units, such as sentences, paragraphs, or topical sections. |
| Unit of Division | Tokenization focuses on dividing text into individual elements, such as words or subword units, to create a structured and manageable representation of the text. | Text segmentation deals with breaking down text into more substantial units, such as paragraphs, chapters, or other contextually relevant divisions. |
| Granularity | It is a fine-grained approach, dealing with the smallest linguistic units within the text. This includes handling punctuation, contractions, and other intricacies of language. | It is a coarse-grained approach, focusing on capturing broader themes or topics within the text. The emphasis is on understanding and representing larger contextual units. |
| Example | For the sentence “The quick brown fox jumps,” tokenization would break it into individual word tokens: [“The”, “quick”, “brown”, “fox”, “jumps”]. | In a document, text segmentation might involve identifying and labeling sections as “Introduction,” “Body,” and “Conclusion,” providing a high-level overview of the document’s structure. |

8. What is TF-IDF?

Ans:

- Term Frequency (TF): This aspect gauges how often a particular word appears in a given document. It’s calculated by dividing the number of times a word occurs in a document by the total number of words in that document. The idea is to identify words that are prominent within a specific piece of text.

- Inverse Document Frequency (IDF): This part focuses on the uniqueness of a term across a collection of documents. The IDF is computed by dividing the total number of documents by the number of documents containing the word and then taking the logarithm of that quotient. The aim is to emphasize words that are not overly common across the entire document set.

- TF-IDF Score: To get the TF-IDF score for a term in a document, you multiply its TF by its IDF. This results in a numerical representation that highlights words that are frequent in a specific document but relatively rare in the overall document collection.
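The three quantities above can be computed directly. The following is a minimal sketch that assumes documents are already tokenized into lists of words and uses the natural logarithm; the function names `tf`, `idf`, and `tf_idf` and the toy corpus are made up for illustration. Libraries often use smoothed variants of IDF, so their numbers may differ.

```python
import math

def tf(term, doc):
    # Occurrences of the term divided by the document length.
    return doc.count(term) / len(doc)

def idf(term, docs):
    # log(total documents / documents containing the term).
    # Assumes the term occurs in at least one document.
    containing = sum(1 for doc in docs if term in doc)
    return math.log(len(docs) / containing)

def tf_idf(term, doc, docs):
    return tf(term, doc) * idf(term, docs)

docs = [
    ["the", "cat", "sat"],
    ["the", "dog", "barked"],
    ["the", "cat", "purred"],
]

for term in ["the", "cat", "sat"]:
    print(term, round(tf_idf(term, docs[0], docs), 3))
```

In this toy corpus "the" appears in every document, so its IDF is log(1) = 0 and its score vanishes, while "sat" appears in only one document and receives the highest score.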

9. Describe Word Embeddings?

Ans:

Word embeddings are an advanced way of representing words in the field of natural language processing. Rather than treating words as isolated symbols, word embeddings map them to vectors in a continuous mathematical space. This mapping is done in a way that preserves semantic relationships between words.

Let’s simplify it further:

- Vector Representation: Each word is assigned a vector, typically with tens to hundreds of dimensions. The values within these vectors capture the word’s semantic features.

- Semantic Relationships: Words with similar meanings or contextual usage are positioned closer to each other in the vector space. This proximity reflects the semantic relationships between words. For instance, the vectors for “king” and “queen” would be closer together than those for “king” and “apple.”

- Training Process: Word embeddings are often learned through unsupervised learning from large text corpora. Algorithms like Word2Vec, GloVe, and FastText analyze the context in which words appear to create meaningful vector representations.

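- Contextual Understanding: Static embeddings such as Word2Vec, GloVe, and FastText assign one vector per word regardless of context, so “bank” has the same representation in a financial and a river context. Contextual embedding models such as ELMo and BERT address this by producing a different vector for each occurrence of a word, based on the surrounding sentence.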

- Applications: These embeddings have proven invaluable in various natural language processing tasks, such as sentiment analysis, machine translation, and information retrieval. They enable algorithms to understand and manipulate language in a more nuanced and context-aware manner.

10. How does a Recurrent Neural Network (RNN) work in NLP?

Ans:

- Role of RNNs in NLP: Recurrent Neural Networks (RNNs) are crucial in Natural Language Processing (NLP) for processing sequential data, like words in a sentence.

- Functionality of RNNs: RNNs function by maintaining a hidden state that carries information from previous steps, serving as a contextual memory.

- Contextual Memory’s Purpose: This contextual memory enables the model to capture dependencies and comprehend the sequential nature of the input.

- Challenges Faced by RNNs: Despite their effectiveness, RNNs encounter challenges in retaining long-term information, notably the vanishing gradient problem.

- Vanishing Gradient Problem: As the sequence length increases, gradients diminish during backpropagation, limiting the model’s learning from distant past steps.
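The hidden-state update can be sketched in plain Python. This is a minimal forward pass only, assuming the common formulation h_t = tanh(W_xh x_t + W_hh h_{t-1} + b); the weights below are hand-picked for illustration rather than learned, and the names `rnn_step` and `run_rnn` are hypothetical.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b):
    # One time step: combine the current input with the previous hidden state.
    h = []
    for i in range(len(b)):
        total = b[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h.append(math.tanh(total))
    return h

def run_rnn(inputs, W_xh, W_hh, b):
    h = [0.0] * len(b)  # initial hidden state
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b)
        states.append(h)
    return states

# Illustrative weights: 2 input features, 2 hidden units.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b = [0.0, 0.0]

inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
states = run_rnn(inputs, W_xh, W_hh, b)
for t, h in enumerate(states):
    print(t, [round(v, 3) for v in h])
```

The first and third inputs are identical, yet their hidden states differ, because the third step also sees what came before it. That carried-over state is the contextual memory described above.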

11. Explain the concept of Bag of Words (BoW)?

Ans:

BoW is like creating a word collage: it represents a document by counting how many times each word appears, disregarding the order or structure of the text. It simplifies complex documents into a frequency distribution of words, losing the context but providing a straightforward way to analyze and compare texts.

Imagine taking a book, tearing out each page, and throwing all the words into a bag—what you get is the essence of Bag of Words. It’s a basic yet effective way to convert text into a format that machine learning models can understand, making it useful in tasks like document classification and sentiment analysis.
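A small sketch of the idea, assuming whitespace tokenization and lowercasing (both simplifications); `bag_of_words` is a name chosen for this example:

```python
from collections import Counter

def bag_of_words(docs):
    # Build a shared, sorted vocabulary across all documents.
    vocab = sorted({word for doc in docs for word in doc.lower().split()})
    vectors = []
    for doc in docs:
        counts = Counter(doc.lower().split())
        # One count per vocabulary word; missing words count as 0.
        vectors.append([counts[word] for word in vocab])
    return vocab, vectors

vocab, vectors = bag_of_words(["the cat sat", "the cat saw the dog"])
print(vocab)    # ['cat', 'dog', 'sat', 'saw', 'the']
print(vectors)  # [[1, 0, 1, 0, 1], [1, 1, 0, 1, 2]]
```

Because only counts are kept, "dog bites man" and "man bites dog" produce identical vectors, which is the loss of word order mentioned above.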

12. What is the purpose of the Attention Mechanism in NLP?

Ans:

- Selective Focus: The Attention Mechanism acts like a spotlight, allowing models to selectively focus on specific parts of the input sequence. This adaptability is crucial for understanding and capturing relevant context in tasks like language translation or summarization.

- Handling Long-Range Dependencies: One key advantage of Attention Mechanism is its ability to handle long-range dependencies. Unlike traditional models that may struggle with distant relationships between words, Attention Mechanism empowers models to dynamically adjust attention, improving their capacity to grasp nuanced connections across the entire sequence.

- Adaptability for Complex Tasks: By dynamically adjusting the importance of different elements in the input, Attention Mechanism brings a level of adaptability to NLP models. This adaptability proves valuable in scenarios where tasks involve intricate relationships or dependencies, enhancing the overall performance of the model in understanding and processing complex linguistic structures.
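One common form of attention is scaled dot-product attention, the variant used in Transformers. The sketch below handles a single query in plain Python and is only an illustration: the names `attention` and `softmax` and the toy vectors are assumptions, and real implementations operate on batched matrices with learned projections.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    d = len(query)
    # Similarity of the query to each key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
weights, output = attention([5.0, 0.0], keys, values)
print([round(w, 3) for w in weights], [round(o, 3) for o in output])
```

The query aligns with the first key, so most of the weight, and therefore most of the output, comes from the first value vector. This is the "spotlight" behavior in miniature.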

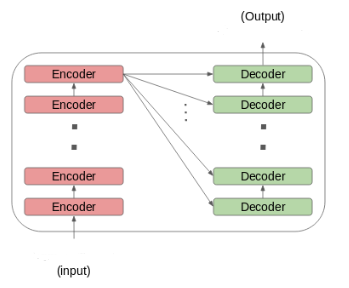

13. Describe the Transformer architecture in NLP?

Ans:

The Transformer architecture, introduced by Vaswani et al., is a neural network structure that utilizes self-attention mechanisms for sequence processing. This design has gained extensive use in Natural Language Processing (NLP) tasks, showcasing its effectiveness in capturing contextual relationships within sequences.

14. What is perplexity in language modeling?

Ans:

- Perplexity measures how uncertain, or “surprised,” a language model is when predicting the next word in a sequence.

- Lower perplexity values indicate better model performance, as the model is more confident and less perplexed by the data.

- It is calculated based on the probability assigned by the model to the actual outcomes in a given dataset.
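Concretely, perplexity is the exponential of the average negative log-probability the model assigns to the tokens that actually occurred. A minimal sketch, assuming the per-token probabilities have already been obtained from some model (the function name `perplexity` and the sample values are illustrative):

```python
import math

def perplexity(token_probs):
    # token_probs: probability the model gave to each actual next token.
    n = len(token_probs)
    avg_neg_log = -sum(math.log(p) for p in token_probs) / n
    return math.exp(avg_neg_log)

confident = perplexity([0.9, 0.8, 0.95])
unsure = perplexity([0.2, 0.1, 0.25])
print(round(confident, 3), round(unsure, 3))
```

A model that assigns probability 0.25 to every token has perplexity 4, as if it were choosing uniformly among four options at each step.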

15. How does Named Entity Recognition (NER) contribute to information extraction?

Ans:

- NER enhances information extraction by pinpointing specific entities like dates, monetary values, and percentages, aiding in the extraction of numerical data.

- It streamlines the identification of key actors, events, and relationships within a text, improving the overall accuracy of information extraction.

- By recognizing entities, NER assists in organizing and categorizing data, making it easier to structure and analyze the content for valuable insights.

16. What challenges does NLP face with handling informal language, like social media text?

Ans:

- NLP faces difficulty interpreting context-specific expressions and memes prevalent in social media, as these often rely on cultural or subcultural knowledge.

- The dynamic nature of language evolution on platforms like social media introduces challenges in keeping models updated with emerging trends and linguistic shifts.

- Sentiment analysis becomes more complex due to the nuanced and context-dependent nature of emotions expressed in informal language.

17. Difference between stemming and lemmatization?

Ans:

- Stemming is a rule-based heuristic process, chopping off prefixes or suffixes to obtain the root, whereas lemmatization involves a more sophisticated analysis, considering word semantics and grammatical rules.

- Stemming may result in non-real words, as it focuses on linguistic normalization, while lemmatization ensures that the reduced forms are valid words found in a language’s lexicon.

- Lemmatization typically requires access to a dictionary or a morphological analysis of words, making it computationally more intensive compared to the simpler stemming algorithms.

18. How does a BiLSTM (Bidirectional Long Short-Term Memory) network work in NLP?

Ans:

- Bidirectional Long Short-Term Memory (BiLSTM) networks consist of two LSTM layers – one processing the sequence from the beginning to end, and the other in reverse.

- The forward LSTM layer captures information from earlier elements in the sequence, while the backward layer captures context from later elements.

- Hidden states from both directions are concatenated, providing a comprehensive understanding of the context surrounding each timestep.

- BiLSTMs are effective in tasks where understanding context in both directions is crucial, such as in machine translation or sentiment analysis.

- They excel in capturing long-term dependencies, thanks to the memory cells in the LSTM architecture.

19. Discuss the challenges of machine translation in NLP?

Ans:

Machine translation grapples with challenges like idiomatic expressions, cultural nuances, and contextual coherence. Ambiguities, syntactic variations, domain-specific terms, and fluency maintenance across languages are additional hurdles.

20. What is Transfer Learning in NLP?

Ans:

Transfer learning in Natural Language Processing (NLP) entails training a model on a specific task and utilizing its acquired knowledge to enhance performance on a different yet related task. This process allows the model to leverage previously learned patterns and features, promoting efficiency in handling new tasks.

21. Explain the concept of Word2Vec?

Ans:

Word2Vec operates through two main models: Skip-gram and Continuous Bag of Words (CBOW). Skip-gram predicts context words given a target word, while CBOW predicts a target word from its context. These models are trained on large text corpora, adjusting word vectors to capture semantic nuances and similarities. The result is a powerful representation where words with similar meanings are closer together in the vector space.

22. How does the concept of n-grams contribute to language modeling?

Ans:

- An n-gram is a contiguous sequence of n words. N-grams are particularly useful in probabilistic language models, where the probability of the next word is estimated from the preceding n-1 words. This helps model dependencies and relationships between adjacent words in a sequence.

- Additionally, the choice of the n-gram size balances context and computational complexity. Smaller n-grams capture local dependencies but may miss broader context, while larger n-grams require more data and can lead to sparsity issues. The selection depends on the specific language modeling task and available data.
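For the simplest case, a bigram model (n = 2), the next-word probability is a ratio of counts. The sketch below uses unsmoothed maximum-likelihood estimates on a toy sentence; `train_bigram` and `next_word_prob` are hypothetical helper names.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    # counts[prev][nxt] = number of times `nxt` follows `prev`.
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_prob(counts, prev, word):
    total = sum(counts[prev].values())
    return counts[prev][word] / total if total else 0.0

tokens = "the cat sat on the mat the cat ran".split()
model = train_bigram(tokens)

print(next_word_prob(model, "the", "cat"))  # "the" is followed by "cat" 2 of 3 times
print(next_word_prob(model, "the", "dog"))  # unseen pair
```

The unseen pair receives probability zero, which is the sparsity issue in practice. Real n-gram models address it with smoothing or back-off.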

23. What is the role of pre-processing in NLP?

Ans:

- Normalization: Lowercasing ensures uniformity in text by converting all characters to lowercase, preventing the model from treating differently cased words as distinct.

- Tokenization: Breaking down text into tokens (words or subwords) facilitates analysis by providing discrete units for processing and understanding.

- Stop Word Removal: Eliminating common words (e.g., “and,” “the”) that carry little semantic meaning helps focus the model on relevant content and reduces noise.

- Stemming and Lemmatization: Reducing words to their root forms (stemming) or dictionary forms (lemmatization) aids in consolidating variations of a word, enhancing model generalization.

- Handling Special Characters and Numbers: Cleaning text from non-alphabetic characters and numbers prevents them from introducing noise and irrelevant information.

- Handling Contractions and Abbreviations: Expanding contractions and abbreviations ensures consistency and proper representation of words in the text.

- Removing Redundant Spaces: Cleaning unnecessary spaces enhances text readability and avoids treating multiple spaces as separate tokens.

24. Discuss the difference between precision and recall in the context of NLP evaluation?

Ans:

Precision is the ratio of true positive predictions to the total predicted positives, emphasizing how well the model avoids false positives. Recall, on the other hand, is the ratio of true positives to the total actual positives, focusing on the model’s capability to find all relevant instances.

In NLP, precision is crucial for ensuring the identified positives are truly relevant, while recall is important for not missing any relevant information. Striking a balance between the two is often the goal.
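Both ratios, along with the F1 score that balances them, can be computed from label lists. A minimal sketch for binary classification, with made-up labels and a hypothetical function name:

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1]
print(precision_recall_f1(y_true, y_pred))
```

Here the model makes three positive predictions, two of them correct (precision 2/3), but finds only two of the four actual positives (recall 1/2).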

25. How does the concept of a language model differ from a language model in NLP?

Ans:

In the strict statistical sense, a language model assigns a probability to a sequence of words. In NLP practice, the term is also used more broadly for systems built on such models that can understand and generate human language.

26. What is the purpose of a stemming algorithm, and name a popular stemming algorithm?

Ans:

Stemming aims to normalize words by removing suffixes, allowing variations of a word to be represented by a common root. This helps in information retrieval, search engines, and text mining. The Porter stemming algorithm, devised by Martin Porter, is widely used for its simplicity and effectiveness in reducing words to their base or root form. It aids in simplifying text analysis and improving the accuracy of tasks like text classification and information retrieval.

27. Discuss the importance of context window size in word embeddings?

Ans:

- Semantic Relationships: A larger context window captures more distant relationships between words, providing a broader context for understanding their meanings.

- Local Context: Smaller window sizes focus on immediate neighbors, capturing more local context and nuances in word usage.

- Computational Efficiency: Larger windows may require more computational resources, affecting the training time and model complexity.

- Specificity vs. Generality: Smaller windows lead to more specific embeddings, while larger windows may result in more general representations.

28. What are the advantages and disadvantages of using deep learning models in NLP?

Ans:

Advantages:

- Complex Pattern Recognition: Deep learning models excel at capturing intricate patterns in language, enhancing NLP tasks like sentiment analysis and language translation.

- Feature Learning: They automatically learn relevant features from data, reducing the manual effort in feature engineering.

Disadvantages:

- Data Dependency: Deep learning models often require massive labeled datasets for effective training, posing challenges in domains with limited data.

- Computational Intensity: Training deep models demands substantial computational resources, making it resource-intensive and time-consuming.

29. Explain the concept of cross-validation in NLP model training?

Ans:

Cross-validation evaluates a model by splitting the data into k folds, training on k-1 of them, validating on the remaining fold, and rotating until every fold has served once as the validation set. It is especially valuable when working with limited data, as it maximizes the use of available samples for both training and evaluation. It aids in hyperparameter tuning by providing insights into the model’s performance under various configurations. Additionally, it helps detect issues like data leakage and ensures that the model’s success is not overly dependent on a specific subset of the data. Overall, cross-validation is a pivotal tool in refining and validating NLP models for real-world deployment.
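The fold rotation in k-fold cross-validation can be sketched as an index generator. This is an illustrative helper (`k_fold_indices` is a made-up name) that does not shuffle; in practice the data is usually shuffled, and often stratified by label, before splitting.

```python
def k_fold_indices(n_samples, k):
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size

for train_idx, val_idx in k_fold_indices(6, 3):
    print("train:", train_idx, "validate:", val_idx)
```

Each sample lands in the validation set exactly once, so the averaged score uses every example for evaluation without ever evaluating on data the model was trained on in that round.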

30. How can you handle imbalanced datasets in sentiment analysis tasks?

Ans:

- Resampling: Oversample the minority class or undersample the majority class to balance the dataset.

- Class Weights: Adjust class weights during training to give more importance to the minority class, helping the model focus on learning patterns in the imbalanced class.

- Different Metrics: Use evaluation metrics like F1 score, precision, and recall instead of accuracy, as they provide a more comprehensive assessment in imbalanced scenarios.

- Ensemble Methods: Employ ensemble techniques, such as bagging or boosting, to combine predictions from multiple models and enhance performance on the minority class.

31. What are the applications of TF-IDF in NLP?

Ans:

- Information Retrieval: TF-IDF is crucial for search engines, ranking documents based on relevance to a user’s query by considering the importance of words in documents.

- Text Summarization: It aids in identifying key terms for summarizing a document, focusing on the most significant words.

- Document Classification: TF-IDF is used in machine learning models for tasks like spam detection or sentiment analysis, helping prioritize words indicative of specific categories.

- Keyword Extraction: It assists in extracting essential keywords from documents, aiding in content understanding and indexing.

32. What is the purpose of the softmax function in natural language processing?

Ans:

The softmax function essentially squashes the raw scores of different classes into a probability distribution, making it easier to interpret and compare their likelihoods. It’s like turning the volume up on the most confident predictions while dampening the influence of less certain ones. This is particularly useful in NLP for tasks like sentiment analysis or language translation where assigning probabilities to different outcomes is crucial.
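A minimal sketch of softmax in plain Python, with the usual max-subtraction trick for numerical stability (the input scores are arbitrary example values):

```python
import math

def softmax(logits):
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

The outputs are positive and sum to 1, and the largest raw score receives a disproportionately large share of the probability mass.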

33. How do you handle out-of-vocabulary words in NLP models?

Ans:

- Subword and Character-Level Representations: Subword tokenization (for example, byte-pair encoding or WordPiece) and character-level embeddings let a model build a representation for an unseen word from smaller pieces it already knows.

- Transfer Learning for Robustness: Pre-trained embeddings and transfer learning expose the model to a much larger vocabulary than the task data alone, improving its handling of out-of-vocabulary words in varied linguistic contexts.

34. Discuss the challenges of sentiment analysis on social media data?

Ans:

The brevity of social media posts often limits the amount of available context, making it challenging to interpret nuanced emotions. Furthermore, the rapid spread of misinformation and fake news can skew sentiment analysis results, as the sentiment expressed may not align with factual accuracy. Addressing these challenges requires a combination of advanced natural language processing techniques and constant adaptation to the evolving online communication landscape.

35. What is BLEU score, and how is it used in machine translation evaluation?

Ans:

- N-gram Precision: BLEU calculates precision based on the overlap of n-grams (consecutive word sequences) between the machine-generated translation and human references.

- Brevity Penalty: It accounts for the length of the machine-generated translation compared to the reference translations, penalizing overly short outputs.

- Cumulative BLEU Scores: BLEU is often computed for various n-gram sizes (unigrams, bigrams, etc.) and then combined into a cumulative score, providing a more comprehensive evaluation.
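The pieces above fit together as follows. This is a simplified sentence-level sketch with a single reference and no smoothing, written for illustration; the original metric is defined at corpus level and supports multiple references, and the function names here are hypothetical.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, reference, n):
    cand_counts = Counter(ngrams(candidate, n))
    ref_counts = Counter(ngrams(reference, n))
    # Clip each candidate n-gram count by its count in the reference,
    # so repeating a correct word cannot inflate the score.
    overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
    total = sum(cand_counts.values())
    return overlap / total if total else 0.0

def bleu(candidate, reference, max_n=4):
    precisions = [modified_precision(candidate, reference, n)
                  for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # unsmoothed: one zero precision zeroes the geometric mean
    log_mean = sum(math.log(p) for p in precisions) / max_n
    c, r = len(candidate), len(reference)
    # Brevity penalty: 1 if the candidate is longer than the reference,
    # otherwise exp(1 - r/c), which shrinks as the candidate gets shorter.
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_mean)

reference = "the cat is on the mat".split()
print(bleu(reference, reference))
print(bleu("the cat".split(), reference, max_n=2))
```

The two-word candidate matches the reference perfectly on unigrams and bigrams, yet scores far below 1 because the brevity penalty punishes its length.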

36. Explain the concept of coreference resolution in NLP?

Ans:

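Coreference resolution is the task of identifying all expressions in a text that refer to the same entity. Consider this example: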

“The cat sat on the windowsill. It enjoyed the view outside.”

In coreference resolution, the model needs to understand that “It” in the second sentence refers to the same entity as “The cat” in the first sentence. This linkage ensures a seamless understanding that the cat is the one enjoying the view.

37. Discuss the role of hyperparameters in training NLP models?

Ans:

- Adjusting the learning rate impacts how quickly or slowly a model learns during the training. A higher learning rate might lead to faster convergence, but it risks overshooting the optimal values. On the other hand, a lower learning rate may ensure stability but could extend training time.

- Batch size, another key hyperparameter, determines the number of examples used in each iteration. Larger batch sizes often accelerate training, leveraging parallel processing, but they might require more memory. Smaller batches may enhance generalization but may slow down the training process.

38. How does the Long Short-Term Memory (LSTM) network address the vanishing gradient problem?

Ans:

- Memory Cells: LSTMs have memory cells that can store and retrieve information over long sequences, mitigating the vanishing gradient problem that arises in traditional RNNs.

- Gating Mechanism: LSTMs use gates (input, forget, and output gates) that regulate the flow of information. This allows the network to decide when to update, remember, or forget information, effectively addressing the vanishing gradient issue.

- Long-Term Dependencies: By selectively updating the memory cell through the gating mechanism, LSTMs can maintain information for longer durations, making them more effective in capturing dependencies in sequential data.

39. What is the purpose of the Adam optimizer in training NLP models?

Ans:

Adam (Adaptive Moment Estimation) is an optimization algorithm that adapts the learning rate of each parameter using running averages of the gradient and of its square. This per-parameter scaling makes it efficient for the sparse gradients common in NLP, where many word-related parameters are updated only rarely.

The optimizer also exhibits robust performance, requiring minimal hyperparameter tuning, contributing to its widespread adoption in the NLP community. With elements like bias correction and momentum, Adam ensures stable and efficient optimization across complex, high-dimensional models commonly encountered in NLP applications. Overall, the optimizer’s versatility and effectiveness in diverse scenarios make it a go-to choice for researchers and practitioners working on natural language processing tasks.

40. Discuss the concept of cross-entropy loss in NLP and its role in training language models?

Ans:

In training a sentiment analysis model, cross-entropy loss evaluates how well the predicted probability distribution aligns with the actual sentiment labels, guiding the model to minimize discrepancies such as predicting a positive sentiment when the true sentiment is negative.
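For a single example with one correct class, cross-entropy reduces to the negative log of the probability assigned to that class. A minimal sketch with made-up probabilities, using the class order [negative, positive] as an assumption of this example:

```python
import math

def cross_entropy(predicted_probs, true_index):
    # Negative log-probability assigned to the correct class.
    return -math.log(predicted_probs[true_index])

POSITIVE = 1  # index of the "positive" class in [negative, positive]

good = cross_entropy([0.1, 0.9], POSITIVE)  # model is confident and right
bad = cross_entropy([0.9, 0.1], POSITIVE)   # model is confident and wrong
print(round(good, 3), round(bad, 3))
```

The confidently wrong prediction incurs a much larger loss. In language modeling the same formula applies, with the vocabulary as the set of classes and the actual next word as the true class.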

41. What does word sense disambiguation entail within the realm of Natural Language Processing (NLP)?

Ans:

Word sense disambiguation (WSD) is the task of determining which meaning of a word is intended in a given context. It involves disentangling the multiple possible interpretations a word may have by considering the surrounding words: “bank,” for example, may refer to a financial institution or to the side of a river. Word sense disambiguation is crucial in NLP tasks to prevent misinterpretation and enhance the precision of language understanding models.

42. Discuss the role of regularization in preventing overfitting in NLP models?

Ans:

- Penalizing Large Weights: Regularization introduces penalty terms, such as the L1 and L2 norms, during training. These penalties discourage the model from assigning excessively large weights to certain features, preventing it from fitting the training data too closely.

- Generalization Improvement: By restraining the magnitude of weights, regularization encourages the model to capture more generalized patterns rather than memorizing specific details from the training data. This enhanced generalization aids in preventing overfitting, making the model more robust to unseen data in NLP tasks.

43. What are the challenges of handling negation in sentiment analysis?

Ans:

- Scope of Negation: Determining the scope of negation is challenging, as it requires identifying which words in a sentence are affected by negation. For instance, in the phrase “not happy,” the sentiment of “happy” is reversed, but this scope determination becomes more complex in longer and more intricate sentences.

- Contextual Negation: Context plays a crucial role in negation handling. The sentiment of a negated word might differ based on the overall context of the sentence. For example, “not bad” generally conveys a positive sentiment despite the negation of “bad.”

44. Explain the concept of cross-lingual information retrieval?

Ans:

- Bridging Language Barriers: Cross-lingual information retrieval aims to overcome language barriers, enabling users to access relevant information even if it’s written in a different language than their query.

- Multilingual Search Engines: It often involves the development of multilingual search engines that can process queries in various languages and retrieve documents from diverse linguistic sources.

45. Discuss the impact of word order in different languages on machine translation?

Ans:

Languages order their constituents differently: English typically follows subject-verb-object order, while Japanese and Hindi follow subject-object-verb, so a translation system must reorder words rather than translate them position by position. These challenges necessitate advanced neural network architectures, such as Transformer models, which excel at capturing long-range dependencies. Additionally, incorporating contextual information, like in contextual embeddings, helps address word order nuances. Training on parallel corpora with diverse language structures aids in improving translation accuracy. Despite advancements, maintaining fluency and coherence in translations remains an ongoing focus, as subtle variations in word order can significantly impact the conveyed meaning.

46. What is the purpose of a confusion matrix in evaluating classification models in NLP?

Ans:

A confusion matrix provides a detailed breakdown of model performance, showing true positive, true negative, false positive, and false negative predictions.

47. How can you address the issue of biased language models in NLP?

Ans:

Example: Bias Mitigation through Dataset Augmentation

To address gender bias, a model trained on biased data referring to doctors as predominantly male can be augmented with gender-neutral examples, ensuring a more balanced representation. This helps the model avoid reinforcing stereotypes and promotes fairer language understanding.

48. Discuss the concept of chunking in NLP?

Ans:

- Phrase Identification: Chunking identifies and extracts phrases or chunks from sentences, revealing syntactic structures beyond individual words.

- Part-of-Speech Tags: It often relies on part-of-speech tagging to recognize and group words based on their grammatical roles, such as identifying noun phrases or verb phrases.

- Named Entity Recognition: Chunking is integral to tasks like Named Entity Recognition, where identifying and extracting specific types of entities (e.g., names of people, organizations) is crucial.

49. What is the role of the cosine similarity measure in NLP?

Ans:

- Document Similarity: In NLP, cosine similarity quantifies the similarity between document vectors, where each vector represents the frequency of words in a document. Higher cosine similarity indicates greater similarity in content.

- Word Embeddings: It is commonly applied to assess the similarity between word embeddings. Word vectors with similar orientations (small angle between them) have higher cosine similarity, indicating semantic similarity between the corresponding words.
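Cosine similarity is the dot product of two vectors divided by the product of their lengths. A minimal sketch in plain Python; returning 0.0 for a zero vector is a convention chosen for this example, since the measure is undefined there:

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention for this sketch; undefined mathematically
    return dot / (norm_a * norm_b)

# Word-count vectors for two documents over the same vocabulary.
doc_a = [1, 2, 3]
doc_b = [2, 4, 6]  # same proportions, twice the length
print(cosine_similarity(doc_a, doc_b))
print(cosine_similarity([1, 0], [0, 1]))
```

The second document is simply a longer version of the first, and the similarity is still essentially 1. Because only the angle matters, document length does not distort the comparison.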

50. Explain the difference between a rule-based and a machine learning-based approach in NLP?

Ans:

Rule-Based Approach:

- Explicit Rules: Rule-based systems use predefined linguistic and grammatical rules to process and analyze text.

- Limited Adaptability: These approaches can be rigid, struggling with ambiguous or evolving language patterns that may not conform to predefined rules.

- Human-Defined Patterns: The rules are often crafted by linguists or domain experts based on their understanding of language structures.

Machine Learning-Based Approach:

- Learning from Data: Machine learning models learn patterns and representations from labeled data, allowing them to generalize to unseen examples.

- Flexibility: These models adapt to changing language dynamics, making them well-suited for tasks where rules might be challenging to define explicitly.

- Feature Extraction: Features are automatically learned from data, reducing the reliance on handcrafted linguistic rules.

51. How does the concept of word frequency impact the performance of topic modeling algorithms?

Ans:

- Common Word Dominance: High-frequency words in a corpus can dominate topics, leading to less informative or overly general topics. Topic modeling algorithms might assign more importance to these common words.

- TF-IDF Weighting: Term Frequency-Inverse Document Frequency (TF-IDF) is often employed to mitigate the impact of word frequency. It assigns higher weights to words that are frequent in a document but rare across the entire corpus, emphasizing more distinctive terms in topic modeling.

- Normalization: Normalizing word frequencies helps in achieving a balanced representation of terms across documents, preventing the dominance of common words in the identification of topics.
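
The TF-IDF idea can be illustrated with a small sketch. It uses one common unsmoothed variant (relative term frequency times log of inverse document frequency); libraries often apply smoothing and normalization on top of this, and the function name `tf_idf` is an assumption made for the example.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per document."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


corpus = ["the cat sat", "the dog barked", "the bird sang"]
weights = tf_idf(corpus)
# "the" appears in every document, so its weight is 0;
# "cat" appears in only one document, so its weight is positive.
```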

52. Discuss the challenges of handling informal language, like slang, in text classification?

Ans:

- Contextual Ambiguity: Slang often relies heavily on context, making it challenging for models to accurately interpret the intended meaning without a deep understanding of the surrounding text.

- Rapid Language Evolution: Informal language, including slang, evolves quickly, introducing new expressions and meanings. Keeping models updated with the latest linguistic trends is essential for maintaining classification accuracy.

- Diverse Regional Variations: Slang usage varies significantly across regions and communities. Text classification models need to be adaptable to regional nuances, ensuring effective classification across diverse linguistic landscapes.

53. Explain the role of a language model in text generation tasks?

Ans:

A language model uses patterns and context from the input it receives to predict and generate coherent sequences of words. It’s like having a smart assistant that understands context and can continue sentences in a meaningful way. This technology is widely used in various applications, from autocomplete suggestions to chatbots and even creative writing assistance.

54. What is the impact of data imbalance on NLP models, and how can it be addressed?

Ans:

- Data imbalance in NLP models can result in skewed predictions, where the model may struggle to accurately represent minority classes. For instance, in sentiment analysis, if there’s an insufficient representation of negative sentiments, the model may perform poorly on negative sentiment classification.

- To address this, oversampling techniques involve duplicating instances of the minority class, ensuring a more balanced dataset. For example, in a spam detection model, if spam messages are underrepresented, oversampling involves creating additional copies of existing spam examples to achieve a better balance in the training data.
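
A minimal sketch of random oversampling is shown below. It duplicates randomly chosen minority examples until every class matches the size of the largest class. The function name `random_oversample` and the sample messages are hypothetical, and in a real project this would be applied to the training split only.

```python
import random
from collections import Counter


def random_oversample(texts, labels, seed=0):
    """Duplicate minority-class examples until all classes are the same size."""
    rng = random.Random(seed)
    by_label = {}
    for text, label in zip(texts, labels):
        by_label.setdefault(label, []).append(text)
    target = max(len(items) for items in by_label.values())
    out_texts, out_labels = [], []
    for label, items in by_label.items():
        # Draw extra copies (with replacement) to reach the target size.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for text in items + extra:
            out_texts.append(text)
            out_labels.append(label)
    return out_texts, out_labels


texts = ["hi there", "see you soon", "lunch today?", "call me later",
         "WIN a FREE prize now"]
labels = ["ham", "ham", "ham", "ham", "spam"]
balanced_texts, balanced_labels = random_oversample(texts, labels)
print(Counter(balanced_labels))  # both classes now have 4 examples
```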

55. Discuss the trade-offs between using a rule-based and a statistical approach for named entity recognition?

Ans:

Rule-based systems often require manual crafting of rules, which can be time-consuming and might miss unexpected patterns. Meanwhile, statistical approaches may face challenges in handling rare entities or situations due to their reliance on patterns observed in the training data. It’s a constant tug-of-war between precision and coverage.

56. How does the concept of transfer learning apply to fine-tuning pre-trained language models?

Ans:

Imagine a language model initially trained on a broad range of internet text. For a customer support task, you’d fine-tune it using examples of support queries and responses. The model, having learned general language patterns, now refines its understanding to better address specific customer needs, making it more effective in handling support-related inquiries.

57. Explain the impact of word embeddings on mitigating the curse of dimensionality in NLP?

Ans:

- Semantic Similarity: Word embeddings encode semantic relationships, placing similar words closer in the vector space. This compact representation retains semantic meaning while reducing the dimensionality.

- Curse of Dimensionality: Traditional text representations create high-dimensional sparse vectors, leading to increased computational complexity and sparse data challenges. Word embeddings provide a dense, lower-dimensional representation, addressing these issues.

- Contextual Information: Word embeddings are learned from the contexts in which words appear, so words used in similar contexts receive similar vectors. This enables more effective modeling of language patterns than treating words as isolated, unrelated symbols.

58. What are the challenges in building chatbots that can handle natural language conversations effectively?

Ans:

- Ambiguity Handling: Chatbots must tackle the ambiguity in natural language, deciphering multiple meanings.

- Context Maintenance: Maintaining context is crucial; chatbots need to recall previous interactions for coherent responses.

- Dynamic Conversations: Adapting to shifts in user intent within a dialogue is essential for effective chatbot responses.

- Variability in Expression: Chatbots should understand and respond appropriately to diverse language expressions, including slang and colloquialisms.

59. Discuss the concept of entropy in information theory and its relevance in NLP?

Ans:

In information theory, entropy is a fundamental idea that measures the degree of uncertainty or surprise linked to an event. In NLP, entropy is relevant when analyzing the predictability of language. High entropy implies greater unpredictability, indicating a diverse range of words or characters. Low entropy, on the other hand, suggests more predictability in the sequence.
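
To make this concrete, Shannon entropy is H = -sum(p * log2(p)) over the probabilities p of each symbol. The sketch below estimates it from the observed frequencies in a token sequence; the function name `shannon_entropy` is an illustrative choice.

```python
import math
from collections import Counter


def shannon_entropy(tokens):
    """Entropy in bits of the empirical distribution over tokens."""
    counts = Counter(tokens)
    total = len(tokens)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


low = shannon_entropy(list("aaaa"))   # 0 bits: the next symbol is certain
high = shannon_entropy(list("abcd"))  # 2 bits: four equally likely symbols
```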

60. How can you assess the quality of machine translation outputs using human evaluations?

Ans:

In a human evaluation of machine translation, annotators might rate translations based on fluency, checking for natural language flow without grammatical errors. Adequacy is assessed by comparing the translated content’s relevance to the source text.

For instance, if the source text discusses technical details, a high-quality translation should convey these accurately. An overall quality score considers both fluency and adequacy, providing a comprehensive assessment of how well the machine translation captures the essence of the original content.

61. Explain the concept of document similarity and its applications in NLP?

Ans:

In NLP, techniques like cosine similarity or Jaccard similarity are employed to quantify the resemblance between documents. This is handy for tasks such as duplicate detection, clustering similar articles, and information retrieval systems like search engines. It helps to gauge the closeness in meaning or content between different textual documents.
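
As a small illustration of the Jaccard measure mentioned above, the sketch below compares two documents by the overlap of their word sets (size of the intersection divided by size of the union). The function name and the whitespace tokenization are simplifying assumptions.

```python
def jaccard_similarity(text_a, text_b):
    """Jaccard similarity between the word sets of two texts."""
    set_a = set(text_a.lower().split())
    set_b = set(text_b.lower().split())
    if not set_a and not set_b:
        return 1.0  # convention chosen here: two empty texts are identical
    return len(set_a & set_b) / len(set_a | set_b)


score = jaccard_similarity("the cat sat on the mat", "the cat lay on the rug")
# 3 shared words out of 7 distinct words overall, so score is 3/7
```

Unlike cosine similarity on count vectors, this ignores how often each word occurs.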

62. Discuss the challenges of sentiment analysis on multilingual text?

Ans:

- Language Nuances: Different languages convey sentiment in unique ways, making it challenging to create universal sentiment analysis models.

- Cultural Variations: Sentiment expressions vary across cultures, and a sentiment in one language might not translate directly to the same sentiment in another.

- Data Availability: Adequate labeled datasets for sentiment analysis might be limited in certain languages, affecting the model’s training and performance.

- Word Ambiguity: Words may have different meanings or sentiments in various languages, leading to potential misinterpretations.

63. What is the role of the activation function in a neural network, especially in NLP models?

Ans:

In NLP models, activation functions play a crucial role by introducing non-linearities, allowing the network to capture intricate patterns and relationships within language data. Non-linear activation functions enable neural networks to approximate complex functions, making them more adept at learning and representing the intricate structures present in natural language.

Popular activation functions like Rectified Linear Unit (ReLU) are effective in NLP models as they mitigate issues like vanishing gradients, facilitating the training of deep networks. Additionally, activation functions like sigmoid are used in binary classification tasks to squash output values between 0 and 1, representing probabilities.
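
The two functions named above are simple to write down. The sketch below is a plain-Python illustration for single values; deep learning frameworks provide vectorized versions.

```python
import math


def relu(x):
    """Rectified Linear Unit: passes positives through, zeroes out negatives."""
    return max(0.0, x)


def sigmoid(x):
    """Squashes any real number into the range (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # Equivalent form that avoids overflow for large negative inputs.
    z = math.exp(x)
    return z / (1.0 + z)


print(relu(-2.0), relu(3.5))  # 0.0 3.5
print(sigmoid(0.0))           # 0.5
```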

64. How does the concept of named entity linking differ from named entity recognition?

Ans:

- Named Entity Recognition (NER): Identifies and classifies entities in text, such as names of people, organizations, and locations.

- Named Entity Linking (NEL): Takes NER a step further by connecting identified entities to specific real-world entities, resolving ambiguity, and linking mentions of the same entity across different contexts.

- NER Focus: Identifying and categorizing entities within text.

- NEL Focus: Creating links between identified entities and real-world entities, providing context and disambiguation.

- Example: NER might recognize “Paris” as a location, and NEL would then link it to the real-world entity “Paris, France,” providing additional context and disambiguating it from other entities with the same name.

65. Discuss the impact of domain-specific language on NLP model performance?

Ans:

NLP models may struggle with domain-specific language, requiring domain adaptation or fine-tuning for optimal performance in specialized areas.

66. Explain the concept of a language model’s perplexity and its relationship with entropy?

Ans:

- Perplexity quantifies the uncertainty or surprise of a language model when predicting the next word in a sequence.

- It’s calculated as the inverse probability of the test set normalized by the number of words.

- Lower perplexity indicates better predictive performance and lower uncertainty in the model.

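- Entropy, closely related, measures the average amount of information (uncertainty) associated with each word prediction. Perplexity is the exponentiated cross-entropy of the model on the test set: with cross-entropy H measured in bits per word, perplexity equals 2^H.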
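
A short sketch of the calculation, assuming we already have the probability the model assigned to each actual word in a test sequence (the input list is hypothetical):

```python
import math


def perplexity(token_probs):
    """Perplexity from the model's probability for each observed token."""
    n = len(token_probs)
    # Average negative log2 probability per token (cross-entropy in bits).
    cross_entropy = -sum(math.log2(p) for p in token_probs) / n
    return 2 ** cross_entropy


uncertain = perplexity([0.25, 0.25, 0.25, 0.25])  # 4.0
confident = perplexity([1.0, 1.0])                # 1.0
```

A perplexity of 4 means the model was, on average, as uncertain as if it were choosing uniformly among four words.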

67. How can you handle spelling errors in NLP tasks like text classification?

Ans:

- Spell-Checking: Use a spell-checking tool to correct misspelled words automatically. For instance, transforming “teh” to “the” or “happey” to “happy” helps improve the accuracy of text.

- Normalization: Apply text normalization techniques to handle variations. For example, treating different forms of a word as the same, such as converting “running” and “runs” to their base form “run,” contributes to better consistency in text data.
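
One lightweight way to sketch the spell-checking step is fuzzy matching against a known vocabulary with the standard library's `difflib.get_close_matches`. The vocabulary and the function name `correct_token` below are hypothetical; a production system would use a much larger dictionary or a dedicated spell checker.

```python
import difflib

# Hypothetical vocabulary for illustration only.
VOCABULARY = ["the", "happy", "movie", "was", "great"]


def correct_token(token, vocabulary=VOCABULARY, cutoff=0.6):
    """Replace a token with its closest vocabulary word, if one is close enough."""
    if token in vocabulary:
        return token
    matches = difflib.get_close_matches(token, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else token


corrected = [correct_token(t) for t in "teh movie was happey".split()]
print(corrected)  # ['the', 'movie', 'was', 'happy']
```

Tokens with no sufficiently close match are left unchanged, which avoids "correcting" names or domain terms into unrelated words.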

68. Discuss the challenges of sentiment analysis in languages with rich morphology?

Ans:

In languages with rich morphology, words can undergo various changes based on context, making sentiment analysis tricky. Identifying the root sentiment-carrying unit becomes challenging due to inflections, prefixes, and suffixes altering word forms. This complexity requires more sophisticated algorithms and linguistic analysis to grasp nuanced emotions accurately.

69. What is the impact of context window size on word embeddings, especially in capturing semantic relationships?

Ans:

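A larger context window tends to capture broader, topical relationships (words that occur in the same kinds of documents), while a smaller window focuses on local context and tends to capture more syntactic or functional similarity (words that can substitute for one another).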

70. Explain the concept of topic coherence in topic modeling evaluation?

Ans:

- Interpretability: Topic coherence gauges how easily humans can understand and interpret the topics generated by a model.

- Meaningfulness: It evaluates the significance and relevance of words within each topic, ensuring coherent and semantically meaningful groupings.

- Semantic Consistency: Examining the consistency of word associations within topics helps measure the overall coherence, ensuring that words within a topic are contextually related.

- Algorithmic Evaluation: Topic coherence metrics provide a quantitative measure, aiding in the comparison of different topic models to identify the one that produces more coherent and interpretable topics.

71. Discuss the role of a language model in speech recognition systems?

Ans:

- Contextual Understanding: Language models enhance speech recognition by considering context and the probability of specific word combinations. This contextual understanding improves the accuracy of transcribing spoken words.

- Error Correction: By leveraging language patterns, the model can identify and correct potential errors in the recognized speech, leading to more accurate transcriptions.

- Vocabulary Support: Language models assist in handling a wide vocabulary, including rare or context-specific words, by predicting their occurrence based on linguistic context.

72. How does the use of attention mechanisms contribute to the interpretability of NLP models?

Ans:

Imagine a translation task where the source sentence is “The cat is on the mat,” and the model needs to translate it to French. With attention mechanisms, the model might assign higher attention weights to different words at each step. For instance:

- Step 1: generating “Le” (French) -> attention on “The” (English)

- Step 2: generating “chat” (French) -> attention on “cat” (English)

- Step 3: generating “est” (French) -> attention on “is” (English)

This dynamic attention allocation provides transparency into the translation process, showing that, at each step, the model focuses on specific words relevant to the translation. It helps users and developers understand which parts of the input are crucial for the model’s decisions, enhancing interpretability.

73. What is the impact of imbalanced class distribution on text classification models, and how can it be addressed?

Ans:

An uneven distribution of classes may cause models to prioritize the majority class, resulting in suboptimal performance for minority classes. Addressing this issue involves employing techniques such as class re-sampling, which includes over-sampling the minority class or under-sampling the majority class.

Additionally, utilizing evaluation metrics like F1 score, precision, and recall offers a more nuanced assessment, considering both false positives and false negatives. These strategies help mitigate bias and enhance the model’s ability to handle imbalanced text classification tasks.
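
The sketch below shows why these metrics matter on imbalanced data: a classifier that always predicts the majority class reaches 80% accuracy here yet scores zero on every metric for the minority class. The function name and the toy labels are illustrative assumptions.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall, and F1 for one class treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


y_true = ["ham"] * 8 + ["spam"] * 2
y_pred = ["ham"] * 10  # always predicts the majority class
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
spam_scores = precision_recall_f1(y_true, y_pred, positive="spam")
print(accuracy, spam_scores)  # 0.8 (0.0, 0.0, 0.0)
```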

74. Discuss the trade-offs between rule-based and machine learning-based approaches in part-of-speech tagging?

Ans:

Rule-based approaches may be simpler but lack adaptability; machine learning-based approaches learn patterns from data, offering flexibility but requiring sufficient training data.

75. Explain the role of a beam search algorithm in sequence generation tasks in NLP?

Ans:

- Candidate Exploration: Beam search explores a set of candidate sequences during sequence generation tasks, considering multiple possibilities.

- Quality Improvement: By retaining a set of top candidates at each step, beam search aims to enhance the overall quality of the generated sequences.

- Trade-off Parameter: The “beam width” parameter determines the number of candidates retained, allowing a balance between exploration and exploitation in the search space.

- Global Optimization: Unlike greedy search, beam search looks beyond immediate decisions, aiming for more globally optimized sequences in tasks like language generation.
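
The points above can be sketched with a toy example. The "model" here is a hypothetical lookup table that returns next-token probabilities based only on the last token; a real system would call a trained network instead. Scores are summed log-probabilities.

```python
import math

# Hypothetical next-token distributions, keyed by the previous token.
TOY_MODEL = {
    "<s>": {"a": 0.6, "b": 0.4},
    "a": {"x": 0.5, "y": 0.5},
    "b": {"z": 0.9, "x": 0.1},
    "x": {"</s>": 1.0},
    "y": {"</s>": 1.0},
    "z": {"</s>": 1.0},
}


def next_token_probs(sequence):
    return TOY_MODEL[sequence[-1]]


def beam_search(start, beam_width, max_len, end_token="</s>"):
    """Keep the beam_width highest-scoring partial sequences at each step."""
    beams = [([start], 0.0)]
    for _ in range(max_len):
        candidates = []
        for seq, score in beams:
            if seq[-1] == end_token:
                candidates.append((seq, score))  # finished: carry forward
                continue
            for token, prob in next_token_probs(seq).items():
                candidates.append((seq + [token], score + math.log(prob)))
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
        if all(seq[-1] == end_token for seq, _ in beams):
            break
    return beams


greedy_best = beam_search("<s>", beam_width=1, max_len=5)[0][0]
beam_best = beam_search("<s>", beam_width=2, max_len=5)[0][0]
print(greedy_best)  # ['<s>', 'a', 'x', '</s>'], probability 0.30
print(beam_best)    # ['<s>', 'b', 'z', '</s>'], probability 0.36
```

With a beam width of 1 the search is greedy and commits to "a" because it looks best locally. With a width of 2 it keeps "b" alive long enough to find the higher-probability sequence. Beam search is still a heuristic, so a wider beam improves the odds of finding the best sequence without guaranteeing it.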

76. What challenges do dialects pose in NLP, and how can models be adapted to handle them?

Ans:

Dialects present challenges in NLP due to variations in vocabulary, grammar, and expressions unique to specific regions. Adapting models requires addressing these variations. One approach involves incorporating dialect-specific data during model training to expose the system to diverse linguistic nuances. Alternatively, transfer learning techniques enable models to generalize across dialects by pre-training on a large dataset and fine-tuning on dialect-specific data. This adaptation ensures models understand and generate text that aligns with the linguistic characteristics of different dialects, enhancing their performance in diverse language contexts.

77. Discuss the concept of data augmentation in the context of NLP model training?

Ans:

- Variety in Training: Data augmentation introduces variations in the input data, such as paraphrasing, word substitution, or introducing grammatical changes. This exposes the model to a broader range of linguistic patterns, making it more adaptable to diverse real-world scenarios.

- Mitigating Overfitting: By providing a more extensive and varied dataset, data augmentation helps prevent overfitting, where models become too specific to the training examples. This regularization technique promotes better generalization, improving the model’s performance on unseen data.

78. How does the choice of word embedding model impact the performance of downstream NLP tasks?

Ans:

Different word embedding models, such as Word2Vec, GloVe, or fastText, may capture different aspects of word semantics, influencing task performance.

79. Explain the concept of mutual information in feature selection for text classification?

Ans:

- Quantifying Relevance: Mutual information calculates the extent to which the presence or absence of a feature provides information about the class labels in text data.

- Feature Selection Guidance: High mutual information indicates strong relevance to class distinctions, guiding the selection of features that contribute significantly to the classification task.

- Effective Dimensionality Reduction: By focusing on features with high mutual information, text classification models can effectively reduce dimensionality, improving efficiency and often enhancing overall classification accuracy.
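
As a rough sketch, mutual information between a binary feature (whether a term is present in a document) and the class label can be estimated from co-occurrence counts. The function name, the whitespace tokenization, and the tiny dataset are assumptions for illustration.

```python
import math
from collections import Counter


def mutual_information(docs, labels, term):
    """Mutual information (in bits) between term presence and class label."""
    n = len(docs)
    present = [term in doc.lower().split() for doc in docs]
    joint = Counter(zip(present, labels))
    count_x = Counter(present)
    count_y = Counter(labels)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        mi += p_xy * math.log2(p_xy / ((count_x[x] / n) * (count_y[y] / n)))
    return mi


docs = ["great movie", "great acting", "boring movie", "boring plot"]
labels = ["pos", "pos", "neg", "neg"]
mi_great = mutual_information(docs, labels, "great")  # 1 bit: fully predictive
mi_movie = mutual_information(docs, labels, "movie")  # 0 bits: uninformative
```

A feature selector would rank all terms by this score and keep the highest-scoring ones.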

80. Discuss the challenges and approaches to handling polysemy in word sense disambiguation?

Ans:

- Semantic Ambiguity: Polysemy introduces semantic ambiguity, making it challenging to discern the intended meaning of a word in a given context.

- Contextual Disambiguation: Leveraging surrounding words and context is a key approach. Analyzing the words in proximity helps models infer the intended sense of the polysemous word based on the context in which it appears.

- Sense-Tagged Datasets: Training models on sense-tagged datasets, where instances of polysemous words are annotated with their correct meanings, aids in learning contextual patterns and improving disambiguation accuracy.

81. Explain the concept of self-supervised learning and its applications in NLP?

Ans:

In self-supervised learning for NLP, a common approach is to mask certain words in a sentence and task the model with predicting those masked words based on the context provided by the remaining words.

For instance, if you consider the sentence: “The cat sat on the ____.”

The model is trained to predict the missing word “mat” by learning contextual relationships within the sentence.

This pre-training on unlabeled data helps the model capture rich linguistic representations, and the pre-trained model can then be fine-tuned for specific NLP tasks like sentiment analysis or named entity recognition using labeled data.
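
The way training examples are created from unlabeled text can be sketched as follows. This simplified version masks one randomly chosen word per sentence; models such as BERT mask a fraction of tokens instead, and the function name and `[MASK]` placeholder here are illustrative.

```python
import random


def make_masked_example(sentence, mask_token="[MASK]", seed=0):
    """Turn a raw sentence into an (input, target) pair by hiding one word."""
    rng = random.Random(seed)
    tokens = sentence.split()
    position = rng.randrange(len(tokens))
    target = tokens[position]
    masked = tokens[:position] + [mask_token] + tokens[position + 1:]
    return " ".join(masked), target


masked_input, target_word = make_masked_example("The cat sat on the mat")
print(masked_input, "->", target_word)
```

No human labeling is needed: the hidden word itself serves as the label, which is what makes the approach self-supervised.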

82. Discuss the impact of word frequency on the performance of sentiment analysis models?

Ans:

- Word frequency determines how much evidence a model sees for each word. Common words like “good” or “bad” appear often enough in training data to acquire straightforward sentiment associations. However, if a model is not equipped to handle rare words or specific domain-related terms, it might struggle with nuanced sentiment expressions.

- Consider a review saying “exquisite” – a less frequent word conveying positive sentiment. A robust sentiment analysis model should account for both common and rare words to accurately capture the sentiment nuances present in diverse language expressions.

83. What is the role of embeddings in graph-based representations of text data?

Ans:

Embeddings in graph-based representations capture relationships between words, enhancing the understanding of semantic connections in textual data.

84. Explain the concept of disfluency in natural language processing and its challenges?

Ans:

- Interruptions and Repetitions: Disfluencies include interruptions like “uh” or “um” and repetitions of words or phrases, both of which disrupt the flow of natural language communication.

- Speech Recognition Challenges: Disfluencies pose challenges for speech recognition systems, as accurately transcribing and interpreting interrupted or repeated segments can be complex.

- Semantic Ambiguity: Understanding the intended meaning becomes challenging when disfluencies introduce ambiguity. Deciphering whether a repetition is intentional or a correction influences accurate natural language processing.

- Adapting Models: NLP models need to be equipped to handle disfluencies, requiring training on datasets that encompass various speech patterns to enhance performance in real-world communication scenarios.

85. How can you address the issue of biased training data affecting NLP models?

Ans:

Imagine a sentiment analysis model trained on biased movie reviews that disproportionately favor a specific genre.

To address this, you’d need to diversify the training data with a representative sample from various genres, ensuring a more balanced perspective in the model’s understanding of sentiment.

Additionally, techniques like adversarial training or re-weighting can be applied to mitigate biases during the model training process.

86. Discuss the significance of context in coreference resolution tasks in NLP?

Ans:

Context is crucial in coreference resolution, helping models determine which expressions refer to the same entities within a text.

87. What is the role of unsupervised learning in topic modeling?

Ans:

- Through techniques like Latent Dirichlet Allocation (LDA), unsupervised learning identifies patterns and relationships among words in a corpus, allowing the model to infer topics without explicit guidance.

- It’s valuable when you have large datasets with unstructured text, as it autonomously uncovers underlying themes, aiding in document organization, summarization, and understanding the content’s inherent structure.

88. Explain the challenges of handling semantic drift in word embeddings?

Ans:

- Contextual Changes: Over time, words may acquire new meanings or shift in context. Word embeddings, originally trained on historical data, may not capture these evolving semantics, leading to discrepancies between the model’s understanding and current language usage.

- Cultural Shifts: Language is influenced by cultural shifts, and word meanings can transform based on societal changes. Word embeddings might struggle to adapt to these cultural nuances, potentially misinterpreting or misrepresenting words in a contemporary context.

89. How does the use of convolutional neural networks (CNNs) differ in NLP compared to image processing?

Ans:

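In NLP, CNNs typically apply one-dimensional convolutions over a sequence of token embeddings, so each filter captures local, n-gram-like patterns. In image processing, they apply two-dimensional convolutions over a pixel grid to detect spatial features such as edges and textures.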

90. Discuss the role of discourse analysis in understanding the structure of written or spoken communication?

Ans:

- Contextual Understanding: Discourse analysis goes beyond individual sentences, considering the broader context to unveil implicit meanings. It helps decipher the relationships between sentences and how they contribute to the overall message, enhancing comprehension.

- Identifying Patterns: By scrutinizing linguistic patterns, discourse analysis reveals recurring structures, such as the use of specific phrases, rhetorical devices, or discourse markers. Recognizing these patterns aids in understanding the organization and intent of the communication.

91. Explain the concept of topic coherence and its importance in evaluating topic models?

Ans:

Topic coherence is crucial in evaluating topic models as it reflects the model’s ability to generate coherent and semantically meaningful topics. When topics exhibit high coherence, it implies that the words within a topic share strong semantic relationships, enhancing the interpretability of the model’s output. Evaluating coherence aids researchers and practitioners in selecting optimal hyperparameters and refining models to produce more coherent and insightful topics, ensuring the utility of topic modeling in various applications like document summarization or content recommendation.

92. How does the use of neural language models, like GPT-3, impact natural language understanding tasks?

Ans:

Neural language models, such as GPT-3, excel in capturing context and nuances, significantly impacting tasks like text generation, summarization, and translation.

93. Discuss the challenges and strategies for handling ambiguity in intent recognition for chatbots?

Ans:

Ambiguity Challenges:

- Homonymy and Polysemy: Words with multiple meanings can lead to confusion.

- Vague User Queries: Users may express intents imprecisely, making it challenging to discern their true intention.

Strategies for Handling Ambiguity:

- Context Utilization: Leverage contextual information from previous interactions to better understand user intent.

- Clarifying Questions: Prompt users for additional information to clarify ambiguous queries and refine intent recognition.

94. What is the impact of named entity recognition errors on downstream information extraction tasks?

Ans:

Errors in named entity recognition can propagate to information extraction tasks, affecting the accuracy of extracting meaningful information from text.

95. Explain the concept of feature engineering in NLP and its role in model performance?

Ans:

- Informative Features: Feature engineering enhances model understanding by creating meaningful input representations in NLP tasks.

- TF-IDF and Word Embeddings: Techniques like TF-IDF capture term importance, while word embeddings represent semantic relationships, both crucial for model comprehension.

- Syntactic Features: Incorporating syntactic structures, such as part-of-speech tags, aids in capturing linguistic nuances, contributing to improved model performance in natural language processing tasks.

96. Discuss the challenges of handling sarcasm in sentiment analysis tasks?

Ans:

- Contextual Nuances: Sarcasm often relies on context and tone, making it difficult for models to discern the intended sentiment solely from words.

- Literal vs. Intended Sentiment: The literal meaning of sarcastic statements may convey a different sentiment than what the speaker intends, leading to misinterpretation.

- Subjectivity Variability: Individuals may interpret sarcasm differently, adding variability to sentiment labels and making it challenging to establish a universal understanding.

- Irony Detection: Sarcasm is a form of verbal irony, and detecting this requires models to grasp subtle cues, making it a complex task within sentiment analysis.

- Training Data Representation: Ensuring that training data includes diverse examples of sarcastic expressions is crucial to enhance a model’s ability to handle this nuanced form of communication.

97. How can you assess the diversity of topics generated by a topic modeling algorithm?

Ans:

Assessing topic diversity involves examining the distinctiveness and coverage of topics generated by the algorithm across a document collection.
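
One simple way to quantify distinctiveness, offered here as a sketch rather than the only option, is the fraction of unique words among the top words of all topics: a value near 1 means topics share few words, while a low value means they overlap heavily. The function name and the sample topics are hypothetical.

```python
def topic_diversity(topics, top_k=10):
    """Share of unique words among the top_k words of every topic."""
    top_words = [word for topic in topics for word in topic[:top_k]]
    if not top_words:
        return 0.0
    return len(set(top_words)) / len(top_words)


# Each topic is a list of its top words, most probable first.
topics = [
    ["game", "team", "season"],
    ["market", "stock", "price"],
    ["game", "player", "team"],
]
diversity = topic_diversity(topics, top_k=3)  # 7 unique words out of 9
```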

98. Explain the concept of language generation and its applications in NLP?

Ans:

- Dialog Systems: Language generation is fundamental in creating engaging and context-aware conversations within dialog systems, making interactions more natural and user-friendly.

- Story Generation: In creative writing or entertainment domains, language generation contributes to generating narratives, stories, or dialogues for various purposes, enhancing storytelling capabilities.

- Language Translation: Language generation plays a role in machine translation by generating grammatically correct and contextually appropriate translations, facilitating cross-language communication.

99. Discuss the impact of tokenization choices on the performance of NLP models?

Ans:

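Tokenization choices, such as word-based or subword-based tokenization, can impact model performance by influencing the granularity of input representations. Word-based tokenization keeps words intact but must map unseen words to an unknown token, whereas subword-based tokenization splits rare words into smaller known pieces, reducing out-of-vocabulary problems at the cost of longer sequences.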

100. How does the choice of loss function influence the training of sequence-to-sequence models in NLP?

Ans:

The loss function determines what the model is optimized to get right. For instance, token-level cross-entropy loss, the standard choice for sequence-to-sequence models, optimizes the probability of each target token individually, potentially leading to over-smoothed outputs.

On the other hand, a sequence-level loss, which scores the entire generated sequence rather than individual tokens, can better capture global coherence and result in more contextually relevant and coherent outputs.