Data science is a fast-growing field that combines domain knowledge, computing skills, and statistical expertise to extract useful insights from data. It covers the complete data lifecycle, including data gathering, cleaning, analysis, and interpretation. Data scientists find patterns and trends in data using a range of tools and techniques, including data mining, machine learning, and data visualisation.

1. What is data science?

Ans:

Data science is a multidisciplinary subject that uses domain knowledge, computer science, statistics, and other tools to mine useful information from vast volumes of data. It covers various stages, from data collection and cleaning to analysis and visualization, and employs techniques from machine learning, predictive modeling, and big data analytics.

2. Explain supervised and unsupervised learning.

Ans:

The two primary subcategories of machine learning are supervised and unsupervised learning.

Supervised Learning: The algorithm is trained on a labeled dataset, in which each example is paired with the correct output. The objective is to learn a mapping from inputs to outputs.

Unsupervised Learning:

This entails training on an unlabeled dataset with the purpose of identifying patterns or structures in the data. Because no labels are supplied, the algorithm attempts to group or cluster data based on similarities or differences.

3. What is the bias-variance tradeoff?

Ans:

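It describes the balance between model complexity and the risk of over-simplifying. Simple models tend to have high bias, while complex models tend to have high variance, so reducing one usually increases the other. Overfitting corresponds to high variance and low bias, while underfitting corresponds to high bias and low variance.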

Bias: Refers to errors due to overly simplistic assumptions in the learning algorithm.

Variance: Represents errors due to the model's sensitivity to small fluctuations in the training data, typically caused by too much complexity in the learning algorithm.
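For squared-error loss the tradeoff can be stated precisely. Assuming the data is generated as y = f(x) + ε, where ε is noise with mean 0 and variance σ², and taking expectations over possible training sets and the noise, the expected prediction error of a fitted model f̂ at a point x decomposes as follows (a standard result, stated here without derivation):

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{Bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{Variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

Only the first two terms depend on the model, which is why lowering one at the expense of the other is the lever available when choosing model complexity.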

4. What is overfitting?

Ans:

Overfitting happens when a machine learning model fits its training data too closely, to the point that it captures noise, random fluctuations, and outliers rather than the underlying pattern. Such a model performs impressively on its training data but is likely to struggle with new, unseen data because it does not generalize well.

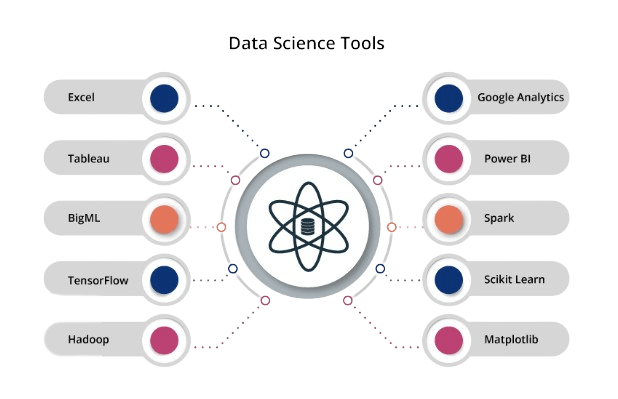

5. Name some popular data visualization tools.

Ans:

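Matplotlib and Seaborn: Python plotting libraries. Matplotlib produces static, animated, and interactive visualizations; Seaborn builds on Matplotlib to provide a higher-level interface for statistical graphics.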

Plotly: An interactive graphing library.

6. Define precision and recall.

Ans:

Precision: It quantifies the accuracy of positive predictions. It’s the ratio of correctly predicted positive observations to the total predicted positives.

Recall (or Sensitivity): It measures the ability of the classifier to find all positive instances. It’s the ratio of correctly predicted positive observations to all the actual positives in the dataset.
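In formula form, precision = TP / (TP + FP) and recall = TP / (TP + FN). The following is a minimal pure-Python sketch of both; the function name `precision_recall` and the toy labels are hypothetical, and returning 0.0 when a denominator is zero is an assumed convention.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Return (precision, recall) for binary labels, treating `positive` as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    # Guard against division by zero when there are no predicted or no actual positives.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 1, 1, 0, 0, 1]
print(precision_recall(y_true, y_pred))  # (0.75, 0.75)
```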

7. What is a confusion matrix?

Ans:

It’s a table used to evaluate the performance of a classification model, showing actual vs. predicted classifications.

True Positives (TP): Instances that are positive and predicted as positive.

True Negatives (TN): Instances that are negative and predicted as negative.

False Positives (FP): Instances that are negative but predicted as positive.

False Negatives (FN): Instances that are positive but predicted as negative.
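A binary confusion matrix is just these four counts. As a minimal sketch (the function name `confusion_counts` and the example labels are hypothetical), they can be tallied in one pass over the actual and predicted labels:

```python
from collections import Counter

def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP and FN for a binary classifier."""
    counts = Counter()
    for t, p in zip(y_true, y_pred):
        actual = t == positive
        predicted = p == positive
        if actual and predicted:
            counts["TP"] += 1
        elif not actual and not predicted:
            counts["TN"] += 1
        elif predicted:  # predicted positive, actually negative
            counts["FP"] += 1
        else:            # predicted negative, actually positive
            counts["FN"] += 1
    return {k: counts[k] for k in ("TP", "TN", "FP", "FN")}

print(confusion_counts([1, 0, 1, 1, 0, 1], [1, 1, 1, 0, 0, 1]))
# {'TP': 3, 'TN': 1, 'FP': 1, 'FN': 1}
```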

8. How do you handle missing data?

Ans:

Removal: Delete the rows with missing values. This method is straightforward but can lead to loss of valuable data, especially if the dataset isn’t large.

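Mean/Median Imputation: Replace missing values with the column's mean or median. Appropriate for numerical data; the median is more robust to outliers.

Mode Imputation: Replace missing values with the column's mode. Appropriate for categorical data.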

Predictive Modeling: Use algorithms, like decision trees or KNN, to predict and impute missing values based on other columns.
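A minimal pandas sketch of removal and simple imputation, assuming a small hypothetical DataFrame with gaps in one numeric and one categorical column:

```python
import numpy as np
import pandas as pd

# Hypothetical toy data with missing values.
df = pd.DataFrame({
    "age": [25, np.nan, 40, 35, np.nan],
    "city": ["Paris", "Lyon", None, "Paris", "Paris"],
})

# Removal: drop every row that contains at least one missing value.
dropped = df.dropna()

# Imputation: median for the numeric column, mode for the categorical one.
imputed = df.copy()
imputed["age"] = imputed["age"].fillna(imputed["age"].median())
imputed["city"] = imputed["city"].fillna(imputed["city"].mode()[0])

print(dropped)
print(imputed)
```

Here only two of the five rows survive `dropna()`, which illustrates how quickly removal can discard data. For model-based imputation, scikit-learn's `KNNImputer` is one existing option.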

9. What is a decision tree?

Ans:

A decision tree is a machine learning model with a flowchart-like tree structure. It consists of internal nodes (each testing an attribute or feature), branches (representing the outcomes of those tests, i.e. the decision rules), and leaves (representing the final outcomes or decisions).

10. What is regularisation and why is it used?

Ans:

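Regularisation is a method used in machine learning and statistical modelling to prevent overfitting by adding a penalty term to the loss function. The penalty discourages the model from fitting too closely to the training data, usually by penalising large coefficients. The two most common regularisation techniques are L1 (Lasso), which penalises the sum of the absolute values of the coefficients and can shrink some of them to exactly zero, and L2 (Ridge), which penalises the sum of their squares.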
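To see the L2 penalty at work, the sketch below uses the closed-form Ridge solution w = (XᵀX + λI)⁻¹Xᵀy. It is a minimal illustration under stated assumptions: no intercept term, synthetic data, and a hypothetical function name `ridge_fit`. As the penalty strength λ grows, the coefficients shrink towards zero.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form L2-regularised least squares (no intercept, for simplicity)."""
    n_features = X.shape[1]
    # Minimises ||y - Xw||^2 + lam * ||w||^2.
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + rng.normal(scale=0.1, size=50)

for lam in (0.0, 10.0, 1000.0):
    w = ridge_fit(X, y, lam)
    print(lam, np.round(w, 3), round(float(np.linalg.norm(w)), 3))
```

With λ = 0 this reduces to ordinary least squares; L1 has no closed form and is usually solved iteratively (for example by coordinate descent).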

11. What are ensemble methods?

Ans:

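Ensemble methods combine the predictions of multiple models to achieve better performance than any single model. Two common approaches are:

Bagging (Bootstrap Aggregating):

Trains multiple models on different bootstrap samples of the training data and aggregates their predictions. Example: Random Forest.

Boosting: Trains models sequentially, with each new model focusing on the training instances that the previous models got wrong (AdaBoost, for example, increases the weights of misclassified instances). Examples: AdaBoost, Gradient Boosting.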

12. What is cross-validation?

Ans:

Cross-validation is a technique for assessing how a model will generalise to an independent dataset. It involves splitting the data multiple times and training and testing the model on these splits. The most common method is k-fold cross-validation, in which the data is split into k subsets (folds). The model is trained on k-1 folds and tested on the remaining one; this is repeated k times so that each fold serves as the test set exactly once, and the k scores are averaged.
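The fold bookkeeping can be sketched in a few lines of Python. This is a minimal illustration with a hypothetical function name `k_fold_indices`; it does not shuffle the data first, which real pipelines usually do (scikit-learn's `KFold` offers a `shuffle` option).

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs; each sample lands in exactly one test fold."""
    indices = list(range(n_samples))
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size

for train_idx, test_idx in k_fold_indices(10, 3):
    print("train:", train_idx, "test:", test_idx)
```

A model would be fitted on each `train_idx`, scored on the matching `test_idx`, and the k scores averaged.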

13. Explain the difference between Type I and Type II errors.

Ans:

| Error Type | Type I Error (False Positive) | Type II Error (False Negative) |
| --- | --- | --- |
| Explanation | Incorrectly rejecting a true null hypothesis | Failing to reject a false null hypothesis |
| Also referred to as | Alpha error or false positive | Beta error or false negative |
| Practical effect | Concluding that a relationship or effect exists when none does | Overlooking a relationship or effect that actually exists |
| Probability symbol | α (alpha) | β (beta) |

14. What is principal component analysis (PCA)?

Ans:

PCA is a dimensionality reduction approach that finds the orthogonal directions (principal components) along which the data's variance is maximized. By projecting the original features onto the first few of these axes, PCA can reduce the number of dimensions in the dataset while retaining most of the original variance.
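One standard way to compute principal components is the singular value decomposition (SVD) of the mean-centred data. The sketch below assumes a hypothetical helper `pca` and synthetic 3-D data that varies almost entirely along one direction, so a single component should capture nearly all of the variance.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components via SVD of the centred data."""
    X_centred = X - X.mean(axis=0)
    # Rows of Vt are the principal axes, ordered by decreasing singular value.
    _, S, Vt = np.linalg.svd(X_centred, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = S**2 / (len(X) - 1)
    ratio = explained_variance[:n_components] / explained_variance.sum()
    return X_centred @ components.T, ratio

rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, 2 * t, rng.normal(scale=0.1, size=200)])

Z, ratio = pca(X, n_components=1)
print(Z.shape, ratio)
```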

15. Define A/B testing.

Ans:

A/B testing, often known as split testing, is a way of comparing two versions of a website or app to see which one performs better on a given metric (e.g., conversion rate). Users are randomly allocated to either the A (control) or B (variant) group, and the results for the two groups are compared to determine which version is more successful.
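Whether a difference in conversion rates is larger than chance would explain is often checked with a two-proportion z-test. This is a minimal sketch, not the only valid test: the function name and the conversion counts are hypothetical, and it uses the pooled-variance form with a two-sided p-value.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled variance)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, expressed with erf.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value

# Hypothetical counts: 200/5000 conversions for A, 250/5000 for B.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below a significance level chosen in advance (commonly 0.05) would conventionally be read as evidence that the variants differ.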

16. What is the difference between a DataFrame and a Series in pandas?

Ans:

In the pandas library, a Series is a one-dimensional labelled array that can hold data of any type. It's analogous to a single column in a spreadsheet or a vector in R. A DataFrame, on the other hand, is a two-dimensional labelled data structure whose columns can have different types, akin to a spreadsheet, a SQL table, or a data frame in R. You can think of it as a collection of Series objects that share a common index.
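A short illustration of the relationship, using hypothetical fruit prices: selecting one column of a DataFrame returns a Series, and the columns share the DataFrame's index while keeping their own types.

```python
import pandas as pd

# A Series: one-dimensional, labelled, single dtype.
prices = pd.Series([3.5, 2.0, 4.25], index=["apple", "banana", "cherry"], name="price")

# A DataFrame: two-dimensional; each column is a Series sharing the same index.
df = pd.DataFrame({
    "price": prices,
    "in_stock": pd.Series([True, False, True], index=prices.index),
})

print(type(df["price"]))  # <class 'pandas.core.series.Series'>
print(df.dtypes)          # columns can hold different types
print(df.loc["banana"])   # a single row is also returned as a Series
```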

17. What are hyperparameters in an ML model?

Ans:

Hyperparameters are settings of a model that are not learned from the data; they are set before the learning process begins. For instance, in a neural network, the learning rate, the number of layers, and the number of nodes in each layer are hyperparameters. In a decision tree, the maximum depth of the tree is a hyperparameter.

18. Describe gradient descent.

Ans:

Gradient descent is an iterative optimisation technique used to minimise functions (its counterpart, gradient ascent, maximises them). In machine learning, it is typically used to adjust model parameters so as to minimise the cost function. Starting from an initial set of parameters, the algorithm computes the gradient (slope) of the cost function and moves the parameters a small step, scaled by the learning rate, in the direction of the negative gradient, which is the direction of steepest descent. This is repeated until the cost stops decreasing.
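The update rule x ← x − η·∇f(x) fits in a few lines. The sketch below is a minimal one-dimensional illustration with a hypothetical helper name; it minimises f(x) = (x − 3)², whose gradient is 2(x − 3), so the iterates should approach 3.

```python
def gradient_descent(grad, x0, learning_rate=0.1, n_steps=100):
    """Repeatedly step against the gradient: x <- x - learning_rate * grad(x)."""
    x = x0
    for _ in range(n_steps):
        x = x - learning_rate * grad(x)
    return x

# Minimise f(x) = (x - 3)^2, whose gradient is f'(x) = 2 * (x - 3).
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_min, 4))  # 3.0
```

With this learning rate the distance to the minimum shrinks by a factor of 0.8 per step; a learning rate above 1.0 would make this particular example diverge.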

19. Explain the ROC curve and AUC.

Ans:

The ROC (Receiver Operating Characteristic) curve graphically depicts a classifier's performance across all classification thresholds. It plots the True Positive Rate (sensitivity) against the False Positive Rate (1 - specificity) at various threshold settings. The AUC (Area Under the Curve) is a single scalar that summarises the classifier's performance over all possible thresholds; it equals the probability that a randomly chosen positive instance is scored higher than a randomly chosen negative one. An AUC of 1 denotes a perfect classifier, while an AUC of 0.5 denotes no discriminating ability, which is equivalent to random guessing.
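The ranking interpretation of AUC gives a direct way to compute it without drawing the curve. This minimal sketch (hypothetical function name and scores) compares every positive with every negative and counts ties as half, which is O(n²) and only meant for illustration.

```python
def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(y_true, scores))  # 0.75
```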

20. What’s the difference between clustering and classification?

Ans:

Both clustering and classification involve grouping data points based on similarities. However, classification is a supervised technique where the groups (classes) are predefined, and the goal is to categorise new data points into these known classes. Clustering, on the other hand, is an unsupervised technique where the number or nature of the groups (clusters) is not known in advance, and the goal is to discover these groups based on the data.

21. Define TF-IDF.

Ans:

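TF-IDF (Term Frequency-Inverse Document Frequency) is a statistic used in NLP and information retrieval to indicate how important a term is to a document within a collection or corpus. A term's weight increases with how often it appears in the document (term frequency) and decreases with the number of documents in the corpus that contain it (inverse document frequency), so very common words such as “the” receive low weights.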
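Many TF-IDF variants exist (libraries often add smoothing or normalisation). This minimal sketch assumes the plain form tf = count / document length and idf = log(N / df), whitespace tokenisation, and a hypothetical three-document corpus.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per document, using tf = count/len and idf = log(N/df)."""
    tokenised = [doc.lower().split() for doc in docs]
    n_docs = len(tokenised)
    # Document frequency: the number of documents each term appears in.
    df = Counter(term for tokens in tokenised for term in set(tokens))
    weights = []
    for tokens in tokenised:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

docs = ["the cat sat", "the dog sat", "the cat ran"]
for w in tf_idf(docs):
    print({t: round(v, 3) for t, v in w.items()})
```

Because “the” occurs in every document, its idf is log(1) = 0 and its weight vanishes, while the rarer “dog” gets the highest weight.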

22. What is the curse of dimensionality?

Ans:

It refers to the problems that arise as the number of dimensions (features) grows and the feature space becomes progressively sparse. In high-dimensional spaces, distances between data points become less distinguishable, which reduces the effectiveness of distance-based algorithms; the risk of overfitting increases; and the amount of data and computation required can grow exponentially.

23. How do you evaluate a regression model?

Ans:

Mean Absolute Error (MAE): The mean of the absolute differences between the actual and predicted values.

Mean Squared Error (MSE): The mean of the squared differences between the actual and predicted values.
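Both metrics are one-liners. This minimal pure-Python sketch uses hypothetical function names and toy values:

```python
def mae(y_true, y_pred):
    """Mean Absolute Error: the average of |actual - predicted|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mse(y_true, y_pred):
    """Mean Squared Error: the average of (actual - predicted)^2."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.5, 5.0, 4.0, 8.0]
print(mae(y_true, y_pred), mse(y_true, y_pred))  # 0.875 1.3125
```

The single error of 2.0 contributes 4.0 to the MSE sum but only 2.0 to the MAE sum, which is why MSE penalises large errors more heavily.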

24. Describe collaborative filtering.

Ans:

It’s a method used in recommendation systems where users receive suggestions based on the likes and dislikes of similar users.

User-based: Recommends products by finding users who are similar to the target user and suggests items those similar users have liked.

Item-based: Finds items that are similar to those the target user liked and recommends similar items.

25. What distinguishes “bagging” from “boosting”?

Ans:

Bagging (Bootstrap Aggregating): Involves training separate models on different subsets of the training data (drawn with replacement) and then averaging (for regression) or voting (for classification) to produce the final prediction. It helps reduce variance. Example: Random Forest.

Boosting: Trains models sequentially, with each new model focusing on correcting the errors of the preceding ones. It mainly helps reduce bias. Examples: AdaBoost, Gradient Boosting.

26. What’s the difference between SQL and NoSQL?

Ans:

SQL databases are relational: they store data in structured tables of rows and columns with a predefined (fixed) schema, and use SQL (Structured Query Language) for querying and updating the data. NoSQL databases are non-relational and often schema-less; they store and retrieve data in forms other than tabular relations, such as document, key-value, wide-column, or graph databases.

27. Define deep learning.

Ans:

Deep learning is a subfield of machine learning based on artificial neural networks, which are loosely modelled after the networks of neurons in the human brain. Deep learning models are distinguished by their many layers of neurons, which enable them to learn data representations automatically.

28. How does a Random Forest work?

Ans:

Random Forest builds multiple decision trees and merges their outputs (via majority voting for classification or averaging for regression) to improve accuracy and control overfitting. Each tree is trained on a different subset of the data (a bootstrap sample), and each split considers only a random subset of the features. This randomness decorrelates the trees, making the ensemble more robust and less prone to overfitting.

29. Explain the K-means clustering algorithm.

Ans:

K-means partitions data into K clusters by iteratively assigning points to the nearest centroid and recalculating centroids until convergence.

- Initialize ‘k’ centroids randomly.

- Create ‘k’ clusters by assigning each data point to the closest centroid.

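- Recalculate the centroid of each cluster as the mean of all the data points in that cluster.

- Repeat the assignment and update steps until the centroids stop changing (convergence) or a maximum number of iterations is reached.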
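The procedure can be sketched with NumPy as follows. This is a minimal illustration of the basic algorithm (often called Lloyd's algorithm) with a hypothetical function name and synthetic two-blob data; it seeds centroids with randomly chosen data points, whereas libraries such as scikit-learn default to the smarter k-means++ initialisation.

```python
import numpy as np

def k_means(X, k, n_iter=100, seed=0):
    """Basic k-means: random initial centroids, then alternate assign/update until stable."""
    rng = np.random.default_rng(seed)
    # Initialise: pick k distinct data points as the starting centroids.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign each point to its nearest centroid (Euclidean distance).
        distances = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        # Update each centroid to the mean of its assigned points
        # (keep the old centroid if a cluster ends up empty).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Stop once the centroids no longer move.
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 0.5, size=(50, 2)), rng.normal(5, 0.5, size=(50, 2))])
labels, centroids = k_means(X, k=2)
print(np.round(centroids, 2))
```

Because the result depends on the starting centroids, k-means is commonly run several times with different seeds and the solution with the lowest within-cluster sum of squares is kept.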

30. What are support vector machines (SVM)?

Ans:

Support vector machines (SVMs) are supervised learning models used for classification and regression. The basic purpose of an SVM is to find the optimal hyperplane for separating the classes, namely the one with the largest margin between them. With two features, a linear SVM's hyperplane is simply a straight line. For data that is not linearly separable, SVMs use a technique known as the kernel trick, which implicitly maps the data into a higher-dimensional space where a linear hyperplane can separate it.

31. What is the difference between long and wide format data?

Ans:

Both long and wide formats are ways to structure observational data.

Wide Format: Each subject's repeated responses are in a single row, with each response in a separate column. It's similar to a matrix, with rows representing subjects and columns representing observations.

Long Format: Each row holds a single time point for a single subject. It's more like a list, where every observation for every subject gets its own row. This format is typically more convenient for time series and panel data analysis.
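In pandas, `melt` converts wide data to long and `pivot` converts it back. A minimal sketch with hypothetical weekly scores for two subjects:

```python
import pandas as pd

# Hypothetical wide data: one row per subject, one column per time point.
wide = pd.DataFrame({
    "subject": ["A", "B"],
    "week_1": [5.0, 6.0],
    "week_2": [5.5, 6.2],
})

# Wide -> long: one row per (subject, time point).
long = wide.melt(id_vars="subject", var_name="week", value_name="score")
print(long)

# Long -> wide again.
back = long.pivot(index="subject", columns="week", values="score").reset_index()
print(back)
```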

32. Explain the concept of “imbalanced datasets.”

Ans:

An imbalanced dataset refers to a classification problem in which one class significantly outnumbers the others. For instance, in binary classification, if 90% of the data belongs to Class A and only 10% to Class B, the dataset is imbalanced. This creates challenges because many algorithms are biased towards the majority class: a model that always predicts Class A would reach 90% accuracy while never identifying a single Class B instance. Accuracy is therefore misleading, and metrics such as precision, recall, or the F1 score are more informative.

33. How do you deal with multicollinearity?

Ans:

Techniques include removing correlated variables, using regularization, or employing methods like Principal Component Analysis (PCA).

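Variance Inflation Factor (VIF): A measure to identify multicollinearity. A higher VIF means a variable is more strongly explained by the other predictors; values above 5 or 10 are common rules of thumb for concern.

Remove Variables: Drop one of the correlated variables.

Principal Component Analysis (PCA): Replace the correlated variables with a smaller set of uncorrelated components.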
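The VIF of a predictor j is 1 / (1 − R²ⱼ), where R²ⱼ comes from regressing that predictor on all the others. This minimal NumPy sketch (hypothetical function name, synthetic data) builds two nearly identical predictors and one independent one:

```python
import numpy as np

def vif(X):
    """VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing column j on the others."""
    n, p = X.shape
    out = []
    for j in range(p):
        y = X[:, j]
        # Regress column j on the remaining columns plus an intercept.
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, y, rcond=None)
        resid = y - others @ coef
        r2 = 1 - resid @ resid / np.sum((y - y.mean()) ** 2)
        out.append(1 / (1 - r2))
    return np.array(out)

rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + rng.normal(scale=0.1, size=200)   # nearly a copy of x1
x3 = rng.normal(size=200)                   # independent of both
v = vif(np.column_stack([x1, x2, x3]))
print(np.round(v, 1))
```

The two near-duplicates should show very large VIFs, while the independent predictor stays close to 1.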

34. Define Neural Networks.

Ans:

Neural networks are computational models loosely inspired by the architecture of the human brain. They are made up of layers of interconnected nodes, often called neurons. Each connection has a weight, which is adjusted during training. They can recognize patterns, classify data, and forecast outcomes, and they are fundamental to deep learning.

35. What is backpropagation?

Ans:

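Backpropagation is the algorithm used to train multi-layer perceptrons and other artificial neural networks in a supervised setting. Input data is passed forward through the network to compute the output and the loss; the error is then propagated backward, layer by layer, using the chain rule to compute the gradient of the loss with respect to every weight. These gradients are then used by gradient descent to update the weights.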
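The forward pass, backward pass, and update can be written out by hand for a tiny network. This is a minimal NumPy sketch under stated assumptions: the XOR dataset, one hidden layer of 4 tanh units, a sigmoid output with binary cross-entropy loss, and full-batch gradient descent with an arbitrarily chosen learning rate.

```python
import numpy as np

rng = np.random.default_rng(0)
# XOR is not linearly separable, so a hidden layer is required.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

W1, b1 = rng.normal(size=(2, 4)), np.zeros(4)
W2, b2 = rng.normal(size=(4, 1)), np.zeros(1)
lr = 0.5

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

losses = []
for _ in range(5000):
    # Forward pass.
    h = np.tanh(X @ W1 + b1)
    p = sigmoid(h @ W2 + b2)
    loss = -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))  # binary cross-entropy
    losses.append(loss)

    # Backward pass: apply the chain rule from the loss back to each weight.
    dz2 = (p - y) / len(X)          # gradient w.r.t. the pre-sigmoid output
    dW2, db2 = h.T @ dz2, dz2.sum(axis=0)
    dh = dz2 @ W2.T
    dz1 = dh * (1 - h ** 2)         # derivative of tanh
    dW1, db1 = X.T @ dz1, dz1.sum(axis=0)

    # Gradient descent update.
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

print(round(float(losses[0]), 3), round(float(losses[-1]), 3))
print(np.round(p.ravel(), 2))
```

In practice, frameworks compute these gradients automatically (automatic differentiation), but the chain-rule structure is the same.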

36. How do you handle large datasets?

Ans:

Techniques include using distributed systems like Spark, downsampling, using efficient data structures, and optimising database queries.

Sampling: Take a representative subset of data for analysis.

Distributed Systems: Tools like Hadoop and Spark can process large datasets across multiple machines.

37. What is an autoencoder?

Ans:

An autoencoder is a type of neural network used for unsupervised learning. It encodes input data into a compressed representation and then decodes that representation to reconstruct the original data as closely as possible. Autoencoders are often used for dimensionality reduction or anomaly detection.

38. Define Time Series Analysis.

Ans:

Time Series Analysis involves studying ordered data points collected or recorded at specific time intervals to extract meaningful statistics, patterns, and characteristics. The goal is often to forecast future points based on historical data. Components of time series include trend, seasonality, and noise.

39. How does the k-NN (k-Nearest Neighbours) algorithm work?

Ans:

k-NN is a lazy learning algorithm used for classification and regression. For classification, a data point is assigned to the class most common among its ‘k’ closest training examples. The closeness is typically measured using distance metrics like Euclidean or Manhattan distance.
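A minimal pure-Python sketch of k-NN classification with Euclidean distance; the function name and the toy points are hypothetical. There is no training step: all the work of sorting by distance and voting happens at prediction time, which is what “lazy” means here.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points (Euclidean)."""
    nearest = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

train_X = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6)]
train_y = ["red", "red", "red", "blue", "blue", "blue"]
print(knn_predict(train_X, train_y, (2, 2)))  # red
print(knn_predict(train_X, train_y, (6, 5)))  # blue
```

Choosing an odd k avoids ties in binary problems; for regression, the vote would be replaced by the average of the neighbours' values.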

40. What’s the role of activation functions in neural networks?

Ans:

Activation functions introduce non-linearity into a neural network, allowing it to capture complex relationships in the data. Without non-linear activation functions, the network would remain a linear model regardless of its depth, because a composition of linear layers is itself linear. Common choices include sigmoid, tanh, softmax, and ReLU.
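The common activation functions are simple element-wise formulas. This NumPy sketch defines them (tanh comes directly from NumPy) and then checks the claim about linearity: without an activation in between, two stacked weight matrices act exactly like a single one. The weight shapes are arbitrary assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def relu(z):
    return np.maximum(0, z)

def softmax(z):
    # Subtracting the max is a standard trick for numerical stability.
    e = np.exp(z - np.max(z))
    return e / e.sum()

z = np.array([-2.0, 0.0, 3.0])
print(sigmoid(z), np.tanh(z), relu(z), softmax(z))

# Without a non-linearity, two stacked linear layers collapse into one.
rng = np.random.default_rng(0)
W1, W2 = rng.normal(size=(3, 4)), rng.normal(size=(4, 2))
x = rng.normal(size=3)
print(np.allclose((x @ W1) @ W2, x @ (W1 @ W2)))  # True
```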

41. Explain the difference between Batch Gradient Descent and Stochastic Gradient Descent.

Ans:

Batch Gradient Descent: This algorithm computes the gradient of the loss over the entire dataset and then updates the model's weights once per pass. For convex loss surfaces it converges to the global minimum (given a suitable learning rate), but it can be computationally intensive for large datasets.

Stochastic Gradient Descent (SGD): SGD estimates the gradient using a single training example and updates the weights one example at a time. Each update is much cheaper, and the noise in the updates can help it escape shallow local minima. However, the same noise makes the convergence path erratic.

42. What is feature scaling and why is it important?

Ans:

Feature scaling adjusts the range of the features to a common scale, typically either 0 to 1 (min-max scaling) or a mean of 0 and a standard deviation of 1 (standardisation). This ensures that all features contribute comparably to distance calculations in algorithms that rely on distances (like k-NN and SVM), instead of letting features with large numeric ranges dominate. It also tends to speed up the convergence of gradient descent.
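Both scalings are column-wise NumPy expressions. A minimal sketch on a hypothetical feature matrix whose two columns differ in scale by two orders of magnitude:

```python
import numpy as np

X = np.array([[1.0, 200.0],
              [2.0, 300.0],
              [3.0, 400.0]])  # hypothetical features on very different scales

# Min-max scaling to [0, 1], column by column.
X_minmax = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

# Standardisation (z-scores): mean 0, standard deviation 1 per column.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

print(X_minmax)
print(X_std)
```

In practice, the minimum, maximum, mean, and standard deviation should be computed on the training set only and then reused for validation and test data; a constant column would need special handling to avoid division by zero.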

43. Describe “early stopping” in the context of deep learning.

Ans:

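Early stopping is a type of regularisation used to avoid overfitting while training a machine learning model, particularly a deep learning model. The model's performance is tracked on a separate validation set during training, and training is halted once the validation performance stops improving for a set number of epochs (often called the patience). The weights from the best-performing epoch are typically kept.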
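The stopping rule itself is simple bookkeeping. This minimal sketch (hypothetical function name and validation curve) returns the epoch whose weights would be kept and the epoch at which training would stop; in Keras, for example, the same idea is exposed as the `EarlyStopping` callback with `patience` and `restore_best_weights` arguments.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses."""
    best_loss, best_epoch, waited = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0  # improvement: reset the counter
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch  # stop here; restore weights from best_epoch
    return best_epoch, len(val_losses) - 1

# Hypothetical validation curve: improves, then rises as the model starts to overfit.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]
print(early_stopping_epoch(val_losses))  # (3, 6)
```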

44. What is the role of a loss function in machine learning?

Ans:

A loss function, also known as a cost function, measures the difference between the model's predictions and the actual values. It quantifies how well or poorly a given model performs. During training, the objective is to update the model to minimise this loss function, thereby improving its predictions.

45. What is one-hot encoding?

Ans:

One-hot encoding is a technique for converting categorical data into a binary (0 or 1) matrix. For each unique category in the original data, a new column is created in the matrix. For each data point, the column corresponding to its category gets a value of 1, while all other columns get a value of 0. This process makes the categorical data suitable for machine learning algorithms, as they require numerical input.
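In pandas, `get_dummies` performs this encoding. A minimal sketch on a hypothetical colour column; `dtype=int` is passed so the new columns hold 0/1 rather than booleans.

```python
import pandas as pd

df = pd.DataFrame({"colour": ["red", "green", "blue", "green"]})

# One new 0/1 column per category; exactly one 1 per row.
encoded = pd.get_dummies(df, columns=["colour"], dtype=int)
print(encoded)
```

For linear models, passing `drop_first=True` drops one column per feature, which avoids perfectly collinear dummy columns.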

46. How does dropout help in reducing overfitting in neural networks?

Ans:

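Dropout is a regularisation technique applied while training deep neural networks. At each training iteration, random neurons in the network are “dropped out” (turned off) with a certain probability, preventing them from participating in the forward and backward passes for that iteration. Because no neuron can rely on the presence of any particular other neuron, the network is forced to learn more robust, redundant features, which reduces overfitting. At inference time, dropout is disabled and all neurons are used.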
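A common implementation is “inverted” dropout, which rescales the surviving activations during training so that nothing needs to change at inference time. This is a minimal NumPy sketch with a hypothetical function signature, not any particular framework's API.

```python
import numpy as np

def dropout(activations, p_drop, training, rng):
    """Inverted dropout: zero each unit with probability p_drop and rescale the survivors."""
    if not training or p_drop == 0.0:
        return activations  # at inference time every unit is kept, unchanged
    keep_mask = rng.random(activations.shape) >= p_drop
    # Dividing by (1 - p_drop) keeps the expected activation equal to the inference-time value.
    return activations * keep_mask / (1.0 - p_drop)

rng = np.random.default_rng(0)
a = np.ones((1000, 100))
out = dropout(a, p_drop=0.5, training=True, rng=rng)
print((out == 0).mean(), out.mean())  # roughly 0.5 and 1.0
```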

47. What is the purpose of a validation dataset?

Ans:

A validation dataset is used during model training to assess the model's performance after each epoch or iteration. It provides a check on overfitting and guides the tuning of hyperparameters and the choice between models.

48. What are embeddings in the context of deep learning?

Ans:

Embeddings are dense vector representations of data, often used for words (word embeddings) or other categorical items. They convert discrete categorical values into a continuous form, capturing the semantic relationships between items.

49. Explain the difference between parametric and non-parametric models.

Ans:

Parametric Models: These have a fixed number of parameters, and their form is specified before the training starts. Examples include linear regression and logistic regression. They make strong assumptions about the data but are simpler and faster.

Non-Parametric Models:

These do not make fixed assumptions about the functional form of the data, and the number of parameters can grow with the amount of training data, allowing for more flexibility. Examples include k-NN and decision trees. They can adapt to a wide range of functional forms, making them more versatile but typically requiring more data.

50. What is dimensionality reduction and why is it useful?

Ans:

Dimensionality reduction is the process of reducing the number of input features while preserving as much of the important information as possible. It is useful for reducing computation time and storage requirements, removing multicollinearity by eliminating redundant features, enhancing data visualisation by reducing data to 2 or 3 dimensions, and mitigating the curse of dimensionality, which can impair some machine learning algorithms. Common methods include Principal Component Analysis (PCA), t-distributed Stochastic Neighbor Embedding (t-SNE), and Linear Discriminant Analysis (LDA).

51. Explain the difference between a bar chart and a histogram.

Ans:

Bar Chart: Used for categorical data, a bar chart shows distinct categories or items as rectangular bars, with the length of each bar corresponding to the count or frequency of that category or item. The bars are separated by gaps.

Histogram: Designed for continuous data or numerical data that’s been binned into intervals. A histogram displays the frequency or number of data points that fall within specified ranges or “bins”. Unlike bar charts, the bars touch each other, indicating continuity.

52. Describe the Central Limit Theorem.

Ans:

The Central Limit Theorem (CLT) is an essential idea in statistics. It states that, regardless of the distribution of the underlying population (provided it has a finite variance), the sampling distribution of the sample mean approaches a normal distribution as the sample size becomes sufficiently large. This theorem is foundational for many statistical methods and hypothesis tests that assume a normal distribution.

53. What are the common steps in a data pre-processing pipeline?

Ans:

Data Cleaning: Handling missing values, correcting errors, and removing outliers.

Transformation: Normalization or scaling, and encoding of categorical variables.

Feature Engineering: Creating new features from existing ones, which can improve model performance.

Feature Selection: Picking the most relevant features for modeling, which can reduce overfitting and computational expense.

54. How do you address the cold start problem in recommendation systems?

Ans:

Content-based Recommendations: Suggest items by matching the content of the items against a user profile, with content described in terms of descriptors that are inherent to the item (e.g., a book might be described by its author, its genre, etc.).

Hybrid Approaches: Combine collaborative and content-based filtering to provide recommendations.

Popular Items: Recommend popular items as a baseline until enough data is gathered.

Asking the User: Collect preferences from the user directly during onboarding.

55. What is transfer learning in deep learning?

Ans:

Transfer learning uses a pre-trained model, typically one trained on a large benchmark dataset, as the starting point for solving a new, related problem. The main idea is that knowledge learned in one task can be applied (or “transferred”) to another. This method is particularly valuable when data for the new task is limited.

56. What distinguishes a box plot from a violin plot?

Ans:

Box Plot: Shows the median, quartiles, and possible outliers of data. It provides a summarized view of the data distribution.

Violin Plot: Combines a box plot with a kernel density plot, so in addition to the summary statistics it shows the full shape of the data distribution.

57. What is data augmentation in the context of deep learning?

Ans:

Data augmentation involves artificially expanding the training dataset using label-preserving transformations. For instance, in image datasets, one can create altered versions of the original images through techniques like rotation, zooming, flipping, or cropping.

58. How does collaborative filtering differ from content-based filtering in recommendation systems?

Ans:

Collaborative Filtering: This method makes recommendations based on users’ past behavior, not on the attributes of items. For instance, if users A and B have bought mostly the same items, an item that A bought but B has not could be recommended to B.

Content-based Filtering: This method uses item features to recommend additional items similar to what the user likes, based on previous actions or explicit feedback. For example, if a user liked a book by a particular author, the system would recommend other books by the same author.

59. Explain the concept of “residuals” in regression analysis.

Ans:

Residuals are the differences between the observed (actual) values and the values predicted by the regression model. They represent the prediction error for each data point and are used to evaluate the regression model’s accuracy and suitability; for example, a systematic pattern in the residuals suggests that the model is missing some structure in the data.
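A minimal NumPy sketch, assuming hypothetical observations and an ordinary least-squares straight-line fit via `np.polyfit`; the residuals are simply observed minus predicted values.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])  # hypothetical observations

slope, intercept = np.polyfit(x, y, deg=1)  # least-squares straight line
predicted = slope * x + intercept
residuals = y - predicted                   # observed minus predicted

print(np.round(residuals, 3))
print(round(float(np.sum(residuals ** 2)), 4))  # residual sum of squares
```

For a least-squares fit with an intercept, the residuals always sum to (numerically) zero, so it is their pattern and spread, often inspected by plotting them against the predicted values, that carries the diagnostic information.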

60. What’s an LSTM in the context of deep learning?

Ans:

Long Short-Term Memory (LSTM) is a specific kind of Recurrent Neural Network (RNN). RNNs are made to find patterns in data sequences like time series or natural language. However, standard RNNs have difficulty in learning long-term dependencies due to issues like vanishing or exploding gradients. LSTMs address this by incorporating memory cells that regulate the flow of information, allowing them to capture long-term dependencies and maintain information across longer sequences.

61. How is a test set different from a validation set?

Ans:

Validation Set: It is used during model training to evaluate the model’s performance and tune hyperparameters. By providing an assessment of a model fit on the training dataset while its hyperparameters are being tuned, it aids in model selection and helps detect overfitting.

Test Set: After training and validating the model, the test set provides a final, unbiased performance assessment. It is used to assess how the model performs on new, unseen data and provides an estimate of the model’s generalisation ability.

62. Explain max-pooling in convolutional neural networks.

Ans:

Max-pooling is a downsampling operation used in convolutional neural networks (CNNs). Its principal goal is to progressively reduce the spatial dimensions of the representation. For each patch of the feature map (for example, each 2x2 window), the maximum value is kept and the rest are discarded. This reduces the amount of computation, makes the network more robust to small translations of the input, and can help control overfitting.
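For the common 2x2 window with stride 2, max-pooling can be written with a reshape. This minimal NumPy sketch assumes a single-channel feature map with even height and width (real layers also handle channels, batches, and padding).

```python
import numpy as np

def max_pool_2x2(feature_map):
    """2x2 max-pooling with stride 2 (height and width assumed even)."""
    h, w = feature_map.shape
    # Group the map into non-overlapping 2x2 blocks, then take each block's maximum.
    blocks = feature_map.reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))

fm = np.array([[1, 3, 2, 0],
               [4, 2, 1, 5],
               [0, 1, 7, 2],
               [2, 6, 3, 1]])
print(max_pool_2x2(fm))
# [[4 5]
#  [6 7]]
```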

63. What’s the difference between a scatter plot and a bubble chart?

Ans:

Scatter Plot: A graphical representation that uses dots to display values for two variables, one plotted along the x-axis and the other along the y-axis. It’s used to show relationships between two continuous variables.

Bubble Chart: An extension of the scatter plot. Each dot (bubble) still corresponds to a data point, but an additional dimension is represented by the size of the bubble.

64. Explain anomaly detection.

Ans:

Anomaly detection is the process of finding unusual data points, events, or observations that deviate significantly from the majority of the data. These anomalies often indicate problems such as bank fraud, manufacturing defects, or errors in a text.

65. What’s the difference between Batch Normalization and Layer Normalization?

Ans:

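Batch Normalization: Normalizes across the batch of data. For each feature, it computes the mean and variance of that feature over the examples in the batch and normalizes it accordingly.

Layer Normalization: Instead of normalizing across the batch, it normalizes across the features: for each individual example, it computes the mean and variance over all of that example’s features, so its behavior does not depend on the batch size.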
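The difference comes down to which axis the statistics are computed over. This minimal NumPy sketch omits the learnable scale and shift parameters and batch normalization's running averages used at inference time, and the small epsilon value is an assumed constant for numerical safety.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    """Normalise each feature (column) using statistics computed over the batch."""
    return (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)

def layer_norm(x, eps=1e-5):
    """Normalise each example (row) using statistics computed over its own features."""
    mean = x.mean(axis=1, keepdims=True)
    var = x.var(axis=1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

x = np.array([[1.0, 2.0, 3.0],
              [4.0, 6.0, 8.0]])  # 2 examples, 3 features
print(batch_norm(x).mean(axis=0))  # ~0 for every feature
print(layer_norm(x).mean(axis=1))  # ~0 for every example
```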

66. What are the challenges of working with time series data?

Ans:

Seasonality: Patterns that repeat at known time intervals, like sales spikes during holidays.

Trends: Long-term movements in the data that aren’t cyclic. They can be upward, downward, or flat.

Autocorrelation: Past data points can influence the future in time series, unlike other data types where observations are usually assumed to be independent.

Non-stationarity: Statistical properties of a time series, like its mean and variance, can change over time, which complicates modeling because many forecasting methods assume stationarity.

67. What is a Generative Adversarial Network (GAN)?

Ans:

A GAN consists of two neural networks, a generator and a discriminator, that are trained together in an adversarial game. The generator tries to produce synthetic data that resembles the real data distribution, while the discriminator tries to distinguish real data from the fake data produced by the generator. As training progresses, each network pushes the other to improve.

68. Describe bootstrapping in statistics.

Ans:

Bootstrapping is a statistical method that allows estimation of the sampling distribution of a statistic by repeatedly resampling with replacement from the data. It’s a powerful tool for assessing the reliability of statistical estimates and hypothesis tests, especially when the sample size is small or when the underlying distribution of the data is unknown.
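A common use is the percentile bootstrap confidence interval. This minimal standard-library sketch assumes a hypothetical sample, 5,000 resamples, and a 95% interval; the function name is illustrative.

```python
import random
import statistics

def bootstrap_ci(data, stat=statistics.mean, n_resamples=5000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for `stat`."""
    rng = random.Random(seed)
    estimates = sorted(
        stat(rng.choices(data, k=len(data)))  # resample with replacement, same size as data
        for _ in range(n_resamples)
    )
    lo = estimates[int((alpha / 2) * n_resamples)]
    hi = estimates[int((1 - alpha / 2) * n_resamples) - 1]
    return lo, hi

sample = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 4.9, 3.7, 2.5]  # hypothetical measurements
print(bootstrap_ci(sample))
```

Swapping `stat` for `statistics.median` gives an interval for the median with no extra distributional assumptions, which is where bootstrapping is most convenient.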

69. What is cosine similarity?

Ans:

Cosine similarity is a metric for determining how similar two vectors are regardless of their magnitude. Mathematically, it is the cosine of the angle between the two vectors in a multidimensional space: the dot product of the vectors divided by the product of their lengths. It ranges from -1 to 1 (or from 0 to 1 for non-negative vectors such as word counts). This measure is frequently used in text analysis to assess the similarity of documents or phrases.
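A minimal pure-Python sketch of cos θ = (a · b) / (‖a‖ ‖b‖), using hypothetical term-count vectors. Scaling a vector does not change the result, which is the “regardless of magnitude” property.

```python
import math

def cosine_similarity(a, b):
    """cos(theta) = (a . b) / (|a| * |b|); depends on direction, not magnitude."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical term-count vectors for three short texts.
doc1 = [2, 1, 0]
doc2 = [4, 2, 0]   # same direction as doc1, twice the length
doc3 = [0, 0, 3]   # no terms in common with doc1
print(cosine_similarity(doc1, doc2), cosine_similarity(doc1, doc3))
```

The first value is 1 (up to floating-point rounding) and the second is 0. This sketch does not guard against zero vectors, for which the similarity is undefined.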

70. How is Root Mean Square Error (RMSE) different from Mean Absolute Error (MAE)?

Ans:

RMSE: It gives a measure of the magnitude of the error. By squaring the residuals, it gives more weight to larger errors than smaller ones, punishing large errors more.

MAE: Measures the average size of mistakes regardless of direction, giving equal weight to all errors. It is less susceptible to outliers than RMSE.

71. What is regularisation in neural networks?

Ans:

Regularization is a method for keeping neural networks and other machine learning models from overfitting. Overfitting is when a model loses its capacity to generalise because it learns the training data, including its noise and outliers, too well. Common regularization techniques include L1 (Lasso) and L2 (Ridge) regularization. Dropout, although technically different, can also be seen as a form of regularization.

72. How does the “Attention Mechanism” work in deep learning models?

Ans:

The attention mechanism is a crucial development in deep learning, particularly for sequences, allowing models to focus on certain parts of the input data more than others. Instead of compressing the entire input sentence into a single fixed-size vector for sequence-to-sequence tasks like machine translation, attention lets the model focus on different parts of the input sentence at each step of output generation, by computing a weighted combination of the input representations.
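One widely used form is scaled dot-product attention, softmax(QKᵀ / √dₖ)V, the variant used in Transformers; it is one of several attention formulations, not necessarily the one used in earlier sequence-to-sequence models. This minimal NumPy sketch uses random queries, keys, and values of assumed sizes. Each row of `weights` shows how strongly one output position attends to each input position.

```python
import numpy as np

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, computed for every query at once."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)                 # similarity of each query to each key
    scores -= scores.max(axis=-1, keepdims=True)    # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
Q = rng.normal(size=(2, 4))   # 2 output positions (queries)
K = rng.normal(size=(5, 4))   # 5 input positions (keys)
V = rng.normal(size=(5, 3))   # values attached to those input positions
output, weights = scaled_dot_product_attention(Q, K, V)
print(output.shape, weights.round(2))
```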

73. What is the “Exploding Gradient” problem in neural networks?

Ans:

The exploding gradient problem refers to the large increase in the magnitude of gradients during training. Such large gradients result in very large updates to neural network model weights during training, making the model unstable. If not managed properly, it can result in the model producing NaN values as outputs.

74. Explain the F1 score.

Ans:

The F1 score is the harmonic mean of precision and recall: F1 = 2 × (precision × recall) / (precision + recall). It ranges from 0 to 1 and is high only when both precision and recall are high, which makes it useful for imbalanced datasets where accuracy can be misleading.
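As a minimal sketch (hypothetical function name), the harmonic mean of precision and recall can be computed as below; treating F1 as 0 when both inputs are 0 is an assumed convention.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (defined as 0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

print(f1_score(0.75, 0.75))  # 0.75
print(f1_score(1.0, 0.1))    # about 0.18, far below the arithmetic mean of 0.55
```

The second example shows why the harmonic mean is used: a classifier cannot score well by maximising one metric while neglecting the other.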

75. What is multicollinearity?

Ans:

Multicollinearity arises in statistical modeling when two or more independent variables (predictors) in a model are highly correlated with each other. This correlation can make it difficult to ascertain the effect of each predictor variable independently. It can lead to unstable coefficient estimates in regression models and makes it hard to interpret the model.

76. How can you detect outliers in a dataset?

Ans:

Statistical Methods: Using z-scores or the IQR (Interquartile Range) method.

Visualization: Box plots, scatter plots, and histograms can be effective tools for visual outlier detection.

Machine Learning: Algorithms like the Isolation Forest, One-Class SVM, and DBSCAN can be used.
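The two statistical methods can be sketched in plain Python with the standard `statistics` module. The thresholds (1.5 × IQR and |z| > 3) are common conventions rather than fixed rules, and the data is made up for illustration.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lo or v > hi]

def zscore_outliers(values, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu = statistics.mean(values)
    sigma = statistics.stdev(values)
    return [v for v in values if abs(v - mu) / sigma > threshold]

data = [10, 12, 11, 13, 12, 11, 10, 12, 13, 11] * 2 + [95]
print(iqr_outliers(data))     # [95]
print(zscore_outliers(data))  # [95]
```

On very small samples the z-score method is weak, because a single extreme value inflates the standard deviation it is measured against; the IQR method is less affected.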

77. What is the purpose of the “ReLU” activation function in neural networks?

Ans:

ReLU stands for Rectified Linear Unit. It is a widely used activation function in convolutional neural networks and other deep learning models. The function is max(0, x): negative inputs become 0 and positive inputs pass through unchanged. Because its gradient is 1 for all positive inputs, it helps mitigate the vanishing gradient problem, and it is cheap to compute. However, its output is unbounded for positive inputs, and neurons whose inputs are always negative output 0 and receive no gradient, so they stop learning (the “dying ReLU” problem).
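A minimal plain-Python sketch of ReLU and its gradient. The gradient at exactly 0 is undefined mathematically; using 0 there is a common convention, assumed here.

```python
def relu(x):
    return max(0.0, x)

def relu_grad(x):
    # 1 for positive inputs, 0 otherwise (the value at exactly 0 is a convention)
    return 1.0 if x > 0 else 0.0

inputs = [-2.0, -0.5, 0.0, 1.5, 3.0]
print([relu(v) for v in inputs])       # [0.0, 0.0, 0.0, 1.5, 3.0]
print([relu_grad(v) for v in inputs])  # [0.0, 0.0, 0.0, 1.0, 1.0]
```

The gradient of 1 for positive inputs is what keeps gradients from shrinking layer after layer; the zero gradient for negative inputs is what lets neurons "die".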

78. Define “Convolutions” as used in convolutional neural networks.

Ans:

In a CNN, a convolution is a mathematical operation that combines two sets of data: the input and a filter. In image-processing terms, it resembles applying a filter to an image. The filter (or kernel), a small matrix of weights, slides across the input data (such as an image); at each position, the element-wise products of the filter and the overlapping input patch are summed, and these sums form a feature map.
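A minimal plain-Python sketch of a 2D convolution with no padding and stride 1. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation. The image and kernel values are made up for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum element-wise products."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            s = 0
            for i in range(kh):
                for j in range(kw):
                    s += image[r + i][c + j] * kernel[i][j]
            row.append(s)
        out.append(row)
    return out

# A dark-to-bright vertical edge between columns 1 and 2
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [[-1, 1]]   # responds to left-to-right increases in intensity
print(conv2d_valid(image, kernel))   # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The resulting feature map is non-zero only where the edge is, which is the kind of pattern a CNN's learned filters pick out.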

79. What are the key aspects of a good data visualization?

Ans:

- Clarity

- Accuracy

- Simplicity

- Relevance

- Consistency

- Accessibility

- Engaging Design

- Appropriate Scale

80. Explain the significance of the bias and variance trade-off.

Ans:

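Bias and variance are two sources of error in models. Bias is the error that results from approximating a complex real-world problem with an overly simplified model; high bias can make the model miss relevant relationships (underfitting). Variance is the model’s sensitivity to small fluctuations in the training set; high variance can make the model fit noise (overfitting). Making a model more complex usually lowers bias but raises variance, so the trade-off matters because the best generalization comes from a level of complexity that balances the two.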
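For squared-error loss, the trade-off can be written as a standard decomposition. Assuming y = f(x) + ε, where ε has mean zero and variance σ², and a model f̂ fitted on a randomly drawn training set, the expected error at a point x (averaged over training sets and noise) splits into three terms:

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

Only the first two terms depend on the model, which is why reducing one at the expense of the other is a trade-off.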

81. Why is “Naive” used in the “Naive Bayes” classifier’s name?

Ans:

The term “Naive” in “Naive Bayes” pertains to the algorithm’s foundational assumption that each feature is independent of any other feature, given the class variable. This is considered “naive” because, in many real-world scenarios, features are often interdependent.
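The independence assumption means the classifier scores each class c as P(c) multiplied by P(xᵢ | c) for every feature, as if the features were unrelated given the class. A minimal plain-Python sketch of a categorical Naive Bayes with Laplace smoothing, on a tiny made-up dataset; the function names are illustrative. Real implementations usually sum log-probabilities instead of multiplying, to avoid underflow.

```python
from collections import Counter, defaultdict

def train_nb(rows, labels, alpha=1.0):
    """Categorical Naive Bayes with Laplace smoothing (alpha)."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(lambda: defaultdict(Counter))  # [class][feature][value] -> count
    values = defaultdict(set)                                   # distinct values per feature
    for x, y in zip(rows, labels):
        for i, v in enumerate(x):
            feature_counts[y][i][v] += 1
            values[i].add(v)
    return class_counts, feature_counts, values, alpha

def predict_nb(model, x):
    class_counts, feature_counts, values, alpha = model
    total = sum(class_counts.values())
    best, best_score = None, -1.0
    for c, n_c in class_counts.items():
        score = n_c / total  # prior P(c)
        # The "naive" step: multiply per-feature likelihoods as if independent given c
        for i, v in enumerate(x):
            score *= (feature_counts[c][i][v] + alpha) / (n_c + alpha * len(values[i]))
        if score > best_score:
            best, best_score = c, score
    return best

rows = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"), ("rainy", "cool"), ("sunny", "cool")]
labels = ["no", "no", "yes", "yes", "yes"]
model = train_nb(rows, labels)
print(predict_nb(model, ("rainy", "mild")))  # yes
```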

82. What’s the difference between a left join and an inner join in SQL?

Ans:

A join in SQL combines rows from two or more tables based on related columns. An “inner join” returns only the rows that have a match in both tables; a row without a matching entry in the other table is left out of the result. A “left join” returns every row from the left table together with the matching rows from the right table; where there is no match, the right table’s columns are filled with NULL.
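The difference between an inner join and a left join can be seen with Python's built-in `sqlite3` module and an in-memory database. The `customers` and `orders` tables are hypothetical examples.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0);
""")

# Inner join: only customers that have at least one order
inner = cur.execute("""
    SELECT c.name, o.amount FROM customers c
    INNER JOIN orders o ON o.customer_id = c.id
    ORDER BY c.id, o.id
""").fetchall()

# Left join: every customer; order columns are NULL (None) when there is no match
left = cur.execute("""
    SELECT c.name, o.amount FROM customers c
    LEFT JOIN orders o ON o.customer_id = c.id
    ORDER BY c.id, o.id
""").fetchall()

print(inner)  # [('Ada', 25.0), ('Ada', 40.0), ('Grace', 15.0)]
print(left)   # [('Ada', 25.0), ('Ada', 40.0), ('Grace', 15.0), ('Linus', None)]
```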

83. What is a feedback loop in the context of machine learning?

Ans:

In machine learning, a feedback loop occurs when the model’s predictions are used as new input data, influencing future predictions. This can lead to self-reinforcing predictions. For instance, if a recommendation system only suggests popular items, then those items could become even more popular as a result, leading the model to recommend them even more frequently.

84. What is “momentum” in neural network training?

Ans:

Momentum is a variation on gradient descent used in optimisation to speed up learning, dampen oscillations, and help the optimiser move past shallow local minima. Instead of using only the current gradient, each weight update adds a fraction (the momentum coefficient, commonly around 0.9) of the previous update to the current gradient step. As a result, the algorithm keeps moving in the direction it was already going, which speeds up convergence.
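A minimal sketch of gradient descent with momentum on a one-dimensional quadratic, in plain Python. It uses the "heavy-ball" form v ← βv + ∇f(w), w ← w - ηv; other texts scale the gradient term differently (for example by 1 - β), which changes the effective learning rate but not the idea.

```python
def sgd_momentum(grad, w0, lr=0.1, beta=0.9, steps=500):
    """Gradient descent with heavy-ball momentum:
    v <- beta * v + grad(w);  w <- w - lr * v"""
    w, v = w0, 0.0
    for _ in range(steps):
        v = beta * v + grad(w)   # keep a fraction of the previous update direction
        w = w - lr * v
    return w

# Minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w_final = sgd_momentum(lambda w: 2 * (w - 3), w0=0.0)
print(w_final)   # very close to 3
```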

85. How does a “Residual Network” (ResNet) function?

Ans:

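Residual Networks, or ResNets, are deep learning architectures that use “skip connections” or “shortcuts”. These connections let activations bypass one or more layers: a block's input x is added to the output of its layers, so the layers only need to learn a residual F(x) and the block outputs F(x) + x. The primary advantage is that it combats the vanishing gradient problem in very deep neural networks, because gradients can flow directly through the identity path.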
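A simplified sketch of a residual block's forward pass, assuming NumPy. It uses two dense layers with random weights instead of the convolutions and batch normalization used in actual ResNets; the point is only the skip connection.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def residual_block(x, W1, W2):
    """y = relu(F(x) + x), where F(x) = W2 @ relu(W1 @ x) is the residual branch."""
    f = W2 @ relu(W1 @ x)
    return relu(f + x)   # skip connection: the input is added back unchanged

rng = np.random.default_rng(0)
x = rng.normal(size=4)
W1 = rng.normal(size=(4, 4))
W2 = np.zeros((4, 4))   # if the branch outputs zero, the block reduces to relu(x)
y = residual_block(x, W1, W2)
```

Because an all-zero residual branch leaves the input (almost) untouched, adding more blocks does not force the network to learn a harder mapping, which is part of why very deep ResNets remain trainable.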

86. Describe the role of an activation function in a neural network.

Ans:

Activation functions introduce non-linearity to the network. Without them, no matter how many layers the neural network has, it would be equivalent to a single linear layer, because a composition of linear functions is itself a linear function. Non-linear activation functions allow neural networks to capture complex mappings from inputs to outputs, making them capable of learning intricate patterns from data.
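The collapse of stacked linear layers can be checked numerically. A minimal sketch, assuming NumPy and random weights: two layers without an activation give exactly the same output as one layer with W = W2·W1 and b = W2·b1 + b2.

```python
import numpy as np

rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(5, 3)), rng.normal(size=5)
W2, b2 = rng.normal(size=(2, 5)), rng.normal(size=2)
x = rng.normal(size=3)

# Two stacked layers with no activation function...
two_layers = W2 @ (W1 @ x + b1) + b2
# ...equal a single linear layer with W = W2 W1 and b = W2 b1 + b2
W, b = W2 @ W1, W2 @ b1 + b2
one_layer = W @ x + b

# Inserting a ReLU between the layers breaks this equivalence in general
with_relu = W2 @ np.maximum(0.0, W1 @ x + b1) + b2
```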

87. What is the significance of R-squared in regression analysis?

Ans:

R-squared, also called the coefficient of determination, is the proportion of the variance in the dependent variable that is explained by the independent variables, so it indicates how well the model accounts for variation in the outcome. An R-squared of 1 means the regression predictions fit the data exactly. A high R-squared does not, however, guarantee that the model is a good fit (for example, in ordinary least squares it never decreases when more predictors are added), so additional criteria and diagnostics should also be evaluated.
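R-squared is computed as R² = 1 - SS_res / SS_tot, where SS_res is the sum of squared residuals and SS_tot is the total sum of squares around the mean. A minimal plain-Python sketch with made-up values:

```python
def r_squared(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

y_true = [3.0, 5.0, 7.0, 9.0]
print(r_squared(y_true, [3.0, 5.0, 7.0, 9.0]))   # 1.0: perfect fit
print(r_squared(y_true, [6.0, 6.0, 6.0, 6.0]))   # 0.0: no better than predicting the mean
```

On held-out data, predictions worse than the mean give a negative R-squared.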

88. What is a hidden layer in a neural network?

Ans:

Hidden layers in neural networks are layers between the input and output layers. These layers contain neurons that process inputs received from the previous layer and transfer values to the next layer. Hidden layers are essential in deep learning, allowing the network to learn features at various levels of abstraction.

89. What are eigenvectors and eigenvalues?

Ans:

In linear algebra, eigenvectors and eigenvalues are concepts related to matrices. Given a square matrix A, an eigenvector is a non-zero vector v such that when A multiplies v, the result is a scalar multiple of v: Av = λv. This scalar λ is the eigenvalue associated with the eigenvector. In simpler terms, when A acts on an eigenvector, its direction stays on the same line (reversed if the eigenvalue is negative) and only its length is scaled.
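The defining property Av = λv can be checked with NumPy's `np.linalg.eig`, which returns the eigenvalues and a matrix whose columns are the corresponding eigenvectors. The 2×2 matrix is an arbitrary example.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
eigenvalues, eigenvectors = np.linalg.eig(A)   # eigenvectors are the columns

for i in range(len(eigenvalues)):
    v = eigenvectors[:, i]
    # A v equals lambda v: same line, scaled by the eigenvalue
    assert np.allclose(A @ v, eigenvalues[i] * v)

print(sorted(eigenvalues))   # approximately 1 and 3
```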

90. Explain the purpose of “dropout” in neural networks.

Ans:

Dropout is a regularisation method for neural networks. During training, it randomly “drops out” (sets to zero) a fraction of the neurons in the layers to which it is applied, with a new random selection at each training step. This prevents neurons from relying too heavily on specific other neurons, making the network more robust. Dropout is switched off at inference time.
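A minimal sketch of "inverted" dropout, one common implementation, assuming NumPy: during training each unit is zeroed with probability p and the survivors are scaled by 1/(1 - p), so the expected activation is unchanged and no rescaling is needed at inference time. The function name `dropout` is illustrative.

```python
import numpy as np

def dropout(activations, p, training, rng):
    """Inverted dropout: zero each unit with probability p during training and
    scale survivors by 1/(1-p) so the expected activation is unchanged."""
    if not training or p == 0.0:
        return activations            # dropout is disabled at inference time
    mask = rng.random(activations.shape) >= p
    return activations * mask / (1.0 - p)

rng = np.random.default_rng(0)
a = np.ones(10_000)
train_out = dropout(a, p=0.5, training=True, rng=rng)    # roughly half 0.0, the rest 2.0
eval_out = dropout(a, p=0.5, training=False, rng=rng)    # unchanged
```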

91. What is “collinearity” in data analysis?

Ans:

Collinearity is the term for a scenario in regression analysis in which two or more predictor variables are closely linearly related, so that one can be predicted accurately as a linear function of the others. This can destabilise the model's coefficient estimates, making it difficult to determine the individual contribution of each predictor to the response variable.
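In the extreme case of perfect collinearity, the design matrix loses rank, so the least-squares coefficients are no longer unique. A minimal sketch with NumPy and synthetic data, where one predictor is an exact linear function of the other:

```python
import numpy as np

rng = np.random.default_rng(0)
x1 = rng.normal(size=100)
x2 = 2.0 * x1 + 1.0                            # exactly a linear function of x1
X = np.column_stack([np.ones(100), x1, x2])    # intercept + two predictors

print(np.linalg.matrix_rank(X))       # 2, not 3: one column is redundant
print(np.corrcoef(x1, x2)[0, 1])      # 1.0 up to floating-point rounding
```

With near (rather than exact) collinearity the rank stays full, but small changes in the data can swing the estimated coefficients widely.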

92. How do “batch size” and “number of epochs” influence the training process?

Ans:

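Batch size is the number of training examples used in a single iteration (one parameter update). A smaller batch size can provide a regularizing effect and lower generalization error, but training with small batches can be slower because fewer examples are processed at a time. The number of epochs is the number of complete passes through the training dataset. Too few epochs can leave the model underfit, while too many can cause overfitting, which is why training is often monitored on a validation set and stopped early.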
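The relationship between batch size, epochs, and the number of parameter updates can be sketched in plain Python. The dataset is a stand-in list, and the actual model update is left as a comment, since it depends on the framework.

```python
import math
import random

def minibatches(data, batch_size, rng):
    """Yield shuffled mini-batches covering the whole dataset once (one epoch)."""
    idx = list(range(len(data)))
    rng.shuffle(idx)
    for start in range(0, len(idx), batch_size):
        yield [data[i] for i in idx[start:start + batch_size]]

data = list(range(1000))   # stand-in for 1,000 training examples
batch_size, num_epochs = 32, 5
rng = random.Random(0)

updates = 0
for epoch in range(num_epochs):
    for batch in minibatches(data, batch_size, rng):
        updates += 1       # one parameter update per mini-batch would happen here

steps_per_epoch = math.ceil(len(data) / batch_size)   # 32 (the last batch has 8 examples)
print(updates)   # 5 epochs * 32 steps = 160
```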

93. What is the “Adam” optimization algorithm?

Ans:

Adam, short for Adaptive Moment Estimation, is an optimisation algorithm used to train neural networks. An extension of stochastic gradient descent, it computes an adaptive learning rate for each parameter. Like Adadelta and RMSprop, Adam keeps an exponentially decaying average of past squared gradients; in addition, it keeps an exponentially decaying average of past gradients, similar to momentum.
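A minimal sketch of the standard Adam update for a single scalar parameter, in plain Python, minimizing a simple quadratic. The learning rate of 0.1 is chosen for this toy problem (a frequently used default is 0.001); β1 = 0.9, β2 = 0.999, and ε = 1e-8 are the commonly used defaults.

```python
import math

def adam(grad, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Adam for a single scalar parameter."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g        # decaying average of gradients (1st moment)
        v = beta2 * v + (1 - beta2) * g * g    # decaying average of squared gradients (2nd moment)
        m_hat = m / (1 - beta1 ** t)           # bias correction: m and v start at 0
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)   # per-parameter adaptive step
    return w

# Minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w_final = adam(lambda w: 2 * (w - 3), w0=0.0)
print(w_final)   # close to 3
```

Dividing by the square root of the second-moment estimate is what makes the step size adaptive: parameters with consistently large gradients take smaller steps.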

94. Describe “feature engineering” in the context of machine learning.

Ans:

Feature engineering is the process of selecting, transforming, or creating relevant input variables (features) to improve the performance of machine learning models. Effective feature engineering can let simpler models perform well, improve model accuracy, and reduce computational or data requirements. Examples include creating interaction terms, extracting information from dates, or transforming a continuous variable into categories.
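The three examples above (date extraction, binning a continuous variable, and an interaction term) can be sketched in plain Python. The record fields, age cut-offs, and feature names are hypothetical.

```python
from datetime import date

def engineer(record):
    """Derive model features from a hypothetical raw record."""
    d = record["signup_date"]
    age = record["age"]
    return {
        "signup_month": d.month,                               # information extracted from a date
        "signup_weekday": d.weekday(),                         # Monday = 0 ... Sunday = 6
        "is_weekend": int(d.weekday() >= 5),
        "age_group": "young" if age < 30 else "middle" if age < 60 else "senior",  # binning
        "total_spend": record["price"] * record["quantity"],   # interaction term
    }

row = {"signup_date": date(2024, 3, 16), "age": 42, "price": 2.5, "quantity": 4}
features = engineer(row)
print(features)   # 16 March 2024 is a Saturday, so is_weekend is 1
```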

95. How do “precision” and “recall” relate to the “confusion matrix”?

Ans:

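Precision (also known as positive predictive value) is the ratio of correctly predicted positive observations to all predicted positives: TP / (TP + FP). Recall (also known as sensitivity or true positive rate) is the ratio of correctly predicted positive observations to all actual positives: TP / (TP + FN). Both are computed directly from the cells of the confusion matrix: true positives (TP), false positives (FP), and false negatives (FN).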
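A minimal plain-Python sketch that builds the four confusion-matrix counts from made-up labels and then derives precision and recall. It assumes the common layout with rows as actual classes and columns as predicted classes (the layout scikit-learn uses).

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, FN, TN) for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return tp, fp, fn, tn

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
tp, fp, fn, tn = confusion_counts(y_true, y_pred)
precision = tp / (tp + fp)   # uses the predicted-positive column: 2 / 3
recall = tp / (tp + fn)      # uses the actual-positive row: 2 / 4 = 0.5
print(tp, fp, fn, tn)        # 2 1 2 5
```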

96. Why might data normalization or scaling be important?

Ans:

Data normalization or scaling puts features on comparable scales, which is vital for many machine learning algorithms. Algorithms that compute distances between data points, like k-means clustering or k-nearest neighbors, are particularly sensitive to feature scales. Without proper scaling, features with larger ranges might dominate the model, potentially leading to suboptimal performance.
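A minimal plain-Python sketch of two common scaling methods, min-max scaling to [0, 1] and standardization to zero mean and unit variance, plus a Euclidean distance computed before and after scaling. The income and age values are made up.

```python
import statistics

def min_max_scale(values):
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    mu, sigma = statistics.mean(values), statistics.pstdev(values)
    return [(v - mu) / sigma for v in values]

incomes = [30_000, 45_000, 60_000, 120_000]
ages = [25, 35, 45, 65]

# Unscaled distance between person 0 and person 1: almost entirely from income
dist_raw = ((incomes[0] - incomes[1]) ** 2 + (ages[0] - ages[1]) ** 2) ** 0.5

# After min-max scaling, both features contribute
s_inc, s_age = min_max_scale(incomes), min_max_scale(ages)
dist_scaled = ((s_inc[0] - s_inc[1]) ** 2 + (s_age[0] - s_age[1]) ** 2) ** 0.5
```

Min-max scaling is sensitive to extreme values (one very large income compresses all the others), which is one reason standardization is often preferred.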

97. How is “Random Search” different from “Grid Search” for hyperparameter tuning?

Ans:

Grid Search systematically searches through a predefined grid of hyperparameter values. For each combination of values, it trains a model and evaluates it on a validation set.

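Random Search, on the other hand, samples a fixed number of hyperparameter combinations from specified distributions over the possible values. With the same budget of trials, it tries many more distinct values of each individual hyperparameter, which often makes it more efficient when only a few hyperparameters strongly affect performance.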
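Both strategies can be sketched in plain Python with the same budget of 9 trials. The function `validation_score` is a hypothetical stand-in for "train a model with these hyperparameters and score it on a validation set"; in practice that step is the expensive part.

```python
import itertools
import random

def validation_score(lr, reg):
    """Hypothetical stand-in for training and validating a model; peaks at lr=0.05, reg=0.01."""
    return -((lr - 0.05) ** 2) * 100 - ((reg - 0.01) ** 2)

# Grid search: every combination of predefined values (3 x 3 = 9 trials)
lr_grid = [0.001, 0.01, 0.1]
reg_grid = [0.0, 0.01, 0.1]
grid_trials = list(itertools.product(lr_grid, reg_grid))
best_grid = max(grid_trials, key=lambda p: validation_score(*p))

# Random search: the same budget of 9 trials, sampled from distributions
# (learning rate log-uniform in [0.001, 0.1], regularization uniform in [0, 0.1])
rng = random.Random(0)
random_trials = [(10 ** rng.uniform(-3, -1), rng.uniform(0.0, 0.1)) for _ in range(9)]
best_random = max(random_trials, key=lambda p: validation_score(*p))

print(best_grid)   # (0.01, 0.01)
```

The grid only ever tries 3 learning rates, while random search tries 9 different ones for the same cost.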

98. What are the main types of biases that can occur in a dataset?

Ans:

Selection Bias: Occurs when the data collected isn’t representative of the entire population.

Measurement Bias: Arises from systematic errors in data collection or measurement.

Confounding Bias: Occurs when the effects of two or more variables are mixed, and the impact of one variable on the response is distorted because of another variable.

99. Explain the concept of “word embeddings” in NLP.

Ans:

Word embeddings are dense vector representations of words in a continuous vector space. The idea is to represent words by their contextual meaning. Words with similar meanings or that appear in similar contexts tend to be closer in this vector space. Techniques like Word2Vec, GloVe, and FastText have been popular for generating word embeddings.
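Closeness in an embedding space is usually measured with cosine similarity. A minimal plain-Python sketch using made-up 4-dimensional vectors; real embeddings (for example from Word2Vec or GloVe) are learned from large text corpora and typically have a few hundred dimensions.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

# Hypothetical vectors chosen by hand for illustration, not learned embeddings
emb = {
    "king":  [0.8, 0.6, 0.1, 0.0],
    "queen": [0.7, 0.7, 0.1, 0.1],
    "apple": [0.0, 0.1, 0.9, 0.6],
}
sim_kq = cosine_similarity(emb["king"], emb["queen"])   # about 0.985: similar
sim_ka = cosine_similarity(emb["king"], emb["apple"])   # about 0.14: dissimilar
```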

100. What is “Transfer Learning”?

Ans:

Transfer learning is a machine learning method in which a model developed for one task is reused, or adapted, as the starting point for a model on a different task. In deep learning, it is common to take models pre-trained on large datasets and fine-tune them for specific tasks.