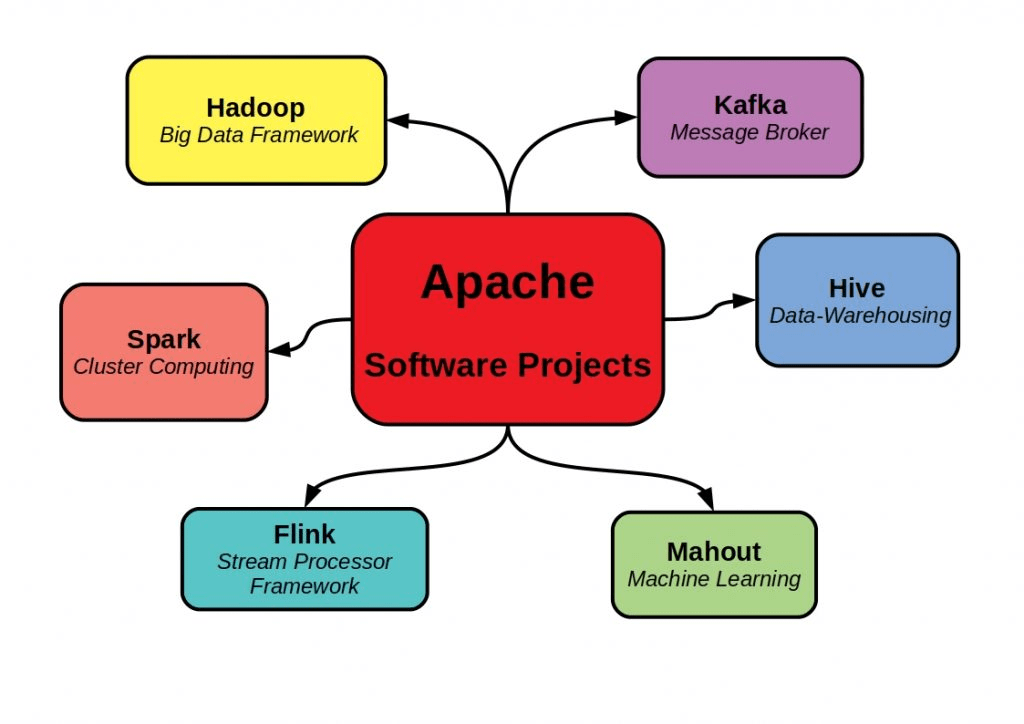

Apache Mahout is an open-source machine learning library designed to facilitate scalable and efficient development of intelligent applications. Built on top of distributed computing frameworks like Apache Hadoop and Apache Spark, Mahout offers a wide range of algorithms for tasks such as clustering, classification, recommendation, and dimensionality reduction. Leveraging its integration with Hadoop and Spark, Mahout can process large-scale datasets in parallel, making it suitable for big data analytics.

1. What is Apache Mahout?

Ans:

Apache Mahout is an open-source framework for building scalable machine learning algorithms. It primarily focuses on collaborative filtering, clustering, and classification. Originally built on top of Apache Hadoop (later releases added Apache Spark support), Mahout leverages distributed computing to handle large datasets efficiently. It provides a variety of pre-built algorithms and tools to facilitate the development of machine learning applications.

2. What are the primary use cases of Apache Mahout?

Ans:

- Collaborative Filtering: Creating recommendation systems that suggest products to users based on their past interactions.

- Clustering: Grouping data into clusters, useful for applications like customer segmentation and document grouping.

- Classification: Sorting data into predefined categories, applicable in areas such as spam detection and sentiment analysis.

3. How does Mahout integrate with Hadoop?

Ans:

Apache Mahout integrates with Hadoop by leveraging the Hadoop Distributed File System (HDFS) and the MapReduce programming model to process large-scale data efficiently. Mahout’s algorithms are designed to run as distributed tasks on a Hadoop cluster, enabling them to handle vast amounts of data in parallel. By utilizing HDFS, Mahout ensures data is stored in a distributed manner, which enhances reliability and fault tolerance.

4. What are the key features of Apache Mahout?

Ans:

- Scalable Algorithms

- Hadoop Integration

- Spark Support

- Mathematical and Statistical Tools

- Extensibility

5. What is the difference between clustering algorithms and classification algorithms?

Ans:

| Aspect | Clustering Algorithms | Classification Algorithms |
|---|---|---|
| Objective | Group similar data points together based on their features, aiming to discover natural groupings or clusters within the data. | Categorize data points into predefined classes or categories based on their features, aiming to predict the class labels of new instances. |
| Output | Unlabeled clusters or groups, where data points within the same cluster are more similar to each other than to those in other clusters. | Labeled instances assigned to specific classes or categories, indicating the predicted class for each data point. |
| Supervision | Unsupervised: they do not require labeled training data and operate solely on the input features. | Supervised: they require labeled training data to learn the mapping between input features and class labels. |
| Application | Commonly used for exploratory data analysis, anomaly detection, and customer segmentation, among other tasks where the underlying structure of the data is of interest. | Widely used in tasks such as spam detection, sentiment analysis, and medical diagnosis, where the goal is to classify instances into distinct categories based on their features. |

6. How does Apache Mahout handle scalability?

Ans:

Apache Mahout handles scalability through its integration with distributed computing frameworks like Apache Hadoop and Apache Spark. By leveraging Hadoop’s HDFS and the MapReduce programming model, Mahout can process and store massive datasets across a cluster of machines, enabling parallel computation and fault tolerance. This distributed approach allows Mahout’s algorithms to scale horizontally, efficiently managing increased data volumes by adding more nodes to the cluster.

7. What machine learning algorithms does Mahout support?

Ans:

- Collaborative Filtering

- Clustering

- Classification

- Dimensionality Reduction: Singular Value Decomposition (SVD) and Principal Component Analysis (PCA).

- Frequent Pattern Mining

8. What is the history and origin of Apache Mahout?

Ans:

Apache Mahout began as a sub-project of Apache Lucene in 2008, aimed at developing scalable machine learning libraries. The goal was to create tools capable of handling large datasets using Hadoop’s distributed computing power. Mahout eventually became an independent top-level project under the Apache Software Foundation, continually evolving and expanding its features.

9. What are the key differences between Mahout and other machine learning libraries like Scikit-learn or TensorFlow?

Ans:

- Scale: Mahout is optimized for large-scale data processing with distributed frameworks like Hadoop and Spark, while Scikit-learn is ideal for smaller datasets on a single machine.

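- Algorithm Focus: Mahout specializes in collaborative filtering, clustering, and classification at scale. Scikit-learn offers a broader catalogue of general-purpose algorithms for classification, regression, and clustering, along with preprocessing and model-selection utilities. TensorFlow focuses on deep learning and neural networks.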

- Language Support: Mahout is primarily Java/Scala-based, Scikit-learn is Python-based, and TensorFlow supports multiple languages with strong Python support.

10. What are the advantages of using Apache Mahout?

Ans:

- Scalability

- Hadoop and Spark Integration

- Diverse Algorithms

- Open Source

- Extensibility

- Community Support

11. What is the process for installing Apache Mahout on a local machine?

Ans:

Installing Apache Mahout on a local machine typically involves one of two routes. The first is downloading the Mahout distribution from the official Apache website. The second is adding Mahout as a dependency in a build tool such as Apache Maven. If you download the distribution, extract the archive and configure the necessary environment variables, such as `MAHOUT_HOME`. Detailed installation instructions are available in the Mahout documentation and community forums.

12. What are the minimum system requirements for running Apache Mahout?

Ans:

- The system requirements for running Apache Mahout vary depending on factors such as the specific use case and the size of the dataset. Generally, Mahout can operate on standard hardware configurations.

- However, for large-scale processing, it is advisable to use a distributed computing environment like Apache Hadoop or Apache Spark.

- Additionally, sufficient memory and processing power are essential for optimal performance.

13. What steps are involved in setting up Mahout on a Hadoop cluster?

Ans:

Setting up Mahout on a Hadoop cluster involves configuring Mahout to interact with Hadoop’s distributed file system (HDFS) and resource manager (YARN). This typically requires ensuring that Mahout’s configuration points to the Hadoop installation directory and verifying that Hadoop is properly configured and running on the cluster. Detailed instructions can be found in the Mahout documentation.

14. Which configuration files are crucial for the operation of Mahout?

Ans:

The crucial configuration files for Apache Mahout are primarily the Hadoop configuration files, because Mahout relies heavily on Hadoop's infrastructure. The key files are:

- `core-site.xml`, which defines the core Hadoop settings.

- `hdfs-site.xml`, which defines the HDFS settings.

- `mapred-site.xml`, which defines the MapReduce settings.

- `yarn-site.xml`, which defines the YARN resource-manager settings on YARN-based clusters.

Together, these files specify settings such as file system paths, JobTracker or ResourceManager addresses, and resource management options. They ensure that Mahout can use Hadoop's distributed storage and processing capabilities.

15. What methods can be used to confirm the successful installation of Mahout?

Ans:

- Several checks can confirm that Apache Mahout is installed correctly.

- First, verify that the Mahout binaries are installed by running the `mahout` command in a terminal or command prompt.

- This should display a list of available Mahout commands and options, indicating that the executable is accessible.

- Next, check the environment variables to ensure that the Mahout home directory (`MAHOUT_HOME`) and relevant paths are properly set.

16. What is the process for configuring Mahout to use a specific version of Hadoop?

Ans:

To configure Apache Mahout to use a specific version of Hadoop, first ensure that your Mahout release is compatible with that Hadoop version. Download and install the Hadoop version you want to use. Then adjust the Mahout configuration files and environment settings to point to that installation. This involves setting environment variables such as `HADOOP_HOME` to the Hadoop installation directory and ensuring that the Hadoop binaries are included in the system's `PATH`.

17. What dependencies are necessary for installing Mahout?

Ans:

Mahout's main dependencies are:

- A Java Development Kit (JDK), with `JAVA_HOME` pointing to it.

- A compatible Apache Hadoop installation, referenced by `HADOOP_HOME`, for the MapReduce-based algorithms.

- A compatible Apache Spark installation for the Spark-based algorithms.

- Apache Maven, if you build Mahout from source.

Check the documentation of your specific Mahout release for the supported Java, Hadoop, and Spark versions.

18. What steps are necessary to deploy Mahout on a cloud platform like AWS or Google Cloud?

Ans:

Deploying Mahout on a cloud platform like AWS or Google Cloud involves:

- Setting up a virtual machine instance or a cluster.

- Installing Mahout and its dependencies.

- Configuring Mahout to interact with cloud storage and computing resources.

Managed Hadoop and Spark services, such as Amazon EMR or Google Cloud Dataproc, can provision the underlying cluster, and their documentation covers cluster setup.

19. What is the process by which the K-means clustering algorithm works in Mahout?

Ans:

In Mahout, the K-means clustering algorithm starts by randomly selecting k initial cluster centroids. Each data point is then assigned to the nearest centroid, forming initial clusters. The centroids are recalculated as the mean of the points in each cluster. This process of assignment and updating repeats iteratively until the centroids no longer change significantly or a predetermined number of iterations is reached.
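The assign-and-update loop described above can be sketched in plain Python. This is a generic Lloyd's k-means written for illustration. The function name `kmeans` and its parameters (`max_iter`, `tol`, `seed`) are hypothetical; this is not Mahout's API or its distributed implementation.

```python
import math
import random


def kmeans(points, k, max_iter=100, tol=1e-6, seed=0):
    """Plain Lloyd's k-means over a list of equal-length numeric tuples."""
    rng = random.Random(seed)
    # Random initial centroids: k distinct input points.
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: attach every point to its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        shift = 0.0
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if not members:
                continue  # keep the old centroid if its cluster is empty
            new = [sum(axis) / len(members) for axis in zip(*members)]
            shift = max(shift, math.dist(new, centroids[c]))
            centroids[c] = new
        if shift < tol:  # converged: no centroid moved noticeably
            break
    return centroids, labels


points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.8, 8.1)]
centroids, labels = kmeans(points, k=2)
```

In Mahout, each iteration runs as a distributed job rather than an in-memory loop, but the per-iteration logic is the same idea.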

20. What are some strategies for troubleshooting common installation issues with Mahout?

Ans:

Review the installation documentation to ensure all dependencies are installed correctly. Verify the accuracy of environment variables, particularly `MAHOUT_HOME` and `HADOOP_HOME`. Check Mahout and Hadoop logs for any error messages or stack traces. Seek assistance from online forums and communities where other Mahout users and developers may offer guidance.

21. What approach does Mahout use to manage data preprocessing?

Ans:

- Apache Mahout offers several methods for managing data preprocessing tasks as part of its machine learning workflow.

- One common approach is to leverage Mahout’s integration with Apache Spark, which provides powerful data processing capabilities.

- Spark’s DataFrame API and SQL capabilities allow users to perform various preprocessing tasks such as cleaning, transforming, and aggregating data efficiently in a distributed manner.

22. What data formats does Mahout accommodate for input?

Ans:

Apache Mahout accommodates various data formats for input, offering flexibility in handling different types of data. One commonly used format is the SequenceFile format, which is native to Hadoop and is efficient for storing large amounts of binary key-value pairs. Mahout also supports input data in text format, where each line typically represents a single data point or record. This format is simple and widely used, making it convenient for many applications.

23. How is data imported into Mahout?

Ans:

Data can be imported into Apache Mahout through various methods, depending on the specific data format and source. One common approach is to leverage Mahout’s integration with Apache Hadoop and Apache Spark, allowing users to import data stored in Hadoop Distributed File System (HDFS) or Spark’s distributed data structures such as RDDs (Resilient Distributed Datasets) or DataFrames.

24. What function does the `seq2sparse` command serve in Mahout?

Ans:

- The `seq2sparse` command in Apache Mahout converts data stored in the SequenceFile format into sparse vectors. The input is typically text documents, and the output is term-frequency (TF) or TF-IDF vectors.

- This command is particularly useful for preprocessing data before applying machine learning algorithms, because many Mahout algorithms expect input as sparse vectors for efficient computation and storage.

- A sparse representation stores only the non-zero entries. This speeds up processing and reduces memory use, which makes it well suited to high-dimensional datasets in text mining and recommendation systems.
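To make "sparse TF-IDF vector" concrete, the following sketch turns tokenized documents into dictionaries that hold only non-zero entries. It uses the plain `tf * log(N / df)` weighting as an assumption. The exact weighting and normalization options of `seq2sparse` may differ, and the function `tfidf_sparse` is hypothetical.

```python
import math
from collections import Counter


def tfidf_sparse(docs):
    """Map tokenized documents to sparse {term_index: tf * idf} dictionaries."""
    vocab = {}
    for doc in docs:
        for term in doc:
            vocab.setdefault(term, len(vocab))  # give each new term the next index
    # Document frequency: in how many documents each term appears.
    df = Counter(term for doc in docs for term in set(doc))
    n_docs = len(docs)
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        # Only terms that occur in this document are stored, hence "sparse".
        vectors.append({vocab[t]: count * math.log(n_docs / df[t])
                        for t, count in tf.items()})
    return vocab, vectors


docs = [["big", "data"], ["big", "model"], ["data", "data", "mining"]]
vocab, vectors = tfidf_sparse(docs)
```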

25. What steps are involved in transforming raw data into a Mahout-compatible format?

Ans:

Transforming raw data into a Mahout-compatible format involves preprocessing steps such as cleaning, transformation, and feature extraction. For text data, Mahout provides two command-line tools for the conversion:

- `seqdirectory` converts a directory of text files into SequenceFiles.

- `seq2sparse` turns those SequenceFiles into sparse vectors that Mahout's algorithms can consume.

26. Why is data normalization significant in Mahout?

Ans:

Data normalization is crucial in Mahout to ensure uniform scales and distributions among features, thereby improving the performance and convergence of machine learning algorithms. Normalized data fosters unbiased feature treatment and leads to more accurate model predictions, ultimately contributing to better overall results in analysis and decision-making.
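Two common normalization schemes, applied per feature column, are sketched below: min-max scaling to [0, 1] and z-score standardization. These helpers are illustrative plain Python. They are not functions that Mahout provides.

```python
import statistics


def min_max(column):
    """Rescale values linearly so the smallest becomes 0 and the largest 1."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]  # constant feature: nothing to scale
    return [(x - lo) / (hi - lo) for x in column]


def z_score(column):
    """Centre values on the mean and divide by the population standard deviation."""
    mu = statistics.fmean(column)
    sigma = statistics.pstdev(column)
    if sigma == 0:
        return [0.0 for _ in column]
    return [(x - mu) / sigma for x in column]


scaled = min_max([10, 20, 30])
standardized = z_score([1.0, 2.0, 3.0])
```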

27. What methods can be used to manage missing values in datasets within Mahout?

Ans:

- Missing values in datasets can be addressed through imputation, for example by substituting missing values with the mean, median, or mode of the respective feature.

- Moreover, some Mahout algorithms accommodate missing values during model training. Recommenders, for example, work directly on sparse user-item data in which unrated items are simply absent.
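Mean imputation, the simplest of the techniques above, can be sketched as follows. The function `impute_mean` is hypothetical and assumes `None` marks a missing value. Falling back to 0.0 for a column with no observed values is an arbitrary choice made only for this sketch.

```python
import statistics


def impute_mean(rows):
    """Replace None entries in each column with that column's observed mean."""
    n_cols = len(rows[0])
    means = []
    for j in range(n_cols):
        observed = [r[j] for r in rows if r[j] is not None]
        # Arbitrary fallback for an all-missing column.
        means.append(statistics.fmean(observed) if observed else 0.0)
    return [[means[j] if v is None else v for j, v in enumerate(r)] for r in rows]


filled = impute_mean([[1.0, None], [3.0, 4.0], [None, 8.0]])
```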

28. How is data partitioned into training and testing sets in Mahout?

Ans:

In Apache Mahout, data partitioning into training and testing sets can be achieved using various methods depending on the specific machine learning algorithm being used and the user’s preferences. One common approach is to manually split the dataset into two subsets: one for training the model and another for evaluating its performance. This can be done by randomly selecting a portion of the data for training and reserving the remaining portion for testing.
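A random hold-out split of the kind described above might look like this. The function `train_test_split` and its 80/20 default are assumptions for illustration. A fixed seed makes the split reproducible between runs.

```python
import random


def train_test_split(data, test_fraction=0.2, seed=42):
    """Shuffle a copy of the data and cut it into (train, test) lists."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    shuffled = list(data)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


train, test = train_test_split(range(100))
```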

29. What strategies does Mahout use to handle large datasets?

Ans:

Mahout adeptly manages large datasets by harnessing the capabilities of distributed computing frameworks like Apache Hadoop and Apache Spark. It orchestrates computations across multiple nodes in a cluster, enabling parallel processing and scalability to effectively process extensive data volumes, ensuring efficient data analysis and machine learning operations.

30. What visualization tools can be employed for Mahout-processed data?

Ans:

- While Mahout itself lacks built-in visualization tools, users can employ third-party libraries such as Apache Zeppelin, Jupyter Notebooks, or visualization libraries in languages like Python or R to visualize data processed by Mahout.

- These tools empower users to craft interactive visualizations and glean insights from the analyzed data.

31. What clustering algorithms does Mahout support?

Ans:

Mahout supports several clustering algorithms, including K-means, Fuzzy K-means, and Canopy clustering, which is often used to seed K-means. These algorithms are designed to run in parallel across a cluster, which makes them suitable for both basic and advanced clustering tasks on large-scale data.

32. Which environment variables need to be configured for Mahout?

Ans:

The essential environment variables are:

- `MAHOUT_HOME`, which points to the Mahout installation directory.

- `JAVA_HOME`, which points to the JDK directory.

- `HADOOP_HOME`, which points to the Hadoop installation and is needed for Hadoop integration.

33. What methods are used to determine the number of clusters for K-means in Mahout?

Ans:

- Determining the number of clusters (k) in K-means can be approached in several ways.

- The Elbow Method involves plotting the sum of squared distances from each point to its cluster centroid against the number of clusters, looking for an ‘elbow’ point where the rate of decrease slows.

- Additionally, domain knowledge and cross-validation, which tests various values of k and assesses cluster quality, can be used to select the optimal number of clusters.
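The elbow can be located automatically with a simple heuristic: pick the k where the drop in SSE shrinks the most, which is the largest second difference. The function `elbow_k` is hypothetical. The SSE values in the usage line are made-up numbers chosen only to show the shape of the input.

```python
def elbow_k(sse_by_k):
    """Return the k at which the SSE curve bends most sharply.

    sse_by_k[i] is the SSE obtained with k = i + 1 clusters.
    """
    best_k, best_bend = None, float("-inf")
    for i in range(1, len(sse_by_k) - 1):
        # Drop before this k minus drop after it: large where the curve flattens.
        bend = (sse_by_k[i - 1] - sse_by_k[i]) - (sse_by_k[i] - sse_by_k[i + 1])
        if bend > best_bend:
            best_k, best_bend = i + 1, bend
    return best_k


chosen_k = elbow_k([1000, 600, 200, 180, 170, 165])  # illustrative SSE values
```

This heuristic is only a starting point. Domain knowledge should still confirm the choice.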

34. What is the Canopy Clustering algorithm in Mahout?

Ans:

Canopy Clustering in Mahout is a fast pre-clustering algorithm designed to speed up more computationally intensive clustering methods like K-means. It uses two distance thresholds, T1 (loose) and T2 (tight), where T1 > T2. The algorithm proceeds as follows:

- A point is taken from the list of data points as a canopy center.

- Every remaining point within distance T1 of that center is added to its canopy.

- Every point within T2 is removed from the list, so it cannot form a new canopy.

Points between T2 and T1 stay in the list, so a point can belong to more than one canopy.
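A single-machine sketch of the canopy procedure follows. The function `canopy` and its return shape, a list of `(center, members)` pairs, are assumptions. Mahout's MapReduce implementation distributes this work differently.

```python
import math


def canopy(points, t1, t2):
    """Return a list of (center, members) canopies; t1 is loose, t2 is tight."""
    if t1 <= t2:
        raise ValueError("t1 must be larger than t2")
    remaining = list(points)
    canopies = []
    while remaining:
        center = remaining.pop(0)  # next unprocessed point becomes a center
        # Every remaining point within the loose threshold joins this canopy.
        members = [center] + [p for p in remaining if math.dist(p, center) < t1]
        canopies.append((center, members))
        # Points within the tight threshold can no longer start or join a canopy.
        remaining = [p for p in remaining if math.dist(p, center) >= t2]
    return canopies


pts = [(0.0, 0.0), (0.5, 0.0), (2.5, 0.0), (4.0, 0.0)]
result = canopy(pts, t1=3.0, t2=1.0)
```

In this example, the point `(2.5, 0.0)` ends up in two canopies. It lies within T1 of the first center but outside T2, so it stays in the list and later becomes a center itself.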

35. What methods can be used to evaluate the quality of clusters produced by Mahout?

Ans:

Cluster quality in Mahout can be assessed using several metrics:

- The Sum of Squared Errors (SSE) measures compactness; lower values indicate better clustering.

- The Silhouette Coefficient evaluates both cohesion and separation; values closer to 1 indicate better-defined clusters.

- The Davies-Bouldin Index also measures separation; lower values indicate better clustering.

- Normalized Mutual Information (NMI) checks alignment with known labels, aiding in validation.
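Two of these metrics, SSE and the mean silhouette coefficient, are sketched below for small in-memory data. The functions `sse` and `silhouette` are hypothetical helpers, not Mahout APIs. The silhouette sketch assumes at least two clusters, and it scores singleton clusters as 0, following the usual convention.

```python
import math


def sse(points, labels, centroids):
    """Sum of squared distances from each point to its assigned centroid."""
    return sum(math.dist(p, centroids[c]) ** 2 for p, c in zip(points, labels))


def silhouette(points, labels):
    """Mean silhouette coefficient; assumes at least two distinct clusters."""
    scores = []
    for i, p in enumerate(points):
        by_cluster = {}
        for j, q in enumerate(points):
            if i != j:
                by_cluster.setdefault(labels[j], []).append(math.dist(p, q))
        own = by_cluster.get(labels[i], [])
        if not own:  # singleton cluster
            scores.append(0.0)
            continue
        a = sum(own) / len(own)  # cohesion: mean distance within own cluster
        b = min(sum(d) / len(d)  # separation: nearest other cluster
                for c, d in by_cluster.items() if c != labels[i])
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


pts = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
good = silhouette(pts, [0, 0, 1, 1])
bad = silhouette(pts, [0, 1, 0, 1])
```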

36. What are the steps to perform clustering on a dataset using Mahout?

Ans:

To perform clustering with Mahout, follow these steps:

- Prepare the dataset as a sequence file or in another Mahout-compatible format.

- Select the clustering algorithm to use, such as K-means or Canopy.

- Configure its parameters, including the number of clusters, distance measure, and convergence criteria.

- Run the clustering job, typically on a Hadoop cluster for large datasets.

- Evaluate the cluster quality using various metrics, and interpret the results to gain insights or refine the clustering process as needed.

37. What is the process for interpreting the output of a clustering algorithm in Mahout?

Ans:

- Interpreting the output of a clustering algorithm in Mahout involves examining cluster centroids to understand the central points of each cluster and reviewing which data points are assigned to which clusters.

- Checking the size of each cluster helps identify any imbalances or dominant clusters. Evaluating metrics like SSE and the silhouette score provides a quantitative assessment of cluster quality.

- Visualizing the clusters using scatter plots or dimensionality reduction techniques can also help in understanding their separation and cohesion.

38. What is Fuzzy K-means clustering in Mahout?

Ans:

Fuzzy K-means, or Fuzzy C-means, is a variation of K-means where each data point can belong to multiple clusters with varying degrees of membership. In Mahout, this method allows for soft clustering, with points having a degree of belonging to each cluster represented by a membership matrix. Centroids are updated based on the weighted memberships of points, and the algorithm iterates until convergence, resulting in more flexible clustering that can capture complex data structures.
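The membership and centroid updates of fuzzy c-means can be sketched as follows. The fuzziness exponent `m` must be greater than 1, and 2.0 is a common choice. The function names are hypothetical. This sketch also runs a fixed number of iterations, whereas a real implementation such as Mahout's would stop on a convergence threshold.

```python
import math


def memberships(points, centroids, m=2.0):
    """u[i][j]: degree to which point i belongs to cluster j (rows sum to 1)."""
    exponent = 2.0 / (m - 1.0)  # requires m > 1
    u = []
    for p in points:
        d = [math.dist(p, c) for c in centroids]
        if 0.0 in d:
            # The point coincides with a centroid: give that cluster full membership.
            row = [0.0] * len(d)
            row[d.index(0.0)] = 1.0
        else:
            row = [1.0 / sum((d[j] / d[k]) ** exponent for k in range(len(d)))
                   for j in range(len(d))]
        u.append(row)
    return u


def update_centroids(points, u, m=2.0):
    """Each centroid is the mean of all points weighted by membership ** m."""
    dims = len(points[0])
    centroids = []
    for j in range(len(u[0])):
        w = [row[j] ** m for row in u]
        total = sum(w)
        centroids.append([sum(wi * p[a] for wi, p in zip(w, points)) / total
                          for a in range(dims)])
    return centroids


def fuzzy_kmeans(points, centroids, m=2.0, iterations=50):
    """Alternate membership and centroid updates for a fixed number of rounds."""
    for _ in range(iterations):
        u = memberships(points, centroids, m)
        centroids = update_centroids(points, u, m)
    return centroids, memberships(points, centroids, m)


pts = [(0.0, 0.0), (1.0, 0.0), (9.0, 0.0), (10.0, 0.0), (5.0, 0.0)]
centers, u = fuzzy_kmeans(pts, [(0.0, 0.0), (10.0, 0.0)])
```

In this example, the midpoint `(5.0, 0.0)` ends up with roughly equal membership in both clusters, which hard K-means cannot express.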

39. What methods can be used to visualize clusters created by Mahout?

Ans:

Visualizing clusters created by Mahout can be done using scatter plots for 2D or 3D data to observe their separation. For high-dimensional data, dimensionality reduction techniques like PCA or t-SNE can reduce the data to two or three dimensions for visualization. Plotting cluster centroids helps visualize the cluster centers. Tools like R, Python (with libraries such as Matplotlib and Seaborn), or web-based dashboards can be used to create interactive visualizations, aiding in the interpretation and presentation of clustering results.

40. What are some typical applications of clustering in Mahout?

Ans:

Clustering with Mahout is commonly used in the following applications:

- Market segmentation groups customers based on purchasing behavior.

- Document clustering organizes documents or articles into topics.

- Anomaly detection identifies unusual patterns or outliers in data.

- Image segmentation partitions images into meaningful segments.

- Recommender systems group similar users or items to provide personalized recommendations.

41. What classification algorithms are available in Mahout?

Ans:

Mahout supports several classification algorithms, including Naive Bayes, Complementary Naive Bayes, Random Forest, Logistic Regression, and Decision Trees. These algorithms cater to various classification tasks, from simple probabilistic models to more complex ensemble methods and regression-based approaches. Additionally, Mahout provides tools for evaluating model performance, such as accuracy metrics and confusion matrices, helping users refine their models effectively.

42. How does the Naive Bayes classifier work in Mahout?

Ans:

In Mahout, the Naive Bayes classifier operates on the principle of conditional probability, assuming that features are independent. The algorithm calculates prior probabilities for each class and the likelihood of each feature given a class from the training data. For classification, it uses Bayes’ theorem to compute the posterior probability of each class given the input features and assigns the class with the highest probability to the data point.
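A compact multinomial Naive Bayes with Laplace smoothing illustrates the prior, likelihood, and posterior steps described above. It works in log space to avoid underflow. The functions `train_nb` and `predict_nb` and the toy spam/ham data are hypothetical, and this is not Mahout's trainer.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs, labels, alpha=1.0):
    """Multinomial Naive Bayes with add-alpha (Laplace) smoothing."""
    class_counts = Counter(labels)
    term_counts = defaultdict(Counter)
    vocab = set()
    for doc, y in zip(docs, labels):
        term_counts[y].update(doc)
        vocab.update(doc)
    # Log prior P(class) from class frequencies.
    priors = {y: math.log(n / len(labels)) for y, n in class_counts.items()}
    likelihoods = {}
    for y in class_counts:
        total = sum(term_counts[y].values())
        # Log likelihood P(term | class), smoothed so unseen terms are not zero.
        likelihoods[y] = {t: math.log((term_counts[y][t] + alpha) /
                                      (total + alpha * len(vocab))) for t in vocab}
    return priors, likelihoods


def predict_nb(model, doc):
    """Pick the class with the highest log posterior; unknown terms are ignored."""
    priors, likelihoods = model
    scores = {y: priors[y] + sum(likelihoods[y][t] for t in doc if t in likelihoods[y])
              for y in priors}
    return max(scores, key=scores.get)


model = train_nb(
    [["cheap", "pills", "buy"], ["buy", "cheap", "now"],
     ["meeting", "agenda", "notes"], ["project", "meeting", "now"]],
    ["spam", "spam", "ham", "ham"],
)
```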

43. What is the purpose of the `TrainClassifier` command in Mahout?

Ans:

- The `TrainClassifier` command in Mahout builds a classification model from a training dataset.

- This command processes the training data to learn the parameters of the chosen classification algorithm, such as Naive Bayes or Complementary Naive Bayes.

- The resulting model can then be used to predict the classes of new data points. The `TrainClassifier` command is essential for creating models that generalize from training data to make accurate predictions on new, unseen data.

44. What methods are used to evaluate the performance of a classifier in Mahout?

Ans:

To evaluate a classifier’s performance in Mahout, various metrics and methods are used. Standard metrics include accuracy, precision, recall, F1-score, and the area under the ROC curve (AUC). Additionally, confusion matrices provide insight into the classifier’s performance by showing true positives, false positives, true negatives, and false negatives. Cross-validation, where the dataset is repeatedly split into training and testing subsets, ensures robust and reliable performance metrics.

45. What is the Random Forest algorithm in Mahout?

Ans:

The Random Forest algorithm in Mahout is an ensemble learning method that builds multiple decision trees during training and outputs the class that is the mode of the classes predicted by the individual trees. Combining the votes of many trees improves classification accuracy, reduces overfitting, and enhances generalization. Mahout's implementation of Random Forest can efficiently handle large datasets due to its parallel processing capabilities.

46. What strategies are used to handle imbalanced datasets in Mahout for classification?

Ans:

- Several techniques can be used to handle imbalanced datasets in Mahout. Resampling methods such as oversampling the minority class or undersampling the majority class help balance the class distribution.

- Cost-sensitive learning adjusts misclassification costs to give higher penalties for errors in the minority class.

- Algorithms like SMOTE (Synthetic Minority Over-sampling Technique) can create synthetic examples for the minority class.
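The core idea of SMOTE is to pick a minority sample and one of its nearest minority neighbours, then place a new point at a random position on the segment between them. The sketch below shows this as a preprocessing step outside Mahout. The function `smote` and its parameters (`k`, `seed`) are hypothetical.

```python
import math
import random


def smote(minority, n_synthetic, k=3, seed=0):
    """Create synthetic minority samples on segments between nearby minority points."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        # The k nearest minority neighbours of the base point, excluding itself.
        neighbours = sorted((p for j, p in enumerate(minority) if j != i),
                            key=lambda p: math.dist(p, base))[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # random position along the segment base -> other
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic


minority = [(1.0, 1.0), (1.5, 1.2), (1.2, 0.8), (0.9, 1.4)]
new_samples = smote(minority, n_synthetic=10)
```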

47. What is the process for using cross-validation with classifiers in Mahout?

Ans:

In Mahout, k-fold cross-validation involves splitting the dataset into k folds. Each fold serves once as the validation set while the remaining folds are used for training, so the process repeats k times. The results from each iteration are averaged to provide a reliable estimate of the classifier's performance. Cross-validation helps assess the model's generalization ability and exposes overfitting.
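Generating the k train/validation index splits is straightforward. The generator `k_fold_indices` below is a hypothetical helper that assumes the data has already been shuffled. When n is not divisible by k, the first folds receive one extra item.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs; every index is tested exactly once."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


folds = list(k_fold_indices(10, 3))
```

Each `(train, test)` pair would drive one training and evaluation run, and the k scores are then averaged.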

48. What metrics can be used to evaluate classification models in Mahout?

Ans:

Classification models in Mahout can be evaluated using the following metrics:

- Accuracy measures the proportion of correctly classified instances.

- Precision measures the proportion of positive predictions that are correct.

- Recall measures the ability to find all positive instances.

- F1-score is the harmonic mean of precision and recall.

- The area under the ROC curve (AUC) assesses the model's ability to distinguish between classes.
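The confusion-matrix-based metrics can be computed directly from true and predicted labels. AUC is omitted because it needs scores rather than hard labels. The function `binary_metrics` is a hypothetical helper, and it reports 0.0 when a ratio's denominator is zero.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Confusion-matrix counts plus accuracy, precision, recall and F1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "accuracy": (tp + tn) / len(pairs),
            "precision": precision, "recall": recall, "f1": f1}


report = binary_metrics([1, 1, 1, 0, 0, 0, 0, 1], [1, 0, 1, 0, 1, 0, 0, 1])
```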

49. What methods can be used to perform feature selection for classification in Mahout?

Ans:

Feature selection in Mahout can be performed using techniques such as information gain, chi-square tests, and mutual information, which measure the relevance of each feature to the target variable. By evaluating these scores, the most informative features can be selected for inclusion in the model. Feature selection reduces the dataset’s dimensionality, improves model performance, and decreases computational costs.

50. What is logistic regression in Mahout, and how is it implemented?

Ans:

- Logistic regression in Mahout is a classification algorithm that models the probability of a binary outcome based on one or more predictor variables.

- It uses the logistic function to map predicted values to probabilities between 0 and 1.

- Mahout implements logistic regression with stochastic gradient descent (SGD), iteratively adjusting the parameters to minimize the log loss on the training data.
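A minimal sketch of logistic regression trained with per-example gradient steps on the log loss follows. The learning rate, epoch count, and function names are illustrative assumptions. Regularization and the learning-rate schedules that a production trainer would use are left out.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function mapping any real z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg_sgd(X, y, lr=0.1, epochs=200, seed=0):
    """Fit weights [bias, w1, w2, ...] by stochastic gradient descent on log loss."""
    rng = random.Random(seed)
    w = [0.0] * (len(X[0]) + 1)
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit examples in a random order each epoch
        for i in order:
            x = [1.0] + list(X[i])  # constant 1 carries the bias term
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)))
            # Gradient of the log loss for one example is (p - y) * x.
            w = [wj - lr * (p - y[i]) * xj for wj, xj in zip(w, x)]
    return w


def predict_proba(w, x):
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


X = [[0.0], [0.5], [1.0], [3.0], [3.5], [4.0]]
y = [0, 0, 0, 1, 1, 1]
weights = train_logreg_sgd(X, y)
```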

51. What methods does Mahout use to implement collaborative filtering?

Ans:

Mahout implements collaborative filtering by using user-item interactions to generate recommendations. It offers both user-based and item-based collaborative filtering methods. User-based filtering recommends items by identifying users with similar preferences to the target user, while item-based filtering suggests items that are similar to those the target user has previously liked.

52. What is the difference between user-based and item-based collaborative filtering in Mahout?

Ans:

In Mahout, user-based collaborative filtering recommends items by locating users whose preferences are similar to the target user's. In contrast, item-based collaborative filtering identifies items similar to those the target user has already liked. User-based filtering focuses on similarities between users, whereas item-based filtering emphasizes similarities between items.
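Item-based prediction can be sketched in a few steps. Compare the target item with each item the user has already rated, using cosine similarity over the users who rated both. Then take a similarity-weighted average of the user's ratings. The function names and toy ratings are hypothetical, and this is not Mahout's recommender API.

```python
import math


def cosine(a, b):
    """Cosine similarity between two sparse {user: rating} vectors."""
    num = sum(a[u] * b[u] for u in set(a) & set(b))
    den = (math.sqrt(sum(v * v for v in a.values())) *
           math.sqrt(sum(v * v for v in b.values())))
    return num / den if den else 0.0


def predict_item_based(ratings, user, item):
    """Estimate user's rating of item from their ratings of similar items.

    ratings maps user -> {item: rating}; returns None if nothing is comparable.
    """
    # Re-index as item -> {user: rating} so items can be compared directly.
    by_item = {}
    for u, items in ratings.items():
        for i, r in items.items():
            by_item.setdefault(i, {})[u] = r
    num = den = 0.0
    for other, r in ratings[user].items():
        if other == item:
            continue
        s = cosine(by_item.get(item, {}), by_item[other])
        num += s * r
        den += abs(s)
    return num / den if den else None


ratings = {"alice": {"a": 5, "b": 3},
           "bob": {"a": 4, "b": 2, "c": 5},
           "carol": {"a": 1, "c": 2}}
estimate = predict_item_based(ratings, "alice", "c")
```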

53. What steps can be taken to prepare data for building a recommendation system in Mahout?

Ans:

To prepare data for a recommendation system in Mahout, start with a user-item interaction matrix. It is typically derived from user behavior logs such as ratings, clicks, or purchases. This data is usually formatted as a sequence file or a CSV file with columns for user IDs, item IDs, and interaction values (e.g., ratings). Preprocessing steps may include normalizing interaction values, handling missing values, and filtering out users or items with too few interactions to support robust recommendations.
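Loading a `userID,itemID,value` CSV and filtering out users with too few interactions can be sketched with the standard `csv` module. The column layout is assumed here, and the function `load_interactions` and the `min_interactions` threshold are hypothetical.

```python
import csv
import io
from collections import Counter


def load_interactions(text, min_interactions=2):
    """Parse 'userID,itemID,value' lines and drop users with too few interactions."""
    rows = []
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue  # skip blank lines
        user, item, value = row
        rows.append((int(user), int(item), float(value)))
    per_user = Counter(u for u, _, _ in rows)
    return [r for r in rows if per_user[r[0]] >= min_interactions]


interactions = load_interactions("1,101,5.0\n1,102,3.0\n2,101,4.0\n")
```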

54. What is the role of similarity measures in Mahout’s recommendation algorithms?

Ans:

- Similarity measures are crucial in Mahout’s recommendation algorithms, determining the relationships between users or items.

- Measures such as cosine similarity, Pearson correlation, and Jaccard index quantify how similar two users or items are.

- The choice of similarity measure affects the accuracy and performance of the recommendation system. It determines which users or items are considered similar and, consequently, which items are recommended to the target user.
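The three similarity measures named above can be written in a few lines. The sketch assumes cosine and Pearson receive dense rating vectors over the same co-rated items, and that Jaccard receives sets of interacted items. The function names are illustrative, not Mahout classes.

```python
import math


def cosine(a, b):
    """Cosine of the angle between two equal-length rating vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def pearson(a, b):
    """Pearson correlation, computed as the cosine of mean-centred vectors."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return cosine([x - ma for x in a], [y - mb for y in b])


def jaccard(a, b):
    """Overlap of two sets of interacted items: |A & B| / |A | B|."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0
```

Pearson ignores differences in each user's average rating level, whereas cosine does not. Jaccard uses only whether an interaction happened, which suits click or purchase data.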

55. How can the performance of a recommendation system in Mahout be evaluated?

Ans:

Evaluating a recommendation system in Mahout involves using metrics like precision, recall, F1-score, mean absolute error (MAE), and root mean squared error (RMSE). Precision and recall measure recommendation accuracy, while MAE and RMSE assess the prediction accuracy of ratings. Cross-validation, which involves repeatedly splitting the dataset into training and testing subsets, ensures robust performance evaluations by testing the model on different data splits.
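The two rating-accuracy metrics, MAE and RMSE, are computed over held-out (actual, predicted) pairs. RMSE squares each error, so it penalizes large misses more heavily than MAE. The helper names are hypothetical.

```python
import math


def mae(actual, predicted):
    """Mean absolute error between actual and predicted ratings."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error between actual and predicted ratings."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


actual = [4, 3, 5]
predicted = [3.5, 3, 4]
```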

56. What is the SVD recommender in Mahout?

Ans:

The SVD (Singular Value Decomposition) recommender in Mahout is a matrix factorization technique that decomposes the user-item interaction matrix into lower-dimensional matrices. This approach captures latent factors representing user preferences and item characteristics. The SVD recommender predicts user ratings for items by reconstructing the interaction matrix from these lower-dimensional matrices, enabling recommendations based on inferred user preferences.
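The latent-factor idea can be illustrated with a Funk-SVD-style factorization trained by stochastic gradient descent on the observed ratings only. This is a swapped-in technique chosen for brevity. It is not necessarily the factorizer Mahout's SVD recommender uses, and every name and hyperparameter below is an assumption.

```python
import math
import random


def factorize(ratings, n_factors=2, lr=0.01, reg=0.02, epochs=1000, seed=0):
    """Learn factor vectors so that dot(P[u], Q[i]) approximates known ratings.

    ratings: list of (user, item, rating) triples for observed entries only.
    """
    rng = random.Random(seed)
    users = sorted({u for u, _, _ in ratings})  # sorted for reproducible init
    items = sorted({i for _, i, _ in ratings})
    P = {u: [rng.uniform(0.1, 1.0) for _ in range(n_factors)] for u in users}
    Q = {i: [rng.uniform(0.1, 1.0) for _ in range(n_factors)] for i in items}
    data = list(ratings)
    for _ in range(epochs):
        rng.shuffle(data)
        for u, i, r in data:
            err = r - sum(p * q for p, q in zip(P[u], Q[i]))
            # Update both vectors from their old values, with L2 regularization.
            P[u], Q[i] = (
                [p + lr * (err * q - reg * p) for p, q in zip(P[u], Q[i])],
                [q + lr * (err * p - reg * q) for p, q in zip(P[u], Q[i])],
            )
    return P, Q


def predict(P, Q, u, i):
    return sum(p * q for p, q in zip(P[u], Q[i]))


def rmse_on(P, Q, ratings):
    return math.sqrt(sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings)
                     / len(ratings))


ratings = [("u1", "i1", 5), ("u1", "i2", 3), ("u1", "i3", 1), ("u2", "i1", 4),
           ("u2", "i3", 1), ("u3", "i2", 2), ("u3", "i3", 5)]
P, Q = factorize(ratings)
missing_estimate = predict(P, Q, "u2", "i2")  # an unobserved cell
```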

57. What strategies can be used to handle cold start problems in Mahout’s recommendation systems?

Ans:

- To address cold start problems in Mahout’s recommendation systems, various strategies can be employed.

- For new users, initial preference data can be gathered through onboarding questionnaires or demographic-based recommendations.

- Hybrid approaches that combine collaborative and content-based filtering can also help mitigate cold-start issues by utilizing available data from both users and items for initial recommendations.

58. What steps are involved in integrating Mahout’s recommendation system with a web application?

Ans:

Integrating Mahout’s recommendation system with a web application involves several steps. First, deploy the recommendation model as a service that the web application can query, typically using REST APIs or microservices. The web application collects user interactions and sends them to the recommendation service, which processes the data and returns recommendations. Regularly updating the model with new interaction data ensures the recommendations remain relevant.

59. What are some common challenges in building recommendation systems with Mahout?

Ans:

Common challenges in building recommendation systems with Mahout include dealing with sparse data, where many users have interacted with only a few items, leading to insufficient information for accurate recommendations. Cold start problems for new users or items can also be challenging to manage. Ensuring scalability to handle large datasets efficiently is another frequent issue.

60. What methods can be used to enhance the accuracy of recommendations in Mahout?

Ans:

- To improve the accuracy of recommendations in Mahout, several strategies can be applied.

- Incorporating additional data, such as user demographics or item attributes, can enhance the model’s understanding of user preferences.

- Hybrid models combining collaborative and content-based filtering can leverage the strengths of both approaches.

61. What dimensionality reduction techniques does Mahout support?

Ans:

Mahout provides several dimensionality reduction techniques, including Principal Component Analysis (PCA), Singular Value Decomposition (SVD), and Random Projection. These methods reduce the number of features in a dataset while preserving essential information, facilitating more manageable and efficient data analysis for machine learning tasks.

62. How does Principal Component Analysis (PCA) work in Mahout?

Ans:

Principal Component Analysis (PCA) in Mahout transforms high-dimensional data into a new coordinate system defined by orthogonal axes (principal components) that capture the maximum variance in the data. Projecting the data onto these new axes reduces the number of dimensions while preserving as much variability as possible, which makes analysis simpler and more effective.
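To make the idea concrete, here is a small pure-Python sketch that finds the first principal component. It centers the data, forms the covariance matrix, and runs power iteration on that matrix. The example illustrates the mathematics only. Mahout's own implementations are distributed and use different numerical methods, and the function names below are hypothetical.

```python
import math

# Hypothetical helpers illustrating PCA; not Mahout's distributed implementation.


def mean_center(rows):
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - means[j] for j in range(d)] for r in rows]


def covariance(centered):
    n, d = len(centered), len(centered[0])
    return [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]


def first_principal_component(rows, iterations=200):
    """Return (unit direction, variance along it) of the top principal component."""
    cov = covariance(mean_center(rows))
    d = len(cov)
    v = [1.0] * d                                   # arbitrary starting vector
    for _ in range(iterations):                     # power iteration on the covariance
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    variance = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d))
    return v, variance


def project(rows, component):
    """1-D coordinates of each centered row along the component."""
    return [sum(a * b for a, b in zip(r, component)) for r in mean_center(rows)]


data = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.8], [5.0, 5.0]]
component, variance = first_principal_component(data)
print([round(c, 3) for c in component], round(variance, 3))
```

Because the points lie close to the line y = x, the component comes out near [0.716, 0.698], and a single coordinate per point retains almost all of the variance.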

63. What is Singular Value Decomposition (SVD) in Mahout?

Ans:

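- Singular Value Decomposition (SVD) in Mahout is a matrix factorization method that decomposes a matrix A into three components, A = UΣV*. U and V have orthonormal columns (the left and right singular vectors), and Σ is a diagonal matrix of singular values. V* is the conjugate transpose of V, which is simply Vᵀ for real-valued data.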

- This decomposition aids in understanding data structure, reducing dimensions, and uncovering latent factors.

- SVD is especially useful in recommendation systems and large-scale data processing, revealing underlying patterns and relationships.
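The relationship between the three factors can be checked numerically. This hypothetical pure-Python sketch recovers only the largest singular value σ₁ and its vectors u₁ and v₁. It runs power iteration on AᵀA and then forms the rank-1 approximation σ₁u₁v₁ᵀ. In practice, a full or truncated SVD would come from a numerical library or from Mahout's distributed solvers.

```python
import math

# Hypothetical helpers; illustrates the top singular triplet, not Mahout's solvers.


def matvec(m, x):
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def transpose(m):
    return [list(col) for col in zip(*m)]


def top_singular_triplet(a, iterations=500):
    """Largest singular value and its left/right singular vectors of matrix a."""
    at = transpose(a)
    v = [1.0] * len(at)                # length = number of columns of a
    for _ in range(iterations):
        w = matvec(at, matvec(a, v))   # one power-iteration step on A^T A
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    av = matvec(a, v)
    sigma = math.sqrt(sum(x * x for x in av))  # sigma_1 = ||A v_1||
    u = [x / sigma for x in av]                # u_1 = A v_1 / sigma_1
    return sigma, u, v


def rank_one_approximation(a):
    """sigma_1 * u_1 * v_1^T, the best rank-1 approximation of a."""
    sigma, u, v = top_singular_triplet(a)
    return [[sigma * ui * vj for vj in v] for ui in u]


a = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]   # a rank-1 matrix
print(round(top_singular_triplet(a)[0], 4))  # 8.3666 (= sqrt(70))
```

For a matrix that is already rank 1, as in this example, the rank-1 approximation reproduces it exactly. For real data, keeping the top few triplets yields the latent factors used in recommendation systems.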

64. What factors should be considered when selecting the number of dimensions to reduce in Mahout?

Ans:

Choosing the number of dimensions to reduce in Mahout involves balancing simplicity and information retention. This can be done by examining the explained variance ratio in PCA or the singular values in SVD. Typically, the number of dimensions is chosen so that the retained components explain a high percentage (e.g., 90-95%) of the variance. Domain knowledge and specific problem requirements also guide this decision.
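The explained-variance rule can be written as a short function. This is a hypothetical Python sketch. It takes PCA eigenvalues, or squared singular values in the SVD case, and returns the smallest number of components that reaches the chosen threshold. The eigenvalues in the example are illustrative, not real output.

```python
def choose_num_components(eigenvalues, threshold=0.95):
    """Smallest k whose top-k eigenvalues explain at least `threshold` of the variance.

    For SVD, pass the squared singular values (sigma_i ** 2) instead.
    """
    values = sorted(eigenvalues, reverse=True)
    total = sum(values)
    cumulative = 0.0
    for k, value in enumerate(values, start=1):
        cumulative += value
        if cumulative / total >= threshold:
            return k
    return len(values)


eigs = [4.0, 2.0, 1.0, 0.5, 0.25, 0.25]   # illustrative eigenvalues
print(choose_num_components(eigs, 0.90))  # 4
print(choose_num_components(eigs, 0.95))  # 5
```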

65. What are the benefits of dimensionality reduction in Mahout?

Ans:

Dimensionality reduction in Mahout offers several benefits: it reduces computational costs and storage needs, improves model performance by removing irrelevant features, and enhances data visualization. By focusing on the most significant features, it also helps mitigate the curse of dimensionality, in which adding more features can degrade machine learning performance.

66. What techniques can be used to visualize high-dimensional data after it has been reduced with Mahout?

Ans:

- After dimensionality reduction with Mahout, high-dimensional data can be visualized using 2D or 3D plots.

- Techniques like PCA reduce the data to two or three principal components, which can be plotted using standard visualization tools such as scatter plots.

- This aids in understanding the data structure, identifying clusters, and detecting outliers.

67. What approach does Mahout take to handle sparse data during dimensionality reduction?

Ans:

Mahout handles sparse data in dimensionality reduction using algorithms and data structures optimized for sparsity. Techniques like SVD and PCA are implemented to work efficiently with sparse matrices, ensuring that computations remain scalable and effective, which is crucial for large-scale applications like recommendation systems and text analysis.
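A matrix-vector product shows why sparsity matters, since it is the core operation inside iterative SVD and PCA solvers. If only the nonzero entries are stored, the work scales with the number of nonzeros rather than with rows × columns. Mahout's Java math library provides sparse vector classes, such as `RandomAccessSparseVector`, for this purpose. The Python sketch below uses a hypothetical dict-of-dicts layout to illustrate the idea and does not reproduce Mahout's data structures.

```python
def sparse_matvec(matrix, x):
    """Multiply a sparse matrix {row: {col: value}} by a dense vector x.

    Only stored (nonzero) entries are visited, so the cost is O(nnz).
    """
    return {
        row: sum(value * x[col] for col, value in cols.items())
        for row, cols in matrix.items()
    }


def sparse_transpose_matvec(matrix, y, num_cols):
    """Compute A^T y for the same layout without materialising A^T."""
    result = [0.0] * num_cols
    for row, cols in matrix.items():
        for col, value in cols.items():
            result[col] += value * y.get(row, 0.0)
    return result


ratings = {0: {2: 5.0, 7: 3.0}, 1: {7: 4.0}, 2: {0: 1.0}}   # 3 users, 8 items, 4 nonzeros
y = sparse_matvec(ratings, [1.0] * 8)
print(y)                                         # {0: 8.0, 1: 4.0, 2: 1.0}
print(sparse_transpose_matvec(ratings, y, 8))    # [1.0, 0.0, 40.0, 0.0, 0.0, 0.0, 0.0, 40.0]
```

Chaining the two functions computes AᵀAx, which is exactly the step repeated by power-iteration and Lanczos-style solvers. Neither function ever touches the empty cells of the matrix.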

68. What is the role of feature extraction in Mahout?

Ans:

Feature extraction in Mahout transforms raw data into a set of features suitable for machine learning tasks. This process reduces data dimensionality while retaining important information. Techniques such as PCA and SVD are commonly used, creating new features that capture the underlying data structure and enhance machine learning model performance.

69. What is the process for interpreting the results of dimensionality reduction in Mahout?

Ans:

- Interpreting dimensionality reduction results in Mahout involves understanding the new set of features or dimensions created.

- In PCA, principal components show directions of maximum variance, with the first few capturing the most significant data patterns.

- In SVD, singular values and corresponding vectors reveal data structure and relationships.

- Analyzing these results helps identify important features and understand intrinsic data properties.

70. What are some typical applications of dimensionality reduction using Mahout?

Ans:

Dimensionality reduction with Mahout is commonly applied in preprocessing data for machine learning algorithms, enhancing recommendation system performance, visualizing high-dimensional datasets, and reducing storage and computational costs. It is also used in text analysis to simplify term-document matrices and in bioinformatics for genetic data analysis. These techniques simplify complex datasets, making them more manageable and interpretable for various applications.

71. What strategies can be employed to optimize Mahout’s performance when working with large datasets?

Ans:

Improving Mahout’s performance with large datasets typically combines several strategies:

- Employing distributed computing frameworks like Hadoop and Spark can significantly enhance scalability and efficiency.

- Scaling out the cluster (adding nodes) and tuning configuration settings such as memory allocation can also improve performance.

72. What are some typical performance bottlenecks in Mahout?

Ans:

Common performance bottlenecks in Mahout include inefficient data preprocessing, inadequate memory allocation, and suboptimal configuration settings. Network latency and disk I/O can also impede performance, especially with large distributed datasets. Inefficient algorithms or similarity measures that do not scale well with data size can lead to excessive computation time. Ensuring proper data partitioning and distribution helps mitigate these bottlenecks.

73. What methods can be used to parallelize machine learning tasks in Mahout?

Ans:

Parallelizing machine learning tasks in Mahout is achieved through integration with distributed computing frameworks like Hadoop and Spark. These frameworks divide tasks into smaller subtasks, which run concurrently across multiple cluster nodes. Mahout’s algorithms are designed to leverage this parallelism, distributing data and computations to improve efficiency.
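The divide-and-combine pattern these frameworks use can be sketched on a single machine. In this hypothetical Python example, the ratings are split into partitions, and each partition is summarized independently (the map side, with a local combine). The partial sums are then merged (the reduce side) into per-item average ratings. A thread pool stands in for cluster nodes. Because of Python's global interpreter lock, the example shows the structure of the computation rather than a real speedup.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def partition(records, num_partitions):
    """Split records round-robin into roughly equal partitions."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def map_partition(records):
    """Per-partition partial sums and counts for each item (a local combiner)."""
    sums, counts = Counter(), Counter()
    for _user, item, rating in records:
        sums[item] += rating
        counts[item] += 1
    return sums, counts


def merge(left, right):
    """Reduce step: add the partial results of two partitions."""
    sums, counts = Counter(left[0]), Counter(left[1])
    sums.update(right[0])       # update() adds values and keeps non-positive sums
    counts.update(right[1])
    return sums, counts


def average_rating_per_item(records, num_partitions=4):
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(map_partition, partition(records, num_partitions)))
    sums, counts = reduce(merge, partials, (Counter(), Counter()))
    return {item: sums[item] / counts[item] for item in counts}
```

Only the small per-item partial results cross partition boundaries. This is the same reason combiners reduce network traffic in MapReduce jobs.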

74. What role does caching play in enhancing Mahout’s performance?

Ans:

- Caching is vital for improving Mahout’s performance by storing frequently accessed data in memory, reducing the need for repetitive disk I/O operations.

- This proves particularly useful for iterative algorithms where the same data is accessed multiple times.

- By caching intermediate results and commonly used datasets, Mahout significantly reduces computation time and enhances overall efficiency.
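The effect of caching on an iterative job can be illustrated with memoization. In this hypothetical sketch, `load_partition` simulates an expensive disk read. Wrapping it in `functools.lru_cache` means that the loop reads each partition once and then reuses the in-memory copy in every later iteration. This mirrors the idea of keeping a dataset cached between iterations on a cluster. The names and the toy update loop are assumptions and are not Mahout code.

```python
from functools import lru_cache

DISK_READS = {"count": 0}   # instrumentation: how often the "disk" is hit


@lru_cache(maxsize=None)
def load_partition(partition_id):
    """Stand-in for an expensive HDFS/disk read; returns an immutable tuple."""
    DISK_READS["count"] += 1
    return tuple(float(partition_id * 10 + i) for i in range(10))


def iterative_job(num_partitions=4, iterations=20):
    """Toy iterative algorithm that rereads the same data every iteration."""
    estimate = 0.0
    for _ in range(iterations):
        values = [v for p in range(num_partitions) for v in load_partition(p)]
        # Move the estimate halfway toward the data mean on each iteration.
        estimate += 0.5 * (sum(values) / len(values) - estimate)
    return estimate


result = iterative_job()
print(round(result, 3), DISK_READS["count"])  # 19.5 4
```

Without the cache, the same 20 iterations would perform 80 reads instead of 4.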

75. What methods can be used to monitor Mahout’s performance on a Hadoop cluster?

Ans:

Monitoring Mahout’s performance on a Hadoop cluster involves using tools like Hadoop’s built-in monitoring interfaces, such as ResourceManager and JobHistoryServer. These tools track resource usage, job progress, and cluster health. Additional tools like Ganglia, Nagios, or Cloudera Manager provide comprehensive monitoring, alerting, and visualization of cluster performance metrics. Regularly reviewing logs and performance reports helps identify bottlenecks, resource contention, and other issues affecting performance.
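Besides its web UI, the YARN ResourceManager exposes cluster metrics through its REST API at `/ws/v1/cluster/metrics`. The following is a minimal Python sketch that reads this endpoint and summarizes memory pressure and queued applications. The ResourceManager address and the 90% alert threshold are assumptions for illustration.

```python
import json
from urllib.request import urlopen


def fetch_cluster_metrics(rm_address="http://localhost:8088"):
    """Read YARN ResourceManager cluster metrics (requires a reachable RM; address assumed)."""
    with urlopen(f"{rm_address}/ws/v1/cluster/metrics", timeout=10) as response:
        return json.load(response)["clusterMetrics"]


def summarize(metrics, memory_alert=0.9):
    """Turn raw metrics into a short health summary (alert threshold is an assumption)."""
    used = metrics["allocatedMB"] / metrics["totalMB"] if metrics["totalMB"] else 0.0
    return {
        "memory_used_fraction": round(used, 2),
        "apps_running": metrics["appsRunning"],
        "apps_pending": metrics["appsPending"],
        "unhealthy_nodes": metrics.get("unhealthyNodes", 0),
        "memory_alert": used >= memory_alert,
    }
```

A scheduled job could call `summarize(fetch_cluster_metrics(...))` while a Mahout job runs. A steadily growing `apps_pending` count alongside high memory use is a typical sign of resource contention.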

76. What are some recommended practices for tuning Mahout’s algorithms for improved performance?

Ans:

Best practices for tuning Mahout’s algorithms include selecting suitable algorithms and similarity measures based on specific data and problem requirements. Configuring the number of map and reduce tasks to match cluster capacity, and optimizing memory allocation settings, are crucial. Preprocessing data so that it is clean and well-structured also enhances performance. Additionally, iterative testing, parameter tuning, and adjustments based on performance metrics help achieve optimal results.

77. What strategies does Mahout use to manage memory for large-scale machine learning tasks?

Ans:

- Mahout manages memory for large-scale machine learning tasks by leveraging distributed computing frameworks like Hadoop and Spark, which handle memory across cluster nodes.

- It uses in-memory data structures and caching to optimize performance, ensuring frequently accessed data is stored in memory.

- Configuring memory allocation settings appropriately, and applying techniques such as data partitioning and parallel processing, help keep memory usage under control.

78. What methods can be used to profile and debug Mahout’s code to identify and resolve performance issues?

Ans:

Profiling and debugging Mahout’s code for performance issues involves using tools like YourKit, VisualVM, or JProfiler to identify bottlenecks and inefficiencies. These tools offer insights into CPU and memory usage, highlighting hotspots and potential performance issues. Reviewing logs and tracing execution flow can help pinpoint specific areas causing slowdowns.

79. What strategies can be employed to optimize data preprocessing in Mahout?

Ans:

Optimizing data preprocessing in Mahout involves several strategies, including cleaning and normalizing data to ensure consistency and quality. Efficiently handling missing values and outliers is crucial. Utilizing distributed processing frameworks like Hadoop and Spark for preprocessing large datasets significantly improves performance. Implementing feature selection and dimensionality reduction techniques to reduce data size without losing essential information also helps.
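The cleaning steps above can be sketched for a single numeric feature column. This hypothetical Python example shows one possible sequence: impute missing values with the median, clip outliers, then standardize to z-scores. On a cluster, the same per-column statistics would be computed in a distributed pass. The 3-sigma clipping bound is an assumption.

```python
import statistics


def preprocess_column(values, clip_sigma=3.0):
    """Clean one numeric feature column (hypothetical helper).

    1. Replace missing values (None) with the median of observed values.
    2. Clip outliers to within clip_sigma standard deviations of the mean.
    3. Standardise to zero mean and unit variance (z-scores).
    """
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    filled = [median if v is None else v for v in values]

    mean = statistics.fmean(filled)
    std = statistics.pstdev(filled)
    low, high = mean - clip_sigma * std, mean + clip_sigma * std
    clipped = [min(max(v, low), high) for v in filled]

    mean = statistics.fmean(clipped)
    std = statistics.pstdev(clipped) or 1.0    # avoid dividing by zero for constant columns
    return [(v - mean) / std for v in clipped]


print(preprocess_column([1.0, None, 3.0, 5.0]))
```

The median is used for imputation because, unlike the mean, it is not pulled toward the outliers that are clipped in the next step.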

80. What strategies can be implemented to ensure that Mahout’s algorithms scale effectively in a production environment?

Ans:

- Ensuring Mahout’s algorithms scale effectively in a production environment requires leveraging distributed computing frameworks like Hadoop and Spark for large-scale data processing.

- Properly configuring the cluster, including memory allocation, node count, and task distribution, is essential.

- Implementing efficient data partitioning and caching strategies, along with selecting scalable algorithms, ensures the system can handle increasing data volumes and workloads effectively.

81. How does Mahout integrate with Apache Spark?

Ans:

Mahout integrates with Apache Spark and provides its own Spark shell for interactive, distributed machine-learning work. On Spark, Mahout’s distributed matrices are backed by Spark’s resilient distributed datasets (RDDs), so its algorithms can take advantage of Spark’s parallel processing capabilities. Users can incorporate Mahout’s functionality within Spark applications by calling Mahout’s APIs directly alongside their own Spark code.

82. What is the Mahout Samsara DSL, and how is it utilized?

Ans:

The Mahout Samsara DSL (Domain-Specific Language) is a Scala-based, R-like language for distributed linear algebra that runs primarily on Apache Spark. Its purpose is to simplify the development of distributed machine-learning workflows. Users express algorithms as matrix and vector operations in a declarative syntax, which streamlines the definition of complex data processing pipelines. The DSL abstracts away the intricacies of distributed computing, allowing users to focus on algorithm design and data analysis.
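For example, the Samsara expression `drmA.t %*% drmA` computes the Gram matrix AᵀA of a distributed row matrix. Conceptually, each partition of rows contributes a small d×d partial product, and the partial products are summed. The pure-Python sketch below mirrors that decomposition, AᵀA = Σᵢ aᵢaᵢᵀ over the rows aᵢ. The function names are hypothetical and are not Mahout's API.

```python
def partial_gram(rows):
    """Sum of outer products a_i a_i^T for the rows held by one partition."""
    d = len(rows[0])
    g = [[0.0] * d for _ in range(d)]
    for a in rows:
        for i in range(d):
            for j in range(d):
                g[i][j] += a[i] * a[j]
    return g


def add_matrices(x, y):
    return [[p + q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]


def distributed_gram(partitions):
    """A^T A computed as the sum of per-partition partial Gram matrices."""
    partials = [partial_gram(p) for p in partitions if p]   # "map" over partitions
    result = partials[0]
    for g in partials[1:]:                                   # "reduce" by summation
        result = add_matrices(result, g)
    return result


parts = [[[1.0, 2.0]], [[3.0, 4.0], [5.0, 6.0]]]   # A = [[1, 2], [3, 4], [5, 6]] in 2 partitions
print(distributed_gram(parts))  # [[35.0, 44.0], [44.0, 56.0]]
```

In the DSL, this whole computation is a one-line expression, and the mapping onto partitions is left to the engine.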

83. What steps can be taken to develop custom algorithms in Mahout?

Ans:

- Developing custom algorithms in Mahout involves extending its existing implementations or creating entirely new ones.

- Users can leverage Mahout’s extensible architecture and APIs to craft custom algorithms tailored to their specific needs.

- This typically entails defining the algorithm’s logic, data preprocessing steps, and parameter tuning strategies.

84. How does Mahout support online learning algorithms?

Ans:

Mahout supports online learning through models trained with stochastic gradient descent (SGD), such as logistic regression, which update their parameters incrementally as new data arrives. This allows machine learning models to adapt continuously to dynamic datasets, enabling real-time decision-making and personalized recommendations in applications such as online advertising and recommender systems.
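Mahout's older Java API included SGD-based classifiers such as `OnlineLogisticRegression`. The following pure-Python sketch shows the underlying idea rather than that class. It is a logistic-regression model whose weights are adjusted after every single example, so it can keep learning as new data streams in. The class name `OnlineLogit`, the learning rate, and the toy stream are assumptions.

```python
import math


class OnlineLogit:
    """Hypothetical binary logistic regression trained one example at a time with SGD."""

    def __init__(self, num_features, learning_rate=0.1):
        self.weights = [0.0] * num_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        # Numerically stable logistic function.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def update(self, x, label):
        """One SGD step on the log-loss for a single (x, label) pair, label in {0, 1}."""
        error = self.predict_proba(x) - label   # gradient of the log-loss w.r.t. z
        self.weights = [w - self.learning_rate * error * xi
                        for w, xi in zip(self.weights, x)]
        self.bias -= self.learning_rate * error


model = OnlineLogit(num_features=2, learning_rate=0.5)
stream = [([1.0, 0.0], 1), ([0.0, 1.0], 0)] * 200   # examples arriving over time
for features, label in stream:
    model.update(features, label)                     # the model adapts after each example
```

Because each update touches only one example, the model never has to hold the full dataset in memory. This is what makes SGD suitable for streaming and online settings.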

85. What advantages does Mahout gain from its integration with Apache Flink?

Ans:

The integration of Mahout with Apache Flink offers numerous benefits, including enhanced scalability, fault tolerance, and support for complex event processing. Flink’s capabilities in stream processing complement Mahout’s distributed machine learning algorithms, enabling real-time analytics and decision-making on large-scale streaming data. Moreover, Flink’s efficient memory management and support for iterative processing make it well-suited for running Mahout’s iterative algorithms, thereby improving performance and resource utilization.

86. How can Mahout’s functionality be extended using third-party libraries?

Ans:

Extending Mahout’s functionality with third-party libraries involves integrating external tools and libraries into Mahout’s ecosystem to enhance its capabilities. This includes incorporating specialized algorithms, data processing tools, or visualization libraries that complement Mahout’s existing functionalities. By leveraging Mahout’s interoperability and extensibility features, users can seamlessly integrate third-party libraries to address specific use cases or extend its capabilities beyond its core functionalities.

87. What methods does Mahout use to manage distributed computing tasks?

Ans:

- Mahout manages distributed computing tasks by integrating with frameworks such as Apache Hadoop and Apache Spark.

- It partitions data across multiple nodes in a cluster and distributes computations in parallel, leveraging the scalability and fault tolerance features of these frameworks.

- Mahout’s algorithms are designed to operate efficiently in distributed environments, enabling users to process large-scale datasets and execute complex machine-learning tasks across clusters of machines.

88. What are some practical applications of Mahout in real-world scenarios?

Ans:

Mahout finds application in various real-world scenarios, including recommendation systems, fraud detection, text mining, and customer segmentation. For instance, in e-commerce, Mahout’s recommendation algorithms power personalized product suggestions, enhancing user engagement and sales. In finance, Mahout aids in detecting fraudulent transactions and identifying patterns in financial data.

89. How can individuals contribute to the development of Apache Mahout?

Ans:

Contributing to Apache Mahout’s development involves participating in community discussions, reporting bugs, and submitting patches or code contributions. Users can contribute to Mahout’s codebase by implementing new features, fixing bugs, or improving documentation. Engaging with the Mahout community through mailing lists, forums, and collaborative platforms like GitHub fosters collaboration and knowledge sharing, enabling collective contributions to the project’s growth and evolution.

90. What are the upcoming directions and plans for Apache Mahout?

Ans:

- The future directions and plans for Apache Mahout include enhancing scalability, performance, and usability through integration with emerging technologies and frameworks.

- This may involve further optimizations for distributed computing environments, support for advanced machine learning techniques, and improved interoperability with other Apache projects and ecosystem tools.