Oracle Data Warehouse Administration Console (DAC) is a metadata-driven management tool used with Oracle Business Intelligence Enterprise Edition (OBIEE) and Oracle Business Intelligence Applications (OBIA). It supports the design, execution, and monitoring of ETL processes that load data into the Oracle BI Applications data warehouse, with data integration tasks defined and managed as metadata.

1. What is Oracle DAC?

Ans:

Oracle Data Warehouse Administration Console (DAC) is a centralized console from Oracle Corporation for designing, configuring, scheduling, executing, and monitoring the ETL (Extract, Transform, Load) processes that populate the Oracle BI Applications data warehouse. DAC does not move data itself: it stores metadata about tasks, subject areas, and execution plans in its repository and orchestrates an ETL engine, typically Informatica PowerCenter, to run the actual mappings. It should not be confused with Oracle Data Integrator (ODI), a separate general-purpose data integration tool built around declarative design and knowledge modules.

2. List the key features of Oracle DAC.

Ans:

- Metadata-Driven Design of Subject Areas and Execution Plans

- Automatic Task Ordering Based on Dependencies

- Full and Incremental Load Management

- Parallel Task Execution

- Restart from the Point of Failure

- Scheduling and Monitoring of ETL Runs

3. What is the purpose of the DAC repository?

Ans:

The DAC repository is the centralized metadata store of Oracle DAC (Data Warehouse Administration Console). It holds the metadata that drives ETL (Extract, Transform, Load) processing and acts as a catalog of data sources, tables, tasks, subject areas, execution plans, and execution details.

4. How does Oracle DAC handle data extraction?

Ans:

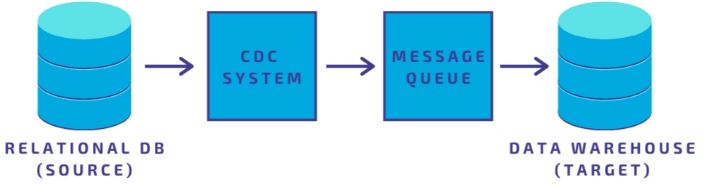

Oracle DAC does not extract data itself; it orchestrates the extraction tasks that an ETL engine such as Informatica PowerCenter runs against the source systems. Two extraction modes matter most. In a full extraction, all source data is extracted, which suits initial loads. In an incremental extraction, only data that is new or modified since the last load is extracted, which minimizes processing time; change data capture (CDC) is a refinement of this approach. DAC tracks refresh dates for source and target tables and uses them to decide whether a task runs in full or incremental mode.
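
The difference between a full and an incremental extract can be sketched in a few lines of Python. The row layout, the `last_update` field, and the `extract` helper are hypothetical and only illustrate the filtering idea; they are not DAC or ETL-engine internals.

```python
from datetime import datetime
from typing import Optional

# Hypothetical source rows; `last_update` stands in for a source audit column.
SOURCE_ROWS = [
    {"id": 1, "last_update": datetime(2024, 1, 1)},
    {"id": 2, "last_update": datetime(2024, 1, 5)},
    {"id": 3, "last_update": datetime(2024, 1, 9)},
]

def extract(rows, last_refresh: Optional[datetime]):
    """Return all rows for a full load, or only rows changed since last_refresh."""
    if last_refresh is None:          # no refresh date recorded -> full load
        return list(rows)
    return [r for r in rows if r["last_update"] > last_refresh]

full = extract(SOURCE_ROWS, None)
incremental = extract(SOURCE_ROWS, datetime(2024, 1, 4))
print(len(full), [r["id"] for r in incremental])
```

Passing `None` as the refresh date models a first-time load; any later run passes the date of the previous successful load.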

5. Explain the concept of Execution Plans in Oracle DAC.

Ans:

- In Oracle DAC, Execution Plans represent the orchestrated sequence of tasks that define the flow of Extract, Transform, and Load (ETL) processes within the data integration framework.

- These plans provide a structured approach to managing the execution of various ETL tasks, ensuring that they are performed in a logical order.

6. What are the components of the Oracle DAC architecture?

Ans:

The core of the Oracle DAC architecture consists of the DAC Client, the DAC Server, and the DAC Repository. The DAC Client is the user interface for designing, configuring, running, and monitoring ETL; the DAC Server schedules and executes the work; and the DAC Repository stores the metadata both rely on. The DAC Server in turn calls an ETL engine, typically Informatica PowerCenter, to run the extraction, transformation, and loading (ETL) workflows. Around this core, the architecture is often described in terms of functional parts such as the DAC Scheduler, the Execution Plan Generator, the DAC Metadata Navigator, and the DAC Metadata Loader.

7. How does Oracle DAC handle error handling and logging?

Ans:

- Oracle DAC employs robust mechanisms for error handling and logging, ensuring transparency and facilitating effective troubleshooting during ETL processes.

- When errors occur, DAC captures detailed information in error tables, recording specifics such as error codes, descriptions, and timestamps.

- The error tables act as a centralized repository, allowing administrators to identify and analyze issues quickly.
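
As a rough illustration of recording error codes, descriptions, and timestamps in a central table, the sketch below uses an in-memory SQLite database. The `etl_errors` table, its columns, and the `run_task` helper are assumptions made for this example and do not reflect DAC's actual repository schema.

```python
import sqlite3
from datetime import datetime, timezone

conn = sqlite3.connect(":memory:")
# Hypothetical error table; not DAC's real schema.
conn.execute(
    "CREATE TABLE etl_errors (task TEXT, error_code TEXT, description TEXT, logged_at TEXT)"
)

def run_task(name, func):
    """Run a task; on failure, record the error details and return False."""
    try:
        func()
        return True
    except Exception as exc:
        conn.execute(
            "INSERT INTO etl_errors VALUES (?, ?, ?, ?)",
            (name, type(exc).__name__, str(exc), datetime.now(timezone.utc).isoformat()),
        )
        return False

def failing_task():
    raise ValueError("bad date format in row 17")

ok = run_task("load_orders", failing_task)
errors = conn.execute("SELECT task, error_code, description FROM etl_errors").fetchall()
print(ok, errors)
```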

8. What are Oracle DAC’s integration capabilities?

Ans:

Oracle DAC's integration capabilities center on Oracle BI Applications: it connects to the source systems and the target data warehouse defined in its metadata and coordinates the ETL engine that extracts, transforms, and loads (ETL) the data. Broader connectivity, with adapters for a wide range of data sources and support for both batch and real-time integration, is the domain of Oracle Data Integrator (ODI), which offers more flexibility for varied data processing needs.

9. What role does the DAC Scheduler play in ETL procedures?

Ans:

- In Oracle DAC, the DAC Scheduler is essential for coordinating and automating ETL (Extract, Transform, Load) operations.

- It is the primary component responsible for creating, managing, and running scheduled jobs, so that ETL processes complete on time.

- The Scheduler lets administrators define flexible schedules based on time intervals, dependencies, or external events, which supports efficient use of resources.

10. Elaborate on the support for change data capture (CDC) in Oracle DAC.

Ans:

In Oracle data warehouse deployments, Change Data Capture (CDC) is implemented in the ETL layer, often with Oracle Data Integrator (ODI), while the Oracle Data Warehouse Administration Console (DAC) acts as the metadata-driven automation tool around it. The ETL mappings (ODI interfaces, in ODI-based deployments) detect and record changes in the source data, and DAC controls when those change-capture tasks run and in what order.

11. Describe Execution Plans in Oracle DAC and their significance in managing ETL workflows.

Ans:

Definition: Execution Plans in Oracle DAC represent the high-level workflow for a set of related ETL tasks.

Logical Workflow: They define the logical sequence of tasks to be executed during an ETL process.

Task Dependencies: Execution Plans allow users to specify dependencies between tasks, ensuring that certain tasks are completed before others begin.

Reusability: Execution Plans can be reused across different scenarios, promoting a modular and reusable approach to ETL design.
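
Task dependencies of this kind amount to a directed acyclic graph, and a valid run order is a topological sort of that graph. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the task names are hypothetical, and this is an illustration of the ordering idea rather than DAC's own algorithm.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: each key maps to the set of tasks it depends on.
dependencies = {
    "load_fact_sales": {"load_dim_customer", "load_dim_product"},
    "load_dim_customer": {"extract_customers"},
    "load_dim_product": {"extract_products"},
    "extract_customers": set(),
    "extract_products": set(),
}

def execution_order(deps):
    """Return one valid order in which every task runs after its prerequisites."""
    return list(TopologicalSorter(deps).static_order())

order = execution_order(dependencies)
print(order)
```

`TopologicalSorter` raises `graphlib.CycleError` if the dependencies are circular, which is the situation a dependency-aware planner has to reject.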

12. Discuss the DAC Scheduler in managing ETL job scheduling and execution.

Ans:

The DAC Scheduler plays a pivotal role in orchestrating ETL job scheduling and execution within Oracle DAC. It provides a centralized platform for automating and managing the timing of ETL tasks, supporting flexible scheduling options such as time-based, event-based, and dependency-based schedules. With robust dependency management, it ensures tasks are executed in the correct order, optimizing the overall efficiency of the ETL processes.

13. Explain the importance of metadata-driven development in Oracle DAC.

Ans:

Metadata-driven development is central to Oracle Data Warehouse Administration Console (DAC). DAC relies heavily on metadata, which includes information about data structures, transformations, and dependencies. This approach enhances development efficiency by providing a centralized repository for storing and managing metadata, allowing developers to design, implement, and maintain data integration processes with a clear understanding of the underlying data.

14. How does Oracle DAC contribute to ensuring data quality?

Ans:

Data Profiling and Cleansing: ODI includes robust data profiling capabilities, allowing users to analyze data quality by assessing completeness, accuracy, and consistency.

Data Validation and Error Handling: ODI incorporates mechanisms for data validation through the use of constraints, checks, and rules during data transformation.

Metadata-Driven Development: ODI follows a metadata-driven development approach, centralizing the definition of data quality rules and standards.

Data Quality Auditing and Monitoring: ODI provides auditing and monitoring capabilities to track the execution of ETL jobs and detect anomalies.

15. Where is Oracle DAC more suitable than other ETL tools?

Ans:

- Oracle DAC (Data Warehouse Administration Console) is particularly well suited to scenarios where tight integration with Oracle databases and applications is a priority.

- One notable example is organizations heavily invested in Oracle technologies, such as Oracle E-Business Suite or Oracle Database, where Oracle DAC can integrate seamlessly with these systems.

16. Differentiate Oracle DAC from Oracle ODI.

Ans:

| Aspect | Oracle DAC | Oracle ODI |
| --- | --- | --- |
| Purpose | Metadata-driven ETL for Oracle BI Apps | Comprehensive data integration platform |
| Integration Scope | Primarily for Oracle BI Applications | Supports both structured and unstructured data |
| Extensibility | May have limitations in handling various data sources | Highly extensible, adaptable to diverse data scenarios |
| Typical Use Case | Orchestrating ETL for Oracle BI data warehouse | Designing end-to-end ETL processes for various data sources |

17. Discuss the security features in Oracle DAC.

Ans:

- Oracle Data Warehouse Administration Console (DAC) provides role-based security features.

- DAC offers role-based access control, allowing administrators to define roles with specific privileges.

- Users are assigned to these roles, ensuring fine-grained control over access to DAC functionalities.
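
The role-based model described above can be sketched as two lookups: users map to roles, and roles map to privileges. The role names, privilege names, and users below are hypothetical and chosen only for illustration; they are not DAC's built-in roles.

```python
# Hypothetical roles and privileges, for illustration only.
ROLE_PRIVILEGES = {
    "administrator": {"design", "execute", "monitor", "manage_users"},
    "developer": {"design", "execute", "monitor"},
    "operator": {"execute", "monitor"},
}
USER_ROLES = {"alice": {"administrator"}, "bob": {"operator"}}

def is_allowed(user, privilege):
    """A user holds a privilege if any of the user's roles grants it."""
    return any(privilege in ROLE_PRIVILEGES[role] for role in USER_ROLES.get(user, ()))

print(is_allowed("bob", "execute"), is_allowed("bob", "design"))
```

Because privileges attach to roles, changing what operators may do is a single edit to the role rather than an edit per user.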

18. What types of transformations does Oracle DAC support in the ETL process?

Ans:

Filter Transformation: This transformation allows the extraction of particular rows from a source dataset based on defined criteria.

Expression Transformation: Expression transformations are used to perform calculations or manipulate data values during the transformation process.

Aggregator Transformation: Aggregator transformations are crucial for performing aggregate calculations such as sum, average, count, and others on groups of data.

Join Transformation: Join transformations are used to combine data from two or more sources based on common keys or conditions.
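
To make the four transformation types concrete, the sketch below applies each of them in plain Python to a small, made-up order set. The data, field names, and the order of the steps are assumptions for illustration; in a real deployment these steps would be built in the ETL tool rather than hand-coded.

```python
from collections import defaultdict

# Hypothetical source data.
orders = [
    {"order_id": 1, "cust_id": 10, "qty": 2, "price": 5.0, "status": "OPEN"},
    {"order_id": 2, "cust_id": 10, "qty": 1, "price": 20.0, "status": "CLOSED"},
    {"order_id": 3, "cust_id": 11, "qty": 4, "price": 2.5, "status": "CLOSED"},
]
customers = {10: "Acme", 11: "Globex"}

# Filter: keep only rows that match a condition.
closed = [o for o in orders if o["status"] == "CLOSED"]
# Expression: derive a new value per row.
with_amount = [{**o, "amount": o["qty"] * o["price"]} for o in closed]
# Aggregator: sum the derived amount per customer.
totals = defaultdict(float)
for o in with_amount:
    totals[o["cust_id"]] += o["amount"]
# Join: look up the customer name by the common key.
report = {customers[cid]: total for cid, total in totals.items()}
print(report)
```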

19. How does Oracle DAC handle slowly changing dimensions (SCDs)?

Ans:

Slowly changing dimensions (SCDs) are handled in the ETL tool rather than in Oracle Data Warehouse Administration Console (DAC) itself. Oracle Data Integrator (ODI), for example, provides robust support for SCDs through its built-in knowledge modules. These modules facilitate the implementation of different SCD types, such as Type 1 (overwrite), Type 2 (historical), and Type 3 (add new attribute).
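
The Type 2 (historical) behaviour can be sketched as "expire the current row, then append a new version". The `apply_scd2` helper and the row layout (`key`, `attrs`, `start`, `end`) are hypothetical; this is a minimal illustration of the pattern, not the logic of any ODI knowledge module.

```python
from datetime import date

def apply_scd2(dimension, key, new_attrs, effective):
    """Close the current row for `key` (if it changed) and append a new version."""
    current = next((r for r in dimension if r["key"] == key and r["end"] is None), None)
    if current is not None:
        if current["attrs"] == new_attrs:
            return                      # nothing changed, keep the current version
        current["end"] = effective      # expire the old version
    dimension.append({"key": key, "attrs": new_attrs, "start": effective, "end": None})

dim_customer = []
apply_scd2(dim_customer, "C1", {"city": "Austin"}, date(2023, 1, 1))
apply_scd2(dim_customer, "C1", {"city": "Denver"}, date(2024, 6, 1))
apply_scd2(dim_customer, "C1", {"city": "Denver"}, date(2024, 7, 1))  # no change
print(len(dim_customer))
```

A Type 1 change would instead overwrite `attrs` on the existing row, leaving no history.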

20. Explain the role of Source System Adapters in Oracle DAC.

Ans:

In Oracle Data Warehouse Administration Console (DAC), Source System Adapters play a pivotal role in facilitating the extraction of data from diverse source systems. These adapters serve as connectors between DAC and various source systems, enabling seamless data integration processes.

21. How does Oracle DAC handle dependencies between tasks in Execution Plans?

Ans:

- In Oracle DAC, Execution Plans are sequences of tasks that define the workflow for data integration processes.

- Dependencies between tasks are managed through the definition of preconditions and postconditions.

- Preconditions specify conditions that must be met before a task can be executed, ensuring a logical flow in the execution sequence.

22. What are Aggregate Navigation Rules in Oracle DAC?

Ans:

Aggregate Navigation Rules in DAC allow developers to define relationships between detailed and aggregate tables based on specific conditions or criteria. These rules guide the query execution engine to navigate through the available aggregates, selecting the most suitable ones to fulfill a given query. This optimization reduces the need to scan the entire dataset, minimizing query response times and improving the overall efficiency of data retrieval operations.
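
The selection step, picking the most suitable aggregate for a query, can be illustrated as "choose the smallest table that still exposes every dimension the query needs". The table names, dimension sets, and row counts below are invented for the example, and the `pick_table` helper is a simplification, not the actual rule engine.

```python
# Hypothetical catalog: table name -> (dimensions available, approximate row count).
TABLES = {
    "sales_detail": ({"day", "month", "product", "store"}, 10_000_000),
    "sales_by_month_product": ({"month", "product"}, 50_000),
    "sales_by_month": ({"month"}, 120),
}

def pick_table(required_dims):
    """Pick the smallest table that exposes every dimension the query needs."""
    candidates = [
        (rows, name) for name, (dims, rows) in TABLES.items() if required_dims <= dims
    ]
    if not candidates:
        raise LookupError(f"no table covers {sorted(required_dims)}")
    return min(candidates)[1]

print(pick_table({"month"}), pick_table({"month", "store"}))
```

A query by month alone is answered from the 120-row aggregate, while a query that also needs the store falls back to the detail table.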

23. Discuss the advantages of using Oracle DAC.

Ans:

- Seamless EBS Integration

- Metadata Management

- Workflow Automation

- Performance Optimization

- Built-in Error Handling and Logging

24. What is the Execution Unit in Oracle DAC?

Ans:

In Oracle Data Warehouse Administration Console (DAC), an Execution Unit is a logical grouping of tasks or processes that are executed together during the ETL (Extract, Transform, Load) process. It serves as a container for organizing and managing the execution of related tasks within a data warehouse job.

25. Explain Conformed Dimensions in Oracle DAC.

Ans:

- In Oracle Data Warehouse Administration Console (DAC), conformed dimensions play a crucial role in maintaining consistency across data warehouse systems.

- Conformed dimensions are shared dimensions that are consistent and standardized across multiple data marts or subject areas within the same data warehouse.

26. Discuss the role of the DAC Console in Oracle DAC.

Ans:

The DAC Console is the user interface of Oracle DAC (Data Warehouse Administration Console) and plays a crucial role in managing and administering data warehouse processes. It serves as a centralized hub for designing, monitoring, and managing the entire data integration and ETL (Extract, Transform, Load) process.

27. How does Oracle DAC contribute to data quality assurance?

Ans:

In a deployment built around Oracle DAC, much of the data quality work is done in the ETL tool, and Oracle Data Integrator (ODI) plays a significant role here. First, ODI supports data profiling, allowing users to analyze and understand the quality of data within source systems. This includes identifying anomalies, inconsistencies, and potential issues that may affect data integrity. Second, ODI offers data cleansing capabilities, enabling the transformation and standardization of data to meet predefined quality standards.

28. Explain Conformed Dimensions in Oracle DAC.

Ans:

- Conformed Dimensions are dimension tables shared across two or more subject areas or data marts within a data warehouse.

- These dimensions maintain a consistent structure and content, ensuring uniformity and integrity across various business areas.

29. How does Oracle DAC handle data transformation?

Ans:

In Oracle data warehouse setups, data transformation is performed by the ETL tool rather than by Oracle DAC (Data Warehouse Administration Console) itself, and Oracle Data Integrator (ODI) is frequently used for this purpose. DAC is also an older technology that Oracle no longer actively develops or supports.

30. Explain Aggregate Navigation Rules in Oracle DAC.

Ans:

Aggregate Navigation Rules play a crucial role in optimizing query performance within a data warehouse environment. These rules explain how aggregated data is navigated and accessed by the DAC server during the extraction, transformation, and loading (ETL) processes. By specifying the path through which the DAC server should access aggregated data, these rules help streamline data retrieval and enhance overall query efficiency.

31. How does Oracle DAC handle data splitting?

Ans:

- Oracle DAC achieves data splitting in Oracle BI Applications by employing execution plans, allowing tasks to run in parallel.

- Users configure task properties to control parallelism, specifying the number of threads or processes.

- Load plans coordinate the execution of multiple plans, providing overall process control.

- DAC supports various data partitioning strategies, enhancing efficiency in distributing data.

32. What are Aggregate Persistence Rules in Oracle DAC?

Ans:

In Oracle Data Warehouse Administration Console (DAC), Aggregate Persistence Rules play a crucial role in managing and optimizing the performance of data warehouse processes. These rules define how aggregate tables, which store pre-aggregated data for faster query response, are maintained and refreshed within the data warehouse.

33. Explain the role of the DAC Metadata tables in Oracle DAC.

Ans:

- In the Data Warehouse Administration Console (DAC), the DAC Metadata tables play a crucial role in managing and storing metadata related to data integration processes.

- These tables store critical details about the execution of ETL (Extract, Transform, Load) processes orchestrated by DAC.

- Information such as workflow execution logs, session details, and dependencies between different tasks is stored in these tables.

34. Discuss the concept of Slowly Changing Fact (SCF) tables in Oracle DAC.

Ans:

Slowly Changing Fact (SCF) tables refer to the handling of changes in factual data over time. SCF tables are designed to manage historical changes to numeric measures, allowing for a comprehensive analysis of data trends and evolution. These tables typically include effective date columns to capture the validity period of each fact, allowing users to track changes over time.
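
Effective-date columns make "as of" queries possible: given a date, return the measure that was valid then. The sketch below assumes a hypothetical layout with a `start` date and an exclusive `end` date (`None` for the current version); the names and data are illustrative only.

```python
from datetime import date

# Hypothetical fact versions: each row is valid from `start` up to (not including) `end`.
price_facts = [
    {"product": "P1", "price": 10.0, "start": date(2024, 1, 1), "end": date(2024, 4, 1)},
    {"product": "P1", "price": 12.0, "start": date(2024, 4, 1), "end": None},
]

def value_as_of(facts, product, as_of):
    """Return the measure that was valid for `product` on the date `as_of`."""
    for row in facts:
        if row["product"] != product:
            continue
        if row["start"] <= as_of and (row["end"] is None or as_of < row["end"]):
            return row["price"]
    return None  # no version was valid on that date

print(value_as_of(price_facts, "P1", date(2024, 2, 15)))
```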

35. How does Oracle DAC support the integration of unstructured data sources?

Ans:

Oracle DAC is primarily designed for managing structured data ETL processes in Oracle BI Applications. For integrating unstructured data sources, Oracle provides tools like Oracle Data Integrator (ODI) and Big Data SQL, offering comprehensive solutions for handling both structured and unstructured data within the Oracle ecosystem.

36. What is the DAC Command Line Interface (CLI) in Oracle DAC?

Ans:

- The DAC Command Line Interface (CLI) in Oracle Data Warehouse Administration Console (DAC) is a utility that enables users to interact with DAC functionality through a text-based interface.

- This interface provides a set of commands that users can execute to perform various tasks related to the management and execution of data integration processes.

37. Explain the concept of Aggregate Join Indexes in Oracle DAC.

Ans:

Aggregate Join Indexes in Oracle DAC (Data Warehouse Administration Console) are a mechanism to enhance query performance in data warehouses by precomputing and storing aggregated results of complex join operations. These indexes are designed to accelerate query response times by eliminating the need for repetitive and resource-intensive joins during query execution.

38. How does Oracle DAC handle data lineage?

Ans:

Oracle DAC (Data Warehouse Administration Console) is the ETL (Extract, Transform, Load) administration component used in Oracle Business Intelligence Enterprise Edition (OBIEE) environments that run Oracle BI Applications. By providing a consolidated metadata repository that records how data moves and is modified on its way into the data warehouse, DAC makes data lineage easier to trace.

39. How is the versioning and deployment of ETL code managed by Oracle DAC?

Ans:

With the release of version 12c, Oracle Data Integrator (ODI) took the position of Oracle Data Warehouse Administration Console (DAC) as the recommended ETL tool for Oracle Business Intelligence Enterprise Edition (OBIEE).

ODI integrates with version control systems such as Subversion or Git to handle ETL code versioning. Developers can collaborate, keep track of changes, and revert to earlier versions when necessary. ODI also supports package-based deployment, which makes it possible to promote ETL code from one environment to the next.

40. What does the Oracle DAC security model entail?

Ans:

The Oracle DAC (Data Warehouse Administration Console) security model is designed to restrict access and secure the administration of data warehouse operations. It uses a role-based approach in which rights and privileges are assigned to roles rather than to individual users.

41. How does Oracle DAC handle multi-dimensional data modeling?

Ans:

Oracle Data Integrator (ODI) replaced Oracle Data Warehouse Administration Console (DAC) as the preferred tool for ETL (Extract, Transform, Load) processes in Oracle BI Applications. However, DAC was known for its handling of multi-dimensional data modeling. It provided a metadata-driven approach, allowing users to define and manage multi-dimensional data models through a graphical user interface.

42. Discuss the options available for monitoring ETL processes in Oracle DAC.

Ans:

Execution Logs: Oracle DAC provides detailed execution logs that capture information about each step of the ETL process, aiding in identifying errors or performance bottlenecks.

Error Handling and Notifications: DAC offers robust error handling mechanisms, allowing users to define error thresholds and configure notifications for immediate alerts in case of failures during the ETL process.

Job Scheduling and Dependencies: Monitoring job schedules and dependencies within Oracle DAC ensures that ETL processes are executed in the correct order, minimizing data inconsistencies and failures.

Performance Monitoring: DAC includes tools for monitoring performance metrics such as load times, data volumes, and resource utilization.

43. What is the DAC Metadata Load process in Oracle DAC?

Ans:

The DAC Metadata Load process in Oracle Data Warehouse Administration Console (DAC) is central to managing and integrating metadata for efficient data warehouse operations. This process involves extracting metadata from various source systems, transforming it to align with the data warehouse model, and loading it into the DAC repository.

44. How is Oracle DAC integrated with OBIA?

Ans:

Oracle Data Warehouse Administration Console (DAC) is tightly integrated with Oracle Business Intelligence Applications (OBIA) by providing pre-built ETL processes tailored for OBIA data warehousing. DAC orchestrates the ETL tasks, leveraging OBIA-specific metadata to dynamically generate and execute processes. This integration streamlines data extraction, transformation, and loading, ensuring efficient and automated data flow into the Oracle BI data warehouse.

45. How does Oracle DAC handle change control of ETL configurations?

Ans:

- Change control for ETL (Extract, Transform, Load) logic is typically handled in the ETL tool, such as Oracle Data Integrator (ODI), rather than in the Data Warehouse Administration Console (DAC) itself.

- ODI handles change control and versioning of ETL configurations through its version management and deployment capabilities.

46. Discuss the options available for data cleansing in Oracle DAC.

Ans:

A DAC-based deployment offers several options for data cleansing. First, data profiling tools can analyze source data to identify inconsistencies and anomalies. Second, the transformation capabilities of the ETL tool, such as ODI, allow users to apply data quality rules during the ETL (Extract, Transform, Load) process. Third, data validation checks can be implemented at both source and target levels, ensuring the integrity of the data throughout the integration pipeline.

47. How does Oracle DAC support data archiving and purging?

Ans:

Archiving Strategy: Oracle DAC facilitates data archiving by allowing users to define archiving policies based on business rules.

Data Purging: DAC supports data purging through configurable purging policies, enabling users to specify criteria for removing obsolete data from the data warehouse.

48. Explain the concept of Sessionization in Oracle DAC.

Ans:

In DAC, a session represents a unit of work or a task within an ETL process. Sessions are typically associated with the extraction, transformation, or loading of a specific set of data. Sessionization involves breaking down the overall ETL process into individual sessions based on the logical flow of the data.

49. Discuss the role of the DAC Metadata Navigator in Oracle DAC.

Ans:

Source System Metadata: The DAC Metadata Navigator allows you to manage metadata related to source systems. This includes details about source tables, columns, and the structure of the data you are extracting.

Data Movement Monitoring: The DAC Metadata Navigator provides a means to monitor and manage the flow of data through the ETL processes. This includes tracking the movement of data from source systems to target data warehouses or data marts.

Task Dependencies: The Metadata Navigator helps identify dependencies between different tasks in the ETL process. Understanding these dependencies is crucial for ensuring the correct execution sequence of tasks and avoiding issues related to data consistency.

50. How does Oracle DAC support the integration of external scripts?

Ans:

- Oracle DAC, or Data Warehouse Administration Console, primarily focuses on managing and automating ETL processes for Oracle BI Applications.

- While DAC itself does not directly support the integration of external scripts, it relies on Informatica PowerCenter as its ETL engine.

- Informatica PowerCenter, the underlying ETL tool for DAC, provides extensive support for integrating external scripts and custom code.

51. Discuss the advantages of using Oracle DAC in a real-time data integration.

Ans:

Advantages of using Oracle DAC in a real-time data integration environment include its robust metadata management, which allows for seamless tracking and integration of various data sources. The platform’s ability to automate and streamline ETL (Extract, Transform, Load) processes enhances efficiency and reduces manual intervention. Oracle DAC provides a centralized control and monitoring mechanism, ensuring better governance and visibility into real-time data flows.

52. Explain the concept of Aggregator Pushdown in Oracle DAC.

Ans:

Aggregator Pushdown refers to the optimization technique where the aggregation operations are pushed down to the source database during data integration processes. Instead of performing aggregations within the ETL (Extract, Transform, Load) tool or the target database, DAC leverages the capabilities of the source database to process aggregations directly at the source.
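
The effect of pushing an aggregation down to the database can be shown with Python's built-in `sqlite3` module standing in for the source system. The `sales` table and its data are made up for this example. Both approaches give the same totals; the difference is how many rows cross the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("east", 10.0), ("east", 15.0), ("west", 7.5)],
)

# Without pushdown: pull every row, then aggregate in the ETL layer.
client_side = {}
for region, amount in conn.execute("SELECT region, amount FROM sales"):
    client_side[region] = client_side.get(region, 0.0) + amount

# With pushdown: the source database aggregates and returns one row per group.
pushed_down = dict(
    conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region")
)
print(client_side == pushed_down)
```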

53. Discuss the DAC Plug-in Architecture in Oracle DAC.

Ans:

- The DAC Plug-in Architecture in Oracle Data Warehouse Administration Console (DAC) plays a pivotal role in enhancing the extensibility and flexibility of the ETL (Extract, Transform, Load) process.

- It allows users to seamlessly integrate custom or third-party components into the DAC framework, enabling tailored solutions to meet specific business requirements.

54. Explain the role of the DAC Load Plan in Oracle DAC.

Ans:

ETL Workflow Execution: Load Plans in DAC are used to define and execute end-to-end ETL workflows.

Dependency Management: Load Plans specify the dependencies between different ETL tasks.

Parallel Execution: Load Plans enable the parallel execution of multiple ETL tasks.

55. How does Oracle DAC handle surrogate keys in data warehousing?

Ans:

Surrogate keys are typically generated in the ETL layer rather than by Oracle DAC (Data Warehouse Administration Console) itself, and Oracle Data Integrator (ODI) is commonly used for that data integration work in Oracle's data warehousing solutions. DAC, which is used with Oracle Business Intelligence (OBIEE) deployments, manages the metadata and the ETL processes around it.

56. Explain the concept of “Late Arriving Facts” in Oracle DAC.

Ans:

- “Late Arriving Facts” refers to the handling of delayed or belated data that arrives after the initial data load process has taken place.

- This scenario often occurs in data warehousing when new information is added to source systems at a later time than expected.

- To accommodate such late arrivals, DAC provides mechanisms to identify, capture, and integrate this new data seamlessly into the existing data warehouse structure.

57. In what ways does Oracle DAC facilitate data integration?

Ans:

Connectivity to Cloud Sources: ODI offers technologies and connectors for cloud-based sources, including Oracle Cloud, Amazon Web Services (AWS), Microsoft Azure, and other cloud databases and applications.

Pre-built Knowledge Modules: ODI ships with pre-built Knowledge Modules (KMs) that make it easier to integrate particular platforms and technologies, such as cloud-based sources.

58. Discuss the DAC Aggregate Navigation Wizard in Oracle DAC.

Ans:

Aggregate Navigation Wizard in Oracle DAC plays a crucial role in optimizing data warehouse performance. This tool enables users to define and manage aggregate tables efficiently, helping to enhance query performance and reduce response times. By guiding users through a step-by-step process, the wizard simplifies the creation of aggregate tables, taking into account factors such as dimensions, hierarchies, and summarization levels.

59. How does Oracle DAC handle data consistency and reconciliation?

Ans:

- Oracle DAC (Data Warehouse Administration Console) manages data consistency and reconciliation across multiple data marts in a distributed environment through its centralized metadata repository.

- It maintains a unified view of ETL (Extract, Transform, Load) processes, ensuring consistency in data transformations and mappings across various data marts.

60. Discuss the DAC Metadata Validation process in Oracle DAC.

Ans:

The DAC Metadata Validation process ensures the integrity and consistency of metadata within the data warehouse. This crucial step involves verifying the accuracy of metadata definitions, mappings, and transformations before executing data integration processes. During validation, DAC assesses the compatibility of source and target metadata, confirming that mappings adhere to defined business rules.

61. Explain the DAC Execution Plan Generator in Oracle DAC.

Ans:

The DAC (Data Warehouse Administration Console) Execution Plan Generator in Oracle DAC plays a crucial role in managing the execution of ETL (Extract, Transform, Load) processes within a data warehouse. It is responsible for creating optimized execution plans based on defined business rules, source systems, and target data warehouse structures.

62. Discuss the handling of data type conversions in Oracle DAC to ensure data integrity.

Ans:

Source Data Inspection: Thoroughly analyze source data types and ensure compatibility with target data types in the data warehouse. Identify potential mismatches to mitigate conversion issues.

Precision and Scale Alignment: Maintain precision and scale alignment during conversions to avoid data loss or unexpected rounding. Adjust target data types accordingly to accommodate the source data characteristics.

Error Handling Mechanisms: Implement robust error handling mechanisms to capture and log conversion errors. This helps in identifying and resolving issues promptly, maintaining the overall quality of the data warehouse.
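
The precision and scale check, together with error logging, can be sketched with Python's `decimal` module. The `fits` and `convert` helpers and the `NUMBER(precision, scale)` column model are assumptions for this example; the point is that values which would be rounded or truncated are logged rather than silently loaded.

```python
from decimal import Decimal, InvalidOperation

def fits(value, precision, scale):
    """True if `value` fits a NUMBER(precision, scale)-style column without rounding."""
    if not value.is_finite():
        return False
    _, digits, exponent = value.as_tuple()
    frac_digits = max(-exponent, 0)              # digits after the decimal point
    int_digits = max(len(digits) + exponent, 0)  # digits before the decimal point
    return frac_digits <= scale and int_digits <= precision - scale

def convert(raw, precision, scale, errors):
    """Convert text to Decimal, logging (not hiding) values that would lose data."""
    try:
        value = Decimal(raw)
    except InvalidOperation:
        errors.append((raw, "not a number"))
        return None
    if not fits(value, precision, scale):
        errors.append((raw, f"does not fit NUMBER({precision},{scale})"))
        return None
    return value

errors = []
converted = [convert(raw, 5, 2, errors) for raw in ["123.45", "123.456", "abc"]]
print(converted, errors)
```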

63. Discuss the role of the DAC Recovery process in Oracle DAC.

Ans:

- The recovery process in Oracle DAC plays a crucial role in ensuring data integrity and system resilience.

- It is designed to recover from unexpected failures or disruptions during ETL (Extract, Transform, Load) processes.

- The recovery process helps restore the system to a consistent state by reprocessing failed or interrupted tasks and minimizing data inconsistencies.
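
Restarting a failed run without repeating finished work comes down to tracking a status per task. The sketch below is a minimal illustration with hypothetical task names and a plain dictionary as the status store; a real tool persists this state in its repository.

```python
def run_plan(tasks, status):
    """Run tasks in order, skipping those already completed; stop at first failure."""
    for name, func in tasks:
        if status.get(name) == "COMPLETED":
            continue                      # finished in an earlier run, do not repeat
        try:
            func()
            status[name] = "COMPLETED"
        except Exception:
            status[name] = "FAILED"
            return False
    return True

calls = []
attempts = {"load": 0}

def extract():
    calls.append("extract")

def load():
    attempts["load"] += 1
    if attempts["load"] == 1:
        raise RuntimeError("target unavailable")   # fail only on the first attempt
    calls.append("load")

plan = [("extract", extract), ("load", load)]
status = {}
first = run_plan(plan, status)    # extract completes, load fails
second = run_plan(plan, status)   # restart: extract is skipped, load is retried
print(first, second, calls)
```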

64. What is Oracle DAC Seed Data, and what is its function?

Ans:

When developing and configuring metadata for data warehouse processes, seed data is essential. It provides the necessary data for the execution of ETL (Extract, Transform, Load) procedures and acts as the basis for DAC metadata. Predefined variables and specifications, such as source and target system details, transformation rules, and execution parameters, are included in the Seed Data to make the setup of data warehousing activities easier.

65. How does Oracle DAC support the development of data validation rules?

Ans:

Oracle Data Warehouse Administration Console (DAC) supports the development and management of data validation rules in conjunction with the ETL tool, such as Oracle Data Integrator (ODI). DAC serves as the metadata and execution engine for Oracle Business Intelligence Applications, including pre-built data integration and validation processes.

66. What effect does Oracle DAC’s parallel processing have?

Ans:

By dividing up work across several processors at once, parallel processing in Oracle DAC (Data Warehouse Administration Console) greatly improves ETL (Extract, Transform, Load) speed. This method shortens the total amount of time needed for data extraction and transformation by speeding up data integration procedures.
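
Running independent tasks side by side can be sketched with Python's standard `concurrent.futures` module. The `load_table` function is a stand-in for a real ETL task and the table names are hypothetical; `max_workers` plays the role of a parallelism limit.

```python
from concurrent.futures import ThreadPoolExecutor

def load_table(name):
    """Stand-in for an independent ETL task; returns a label for the finished load."""
    return f"{name}:done"

independent_tasks = ["dim_customer", "dim_product", "dim_date"]

# max_workers caps how many tasks run at once.
with ThreadPoolExecutor(max_workers=2) as pool:
    results = list(pool.map(load_table, independent_tasks))
print(results)
```

Only tasks with no dependency on each other should be submitted together; dependent tasks still have to wait for their prerequisites.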

67. How does Oracle DAC support data partitioning strategies?

Ans:

- Oracle Data Integrator (ODI) is often used for data integration tasks, including Extract, Transform, and Load (ETL) processes.

- Oracle Data Integrator’s (ODI) support for data partitioning strategies is primarily through its ability to leverage parallel processing and data distribution methods.

- By partitioning data, ODI can enhance performance and optimize resource utilization during ETL operations.

68. Explain the concept of Dimensional Integrity in Oracle DAC.

Ans:

Dimensional Integrity in Oracle Data Warehouse Administration Console (DAC) refers to the consistency and accuracy of data across different dimensions within a data warehouse. It ensures that relationships and hierarchies among various data elements are maintained, preventing inconsistencies in reporting and analysis.

69. Discuss the impact of metadata-driven development in Oracle DAC.

Ans:

- Metadata-driven development in Oracle Data Warehouse Administration Console (DAC), as in Oracle Data Integrator (ODI), centralizes metadata and manages it throughout the development lifecycle.

- It improves collaboration among development teams by providing a unified repository for data definitions, transformations, and business rules. It also promotes consistency and reduces errors, because changes to metadata propagate across the entire data integration process.

70. Explain Late Binding Views in Oracle DAC.

Ans:

The concept of Late Binding Views in Oracle Data Warehouse Administration Console (DAC) refers to the dynamic generation of SQL statements at runtime rather than at design time. Unlike Early Binding Views, which are predefined and static, Late Binding Views can adapt to changing data structures and business requirements.

71. Discuss the DAC Metadata Repository Cache in Oracle DAC.

Ans:

The DAC Metadata Repository Cache improves performance during data integration processes. It acts as temporary storage for frequently accessed metadata, which reduces the number of repeated queries against the repository database.
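The caching idea can be illustrated generically with Python's `functools.lru_cache`. This is only a sketch of the pattern: `query_repository` is a hypothetical stand-in for a real repository lookup, and nothing here reflects how DAC implements its cache.

```python
from functools import lru_cache

repository_queries = 0  # counts simulated round trips to the repository database


def query_repository(task_name):
    """Hypothetical stand-in for a query against the metadata repository."""
    global repository_queries
    repository_queries += 1
    return ("task", task_name)


@lru_cache(maxsize=256)
def get_task_metadata(task_name):
    """Return metadata for a task, querying the repository only on a cache miss."""
    return query_repository(task_name)


get_task_metadata("load_fact_sales")
get_task_metadata("load_fact_sales")  # served from the cache, no second query
```

A cache like this trades freshness for speed, so it needs to be cleared (here with `get_task_metadata.cache_clear()`) whenever the underlying metadata changes.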

72. How does Oracle DAC handle the extraction of data from multiple source systems?

Ans:

- Extracting data from multiple source systems with diverse formats and structures is the job of the underlying ETL tool (Informatica PowerCenter in a typical DAC deployment), which performs the actual extraction, transformation, and loading.

- Oracle DAC (Data Warehouse Administration Console) coordinates that work. Each source system is registered as a physical data source, and a multi-source execution plan, either homogeneous or heterogeneous, orders the extract and load tasks across those sources.

73. Discuss the DAC Aggregate Persistence Wizard in Oracle DAC.

Ans:

The DAC Aggregate Persistence Wizard in Oracle DAC (Data Warehouse Administration Console) helps manage and optimize data aggregation within the data warehouse environment. It facilitates the creation and management of aggregate persistence strategies, which improve query performance.

74. Explain “Agile Development” in the context of Oracle DAC.

Ans:

- Agile Development in the context of Oracle DAC (Data Warehouse Administration Console) refers to an iterative and flexible approach to software development.

- In Oracle DAC, Agile principles are applied to streamline data integration and business intelligence projects, allowing for continuous improvement and quick response to changing requirements.

75. Discuss the DAC Scheduler in Oracle DAC.

Ans:

The DAC Scheduler in Oracle DAC (Data Warehouse Administration Console) automates and manages the execution of ETL (Extract, Transform, Load) processes. It lets users schedule and coordinate the execution of tasks so that data integration runs on time and efficiently. The Scheduler supports complex workflows by defining dependencies among tasks and orchestrating their execution sequence.
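Dependency-driven ordering of this kind can be sketched with Python's standard `graphlib` module (Python 3.9 or later). The task names are hypothetical, and the grouping into "waves" is an illustration of the general technique rather than DAC's actual algorithm.

```python
from graphlib import TopologicalSorter


def execution_waves(dependencies):
    """Group tasks into waves; every task in a wave has all its predecessors done.

    dependencies maps each task name to the set of tasks it depends on.
    Tasks in the same wave do not depend on each other and could run in parallel.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


plan = execution_waves({
    "load_fact_sales": {"load_dim_customer", "load_dim_product"},
    "load_dim_customer": {"extract_source"},
    "load_dim_product": {"extract_source"},
})
```

Here the two dimension loads land in the same wave because neither depends on the other, while the fact load waits for both.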

76. What is Oracle DAC’s DAC Recovery Manager?

Ans:

- The Recovery Manager in Oracle DAC (Data Warehouse Administration Console) helps maintain the reliability and integrity of data warehouse operations.

- It manages and restores the DAC repository and gives administrators the tools they need to recover from unforeseen events such as system failures or data corruption.

77. Discuss the significance of the DAC Incremental Update process.

Ans:

The DAC Incremental Update process in Oracle DAC keeps the data warehouse accurate without reloading it in full. It loads data incrementally, updating only the changed or newly added records in the target data warehouse, which reduces processing time and resource utilization.
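A minimal sketch of incremental selection, assuming each source row carries a `last_update` timestamp and that the previous load time is stored as a watermark. Both the column name and the function are hypothetical.

```python
from datetime import datetime


def incremental_rows(source_rows, last_refresh):
    """Return only the rows changed after last_refresh.

    A last_refresh of None means no previous load exists, so every row is
    returned, which corresponds to a full load.
    """
    if last_refresh is None:
        return list(source_rows)
    return [row for row in source_rows if row["last_update"] > last_refresh]


rows = [
    {"id": 1, "last_update": datetime(2024, 1, 1)},
    {"id": 2, "last_update": datetime(2024, 3, 1)},
]
changed = incremental_rows(rows, datetime(2024, 2, 1))
```

After a successful load, the watermark would be advanced to the time of that load so that the next run picks up only later changes.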

78. How can DAC architecture be imported and exported?

Ans:

- In the DAC client, users select the components they want to export, including mappings, configurations, and execution plans, and then run the “Export” function.

- This creates an export file containing the selected metadata and configurations. In the target environment, the user runs the “Import” function, supplies the required connection information, and points it at the export file.

79. What do you mean by an authentication file?

Ans:

An authentication file is a configuration file that contains essential information used for authentication purposes, typically in the context of accessing secure systems or services. This file securely stores authentication credentials, such as usernames, passwords, or access tokens, in a structured format. It acts as a secure repository for sensitive information, preventing the need to embed credentials directly into code or scripts, which can pose security risks.

80. Does DAC maintain a record of the refresh dates for each source?

Ans:

- Yes. According to Oracle’s documentation, refresh dates are tracked only for tables that are the primary source or primary target of tasks in a completed execution plan.

- If the refresh date for a table is null, DAC runs the full load command for tasks on which that table is a primary source or target.

- If a task has several primary sources, the earliest of their refresh dates determines whether an incremental load or a full load is triggered.
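The rules above can be expressed as a small decision function. This is a simplified sketch: the function name and return shape are assumptions, and real DAC behavior has more cases than are modeled here.

```python
from datetime import datetime


def choose_load(refresh_dates):
    """Decide between a full and an incremental load for one task.

    refresh_dates holds the refresh date of each primary source or target
    table of the task, with None meaning "never refreshed".
    Returns (mode, since), where since is the earliest refresh date for an
    incremental load and None for a full load.
    """
    if not refresh_dates or any(date is None for date in refresh_dates):
        return ("full", None)
    return ("incremental", min(refresh_dates))


decision = choose_load([datetime(2024, 5, 2), datetime(2024, 5, 1)])
first_run = choose_load([datetime(2024, 5, 2), None])
```

Using the earliest date is the conservative choice: it may reread some rows, but it cannot miss changes from the source that was refreshed least recently.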

81. What do the DAC’s Index, Table, and Task Actions mean?

Ans:

Index action: Overrides the default behavior for dropping and creating indexes.

Table action: Overrides the default behavior for truncating and analyzing tables.

Task action: Adds new functionality to task behavior, such as a preceding action, a success action, a failure action, or a restart upon failure.

82. How does DAC manage Runtime Parameters?

Ans:

All parameters related to an ETL run, including those defined externally to DAC, those held in flat files, and static and runtime parameters defined in DAC, are read and evaluated by DAC during an ETL execution. DAC creates a unique parameter file for each Informatica session after combining all of the parameters for the ETL operation and removing any duplicates.
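The combine-and-deduplicate step can be sketched as follows. The precedence rule (later sources override earlier ones), the function name, and the example section heading are assumptions made for illustration. The output follows the general shape of an Informatica parameter file, with a section heading followed by `name=value` lines.

```python
def build_parameter_file(section, *sources):
    """Merge parameter dictionaries and render one parameter-file section.

    Later sources override earlier ones, so each parameter name appears once.
    This precedence order is an assumption, not DAC's documented rule.
    """
    merged = {}
    for source in sources:
        merged.update(source)
    lines = [f"[{section}]"]
    lines += [f"{name}={value}" for name, value in sorted(merged.items())]
    return "\n".join(lines) + "\n"


text = build_parameter_file(
    "SDE_Folder.WF:wf_load_orders.ST:s_load_orders",  # hypothetical session
    {"$$LAST_EXTRACT_DATE": "2024-01-01", "$$SOURCE_ID": "1"},  # static values
    {"$$LAST_EXTRACT_DATE": "2024-05-01"},  # runtime value wins
)
```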

83. Is it possible to run more than one execution plan simultaneously in DAC?

Ans:

Yes. Oracle DAC can be configured to run more than one execution plan at the same time, provided the plans are independent of one another, that is, they do not load data into the same table on the same physical data source. Execution plans that share target tables should run one after another so that their loads do not conflict.

84. How is a DAC different from a traditional database deployment?

Ans:

- In the SQL Server sense, a DAC (data-tier application) differs from a traditional database deployment in how database changes are packaged and applied.

- A traditional deployment typically runs hand-written scripts against each server, whereas a DAC bundles the database schema and related objects into a single package that tools can deploy or upgrade as one unit.

85. What steps are involved in deploying a DAC package?

Ans:

A DAC (Data-tier Application) package must be deployed in stages. First, use SQL Server Data Tools or a comparable tool to construct the DAC package. The database schema and related objects are contained in this package. Next, confirm that the version and compatibility of the target SQL Server instance match the requirements specified in the package.

86. Is it possible to run the SQL script using DAC?

Ans:

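Yes. In the SQL Server sense of DAC, SQL scripts run as part of deploying a DAC package. The typical flow is:

Create a DAC Package: Begin by creating a DAC package, which is a compressed file containing the necessary information for deploying the database schema.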

Deploy the DAC Package: Deploy the DAC package to your target SQL Server instance using the SqlPackage utility or through SQL Server Management Studio (SSMS).

Specify Connection Parameters: Ensure that you provide accurate connection parameters such as server name, database name, and authentication credentials.
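As an illustration of the deployment step, the snippet below assembles a `SqlPackage` publish command from the connection parameters. The file, server, and database names are placeholders, and the sketch assumes `SqlPackage` is installed and on the `PATH` when the command is actually run.

```python
def sqlpackage_publish_args(dacpac_path, server, database):
    """Build the argument list for publishing a DAC package with SqlPackage.

    Only the command is assembled here; nothing is executed.
    Authentication options are omitted and depend on the environment.
    """
    return [
        "SqlPackage",
        "/Action:Publish",
        f"/SourceFile:{dacpac_path}",
        f"/TargetServerName:{server}",
        f"/TargetDatabaseName:{database}",
    ]


# Placeholder values for illustration only.
args = sqlpackage_publish_args("app.dacpac", "localhost", "SalesDb")
```

The list can be passed to `subprocess.run(args, check=True)` on a machine where `SqlPackage` is available. Building it as a list rather than a single string avoids shell quoting problems with paths that contain spaces.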

87. What are DACPAC and BACPAC?

Ans:

The schema and configuration of a SQL Server database are contained in a package format called DACPAC, which makes version management and deployment of database updates simple. In essence, it is a condensed version of the database schema.

A BACPAC, on the other hand, is a related package format that contains the database schema together with its data. This makes it suitable for migrating an entire database, for example between on-premises and cloud environments.
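Both formats are zip archives, so the difference can be inspected with Python's `zipfile` module. The sketch below assumes the commonly observed layout, a `model.xml` entry for the schema and a `Data/` folder for table data in a BACPAC. That layout is an assumption and may not hold for every package, for example a BACPAC exported from an empty database.

```python
import io
import zipfile


def package_kind(archive):
    """Guess whether a zip archive is a DACPAC or a BACPAC from its entries.

    archive is a path or a file-like object. The check relies on an assumed
    layout: model.xml for the schema, Data/ entries for exported table data.
    """
    with zipfile.ZipFile(archive) as package:
        names = package.namelist()
    if "model.xml" not in names:
        return "unknown"
    has_data = any(name.startswith("Data/") for name in names)
    return "bacpac" if has_data else "dacpac"


def make_archive(names):
    """Build a small in-memory zip with the given entry names (demo helper)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as package:
        for name in names:
            package.writestr(name, b"")
    buffer.seek(0)
    return buffer


schema_only = package_kind(make_archive(["model.xml", "Origin.xml"]))
```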

88. Can DAC be used with both on-premises and cloud-based SQL Server instances?

Ans:

Yes, a DAC (data-tier application) can be used with both on-premises and cloud-based SQL Server instances. The same package can be deployed to an on-premises SQL Server instance or to Azure SQL Database, which lets organizations manage schema deployments consistently regardless of the hosting environment.

89. How can you upgrade a database using DAC?

Ans:

Firstly, ensure you have the necessary permissions to perform the upgrade, including administrative privileges on both the source and target servers. Next, use the DAC wizard or command-line tools to generate a DAC package from the source database. This package contains information about the schema, objects, and dependencies. Transfer the generated DAC package to the target server where the upgraded database will reside. On the target server, run the DAC package using the DAC wizard or command-line tools. This will apply the schema changes and upgrade the database to the new version.

90. What are the advantages of using DAC in database deployment?

Ans:

- A DAC packages the database schema and related objects as a single versioned unit, so the same package can be deployed repeatably across development, test, and production environments.

- Deployment tools compare the package with the target database and generate the required changes, which reduces the need for hand-written upgrade scripts and the errors that come with them.