Oracle GoldenGate is a robust real-time data integration and replication solution, offering high availability, data integration, change data capture, and data replication capabilities across heterogeneous IT environments. The 19c version emphasizes extreme performance, simplified configuration, and enhanced integration with Oracle Database, while also extending support for cloud environments and ensuring advanced security. Additionally, Oracle provides complementary tools like Oracle GoldenGate Veridata for high-speed data comparison and verification between databases. With its versatility and capabilities, Oracle GoldenGate plays a crucial role in ensuring real-time data consistency and availability in complex enterprise systems.

1. What exactly is Oracle GoldenGate?

Ans:

Oracle GoldenGate is a data replication and integration solution that enables the real-time movement and synchronization of data between different databases, whether they are Oracle databases or non-Oracle databases.

2. How is Oracle GoldenGate different from regular database copying methods?

Ans:

Oracle GoldenGate offers real-time data replication and synchronization, which means that changes are propagated as soon as they occur in the source database. This is different from traditional methods like periodic batch ETL (Extract, Transform, Load) processes, which are typically not real-time and may result in data lag.

3. How does Oracle GoldenGate architecture work?

Ans:

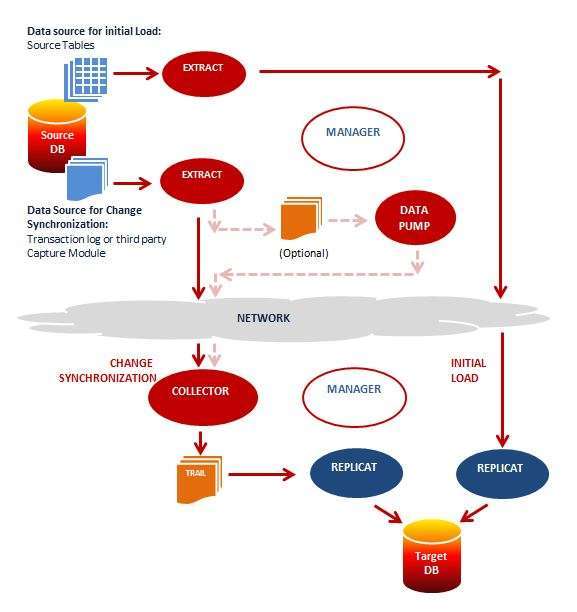

Oracle GoldenGate uses a capture-and-apply mechanism. It captures changes made to source data and then applies those changes to target databases in real time. It employs a combination of Extract processes, trail files, and Replicat processes to achieve this.
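The capture-and-apply flow can be illustrated with a toy model. The sketch below is not GoldenGate code: `Change`, `extract`, and `replicat` are hypothetical names, the "trail" is a plain in-memory list, and the target is a dictionary keyed by primary key. It only shows why replaying ordered change records reproduces the source state.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Change:
    op: str                     # "INSERT", "UPDATE", or "DELETE"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new column values (None for DELETE)


def extract(source_log, trail):
    """Capture: copy committed changes, in order, from the source log to the trail."""
    trail.extend(source_log)


def replicat(trail, target):
    """Apply: replay the trail records, in order, against the target table."""
    for change in trail:
        if change.op == "DELETE":
            target.pop(change.key, None)
        else:  # INSERT and UPDATE both write the new row image
            target[change.key] = change.row


source_log = [
    Change("INSERT", 1, {"name": "Ada"}),
    Change("UPDATE", 1, {"name": "Ada L."}),
    Change("INSERT", 2, {"name": "Bob"}),
    Change("DELETE", 2),
]
trail = []
target = {}
extract(source_log, trail)
replicat(trail, target)
print(target)  # {1: {'name': 'Ada L.'}}
```

Because the trail sits between the two steps, capture and apply can run at different speeds or on different machines, which is the point of the intermediate file in the real product.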

4. How do you set up and configure Oracle GoldenGate to copy data between databases?

Ans:

Setting up Oracle GoldenGate involves defining the source and target databases, configuring extract and replicat processes, specifying data mapping and transformation rules, and ensuring network connectivity between source and target systems.

5. When should you use different GoldenGate copying methods, and what are they?

Ans:

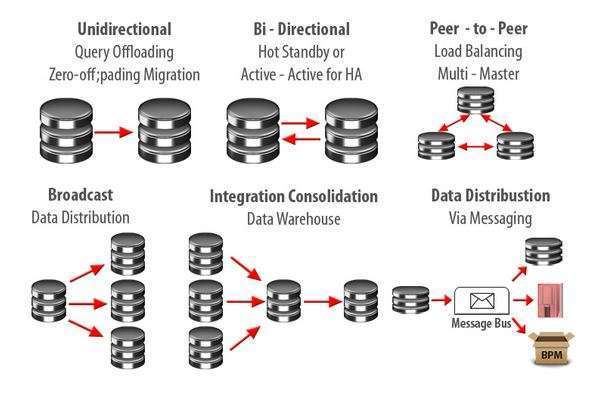

Oracle GoldenGate provides various copying methods, such as unidirectional, bidirectional, and multi-master replication. For example, unidirectional replication is suitable for one-way data flow, while bidirectional replication is used when changes can occur in both source and target databases.

6. What do Extract and Replicat do in Oracle GoldenGate copying?

Ans:

- Extract processes capture data changes from the source database and write them to trail files.

- Replicat processes read the data changes from trail files and apply them to the target database.

7. How does Oracle GoldenGate handle problems when the same data changes in different ways in different databases?

Ans:

Oracle GoldenGate employs conflict resolution techniques to handle conflicts that arise when the same data is changed differently in different databases. Users can define conflict resolution rules to determine how conflicts are resolved, such as preferring changes from one database over another or merging conflicting changes.
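As an illustration of a "prefer one change over another" rule, here is a minimal sketch of timestamp-based resolution, where the most recently modified version of a row wins. The function name and the `last_modified` column are assumptions made for the example; in GoldenGate itself such rules are declared in the Replicat parameter file rather than written in application code.

```python
def resolve_update_conflict(existing, incoming, ts_col="last_modified"):
    """Return the row version that survives an update conflict.

    Rule sketched here: the version with the newer timestamp wins,
    and a tie keeps the row that is already on the target.
    """
    return incoming if incoming[ts_col] > existing[ts_col] else existing


target_row = {"id": 7, "balance": 100, "last_modified": 1700000500}
incoming_row = {"id": 7, "balance": 80, "last_modified": 1700000900}

winner = resolve_update_conflict(target_row, incoming_row)
print(winner["balance"])  # 80
```

A timestamp rule only converges if every site applies the same rule and the clocks are reasonably synchronized, which is why the choice of rule is left to the user.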

8. How can Oracle GoldenGate help with keeping data safe and available in case of disasters or problems?

Ans:

Oracle GoldenGate can be used to create a disaster recovery (DR) solution by continuously replicating data changes to a standby database. In the event of a disaster or database failure, the standby database can be brought online to ensure data availability and minimize downtime.

9. What can Oracle GoldenGate do for data transformation?

Ans:

Oracle GoldenGate provides data transformation capabilities, allowing you to modify, filter, or route data as it is replicated. This is useful for various purposes:

- Data cleansing

- Data filtering

- Data transformation

10. What can you do to make sure data stays secure while using Oracle GoldenGate for replication?

Ans:

- Strong authentication and authorization.

- Encryption for data in transit and GoldenGate trails.

- Strict access control with RBAC.

- Network security through firewalls and access restrictions.

- Regularly update and patch GoldenGate software.

- Encrypt sensitive configuration files and credentials.

11. What are the additional logging requirements?

Ans:

Additional logging requirements in Oracle GoldenGate depend on your specific configuration and use case. You may need to configure supplemental logging in the source database to capture specific data changes accurately.

12. What are the principal components of GoldenGate replication?

Ans:

- Extract: Captures and reads the source database changes.

- Pump: Transfers the captured changes to the target system.

- Replicat: Applies the changes to the target database.

- Trails: Files used to store captured changes for replication.

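13. What kinds of encryption does GoldenGate support?

Ans:

Oracle GoldenGate supports several kinds of encryption: trail file encryption with AES (the ENCRYPTTRAIL parameter), encryption of data sent across the network between source and target systems (the ENCRYPT option of RMTHOST in the Classic Architecture, SSL/TLS in the Microservices Architecture), and encryption of database passwords, either with ENCRYPT PASSWORD or by keeping them in the credential store.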

14. How do you add credentials to the credential store?

Ans:

You add credentials with GGSCI. First create the store with the ADD CREDENTIALSTORE command, then register each login with ALTER CREDENTIALSTORE ADD USER, supplying the user name, the password, and an alias. GoldenGate processes then refer to the alias instead of a clear-text user name and password.

15. How can information be retrieved from the credential store?

Ans:

You can list the stored aliases and user names with the INFO CREDENTIALSTORE command in GGSCI; passwords are not displayed. Processes use the stored credentials by referencing an alias with the USERIDALIAS parameter in their parameter files, or with DBLOGIN USERIDALIAS in GGSCI.

16. How does Replicat work in coordinated mode?

Ans:

In coordinated mode, a single Replicat is multithreaded. Several apply threads read the trail independently and apply their share of the workload to the target in parallel; the workload is divided among them with the THREAD or THREADRANGE options of the MAP parameter. A coordinator thread synchronizes the apply threads where transactions depend on one another, so that changes are still applied in a consistent order.

17. If a trail file is encrypted, how would you view it using Logdump?

Ans:

You can use the Logdump utility provided by Oracle GoldenGate. After opening the trail file, turn decryption on with the DECRYPT ON command, adding KEYNAME and the key name when the trail was encrypted with a key from an ENCKEYS file, so that Logdump displays the records in clear text.

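18. What are the available OGG initial load methods?

Ans:

Oracle GoldenGate provides several methods for initial data loading, including:

- Database utility: The target is loaded with a native tool such as Oracle Data Pump (export/import) or a cloned copy of the source, while Extract captures ongoing changes.

- File to Replicat: An initial-load Extract writes the source rows to a file, and Replicat applies them to the target.

- File to database utility: An initial-load Extract writes files that a bulk loader such as SQL*Loader can ingest.

- Direct load: An initial-load Extract sends rows straight to an initial-load Replicat task, without intermediate files.

- Direct bulk load: Extract sends rows to a Replicat task that feeds them to SQL*Loader (Oracle targets).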

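19. What is the best procedure for removing old OGG trail files?

Ans:

Configure the PURGEOLDEXTRACTS parameter in the Manager parameter file so that Manager removes old trail files automatically. Use it with the USECHECKPOINTS option so that a file is purged only after every process that reads it has checkpointed past it, and add MINKEEPHOURS or MINKEEPDAYS to honor retention policies. Avoid deleting trail files manually at the operating-system level.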

20. What are the common use cases for GoldenGate in real-world scenarios?

Ans:

- Data warehousing and reporting.

- High availability and disaster recovery solutions.

- Database migrations and upgrades.

- Cloud database integration.

- Data consolidation.

21. How does Oracle GoldenGate handle data consistency and synchronization between source and target databases?

Ans:

Oracle GoldenGate ensures data consistency and synchronization by capturing changes from the source database’s transaction logs, transforming them into a platform-agnostic format, and applying them to the target database.

22. What role does the Oracle GoldenGate Manager play in monitoring and managing replication processes?

Ans:

The Oracle GoldenGate Manager is responsible for monitoring and managing replication processes. It provides a centralized control point for starting, stopping, and monitoring data replication tasks.

23. What is the significance of checkpoints in Oracle GoldenGate?

Ans:

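Checkpoints in Oracle GoldenGate record the current read and write positions of each process: the position of Extract in the source transaction log and in the trail it writes, and the position of Replicat in the trail it reads. They are persisted to checkpoint files (and, for Replicat, optionally to a checkpoint table in the target database), so that after a failure or restart each process resumes from its last recorded position without losing or reapplying changes.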
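The role of a checkpoint in recovery can be sketched with a small simulation. Everything here is hypothetical (the function name, the list-based trail, the `fail_at` crash switch); it only demonstrates that resuming from a saved position neither skips nor repeats records.

```python
def apply_from_checkpoint(trail, target, checkpoint, fail_at=None):
    """Apply trail records starting at `checkpoint` and return the new checkpoint.

    `fail_at` simulates a crash just before the record at that position is applied.
    """
    pos = checkpoint
    while pos < len(trail):
        if pos == fail_at:
            return pos  # crash: the saved position is the first unapplied record
        key, value = trail[pos]
        target[key] = value
        pos += 1  # the checkpoint advances only after the record is applied
    return pos


trail = [("a", 1), ("b", 2), ("c", 3), ("d", 4)]
target = {}

crash_checkpoint = apply_from_checkpoint(trail, target, 0, fail_at=2)
rows_before_restart = dict(target)

# Restart: continue from the saved position instead of from the beginning.
final_checkpoint = apply_from_checkpoint(trail, target, crash_checkpoint)
print(crash_checkpoint, final_checkpoint)  # 2 4
```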

24. How do checkpoints impact replication performance?

Ans:

Frequent checkpoints can impact replication performance, so finding the right balance between checkpoint frequency and performance is essential.

25. What are the advantages of bi-directional replication?

Ans:

- Real-time synchronization of data between databases.

- Improved data consistency and integrity.

- Support for active-active configurations.

- Reduced downtime during maintenance and upgrades.

26. What potential challenges should you be aware of when implementing Oracle GoldenGate?

Ans:

- Configuration complexity.

- Monitoring and troubleshooting.

- Data conflict resolution.

- Resource utilization and performance tuning.

- Licensing costs.

27. How does Oracle GoldenGate support heterogeneous replication?

Ans:

Oracle GoldenGate supports heterogeneous replication by capturing source database changes in a platform-independent format and then applying them to the target database in its native format.

28. What is the licensing model for Oracle GoldenGate?

Ans:

Oracle GoldenGate typically uses a per-processor licensing model, where you need to purchase licenses for the number of processors (CPU cores) in the source and target systems where GoldenGate is running.

29. Are there any methods for keeping track of and improving GoldenGate’s performance?

Ans:

- Regularly monitoring replication lag.

- Tuning Extract and Replicat processes for optimal performance.

- Properly sizing and configuring the GoldenGate environment.

- Implementing a robust monitoring and alerting system.

30. What’s the primary purpose of Oracle GoldenGate?

Ans:

GoldenGate’s primary purpose is to enable real-time data replication and synchronization between heterogeneous databases, supporting various use cases like reporting, high availability, migrations, and data integration.

31. How does GoldenGate handle database schema changes?

Ans:

GoldenGate handles schema changes by capturing and replicating DDL (Data Definition Language) statements, allowing for synchronization of database schema changes between source and target databases.

32. Explain data consistency in GoldenGate.

Ans:

Data consistency in GoldenGate ensures that replicated data remains synchronized and accurate between source and target databases, preventing data inconsistencies or conflicts during the replication process.

33. What is conflict detection and resolution in GoldenGate?

Ans:

Conflict detection and resolution in GoldenGate involve identifying and resolving conflicts that may occur when data changes are made simultaneously in both the source and target databases during bidirectional replication.

34. Describe the Extract process in GoldenGate.

Ans:

The Extract process in GoldenGate captures and extracts data changes from the source database’s transaction logs, converting them into a platform-independent format for replication.

35. How can you ensure data integrity in GoldenGate?

Ans:

Ensuring data integrity in GoldenGate involves setting up proper configuration, checkpointing, and monitoring to guarantee that data changes are accurately and consistently replicated between the source and target databases.

36. What are common troubleshooting methods for GoldenGate?

Ans:

- Monitoring replication lag

- Reviewing log files

- Checking configuration settings

- Verifying network connectivity

37. How does GoldenGate support cloud replication?

Ans:

GoldenGate supports cloud replication by facilitating real-time data synchronization between on-premises databases and cloud-based databases, allowing businesses to move data to and from the cloud.

38. What’s the role of the GoldenGate Hub architecture?

Ans:

The GoldenGate Hub architecture acts as a central hub for data integration, enabling multiple sources to replicate data to a centralized target, simplifying data consolidation and distribution.

39. How do you upgrade GoldenGate?

Ans:

- Backup existing configurations and data.

- Install the new GoldenGate version.

- Update configuration files, if necessary.

- Test the upgrade in a non-production environment.

- Apply the upgrade to the production environment while minimizing downtime.

40. How does GoldenGate handle large objects (LOBs)?

Ans:

GoldenGate handles large objects (LOBs) by replicating them in chunks, breaking them into manageable pieces, and then reconstructing them on the target database.
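The chunk-and-reassemble idea can be shown in a few lines. This is a generic sketch, not GoldenGate's internal format: the function names and the 4096-byte chunk size are assumptions chosen for the example.

```python
def split_lob(data: bytes, chunk_size: int):
    """Break a large value into consecutive chunks of at most `chunk_size` bytes."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def reassemble_lob(chunks):
    """Rebuild the original value by concatenating the chunks in order."""
    return b"".join(chunks)


lob = b"x" * 10_000 + b"END"
chunks = split_lob(lob, 4096)
print(len(chunks))                    # 3
print(reassemble_lob(chunks) == lob)  # True
```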

41. What security features does GoldenGate offer?

Ans:

GoldenGate provides security features including user identification, data encryption, and access control to guarantee the confidentiality and integrity of replicated data.

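42. What topologies does GoldenGate support?

Ans:

Oracle GoldenGate supports various replication topologies, including unidirectional, bidirectional, peer-to-peer (multi-master), broadcast (one source to many targets), consolidation (many sources to one target), and cascading.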

43. Explain conflict avoidance in GoldenGate.

Ans:

Conflict avoidance in GoldenGate involves configuring rules and strategies to prevent data conflicts from occurring during bidirectional replication, reducing the need for conflict resolution.
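One common avoidance strategy is to give each site its own disjoint range of primary keys, so that two sites can never insert the same key. The sketch below illustrates the idea with interleaved key sequences; `key_generator` is a hypothetical helper, and in a real database the same effect is typically achieved with sequence start and increment settings.

```python
from itertools import count


def key_generator(site_index: int, site_count: int):
    """Yield keys site_index + 1, site_index + 1 + site_count, ... for one site."""
    return count(start=site_index + 1, step=site_count)


site_a = key_generator(0, 2)  # odd keys
site_b = key_generator(1, 2)  # even keys

keys_a = [next(site_a) for _ in range(3)]
keys_b = [next(site_b) for _ in range(3)]
print(keys_a, keys_b)  # [1, 3, 5] [2, 4, 6]
```

This removes insert collisions only; concurrent updates to the same existing row still need a resolution rule.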

44. What’s Integrated Apply in GoldenGate?

Ans:

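Integrated Apply (integrated Replicat) is a replication method for Oracle targets in which Replicat reads the trail, builds logical change records, and passes them to an inbound server inside the target database. The inbound server computes the dependencies between transactions and applies independent transactions in parallel, which improves replication performance without manual partitioning of the workload.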

45. How do you set up data filtering in GoldenGate?

Ans:

Data filtering in GoldenGate is set up in the Extract or Replicat parameter file. The TABLE (Extract) and MAP (Replicat) parameters select which tables are included, and their WHERE and FILTER clauses restrict rows based on column values.
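To make the two levels of filtering concrete (by table name and by column value), here is a small Python model of the selection logic. It is an illustration only: `make_filter`, the table names, and the `region` column are invented for the example and do not correspond to GoldenGate syntax.

```python
def make_filter(tables, predicate):
    """Build a filter that keeps a change only if its table is listed
    and the row satisfies the predicate."""
    def keep(change):
        return change["table"] in tables and predicate(change["row"])
    return keep


changes = [
    {"table": "hr.employees", "row": {"id": 1, "region": "EU"}},
    {"table": "hr.employees", "row": {"id": 2, "region": "US"}},
    {"table": "hr.audit_log", "row": {"id": 3, "region": "EU"}},
]

keep_eu_employees = make_filter({"hr.employees"}, lambda row: row["region"] == "EU")
replicated = [c for c in changes if keep_eu_employees(c)]
print([c["row"]["id"] for c in replicated])  # [1]
```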

46. What are performance bottlenecks in GoldenGate?

Ans:

Performance bottlenecks in GoldenGate can arise from factors like network latency, resource limitations, configuration inefficiencies, high transaction rates, or inadequate hardware.

47. Describe the GoldenGate heartbeat mechanism.

Ans:

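The heartbeat mechanism is a periodic signal that travels from the source to the target to confirm that the replication processes are running and the connection is active. It is built on heartbeat tables, created with the ADD HEARTBEATTABLE command in GGSCI: a heartbeat record is written at the source at regular intervals and flows through Extract, the trail, and Replicat like any other change, and the timestamps collected along the way show the end-to-end lag. A heartbeat that stops arriving indicates a failed process or connection.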
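The two things a heartbeat gives you, a lag measurement and a liveness check, reduce to simple timestamp arithmetic. The sketch below uses hypothetical function names and hard-coded timestamps; it is not how GoldenGate stores or queries heartbeat data.

```python
from datetime import datetime, timedelta


def heartbeat_lag(sent_at, received_at):
    """End-to-end lag: time between writing the heartbeat at the source
    and applying it at the target."""
    return received_at - sent_at


def is_stale(last_received, now, max_silence):
    """True if no heartbeat has arrived within `max_silence`."""
    return now - last_received > max_silence


sent = datetime(2024, 1, 1, 12, 0, 0)
received = datetime(2024, 1, 1, 12, 0, 4)

lag = heartbeat_lag(sent, received)
print(lag.total_seconds())  # 4.0

stale = is_stale(received, datetime(2024, 1, 1, 12, 5, 0), timedelta(minutes=2))
print(stale)  # True
```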

48. Recommend disaster recovery strategies for GoldenGate.

Ans:

Disaster recovery strategies for GoldenGate include setting up active-passive configurations, real-time data replication to a secondary site, regular backups, and ensuring failover and failback procedures are well-defined.

49. What databases can Oracle GoldenGate replicate data between?

Ans:

Oracle GoldenGate can replicate data between various databases, including Oracle Database, Microsoft SQL Server, IBM Db2, MySQL, and others, supporting both homogeneous and heterogeneous replication.

50. What’s the purpose of checkpoints in GoldenGate?

Ans:

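The purpose of checkpoints in GoldenGate is to record how far each process has progressed: the position Extract has reached in the source transaction log and in the trail it writes, and the position Replicat has reached in the trail it reads. After a restart or failure, processing resumes from those positions, which protects data consistency and integrity during replication.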

51. How does Oracle GoldenGate handle data compression during replication?

Ans:

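Oracle GoldenGate can compress trail data before sending it across the network. The COMPRESS option of the RMTHOST parameter compresses outgoing blocks of records, and the Collector on the target decompresses them before writing the remote trail. This reduces network bandwidth usage at the cost of some extra CPU, so it helps most on slow or congested links.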

52. What is Oracle GoldenGate Veridata used for?

Ans:

Veridata is a tool used for data comparison and validation. It helps ensure data consistency between source and target databases during replication, detecting and resolving discrepancies.
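The general technique behind this kind of comparison is to match rows by key and compare a hash of the remaining columns instead of the full row. The sketch below shows that idea only; the function names, the SHA-256 choice, and the in-memory tables are assumptions, and it does not reproduce Veridata's actual algorithm.

```python
import hashlib


def row_hash(row: dict) -> str:
    """Hash a row's column values in a stable column order."""
    payload = "|".join(f"{col}={row[col]}" for col in sorted(row))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def compare_tables(source: dict, target: dict):
    """Return keys missing on the target, extra on the target, and out of sync."""
    missing = sorted(source.keys() - target.keys())
    extra = sorted(target.keys() - source.keys())
    different = sorted(
        key for key in source.keys() & target.keys()
        if row_hash(source[key]) != row_hash(target[key])
    )
    return missing, extra, different


source = {1: {"name": "Ada"}, 2: {"name": "Bob"}, 3: {"name": "Cy"}}
target = {1: {"name": "Ada"}, 2: {"name": "Rob"}, 4: {"name": "Dee"}}

result = compare_tables(source, target)
print(result)  # ([3], [4], [2])
```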

53. What is the Oracle GoldenGate Microservices architecture?

Ans:

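The Microservices Architecture is a modern deployment option that separates GoldenGate components into services (Service Manager, Administration Server, Distribution Server, Receiver Server, and Performance Metrics Server) that are managed through REST APIs and web interfaces. This makes deployments more flexible and scalable, simplifies administration, and improves overall manageability.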

54. How can you monitor GoldenGate’s performance in real time?

Ans:

You can monitor Oracle GoldenGate performance in real-time using tools like Oracle Enterprise Manager (OEM) or built-in GoldenGate Monitor. These tools provide detailed metrics, logs, and dashboards to track replication performance.

55. What is Oracle GoldenGate’s role in data warehousing?

Ans:

Oracle GoldenGate plays a crucial role in data warehousing by enabling real-time or near-real-time data replication from operational databases to data warehouses.

56. How does GoldenGate support the replication of transactional data?

Ans:

- GoldenGate captures changes in the source database.

- It transforms and formats data for compatibility.

- Data is transmitted in real-time or batch mode.

- Ensures minimal latency for near real-time replication.

57. What’s the difference between active-active and active-passive replication in GoldenGate?

Ans:

| Aspect | Active-Active Replication | Active-Passive Replication |
| --- | --- | --- |
| Configuration | Multiple databases actively process read and write transactions. | A primary database processes read and write transactions, while one or more standby databases receive replicated data. |
| Use Case | Load-balanced web applications, high availability, and even distribution of read and write operations. | Disaster recovery, failover, and reporting purposes where data is replicated for backup or reporting but not actively processed. |
| Benefits | Load balancing, high availability, scalability, and even distribution of workloads. | Data protection, failover readiness, reporting, and analytics support. |
| Challenges | Data conflict resolution is required to maintain consistency. | Data may be read-only in standby databases, and failover processes need to be carefully managed. |

58. How do you troubleshoot lagging replication in Oracle GoldenGate?

Ans:

- Check Oracle GoldenGate logs for errors and warnings.

- Review Extract and Replicat configuration.

- Check for resource constraints (CPU, memory, disk).

- Optimize source and target database performance.

59. What’s the significance of trail files?

Ans:

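Trail files are a series of files on disk in which Extract stores the captured changes in a GoldenGate-specific format. They decouple capture from apply: a data pump or Replicat reads them at its own pace, so changes are preserved if the network or the target is temporarily unavailable. This makes trail files crucial for capturing, transporting, and applying changes between databases.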

60. What’s the process for rolling back a failed GoldenGate replication change?

Ans:

Rolling back a failed GoldenGate replication change typically involves identifying the problematic transaction or change, stopping GoldenGate replication temporarily, restoring the affected data to its previous state, and then resuming replication.

61. What are the best practices for securing Oracle GoldenGate configurations?

Ans:

- Restricting access to GoldenGate components and credentials.

- Encrypting network communication between source and target databases.

- Implementing strong authentication and authorization mechanisms.

- Monitoring and auditing GoldenGate activities for suspicious or unauthorized access.

62. Explain the term “DDL replication” in Oracle GoldenGate.

Ans:

DDL replication in Oracle GoldenGate refers to the process of replicating Data Definition Language (DDL) changes, such as table creation, alteration, or deletion, from the source database to the target database.

63. How does Oracle GoldenGate ensure data consistency in distributed environments?

Ans:

Oracle GoldenGate ensures data consistency in distributed environments through its log-based change capture and replication mechanism.

64. What is the GoldenGate Monitor and what does it do?

Ans:

GoldenGate Monitor is a web-based graphical user interface (GUI) tool provided by Oracle for monitoring and managing Oracle GoldenGate deployments. It allows administrators to monitor the status, performance, and health of their Extract, data pump, and Replicat processes in real time.

65. How does GoldenGate support character set conversion during replication?

Ans:

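GoldenGate supports character set conversion during replication: Replicat converts character data from the source character set recorded in the trail to the character set of the target database. The SOURCECHARSET parameter in the Replicat parameter file can be used to override the source character set that Replicat assumes.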

66. After configuring the Oracle GoldenGate integrated capture process with default settings, I’ve noticed that as the data load increases, the extract process falls behind by an hour or more, causing a degradation in database performance. How can you address this performance issue?

Ans:

When operating in integrated capture mode, it is crucial to allocate an adequate amount of memory to STREAMS_POOL_SIZE. The recommended practice is to set STREAMS_POOL_SIZE at the instance level and MAX_SGA_SIZE at the GoldenGate process level, keeping the Streams pool larger than the combined MAX_SGA_SIZE of all integrated Extract processes, as demonstrated below:

- SQL> alter system set STREAMS_POOL_SIZE=3G

- TRANLOGOPTIONS INTEGRATEDPARAMS (MAX_SGA_SIZE 2048, PARALLELISM 4)

By configuring STREAMS_POOL_SIZE at the instance level and setting the MAX_SGA_SIZE appropriately in the GoldenGate process parameters, you can optimize memory allocation for the integrated capture process and improve its performance, ensuring that it can handle increased data loads without lagging behind.

67. Can you describe Oracle GoldenGate’s support for data masking and obfuscation?

Ans:

Oracle GoldenGate offers data masking and obfuscation through built-in features like column mapping and transformation to protect sensitive data during replication.

68. What’s the role of GoldenGate’s Initial Load capability?

Ans:

Initial Load capability is used to populate the target database with existing data before real-time replication begins, ensuring data consistency.

69. How can you monitor and manage GoldenGate remotely using command-line tools?

Ans:

You can monitor and manage Oracle GoldenGate remotely using command-line tools like GGSCI (GoldenGate Software Command Interface) for tasks like status checking and configuration updates.

70. Explain the difference between synchronous and asynchronous replication in Oracle GoldenGate.

Ans:

| Aspect | Synchronous Replication | Asynchronous Replication |
| --- | --- | --- |
| Data Consistency | Ensures strong data consistency between source and target. | May result in temporary data inconsistency between source and target. |
| Latency | Introduces latency in write operations because they wait for replication to complete. | Write operations are faster, with lower latency, as they don’t wait for replication. |
| Data Loss Risk | Minimal data loss risk since changes are not acknowledged until replicated. | Higher data loss risk because changes are acknowledged before replication. |
| Complexity | Typically more complex to set up and manage due to the need for immediate and guaranteed communication. | Often simpler to set up and manage because it doesn’t require immediate communication. |

71. How does Oracle GoldenGate handle data conflicts in multi-master replication scenarios?

Ans:

GoldenGate handles data conflicts in multi-master replication scenarios through conflict detection and resolution mechanisms, including custom scripts, to maintain data consistency.

72. Mention the recommended approach for handling downtime during GoldenGate upgrades.

Ans:

- Plan and schedule the upgrade during a maintenance window.

- Back up GoldenGate configuration, trail files, and metadata.

- Gracefully stop GoldenGate processes using GGSCI.

- Ensure replication activities are complete.

73. Explain GoldenGate’s support for unidirectional and bidirectional replication.

Ans:

GoldenGate supports unidirectional replication by capturing changes from a source database and applying them to a target. It also supports bidirectional replication, where changes are captured and applied in both directions between source and target databases.

74. How does Oracle GoldenGate deal with changes to table structures in source databases?

Ans:

GoldenGate deals with these changes through Data Definition Language (DDL) replication, which captures the structural changes and replicates them to the target database so that schema evolution stays synchronized.

75. What are the considerations for optimizing performance in a high-transaction environment?

Ans:

Considerations for optimizing Oracle GoldenGate performance in a high-transaction environment include tuning parameters, minimizing network latency, increasing bandwidth, using parallel processing, and optimizing the database for efficient change capture.

76. What are some alternatives to GoldenGate for database replication and synchronization?

Ans:

Tools like SQL Server Replication, MySQL Replication, PostgreSQL’s logical replication, and third-party solutions like SharePlex and Attunity are alternatives to Oracle GoldenGate for database replication and synchronization.

77. What’s the primary role of the Oracle GoldenGate Capture process?

Ans:

- It captures data changes, such as inserts, updates, and deletes, from the source database’s transaction logs or redo logs.

- It converts these captured changes into a platform-independent, logical format called the “trail.”

- The trail contains a record of all the changes made to the source database tables.

- The Capture process ensures that the changes are captured in the order they occurred, and it maintains data consistency and integrity during the replication process.

78. Explain Oracle GoldenGate’s support for database upgrades and migrations.

Ans:

- Enabling continuous data replication from the old source database to the new target database.

- Minimizing downtime during the upgrade process.

- Facilitating a seamless switch to the new database once replication is up-to-date.

- Reducing disruption to business operations during the upgrade.

79. What’s the significance of the Extract and Replicat parameter files in GoldenGate configurations?

Ans:

The Extract and Replicat parameter files in GoldenGate configurations are crucial as they contain configuration settings and instructions for data extraction and replication processes. These files define how data is captured from the source and applied to the target, making them fundamental to the functioning and behavior of GoldenGate replication.

80. How does GoldenGate support data filtering based on specific criteria?

Ans:

GoldenGate filters data in the Extract and Replicat processes so that only the appropriate data is captured and replicated. The criteria are specified per table in the TABLE and MAP parameters, for example with WHERE or FILTER clauses.

81. Describe the concept of “pump” processes in Oracle GoldenGate.

Ans:

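A pump (data pump) in Oracle GoldenGate is a secondary Extract process in charge of moving trail data between systems. It reads the local trail written by the primary Extract and sends the records over the network to a remote trail on the target system. Because capture and transport are separated, the primary Extract keeps writing locally even if the network or the target is unavailable, which makes data transfer more reliable in distributed environments.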

82. What role does the GoldenGate Collector process play in replication architectures?

Ans:

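- Runs on the target system and is normally started automatically by Manager.

- Receives the trail data that a primary Extract or a data pump sends over TCP/IP.

- Writes the received data to the remote trail files that Replicat reads.

- Decompresses the incoming data if compression was enabled on the source side.

- Is replaced by the Receiver Server in the Microservices Architecture.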

83. How does GoldenGate handle the replication of database sequences and triggers?

Ans:

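GoldenGate replicates sequence values when the sequences are listed with the SEQUENCE parameter in the Extract parameter file. Triggers are not replicated as data: their definitions can be carried over with DDL replication where it is supported, and on the target they are usually disabled or suppressed for the Replicat session so that replicated changes are not applied a second time by the trigger.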

84. How does Oracle GoldenGate handle changes to column data types in source tables?

Ans:

Oracle GoldenGate handles changes to column data types by using column mapping and transformation functions to ensure the data is transformed appropriately during replication.

85. What’s the purpose of the Oracle GoldenGate Data Pump utility?

Ans:

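In GoldenGate, a data pump is a secondary Extract process that reads the local trail and sends its contents to a remote trail on the target system. It should not be confused with the Oracle Data Pump utility (expdp and impdp), a database tool for bulk export and import that is often used for initial data loading before GoldenGate replication begins.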

86. What’s the recommended strategy for testing and validating GoldenGate configurations?

Ans:

The recommended strategy for testing and validating Oracle GoldenGate configurations involves using a separate test environment that mirrors the production setup to ensure the accuracy and reliability of replication.

87. How can you ensure high availability for GoldenGate processes and components?

Ans:

High availability for Oracle GoldenGate processes and components can be achieved through redundancy, failover mechanisms, and monitoring tools to ensure continuous data replication and minimal downtime.

88. Explain the role of the Oracle GoldenGate Credential Store feature.

Ans:

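The Credential Store feature securely stores the user names and passwords used by Oracle GoldenGate processes and maps them to aliases. Parameter files and commands then reference the alias rather than the actual credentials, which enhances security and simplifies credential management.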

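89. How does Integrated Capture differ from Classic Capture?

Ans:

Classic Capture is the older mechanism, in which Extract reads the redo and archived logs directly from outside the database. Integrated Capture works through a logmining server inside Oracle Database, which mines the redo and hands the changes to Extract as logical change records; this gives it broader support for data types and database features with less manual configuration. Integrated Capture is the preferred method for Oracle Database, and Classic Capture is deprecated in recent releases.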

90. What are the various modes of Oracle GoldenGate operation?

Ans:

Oracle GoldenGate operates in various modes, including:

- Classic capture and integrated capture

- Batch and online modes

- Uni-directional and bi-directional modes

91. What is Change Data Capture (CDC) in the context of GoldenGate?

Ans:

CDC refers to the process of capturing and replicating changes made to data in source tables, ensuring data consistency between source and target databases.

92. What are the different types of capture methods in Oracle GoldenGate?

Ans:

Oracle GoldenGate supports various capture methods, including:

- Log-Based Capture (CDC)

- Trigger-Based Capture

- Direct Bulk Capture

93. How can you optimize the initial load process?

Ans:

- Preprocess and cleanse data

- Use parallel processing

- Employ bulk loading techniques

- Minimize indexes and constraints temporarily

- Implement Change Data Capture (CDC)

- Schedule load during low activity

94. What is the role of the Integrated Capture feature?

Ans:

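Integrated Capture is an Extract mode in which Extract does not read the redo logs itself. Instead, a logmining server inside Oracle Database mines the redo and delivers the changes to the Extract process as logical change records, which enhances performance and data type support.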

95. How does the Pump process fit into the GoldenGate architecture, and what is its purpose?

Ans:

Pump processes move captured data between components in GoldenGate, ensuring efficient data transfer between Extract and Replicat processes in distributed environments.

96. What are the responsibilities of the Receiver process?

Ans:

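The Receiver process (the Receiver Server in the Microservices Architecture) receives trail data on the target side and writes it to local trail files, taking over the role that the Collector plays in the Classic Architecture. Conflict resolution and applying the data to the target database are the responsibility of Replicat, not of the Receiver.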

97. What are the key features of Oracle GoldenGate Monitor?

Ans:

- Real-time monitoring

- Alerting and notifications

- Performance metrics tracking

- Security auditing and reporting

- Historical data analysis