Scala is a powerful programming language known for combining functional and object-oriented programming with a concise syntax. Designed to run on the Java Virtual Machine (JVM), Scala integrates closely with Java and has a robust static type system, making it well suited to scalable, efficient application development. Features such as pattern matching, immutability, and higher-order functions make it a strong choice for tasks ranging from big data processing with frameworks like Apache Spark to web applications.

1. What is Scala?

Ans:

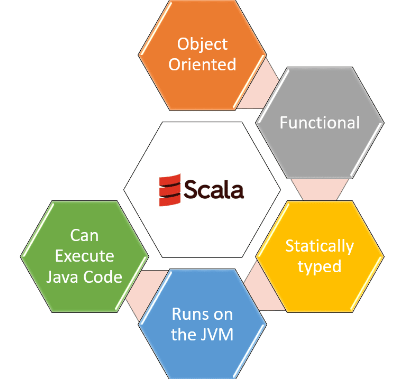

Scala is a versatile programming language that merges object-oriented and functional programming paradigms. Running on the Java Virtual Machine (JVM), it is designed to be concise, expressive, and scalable, so developers can write compact yet powerful code for complex, high-performance applications across many domains.

2. How does Scala compile to Java bytecode?

Ans:

The Scala compiler (scalac) translates Scala source files into JVM bytecode stored in standard .class files, the same format the Java compiler produces, so the output runs on any Java Virtual Machine (JVM). Scala-specific constructs such as traits, singleton objects, and closures are encoded as ordinary JVM classes, interfaces, and methods. Because both languages share this format, Scala code can use Java libraries and codebases directly, letting developers build on the existing Java ecosystem while keeping Scala’s expressive and concise syntax.

3. How does Scala manage null values?

Ans:

Scala encourages Option types instead of null for values that may be missing. Option[T] is a container that either holds a value (Some(value)) or indicates absence (None), so a possibly missing value is visible in the type and the NullPointerExceptions common in Java are easier to avoid. null still exists in Scala for Java interoperability, but idiomatic code wraps potentially null values in Option and handles both cases explicitly, which makes programs more reliable.
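A minimal sketch of Option in practice; the `emails` map and the `findEmail` helper are hypothetical. It also shows `Option(...)`, which turns a possibly null reference (such as one returned by a Java API) into `None`.

```scala
object OptionExample {
  // Hypothetical in-memory "database" used only for this illustration
  private val emails: Map[String, String] = Map("alice" -> "alice@example.com")

  // Map.get already returns Option[String]: Some(email) or None
  def findEmail(user: String): Option[String] = emails.get(user)

  // Option(...) converts a possibly null reference into Some(value) or None
  def fromJava(value: String): Option[String] = Option(value)

  // Callers handle absence explicitly instead of checking for null
  def describe(user: String): String =
    findEmail(user) match {
      case Some(email) => s"$user <$email>"
      case None        => s"$user has no email on file"
    }

  // getOrElse supplies a default when the value is absent
  def emailOrDefault(user: String): String =
    findEmail(user).getOrElse("unknown")
}
```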

4. What are the primary features of Scala?

Ans:

- Scala’s main features include support for functional programming, static typing with type inference, object-oriented concepts, and smooth Java interoperability.

- It also supports immutability, has a concise syntax, and can be used to build scalable and maintainable programs.

- Another significant benefit is its rich ecosystem of libraries and frameworks, which facilitates rapid development and enhances productivity.

5. Describe the interaction of Scala with Java.

Ans:

Scala facilitates seamless interaction with Java, enabling direct invocation of Java code from Scala and access to Java libraries. It also allows for the extension of Java classes within Scala. Conversely, Java can utilize Scala classes, call Scala code, and leverage Scala libraries, fostering interoperability and facilitating the integration of both languages within projects.
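A short sketch of calling Java from Scala, assuming Scala 2.13 or later (where `scala.jdk.CollectionConverters` is available). `ConfigError` is a hypothetical class used only to show a Scala class extending a Java one (`java.lang.Exception`).

```scala
import java.util.{ArrayList => JArrayList}
import scala.jdk.CollectionConverters._

object JavaInteropExample {
  // Create and fill a plain java.util.ArrayList directly from Scala
  def javaList(): JArrayList[String] = {
    val list = new JArrayList[String]()
    list.add("scala")
    list.add("java")
    list
  }

  // asScala wraps the Java list as a Scala mutable.Buffer view (no copy),
  // so the usual Scala collection methods become available
  def upperCased(list: JArrayList[String]): List[String] =
    list.asScala.map(_.toUpperCase).toList

  // A Scala class extending a Java class (java.lang.Exception)
  class ConfigError(message: String) extends Exception(message)
}
```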

6. Explain the distinction between Scala and Java.

Ans:

| Aspect | Scala | Java |
|---|---|---|
| Type System | Strong static typing with extensive type inference | Strong static typing; limited local type inference (`var` since Java 10) |
| Syntax | Concise syntax supporting both functional and object-oriented styles | More verbose syntax, primarily object-oriented |
| Interoperability | Calls Java libraries directly | Native access to Java libraries; can also call compiled Scala classes |
| Functional Programming | Supported from the start (first-class functions, pattern matching, immutable collections) | Lambdas and streams added in Java 8 |
| Immutability | Emphasized: `val` and immutable collections are the default style | Not the default, but achievable (for example with `final` fields or records) |

7. What does immutability signify in Scala?

Ans:

- In Scala, immutability refers to objects whose state remains unchanged after creation, enhancing code safety and facilitating concurrency.

- Scala prominently favors immutable data structures, promoting robustness and simplifying parallel and concurrent programming.

- By ensuring that data remains constant, Scala reduces the risk of unintended side effects and enhances code reliability, making it well-suited for building scalable and concurrent applications.

8. What is the notion of type inference in Scala?

Ans:

- Type inference in Scala is a compiler feature that deduces the types of variables, expressions, and functions based on context, without requiring explicit type annotations.

- This capability reduces the need for developers to manually specify types, thereby enhancing code conciseness and improving readability.

- Because types are inferred at compile time, not at runtime, Scala code stays concise and maintainable while keeping all the benefits of static typing.
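An illustrative snippet; every type below is determined by the compiler, and the explicit annotation on `check` simply restates what was inferred. The names are hypothetical.

```scala
object TypeInferenceExample {
  // No annotations: the compiler infers Int, String, and List[Int] at compile time
  val count = 42
  val greeting = "hello"
  val numbers = List(1, 2, 3)

  // The return type (List[Int]) is inferred from the body
  def doubled(xs: List[Int]) = xs.map(_ * 2)

  // Inference is still static: this annotation agrees with the inferred type,
  // whereas `val wrong: String = doubled(numbers)` would be a compile error
  val check: List[Int] = doubled(numbers)

  // Method parameters always need annotations; public APIs often annotate return types too
  def sum(xs: List[Int]): Int = xs.sum
}
```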

9. What are higher-order functions in Scala?

Ans:

Higher-order functions in Scala are functions capable of accepting other functions as parameters or returning functions as results. This functionality enables advanced functional programming techniques such as function composition and behavior delegation. Additionally, higher-order functions facilitate code reusability and abstraction, allowing developers to write more flexible and concise code.
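A small sketch with hypothetical helper names, showing a function that takes a function, one that returns a function, and composition with `andThen`:

```scala
object HigherOrderExample {
  // Takes a function as a parameter and applies it twice
  def applyTwice(f: Int => Int, x: Int): Int = f(f(x))

  // Returns a function as its result
  def multiplier(factor: Int): Int => Int = (x: Int) => x * factor

  // Function composition: andThen builds a new function from two existing ones
  val addOne: Int => Int = _ + 1
  val addOneThenTriple: Int => Int = addOne.andThen(multiplier(3))
}
```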

10. How does Scala accommodate both object-oriented and functional programming?

Ans:

Scala supports object-oriented programming through constructs like classes, objects, inheritance, and encapsulation. Simultaneously, it facilitates functional programming with features like first-class functions, immutable data structures, pattern matching, and higher-order functions, providing developers with versatile programming options.

11. What are the different approaches to defining a function in Scala?

Ans:

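- Scala offers several ways to define functions, catering to different coding styles and needs.

- These include method definitions with “def”, anonymous functions (function literals, often called lambdas) such as `x => x * 2`, function values assigned to a “val”, and methods converted into function values through eta-expansion.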

- Such diverse options not only provide flexibility but also empower developers to express functions concisely and effectively, enhancing code readability and maintainability.
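A sketch of the common forms side by side, including eta-expansion (converting a method into a function value); all names are illustrative.

```scala
object FunctionDefinitions {
  // 1. A method defined with def
  def square(x: Int): Int = x * x

  // 2. An anonymous function (function literal) stored in a val
  val cube: Int => Int = x => x * x * x

  // 3. Placeholder syntax: a shorter form of a function literal
  val add: (Int, Int) => Int = _ + _

  // 4. A method turned into a function value; the expected function type triggers eta-expansion
  val squareFn: Int => Int = square

  // Function literals are often passed inline to higher-order methods
  val squares: List[Int] = List(1, 2, 3).map(x => x * x)
}
```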

12. What is the significance of the “val” and “var” keywords in Scala?

Ans:

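- In Scala, “val” declares a value that cannot be reassigned once initialized, promoting immutability and making code easier to reason about, including in concurrent code. Note that “val” fixes only the reference: if it points to a mutable object, that object can still change.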

- On the other hand, “var” declares mutable variables, allowing for reassignment, albeit sacrificing immutability.

- Using “val” is generally preferred in functional programming as it leads to safer and more predictable code, reducing potential side effects.
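A brief sketch of the difference (names are illustrative); note that the `val` holding a `ListBuffer` can still be mutated in place, because only the reference is fixed.

```scala
import scala.collection.mutable.ListBuffer

object ValVarExample {
  def demo(): (Int, List[Int]) = {
    val limit = 10   // cannot be reassigned: writing `limit = 11` would not compile
    var counter = 0  // may be reassigned
    counter += limit

    // val fixes the reference, not the object: the buffer's contents can still change
    val buffer = ListBuffer(1, 2)
    buffer += 3

    (counter, buffer.toList)
  }
}
```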

13. How are tuples portrayed in Scala?

Ans:

Tuples in Scala represent heterogeneous collections of fixed size, denoted by parentheses and comma-separated values. For instance, a tuple with two elements can be created as (value1, value2), facilitating the grouping of different data types into a unified structure. They are particularly useful for returning multiple values from a method without the need for a custom class or data structure.
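A hypothetical `minMax` helper illustrates returning two values as a tuple, accessing elements by position, and destructuring:

```scala
object TupleExample {
  // Returns two values without declaring a dedicated class
  def minMax(xs: List[Int]): (Int, Int) = (xs.min, xs.max)

  // A tuple can mix element types
  val person: (String, Int) = ("Alice", 30)

  // Elements are accessed by position (_1, _2, ...) or by destructuring
  val name: String = person._1
  val (lo, hi) = minMax(List(4, 1, 9))
}
```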

14. Define case classes and elucidate their usage in Scala.

Ans:

Case classes in Scala are classes designed primarily for modeling immutable data. Declared with the “case class” keywords, they automatically receive immutable public fields, structural equality (equals and hashCode), a readable toString, a copy method, and a companion object with apply and unapply methods. The generated unapply is what enables pattern matching and destructuring, so case classes are a natural fit for data representation with very little boilerplate.
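A minimal example with a hypothetical `Point` class, showing the generated `apply`, structural equality, `copy`, `toString`, and destructuring in a match:

```scala
object CaseClassExample {
  // Fields are public vals; equals, hashCode, toString, copy, apply, and unapply are generated
  case class Point(x: Int, y: Int)

  val p1 = Point(1, 2)        // no `new` needed: the companion's apply is generated
  val p2 = Point(1, 2)
  val moved = p1.copy(x = 5)  // returns a new instance; p1 is unchanged

  // Destructuring through the generated extractor
  def describe(p: Point): String = p match {
    case Point(0, 0) => "origin"
    case Point(x, y) => s"($x, $y)"
  }
}
```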

15. What is pattern matching in Scala?

Ans:

- Pattern matching in Scala serves as a robust mechanism for deconstructing data structures and executing code based on matched patterns.

- It enables concise and expressive handling of various cases, such as matching values, types, or structures, thereby offering versatility in control flow and data manipulation.

- Additionally, pattern matching can simplify complex conditional logic, making the code easier to read and maintain while enhancing overall code clarity and robustness.
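A sketch of several pattern kinds in one match; `classify` is an illustrative name, and matching on `Any` is used only to show type patterns side by side.

```scala
object PatternMatchingExample {
  def classify(x: Any): String = x match {
    case 0               => "zero"                         // literal value
    case n: Int if n < 0 => "negative int"                 // type pattern with a guard
    case n: Int          => s"positive int $n"
    case s: String       => s"string of length ${s.length}"
    case List(a, b)      => s"two-element list: $a, $b"     // structural pattern
    case head :: _       => s"list starting with $head"     // cons pattern
    case _               => "something else"               // wildcard fallback
  }
}
```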

16. What are traits, and how are they utilized in Scala?

Ans:

- Traits in Scala resemble interfaces in other programming languages: they define object types through method signatures, but unlike classic interfaces they can also contain concrete methods and fields.

- They can be mixed into classes to provide reusable components, fostering code reuse and composition while enabling behaviors akin to multiple inheritance.
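A minimal sketch with hypothetical `Greeter` and `Logger` traits mixed into one class:

```scala
object TraitExample {
  // An abstract member plus a concrete method built on top of it
  trait Greeter {
    def name: String
    def greet: String = s"Hello, $name"
  }

  // A trait with only a concrete method
  trait Logger {
    def log(msg: String): String = s"[log] $msg"
  }

  // A class can mix in several traits with `with`; the val implements the abstract `name`
  class Service(val name: String) extends Greeter with Logger

  val svc = new Service("billing")
}
```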

17. How does Scala handle exceptions?

Ans:

Scala handles exceptions with the “try-catch-finally” construct, similar to Java, but the catch block uses pattern matching on exception types and the whole construct is an expression that produces a value. Scala also encourages functional alternatives such as “Option” and “Try” (which holds either a successful result or the exception that was thrown), making possible failures explicit in a method’s return type. This approach makes error handling more readable and maintainable than scattered exception handlers.
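A sketch contrasting both styles; `parseOrZero` and `safeDivide` are hypothetical helpers. `try`/`catch` is an expression here, and `Try` turns a thrown exception into a `Failure` value.

```scala
import scala.util.{Failure, Success, Try}

object ExceptionExample {
  // Classic try/catch: it is an expression, and catch cases are patterns on exception types
  def parseOrZero(s: String): Int =
    try s.toInt
    catch { case _: NumberFormatException => 0 }

  // Try captures a thrown exception as a Failure value instead of propagating it
  def safeDivide(a: Int, b: Int): Try[Int] = Try(a / b)

  def describe(a: Int, b: Int): String = safeDivide(a, b) match {
    case Success(v)  => s"result: $v"
    case Failure(ex) => s"failed: ${ex.getClass.getSimpleName}"
  }
}
```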

18. Explain the function of “lazy val” in Scala.

Ans:

In Scala, “lazy val” declares a value whose initialization is deferred until it is first accessed; the result is then cached, so the computation runs at most once. This is particularly useful for expensive computations or resources, because nothing is computed if the value is never accessed. Used this way, “lazy val” can improve efficiency while keeping code clean and readable.
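A small sketch; the `evaluations` counter is illustrative and exists only to make the deferred, single evaluation observable.

```scala
object LazyValExample {
  var evaluations = 0

  // Not computed when the object is initialized, only on first access
  lazy val expensive: Int = {
    evaluations += 1
    (1 to 1000).sum
  }
}
```

Reading `evaluations` before touching `expensive` gives 0; after any number of accesses it stays at 1.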

19. Describe implicit parameters in Scala.

Ans:

- Implicit parameters in Scala allow values to be passed implicitly to functions.

- They are declared by marking a parameter list with the “implicit” keyword; the compiler then automatically resolves and supplies matching implicit values from the current scope, which removes repetitive argument passing. Scala 3 expresses the same idea with “using” clauses and “given” instances.

- This feature also facilitates the implementation of type classes, enabling developers to define behavior for different types without modifying their original definitions, thus promoting code reuse and modular design.
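A sketch in Scala 2 syntax (still accepted by Scala 3, which prefers `using`/`given`); `Config`, `formatPrice`, and `Defaults` are hypothetical names.

```scala
object ImplicitParamExample {
  // Hypothetical configuration type used only for illustration
  case class Config(currency: String)

  // The second parameter list is implicit: the compiler fills it from scope
  def formatPrice(amount: BigDecimal)(implicit config: Config): String =
    s"$amount ${config.currency}"

  object Defaults {
    implicit val defaultConfig: Config = Config("EUR")
  }

  def withDefaults: String = {
    import Defaults._
    formatPrice(BigDecimal("9.99")) // Config("EUR") is supplied implicitly
  }

  // An implicit parameter can still be passed explicitly
  val explicitUsd: String = formatPrice(BigDecimal("5"))(Config("USD"))
}
```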

20. How are closures implemented in Scala?

Ans:

- Closures in Scala retain references to variables declared outside their scope, even after the enclosing scope concludes execution.

- Leveraging lexical scoping, functions maintain access to variables in their enclosing lexical scope, resulting in robust and adaptable behavior.

- This ability allows closures to create stateful functions that can capture and manipulate external variables, enabling powerful functional programming patterns and enhancing code reusability.
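A minimal sketch: the function returned by the hypothetical `makeCounter` keeps updating the local `count` after `makeCounter` has returned.

```scala
object ClosureExample {
  // makeCounter returns a function that captures the local var `count`
  def makeCounter(): () => Int = {
    var count = 0
    () => {
      count += 1 // the closure updates the captured variable
      count
    }
  }

  // A closure over a parameter: `threshold` stays available after aboveThreshold returns
  def aboveThreshold(threshold: Int): Int => Boolean = x => x > threshold

  val counterDemo: List[Int] = {
    val next = makeCounter()
    List(next(), next(), next())
  }
}
```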

21. What are the different varieties of collections available in Scala?

Ans:

Scala provides a diverse set of collection types, including lists, arrays, sets, maps, sequences, vectors, queues, stacks, and lazily evaluated streams (Stream, superseded by LazyList in Scala 2.13). Many of them come in both immutable and mutable variants, and each offers different performance characteristics for different data manipulation needs. Scala collections also share a rich set of methods for functional transformations, enabling developers to process and manipulate data efficiently.

22. Explain the differentiation between mutable and immutable collections in Scala.

Ans:

Mutable collections in Scala permit changes to their elements after creation, whereas immutable collections never change once created; operations that “modify” them return a new collection instead. Immutable collections can be shared safely between threads without synchronization and encourage functional programming practices, while mutable collections offer in-place updates that can be more efficient in some situations. This distinction lets developers choose the appropriate collection type based on an application’s needs and performance considerations.
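A short comparison using standard collection types (the values are illustrative):

```scala
import scala.collection.mutable

object CollectionsMutabilityExample {
  // Immutable (the default): "adding" returns a new collection; the original is unchanged
  val original: List[Int] = List(1, 2, 3)
  val extended: List[Int] = original :+ 4

  // Mutable: the buffer is modified in place
  val buffer: mutable.ArrayBuffer[Int] = mutable.ArrayBuffer(1, 2, 3)
  buffer += 4

  // Immutable Map: updated returns a new map
  val prices: Map[String, Int] = Map("apple" -> 1)
  val morePrices: Map[String, Int] = prices.updated("pear", 2)
}
```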

23. How can a list be instantiated in Scala?

Ans:

In Scala, a list is usually created by calling `List` (the apply method of the List companion object) with the elements in parentheses. For example:

`val myList = List(1, 2, 3, 4, 5)`

This creates a list containing the integers 1 through 5. Lists in Scala are immutable, and the element type is inferred from the contents; elements of different types are typed as a common supertype.
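Besides calling `List(...)` directly, a few other standard ways to build a list, shown for illustration:

```scala
object ListCreationExample {
  val viaApply: List[Int] = List(1, 2, 3, 4, 5)  // List.apply on the companion object
  val viaCons: List[Int] = 1 :: 2 :: 3 :: Nil    // prepend with :: onto the empty list Nil
  val viaRange: List[Int] = List.range(1, 6)     // 1 to 5 (end is exclusive)
  val viaFill: List[String] = List.fill(3)("x")  // three copies of "x"
  val empty: List[Int] = List.empty[Int]

  // Mixed element types need a common supertype; here it is given explicitly as Any
  val mixed: List[Any] = List(1, "two", 3.0)
}
```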

24. Define higher-order functions and illustrate their usage.

Ans:

- Higher-order functions in Scala are functions that take other functions as parameters or return functions as results.

- They facilitate abstraction and code reuse by allowing the behavior to be parameterized.

- For instance, functions like “map,” “flatMap,” and “filter” exemplify standard higher-order functions used for data transformation and filtering.

25. How are map, flatMap, and filter operations applied in Scala?

Ans:

- In Scala, the “map” function applies a transformation to each element of a collection, returning a new collection with the transformed elements.

- “flatMap” performs a similar transformation but flattens the resulting nested collections, while “filter” selects elements from a collection based on a predicate condition.

- Additionally, these operations are often used in a functional programming style, enabling concise and expressive manipulation of data within collections.
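An illustrative example on a small list of words:

```scala
object MapFlatMapFilterExample {
  val words: List[String] = List("scala", "is", "fun")

  val lengths: List[Int] = words.map(_.length)             // one output per input
  val chars: List[Char] = words.flatMap(_.toList)          // each word becomes a list of chars, then flattened
  val longWords: List[String] = words.filter(_.length > 2) // keep elements matching the predicate

  // The operations chain naturally
  val shoutedLong: List[String] = words.filter(_.length > 2).map(_.toUpperCase)
}
```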

26. What is the concept of currying in Scala?

Ans:

Currying in Scala involves converting a function that accepts multiple arguments into a sequence of functions, each accepting a single argument. This technique facilitates partial application of arguments and enhances the composability and flexibility of function usage. By allowing functions to be applied in stages, currying can simplify code and improve readability. Additionally, it enables more concise and expressive function definitions, making it easier to create higher-order functions and reusable components.
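In Scala, currying usually appears as methods with multiple parameter lists, and function values can be curried with `.curried`; a minimal sketch with hypothetical names:

```scala
object CurryingExample {
  // A method with two parameter lists
  def add(a: Int)(b: Int): Int = a + b

  // Partial application: supply the first list and get back an Int => Int
  val addFive: Int => Int = add(5)

  // An uncurried function value converted with .curried
  val multiply: (Int, Int) => Int = (a, b) => a * b
  val curriedMultiply: Int => Int => Int = multiply.curried
}
```

Partially applied functions such as `addFive` can then be passed straight to higher-order methods, for example `List(1, 2, 3).map(CurryingExample.addFive)`.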

27. Define tail recursion and its significance in functional programming.

Ans:

Tail recursion occurs when a recursive call is the last operation a function performs, so its result is returned directly. It matters in functional programming because a tail call can be compiled into a loop, letting iterative algorithms be written recursively without growing the call stack and risking a stack overflow. The JVM does not eliminate tail calls itself, but the Scala compiler rewrites self-recursive tail calls into loops, and annotating a method with @tailrec makes compilation fail if the recursive call is not in tail position.
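A sketch of the difference, with illustrative function names. `sum` handles a million-element list because its tail call is compiled into a loop, while `naiveSum` adds after each recursive call returns and can throw `StackOverflowError` on long lists.

```scala
import scala.annotation.tailrec

object TailRecursionExample {
  def sum(xs: List[Long]): Long = {
    // The recursive call is the last operation; @tailrec enforces this at compile time
    @tailrec
    def loop(rest: List[Long], acc: Long): Long = rest match {
      case Nil          => acc
      case head :: tail => loop(tail, acc + head)
    }
    loop(xs, 0L)
  }

  // Not tail-recursive: the addition happens after the recursive call returns
  def naiveSum(xs: List[Long]): Long = xs match {
    case Nil          => 0L
    case head :: tail => head + naiveSum(tail)
  }
}
```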

28. How can parallel collections be created in Scala?

Ans:

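- Parallel collections in Scala can be created by invoking the “par” method on an existing collection, which converts it into its parallel counterpart. Since Scala 2.13, parallel collections are distributed as the separate scala-parallel-collections module, and “par” requires `import scala.collection.parallel.CollectionConverters._`.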

- This enables concurrent processing of collection elements across multiple threads, potentially enhancing performance for parallelized operations.

29. What is the difference between foldLeft and foldRight functions in Scala?

Ans:

- Both “foldLeft” and “foldRight” are higher-order functions utilized to aggregate the elements of a collection into a single value.

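- However, they differ in the direction in which they combine elements. “foldLeft” starts from the initial value and works from left to right, while “foldRight” works from right to left, so for non-associative operations such as subtraction the two give different results.

- They also differ in stack usage. “foldLeft” can be implemented as a loop, so it is safe for large collections, whereas a naively recursive “foldRight” is not tail-recursive and can overflow the stack on very large inputs. Some standard-library collections, such as List, implement “foldRight” internally without deep recursion, but “foldLeft” remains the usual default.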
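A small sketch showing the different association order with subtraction, which is not associative (values are illustrative):

```scala
object FoldExample {
  val xs: List[Int] = List(1, 2, 3)

  // foldLeft: ((0 - 1) - 2) - 3; the accumulator is the function's FIRST argument
  val left: Int = xs.foldLeft(0)((acc, x) => acc - x)

  // foldRight: 1 - (2 - (3 - 0)); the accumulator is the function's SECOND argument
  val right: Int = xs.foldRight(0)((x, acc) => x - acc)

  // With an associative, commutative operation such as + the two agree
  val total: Int = xs.foldLeft(0)(_ + _)

  // foldRight preserves order when rebuilding a list with ::
  val copied: List[Int] = xs.foldRight(List.empty[Int])(_ :: _)
}
```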

30. Describe the concept of monads in functional programming.

Ans:

Monads in functional programming are a design pattern for sequencing computation steps while managing context such as absence, failure, or asynchrony. They offer a standard way to chain operations, providing benefits such as compositionality, error handling, and asynchronous computation. In Scala, types such as Option, Try, Either, Future, and List behave as monads through their “flatMap” and “map” operations, which for-comprehensions use to sequence computations.
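A sketch using Option as the monad; `parseInt` and `positive` are hypothetical steps that may fail, and `ratioFor` is the for-comprehension form of the explicit `flatMap`/`map` chain in `ratio`.

```scala
import scala.util.Try

object MonadExample {
  // Hypothetical steps, each of which may fail by returning None
  def parseInt(s: String): Option[Int] = Try(s.toInt).toOption
  def positive(n: Int): Option[Int] = if (n > 0) Some(n) else None

  // flatMap sequences the steps; a None anywhere short-circuits the chain
  def ratio(a: String, b: String): Option[Int] =
    parseInt(a).flatMap(x => parseInt(b).flatMap(positive).map(y => x / y))

  // The same computation as a for-comprehension (desugars to flatMap/map)
  def ratioFor(a: String, b: String): Option[Int] =
    for {
      x <- parseInt(a)
      y <- parseInt(b)
      d <- positive(y)
    } yield x / d
}
```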

31. Explain the concurrency model in Scala.

Ans:

Scala does not impose a single concurrency model. Because it runs on the JVM, it can use Java threads, locks, and the java.util.concurrent utilities directly, and its standard library adds Futures and Promises for composing asynchronous work. Libraries build higher-level models on top: Akka provides the actor model for message-passing concurrency, and ScalaSTM provides software transactional memory (STM) for shared-state concurrency. This range of options lets developers pick the abstraction that best fits an application’s scalability and responsiveness needs.

32. What are futures, and how are they employed for asynchronous programming in Scala?

Ans:

- Futures in Scala represent asynchronous computations whose result becomes available later, either as a value or as a failure.

- They run on an execution context, and their results can be transformed and combined with methods such as map and flatMap without blocking.

- Futures let developers express concurrent tasks without blocking the calling thread, improving application responsiveness.
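A minimal sketch using the standard library’s global execution context; `fetchScore` is a hypothetical stand-in for a slow call. `Await` appears only so the example can produce a plain value; production code usually composes futures instead of blocking.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object FutureExample {
  // Hypothetical slow lookup, run on the global execution context rather than the caller's thread
  def fetchScore(user: String): Future[Int] = Future {
    Thread.sleep(50) // simulate latency
    user.length * 10
  }

  // Both lookups start before they are combined, so they can run concurrently
  def totalScore(a: String, b: String): Future[Int] = {
    val fa = fetchScore(a)
    val fb = fetchScore(b)
    for { x <- fa; y <- fb } yield x + y
  }

  // Blocking is for demos and tests only
  def totalScoreBlocking(a: String, b: String): Int =
    Await.result(totalScore(a, b), 2.seconds)
}
```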

33. Describe the actor model in Scala.

Ans:

- In Scala, the actor model, most commonly provided by the Akka toolkit or its fork Apache Pekko, offers a high-level abstraction for concurrent and distributed computing.

- Actors are autonomous entities communicating exclusively through message passing.

- This model ensures state encapsulation and supports the development of scalable and resilient systems.

34. Define software transactional memory (STM) in Scala.

Ans:

Software Transactional Memory (STM), available in Scala through libraries such as ScalaSTM, is a concurrency control mechanism that lets multiple threads access shared memory safely. It groups memory accesses into atomic transactions, which prevents data races and keeps shared state consistent; conflicting transactions are retried automatically. This lets developers reason about concurrent code without the complexity of traditional locking mechanisms.

35. How does Scala manage thread synchronization?

Ans:

Because Scala runs on the JVM, it can use the standard synchronization mechanisms: the “synchronized” method available on every object, locks from java.util.concurrent.locks, and atomic variables from java.util.concurrent.atomic. Higher-level abstractions, such as futures from the standard library and actors or STM from libraries, handle much of this coordination and help ensure thread safety. These options let developers write concurrent applications more intuitively, reducing the complexity typically associated with thread management.
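A minimal sketch of lock-based synchronization using `synchronized` on a private lock object; the counter and thread counts are illustrative.

```scala
object SynchronizedExample {
  // Hypothetical shared counter guarded by the intrinsic lock of `lock`
  private val lock = new Object
  private var count = 0

  // The read-modify-write happens while holding the lock
  def increment(): Unit = lock.synchronized {
    count += 1
  }

  def current: Int = lock.synchronized(count)

  // Starts several threads that all increment the shared counter, then waits for them
  def run(threads: Int, perThread: Int): Int = {
    val workers = (1 to threads).map { _ =>
      new Thread(() => (1 to perThread).foreach(_ => increment()))
    }
    workers.foreach(_.start())
    workers.foreach(_.join())
    current
  }
}
```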

36. What is the distinction between concurrency and parallelism?

Ans:

- Concurrency involves managing multiple tasks concurrently, with their execution potentially interleaved over time.

- Parallelism, however, entails executing various tasks simultaneously across multiple processors or cores, resulting in genuine simultaneous execution.

- While concurrency focuses on structuring a program to handle multiple tasks effectively, parallelism aims to enhance performance by utilizing hardware resources more efficiently to reduce overall execution time.

37. What are parallel collections, and how are they implemented in Scala?

Ans:

- Parallel collections in Scala are specialized data structures supporting parallel execution of operations on their elements.

- They utilize multi-threading to execute operations like mapping, filtering, and reducing concurrently, thereby enhancing performance for CPU-bound tasks.

- Additionally, they automatically manage the distribution of workload across available processor cores, allowing developers to easily leverage parallelism without needing to handle low-level threading details.

38. How can a thread be created in Scala?

Ans:

Threads in Scala can be created by extending the “Thread” class and overriding its “run” method, or by passing a Runnable, which can be written as a Scala function literal, to the “Thread” constructor. Scala also offers higher-level concurrency constructs, such as futures and (through libraries like Akka) actors. These abstractions simplify concurrent applications by hiding much of the complexity of thread management and making asynchronous tasks easier to handle.
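Both approaches in one sketch; `Worker` and `runBoth` are hypothetical names, and the results are sorted because thread completion order is not deterministic.

```scala
import java.util.concurrent.ConcurrentLinkedQueue

object ThreadExample {
  // Approach 1: subclass Thread and override run
  class Worker(results: ConcurrentLinkedQueue[String]) extends Thread {
    override def run(): Unit = { results.add("subclass"); () }
  }

  def runBoth(): List[String] = {
    val results = new ConcurrentLinkedQueue[String]()
    val t1 = new Worker(results)
    // Approach 2: pass a Runnable, written here as a Scala lambda, to the Thread constructor
    val t2 = new Thread(() => { results.add("runnable"); () })
    t1.start(); t2.start()
    t1.join(); t2.join() // wait for both threads to finish
    results.toArray(Array.empty[String]).toList.sorted
  }
}
```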

39. Explain the concept of atomic operations in Scala.

Ans:

Atomic operations complete as a single, indivisible step, so other threads never observe a partially applied update. In Scala they usually come from the JDK’s java.util.concurrent.atomic classes, such as AtomicInteger and AtomicReference, which rely on compare-and-swap (CAS) instructions instead of locks to prevent race conditions and data inconsistency. By using atomic operations, developers can build thread-safe applications that maintain data integrity in concurrent environments.
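A sketch using `java.util.concurrent.atomic.AtomicInteger`; the object and method names are illustrative.

```scala
import java.util.concurrent.atomic.AtomicInteger

object AtomicExample {
  val counter = new AtomicInteger(0)

  // incrementAndGet is a single atomic read-modify-write; no lock is needed
  def hit(): Int = counter.incrementAndGet()

  // compareAndSet updates only if the current value equals the expected one
  def resetIfEquals(expected: Int): Boolean = counter.compareAndSet(expected, 0)

  def runConcurrently(threads: Int, perThread: Int): Int = {
    val workers = (1 to threads).map(_ => new Thread(() => (1 to perThread).foreach(_ => hit())))
    workers.foreach(_.start())
    workers.foreach(_.join())
    counter.get()
  }
}
```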

40. What is the role of “@volatile” in Scala?

Ans:

- Scala has no “volatile” keyword; instead, the “@volatile” annotation marks a field whose value may be changed by several threads.

- It guarantees that a write to the field is visible to subsequent reads from other threads and prevents the compiler and JVM from reordering or caching its accesses. It does not make compound operations such as count += 1 atomic, so it ensures visibility rather than full thread safety.
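A sketch of the typical use case, a stop flag read by another thread; without `@volatile`, the worker might never see the update. The names and the 20 ms pause are illustrative.

```scala
object VolatileExample {
  // @volatile makes the write in stop() visible to the worker thread
  @volatile private var running = true

  def stop(): Unit = running = false

  // Starts a worker that spins until the flag is cleared, then clears it.
  // Returns true if the worker observed the update and terminated.
  def startAndStop(): Boolean = {
    running = true
    val worker = new Thread(() => { while (running) {} })
    worker.start()
    Thread.sleep(20)
    stop()
    worker.join(2000) // wait up to 2 seconds
    !worker.isAlive
  }
}
```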

41. What do higher-kinded types represent in Scala?

Ans:

Higher-kinded types in Scala let a type parameter itself be a type constructor, written F[_], such as List or Option, rather than a concrete type like List[Int]. This allows abstraction over type constructors, so generic interfaces (for example, a mapping operation that works for List, Option, and Future alike) can be written once. This feature is particularly useful for building flexible, reusable components and libraries, promoting code modularity and expressiveness.
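A sketch of abstracting over a type constructor `F[_]`; `Container` is a hypothetical trait defined here (libraries such as Cats define related abstractions like `Functor`).

```scala
object HigherKindedExample {
  // F[_] is a type constructor parameter: F can be List, Option, and so on
  trait Container[F[_]] {
    def wrap[A](a: A): F[A]
    def map[A, B](fa: F[A])(f: A => B): F[B]
  }

  val listContainer: Container[List] = new Container[List] {
    def wrap[A](a: A): List[A] = List(a)
    def map[A, B](fa: List[A])(f: A => B): List[B] = fa.map(f)
  }

  val optionContainer: Container[Option] = new Container[Option] {
    def wrap[A](a: A): Option[A] = Some(a)
    def map[A, B](fa: Option[A])(f: A => B): Option[B] = fa.map(f)
  }

  // Written once, usable with any F that has a Container instance
  def wrapAndDouble[F[_]](c: Container[F], n: Int): F[Int] = c.map(c.wrap(n))(_ * 2)
}
```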

42. What is the concept of variance in Scala?

Ans:

Variance in Scala defines how subtyping between parameterized types relates to subtyping between their type parameters. A type parameter can be covariant (+), contravariant (-), or invariant (no annotation). If Cat is a subtype of Animal, a covariant type C[+A] makes C[Cat] a subtype of C[Animal], which suits read-only containers such as the immutable List; a contravariant type C[-A] makes C[Animal] a subtype of C[Cat], which suits consumers such as function parameters; and an invariant type C[A] leaves C[Cat] and C[Animal] unrelated.
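A sketch with hypothetical `Animal`, `Box`, `Printer`, and `Cell` types, showing which assignments each variance annotation permits:

```scala
object VarianceExample {
  class Animal { def name: String = "animal" }
  class Cat extends Animal { override def name: String = "cat" }

  // Covariant: Box[Cat] is a subtype of Box[Animal]
  final case class Box[+A](value: A)

  // Contravariant: Printer[Animal] is a subtype of Printer[Cat]
  trait Printer[-A] { def print(a: A): String }

  // Invariant: Cell[Cat] and Cell[Animal] are unrelated
  final class Cell[A](var value: A)

  val catBox: Box[Cat] = Box(new Cat)
  val animalBox: Box[Animal] = catBox // allowed by +A

  val animalPrinter: Printer[Animal] = new Printer[Animal] {
    def print(a: Animal): String = s"printing ${a.name}"
  }
  val catPrinter: Printer[Cat] = animalPrinter // allowed by -A

  // val animalCell: Cell[Animal] = new Cell[Cat](new Cat) // would not compile: Cell is invariant
}
```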

43. How does Scala address type erasure?

Ans:

- Scala compiles generics with type erasure, matching Java’s runtime representation of generics.

- Type arguments are erased during compilation, so at runtime a List[Int] and a List[String] have the same class. Some information can be carried to runtime through implicit evidence such as ClassTag (and, in Scala 2, TypeTag or the older Manifest), allowing limited runtime introspection of generic types.

- This keeps compile-time type safety, but it limits certain operations, such as checking the actual type argument of a generic value at runtime.
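A sketch of erasure and of `ClassTag` recovering the runtime class; `makeArray` and `onlyOf` are hypothetical helpers.

```scala
import scala.reflect.ClassTag

object ErasureExample {
  // Type arguments are erased: both lists have the same runtime class
  val sameRuntimeClass: Boolean = List(1, 2).getClass == List("a", "b").getClass

  // A ClassTag context bound carries the element class to runtime, which Array creation needs
  def makeArray[T: ClassTag](xs: T*): Array[T] = xs.toArray

  // A ClassTag can also act as an extractor that checks the runtime class
  def onlyOf[T](xs: List[Any])(implicit tag: ClassTag[T]): List[T] =
    xs.collect { case tag(t) => t }
}
```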

44. What are type classes, and how are they implemented in Scala?

Ans:

- Type classes in Scala serve as a design pattern for defining generic behaviors for types independently of their hierarchy.

- They are typically implemented as a trait with a type parameter (for example, Show[A]) plus implicit instances of that trait for each supported type, which the compiler resolves automatically. This enables ad-hoc polymorphism and code reuse.

- This approach allows developers to extend functionality without modifying existing types, promoting a modular design and enhancing code maintainability across different applications.
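A minimal type class sketch; `Show` is a hypothetical name defined here (not imported from a library), with instances placed in companion objects so the compiler finds them without extra imports.

```scala
object TypeClassExample {
  // The type class: a trait parameterized by the type it describes
  trait Show[A] {
    def show(a: A): String
  }

  object Show {
    implicit val intShow: Show[Int] = (a: Int) => s"Int($a)"

    // Derived instance: a List[A] can be shown whenever an A can
    implicit def listShow[A](implicit s: Show[A]): Show[List[A]] =
      (xs: List[A]) => xs.map(s.show).mkString("[", ", ", "]")
  }

  final case class User(name: String, age: Int)

  object User {
    // An instance for our own type, defined without changing the class itself
    implicit val userShow: Show[User] = (u: User) => s"${u.name} (${u.age})"
  }

  // Generic function requiring a Show instance (context bound)
  def display[A: Show](a: A): String = implicitly[Show[A]].show(a)
}
```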

45. Describe the purpose of implicits in Scala.

Ans:

In Scala, implicits serve as a powerful mechanism for automatic parameter resolution and implicit conversions. They allow developers to write code with concise syntax by enabling implicit parameter passing and type enrichment. Moreover, implicits play a crucial role in facilitating the creation of Domain-Specific Languages (DSLs), implementing type classes, and incorporating other advanced language features.
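One common use, type enrichment through an implicit class, sketched with a hypothetical `RichString` wrapper (Scala 3 offers `extension` methods for the same purpose):

```scala
object ImplicitsExample {
  // An implicit class adds methods to an existing type (String here);
  // extending AnyVal avoids allocating a wrapper object in most cases
  implicit class RichString(val self: String) extends AnyVal {
    def shout: String = self.toUpperCase + "!"
    def isPalindrome: Boolean = self == self.reverse
  }

  object Usage {
    // RichString is in scope here, so its methods appear on ordinary Strings
    val loud: String = "hello".shout
    val check: Boolean = "level".isPalindrome
  }
}
```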

46. What are macros, and how are they applied in Scala?

Ans:

Macros in Scala are compile-time metaprogramming constructs enabling developers to generate and manipulate code during compilation. They offer powerful capabilities for code generation, optimization, and domain-specific language design, thereby enhancing expressiveness and compile-time safety. By allowing the creation of reusable code patterns and reducing boilerplate, macros can significantly improve developer productivity.

47. Explain existential types in Scala.

Ans:

- Existential types in Scala represent types in which some component is unknown, for example List[_], meaning a list of some element type that is not specified (Scala 2 also allows the longer form List[T] forSome { type T }).

- They are helpful when abstracting over types whose type parameters are unknown or irrelevant, such as Java wildcard generics like Class[_].

- Additionally, existential types facilitate the creation of more flexible and reusable code by allowing functions and data structures to operate on a broader range of types while maintaining type safety.
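A sketch using the wildcard form, which works in Scala 2 and Scala 3 (Scala 3 dropped the general `forSome` syntax); the names are illustrative.

```scala
object ExistentialExample {
  // List[_] means "a List of some element type we don't know or care about"
  def describe(xs: List[_]): String = s"${xs.length} element(s)"

  // Works for any element type
  val a: String = describe(List(1, 2, 3))
  val b: String = describe(List("x"))

  // The same idea with a type parameter, usually preferred when the element type matters
  def firstAsString[T](xs: List[T]): Option[String] = xs.headOption.map(_.toString)
}
```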

48. What do abstract type members signify in Scala?

Ans:

- Abstract type members in Scala are type declarations inside traits or classes (for example, type Contents) whose concrete type is left unspecified.

- They allow subclasses to provide concrete types, thereby enabling flexibility and decoupling between traits and their implementations.

- This mechanism facilitates the creation of more generic and reusable code, as it allows for type substitution while maintaining type safety across different implementations.
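A sketch with a hypothetical `Store` trait whose element type is an abstract type member:

```scala
object AbstractTypeMemberExample {
  // Contents is declared but left abstract; each subclass fixes it
  trait Store {
    type Contents
    def items: List[Contents]
    def first: Contents = items.head
  }

  class IntStore(xs: List[Int]) extends Store {
    type Contents = Int
    def items: List[Int] = xs
  }

  class TextStore(xs: List[String]) extends Store {
    type Contents = String
    def items: List[String] = xs
  }

  // Because each subclass defines Contents, `first` has a precise static type
  private val ints = new IntStore(List(3, 4))
  private val texts = new TextStore(List("a", "b"))
  val firstInt: Int = ints.first
  val firstText: String = texts.first
}
```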

49. Define the “cake pattern” in Scala.

Ans:

The “cake pattern” in Scala is a design pattern for managing dependencies and modularizing applications through composition. Each component is defined as a trait, dependencies between components are declared with self-type annotations, and the application is assembled by mixing the traits together, which promotes flexibility, testability, and maintainability. Individual components can then be replaced or extended without affecting the rest of the system.
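A compact, hypothetical sketch of the pattern: the service component declares its dependency on the repository with a self-type, and `Wiring` assembles the concrete system.

```scala
object CakePatternExample {
  // Component 1: a repository abstraction
  trait UserRepositoryComponent {
    def userRepository: UserRepository
    trait UserRepository {
      def find(id: Int): Option[String]
    }
  }

  // Component 2 states its dependency with a self-type instead of inheritance
  trait UserServiceComponent { this: UserRepositoryComponent =>
    lazy val userService: UserService = new UserService
    class UserService {
      def greet(id: Int): String =
        userRepository.find(id).fold("unknown user")(name => s"Hello, $name")
    }
  }

  // Wiring: mix the components together and supply concrete implementations
  object Wiring extends UserServiceComponent with UserRepositoryComponent {
    val userRepository: UserRepository = new UserRepository {
      def find(id: Int): Option[String] = if (id == 1) Some("Ada") else None
    }
  }
}
```

In tests, a different object could mix in the same service component with a stub repository, which is the main reason the pattern aids testability.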

50. How does Scala manage pattern matching in conjunction with generics?

Ans:

Scala’s pattern matching works with generic types, but because type arguments are erased at runtime, a type pattern such as case xs: List[Int] can only check that the value is a List, and the compiler reports an unchecked warning. Matching on structure (constructors and extractors) works regardless of the type arguments, and an implicit ClassTag can supply the runtime class when a pattern tests against an abstract type parameter. Pattern matching on sealed traits additionally enables exhaustiveness checking, so the compiler warns when a case is missing, which improves code safety and maintainability.
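A sketch with a hypothetical sealed `Result` type: matching on its structure works for any type argument and is checked for exhaustiveness, while `sumIfInts` inspects the elements because a `List[Int]` type pattern could not be verified at runtime.

```scala
object GenericMatchExample {
  // A sealed hierarchy: the compiler knows every subtype
  sealed trait Result[+A]
  final case class Ok[A](value: A) extends Result[A]
  final case class Err(message: String) extends Result[Nothing]

  // Structural matching works for any A; dropping a case produces an exhaustiveness warning
  def render[A](r: Result[A]): String = r match {
    case Ok(v)    => s"ok: $v"
    case Err(msg) => s"error: $msg"
  }

  // Only "is it a List?" can be checked at runtime, so the elements are tested individually
  def sumIfInts(x: Any): Option[Int] = x match {
    case xs: List[_] if xs.forall(_.isInstanceOf[Int]) => Some(xs.collect { case i: Int => i }.sum)
    case _                                            => None
  }
}
```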

51. What testing frameworks are available in Scala?

Ans:

- Scala offers a variety of testing frameworks, including ScalaTest, Specs2, and ScalaCheck, tailored to meet diverse testing needs.

- These frameworks support various testing methodologies such as unit testing, property-based testing, and behavior-driven development (BDD).

- With their robust features and flexible capabilities, Scala’s testing frameworks empower developers to ensure the reliability, correctness, and quality of their code across different testing scenarios and use cases.

52. How is ScalaTest used for unit testing?

Ans:

- ScalaTest simplifies unit testing in Scala by offering a rich set of testing styles and matchers.

- Tests can be structured using styles such as FlatSpec, FunSuite, or WordSpec, and assertions can be written with expressive matchers, which makes test suites easier to read and maintain.

- ScalaTest also supports asynchronous testing and, through its ScalaCheck integration, property-based testing, helping developers test concurrent code and validate general properties of functions.

53. What is the role of SBT (Scala Build Tool)?

Ans:

SBT serves as a build tool for Scala projects, streamlining project management, dependency resolution, and build automation. It employs a Scala-based DSL for defining build configurations, ensuring high customization and suitability for different project structures and needs. Additionally, SBT supports incremental compilation, allowing developers to compile only the parts of the code that have changed, which significantly reduces build times.
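For illustration, a minimal `build.sbt` might look like the following; the project name and the Scala and ScalaTest versions are assumptions, so check current releases before reusing it.

```scala
// build.sbt: each setting is a Scala expression in sbt's DSL
ThisBuild / scalaVersion := "2.13.12" // assumed version

lazy val root = (project in file("."))
  .settings(
    name := "interview-demo", // hypothetical project name
    libraryDependencies += "org.scalatest" %% "scalatest" % "3.2.17" % Test // assumed version
  )
```

With this file in place, `sbt compile` and `sbt test` build and test the project, and `sbt ~compile` recompiles incrementally whenever a source file changes.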

54. How does SBT differ from Maven?

Ans:

While both SBT and Maven are build tools, they diverge in technology and build configuration syntax. SBT, based on Scala, utilizes a Scala-based DSL for configuration, offering greater flexibility and expressiveness tailored for Scala projects. Conversely, Maven employs XML for configuration and is predominantly geared towards Java projects. Additionally, SBT supports incremental builds, allowing for faster compilation times, which enhances developer productivity.

55. What are the benefits of Scala development with IntelliJ IDEA?

Ans:

- IntelliJ IDEA provides comprehensive Scala development support via its Scala plugin, offering features like code completion, syntax highlighting, refactoring tools, and integration with build tools like SBT.

- It also facilitates debugging and integrates seamlessly with version control systems, enhancing the development workflow.

- Furthermore, it includes support for running and testing Scala applications directly within the IDE, streamlining the development and testing processes.

56. What is the purpose of scalafmt in Scala development?

Ans:

- Scalafmt serves as a code formatting tool for Scala, ensuring consistent and standardized code style across projects.

- It automatically formats Scala code based on configurable rules, minimizing the need for manual formatting and enhancing code readability and maintainability.

- Furthermore, Scalafmt integrates seamlessly with popular IDEs and build tools, streamlining the development workflow and promoting adherence to coding standards throughout the development process.

57. How is ScalaCheck utilized for property-based testing?

Ans:

ScalaCheck is a property-based testing library that generates test cases automatically based on properties specified by developers. It enables testing across a wide range of input data, uncovering edge cases and potential bugs not covered by traditional example-based unit tests, thereby enhancing test coverage and robustness. Additionally, it promotes a more formal approach to testing by allowing developers to express the intended behavior of their code as properties, leading to clearer and more maintainable tests.

58. What is the purpose of sbt-assembly in Scala?

Ans:

sbt-assembly is an SBT plugin employed for creating fat JARs (JAR files containing all dependencies) for Scala projects. It simplifies deployment by packaging the project along with its dependencies into a single executable JAR file, making the distribution and execution of Scala applications more manageable. Additionally, sbt-assembly supports merging resource files and managing conflicting dependencies, ensuring a smoother build process.

59. How can Scala applications be debugged?

Ans:

- Scala applications can be debugged using standard debugging techniques supported by IDEs like IntelliJ IDEA and Eclipse.

- Developers can set breakpoints, step through code execution, and inspect variables to find and fix problems quickly.

- Additionally, logging frameworks such as Logback and SLF4J can be integrated to provide detailed runtime information, helping developers trace issues that may not be easily identifiable during standard debugging sessions.

60. What profiling tools are available for Scala applications?

Ans:

- Profiling tools such as YourKit, VisualVM, and JProfiler analyze the performance of Scala applications.

- These tools offer insights into memory usage, CPU utilization, and execution hotspots, assisting developers in optimizing their code for better performance and resource efficiency.

- Additionally, they provide real-time monitoring capabilities, allowing developers to track application performance during runtime and identify potential bottlenecks or memory leaks that could affect overall application stability.

61. What are the web framework options available for Scala?

Ans:

- Scala provides a range of web frameworks, including Play Framework, Akka HTTP, Finch, and Scalatra.

- Each caters to diverse web development requirements and offers varying levels of abstraction and functionality.

- Several of them, notably Play and Akka HTTP, follow reactive, non-blocking principles, which helps applications stay responsive and resilient when handling many concurrent users and real-time data streams.

62. What is an overview of Play Framework architecture?

Ans:

The Play Framework uses a reactive, non-blocking architecture built on Akka (Apache Pekko in Play 3), with Akka HTTP (Pekko HTTP in Play 3) as its default server backend and Netty available as an alternative. Controller actions can return futures, so requests are handled asynchronously, and Play favors a stateless, RESTful approach with an emphasis on modularity and developer productivity. It supports WebSockets out of the box and, depending on the server backend and configuration, HTTP/2. Play also provides both Scala and Java APIs, making it a versatile choice for modern web applications.

63. How is routing managed in Play Framework?

Ans:

The Play Framework manages routing through its “routes” file (conf/routes), whose straightforward syntax maps HTTP requests to specific controller actions. Each entry combines an HTTP method, a URL pattern (which may contain dynamic parts such as :id), and the controller method to call. Play compiles this file, so a route that points to a missing action is reported at compile time rather than at runtime. This keeps request handling explicit and the application's URL structure easy to review.
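The routes file itself is not Scala, but the idea it expresses (match an HTTP method and URL pattern, extract path parameters, and dispatch to a controller action) can be sketched in plain Scala 2.13+ with pattern matching. The `Request` type, the handler names, and the `/clients` URL layout below are hypothetical and are not Play's API; in an actual Play routes file, the second case would be written roughly as `GET /clients/:id controllers.Clients.show(id: Long)`.

```scala
// Hypothetical router illustrating what a routes entry does
// (HTTP method + URL pattern -> controller action). This is NOT Play's API.
object MiniRouter {
  final case class Request(method: String, path: String)

  // Hypothetical "controller actions" that return a response body.
  def listClients(): String = "all clients"
  def showClient(id: Long): String = s"client $id"
  def createClient(): String = "client created"

  // Extractor that binds a path segment as a Long, similar in spirit to `id: Long`.
  object LongSeg {
    def unapply(s: String): Option[Long] = s.toLongOption
  }

  def route(req: Request): Option[String] = {
    // "/clients/42" becomes List("clients", "42") after dropping empty segments.
    val segments = req.path.split("/").toList.filter(_.nonEmpty)
    (req.method, segments) match {
      case ("GET", List("clients"))              => Some(listClients())
      case ("GET", List("clients", LongSeg(id))) => Some(showClient(id))
      case ("POST", List("clients"))             => Some(createClient())
      case _                                     => None // no route matched
    }
  }

  def main(args: Array[String]): Unit = {
    println(route(Request("GET", "/clients/42")))    // Some(client 42)
    println(route(Request("DELETE", "/clients/42"))) // None
  }
}
```

Play generates comparable dispatch code from the routes file at build time, which is why routing mistakes surface as compile errors.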

64. What is Akka HTTP, and how does it differ from Play Framework?

Ans:

- Akka HTTP is a standalone toolkit for building reactive, HTTP-based systems; it implements a full server- and client-side HTTP stack on top of Akka Actors and Akka Streams.

- Unlike Play Framework, which is a full-featured web framework, Akka HTTP is a more general toolkit that offers lower-level APIs for handling HTTP requests and responses.

- This approach prioritizes flexibility and performance over high-level abstractions, letting developers build efficient, tailored services for scalable and resilient web applications.

65. How can WebSockets be integrated with Akka HTTP?

Ans:

- Integrating WebSockets with Akka HTTP involves the “handleWebSocketMessages” directive, which takes an Akka Streams Flow[Message, Message, Any] that defines how incoming WebSocket messages are processed.

- Because the handler is a stream flow, developers can transform messages directly in the stream or connect it to Akka actors that manage client connections, handle messages, and push updates.

- Akka Streams provides backpressure and flow control, so message flow is regulated and neither the client nor the server is overwhelmed under high load.

66. How does Scala integrate with Apache Spark?

Ans:

Scala serves as the primary language for Apache Spark, offering a robust API and expressive syntax for developing Spark applications. Its functional programming features, such as higher-order functions and immutable data structures, align well with Spark’s distributed computing paradigm, enabling developers to write concise and efficient code. Additionally, Scala’s strong static typing enhances type safety, reducing the likelihood of runtime errors in Spark applications.
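To illustrate the point about higher-order functions, the word count below uses only Scala's standard collections (Scala 2.13+). Spark's RDD API exposes operations of the same shape, so a Spark version would read roughly `lines.flatMap(...).map(w => (w, 1)).reduceByKey(_ + _)`. This is a local sketch, not Spark code: `groupMapReduce` stands in for Spark's `reduceByKey`, and the sample input is made up.

```scala
// Local, Spark-free word count in the functional style that Spark's RDD API mirrors.
object LocalWordCount {
  // Count word occurrences using immutable data and higher-order functions.
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.toLowerCase.split("\\W+"))    // split each line into words
      .filter(_.nonEmpty)                      // drop empty tokens left by leading punctuation
      .groupMapReduce(identity)(_ => 1)(_ + _) // like map(w => (w, 1)).reduceByKey(_ + _)

  def main(args: Array[String]): Unit = {
    val counts = wordCount(Seq("To be or not to be", "Be quick"))
    println(counts("be")) // 3
  }
}
```

Because each step returns a new immutable value and the functions passed in have no side effects, Spark can split the same pipeline across partitions and combine the partial results safely.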

67. What is Scala’s role in Apache Flink?

Ans:

- In Apache Flink, Scala has long been one of the supported languages for application development, alongside Java.

- Scala's functional programming features and concise syntax let developers express complex data processing pipelines compactly.

- Additionally, Scala's type system catches many errors at compile time rather than at runtime.

- Note, however, that Flink deprecated its dedicated Scala APIs in version 1.17; Scala applications can still use Flink's Java APIs.

68. What differentiates Spark RDDs from DataFrames/Datasets?

Ans:

Spark RDDs (Resilient Distributed Datasets) are distributed collections of objects that give fine-grained control over data processing. DataFrames and Datasets are higher-level abstractions that carry a schema (which Spark can often infer from the data source) and are designed for structured data processing and SQL-style queries. In Scala, a DataFrame is simply a Dataset of untyped Row objects, while a Dataset[T] adds compile-time types. While RDDs provide flexibility and low-level control, DataFrames and Datasets usually perform better because the Catalyst optimizer can rewrite their execution plans and the Tungsten engine can execute them efficiently.

69. How does Scala approach data serialization in Spark?

Ans:

- Spark uses Java serialization by default for Scala objects, but developers can switch to the more efficient Kryo serializer by setting “spark.serializer” to “org.apache.spark.serializer.KryoSerializer” in the Spark configuration.

- Kryo is typically faster and more compact than Java serialization, especially when the application's classes are registered in advance (for example with SparkConf's “registerKryoClasses” method).

- Its more compact output can significantly reduce the memory needed for serialized cached data and the volume of data shuffled across the network, improving overall performance and reducing latency during data processing tasks.
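Spark's default serializer relies on standard Java serialization (`java.io.ObjectOutputStream`), which every Scala case class supports automatically because case classes extend `Serializable`. The round trip below uses only JDK APIs, not Spark, to show what that default mechanism does; the `Point` class is hypothetical. The serialized bytes include a stream header and class metadata in addition to the two doubles, which is part of why a more compact serializer such as Kryo helps.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, ObjectInputStream, ObjectOutputStream}

object JavaSerializationDemo {
  // Hypothetical record type; case classes are Serializable by default.
  final case class Point(x: Double, y: Double)

  // Serialize an object to bytes with Java serialization.
  def toBytes(obj: AnyRef): Array[Byte] = {
    val buffer = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(buffer)
    try out.writeObject(obj) finally out.close() // close() also flushes
    buffer.toByteArray
  }

  // Deserialize bytes produced by toBytes.
  def fromBytes[T](bytes: Array[Byte]): T = {
    val in = new ObjectInputStream(new ByteArrayInputStream(bytes))
    try in.readObject().asInstanceOf[T] finally in.close()
  }

  def main(args: Array[String]): Unit = {
    val p = Point(1.0, 2.0)
    val bytes = toBytes(p)
    // The payload is only 16 bytes of doubles; the rest is header and class metadata.
    println(s"${bytes.length} bytes")
    println(fromBytes[Point](bytes) == p) // true
  }
}
```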

70. What are the advantages of using Scala for distributed computing?

Ans:

Scala offers several benefits for distributed computing, including concise syntax, robust functional programming constructs, and seamless integration with distributed frameworks like Apache Spark and Apache Flink. Scala’s emphasis on type safety, immutability, and parallelism aids in crafting scalable and resilient distributed applications.

71. How does Scala contribute to machine learning development?

Ans:

Scala supports machine learning development through libraries such as Apache Spark MLlib, Breeze, and Deeplearning4j (a JVM library usable from Scala). Its succinct syntax, functional programming features, and integration with distributed computing platforms make it well suited to building scalable and efficient machine-learning pipelines.

72. What is Scala’s significance within Apache Mahout?

Ans:

- Scala is central to Apache Mahout, an open-source machine-learning library, whose “Samsara” environment provides a Scala-based DSL for distributed linear algebra.

- With its concise syntax and functional programming paradigms, Scala enables the development of scalable, distributed machine-learning algorithms within the Mahout ecosystem, running on engines such as Apache Spark.

- Additionally, Scala’s strong type system and support for parallel processing allow developers to build robust, high-performance machine-learning applications that can efficiently handle large datasets and complex computations.

73. What machine learning libraries are available in Scala?

Ans:

- Scala offers a plethora of machine learning libraries, including Apache Spark MLlib, Breeze, Deeplearning4j, Smile, and Mahout.

- These libraries provide diverse functionalities, spanning distributed computing, numerical analysis, and deep learning, thereby addressing a broad spectrum of machine learning demands.

- Additionally, their seamless integration with Scala’s functional programming features enhances the ability to write expressive and concise code, making it easier to implement complex machine learning algorithms.

74. How can Scala be integrated with TensorFlow?

Ans:

Scala can work with TensorFlow, a prominent deep learning framework, through community bindings such as TensorFlow Scala, or by calling TensorFlow's official Java API from Scala. These options expose TensorFlow's deep learning capabilities to Scala code, so developers can combine Scala's expressive syntax and type safety with TensorFlow when building complex machine learning models.

75. What is Breeze, and how is it used for numerical computing in Scala?

Ans:

Breeze serves as a premier numerical computing library for Scala, offering robust support for linear algebra, numerical processing, and scientific computations. Its efficient implementation of mathematical operations renders it invaluable for machine learning tasks requiring numerical analyses, matrix manipulations, and statistical computations.

76. What ORM libraries are tailored for Scala?

Ans:

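- Scala offers several database access libraries that fill the role of ORMs (Object-Relational Mapping), including Slick, Quill, and Doobie, although none is a classic ORM: Slick describes itself as functional relational mapping, Quill generates SQL from Scala queries, and Doobie is a pure functional JDBC layer.

- These tools simplify database interaction by providing type-safe database access, either through Scala DSLs (Slick, Quill) or through functional wrappers around plain SQL (Doobie).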

- Furthermore, they enhance productivity by enabling developers to write less boilerplate code and focus on business logic, resulting in cleaner and more maintainable codebases.

77. What is the role of Slick in Scala?

Ans:

Slick, a functional relational mapping (FRM) library for Scala, streamlines database access and manipulation through a type-safe DSL. By enabling developers to define database schemas, execute queries, and compose database operations using Scala's functional programming features, Slick enhances productivity and code maintainability. Additionally, it runs database actions asynchronously (its db.run method returns a Future), allowing applications to remain responsive while performing I/O operations.

78. How is asynchronous database query execution performed in Scala?

Ans:

- Scala facilitates asynchronous execution of database queries through libraries like Slick or Quill.

- These frameworks offer asynchronous APIs, enabling non-blocking query execution and efficient resource utilization in concurrent applications.

- Furthermore, they provide a functional approach to constructing queries, allowing developers to write cleaner and more expressive code that is easier to understand and maintain.
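Libraries such as Slick return query results as a `Future` (Slick's `db.run` does), so asynchronous queries compose with `flatMap`, `map`, and for-comprehensions instead of blocking a thread per query. The sketch below uses only the standard library: `UserRepo` and `OrderRepo` are hypothetical in-memory stand-ins for database-backed repositories, and `Await` appears only in `main` so a command-line program can print the result.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

object AsyncQueries {
  implicit val ec: ExecutionContext = ExecutionContext.global

  final case class User(id: Long, name: String)
  final case class Order(userId: Long, total: BigDecimal)

  // Hypothetical repositories; a real one would return the Future produced by the database library.
  object UserRepo {
    private val users = Map(1L -> User(1L, "Ada"))
    def findById(id: Long): Future[Option[User]] = Future(users.get(id))
  }

  object OrderRepo {
    private val orders = Seq(Order(1L, BigDecimal(20)), Order(1L, BigDecimal(22)))
    def findByUser(userId: Long): Future[Seq[Order]] = Future(orders.filter(_.userId == userId))
  }

  // Chain two non-blocking "queries": the second runs only after the first completes.
  def totalSpent(userId: Long): Future[Option[(String, BigDecimal)]] =
    UserRepo.findById(userId).flatMap {
      case Some(user) =>
        OrderRepo.findByUser(user.id).map(orders => Some(user.name -> orders.map(_.total).sum))
      case None =>
        Future.successful(None)
    }

  def main(args: Array[String]): Unit = {
    // Blocking here only to print the result.
    println(Await.result(totalSpent(1L), 2.seconds)) // Some((Ada,42))
  }
}
```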

79. What are the benefits of adopting Quill for database operations?

Ans:

Quill offers several advantages for database access in Scala, including type safety, composability, and compile-time query validation. Its concise DSL streamlines query writing, minimizes boilerplate code, and prevents common runtime errors associated with hand-written SQL. Additionally, Quill supports asynchronous execution through dedicated modules, enabling non-blocking database interactions for improved performance. Because it translates queries into SQL for the configured database dialect (at compile time where possible), the same query code can target different database backends.

80. How are database migrations managed in Scala?

Ans:

Scala projects typically handle database migrations with JVM tools such as Flyway or Liquibase. These tools apply versioned schema changes defined in SQL scripts or changelog files (Flyway also supports code-based migrations written in a JVM language), keeping the schema consistent across environments. By automating the migration process, they reduce the risk of errors and facilitate collaborative development. Liquibase also supports rollbacks (Flyway offers undo migrations only in its commercial editions), enabling developers to revert to an earlier schema version when necessary.

81. What are the advantages of utilizing Cats for functional programming in Scala?

Ans:

Cats provides a comprehensive suite of functional programming abstractions and type classes, fostering the creation of expressive and composable functional code. Its lightweight nature, extensive documentation, and active community make it a preferred tool for functional programming in Scala. Additionally, Cats encourages the use of functional patterns such as monads and functors, which can significantly improve code clarity and reusability.
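The central mechanism behind Cats is the type class: an abstraction such as `Functor` is defined once and then given instances for many container types. The sketch below hand-rolls a minimal `Functor` in plain Scala to show that mechanism. Cats' own `cats.Functor` has a `map` with the same shape but adds many derived operations and laws, so treat this as an illustration rather than Cats' code.

```scala
object FunctorSketch {
  // A type class over type constructors F[_], in the style Cats uses.
  trait Functor[F[_]] {
    def map[A, B](fa: F[A])(f: A => B): F[B]
  }

  object Functor {
    // Summoner: Functor[List] returns the implicit instance in scope.
    def apply[F[_]](implicit F: Functor[F]): Functor[F] = F

    // Instances live in the companion object, so they are found automatically.
    implicit val listFunctor: Functor[List] = new Functor[List] {
      def map[A, B](fa: List[A])(f: A => B): List[B] = fa.map(f)
    }

    implicit val optionFunctor: Functor[Option] = new Functor[Option] {
      def map[A, B](fa: Option[A])(f: A => B): Option[B] = fa.map(f)
    }
  }

  // Written once, works for any F that has a Functor instance.
  def double[F[_]](fa: F[Int])(implicit F: Functor[F]): F[Int] =
    F.map(fa)(_ * 2)

  def main(args: Array[String]): Unit = {
    println(double(List(1, 2, 3))) // List(2, 4, 6)
    println(double(Option(5)))     // Some(10)
  }
}
```

The payoff is that `double` never mentions `List` or `Option`; supporting a new container only requires a new instance.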

82. What is the purpose of Monix in Scala?

Ans:

- Monix is a powerful library for asynchronous and reactive programming in Scala.

- It offers abstractions for managing asynchronous computations, handling concurrency, and constructing reactive streams, empowering developers to build high-performing and resilient applications.

- Additionally, Monix integrates well with existing Scala ecosystems and provides robust support for backpressure, enabling efficient handling of data flows and resource management in reactive systems.

83. How does Scalaz contribute to functional programming in Scala?

Ans:

- Scalaz enriches functional programming in Scala by providing a robust array of type classes, data types, and abstractions.

- It champions principles like purity, immutability, and composability, enabling developers to craft succinct, expressive, and type-safe functional code.

84. What is the objective of FS2 in Scala?

Ans:

FS2 (Functional Streams for Scala) is a streaming library for Scala that offers a purely functional, composable, and declarative approach to stream processing. It lets developers define and manipulate streams of data in a way that is both type-safe and resource-safe, making it a strong choice for building reactive and scalable applications.

85. How is ZIO harnessed for effectful programming in Scala?

Ans:

ZIO is a library for effectful programming in Scala, providing abstractions for managing side effects, asynchronous computations, and resources. It supports building purely functional, type-safe applications that are composable, testable, and referentially transparent. Its core type, ZIO[R, E, A], describes an effect that requires an environment R and may fail with an error of type E or succeed with a value of type A, so failures are tracked explicitly in type signatures.
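ZIO's core idea is that an effect is a value describing a computation, with its error type visible in the signature, and nothing runs until the effect is interpreted. The toy `Effect` type below is a hypothetical, heavily simplified illustration of that idea in plain Scala 2.13+; it is not ZIO's implementation or API, and it omits ZIO's environment parameter, concurrency, and resource handling.

```scala
object ToyEffect {
  // A description of a computation that may fail with E or succeed with A.
  // Nothing happens until `run` is called.
  final case class Effect[+E, +A](run: () => Either[E, A]) {
    def map[B](f: A => B): Effect[E, B] =
      Effect(() => run().map(f))

    def flatMap[E1 >: E, B](f: A => Effect[E1, B]): Effect[E1, B] =
      Effect(() => run().flatMap(a => f(a).run()))
  }

  object Effect {
    def succeed[A](a: => A): Effect[Nothing, A] = Effect(() => Right(a))
    def fail[E](e: E): Effect[E, Nothing] = Effect(() => Left(e))
  }

  // Hypothetical typed error: failures appear in the type, not as thrown exceptions.
  final case class ParseError(input: String)

  // Parsing is deferred inside the effect.
  def parse(s: String): Effect[ParseError, Int] =
    Effect(() => s.toIntOption.toRight(ParseError(s)))

  // Builds a program from smaller effects; no parsing happens here.
  def sumOf(a: String, b: String): Effect[ParseError, Int] =
    for {
      x <- parse(a)
      y <- parse(b)
    } yield x + y

  def main(args: Array[String]): Unit = {
    println(sumOf("20", "22").run())   // Right(42)
    println(sumOf("20", "oops").run()) // Left(ParseError(oops))
  }
}
```

Because `sumOf` only returns a description, it can be passed around, combined, or run more than once, which is what makes this style easy to test and reason about.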

86. What are the deployment strategies for Scala applications?

Ans:

Deploying Scala applications to production often involves packaging the application alongside its dependencies into executable artifacts (such as JAR files), configuring the requisite deployment environments, and utilizing deployment tools like Docker, Kubernetes, or conventional deployment scripts for orchestration and scaling. Additionally, it is crucial to implement monitoring and logging solutions to track application performance and identify potential issues.

87. How can Scala applications be horizontally scaled?

Ans:

- Horizontal scaling of Scala applications revolves around distributing application workload across multiple instances or nodes to accommodate escalating load or traffic.

- Achieving this entails deploying applications within a clustered environment, employing load balancers, and leveraging distributed computing frameworks like Apache Spark or Akka for scalable processing.
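The load-balancing step can be pictured with its simplest policy, round-robin, which hands each incoming request to the next instance in turn. The sketch below is illustrative only: the instance addresses are hypothetical, and production systems normally rely on an existing load balancer rather than hand-written code.

```scala
import java.util.concurrent.atomic.AtomicLong

object RoundRobin {
  // Thread-safe round-robin selection over a fixed set of instances.
  final class Balancer[A](instances: Vector[A]) {
    require(instances.nonEmpty, "at least one instance is required")
    private val next = new AtomicLong(0L)

    // Each call returns the next instance in order, wrapping around at the end.
    // floorMod keeps the index non-negative even if the counter overflows.
    def pick(): A = {
      val i = java.lang.Math.floorMod(next.getAndIncrement(), instances.size.toLong)
      instances(i.toInt)
    }
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical instance addresses.
    val lb = new Balancer(Vector("10.0.0.1:9000", "10.0.0.2:9000", "10.0.0.3:9000"))
    // Prints the three addresses in order, then 10.0.0.1:9000 again.
    (1 to 4).foreach(_ => println(lb.pick()))
  }
}
```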

88. What monitoring tools are available for Scala applications?

Ans:

Many monitoring tools support Scala applications, including Prometheus, Grafana, New Relic, and Kamon. These tools provide features for monitoring application performance, collecting metrics, detecting anomalies, and diagnosing issues, thereby ensuring the reliability and stability of Scala applications in production. Furthermore, they let developers visualize data in real time, facilitating proactive management and optimization of application performance.

89. What strategies optimize memory usage in Scala applications?

Ans:

Optimizing memory usage in Scala applications involves finding and fixing memory leaks, minimizing object allocation, choosing appropriate data structures, and tuning JVM settings such as heap size and garbage collector options. Heap profiling, garbage collection log analysis, and the profiling tools mentioned earlier help pinpoint and resolve memory-related problems.
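One concrete way to minimize object allocation is to avoid materializing intermediate collections. Chaining `map` and `filter` on a `List` builds a new list at each step, while the same pipeline over an `Iterator` processes elements one at a time, and a loop over a primitive `Array` also avoids boxing. The functions below are illustrative; any actual savings should be confirmed with a profiler.

```scala
object AllocationDemo {
  // Builds two intermediate Lists (after map and after filter) before summing.
  def sumOfEvenSquaresEager(xs: List[Int]): Long =
    xs.map(x => x.toLong * x).filter(_ % 2 == 0).sum

  // Streams elements through the pipeline; no intermediate collection is built.
  def sumOfEvenSquaresLazy(xs: List[Int]): Long =
    xs.iterator.map(x => x.toLong * x).filter(_ % 2 == 0).sum

  // A while loop over a primitive Array works on unboxed ints and longs.
  def sumOfEvenSquaresLoop(xs: Array[Int]): Long = {
    var total = 0L
    var i = 0
    while (i < xs.length) {
      val sq = xs(i).toLong * xs(i)
      if (sq % 2 == 0) total += sq
      i += 1
    }
    total
  }

  def main(args: Array[String]): Unit = {
    val xs = (1 to 10).toList
    println(sumOfEvenSquaresEager(xs))        // 220
    println(sumOfEvenSquaresLazy(xs))         // 220
    println(sumOfEvenSquaresLoop(xs.toArray)) // 220
  }
}
```

The eager version is often perfectly fine; the other two forms matter mainly on hot paths where profiling shows allocation pressure.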

90. What are the best practices for dependency management in Scala projects?

Ans:

Best practices for dependency management in Scala projects include:

- Using a dedicated build and dependency management tool such as sbt or Maven.

- Pinning explicit dependency versions instead of relying on dynamic version ranges.

- Limiting dependency scope, for example by declaring test-only libraries in the Test configuration.

- Using dependency injection in application code to keep modules loosely coupled.
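The first three practices map directly onto sbt settings. The fragment below is a sketch of a `build.sbt`; the libraries and version numbers are examples only, so check each project's current releases before copying them.

```scala
// build.sbt (example fragment; library choices and versions are illustrative)
ThisBuild / scalaVersion := "2.13.12"

libraryDependencies ++= Seq(
  // Explicit, pinned version rather than a dynamic revision such as "latest.release".
  "org.typelevel" %% "cats-core" % "2.10.0",
  // Test-only dependency: the Test configuration keeps it off the runtime classpath.
  "org.scalatest" %% "scalatest" % "3.2.17" % Test
)
```

The `%%` operator appends the Scala binary version to the artifact name, so sbt resolves the build of each library that matches the project's Scala version.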