If you are preparing for Mahout Interview, then you are at the right place. Today, we will cover some mostly asked Mahout Interview Questions, which will boost your confidence. The Mahout course learning Tools for use on analyzing Big-data, how to setup Apache mahout cluster, History of Mahout, etc. Therefore, Mahout professionals need to encounter interview questions on Mahout for different enterprise Mahout job roles. The following discussion offers an overview of different categories of interview questions related to Mahout to help aspiring enterprise Mahout Professionals.

1. What is Apache Mahout, and how does it fit into the Apache Hadoop ecosystem?

Ans:

Apache Mahout is an open-source machine learning library that runs on top of the Apache Hadoop distributed computing framework. It’s designed to efficiently implement scalable and distributed machine learning algorithms. Mahout enables the processing of large datasets in parallel by leveraging the power of Hadoop’s distributed file system (HDFS) and MapReduce paradigm. This integration allows for the effective utilization of distributed computing resources to perform machine learning tasks on massive datasets.

2. Explain the collaborative filtering algorithm in Apache Mahout and provide an example scenario where it might be used.

Ans:

Collaborative filtering in Mahout is a technique used to make automatic predictions about the preferences of a user by collecting preferences from many users (collaborating). For example, in a movie recommendation system, if user A and user B have similar preferences for movies, and user A likes a movie that user B has already seen but not user A, the system can recommend that movie to user A based on the collaborative filtering algorithm.

3. How does Mahout handle item-based recommendation, and what are the advantages of this approach?

Ans:

- Mahout’s item-based recommendation involves calculating item similarities based on user preferences.It suggests products based on what a user has already expressed interest in.

- The advantages of item-based recommendation include scalability, as the model size depends on the number of items rather than users, and it often performs well in scenarios where user preferences change less frequently than item characteristics.

4. Explain the concept of matrix factorization in the context of Mahout. Provide a scenario where matrix factorization is beneficial.

Ans:

Matrix factorization in Mahout involves decomposing a user-item interaction matrix into two lower-dimensional matrices. This technique is often used in collaborative filtering to discover latent factors representing user and item preferences. In a movie recommendation system, matrix factorization helps identify underlying features such as genres, allowing for more accurate and personalized recommendations based on user preferences.

5. Describe the difference between content-based and collaborative filtering in Mahout. Provide an example scenario for each.

Ans:

| Content-Based Filtering | Collaborative Filtering |

|---|---|

| It recommends items to a user based on the features of items and the preferences expressed by the user for those features. For instance, recommending articles based on a user’s past reading history. | It recommends items based on the preferences of other users. For example, suggesting movies based on the preferences of users who have similar movie-watching behavior. |

6. How does Mahout handle the issue of data sparsity in collaborative filtering?

Ans:

Mahout addresses data sparsity in collaborative filtering by employing techniques such as matrix factorization. By decomposing the user-item interaction matrix into lower-dimensional matrices, Mahout can identify latent factors that capture underlying patterns in sparse data. This allows for more accurate recommendations even when users have rated or interacted with only a small subset of items.

7. Explain the role of the “similarity measure” in Mahout’s recommendation algorithms. Provide an example of a similarity measure and its application.

Ans:

The similarity measure in Mahout determines how similar two items or users are. An example is the Pearson correlation coefficient, which measures the linear correlation between two variables. In a recommendation system, this measure can be used to assess how closely the preferences of two users align. A higher Pearson correlation coefficient suggests a stronger similarity, influencing the recommendation of items.

8. Discuss the use of Mahout in large-scale data processing and its advantages in a distributed computing environment.

Ans:

Mahout leverages Apache Hadoop for large-scale data processing. Its algorithms are designed to run in a distributed computing environment, allowing for the efficient processing of massive datasets. The advantages include scalability, fault tolerance, and parallel processing capabilities, making Mahout suitable for handling big data and performing complex machine learning tasks on distributed computing clusters.

9. Explain how Mahout can be integrated with other Apache technologies for a comprehensive data analytics solution.

Ans:

Mahout can be integrated with Apache technologies such as Hadoop, Spark, and Flink to create comprehensive data analytics solutions. For example, Mahout’s machine learning algorithms can be executed on a Hadoop cluster using MapReduce, or on Apache Spark for in-memory processing. This integration allows organizations to leverage the strengths of different Apache projects to build robust and scalable data analytics pipelines.

10. Discuss the challenges and considerations when deploying Mahout-based models in a production environment.

Ans:

Deploying Mahout-based models in production requires addressing challenges such as model maintenance, real-time processing, and scalability. Considerations include choosing the right algorithm, optimizing parameters, and ensuring integration with existing systems. Additionally, monitoring and updating models to adapt to evolving data patterns is crucial for maintaining the effectiveness of Mahout-based solutions in a production environment. Regular evaluation and testing are essential to ensure the continued accuracy and relevance of deployed models.

11. Describe the difference between user-based and item-based collaborative filtering in Mahout. Give an example of a situation in which you would pick one over the other.

Ans:

- User-Based Collaborative Filtering: Makes suggestions for products based on the tastes of users who share the same interests as the target user. This approach is effective when user preferences are stable over time.

- Item-Based Collaborative Filtering: Recommends items similar to those the user has already expressed interest in. This is beneficial when item characteristics remain relatively constant. For instance, in a music streaming service, user-based collaborative filtering may be preferred if users have consistent music preferences, while item-based collaborative filtering might be more suitable for recommending similar songs.

12. Explain the concept of implicit feedback and how Mahout addresses it in collaborative filtering. Provide an example scenario.

Ans:

Implicit feedback refers to user interactions that are not explicitly provided, such as clicks, views, or purchase history. Mahout can handle implicit feedback by incorporating user behavior into collaborative filtering models. For instance, in an e-commerce platform, if a user frequently views a particular category of products without making a purchase, Mahout can use implicit feedback to recommend similar items within that category based on user behavior.

13. How does Mahout handle the cold-start problem in recommendation systems, and what strategies can be employed to mitigate this issue?

Ans:

- The cold-start problem occurs when a recommendation system lacks sufficient data about new users or items. Mahout addresses this by combining content-based and collaborative filtering approaches.

- For example, when dealing with a new user, Mahout may initially provide recommendations based on item characteristics (content-based) and gradually incorporate collaborative filtering as the user interacts more with the system. This hybrid approach helps mitigate the cold-start problem by leveraging available data and improving recommendations over time.

14. Discuss the role of Apache Mahout in the context of machine learning model evaluation and validation.

Ans:

Mahout provides tools for model evaluation and validation, crucial steps in building effective machine learning models. It includes metrics such as Mean Squared Error (MSE) and Root Mean Squared Error (RMSE) to assess the performance of recommendation models. Additionally, Mahout facilitates cross-validation techniques to ensure the robustness of models by partitioning the dataset into training and testing sets. These evaluation tools help data scientists fine-tune parameters and optimize models for better predictive accuracy.

15. Explain the significance of the “recommendation context” in Mahout’s recommendation algorithms. Provide an example scenario where the recommendation context is important.

Ans:

The recommendation context in Mahout refers to additional information, such as time, location, or user behavior, that can influence the recommendation process. For example, in a retail setting, the context could be the time of day. Mahout allows for incorporating context-aware recommendations, ensuring that recommendations are more relevant based on specific conditions. This is crucial in scenarios where user preferences vary depending on contextual factors, such as recommending different products during holidays or weekends.

16. How does Mahout handle the scalability of recommendation algorithms in the context of growing datasets?

Ans:

- Mahout employs distributed computing capabilities, particularly through integration with Apache Hadoop and Apache Spark, to handle the scalability of recommendation algorithms. As the dataset grows, Mahout’s algorithms can efficiently scale across multiple nodes in a cluster, distributing the computational workload.

- This scalability ensures that Mahout can process and generate recommendations for large and ever-expanding datasets, making it suitable for enterprise-level applications with massive amounts of user and item interactions.

17. Discuss the role of Mahout in building real-time recommendation systems. Provide an example scenario where real-time recommendations are critical.

Ans:

Mahout supports the development of real-time recommendation systems through integration with streaming frameworks like Apache Flink. Real-time recommendations are crucial in scenarios like online retail, where user preferences may change rapidly. Mahout’s ability to process streaming data in real-time enables the generation of up-to-the-moment recommendations based on the latest user interactions, ensuring a more dynamic and responsive user experience.

18. Explain the process of model training and updating in Mahout’s recommendation algorithms.

Ans:

Mahout’s model training involves using historical data to create initial collaborative filtering models. These models can be updated over time to adapt to changing user preferences and behavior. The updating process typically involves retraining the model with new data. For instance, in a news recommendation system, Mahout may periodically update the model to consider the most recent articles read by users, ensuring that recommendations stay relevant and accurate.

19. Discuss the role of Mahout in handling diversity and serendipity in recommendation systems. Provide an example scenario.

Ans:

- Mahout addresses diversity and serendipity by incorporating techniques that ensure recommendations go beyond the user’s immediate preferences.

- For instance, in a music streaming service, Mahout may introduce novel songs that are not directly aligned with the user’s existing preferences but have characteristics similar to those the user enjoys. This promotes serendipitous discoveries and enhances the diversity of recommendations, creating a more engaging and satisfying user experience.

20. How does Mahout contribute to the interpretability of recommendation models, and why is interpretability important in machine learning?

Ans:

Mahout allows users to interpret recommendation models by providing insights into the factors influencing recommendations. Interpretability is crucial in machine learning as it helps users understand why certain recommendations are made. For example, in an e-commerce platform, Mahout might provide explanations such as “this item is recommended because it’s frequently purchased together with items in your shopping history.” This transparency builds trust with users and enables businesses to make informed decisions about refining their recommendation strategies.

21. Explain the role of Mahout in handling temporal dynamics in recommendation systems. Provide an example scenario where temporal dynamics play a significant role.

Ans:

Mahout can incorporate temporal dynamics by considering the time of user interactions when making recommendations. For instance, in a news recommendation system, Mahout may prioritize recent articles over older ones to ensure that users receive up-to-date and relevant news. Temporal dynamics are crucial in scenarios where user preferences evolve with time, and Mahout’s ability to factor in time stamps enhances the accuracy and relevance of recommendations.

22. Describe the challenges and strategies involved in handling imbalanced datasets in Mahout’s machine learning models.

Ans:

- Imbalanced datasets can affect the performance of machine learning models. In Mahout, strategies to handle imbalanced datasets include adjusting class weights, oversampling the minority class, or using different evaluation metrics like precision and recall.

- For example, in a fraud detection system, where fraudulent transactions are rare compared to legitimate ones, Mahout may employ techniques to ensure the model effectively identifies and addresses imbalances, improving the accuracy of predictions.

23. Discuss the advantages and limitations of Mahout’s support for deep learning in recommendation systems.

Ans:

Mahout provides support for deep learning through its Samsara module. The advantages include the ability to model complex relationships in data. However, limitations may arise in terms of computational requirements and the need for substantial labeled data. In a scenario where the recommendation system requires understanding intricate patterns in user behavior, Mahout’s deep learning capabilities can be beneficial, but careful consideration of computational resources and data availability is essential.

24. Explain the role of Mahout’s clustering algorithms in recommendation systems. Provide an example scenario where clustering can enhance recommendations.

Ans:

Mahout’s clustering algorithms, such as k-means, can group users or items with similar characteristics. In a movie recommendation system, clustering could be used to identify groups of users with similar taste preferences. This information can then be leveraged to recommend movies based on the preferences of users within the same cluster. Clustering enhances recommendations by capturing underlying patterns and enabling a more personalized approach, particularly in scenarios with diverse user preferences.

25. Discuss the impact of data preprocessing on the performance of Mahout’s recommendation algorithms. Provide examples of common preprocessing techniques.

Ans:

Data preprocessing is crucial for optimizing Mahout’s recommendation algorithms. Techniques such as data cleaning, handling missing values, and normalizing data can significantly impact model performance. For instance, in an e-commerce platform, preprocessing may involve handling incomplete user profiles or normalizing user ratings to a consistent scale. Effective preprocessing ensures that Mahout’s algorithms receive high-quality input data, leading to more accurate and reliable recommendations.

26. Explain the role of Mahout in building hybrid recommendation systems. Provide an example scenario where a hybrid approach is beneficial.

Ans:

- Mahout supports hybrid recommendation systems that combine collaborative filtering and content-based filtering. In a music streaming service, a hybrid approach might involve recommending songs based on both user preferences and the genre or artist of the songs.

- This hybridization allows Mahout to leverage the strengths of multiple recommendation techniques, providing more robust and accurate suggestions, especially in scenarios where individual approaches may have limitations.

27. Discuss the concept of cross-domain recommendation and how Mahout facilitates recommendations across different domains. Provide a scenario where cross-domain recommendations are valuable.

Ans:

- Cross-domain recommendation involves making recommendations based on user behavior in one domain to improve recommendations in another domain. Mahout facilitates cross-domain recommendations through its ability to transfer knowledge between domains.

- For example, in an e-commerce platform, Mahout might use user preferences in the clothing domain to enhance recommendations in the electronics domain, recognizing that users with similar preferences may have similar interests across different product categories.

28. Explain the concept of feature engineering in Mahout’s machine learning models. Provide examples of feature engineering techniques.

Ans:

Feature engineering involves creating new features or modifying existing ones to enhance the performance of machine learning models. In Mahout, feature engineering is crucial for improving the effectiveness of recommendation algorithms. For instance, in a book recommendation system, Mahout might engineer features such as the average rating of a book, the number of times it has been reviewed, or the author’s popularity. These engineered features provide additional information to Mahout’s algorithms, improving the accuracy of recommendations.

29. Discuss the role of Mahout in handling the trade-off between accuracy and diversity in recommendation systems. Provide an example scenario where balancing accuracy and diversity is essential.

Ans:

- Mahout allows users to fine-tune recommendation models to balance accuracy and diversity. In a movie recommendation system, balancing accuracy ensures that recommended movies align closely with a user’s preferences, while diversity ensures that a variety of genres or styles is considered.

- Striking the right balance is crucial, especially in scenarios where users may appreciate recommendations that align with their preferences but also desire variety to discover new and unexpected content.

30. Explain the concept of reinforcement learning in the context of recommendation systems, and discuss how Mahout can be utilized in such scenarios.

Ans:

Reinforcement learning in recommendation systems involves continuously learning and adapting based on user interactions. Mahout’s extensibility allows for the integration of reinforcement learning techniques to optimize recommendations over time. For example, in a video streaming platform, Mahout might use reinforcement learning to adapt recommendations based on user feedback, learning from user interactions to improve the overall recommendation strategy. This adaptive approach ensures that Mahout’s recommendation models evolve with user preferences, providing more personalized and relevant suggestions.

31. Discuss the challenges and strategies involved in handling noisy data when training Mahout’s recommendation models.

Ans:

- Noisy data, such as outliers or inaccuracies in user preferences, can impact the performance of Mahout’s recommendation models. Strategies for handling noisy data include outlier detection, robust feature engineering, and the use of outlier-resistant algorithms.

- For example, in an online shopping platform, Mahout might employ techniques to identify and filter out anomalous purchase patterns, ensuring that noisy data doesn’t unduly influence the recommendation model.

32. Explain how Mahout addresses the scalability challenges associated with real-time recommendation systems. Provide an example scenario where real-time recommendations are critical.

Ans:

Mahout’s integration with Apache Flink allows for the development of real-time recommendation systems that scale efficiently. In scenarios like online retail, where user preferences may change rapidly, Mahout’s ability to process streaming data in real-time ensures that recommendations reflect the most recent interactions. This is critical for providing users with up-to-the-minute suggestions, enhancing the user experience and engagement.

33. Describe the role of Mahout in handling privacy and security concerns in recommendation systems. Provide examples of privacy-preserving techniques.

Ans:

Mahout plays a role in addressing privacy concerns by implementing techniques such as anonymization and differential privacy. For instance, in a healthcare recommendation system, Mahout may use differential privacy to protect sensitive patient information. This ensures that recommendations are made without compromising individual privacy, maintaining the confidentiality of user data in scenarios where privacy is paramount.

34. Discuss the importance of interpretability in Mahout’s recommendation models and how it contributes to user trust. Provide examples of scenarios where interpretability is crucial.

Ans:

- Interpretability in Mahout’s recommendation models is vital for user trust. For example, in a financial advisory platform, Mahout may provide explanations for investment recommendations based on factors like historical market trends or risk assessments.

- This transparency helps users understand the rationale behind recommendations, fostering trust and enabling users to make informed decisions, particularly in domains where the consequences of recommendations are significant.

35. Explain the concept of implicit feedback and its role in enhancing recommendation accuracy in Mahout. Provide examples of scenarios where implicit feedback is prevalent.

Ans:

Implicit feedback in Mahout involves user interactions that are not explicitly provided but can be inferred from behavior, such as clicks or views. In a music streaming service, implicit feedback may include the frequency of song plays or the duration of listening sessions. Mahout leverages this implicit feedback to enhance recommendation accuracy, as it provides valuable insights into user preferences, especially in scenarios where explicit feedback, like ratings, is sparse.

36. Discuss Mahout’s support for feature selection and its impact on recommendation model performance. Provide examples of scenarios where feature selection is critical.

Ans:

Mahout supports feature selection techniques to identify and use the most relevant features for recommendation models. In a product recommendation system, feature selection may involve determining which product attributes (features) most influence user preferences. By selecting the most informative features, Mahout can improve model performance and reduce computational complexity, especially in scenarios where the dataset contains numerous features, some of which may be less relevant.

37. Explain the concept of contextual bandits in Mahout and provide a scenario where contextual bandits are advantageous in recommendation systems.

Ans:

- Contextual bandits in Mahout involve making sequential decisions by considering contextual information. In an online advertising platform, contextual bandits could be used to dynamically adjust ad recommendations based on user behavior.

- For example, Mahout might adapt recommendations based on a user’s recent interactions, optimizing the balance between exploration (trying new recommendations) and exploitation (recommending known successful items), ensuring an adaptive and personalized recommendation strategy.

38. Discuss the role of cross-validation in Mahout’s model evaluation process and why it is essential. Provide examples of scenarios where cross-validation is particularly valuable.

Ans:

Cross-validation in Mahout involves splitting the dataset into multiple subsets for training and testing the model iteratively. This technique helps assess the model’s generalization performance and robustness. For instance, in a travel recommendation system, cross-validation ensures that the model consistently performs well across different user groups or regions, providing a more reliable estimate of the model’s predictive accuracy in diverse scenarios.

39. Explain the concept of multi-armed bandits in Mahout and how they can be applied in recommendation systems. Provide an example scenario.

Ans:

Multi-armed bandits in Mahout involve making decisions between multiple actions (arms) to maximize a reward. In a content recommendation system, Mahout’s multi-armed bandits could be used to dynamically allocate resources to different recommendation strategies. For instance, the system might experiment with recommending new content (exploration) while still prioritizing known popular items (exploitation), optimizing the overall recommendation strategy based on user feedback and engagement.

40. Discuss Mahout’s support for feature extraction in recommendation systems and its impact on model performance. Provide examples of scenarios where feature extraction is crucial.

Ans:

Mahout supports feature extraction techniques to identify relevant patterns in data for recommendation models. In a news recommendation system, feature extraction might involve identifying key topics or sentiment from articles. Extracted features enhance the model’s ability to capture nuanced user preferences, particularly in scenarios where the dataset contains complex and high-dimensional data, improving the overall performance of Mahout’s recommendation algorithms.

41. You’re building a product recommendation engine for an e-commerce platform. How would you leverage Mahout for this task?

Ans:

- Utilize collaborative filtering algorithms like item-based CF or matrix factorization to analyze user purchase history and identify similar items.

- Train recommendation models on historical data, considering both explicit (ratings) and implicit (purchases) feedback.

- Employ online learning techniques to continuously update models based on real-time user interactions.

- Integrate the recommendation engine seamlessly into the user interface for personalized product suggestions.

42. Explain how Mahout can handle sparsity in user-item interaction matrices, and why sparsity is a common challenge in recommendation systems.

Ans:

Mahout addresses sparsity by employing matrix factorization techniques. In a music streaming service, the user-item interaction matrix can be sparse as users often interact with only a fraction of available songs. Matrix factorization helps Mahout fill in missing values by identifying latent factors, capturing underlying patterns even in sparse data. This is essential in recommendation systems where users typically engage with a small subset of the entire catalog.

43. A streaming service wants to personalize music recommendations based on user listening patterns. Which Mahout algorithms would you consider and why?

Ans:

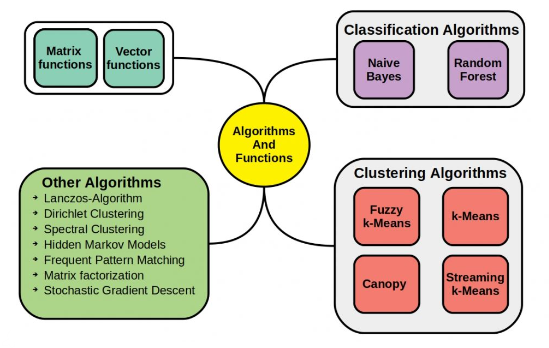

- Opt for streaming k-means clustering to dynamically group users with similar listening preferences.

- Utilize item-to-item co-occurrence analysis to discover songs frequently listened to together.

- Implement stochastic gradient descent (SGD) for online model training based on new listening data.

- Consider hybrid approaches combining content-based filtering with collaborative filtering for richer recommendations.

44. Discuss Mahout’s support for hyperparameter tuning and its importance in optimizing recommendation models. Provide examples of hyperparameters in Mahout.

Ans:

Hyperparameter tuning in Mahout involves optimizing parameters to improve model performance. For example, in a movie recommendation system, hyperparameters could include the number of latent factors or the regularization term. Mahout provides tools for systematic hyperparameter tuning, allowing data scientists to experiment with different configurations and select the optimal set for achieving better model accuracy and generalization.

45. You’re tasked with identifying fraudulent transactions in a large financial dataset. How can Mahout be applied for this scenario?

Ans:

- Leverage anomaly detection algorithms like one-class Support Vector Machines (SVM) to identify outlier transactions deviating from normal patterns.

- Implement frequent itemset mining to discover unusual purchase sequences potentially associated with fraud.

- Utilize classification algorithms like Naive Bayes or Random Forest to build a model predicting fraudulent activity based on transaction features.

- Continuously monitor model performance and retrain as needed to adapt to evolving fraud patterns.

46. Explain the role of Mahout in handling outliers and anomalies in user behavior data when building recommendation models. Provide examples of scenarios where outlier detection is crucial.

Ans:

Outliers in user behavior, such as extreme ratings or unusual purchase patterns, can impact the robustness of recommendation models. Mahout may use outlier detection techniques to identify and handle such anomalies. In an e-commerce platform, detecting outliers in purchase amounts is crucial to ensure that extreme transactions don’t disproportionately influence the recommendation model, leading to more balanced and reliable suggestions.

47. A social media platform wants to automatically cluster its users based on their interests and activities. Which Mahout algorithms would you recommend and how would you evaluate their effectiveness?

Ans:

- Implement k-means clustering for initial user grouping based on features like user engagement, followed by spectral clustering for finer community discovery.

- Evaluate cluster cohesion by measuring intra-cluster similarity and inter-cluster dissimilarity.

- Compare clustering results with ground truth data if available, or analyze user interactions within clusters to assess their meaningfulness.

- Monitor cluster stability over time and adjust algorithms or parameters as needed.

48. Discuss Mahout’s support for handling contextual information in recommendation systems and provide examples of scenarios where context-aware recommendations are valuable.

Ans:

Mahout supports contextual information to enhance recommendations. For instance, in a weather app providing activity recommendations, Mahout could consider weather conditions as contextual information. This allows the system to suggest indoor activities on rainy days and outdoor activities when the weather is sunny, demonstrating the value of context-aware recommendations in improving user satisfaction and relevance.

49. You’re working on a natural language processing task involving document classification. How can Mahout be used in this context?

Ans:

- Utilize feature vectors representing word frequencies or topic distributions in documents.

- Implement multinomial Naive Bayes or Random Forest classifiers to assign documents to predefined categories.

- Evaluate model performance metrics like accuracy, precision, and recall.

- Employ dimensionality reduction techniques like Principal Component Analysis (PCA) to improve model efficiency with high-dimensional feature vectors.

50. Explain the role of Mahout in addressing the “gray sheep” problem in collaborative filtering and provide an example scenario.

Ans:

The “gray sheep” problem occurs when a user’s preferences do not align well with any other users, making collaborative filtering challenging. Mahout can handle this by employing hybrid models that combine collaborative and content-based filtering. In a niche hobby community, a user with unique preferences might be considered a “gray sheep.” Mahout’s hybrid approach ensures that recommendations are based not only on similar users but also on item characteristics, mitigating the impact of the “gray sheep” problem.

51. A news website wants to personalize content recommendations for each user. How would you design a recommendation system using Mahout, considering both news freshness and user preferences?

Ans:

- Implement time-aware collaborative filtering algorithms that prioritize recent articles while leveraging user history.

- Utilize content-based filtering based on article topics and keywords to personalize recommendations further.

- Employ hybrid approaches combining collaborative and content-based filtering for balanced recommendations.

- Consider A/B testing different recommendation strategies to optimize personalization effectiveness.

52. Discuss Mahout’s support for cross-domain recommendations and provide an example scenario where recommendations across different domains are valuable.

Ans:

Mahout facilitates cross-domain recommendations by transferring knowledge between domains. In a streaming service, where users have distinct preferences for both music and movies, Mahout can use cross-domain recommendations. If a user has a well-established music preference, Mahout may leverage that knowledge to recommend movies with similar soundtracks, ensuring a more comprehensive and personalized recommendation experience across different content domains.

53. A healthcare provider wants to analyze patient data to identify patients at risk of certain diseases. How can Mahout be used for predictive analytics in this context?

Ans:

- Utilize linear regression or logistic regression models to predict disease risk based on patient demographics, medical history, and other relevant features.

- Implement anomaly detection algorithms to identify patients with unusual medical readings potentially indicating early disease stages.

- Employ techniques like feature selection and regularization to improve model accuracy and avoid overfitting.

- Continuously monitor model performance and retrain with updated data to ensure its relevance.

54. Explain the impact of data distribution on the performance of Mahout’s recommendation algorithms. Provide examples of scenarios where skewed data distribution is common.

Ans:

Data distribution can affect the performance of recommendation models. In an e-commerce platform, some products may be extremely popular, leading to a skewed distribution of user interactions. Mahout’s algorithms need to handle such scenarios by considering techniques like adjusting class weights or using evaluation metrics that are robust to imbalanced data. This ensures that recommendations are not disproportionately influenced by the popularity of a few items.

55. A travel website wants to recommend hotels to users based on their budget, travel preferences, and location. How would you integrate Mahout into their recommendation engine?

Ans:

- Utilize geospatial clustering algorithms to group hotels based on their location and proximity to specific user interests.

- Implement price-aware collaborative filtering algorithms to recommend hotels within user budget constraints.

- Integrate content-based filtering based on hotel amenities and descriptions to personalize recommendations further.

- Consider a multi-step approach where users initially select specific locations or interests, followed by personalized hotel recommendations within those criteria.

56. Discuss Mahout’s support for deep learning models and their application in recommendation systems. Provide examples of scenarios where deep learning is beneficial.

Ans:

Mahout’s Samsara module supports deep learning models for recommendation. Deep learning is beneficial in scenarios where complex relationships in user-item interactions need to be captured. For example, in a video streaming service, deep learning can model intricate patterns in user viewing history, considering factors like genres, directors, and even subtle preferences. Mahout’s integration with deep learning enables the development of more sophisticated and accurate recommendation models.

57. A marketing agency wants to segment its customer base for targeted advertising campaigns. Which Mahout algorithms would be helpful, and how would you ensure campaign effectiveness?

Ans:

- Implement k-means or fuzzy k-means clustering to segment customers based on purchasing behavior and demographics.

- Utilize association rule mining to discover purchase patterns associated with specific product categories or brands.

- Develop targeted ads based on customer segments and their likely interests identified through Mahout analysis.

- Monitor campaign performance metrics like click-through rates and conversion rates to adjust your segments and targeting strategies continuously.

58 . Explain how Mahout handles the trade-off between model accuracy and computational efficiency in recommendation systems. Provide examples of scenarios where balancing this trade-off is crucial.

Ans:

Mahout addresses the trade-off between model accuracy and computational efficiency by offering configurable parameters. In a real-time recommendation system, where low-latency responses are essential, Mahout might use a more computationally efficient algorithm, sacrificing some accuracy for faster recommendations. Conversely, in a batch processing scenario, Mahout may prioritize accuracy over computational speed, striking a balance based on the specific requirements of the recommendation application.

59. A large company wants to analyze employee sentiment from internal communication channels. How can Mahout be used to gain insights into employee morale and engagement?

Ans:

- Utilize topic modeling algorithms like Latent Dirichlet Allocation (LDA) to identify themes and topics discussed in employee communications.

- Implement sentiment analysis techniques to understand the overall sentiment of employee messages towards the company or specific topics.

- Analyze the extracted topics and sentiment trends over time to identify potential concerns or areas for improvement within the company culture.

- Integrate these insights into human resource initiatives or communication strategies to address employee morale and engagement.

60. You’re asked to optimize Mahout training jobs for performance and resource utilization. Which factors would you consider and what techniques would you implement?

Ans:

- Analyze job execution logs to identify bottlenecks and optimize data partitioning, mapper/reducer configurations, and resource allocation.

- Consider job scheduling techniques like MapReduce2’s Flow Control or YARN’s Fair Queuing to efficiently manage cluster resources.

- Explore optimization libraries like Mahout-Streaming to improve performance for large-scale datasets and streaming scenarios.

- Implement advanced data compression techniques like Snappy or GZIP to reduce network traffic and storage requirements.

61. Discuss Mahout’s role in providing personalized recommendations in scenarios with limited user interaction data. Provide examples of scenarios where user interaction data is sparse.

Ans:

Mahout can provide personalized recommendations even in scenarios with limited user interaction data by employing content-based filtering. For instance, in a new social media platform, where users have made few interactions, Mahout may initially rely on content-based recommendations based on user profiles or preferences specified during onboarding. As more user interactions accumulate, Mahout can gradually incorporate collaborative filtering to further enhance personalization.

62. A financial institution needs to personalize credit card offers based on user spending patterns and risk profiles. How would you design a Mahout-based solution with high accuracy and explainability?

Ans:

- Utilize gradient boosting algorithms like XGBoost for their accuracy and feature importance insights.

- Implement feature engineering techniques to create relevant features from transaction data, like spending categories, frequency, and average amount.

- Integrate explainable AI (XAI) libraries like SHAP or LIME to explain individual credit offer decisions, enhancing user trust and transparency.

- Consider federated learning approaches to train models on distributed user data while preserving privacy and security.

63. An online learning platform wants to recommend personalized learning paths for students based on their progress and engagement. How would you combine collaborative and content-based filtering in this scenario?

Ans:

- Implement a hybrid recommender system combining item-based collaborative filtering with content-based filtering based on course topics, difficulty levels, and prerequisite knowledge.

- Utilize matrix factorization techniques like SVD to capture latent factors representing both user preferences and course features.

- Employ dynamic weighting strategies to adjust the influence of collaborative and content-based components based on user data sparsity or the availability of content information.

- Consider cold-start and new item problems, employing content-based recommendations for cases where limited collaborative data is available.

64. Explain the role of Mahout’s preference data model in building recommendation systems. Provide examples of scenarios where preference data is essential.

Ans:

Mahout’s preference data model represents user-item interactions, where users express preferences through ratings or interactions. In a product recommendation system, user preferences are crucial for suggesting items that align with their interests. Mahout leverages this preference data to build accurate models, ensuring that recommendations are tailored to individual user tastes and preferences.

65. Discuss Mahout’s capabilities in handling contextual bandits for dynamic and adaptive recommendations. Provide examples of scenarios where contextual bandits are advantageous.

Ans:

Mahout’s support for contextual bandits enables adaptive recommendations based on contextual information. In a news recommendation system, contextual bandits could be applied to dynamically adjust article suggestions based on user behavior, improving engagement. By considering factors like recent reading history or trending topics, Mahout’s contextual bandits enhance the system’s ability to provide timely and personalized recommendations.

66.. You’re tasked with deploying a Mahout recommendation engine in production with high availability and fault tolerance. Which architectures and practices would you recommend?

Ans:

- Implement a distributed recommendation architecture using tools like Spark or Beam for scalability and elastic scaling on cloud platforms.

- Utilize ZooKeeper for service discovery and leader election to ensure high availability and automatic failover in case of node failures.

- Employ caching mechanisms like Memcached or Redis to store frequently accessed recommendations and reduce server load.

- Implement a monitoring and alerting system to track model performance, system health, and identify potential issues proactively.

67. Explain the concept of temporal dynamics in recommendation systems and how Mahout can adapt to changes over time. Provide examples of scenarios where temporal dynamics play a significant role.

Ans:

Temporal dynamics in recommendation systems involve adjusting recommendations based on changes over time, such as seasonality or evolving user preferences. In a retail platform, Mahout can adapt to temporal dynamics by considering factors like shopping trends during holidays. By incorporating time-sensitive information, Mahout ensures that recommendations stay relevant, accounting for dynamic shifts in user behavior.

68. Discuss the role of reinforcement learning in Mahout’s recommendation algorithms and how it contributes to continuous model improvement. Provide examples of scenarios where reinforcement learning is beneficial.

Ans:

Mahout’s reinforcement learning capabilities enable continuous model improvement based on user feedback. In a music streaming service, reinforcement learning could be used to adjust recommendations in real-time, learning from user interactions to refine the music suggestion strategy. This adaptive approach ensures that Mahout’s recommendation models evolve with user preferences, providing more personalized and relevant suggestions over time.

69. An e-commerce platform wants to identify churned customers and target them with personalized re-engagement offers. How would you leverage Mahout for churn prediction and customer segmentation?

Ans:

- Implement survival analysis techniques like Cox Proportional Hazards model to predict customer churn time based on purchase history and engagement metrics.

- Utilize k-means or DBSCAN clustering to segment customers based on churn risk and spending patterns.

- Develop targeted re-engagement campaigns offering personalized discounts or recommendations based on customer segments and their predicted churn probability.

- Continuously monitor campaign performance and update churn prediction models with new data for improved accuracy and effectiveness.

70. Explain Mahout’s support for cross-validation in model evaluation and validation. Provide examples of scenarios where cross-validation is crucial for assessing model performance.

Ans:

Cross-validation in Mahout involves systematically partitioning data into subsets for model evaluation. In a hotel recommendation system, cross-validation ensures that the model consistently performs well across different user segments or travel preferences. This is crucial for assessing the robustness of the model and ensuring that recommendations generalize effectively to diverse scenarios, enhancing overall reliability.

71. Discuss Mahout’s role in addressing privacy and security concerns in recommendation systems, specifically in scenarios where user data must be safeguarded.

Ans:

Mahout contributes to privacy and security in recommendation systems by implementing techniques such as anonymization or differential privacy. In a healthcare recommendation system, where patient data must be protected, Mahout’s differential privacy features ensure that recommendations are made without compromising individual privacy, maintaining the confidentiality of sensitive user information.

72. Explain how Mahout handles the “long-tail” problem in recommendation systems and provides accurate suggestions for less popular items. Provide examples of scenarios where the long-tail problem is common.

Ans:

The “long-tail” problem refers to the challenge of accurately recommending less popular or niche items. In a streaming platform with a vast content catalog, Mahout addresses this by employing hybrid models that combine collaborative filtering with content-based recommendations. This ensures that even items with fewer interactions receive accurate suggestions based on both user preferences and item characteristics.

73. Discuss Mahout’s support for feature extraction in recommendation systems and its impact on capturing relevant patterns in user behavior. Provide examples of scenarios where feature extraction is critical.

Ans:

Mahout supports feature extraction to identify relevant patterns in user behavior for recommendation models. In an online learning platform, feature extraction might involve identifying key topics from the courses a user has interacted with. Extracted features enhance Mahout’s ability to capture nuanced user preferences, especially in scenarios where users have diverse learning interests.

74. Explain the role of Mahout in handling evolving user preferences in recommendation systems. Provide examples of scenarios where user preferences may change over time.

Ans:

Mahout can handle evolving user preferences by continuously updating recommendation models based on new interactions. In a fashion e-commerce platform, where seasonal trends impact user preferences, Mahout’s adaptive approach ensures that recommendations align with changing fashion tastes. By incorporating the latest user interactions, Mahout adapts to evolving preferences, providing more relevant and timely suggestions.

75. Discuss the considerations and challenges when integrating Mahout with distributed computing frameworks for scalable recommendation systems. Provide examples of scenarios where scalable solutions are crucial.

Ans:

Integrating Mahout with distributed computing frameworks like Apache Hadoop or Apache Spark addresses scalability challenges. In a large-scale e-commerce platform, where the user and item interaction data is vast, Mahout’s ability to scale across multiple nodes in a distributed environment ensures efficient processing and generation of recommendations. Considerations include load balancing, data shuffling, and fault tolerance to handle the complexity of massive datasets.

76. A music streaming service wants to recommend new songs to users based on their emotional states inferred from listening patterns. How would you combine Mahout with emotion recognition tools to implement this?

Ans:

- Utilize Mahout’s item-to-item co-occurrence algorithms to analyze listening patterns and identify songs frequently listened to together.

- Integrate an external emotion recognition API or service to analyze audio features and infer emotional states during song playback.

- Develop a hybrid recommendation system that combines song co-occurrence with user-specific emotional preferences for personalized recommendations.

- Consider online learning techniques to continuously update model preferences based on user feedback and new listening data.

77. A company wants to predict customer lifetime value (CLTV) using transaction data. How would you approach this with Mahout and explain the potential challenges?

Ans:

- Implement survival regression models like Cox Proportional Hazards to predict the duration of customer relationships and estimate CLTV.

- Utilize feature engineering techniques to create relevant features from purchase history, such as frequency, recency, and product categories.

- Account for censored data where customers may not churn within the available observation period and employ appropriate statistical methods.

78. Explain Mahout’s support for model persistence and why it is crucial in real-world recommendation systems. Provide examples of scenarios where model persistence is essential.

Ans:

Mahout’s model persistence allows saving and reusing trained models. In a dynamic e-commerce environment where user preferences change frequently, persistent models ensure that the recommendation system can quickly adapt to evolving trends. By storing and loading models efficiently, Mahout contributes to the seamless and continuous operation of recommendation systems.

79. Discuss the role of Mahout in handling implicit feedback in recommendation systems. Provide examples of scenarios where implicit feedback is more prevalent than explicit feedback.

Ans:

Mahout excels in handling implicit feedback, such as clicks or views, where users may not explicitly rate items. In a social media platform, implicit feedback could include user engagement metrics like shares or comments. Mahout leverages this implicit feedback to infer user preferences, especially in scenarios where users are more likely to interact with content than provide explicit ratings.

80. A company wants to predict customer lifetime value (CLTV) using transaction data. How would you approach this with Mahout and explain the potential challenges?

Ans:

- Implement survival regression models like Cox Proportional Hazards to predict the duration of customer relationships and estimate CLTV.

- Utilize feature engineering techniques to create relevant features from purchase history, such as frequency, recency, and product categories.

- Account for censored data where customers may not churn within the available observation period and employ appropriate statistical methods.

- Be aware of potential biases and address them through data cleaning and model validation techniques.

81. You’re tasked with analyzing large-scale geospatial data using Mahout. How would you choose appropriate algorithms and optimize performance for distributed processing?

Ans:

- Utilize geospatial clustering algorithms like DBSCAN or KMeans with spatial distance metrics to identify location-based patterns and clusters.

- Implement MapReduce or Spark processing frameworks for efficient parallelization and scalability on distributed clusters.

- Consider geospatial libraries like GeoSpark or Mahout-Geospatial for optimized spatial data indexing and analysis.

- Utilize data partitioning techniques and efficient distance calculation algorithms to minimize network overhead and processing time.

82. Explain the concept of model explainability in Mahout and its importance in building transparent and trustworthy recommendation systems. Provide examples of scenarios where explainability is critical.

Ans:

Model explainability in Mahout provides insights into why specific recommendations are made. In a financial advisory platform, where investment decisions are consequential, explainability is crucial. Mahout can reveal that a recommendation is based on factors like historical market trends or risk assessments, fostering user trust and confidence in the recommendation system.

83. Discuss Mahout’s support for ensemble methods in recommendation systems and how they contribute to improved accuracy. Provide examples of ensemble techniques used in Mahout.

Ans:

Mahout supports ensemble methods, which combine multiple models to enhance accuracy. In a movie recommendation system, Mahout may use techniques like bagging or boosting to create an ensemble of collaborative filtering and content-based models. This combination leverages the strengths of different approaches, resulting in a more robust and accurate recommendation system.

84. A research project aims to recommend scientific articles based on researcher interests and citation networks. How would you adapt Mahout’s collaborative filtering algorithms for this domain?

Ans:

- Define articles as “items” and researchers as “users” in the collaborative filtering framework.

- Instead of traditional rating data, utilize citation relationships as implicit feedback for article preference.

- Consider incorporating semantic similarity between article topics and keywords into the recommendations to refine suggestions based on research interests.

- Adapt item-based co-occurrence algorithms to analyze citation patterns and identify related articles relevant to the researcher’s field.

85. Explain the role of Mahout’s clustering algorithms in building diverse and personalized recommendation systems. Provide examples of scenarios where clustering enhances diversity.

Ans:

Mahout’s clustering algorithms, such as k-means, group users or items with similar characteristics. In an online shopping platform, clustering can enhance diversity by identifying groups of users with distinct preferences. Mahout can then recommend items that cater to the diverse tastes within each cluster, ensuring a more personalized and varied set of suggestions.

86. Discuss Mahout’s support for feature engineering in recommendation systems and its impact on model performance. Provide examples of feature engineering techniques.

Ans:

Feature engineering in Mahout involves creating or modifying features to improve model performance. In a job recommendation system, Mahout might engineer features such as the relevance of a job to a user’s skills or the recency of the job posting. These engineered features provide additional information for Mahout’s algorithms, enhancing the accuracy and relevance of recommendations.

87. Explain the concept of hybrid recommendation systems in Mahout and provide examples of scenarios where a combination of collaborative and content-based filtering is beneficial.

Ans:

Mahout supports hybrid recommendation systems that combine collaborative and content-based filtering. In a music streaming service, a hybrid approach might involve recommending songs based on both user preferences and the genre or artist of the songs. This combination leverages the strengths of each approach, providing more accurate and diverse recommendations.

88. You’re working on a recommendation system for cold-start scenarios where new users or items have limited data. How would you leverage Mahout to address this challenge?

Ans:

- Implement content-based filtering as an alternative or fallback mechanism for cold-start situations.

- Utilize item metadata, descriptions, or content analysis to recommend new items based on similarities to other well-rated items.

- Employ collaborative filtering with matrix factorization techniques that can capture latent features from limited data and generalize to new users or items.

- Consider hybrid approaches that combine content-based and collaborative filtering to provide personalized recommendations even with limited data

89. Discuss the role of Mahout in handling cross-domain recommendations and provide examples of scenarios where recommendations across different domains are valuable.

Ans:

Mahout facilitates cross-domain recommendations by transferring knowledge between domains. In a travel recommendation system, Mahout might use user preferences in hotel choices to enhance recommendations for local attractions, recognizing that users with similar hotel preferences may have similar preferences for activities.

90. Explain Mahout’s support for model evaluation metrics and their significance in assessing recommendation system performance. Provide examples of evaluation metrics used in Mahout.

Ans:

Mahout provides various model evaluation metrics to assess recommendation system performance. In a restaurant recommendation system, metrics like precision, recall, and F1 score can be crucial for measuring the accuracy and completeness of suggestions. Mahout’s evaluation metrics help data scientists fine-tune models for optimal performance.