- What is MapReduce?

- Main features of MapReduce

- How Does MapReduce Work?

- The Map Phase

- Shuffle and Sort Phase

- The Reduce Phase

- Word Count as a MapReduce Application

- Benefits of MapReduce

- Applications of MapReduce

- Challenges with MapReduce

- Best Practices for Using MapReduce

What is MapReduce?

MapReduce is a programming model for processing large data sets in parallel across many computers. It splits a vast amount of data into smaller, independent chunks and distributes them to nodes across a cluster. Each node processes its chunk and returns intermediate results, which are then combined into the final output.

A MapReduce job consists of two basic stages: Map and Reduce.

- Map: The first stage, in which the input data is processed and converted into intermediate key-value pairs.

- Reduce: The second stage, which aggregates the intermediate key-value pairs that share a key and produces the final output.

This two-stage design lets MapReduce process big data efficiently while preserving parallelism, fault tolerance, and scalability.

- Input Data: The raw data to be processed by MapReduce. It is usually stored in a distributed file system, such as the Hadoop Distributed File System (HDFS), and divided into chunks for processing.

- Mapper: The Mapper processes input data and breaks it into intermediate key-value pairs, generating one for every meaningful piece of information. For example, in a word-counting application, the Mapper emits a (word, 1) pair for every word it reads.

- Reducer: The Reducer aggregates the intermediate data to produce the final result, typically by combining all values that share the same key. For example, a word count application sums the counts for each word, giving the total frequency of that word.

- JobTracker and TaskTracker: In classic Hadoop MapReduce, the JobTracker is the master that oversees the entire job. It schedules and coordinates tasks across the cluster, monitors their progress, and handles failures. A TaskTracker runs on each worker node and executes the tasks assigned to it.

- Output: The final output is normally written back to the distributed file system. Depending on the application, it can be stored as plain files or in a form ready for visualization or reporting.
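The Input Data and JobTracker roles described in this list can be illustrated with a small Python sketch that divides input lines into chunks and hands them out to worker nodes. It rests on simplifying assumptions: splitting by line count stands in for the block-based splits a real distributed file system uses, and the node names and round-robin policy are hypothetical (a real scheduler also weighs data locality).

```python
from typing import Dict, List


def make_splits(lines: List[str], lines_per_split: int) -> List[List[str]]:
    """Divide the input into fixed-size chunks (a stand-in for input splits).

    Assumption: real systems split by byte ranges aligned to file-system
    blocks; splitting by line count keeps this sketch simple.
    """
    if lines_per_split < 1:
        raise ValueError("lines_per_split must be positive")
    return [lines[i:i + lines_per_split] for i in range(0, len(lines), lines_per_split)]


def assign_splits(num_splits: int, nodes: List[str]) -> Dict[str, List[int]]:
    """Hypothetical scheduler: assign split indices to nodes round-robin."""
    assignment: Dict[str, List[int]] = {node: [] for node in nodes}
    for i in range(num_splits):
        assignment[nodes[i % len(nodes)]].append(i)
    return assignment


lines = ["MapReduce is", "MapReduce amazing", "is amazing"]
splits = make_splits(lines, lines_per_split=2)
print(assign_splits(len(splits), ["node-a", "node-b"]))  # {'node-a': [0], 'node-b': [1]}
```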

Map output for the input “MapReduce is amazing”:

- (“MapReduce”, 1)

- (“is”, 1)

- (“amazing”, 1)

Intermediate output from two Mappers in the word-count example:

- Mapper 1: (“MapReduce”, 1), (“is”, 1)

- Mapper 2: (“MapReduce”, 1), (“amazing”, 1)

After shuffle and sort, the values for each key are grouped together:

- (“MapReduce”, [1, 1])

- (“is”, [1])

- (“amazing”, [1])

Final Reducer output:

- (“MapReduce”, 2)

- (“is”, 1)

- (“amazing”, 1)

- Map Phase: A Mapper reads a chunk of the text and produces key-value pairs, where the key is the word and the value is 1.

- Shuffle and Sort Phase: The intermediate key-value pairs are shuffled and sorted so that all occurrences of the same word are grouped together.

- Reduce Phase: The Reducer adds up the values for each word to yield its total frequency within the document.

This simple yet powerful example shows how MapReduce can solve real-world problems involving large amounts of data.
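The three word-count phases can be traced in a minimal in-memory Python sketch. It assumes each string in `chunks` is the input of one simulated Mapper and that words are separated by whitespace; the function names are illustrative, not part of any MapReduce framework.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(chunk: str) -> List[Tuple[str, int]]:
    # Emit (word, 1) for every whitespace-separated word in the chunk.
    return [(word, 1) for word in chunk.split()]


def shuffle_and_sort(mapped: Iterable[List[Tuple[str, int]]]) -> List[Tuple[str, List[int]]]:
    # Group the values of identical keys together, then sort by key.
    groups: Dict[str, List[int]] = defaultdict(list)
    for pairs in mapped:
        for word, count in pairs:
            groups[word].append(count)
    return sorted(groups.items())


def reduce_phase(grouped: List[Tuple[str, List[int]]]) -> Dict[str, int]:
    # Sum the values for each word to get its total frequency.
    return {word: sum(counts) for word, counts in grouped}


chunks = ["MapReduce is", "MapReduce amazing"]  # one chunk per simulated Mapper
mapped = [map_phase(chunk) for chunk in chunks]
grouped = shuffle_and_sort(mapped)
print(grouped)                # [('MapReduce', [1, 1]), ('amazing', [1]), ('is', [1])]
print(reduce_phase(grouped))  # {'MapReduce': 2, 'amazing': 1, 'is': 1}
```

Python sorts uppercase letters before lowercase ones, which is why “MapReduce” comes first in the grouped output.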

- Scalability: MapReduce scales out across a cluster, making it possible to process petabytes of data spread across thousands of machines.

- Fault Tolerance: MapReduce handles node failures automatically. If a node fails, its tasks are reassigned to other nodes and rerun, so the job can still complete. Because intermediate results are saved to disk, a failure typically means rerunning a few tasks rather than the whole job, which makes MapReduce dependable enough even for mission-critical applications.

- Parallelism: Dividing a job into many smaller units enables significant parallelism, which speeds up the processing of large data sets. For example, while one Mapper is processing one chunk of data, others process other chunks in parallel, so the available resources are fully used rather than left idle.

- Simplicity: MapReduce abstracts away the complexity of distributed computing, so developers can implement parallel data-processing algorithms by writing just a map function and a reduce function.
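The fault-tolerance behavior in this list, where a failed node's task is simply rerun elsewhere, can be simulated in a few lines of Python. This is a sketch under stated assumptions: `NodeFailure`, `flaky_execute`, and the node names are hypothetical, and a real framework detects failures through missed heartbeats rather than exceptions.

```python
from typing import Callable, Dict, List


class NodeFailure(Exception):
    """Raised by a simulated worker node that crashes while running a task."""


def run_with_retries(tasks: List[str], nodes: List[str],
                     execute: Callable[[str, str], int],
                     max_attempts: int = 3) -> Dict[int, int]:
    """Run every task, moving it to another node whenever a node fails."""
    results: Dict[int, int] = {}
    for task_id, task in enumerate(tasks):
        for attempt in range(max_attempts):
            # Each retry goes to the next node in the list.
            node = nodes[(task_id + attempt) % len(nodes)]
            try:
                results[task_id] = execute(node, task)
                break
            except NodeFailure:
                continue  # re-execute the same task on another node
        else:
            raise RuntimeError(f"task {task_id} failed {max_attempts} times")
    return results


def flaky_execute(node: str, task: str) -> int:
    # Hypothetical worker: "node-b" always crashes; the others count words.
    if node == "node-b":
        raise NodeFailure(node)
    return len(task.split())


results = run_with_retries(["MapReduce is", "MapReduce amazing", "is"],
                           ["node-a", "node-b", "node-c"], flaky_execute)
print(results)  # {0: 2, 1: 2, 2: 1}
```

Task 1 is first scheduled on the failing `node-b` and transparently rerun on `node-c`, so the caller still receives a complete result.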

- Log Analysis: MapReduce is well suited to analyzing huge volumes of server logs. Organizations can process logs from web servers, application servers, and network devices to uncover patterns, user behavior, and errors.

- Web Indexing: Building a search index over data collected from the web is a classic MapReduce application, particularly for search engines like Google. MapReduce processes the crawled data and builds an index that supports efficient retrieval.

- Data Mining: MapReduce is widely used to discover patterns and trends in massive data sets, for example in recommender systems and customer analysis. Businesses use it to analyze customer behavior, perform market segmentation, and identify trends that inform decision-making.

- Machine Learning: Machine learning algorithms often need to be trained on massive data sets. Frameworks such as Apache Mahout have used MapReduce to run large-scale machine learning algorithms in distributed environments. This capability matters for applications ranging from fraud detection to image recognition, where the training data can be large and complex.
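Web indexing, one of the applications in this list, maps naturally onto MapReduce as an inverted index: the map step emits (word, document ID) pairs and the reduce step collects each word's documents. The sketch below assumes tiny in-memory "pages" with hypothetical IDs, lowercases words, and ignores punctuation; a production indexer does far more.

```python
from collections import defaultdict
from typing import Dict, Iterator, List, Tuple


def index_map(doc_id: str, text: str) -> Iterator[Tuple[str, str]]:
    # Emit (word, doc_id) once per distinct word in the document.
    for word in set(text.lower().split()):
        yield word, doc_id


def index_reduce(word: str, doc_ids: List[str]) -> Tuple[str, List[str]]:
    # The posting list for a word: the sorted, de-duplicated documents containing it.
    return word, sorted(set(doc_ids))


def build_index(docs: Dict[str, str]) -> Dict[str, List[str]]:
    grouped: Dict[str, List[str]] = defaultdict(list)
    for doc_id, text in docs.items():                # Map
        for word, d in index_map(doc_id, text):
            grouped[word].append(d)                  # Shuffle: group by word
    return dict(index_reduce(w, ids) for w, ids in sorted(grouped.items()))  # Reduce


pages = {"page1": "MapReduce is amazing", "page2": "MapReduce scales"}
print(build_index(pages)["mapreduce"])  # ['page1', 'page2']
```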

- Latency: High latency is one of MapReduce's major problems, and much of it comes from the Shuffle and Sort phase, which redistributes intermediate data across nodes. This makes MapReduce a poor fit for real-time or near-real-time processing.

- Complexity for Complex Workflows: Jobs for simple workloads are easy to write, but more complex tasks must be implemented as chains of MapReduce jobs. As the number of chained jobs grows, pipelines become harder to manage, debug, and optimize, which can lengthen development time and introduce errors.

- Iterative Processing: MapReduce is not well suited to iterative operations, such as the many machine learning algorithms that make multiple passes over the same data, because each pass runs as a separate job that reads its input from disk and writes its output back. This inefficiency makes it difficult to apply MapReduce to workloads like clustering or deep learning. In contrast, frameworks such as Apache Spark (written in Scala) keep data in memory between iterations and are designed for efficient iterative processing.

- Limited Expressiveness: While MapReduce provides a simple programming model, it is less expressive than more advanced data processing frameworks. Complex operations such as joins can be awkward to implement and optimize within the MapReduce paradigm, and workloads built on rich data structures or relational operations often perform better on those other systems.
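The iterative-processing limitation in this list is easiest to see with clustering. In the sketch below, one-dimensional k-means is written so that each iteration is one complete map/reduce job over the whole data set. The function names and sample points are illustrative assumptions; the point is that on Hadoop every call to `kmeans_job` would be a separate job re-reading `points` from disk.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def kmeans_map(point: float, centroids: List[float]) -> Tuple[int, float]:
    # Assign the point to the index of its nearest centroid.
    nearest = min(range(len(centroids)), key=lambda i: abs(point - centroids[i]))
    return nearest, point


def kmeans_reduce(points: List[float]) -> float:
    # The new centroid is the mean of the points assigned to it.
    return sum(points) / len(points)


def kmeans_job(points: List[float], centroids: List[float]) -> List[float]:
    """One full map/shuffle/reduce pass = one k-means iteration."""
    groups: Dict[int, List[float]] = defaultdict(list)
    for p in points:
        k, v = kmeans_map(p, centroids)
        groups[k].append(v)
    # Keep the old centroid if no point was assigned to it.
    return [kmeans_reduce(groups[i]) if groups[i] else centroids[i]
            for i in range(len(centroids))]


def kmeans(points: List[float], centroids: List[float], max_iters: int = 10) -> List[float]:
    for _ in range(max_iters):
        new = kmeans_job(points, centroids)  # on Hadoop: a new job, data reread from disk
        if new == centroids:
            break
        centroids = new
    return centroids


print(kmeans([1.0, 2.0, 3.0, 10.0, 11.0, 12.0], [0.0, 5.0]))  # [2.0, 11.0]
```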

- Combiner Functions: A combiner aggregates each Mapper's output locally before it is sent to the Reducers, which reduces network traffic and generally shortens execution time. For example, when computing word counts over a huge data set, a combiner sums the counts locally on each Mapper node and sends only those partial sums to the Reducers. This is safe for word count because addition is associative and commutative.

- Data Locality: Schedule tasks on the nodes that already store the data they need, to save network overhead. For example, if a data set is split across several nodes, make sure each Mapper processes a block of data stored on its own node.

- Compression: Compress the intermediate data that Mappers send to Reducers to minimize bandwidth and storage costs. For example, when working with enormous log files, compressing the intermediate output can shorten job completion time by reducing data-transfer overhead.
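The combiner best practice can be checked with a short Python sketch: it counts how many (word, count) pairs would cross the network with and without local pre-aggregation, and confirms the Reducer's answer is the same either way. The two text chunks stand in for the inputs of two hypothetical Mappers.

```python
from collections import Counter
from typing import Dict, List, Tuple


def map_words(chunk: str) -> List[Tuple[str, int]]:
    # Mapper: one (word, 1) pair per word.
    return [(word, 1) for word in chunk.split()]


def combine(pairs: List[Tuple[str, int]]) -> List[Tuple[str, int]]:
    # Combiner: runs on the Mapper's node and pre-sums counts locally.
    # Valid here because addition is associative and commutative.
    totals: Counter = Counter()
    for word, count in pairs:
        totals[word] += count
    return list(totals.items())


def reduce_counts(all_pairs: List[Tuple[str, int]]) -> Dict[str, int]:
    # Reducer: sums whatever partial counts arrive for each word.
    totals: Dict[str, int] = {}
    for word, count in all_pairs:
        totals[word] = totals.get(word, 0) + count
    return totals


chunks = ["to be or not to be", "to be"]  # one chunk per simulated Mapper
raw = [pair for c in chunks for pair in map_words(c)]
combined = [pair for c in chunks for pair in combine(map_words(c))]
print(len(raw), len(combined))                        # 8 6
print(reduce_counts(raw) == reduce_counts(combined))  # True
```

Here the combiner cuts the shuffled pairs from 8 to 6; on real data with many repeated keys per Mapper the saving is usually much larger.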


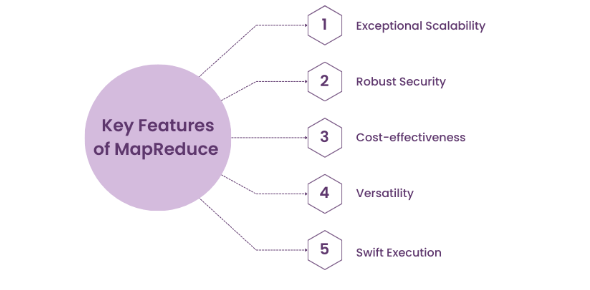

The main features of MapReduce

How Does MapReduce Work?

1. Mapping Phase: The input data is split into small chunks, and each chunk is sent to a Mapper, which processes it into intermediate key-value pairs.

2. Shuffle and Sort: Once the Mappers have finished, the system shuffles and sorts the intermediate data so that all values associated with the same key are grouped together. The data is sorted by key before being forwarded to the Reducer.

3. Reduction Phase: Each Reducer receives a key together with its grouped values, applies an aggregation function to accumulate the result, and emits the final output. This sequence lets an application process large amounts of data by distributing the job across many machines and then organizing the output.
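The three steps can be condensed into a small, generic Python driver that accepts any map and reduce function. It is a single-process sketch: a `ThreadPoolExecutor` stands in for the machines of a cluster (Python threads illustrate the structure but do not give true CPU parallelism), and keys are assumed to be sortable. The sensor-reading usage data is hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, Iterable, Iterator, List, Tuple

Pair = Tuple[Any, Any]


def run_mapreduce(splits: List[Any],
                  map_fn: Callable[[Any], Iterable[Pair]],
                  reduce_fn: Callable[[Any, List[Any]], Any],
                  workers: int = 4) -> Dict[Any, Any]:
    # 1. Map: each split is processed independently by a pool worker.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(lambda split: list(map_fn(split)), splits))
    # 2. Shuffle and sort: group every value under its key.
    groups: Dict[Any, List[Any]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    # 3. Reduce: aggregate each key's values, visiting keys in sorted order.
    return {key: reduce_fn(key, groups[key]) for key in sorted(groups)}


def parse_readings(split: List[str]) -> Iterator[Pair]:
    # Hypothetical input format: "sensor,value" per line.
    for line in split:
        sensor, value = line.split(",")
        yield sensor, int(value)


readings = [["a,3", "b,7"], ["a,9", "b,2"]]  # two splits
print(run_mapreduce(readings, parse_readings, lambda key, values: max(values)))
# {'a': 9, 'b': 7}
```

Because the driver only sees `map_fn` and `reduce_fn`, the same code computes a per-key maximum here and could compute word counts by swapping in different functions.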

The Map Phase

The MapReduce procedure is divided into two main steps, and the first is the Map phase. The input data is split into smaller units, usually data blocks, and each Mapper receives a definite portion of that input. The Mapper then applies a predefined function that transforms its data into key-value pairs.

For instance, suppose you have a long document and want to know how often each word occurs. In the Map phase, the document is broken into small pieces, and each piece is scanned to find individual words. Each word is emitted together with the number 1, representing a single occurrence of that word.

For example, if the input is “MapReduce is amazing”, the Mapper produces (“MapReduce”, 1), (“is”, 1), and (“amazing”, 1).
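A word-count Mapper in the style of Hadoop Streaming, where the mapper reads lines of text and writes tab-separated key/value records to standard output, might look like the Python sketch below. The function names are hypothetical, and tokenizing by whitespace is a simplifying assumption (no lowercasing or punctuation handling).

```python
import sys
from typing import Iterable, Iterator


def word_count_mapper(lines: Iterable[str]) -> Iterator[str]:
    # Emit one "word<TAB>1" record per word; the text before the first tab
    # is treated as the key.
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


def main() -> None:
    # Wire the mapper to stdin/stdout, as a streaming mapper script would.
    for record in word_count_mapper(sys.stdin):
        print(record)
```

To use it as a standalone script, call `main()` from an `if __name__ == "__main__":` guard; for the sample input, `word_count_mapper(["MapReduce is amazing"])` yields `MapReduce\t1`, `is\t1`, and `amazing\t1`.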


Shuffle and Sort Phase

The key-value pairs produced in the Map phase are then shuffled and sorted by key. All pairs with the same key are grouped together and sorted before being sent to the Reducer, so that related values arrive at the Reducer together.

During the shuffle and sort phase, the intermediate data is reordered so that all pairs with equal keys end up together. Continuing the word-count example, suppose Mapper 1 outputs (“MapReduce”, 1) and (“is”, 1), while Mapper 2 outputs (“MapReduce”, 1) and (“amazing”, 1).

This phase regroups and sorts those pairs into (“MapReduce”, [1, 1]), (“is”, [1]), and (“amazing”, [1]).
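Deciding which Reducer receives which key is the job of a partitioner. Hadoop's default partitioner hashes the key and takes the result modulo the number of reduce tasks; the Python sketch below follows the same idea. It uses `zlib.crc32` as an assumed substitute hash because Python's built-in `hash()` for strings is randomized per process, and the two Mapper outputs are the ones from the word-count example.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple


def partition(key: str, num_reducers: int) -> int:
    # Deterministic hash of the key modulo the number of Reducers, so every
    # pair with the same key lands on the same Reducer.
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle(mapper_outputs: List[List[Tuple[str, int]]],
            num_reducers: int) -> List[List[Tuple[str, List[int]]]]:
    buckets: List[Dict[str, List[int]]] = [defaultdict(list) for _ in range(num_reducers)]
    for pairs in mapper_outputs:
        for key, value in pairs:
            buckets[partition(key, num_reducers)][key].append(value)
    # Sort each Reducer's input by key, as the sort step does.
    return [sorted(bucket.items()) for bucket in buckets]


mapper_1 = [("MapReduce", 1), ("is", 1)]
mapper_2 = [("MapReduce", 1), ("amazing", 1)]
for reducer_id, reducer_input in enumerate(shuffle([mapper_1, mapper_2], num_reducers=2)):
    print(reducer_id, reducer_input)
```

Which Reducer gets which word depends on the hash values, but both (“MapReduce”, 1) pairs are always delivered to the same Reducer as (“MapReduce”, [1, 1]).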

The Reduce Phase

The Reducer receives the grouped key-value pairs produced by the Shuffle and Sort phase. It walks through each unique key and applies an aggregate function, most often a sum, to that key's values to obtain the final answer.

In the word-count example, the Reducer sums the list of values for each key. For the key “MapReduce” with values [1, 1], the Reducer outputs (“MapReduce”, 2), meaning that the word “MapReduce” appears twice in the input. The other words are processed the same way, yielding (“is”, 1) and (“amazing”, 1).
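A matching Reducer in Hadoop Streaming style can rely on the shuffle-and-sort guarantee: its input lines arrive sorted by key, so all records for one word are adjacent. The Python sketch below assumes tab-separated "word<TAB>count" lines, as a streaming-style word-count Mapper would emit; the function name is hypothetical.

```python
from itertools import groupby
from typing import Iterable, Iterator


def word_count_reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    # Input must already be sorted by key, so all records for one word are
    # adjacent and groupby sees each word exactly once.
    records = (line.rstrip("\n").split("\t", 1) for line in sorted_lines if line.strip())
    for word, group in groupby(records, key=lambda rec: rec[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"


sorted_input = ["MapReduce\t1\n", "MapReduce\t1\n", "amazing\t1\n", "is\t1\n"]
print(list(word_count_reducer(sorted_input)))
# ['MapReduce\t2', 'amazing\t1', 'is\t1']
```

Streaming over adjacent groups, rather than building a dictionary of every word, keeps the Reducer's memory use independent of how many distinct keys it sees.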

Word Count as a MapReduce Application

A classic MapReduce problem is word count: counting how often each word appears in a huge text file. In simplified form, the input is a large document or text file, and the job passes it through the Map, Shuffle and Sort, and Reduce phases to produce each word's total frequency.


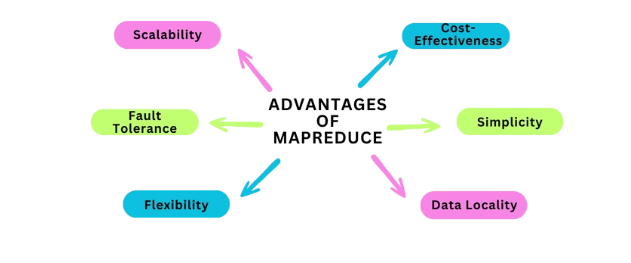

Benefits of MapReduce

Applications of MapReduce

MapReduce is used in many fields and scenarios that involve processing enormous amounts of data.

Challenges with MapReduce


Best Practices for Using MapReduce

Following these best practices can reduce the execution time of your MapReduce jobs and improve their efficiency and performance, especially when dealing with very large data sets. Working through a concrete MapReduce example is a good way to see how to apply these techniques effectively.

Conclusion

MapReduce is a powerful tool for handling massive data sets by dividing jobs into Map and Reduce functions. It simplifies parallel processing while providing scalability and fault tolerance, which makes it useful across industries for big data applications such as log analysis, indexing, and machine learning.