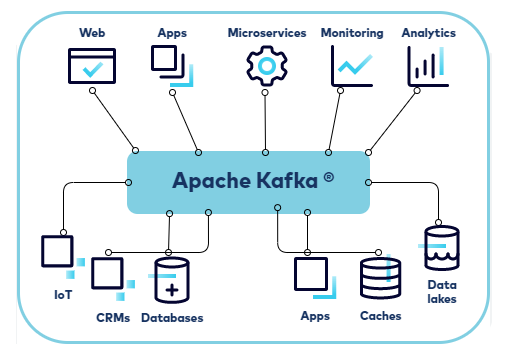

Apache Kafka is an open-source distributed event streaming platform for building real-time data pipelines and streaming applications. It was originally developed at LinkedIn and later open-sourced as a project of the Apache Software Foundation. Its scalable publish-subscribe messaging system lets applications publish and consume streams of records (messages or events) in a fault-tolerant and durable way.

1. What is Apache Kafka?

Ans:

Apache Kafka is an open-source distributed event streaming platform for building real-time data pipelines and streaming applications. It is designed to handle high-throughput, fault-tolerant, and scalable streaming of data.

2. Explain the publish-subscribe messaging model.

Ans:

The publish-subscribe model involves two main components: publishers and subscribers. Publishers send messages to a central hub called a “topic,” and subscribers express interest in specific topics. When a publisher sends a message to a topic, all interested subscribers receive a copy of the message. This model enables scalable and decoupled communication between components.

3. Define topics and partitions in Kafka.

Ans:

Topics are logical channels to which messages are published and from which they are consumed. Partitions are the divisions within a topic. Each partition is an ordered, append-only log, and spreading partitions across brokers lets Kafka parallelize processing and scale.

4. What are Kafka’s key components?

Ans:

Critical components of Kafka include:

- Producers

- Consumers

- Brokers

- Topics

- Partitions

- Zookeeper

- Consumer groups

5. Explain the role of producers and consumers in Kafka.

Ans:

Producers publish messages to Kafka topics, while consumers subscribe to topics and process the messages. Producers and consumers never communicate directly. They exchange data through the brokers, which keeps them decoupled.

6. What is a broker in Kafka?

Ans:

A broker is a Kafka server that stores data and manages communication between producers and consumers. It handles incoming messages, stores them in topics, and serves them to consumers.

7. Explain message serialization in Kafka.

Ans:

Message serialization in Kafka converts a message's key and value into bytes before they are sent to a topic. This is necessary because Kafka stores and transmits messages as byte arrays. Consumers apply the matching deserializer when they read the messages. Common serialization formats include JSON, Avro, and Protobuf.
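The minimal Python sketch below makes the byte-array point concrete with a JSON value serializer and its matching deserializer. The function names `serialize_value` and `deserialize_value` are hypothetical. Real clients plug equivalent logic into their own serializer hooks, such as the Java client's `Serializer` interface.

```python
import json
from typing import Any, Dict


def serialize_value(value: Dict[str, Any]) -> bytes:
    """Encode a record value as UTF-8 JSON bytes, the form Kafka stores."""
    # sort_keys and compact separators make the byte output deterministic
    return json.dumps(value, sort_keys=True, separators=(",", ":")).encode("utf-8")


def deserialize_value(data: bytes) -> Dict[str, Any]:
    """Decode UTF-8 JSON bytes back into a dict, as a consumer would."""
    return json.loads(data.decode("utf-8"))


order = {"order_id": 42, "amount": 19.99}
payload = serialize_value(order)
print(payload)  # b'{"amount":19.99,"order_id":42}'
assert deserialize_value(payload) == order
```

Keys follow the same pattern. Avro and Protobuf replace JSON with a compact binary encoding described by a schema.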

8. Differentiate between Apache Kafka and traditional message-oriented middleware.

Ans:

| Aspect | Apache Kafka | Traditional MOM |
|---|---|---|
| Messaging model | Publish-subscribe (via topics) | Publish-subscribe and point-to-point |
| Data storage | Data is persisted to disk for durability | Data may or may not be persisted |
| Scalability | Scales horizontally | Scaling may require vertical scaling of servers |
| Message retention | Configurable retention period | Often little or no retention |
| Fault tolerance | Built in, through data replication | May or may not include built-in fault tolerance |

9. What is Zookeeper in the context of Kafka?

Ans:

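Zookeeper is a distributed coordination service that Kafka has traditionally used to manage cluster metadata. It maintains configuration information and naming, and it provides distributed synchronization and group services, such as tracking which brokers are alive. Newer Kafka versions can run without Zookeeper by using the built-in KRaft consensus protocol, and Kafka 4.0 removed Zookeeper support entirely.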

10. How does Kafka ensure fault tolerance?

Ans:

Kafka ensures fault tolerance through replication. Each partition has one or more replicas spread across brokers, as set by the replication factor, and one replica is designated as the leader. If the leader's broker fails, one of the in-sync follower replicas is elected as the new leader, which keeps the data available and durable.

11. Explain the role of a partition in Kafka.

Ans:

A partition is the unit of parallelism and scalability in Kafka. Each topic is divided into partitions, which allows parallel processing and horizontal scaling. Each partition's leader replica is hosted by one broker in the cluster, and any follower replicas live on other brokers.

12. List some important Kafka command-line tools.

Ans:

Some important Kafka command-line tools include:

- kafka-topics.sh: Create, describe, or delete topics.

- kafka-console-producer.sh: Publish messages to a Kafka topic.

- kafka-console-consumer.sh: Consume and display messages from a Kafka topic.

- kafka-configs.sh: Manage topic, broker, and client configurations.

- kafka-server-start.sh and kafka-server-stop.sh: Start and stop Kafka brokers.

13. What is the purpose of the replication factor in Kafka?

Ans:

The replication factor determines the number of replicas (copies) of a partition. Replication provides fault tolerance and ensures that even if a broker fails, another replica can take over, preventing data loss.

14. Describe the role of a consumer group in Kafka.

Ans:

A consumer group is a set of consumers that work together to consume messages from Kafka topics. Within a group, each partition is consumed by only one consumer at a time, which enables parallel processing and load distribution.

15. How are topics created in Kafka?

Ans:

Topics can be created in Kafka using the Kafka command-line tools or programmatically using the Kafka API. The creation involves specifying the topic name, replication factor, and the number of partitions.

16. Explain the retention policy for topics in Kafka.

Ans:

Kafka lets you set a retention policy for each topic. The policy determines how long Kafka keeps the topic's messages.

There are two primary retention policies:

- Time-based retention: Messages are kept for a configured period (`retention.ms`).

- Size-based retention: Once a partition's log exceeds a configured size (`retention.bytes`), the oldest messages are deleted.
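The sketch below models both policies on a single partition's log. It assumes segments are listed oldest first and treats the last one as the active segment, which this model never deletes. The `Segment` class and `apply_retention` function are hypothetical. The two thresholds play the role of the `retention.ms` and `retention.bytes` topic settings, which apply per partition. As in Kafka, the model deletes whole segments rather than individual messages.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    base_offset: int       # offset of the first record in the segment
    size_bytes: int
    max_timestamp_ms: int  # timestamp of the newest record in the segment


def apply_retention(segments: List[Segment], now_ms: int,
                    retention_ms: Optional[int] = None,
                    retention_bytes: Optional[int] = None) -> List[Segment]:
    """Return the segments that survive retention (simplified model).

    Segments are ordered oldest first; the last one is the active segment,
    which this model never deletes. Only whole segments are removed.
    """
    kept = list(segments)
    # Time-based: drop the oldest segments whose newest record has expired
    if retention_ms is not None:
        while len(kept) > 1 and now_ms - kept[0].max_timestamp_ms > retention_ms:
            kept.pop(0)
    # Size-based: drop the oldest segments while the log stays >= retention_bytes
    if retention_bytes is not None:
        total = sum(s.size_bytes for s in kept)
        while len(kept) > 1 and total - kept[0].size_bytes >= retention_bytes:
            total -= kept[0].size_bytes
            kept.pop(0)
    return kept


segments = [Segment(0, 100, 1_000), Segment(100, 100, 5_000), Segment(200, 50, 9_000)]
print([s.base_offset for s in apply_retention(segments, now_ms=10_000, retention_ms=6_000)])
# [100, 200]: only the first segment's newest record is older than 6 seconds
```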

17. What is a log segment in Kafka?

Ans:

A log segment is the fundamental unit of storage in Kafka.

- Each segment is a sequential, append-only file that stores messages on disk.

- Each partition's log is split into several log segments.

- When the active segment reaches its configured size (`segment.bytes`) or age (`segment.ms`) limit, Kafka rolls over to a new segment.

- Writes are always sequential appends, so this design supports high-throughput data storage and retrieval.
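Size-based segment rolling can be modeled in a few lines of Python. `PartitionLog` and `LogSegment` are hypothetical names, the size limit stands in for the `segment.bytes` setting, and time-based rolling is left out. The file names follow Kafka's convention of naming each segment file after its base offset, zero-padded to 20 digits.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LogSegment:
    base_offset: int                              # offset of the segment's first record
    records: List[bytes] = field(default_factory=list)
    size_bytes: int = 0

    @property
    def file_name(self) -> str:
        # Segment files are named after the base offset, zero-padded to 20 digits
        return f"{self.base_offset:020d}.log"


class PartitionLog:
    """Append-only partition log that rolls to a new segment by size (simplified)."""

    def __init__(self, segment_bytes: int) -> None:
        self.segment_bytes = segment_bytes       # plays the role of segment.bytes
        self.segments: List[LogSegment] = [LogSegment(base_offset=0)]
        self.next_offset = 0

    def append(self, record: bytes) -> int:
        active = self.segments[-1]
        # Roll when the record would push a non-empty active segment past the limit
        if active.records and active.size_bytes + len(record) > self.segment_bytes:
            active = LogSegment(base_offset=self.next_offset)
            self.segments.append(active)
        active.records.append(record)
        active.size_bytes += len(record)
        offset = self.next_offset
        self.next_offset += 1
        return offset


log = PartitionLog(segment_bytes=10)
for record in (b"aaaa", b"bbbb", b"cccc", b"dd"):
    log.append(record)
print([s.file_name for s in log.segments])
# ['00000000000000000000.log', '00000000000000000002.log']
```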

18. Can a Kafka topic have multiple producers and consumers?

Ans:

- A Kafka topic can indeed have more than one producer.

- Messages on the same topic can be independently published by several producers.

- A Kafka topic can also have more than one consumer.

- Multiple consumers, or several consumer groups, can subscribe to the topic and process its messages independently.

19. How does a Kafka producer work?

Ans:

A Kafka producer sends messages to a specified topic. Its partitioner chooses the partition for each message, using the message key if one is provided. Sends are asynchronous and batched. The `acks` setting controls how many broker acknowledgments the producer waits for before it treats a write as successful.

20. Describe the architecture of Kafka.

Ans:

Kafka has a distributed architecture made up of producers, consumers, brokers, Zookeeper, and topics. Brokers form a cluster, and topics are divided into partitions, which allows horizontal scaling. Zookeeper handles coordination and management tasks.

21. What is the purpose of the Kafka key in a message?

Ans:

The Kafka key is an optional field in a message that helps determine which partition the message is sent to. If a key is provided, messages with the same key go to the same partition as long as the number of partitions does not change. This preserves ordering and consistency for messages that share a key.
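The key-to-partition mapping can be sketched as "hash the key, then take it modulo the partition count". For simplicity, this sketch uses `zlib.crc32` as the hash. Kafka's default partitioner uses murmur2, so real partition numbers will differ. `choose_partition` is a hypothetical name.

```python
import zlib
from collections import defaultdict
from typing import Dict, List


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition: hash(key) modulo the partition count.

    Stand-in hash: zlib.crc32. Kafka's default partitioner uses murmur2, so the
    actual partition numbers differ, but the same-key-same-partition property
    shown here is the same.
    """
    return zlib.crc32(key) % num_partitions


events = [(b"user-1", "login"), (b"user-2", "login"), (b"user-1", "click"), (b"user-1", "logout")]
partitions: Dict[int, List[str]] = defaultdict(list)
for key, value in events:
    # Each partition is an ordered log, so appending in send order keeps per-key order
    partitions[choose_partition(key, num_partitions=6)].append(f"{key.decode()}:{value}")

p = choose_partition(b"user-1", 6)
print([e for e in partitions[p] if e.startswith("user-1")])
# ['user-1:login', 'user-1:click', 'user-1:logout']
```

The result depends on `num_partitions`. Adding partitions to a topic later can therefore route an existing key to a different partition, which is why per-key ordering holds only while the partition count is unchanged.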

22. Explain the difference between KStreams and KTables.

Ans:

KStreams:

- Represents an unbounded stream of records, where each record is an independent event.

- Used for real-time, continuous data processing.

- Ideal when data flows continuously and every event matters, such as clicks or transactions.

- Supports both stateless operations (filtering, mapping, transformations) and stateful ones (aggregations, joins, windowing).

KTables:

- Represents a changelog stream in which each record is an update (upsert) to the value for its key. A record with a null value deletes the key.

- Holds the latest value for each key, that is, the current state of the data.

- Ideal when only the current state per key matters, such as a user's latest profile or an account balance.

- Supports stateful operations such as joins, filtering, aggregations, and more.
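The difference can be illustrated in plain Python. This is not the Kafka Streams API, which exposes `KStream` and `KTable` in Java. Here, the same sequence of keyed records is read once as a stream of independent events and once as a table that keeps only the latest value per key. Treating a `None` value as a delete mirrors Kafka's tombstone convention. The function names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Keyed records, oldest first. A value of None is a tombstone (a delete).
records: List[Tuple[str, Optional[int]]] = [
    ("alice", 10), ("bob", 5), ("alice", 12), ("bob", None), ("carol", 7),
]


def as_stream(recs: List[Tuple[str, Optional[int]]]) -> List[Tuple[str, Optional[int]]]:
    """Stream view: every record is an independent event, so all are kept."""
    return list(recs)


def as_table(recs: List[Tuple[str, Optional[int]]]) -> Dict[str, int]:
    """Table view: each record upserts the current value for its key, or deletes it."""
    table: Dict[str, int] = {}
    for key, value in recs:
        if value is None:
            table.pop(key, None)   # tombstone removes the key
        else:
            table[key] = value     # newer value replaces the older one
    return table


print(len(as_stream(records)))  # 5
print(as_table(records))        # {'alice': 12, 'carol': 7}
```

Summing the stream counts every event (10 + 5 + 12 + 7 = 34, skipping the tombstone). Summing the table gives only the current total (12 + 7 = 19).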

23. Describe the process of message acknowledgment in Kafka.

Ans:

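A Kafka producer's `acks` setting controls when a write counts as acknowledged. With `acks=0` the producer does not wait for any acknowledgment. With `acks=1` it waits until the partition leader has written the message. With `acks=all` it waits until all in-sync replicas have the message, which gives the strongest durability. Separately, the application can send synchronously, blocking on the result of each send, or asynchronously, handling the acknowledgment in a callback.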

24. How does a Kafka consumer handle partition assignment?

Ans:

Kafka consumers use a process called rebalancing to handle partition assignment. When a consumer joins or leaves a consumer group, or when the number of partitions changes, Kafka triggers a rebalance. During rebalancing, partitions are redistributed among the consumers to maintain an even workload.
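A rebalance can be sketched with a simplified range-style assignor for a single topic. It is similar in spirit to Kafka's built-in `RangeAssignor`, which is one of several assignment strategies. `range_assign` is a hypothetical helper. It ignores multi-topic subscriptions and the cooperative, incremental rebalancing protocols that newer clients can use.

```python
from typing import Dict, List


def range_assign(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Range-style assignment of one topic's partitions to a consumer group.

    Consumers are sorted by ID; each gets a contiguous block of
    len(partitions) // len(consumers) partitions, and the first
    len(partitions) % len(consumers) consumers get one extra.
    """
    members = sorted(consumers)
    if not members:
        return {}
    ordered = sorted(partitions)
    per_member, extra = divmod(len(ordered), len(members))
    assignment: Dict[str, List[int]] = {}
    start = 0
    for i, member in enumerate(members):
        count = per_member + (1 if i < extra else 0)
        assignment[member] = ordered[start:start + count]
        start += count
    return assignment


topic_partitions = list(range(6))
# Before: two members
print(range_assign(topic_partitions, ["c1", "c2"]))
# {'c1': [0, 1, 2], 'c2': [3, 4, 5]}
# After c3 joins, the rebalance spreads the same partitions over three members
print(range_assign(topic_partitions, ["c1", "c2", "c3"]))
# {'c1': [0, 1], 'c2': [2, 3], 'c3': [4, 5]}
```

When a group has more members than partitions, the extra members sit idle. For example, `range_assign([0, 1], ["a", "b", "c"])` gives `"c"` an empty list.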

25. What is Kafka Connect?

Ans:

Kafka Connect is a framework for building and running connectors that stream data between external systems and Kafka topics. It simplifies the integration of Kafka with various data sources and sinks, allowing for seamless and scalable data movement.

26. Differentiate between source and sink connectors.

Ans:

Source Connectors:

- Definition: Source connectors ingest data into Apache Kafka from an external system.

- Functionality: They read events or data changes from the source system and write them to Kafka topics.

- Example use case: Streaming changes from a relational database into Kafka.

Sink Connectors:

- Definition: Sink connectors export data from Apache Kafka to an external system.

- Functionality: They read messages from Kafka topics and write them to an external system, usually a data warehouse or storage system.

- Example use case: Exporting data from Kafka topics to a database, data lake, or other messaging system.

27. Give an example of a use case for Kafka Connect.

Ans:

A common use case is ingesting data from a relational database (source) into Kafka using a source connector, and then streaming that data to a data warehouse or Elasticsearch (sink) using a sink connector. Kafka Connect simplifies the ETL (Extract, Transform, Load) process.

28. What are Kafka Streams?

Ans:

Kafka Streams is a library in Kafka that enables stream processing of data directly within the Kafka ecosystem. It allows developers to build real-time applications and microservices that can process and analyze data from Kafka topics.

29. How does windowing work in Kafka Streams?

Ans:

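Windowing in Kafka Streams groups the records for each key into time-based windows, so that aggregations such as counts or sums are computed per time interval. Kafka Streams supports several window types. Tumbling windows are fixed-size and non-overlapping. Hopping windows are fixed-size and overlapping. Sliding windows are also available. Session windows are bounded by periods of inactivity rather than by a fixed size.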
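Tumbling and hopping windows reduce to simple arithmetic on record timestamps. The Python sketch below uses hypothetical function names and assumes epoch-aligned windows, since Kafka Streams aligns time windows to the epoch. It counts events per key and window, and it leaves out grace periods and late-arriving data.

```python
from collections import Counter
from typing import Dict, List, Tuple


def tumbling_window_counts(events: List[Tuple[str, int]],
                           window_ms: int) -> Dict[Tuple[str, int], int]:
    """Count events per (key, window_start) with tumbling windows.

    Tumbling windows are fixed-size and non-overlapping, so each event falls into
    exactly one window: the one starting at ts - (ts % window_ms).
    """
    counts: Counter = Counter()
    for key, ts_ms in events:
        counts[(key, ts_ms - ts_ms % window_ms)] += 1
    return dict(counts)


def hopping_window_starts(ts_ms: int, size_ms: int, advance_ms: int) -> List[int]:
    """Start times of all hopping windows [start, start + size) containing ts_ms.

    Windows start at multiples of advance_ms and overlap when advance_ms < size_ms,
    so one event can belong to several windows.
    """
    last = ts_ms - ts_ms % advance_ms
    # Smallest multiple of advance_ms strictly greater than ts_ms - size_ms (ceiling division)
    first = max(0, -(-(ts_ms - size_ms + 1) // advance_ms) * advance_ms)
    return list(range(first, last + 1, advance_ms))


clicks = [("page-a", 1_000), ("page-a", 59_999), ("page-a", 60_000), ("page-b", 61_500)]
print(tumbling_window_counts(clicks, window_ms=60_000))
# {('page-a', 0): 2, ('page-a', 60000): 1, ('page-b', 60000): 1}
print(hopping_window_starts(70_000, size_ms=60_000, advance_ms=30_000))
# [30000, 60000]
```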

30. What security features does Kafka provide?

Ans:

- Encryption in transit: SSL/TLS encrypts data moving between producers, brokers, and consumers.

- Authentication: Kafka supports multiple authentication methods, including SSL client authentication and SASL (Simple Authentication and Security Layer).

- Authorization: Access control lists (ACLs) grant fine-grained permissions on topics, consumer groups, and other resources.

- Encryption at rest: This is not built into Apache Kafka itself. It is usually provided by disk-level or filesystem-level encryption.

- Audit logging: Security-related events, such as authorization decisions, can be logged for compliance and monitoring.

31. What are some key metrics to monitor in Kafka?

Ans:

- Throughput: Calculate how many messages are sent and received in a given amount of time.

- Consumer Lag: Monitor the gap between the consumer’s position in the log and the most recent message, measured in messages (offsets) or in time.

- Broker Metrics: Keep an eye on metrics unique to each broker, like CPU, disk I/O, and network usage.

- Partition Metrics: Monitor each partition’s metrics, such as in-sync replicas, leader elections, and under-replicated partitions.

- Producer and Consumer Performance Metrics: Track metrics such as request rate, errors, and network latency for producers and consumers.

32. Describe SSL authentication in Kafka.

Ans:

SSL authentication in Kafka involves using SSL/TLS to secure communication between clients and brokers. It includes the exchange of certificates between clients and brokers to authenticate each other, ensuring a secure and encrypted channel.

33. What is SASL in the context of Kafka?

Ans:

SASL (Simple Authentication and Security Layer) is a framework for authentication in Kafka. It supports various mechanisms such as PLAIN, GSSAPI, and SCRAM, allowing users to authenticate securely against Kafka brokers.

34. Explain the importance of tuning Kafka configurations.

Ans:

Tuning Kafka configurations involves adjusting settings related to producers, consumers, and brokers to meet specific performance, scalability, and reliability requirements. Proper configuration tuning is essential for optimizing resource utilization and achieving desired system behaviour.

35. What is the role of the Kafka Producer API’s batching feature in performance?

Ans:

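The batching feature in the Kafka Producer API lets producers group multiple messages for the same partition into a single batch before sending them to a broker. Batching reduces per-message request overhead, which improves throughput. It is controlled mainly by `batch.size` (the maximum batch size in bytes) and `linger.ms` (how long to wait for more messages before sending a batch).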
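The following is a single-partition toy model of batching that assumes each record is appended with a timestamp. The `BatchAccumulator` class is hypothetical. Its `batch_size` and `linger_ms` parameters mirror the intent of the producer settings `batch.size` and `linger.ms`. The real producer sends lingered batches from a background sender thread. This model, by contrast, checks the linger time only when a new record arrives or on `close()`.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Batch:
    created_ms: int
    records: List[bytes] = field(default_factory=list)
    size_bytes: int = 0


class BatchAccumulator:
    """Groups records for one partition into batches (simplified model)."""

    def __init__(self, batch_size: int, linger_ms: int) -> None:
        self.batch_size = batch_size    # max bytes per batch, like batch.size
        self.linger_ms = linger_ms      # max wait before sending, like linger.ms
        self.current: Optional[Batch] = None
        self.sent: List[List[bytes]] = []   # each entry = one request to the broker

    def _send_current(self) -> None:
        if self.current is not None and self.current.records:
            self.sent.append(self.current.records)
        self.current = None

    def append(self, record: bytes, now_ms: int) -> None:
        # Send a batch that has waited at least linger_ms (checked only on append here)
        if self.current is not None and now_ms - self.current.created_ms >= self.linger_ms:
            self._send_current()
        # Send the open batch if this record would overflow it
        if self.current is not None and self.current.size_bytes + len(record) > self.batch_size:
            self._send_current()
        if self.current is None:
            self.current = Batch(created_ms=now_ms)
        self.current.records.append(record)
        self.current.size_bytes += len(record)

    def close(self) -> None:
        self._send_current()


acc = BatchAccumulator(batch_size=10, linger_ms=5)
for record, t in [(b"aaaa", 0), (b"bbbb", 1), (b"cccc", 2), (b"dd", 10)]:
    acc.append(record, now_ms=t)
acc.close()
print(acc.sent)  # [[b'aaaa', b'bbbb'], [b'cccc'], [b'dd']]
```

Here four records become three requests. A larger `batch_size` or `linger_ms` would let more records share each request.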

36. How can you optimize Kafka for high-throughput scenarios?

Ans:

- Partitioning: To distribute load among brokers and allow for parallel processing, set up the number of partitions appropriately.

- Batching: Producers can send more messages in each request by increasing the batch size, which lowers overhead.

- Compression: When sending messages, use compression to minimize their size.

- Producer and Consumer Tuning: For best results, change parameters such as batch size, buffer size, and number of connections.

37. How can you monitor Kafka using JMX?

Ans:

Kafka exposes Java Management Extensions (JMX) metrics. You can inspect them with tools like JConsole, or export them to monitoring systems (for example, through the Prometheus JMX exporter) and visualize them in dashboards such as Grafana. JMX provides detailed insight into Kafka's internal workings, so administrators can track a wide range of performance and health metrics.

38. Explain the concept of the “exactly-once” semantics in Kafka.

Ans:

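“Exactly-once” semantics in Kafka ensure that each message is processed, and its results written, exactly once, without duplication or loss, even when producers retry or applications restart. Kafka achieves this by combining three mechanisms. Idempotent producers prevent duplicates caused by retries. Transactions atomically commit output messages together with consumer offsets. Consumers that read with `isolation.level=read_committed` never see uncommitted transactional data.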
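The producer-side half of exactly-once, idempotence, can be sketched as a toy broker that de-duplicates retries. Kafka assigns each idempotent producer a producer ID and numbers its records per partition with sequence numbers. The `PartitionLeader` class below is a hypothetical simplification. It tracks only the last sequence number per producer (the real broker tracks several recent batches), and it covers retries only. End-to-end exactly-once also needs transactions and `read_committed` consumers.

```python
from typing import Dict, List


class PartitionLeader:
    """Toy partition leader that de-duplicates retries from idempotent producers."""

    def __init__(self) -> None:
        self.log: List[str] = []
        self.last_seq: Dict[int, int] = {}   # producer ID -> last appended sequence

    def append(self, producer_id: int, seq: int, value: str) -> str:
        expected = self.last_seq.get(producer_id, -1) + 1
        if seq < expected:
            return "DUPLICATE"      # already written (e.g. the ack was lost): do not append again
        if seq > expected:
            return "OUT_OF_ORDER"   # a gap in sequence numbers: reject
        self.log.append(value)
        self.last_seq[producer_id] = seq
        return "APPENDED"


leader = PartitionLeader()
print(leader.append(7, 0, "debit 10"))   # APPENDED
print(leader.append(7, 0, "debit 10"))   # DUPLICATE: producer retried after a lost ack
print(leader.append(7, 1, "credit 5"))   # APPENDED
print(leader.log)                        # ['debit 10', 'credit 5']
```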

39. How do you create a topic using the Kafka command-line tool?

Ans:

You can create a topic using the following command:

```bash
kafka-topics.sh --create --topic <topic_name> --bootstrap-server <broker_address> --partitions <num_partitions> --replication-factor <replication_factor>
```

40. How does Kafka handle broker failures?

Ans:

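Kafka ensures fault tolerance by replicating partitions across multiple brokers. If a broker fails, an in-sync replica on another broker becomes the leader for each affected partition, and consumers continue reading from the new leader without losing committed data. Clients discover the new leader automatically by refreshing their metadata, so the failover is transparent to producers and consumers.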

41. How can Kafka integrate with Apache Storm?

Ans:

Kafka can integrate with Apache Storm through the use of the Kafka Spout. The Kafka Spout allows Storm topologies to consume data from Kafka topics. Storm can process the data in real time, providing a powerful combination for handling streaming data and complex event processing.

42. What role does Kafka play in event-driven microservices?

Ans:

- Event Sourcing: Microservices architectures can use event sourcing patterns thanks to Kafka’s dependable event log.

- Decoupling Microservices: Serves as a mediator, allowing communication between independent microservices.

- Fault Tolerance: Kafka offers a strong foundation for microservices because of its distributed architecture and fault-tolerant features, which guarantee resilience in the face of failures.

- Scalability: Microservices’ requirements for growth and scalability are supported by Kafka’s horizontal scalability, which enables handling of high event volumes.

43. Explain the integration of Kafka with Apache Spark.

Ans:

Kafka can integrate with Apache Spark through Spark Structured Streaming’s Kafka source and sink, or through the older Spark Streaming (DStream) direct stream API. Spark can consume data from Kafka topics, process it in a distributed manner, and then store or analyze the results. This integration is commonly used for real-time data processing and analytics.

44. How can Kafka be used in a microservices architecture?

Ans:

Kafka can be used as a communication backbone between microservices. Microservices can produce and consume events through Kafka topics, enabling asynchronous communication, decoupling services, and providing fault tolerance.

45. What are some best practices for Kafka deployment?

Ans:

Some best practices for Kafka deployment include:

- Adequate resource provisioning for brokers.

- Proper configuration tuning.

- Regular monitoring and performance optimization.

- Ensuring high availability and fault tolerance.

- Securing the Kafka cluster with authentication and encryption.

- Properly sizing and configuring partitions.

46. How can you handle schema evolution in Kafka?

Ans:

Schema evolution in Kafka involves managing changes to the structure of messages over time. It relies on compatibility rules (backward, forward, or full compatibility) and on tools like the Confluent Schema Registry. The registry stores versioned schemas and rejects new versions that break the configured rule, which lets consumers evolve independently of producers.

47. Give examples of industries or scenarios where Kafka is commonly used.

Ans:

Kafka is commonly used in industries such as finance for real-time fraud detection, e-commerce for tracking user activity, telecommunications for processing streaming data from network devices, and logistics for tracking shipments in real-time.

48. Explain how Kafka can be used for log aggregation.

Ans:

Kafka can be used for log aggregation by acting as a central hub for collecting log messages from various services and applications. Producers send log messages to Kafka topics, and consumers (log aggregators) subscribe to these topics to process and store the logs centrally.

49. List some popular tools in the Kafka ecosystem.

Ans:

Some popular tools in the Kafka ecosystem include:

- Confluent Platform

- Kafka Connect

- Kafka Streams

- Confluent Control Center

- Schema Registry

- MirrorMaker

- ksqlDB

50. What is the Confluent Platform, and how does it relate to Kafka?

Ans:

Confluent Platform is Confluent's enterprise distribution of Apache Kafka. Alongside Kafka itself, including Kafka Connect and Kafka Streams, it adds components that extend Kafka’s functionality, such as Confluent Schema Registry, ksqlDB, Confluent Control Center, and a library of pre-built connectors.

51. Explain the storage format of Kafka logs.

Ans:

Kafka stores each partition's log on disk as a series of log segments. Each segment is a sequentially written file that contains messages. New messages are always appended to the active (newest) segment, and a segment is no longer appended to once Kafka rolls to a new one. Each segment has accompanying index files (an offset index and a time index) that allow efficient retrieval of messages.

52. How does Kafka handle message retention?

Ans:

Kafka handles message retention through configurable retention policies. Messages in a topic are retained for a specific duration or until a partition's log reaches a certain size. Once the retention criteria are met, the oldest log segments are deleted to free up space.

53. Name some Kafka client libraries for different programming languages.

Ans:

Kafka client libraries are available for various programming languages, including:

- Java (Apache Kafka clients)

- Python (confluent_kafka)

- Scala (Alpakka Kafka for Akka Streams)

- .NET (Confluent.Kafka)

- Go (sarama)

- Node.js (node-rdkafka)

- Ruby (ruby-kafka)

54. Explain the role of the Kafka Consumer API’s poll() method.

Ans:

The poll() method in the Kafka Consumer API fetches a batch of records from the subscribed topics on the Kafka brokers. How often poll() is called determines how quickly the consumer processes incoming messages. Calling it also keeps the consumer in its group. If the time between calls exceeds `max.poll.interval.ms`, the consumer is considered failed and its partitions are reassigned to other members.

55. How does Kafka achieve horizontal scalability?

Ans:

Kafka achieves horizontal scalability by distributing partitions across multiple brokers. Adding brokers to the cluster and increasing the number of partitions per topic lets Kafka scale horizontally to handle more load and data volume.

56. How can you test a Kafka producer?

Ans:

Kafka producers can be tested by:

- Verifying that messages are successfully sent to Kafka topics.

- Testing error handling and retry mechanisms.

- Assessing the performance and throughput under different conditions.

- Ensuring proper serialization and key assignment.

57. What strategies can be employed to test Kafka consumers?

Ans:

Strategies for testing Kafka consumers include:

- Validating proper consumption of messages from topics.

- Testing consumer group rebalancing.

- Assessing error handling and recovery.

- Verifying the consumer’s ability to handle different message loads.

58. How does increasing the number of partitions impact Kafka’s scalability?

Ans:

Increasing the number of partitions in Kafka enhances scalability by allowing more parallelism in data processing. With more partitions, Kafka can spread the workload across more brokers and consumers, which improves resource utilization and throughput.

59. Explain the Avro serialization format in Kafka.

Ans:

Avro is a compact binary serialization format commonly used with Kafka. It supports schema evolution, allowing the message structure to change over time. The Confluent Schema Registry is often used with Avro to store and manage schemas, ensuring compatibility across producers and consumers.

60. What is log compaction in Kafka?

Ans:

Log compaction is a feature in Kafka that retains only the latest record for each key in a log, ensuring that the log maintains the latest state of the data. It helps in scenarios where retaining the entire history of messages is not necessary, and only the most recent update for each key needs to be preserved.
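The following is a minimal model of what the log cleaner keeps. It assumes records are `(offset, key, value)` tuples, with `None` marking a tombstone (delete). `compact` is a hypothetical function. Real compaction runs in the background on closed segments only, never on the active segment. Kafka also keeps tombstones for `delete.retention.ms` so that consumers can observe the delete, whereas this sketch drops them immediately.

```python
from typing import Dict, List, Optional, Tuple

Record = Tuple[int, str, Optional[str]]   # (offset, key, value); value None = tombstone


def compact(log: List[Record]) -> List[Record]:
    """Keep only the newest record per key, preserving offsets and order.

    Offsets are never renumbered, so the compacted log has gaps. Tombstones are
    dropped immediately here, unlike Kafka, which keeps them for a while.
    """
    latest: Dict[str, int] = {}
    for offset, key, _ in log:
        latest[key] = offset            # a later offset for the same key wins
    return [
        (offset, key, value)
        for offset, key, value in log
        if latest[key] == offset and value is not None
    ]


log = [(0, "k1", "a"), (1, "k2", "b"), (2, "k1", "c"), (3, "k3", "d"), (4, "k2", None)]
print(compact(log))   # [(2, 'k1', 'c'), (3, 'k3', 'd')]
```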

61. What serialization formats does Kafka support?

Ans:

Kafka supports various serialisation formats, including:

- JSON

- Avro

- Protobuf

- Apache Thrift

- String (UTF-8)

- ByteArray

62. Describe the steps involved in deploying Kafka in a production environment.

Ans:

- Plan and Design: Determine the number of brokers, partitions, and replication factors. Design topics and partitioning strategies.

- Install Kafka: Install Kafka on dedicated servers or virtual machines following the platform-specific installation instructions.

- Configure Kafka: Adjust configuration files for producers, consumers, and brokers based on your deployment requirements.

- Set Up Zookeeper: Deploy and configure Zookeeper, which Kafka uses for coordination and management tasks. Clusters running in KRaft mode use Kafka's own controller quorum instead.

63. How can Kafka be used in a cloud-based environment?

Ans:

In a cloud-based environment, Kafka can be deployed on cloud services such as AWS, Azure, or Google Cloud. Cloud-based Kafka deployments offer scalability, flexibility, and managed services that simplify operational tasks. Kafka clusters can be provisioned as virtual machines, containerized applications, or fully managed services provided by cloud providers.

64. Explain the role of Kafka in serverless architectures.

Ans:

In serverless architectures, Kafka can be used as an event source or event bus to facilitate communication between serverless functions. Events generated by one function can be published to Kafka topics, and other functions can subscribe to these topics for event-driven processing. Kafka provides the decoupling required for serverless applications.

65. How can you ensure high availability in a Kafka deployment?

Ans:

To ensure high availability in a Kafka deployment:

- Deploy Kafka brokers on multiple servers.

- Use replication with multiple in-sync replicas (ISRs) for each partition.

- Implement proper monitoring to detect and address issues promptly.

- Set up Zookeeper in a highly available configuration.

- Consider deploying Kafka in multiple data centres for geographic redundancy.

66. When is log compaction useful?

Ans:

Log compaction is useful when:

- The goal is to maintain the latest state for each key in the log.

- The log has a large amount of data with frequent updates, and retaining the entire history is not essential.

- The log is used for maintaining changelog data, such as maintaining the state of a database.

67. What is consumer rebalancing in Kafka?

Ans:

Consumer rebalancing in Kafka occurs when consumers join or leave a consumer group or when the number of partitions changes. During rebalancing, Kafka redistributes partitions among consumers to ensure an even distribution of the workload and maintain parallelism.

68. How does Kafka handle consumer failures during rebalancing?

Ans:

When a consumer fails, the group coordinator detects it, either through missed heartbeats or because the consumer stopped calling poll(), and triggers a rebalance. The failed consumer's partitions are reassigned to the remaining consumers in the group, which resume from the last committed offsets. Messages that were processed but not yet committed may be processed again, so this provides at-least-once processing unless exactly-once mechanisms are also used.

69. Name some tools used for monitoring Kafka.

Ans:

Some tools used for monitoring Kafka include:

- Prometheus

- Grafana

- Confluent Control Center

- JMX (Java Management Extensions)

- Burrow

- Datadog

- New Relic

70. How can you use Prometheus to monitor Kafka?

Ans:

To use Prometheus to monitor Kafka:

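- Expose Kafka's JMX metrics in a format Prometheus can scrape, typically by running the Prometheus JMX exporter as a Java agent on each broker.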

- Set up a Prometheus server and configure it to scrape Kafka metrics.

- Use Grafana or Prometheus’s built-in UI to visualize and create dashboards for Kafka metrics.

71. Explain the role of in-sync replicas (ISRs) in Kafka.

Ans:

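In-sync replicas (ISRs) are the replicas of a partition that are fully caught up with the leader, within `replica.lag.time.max.ms`. By default, Kafka elects a new leader only from the ISR. Electing an out-of-sync replica requires enabling `unclean.leader.election.enable` and risks data loss. ISRs help maintain data consistency and prevent data loss during broker failures.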
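Clean leader election can be sketched as choosing the first replica in the partition's replica list that is both alive and in the ISR. `elect_leader` is a hypothetical helper. Its `unclean_allowed` flag plays the role of the `unclean.leader.election.enable` setting, which is off by default.

```python
from typing import List, Optional, Set


def elect_leader(replicas: List[int], isr: Set[int], live_brokers: Set[int],
                 unclean_allowed: bool = False) -> Optional[int]:
    """Choose a new leader for a partition whose leader has failed (simplified)."""
    # Clean election: first replica, in assignment order, that is alive and in sync
    for broker in replicas:
        if broker in live_brokers and broker in isr:
            return broker
    # Unclean election: any live replica, even one missing committed messages
    if unclean_allowed:
        for broker in replicas:
            if broker in live_brokers:
                return broker
    return None   # partition stays offline until an in-sync replica comes back


# Replicas on brokers 1, 2, 3; broker 1 (the old leader) has just failed
print(elect_leader([1, 2, 3], isr={1, 2}, live_brokers={2, 3}))   # 2
print(elect_leader([1, 2, 3], isr={1}, live_brokers={2, 3}))      # None
```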

72. What is the default retention period for Kafka topics?

Ans:

The default retention period for Kafka topics is seven days (`log.retention.hours=168`). Once messages are older than the retention period, they become eligible for deletion under the configured retention policy.

73. What factors should be considered when designing the architecture for a Kafka-based system?

Ans:

Considerations for Kafka-based system architecture include:

- Number of brokers and partitions for scalability.

- Replication factors for fault tolerance.

- Message serialisation formats.

- Security measures such as encryption and authentication.

- Monitoring and logging strategies.

74. How can you configure data retention in Kafka?

Ans:

Data retention in Kafka is configured in the broker configuration. Time-based retention uses `log.retention.ms` (or `log.retention.minutes` / `log.retention.hours`), and size-based retention uses `log.retention.bytes`. Individual topics can override these with `retention.ms` and `retention.bytes`. Together, these properties determine how long messages are kept, or how large a partition's log can grow before old messages are deleted.

75. What is a dead-letter queue in Kafka?

Ans:

A dead-letter queue in Kafka is a special topic where messages that cannot be processed successfully are sent. It allows for the isolation and reprocessing of failed messages, enabling developers to analyze and address issues without impacting the main processing flow.

76. Name some popular Kafka connectors.

Ans:

Some popular Kafka connectors include:

- JDBC Source and Sink Connectors

- Elasticsearch Sink Connector

- HDFS Sink Connector

- Amazon S3 Sink Connector

- Twitter Source Connector

- Debezium CDC Connector

- MongoDB Connector

77. How can you implement a dead-letter queue in Kafka?

Ans:

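To implement a dead-letter queue in Kafka, have the consuming application catch messages it fails to process and produce them, often with error details in headers, to a designated topic (the dead-letter queue). Consumers of that topic can then handle the failed messages separately, retrying them or analyzing what caused the failures. Kafka Connect sink connectors support this pattern natively through `errors.tolerance=all` and `errors.deadletterqueue.topic.name`.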
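Here is a minimal sketch of the consumer-side pattern, assuming JSON-encoded records and a caller-supplied handler. The `process_with_dlq` function is hypothetical, and a plain list stands in for the dead-letter topic. A real application would produce each failed record to that topic instead.

```python
import json
from typing import Any, Callable, Dict, List


def process_with_dlq(records: List[bytes], handler: Callable[[Dict[str, Any]], None],
                     dlq: List[Dict[str, Any]], max_attempts: int = 3) -> int:
    """Process each record; after max_attempts failures, route it to the DLQ.

    `dlq` stands in for a dead-letter topic. Returns the number of records
    processed successfully.
    """
    succeeded = 0
    for raw in records:
        last_error = None
        for _ in range(max_attempts):
            try:
                handler(json.loads(raw))   # decoding errors count as failures too
                succeeded += 1
                last_error = None
                break
            except Exception as exc:
                last_error = exc
        if last_error is not None:
            # Keep the original bytes and the error so the record can be inspected or replayed
            dlq.append({"payload": raw, "error": repr(last_error), "attempts": max_attempts})
    return succeeded


def handle_payment(event: Dict[str, Any]) -> None:
    if event["amount"] < 0:
        raise ValueError("negative amount")


dead_letters: List[Dict[str, Any]] = []
ok = process_with_dlq([b'{"amount": 5}', b'{"amount": -1}', b"not json"], handle_payment, dead_letters)
print(ok, [d["payload"] for d in dead_letters])   # 1 [b'{"amount": -1}', b'not json']
```

Retrying a record that can never be parsed, such as `b"not json"`, only wastes attempts. Implementations often send such permanent errors straight to the dead-letter topic.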

78. Explain the integration of Kafka with Apache Flink.

Ans:

Apache Flink can integrate with Kafka to consume, process, and produce data in real time. Flink’s Kafka connectors allow for seamless integration, and Flink can act as both a Kafka producer and consumer, making it suitable for stream processing applications.

79. What role does Apache Camel play in Kafka integration?

Ans:

- Integration Framework: Apache Camel is an open-source integration framework that simplifies the integration of various systems, protocols, and data formats.

- Kafka Component: Apache Camel includes a Kafka component that provides out-of-the-box support for integrating with Apache Kafka.

- Abstraction of Kafka Operations: Camel abstracts the complexities of Kafka operations, making it easier to produce and consume messages without dealing with low-level Kafka APIs.

- Unified Integration: Allows developers to define routes and transformations using a domain-specific language, promoting a unified and expressive way to integrate Kafka with other systems.

80. How does Apache Flink consume data from Kafka?

Ans:

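Apache Flink consumes data from Kafka through its Kafka connector. In current Flink versions this is the `KafkaSource`, which supersedes the older, deprecated `FlinkKafkaConsumer`. The source subscribes to Kafka topics, reads messages, and processes them as part of Flink’s stream-processing application. With checkpointing enabled, Flink stores the Kafka offsets in its checkpoints, which lets it provide exactly-once guarantees for its internal state when consuming data from Kafka.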

81. Describe the integration of Kafka with Apache Camel.

Ans:

Apache Camel provides a Kafka component that allows for easy integration with Kafka. With Camel, you can produce and consume messages from Kafka topics using various Camel components and endpoints, making it convenient for developers to incorporate Kafka into their applications.

82. What should be taken into account when running Kafka on Kubernetes?

Ans:

Considerations for running Kafka on Kubernetes include:

- Persistent storage for data retention.

- Resource allocation for CPU and memory.

- Configuring network policies for communication.

- Handling external access to Kafka brokers.

- Properly configuring StatefulSets for stable pod identities.

- Monitoring and logging setup for Kubernetes-based Kafka clusters.

83. How can you create a custom connector in Kafka Connect?

Ans:

To create a custom connector in Kafka Connect, extend the `SourceConnector` or `SinkConnector` class, together with a matching `SourceTask` or `SinkTask`, to define how data is read from or written to the external system. Package the connector into a JAR file, place it on the worker's `plugin.path`, and configure Kafka Connect to use the custom connector.

84. How can Kafka be used in Internet of Things (IoT) applications?

Ans:

Kafka is used in IoT applications to handle the massive volume of real-time data generated by sensors and devices. Kafka facilitates data ingestion, processing, and analytics by acting as a reliable and scalable messaging platform. It enables seamless communication between IoT devices and backend systems.

85. How can you monitor and address consumer lag in Kafka?

Ans:

- Monitor consumer lag: Periodically check consumer lag metrics to see how far each consumer's committed position trails the latest message in each partition.

- Use consumer lag tools: Tools such as Confluent Control Center, CMAK (formerly Kafka Manager), and Burrow give insight into the health and lag of your consumer groups.

- Set up lag alerts: Alert on consumer lag thresholds to get real-time notifications of possible problems.

- Reduce lag: Add consumers to the group (up to the number of partitions), speed up per-message processing, or increase the number of partitions.

86. Explain the role of Kafka in real-time data streaming for IoT.

Ans:

In real-time data streaming for IoT, Kafka acts as a central hub for ingesting and processing data from sensors and devices. It ensures reliable and low-latency communication between devices and backend systems, enabling real-time analytics, monitoring, and decision-making in IoT applications.

87. What is consumer lag in Kafka?

Ans:

Consumer lag in Kafka refers to the delay or difference between the latest available message in a partition and the offset of the last processed message by a consumer. Consumer lag occurs when a consumer is not able to keep up with the incoming stream of messages.
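Measured in messages, per-partition lag is the log-end offset minus the consumer group's committed offset (Kafka commits the offset of the next record to read). The `consumer_lag` helper below is hypothetical and works on plain dictionaries. A real tool would fetch both offsets from the brokers.

```python
from typing import Dict


def consumer_lag(log_end_offsets: Dict[int, int],
                 committed_offsets: Dict[int, int]) -> Dict[int, int]:
    """Per-partition lag in messages: log-end offset minus committed offset.

    A partition without a committed offset is treated as lagging from offset 0,
    a simplification; a real consumer's start position depends on
    auto.offset.reset and the log start offset.
    """
    return {
        partition: max(0, end - committed_offsets.get(partition, 0))
        for partition, end in log_end_offsets.items()
    }


lag = consumer_lag({0: 1_500, 1: 900, 2: 40}, {0: 1_480, 1: 900})
print(lag, sum(lag.values()))   # {0: 20, 1: 0, 2: 40} 60
```

`kafka-consumer-groups.sh --bootstrap-server <broker_address> --describe --group <group_name>` reports the same quantities in its CURRENT-OFFSET, LOG-END-OFFSET, and LAG columns.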

88. How can Kafka be used in compliance with GDPR regulations?

Ans:

To comply with GDPR regulations, Kafka implementations should include encryption, access controls, and audit logs. Anonymization techniques should be applied to sensitive data, and proper data retention policies should be defined. Consent management and data subject rights should also be considered in Kafka-based systems.

89. Explain the deployment of Kafka in a Kubernetes environment.

Ans:

Deploying Kafka in a Kubernetes environment involves creating Kafka broker pods, configuring services, and using Kubernetes StatefulSets to manage Kafka broker instances. Kubernetes Operators and Helm charts are commonly used to simplify the deployment and management of Kafka clusters on Kubernetes.

90. Explain the concept of data anonymization in Kafka.

Ans:

Data anonymization in Kafka involves replacing or modifying sensitive information in messages to protect the privacy of individuals. Techniques may include masking, tokenization, or generalization of data. Anonymization is crucial for complying with data privacy regulations and ensuring ethical data processing.

91. Describe the integration of Kafka with machine learning pipelines.

Ans:

- Ingesting data through event streaming: Use Kafka as an event streaming platform to collect data from multiple sources, such as logs, databases, and sensors.

- Processing data in real time: Use Kafka Streams or other stream processing frameworks for real-time data transformations, aggregations, and enrichment.

- Event-driven model training: Build an event-driven architecture in which relevant events in the Kafka stream trigger the training of machine learning models.

92. How can Kafka be used in real-time data processing for machine learning?

Ans:

Kafka supports real-time data processing for machine learning by providing a continuous stream of data that can be fed into machine learning models. Producers can publish raw data to Kafka topics, and consumers can process, preprocess, and feed the data into machine learning pipelines for training and inference.

93. What is log indexing in Kafka?

Ans:

Log indexing in Kafka involves creating an index of messages within a log segment to facilitate efficient message retrieval based on offsets or timestamps. Indexing improves the speed of message lookups and is crucial for optimizing the performance of Kafka consumers.

94. How does Kafka use indexes to improve message retrieval?

Ans:

Kafka organizes each partition's data into log segments, which are append-only files. Every log segment has a corresponding offset index file.

- Index entries: Each entry in the index maps a message offset (stored relative to the segment's base offset) to the message's physical byte position in the segment file. The index is sparse. Kafka adds an entry only after a configurable amount of data (`index.interval.bytes`) has been written, not for every message.

- Binary search: To find the message with a given offset, Kafka binary-searches the index for the largest entry at or below that offset.

- Sequential scan: Kafka then reads the segment forward from that byte position to the target message, so it never has to scan the whole log.
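The lookup can be sketched in Python with two hypothetical helpers. `build_segment` lays records out back to back and indexes every `every_n`-th one. `find` binary-searches the index with `bisect` and then scans forward. Kafka decides when to add an index entry based on bytes written and stores offsets relative to the segment's base offset. For readability, this sketch indexes by record count and uses absolute offsets.

```python
import bisect
from typing import List, Optional, Tuple

Record = Tuple[int, int, bytes]   # (offset, byte position in segment, payload)


def build_segment(base_offset: int, payloads: List[bytes],
                  every_n: int) -> Tuple[List[Record], List[Tuple[int, int]]]:
    """Lay records out back to back and index every `every_n`-th one (sparse index)."""
    records: List[Record] = []
    index: List[Tuple[int, int]] = []      # (offset, byte position)
    position = 0
    for i, payload in enumerate(payloads):
        offset = base_offset + i
        if i % every_n == 0:
            index.append((offset, position))
        records.append((offset, position, payload))
        position += len(payload)
    return records, index


def find(records: List[Record], index: List[Tuple[int, int]],
         target: int) -> Tuple[Optional[bytes], int]:
    """Return (payload, records_scanned) for the record at offset `target`."""
    # Binary search: largest index entry whose offset is <= target
    i = bisect.bisect_right([o for o, _ in index], target) - 1
    start_pos = index[i][1] if i >= 0 else 0
    # "Seek" to that byte position, then scan forward sequentially
    k = bisect.bisect_left([pos for _, pos, _ in records], start_pos)
    scanned = 0
    for offset, _, payload in records[k:]:
        scanned += 1
        if offset == target:
            return payload, scanned
        if offset > target:
            break
    return None, scanned


records, index = build_segment(100, [f"r{i}".encode() for i in range(10)], every_n=4)
print(index)                        # [(100, 0), (104, 8), (108, 16)]
print(find(records, index, 106))    # (b'r6', 3)
```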

95. Explain the use of Kafka in Change Data Capture scenarios.

Ans:

Kafka is commonly used in Change Data Capture (CDC) scenarios to capture and propagate database changes in real time. A CDC tool, such as a Debezium source connector, reads the database's transaction log and publishes change events to Kafka topics. Downstream consumers subscribe to these topics to process the database changes.