- Understanding Queues and FIFO Principle

- Java Queue Interface Overview

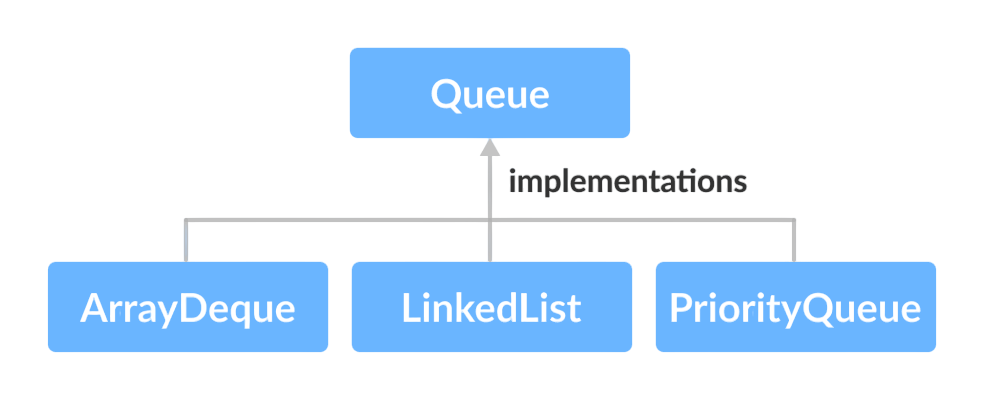

- Common Implementations (LinkedList, PriorityQueue)

- Enqueue and Dequeue Operations

- Peeking vs Polling

- Blocking Queues: ArrayBlockingQueue, LinkedBlockingQueue

- Thread-Safe Queues (ConcurrentLinkedQueue)

- Bounded vs Unbounded Queues

- Iterating over a Queue

- Use Cases in Applications

- Performance and Choosing the Right Queue

- Code Examples and Interview Tips

Understanding Queues and FIFO Principle

A queue in Java is a linear data structure that follows the FIFO (First-In-First-Out) principle: the element added first is removed first, much like people standing in line at a ticket counter. Elements are added at the back and removed from the front, which gives a fair and predictable flow. Queues are an essential concept in data structures and algorithms, widely used in scheduling, buffering, and asynchronous data transfer. In Java, Queue is defined as an interface in the java.util package. While FIFO is the standard behavior, Java’s queue framework also includes variations such as priority queues, where order is determined by natural ordering or comparator logic rather than insertion sequence.

Java Queue Interface Overview

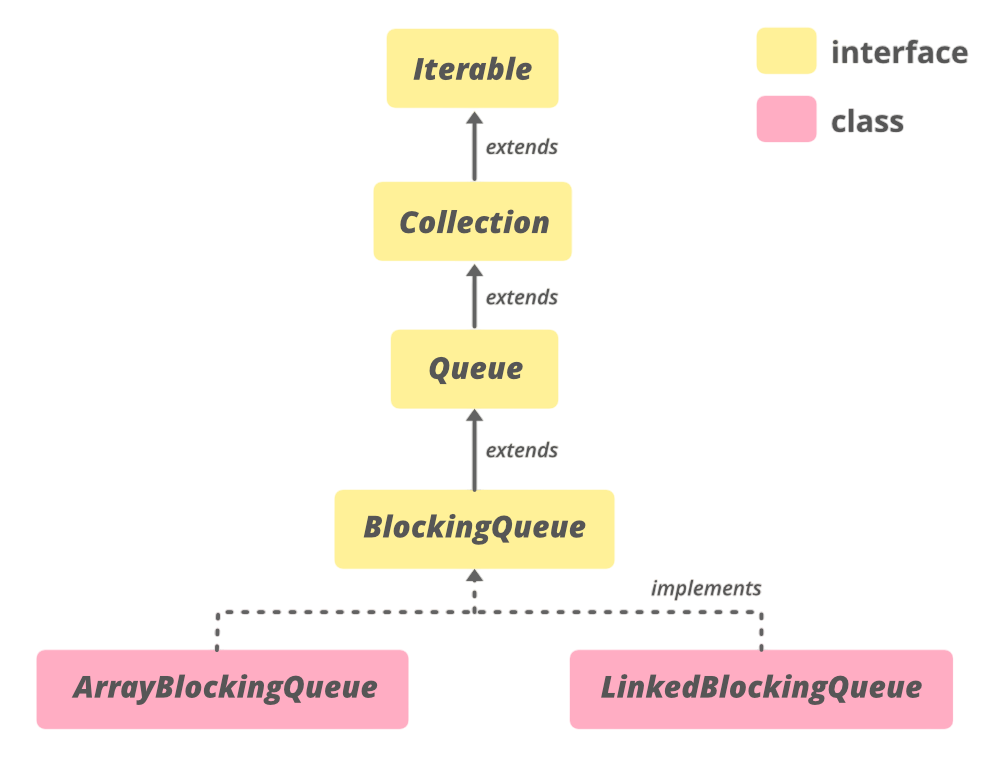

The Queue interface in Java extends the Collection interface, so it inherits all standard collection methods while adding queue-specific ones. It defines the standard operations for queue data structures, which lets developers work with many queue types, including linked lists and priority queues, through one common set of methods. The interface is generic (`Queue<E>`), and its core methods are:

- offer(E e) – Inserts an element if possible; returns false instead of throwing an exception when a capacity-restricted queue is full.

- add(E e) – Inserts an element; throws IllegalStateException when a capacity-restricted queue is full.

- poll() – Retrieves and removes the head, returning null if the queue is empty.

- remove() – Retrieves and removes the head, throwing NoSuchElementException if the queue is empty.

- peek() – Retrieves the head without removing it, returning null if the queue is empty.

- element() – Retrieves the head without removing it, throwing NoSuchElementException if the queue is empty.
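The paired methods above are easiest to tell apart at the two edge cases: a full queue and an empty one. The following is a minimal sketch, not code from the article; it assumes an ArrayBlockingQueue with a capacity of one simply because that is a convenient capacity-restricted Queue, and the class and method names are illustrative.

```java
import java.util.NoSuchElementException;
import java.util.Queue;
import java.util.concurrent.ArrayBlockingQueue;

class QueueMethodPairs {

    // What offer() and add() do when a capacity-restricted queue is already full.
    static String onFullQueue() {
        Queue<String> queue = new ArrayBlockingQueue<>(1); // room for one element
        queue.add("A");                                    // succeeds: queue was empty

        boolean offered = queue.offer("B");                // no room: returns false
        String added;
        try {
            queue.add("B");                                // no room: throws
            added = "ok";
        } catch (IllegalStateException e) {
            added = "IllegalStateException";
        }
        return "offer=" + offered + ", add=" + added;
    }

    // What the four retrieval methods do on an empty queue.
    static String onEmptyQueue() {
        Queue<String> queue = new ArrayBlockingQueue<>(1);

        String removed;
        try {
            removed = queue.remove();
        } catch (NoSuchElementException e) {
            removed = "NoSuchElementException";
        }
        String element;
        try {
            element = queue.element();
        } catch (NoSuchElementException e) {
            element = "NoSuchElementException";
        }
        return "poll=" + queue.poll() + ", peek=" + queue.peek()
                + ", remove=" + removed + ", element=" + element;
    }

    public static void main(String[] args) {
        System.out.println(onFullQueue());
        // offer=false, add=IllegalStateException
        System.out.println(onEmptyQueue());
        // poll=null, peek=null, remove=NoSuchElementException, element=NoSuchElementException
    }
}
```

In short, offer(), poll(), and peek() signal failure through their return value, while add(), remove(), and element() signal it with an exception.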

Common Implementations (LinkedList, PriorityQueue)

Java provides several classes that implement the Queue interface, each with unique properties. In a LinkedList, each node points to the next, so adding or removing items at the ends is simple and requires no shifting of other elements. A PriorityQueue, by contrast, releases elements according to their priority rather than the order in which they were added. It is built on a heap so that the highest-priority element can be located and removed quickly.

- LinkedList – Implements both List and Queue, making it versatile. Ideal for FIFO operations where insertion and removal at both ends are frequent.

- PriorityQueue – Orders elements according to natural ordering or a specified comparator. Does not follow strict FIFO but instead removes elements in priority order.

- ArrayDeque – A resizable array implementation of Deque, faster than LinkedList for most queue operations.

Each implementation differs in performance characteristics, making the choice dependent on the application’s needs.
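To make the ordering difference concrete, the sketch below fills three queues with the same elements and drains each one. The helper names (fill, drain) and the sample strings are assumptions made for illustration only.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;
import java.util.Queue;

class OrderingDemo {

    // Adds the same three elements, in the same order, to whichever queue is passed in.
    static Queue<String> fill(Queue<String> queue) {
        queue.offer("pear");
        queue.offer("fig");
        queue.offer("banana");
        return queue;
    }

    // Removes every element and returns them in removal order.
    static List<String> drain(Queue<String> queue) {
        List<String> out = new ArrayList<>();
        while (!queue.isEmpty()) {
            out.add(queue.poll());
        }
        return out;
    }

    public static void main(String[] args) {
        Queue<String> fifo = new ArrayDeque<>();
        Queue<String> natural = new PriorityQueue<>();
        Comparator<String> byLength = Comparator.comparingInt(String::length);
        Queue<String> shortestFirst = new PriorityQueue<>(byLength);

        System.out.println(drain(fill(fifo)));          // [pear, fig, banana]  (insertion order)
        System.out.println(drain(fill(natural)));       // [banana, fig, pear]  (alphabetical)
        System.out.println(drain(fill(shortestFirst))); // [fig, pear, banana]  (by length)
    }
}
```

Because all three variables are typed as Queue, swapping one implementation for another changes the removal order without touching the calling code.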

Enqueue and dequeue methods:

- Enqueue: offer() or add() methods.

- Dequeue: poll() or remove() methods.

```java
import java.util.LinkedList;
import java.util.Queue;

Queue<String> queue = new LinkedList<>();
queue.offer("A");
queue.offer("B");
queue.poll(); // removes "A"
```

Peek versus poll:

- peek() – Returns the head without removing it.

- poll() – Returns and removes the head.

This distinction is crucial in applications like message processing, where you might want to preview an item before deciding to remove it.

Blocking queue implementations:

- ArrayBlockingQueue – Fixed-size queue backed by an array; elements are ordered FIFO.

- LinkedBlockingQueue – Optionally bounded and backed by linked nodes; typically offers higher throughput than array-based queues under contention.

Blocking queues block threads when they attempt to dequeue from an empty queue or enqueue to a full queue, making them ideal for concurrent applications.

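Iterating with an enhanced for-loop:

```java
Queue<String> queue = new LinkedList<>();
queue.offer("A");
queue.offer("B");

// Visits each element without removing it
for (String item : queue) {
    System.out.println(item);
}
```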

Typical use cases:

- Task Scheduling – Managing jobs in a print queue or OS scheduler.

- Order Processing – E-commerce checkout systems.

- Messaging Systems – Kafka or RabbitMQ consumers often process messages in queue-like structures.

- Traffic Management – Network packets queued before processing.

- Multithreading – Producer-consumer scenarios using BlockingQueue.

Performance characteristics:

- LinkedList – O(1) insertion/removal at both ends but higher memory overhead.

- ArrayDeque – Faster for most queue operations but not thread-safe.

- PriorityQueue – O(log n) insertion and head removal due to heap-based ordering.

- Blocking Queues – May incur locking overhead but ensure thread safety.

Choosing the right queue involves balancing speed, memory, ordering requirements, and thread safety.

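PriorityQueue example:

```java
import java.util.PriorityQueue;
import java.util.Queue;

Queue<Integer> numbers = new PriorityQueue<>();
numbers.offer(3);
numbers.offer(1);
numbers.offer(2);

// poll() always returns the smallest remaining element
while (!numbers.isEmpty()) {
    System.out.println(numbers.poll());
}
```

Output:

```
1
2
3
```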

Enqueue and Dequeue Operations

In queue terminology, enqueue means adding an element to the tail of the queue, and dequeue means removing the head element. In Java, offer() and add() enqueue, while poll() and remove() dequeue.

Using offer() instead of add() is generally preferred when the queue has capacity restrictions, because a full queue then produces a false return value rather than an exception.

Peeking vs Polling

Peeking and polling both return the head element; the difference lies in removal. Peeking leaves the element in the queue, while polling takes it out.
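One way to picture the difference is a loop that peeks at the head and polls only when a condition holds. The sketch below is an illustration under assumptions: the queue of job sizes, the budget, and the method name takeWithinBudget are all hypothetical and do not come from the article.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

class PeekBeforePoll {

    // Takes jobs from the head of the queue for as long as the next job fits the remaining budget.
    static List<Integer> takeWithinBudget(Queue<Integer> jobs, int budget) {
        List<Integer> taken = new ArrayList<>();
        Integer head;
        // peek() inspects the head (null when empty) without changing the queue
        while ((head = jobs.peek()) != null && head <= budget) {
            budget -= jobs.poll(); // poll() actually removes the element we just inspected
            taken.add(head);
        }
        return taken;
    }

    public static void main(String[] args) {
        Queue<Integer> jobs = new ArrayDeque<>();
        jobs.offer(3);
        jobs.offer(4);
        jobs.offer(5);

        System.out.println(takeWithinBudget(jobs, 8)); // [3, 4]
        System.out.println(jobs);                      // [5] stays queued: it did not fit
    }
}
```

If the loop used poll() alone, the job that did not fit would already have been removed by the time the check failed.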

Blocking Queues: ArrayBlockingQueue, LinkedBlockingQueue

BlockingQueue is a subinterface of Queue in java.util.concurrent designed for thread-safe producer-consumer patterns. Its implementations, such as ArrayBlockingQueue and LinkedBlockingQueue, control data exchange between producer and consumer threads: put() blocks a producer while the queue is full, and take() blocks a consumer while it is empty. ArrayBlockingQueue has a finite capacity, set at construction, and is backed by a fixed-size array. LinkedBlockingQueue is based on linked nodes and is optionally bounded; without an explicit capacity it holds up to Integer.MAX_VALUE elements, which makes it effectively unbounded and more flexible under varying workloads.
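A producer-consumer exchange over a small ArrayBlockingQueue might look like the sketch below. It is a minimal illustration, not a production pattern: the capacity of two, the integer payload, and the POISON_PILL end-of-stream marker are assumptions chosen to keep the example short.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class ProducerConsumerDemo {

    private static final int POISON_PILL = -1; // hypothetical "no more items" marker

    // Runs one producer and one consumer and returns what the consumer received, in order.
    static List<Integer> run(int itemCount) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2); // deliberately tiny
        List<Integer> consumed = new ArrayList<>();                 // touched only by the consumer

        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < itemCount; i++) {
                    queue.put(i);        // waits while the queue is full
                }
                queue.put(POISON_PILL);  // tell the consumer to stop
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread consumer = new Thread(() -> {
            try {
                while (true) {
                    int item = queue.take(); // waits while the queue is empty
                    if (item == POISON_PILL) {
                        break;
                    }
                    consumed.add(item);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join(); // after join(), reading 'consumed' from this thread is safe
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return consumed;
    }

    public static void main(String[] args) {
        System.out.println(run(5)); // [0, 1, 2, 3, 4]
    }
}
```

With a single producer and a single consumer, FIFO ordering means the items arrive in the order they were put, even though the producer is repeatedly paused by the full queue.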

Thread-Safe Queues (ConcurrentLinkedQueue)

The ConcurrentLinkedQueue class is a lock-free, thread-safe queue implementation based on linked nodes. Because its operations never block the calling thread, it can scale better than blocking queues in highly concurrent environments, which makes it well suited to message passing in multi-threaded applications where low latency is critical. Thread safety matters whenever several threads access shared data at once: without it, concurrent applications are exposed to race conditions, data corruption, and unexpected behavior. Thread-safe queues avoid these problems and give threads a dependable way to communicate.
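As a rough illustration of that thread safety, the sketch below has several threads call offer() on one shared ConcurrentLinkedQueue and then counts the result. The thread and item counts are arbitrary assumptions, and the class name is illustrative.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

class ConcurrentOfferDemo {

    // Starts the given number of threads, each adding perThread elements, and returns the final size.
    static int offerFromThreads(int threads, int perThread) {
        Queue<Integer> queue = new ConcurrentLinkedQueue<>();
        Thread[] workers = new Thread[threads];

        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    queue.offer(i); // never blocks and needs no external locking
                }
            });
            workers[t].start();
        }

        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        // size() traverses the whole queue, so it is O(n); acceptable for a one-off check
        return queue.size();
    }

    public static void main(String[] args) {
        System.out.println(offerFromThreads(4, 1000)); // 4000: no insertions were lost
    }
}
```

Running the same loop against a plain LinkedList shared between threads gives no such guarantee, since LinkedList is not synchronized.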

Bounded vs Unbounded Queues

A bounded queue has a fixed capacity, and attempts to add elements beyond its limit either fail or block, depending on the implementation and the method called. Examples include ArrayBlockingQueue and a bounded LinkedBlockingQueue. An unbounded queue, such as ConcurrentLinkedQueue or an unbounded LinkedBlockingQueue, grows dynamically as needed and is limited only by available memory. Choosing between the two depends on whether you need to cap memory usage and keep behavior predictable under load, or would rather never reject an element.
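The difference shows up as soon as more elements are offered than a bounded queue can hold. The sketch below uses LinkedBlockingQueue for both cases, since it supports either mode; the capacity of two and the helper name acceptedCount are assumptions for illustration.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class BoundedVsUnbounded {

    // Offers 'attempts' elements without waiting and counts how many the queue accepted.
    static int acceptedCount(BlockingQueue<Integer> queue, int attempts) {
        int accepted = 0;
        for (int i = 0; i < attempts; i++) {
            if (queue.offer(i)) { // returns false immediately when there is no room
                accepted++;
            }
        }
        return accepted;
    }

    public static void main(String[] args) {
        BlockingQueue<Integer> bounded = new LinkedBlockingQueue<>(2); // capacity of two
        BlockingQueue<Integer> unbounded = new LinkedBlockingQueue<>(); // capacity Integer.MAX_VALUE

        System.out.println(acceptedCount(bounded, 5));   // 2
        System.out.println(acceptedCount(unbounded, 5)); // 5
        System.out.println(bounded.remainingCapacity()); // 0
    }
}
```

With put() instead of offer(), the third insertion into the bounded queue would block the caller until a consumer made room, which is how bounded queues apply back-pressure to fast producers.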

Iterating over a Queue

While queues are typically used for FIFO operations, sometimes you need to inspect all elements without altering the structure. This can be done using enhanced for-loops or iterators, neither of which removes anything from the queue.

However, keep in mind that iteration does not guarantee any particular order in implementations like PriorityQueue, where elements are stored in heap order rather than in sorted or insertion order.
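When the elements of a PriorityQueue are needed in priority order but the queue itself must stay intact, one option is to drain a copy. This is a sketch of that idea, with illustrative names; it relies on the PriorityQueue copy constructor, which keeps the source queue's ordering.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

class IterationOrderDemo {

    // Returns the elements in priority order while leaving the original queue untouched.
    static List<Integer> inPriorityOrder(PriorityQueue<Integer> queue) {
        PriorityQueue<Integer> copy = new PriorityQueue<>(queue); // same elements, same ordering
        List<Integer> out = new ArrayList<>();
        while (!copy.isEmpty()) {
            out.add(copy.poll());
        }
        return out;
    }

    public static void main(String[] args) {
        PriorityQueue<Integer> numbers = new PriorityQueue<>();
        for (int n : new int[] {5, 1, 4, 2, 3}) {
            numbers.offer(n);
        }

        // Iteration follows the internal heap layout, so the order printed here is not guaranteed
        for (int n : numbers) {
            System.out.print(n + " ");
        }
        System.out.println();

        System.out.println(inPriorityOrder(numbers)); // [1, 2, 3, 4, 5]
        System.out.println(numbers.size());           // 5: nothing was removed from the original
    }
}
```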

Use Cases in Applications

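Queues are widely used in real-world applications, from task scheduling and order processing to messaging systems, network traffic management, and producer-consumer multithreading.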

Performance and Choosing the Right Queue

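Performance varies based on implementation: linked and array-backed queues offer constant-time operations at the ends, heap-backed queues pay O(log n) to maintain ordering, and blocking queues trade some speed for thread safety.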

Code Examples and Interview Tips

Be prepared to explain the difference between poll() and remove(), when to use BlockingQueue vs ConcurrentLinkedQueue, and how a PriorityQueue maintains order internally.
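For the last of those questions, it can help to have a small binary min-heap in mind. The class below is a teaching sketch only: it is not the JDK's PriorityQueue source, it handles just int values, and the name MinHeapSketch is made up for this example. It shows the two moves that keep the smallest element at the root, sifting up after an insert and sifting down after a removal.

```java
import java.util.ArrayList;
import java.util.List;

class MinHeapSketch {

    // The heap is stored as a list: the children of index i live at 2i+1 and 2i+2.
    private final List<Integer> heap = new ArrayList<>();

    // Insert at the end, then swap upward while the new value is smaller than its parent.
    void offer(int value) {
        heap.add(value);
        int i = heap.size() - 1;
        while (i > 0) {
            int parent = (i - 1) / 2;
            if (heap.get(parent) <= heap.get(i)) {
                break; // heap property restored
            }
            swap(i, parent);
            i = parent;
        }
    }

    // Remove the root, move the last element to the top, then swap downward with the smaller child.
    Integer poll() {
        if (heap.isEmpty()) {
            return null; // mirrors Queue.poll() on an empty queue
        }
        int root = heap.get(0);
        int last = heap.remove(heap.size() - 1);
        if (!heap.isEmpty()) {
            heap.set(0, last);
            int i = 0;
            while (true) {
                int left = 2 * i + 1;
                int right = left + 1;
                int smallest = i;
                if (left < heap.size() && heap.get(left) < heap.get(smallest)) {
                    smallest = left;
                }
                if (right < heap.size() && heap.get(right) < heap.get(smallest)) {
                    smallest = right;
                }
                if (smallest == i) {
                    break; // both children are larger or absent
                }
                swap(i, smallest);
                i = smallest;
            }
        }
        return root;
    }

    private void swap(int a, int b) {
        int tmp = heap.get(a);
        heap.set(a, heap.get(b));
        heap.set(b, tmp);
    }

    public static void main(String[] args) {
        MinHeapSketch queue = new MinHeapSketch();
        queue.offer(3);
        queue.offer(1);
        queue.offer(2);
        System.out.println(queue.poll() + " " + queue.poll() + " " + queue.poll()); // 1 2 3
    }
}
```

Each offer or poll walks at most one path between the root and a leaf, which is where the O(log n) cost comes from. It also explains why iterating over the backing storage does not yield sorted output: only the root is guaranteed to be the minimum.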
