These AWS SysOps interview questions are designed to acquaint you with the kinds of questions you may encounter in an AWS SysOps interview. In my experience, good interviewers rarely plan to ask any particular question; they usually start with a basic concept and continue based on the discussion and your answers. We cover the top 100 AWS SysOps interview questions with detailed answers, including scenario-based questions, questions for freshers, and questions for experienced candidates.

1. Define SysOps?

Ans:

Let’s start with the fundamentals before we get into the questions. The practice of overseeing and maintaining an organization’s IT infrastructure is known as systems operations or SysOps. This covers everything, including servers, networks, hardware, and software.

2. What are a SysOps Engineer’s primary duties?

Ans:

The stability, security, and scalability of an organization’s IT infrastructure are the responsibilities of a SysOps Engineer. This covers duties including system monitoring, problem-solving, putting security measures in place, and performance optimization.

3. How do vertical and horizontal scaling differ from one another?

Ans:

Vertical scaling means adding more resources (such as CPU, memory, or storage) to a single machine to boost its capacity, whereas horizontal scaling means adding more machines to a system.

4. What’s a load balancer used for?

Ans:

A load balancer distributes incoming network traffic across several servers so that no single server is overloaded and requests are processed quickly.
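To make the idea concrete, here is a minimal round-robin sketch in Python. Round robin is only one of several distribution strategies, and the server names and request IDs are hypothetical; a real load balancer also tracks target health and connection counts.

```python
from itertools import cycle


def round_robin(servers, requests):
    """Assign each request to the next server in turn."""
    rotation = cycle(servers)
    return [(request, next(rotation)) for request in requests]


# Hypothetical server names and request IDs, for illustration only.
assignments = round_robin(["server-a", "server-b", "server-c"], range(6))
for request, server in assignments:
    print(f"request {request} -> {server}")
```

With six requests and three servers, each server receives two requests.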

5. What is CloudFormation?

Ans:

AWS CloudFormation is a service that lets you define and manage AWS infrastructure resources as code. This promotes consistency across environments and helps automate resource provisioning.
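As a small illustration of infrastructure as code, the sketch below builds a CloudFormation template as a Python dictionary and serializes it to JSON. The logical ID `ExampleBucket` and the description text are made up for this example; the script only prints the template and does not create a stack.

```python
import json

# Minimal template: one S3 bucket with versioning enabled.
# "ExampleBucket" is a hypothetical logical ID chosen for this sketch.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Illustrative stack with a single versioned S3 bucket",
    "Resources": {
        "ExampleBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "VersioningConfiguration": {"Status": "Enabled"},
            },
        }
    },
}

template_body = json.dumps(template, indent=2)
print(template_body)
```

The resulting JSON could be saved to a file and supplied when creating a stack through the console, the CLI, or an SDK.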

6. What is an EC2 instance?

Ans:

An EC2 instance is a virtual machine that runs in the Amazon Web Services (AWS) cloud. It can be configured with different operating systems, applications, and services to suit specific business needs.

7. What’s an AMI?

Ans:

A virtual machine image that is already configured and used to build EC2 instances is called an Amazon Machine Image (AMI). It has all the data required to start an instance, including the operating system, application server, and apps.

8. What is a bucket in S3?

Ans:

Amazon Simple Storage Service (S3) is a reliable, scalable cloud object storage service. An S3 bucket is a container that holds objects, such as files or photos, in S3.

9. What is a VPC?

Ans:

Amazon Virtual Private Cloud (VPC) lets you create a private network inside the AWS cloud. It gives your resources a secure, isolated environment and gives you control over network traffic.

10. What is IAM?

Ans:

AWS Identity and Access Management (IAM) is a service that lets you control access to AWS resources. It lets you create and manage users, groups, and permissions so that only authorized people and applications can use your resources.
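Permissions in IAM are expressed as JSON policy documents. The sketch below builds a least-privilege, read-only policy for the objects in one bucket; the bucket name and the statement ID are hypothetical, and the script only prints the document.

```python
import json

# Hypothetical bucket name; replace with your own resource ARN.
BUCKET = "example-bucket"

read_only_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllowReadOnlyObjectAccess",  # hypothetical statement ID
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
        }
    ],
}
print(json.dumps(read_only_policy, indent=2))
```

Granting only `s3:GetObject` on a single bucket, rather than `s3:*` on everything, is the kind of scoping that least privilege calls for.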

11. Explain CloudTrail.

Ans:

AWS CloudTrail is a service that records the API calls made in your AWS account. It offers insight into resource changes and user activity, which is useful for troubleshooting, security analysis, and compliance auditing.

12. Describe CloudWatch.

Ans:

Amazon CloudWatch is a monitoring service that offers insight into AWS applications and resources. It collects and tracks metrics, logs, and events, and it can trigger alarms based on predefined thresholds.
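The threshold idea behind an alarm can be sketched in a few lines of Python. This is a simplified model, not CloudWatch's implementation: it assumes the alarm fires only when every one of the most recent evaluation periods breaches the threshold, and the CPU samples are invented.

```python
def alarm_state(datapoints, threshold, evaluation_periods):
    """Return "ALARM" if the most recent `evaluation_periods` datapoints
    all exceed `threshold`, "OK" if any does not, and
    "INSUFFICIENT_DATA" if there are too few datapoints."""
    recent = datapoints[-evaluation_periods:]
    if len(recent) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    return "ALARM" if all(value > threshold for value in recent) else "OK"


# Hypothetical CPU utilization samples (percent), one per period.
cpu = [42.0, 55.0, 83.0, 91.0, 88.0]
print(alarm_state(cpu, threshold=80.0, evaluation_periods=3))  # ALARM
```

Requiring several consecutive breaches keeps a single short spike from triggering a notification.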

13. Describe Lambda.

Ans:

AWS Lambda is a serverless computing service that lets you run code without provisioning or managing servers. It can run backend processes, power serverless applications, and trigger other AWS services.

14. What’s Elastic Beanstalk on AWS?

Ans:

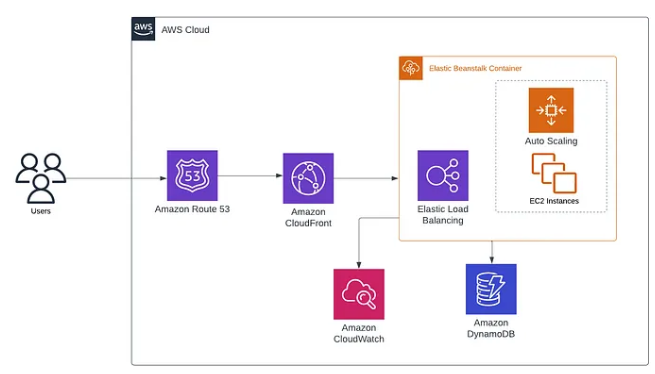

AWS Elastic Beanstalk is a service that simplifies the deployment and management of applications in the AWS cloud. It handles deployment, scaling, and monitoring of your application so you can concentrate on writing code.

15. What is Route 53?

Ans:

You can use Amazon Route 53, a highly available and scalable DNS solution, to direct traffic to external endpoints or AWS services. DNS record management and domain name registration are further uses for it.

16. Describe RDS.

Ans:

Amazon Relational Database Service (RDS) is a managed database service that makes it simple to set up, operate, and scale a relational database in the cloud. It supports popular database engines, including MySQL, PostgreSQL, and SQL Server.

17. Describe DynamoDB.

Ans:

Amazon DynamoDB is a fast, flexible NoSQL database service that offers consistent single-digit millisecond latency at any scale. You can store any volume of data and access it through APIs or SDKs.

18. What is Elastic Load Balancing?

Ans:

One of AWS’s services, Elastic Load Balancing (ELB), automatically splits incoming traffic among several targets, including containers or EC2 instances. It can dynamically scale in response to traffic and increase fault tolerance and availability.

19. Explain Auto Scaling.

Ans:

Auto Scaling automatically adjusts the capacity of your resources in response to demand. You can use it to ensure your applications handle unexpected spikes in traffic and remain available.
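One way to picture demand-based scaling is a proportional rule: grow or shrink the fleet by the ratio of the observed metric to its target, then clamp the result to the group's limits. The sketch below is a simplified illustration under that assumption, not the exact algorithm AWS uses, and the numbers are invented.

```python
import math


def desired_capacity(current, metric, target, min_size, max_size):
    """Scale capacity in proportion to metric/target, clamped to the
    group's minimum and maximum size."""
    proposed = math.ceil(current * metric / target)
    return max(min_size, min(max_size, proposed))


# Hypothetical group: 4 instances at 90% average CPU, targeting 60%.
print(desired_capacity(current=4, metric=90.0, target=60.0, min_size=2, max_size=10))  # 6
```

The clamp matters in both directions: the minimum preserves availability when load is low, and the maximum caps cost when load spikes.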

20. Describe CloudFront.

Ans:

Amazon CloudFront is a content delivery network (CDN) that delivers content such as images, videos, and static files to users. It caches content at edge locations worldwide for quicker delivery and reduced latency.

21. What is EBS?

Ans:

Persistent data can be stored with EC2 instances using Amazon Elastic Block Store (EBS), a block-level storage solution. It offers low-latency, high-performance storage volumes that can be connected to EC2 instances.

22. What is EFS?

Ans:

Amazon Elastic File System (EFS) is a fully managed, scalable file system that lets you store files and share them across several instances. It supports standard file system interfaces like NFS and is simple to use.

23. Describe SES.

Ans:

Emails, including transactional and marketing, can be sent using Amazon Simple Email Service (SES), a cloud-based email-sending solution. It offers an easy and affordable means of sending and receiving emails.

24. How does CloudFormation Stack work?

Ans:

A CloudFormation stack is a group of AWS resources that are created and managed as a single unit. It can be used to build sophisticated systems and offers a way to automate the creation and management of resources.

25. Describe ElastiCache.

Ans:

Amazon ElastiCache is a managed in-memory data store service that can improve the performance of web applications. It works with well-known caching engines like Redis and Memcached.

26. Describe CloudHSM.

Ans:

AWS CloudHSM is a hardware security module (HSM) service that offers cryptographic functions and secure key storage for your cloud-based applications. It can help you meet security and compliance requirements.

27. Describe AWS Glue.

Ans:

AWS Glue is a fully managed extract, transform, and load (ETL) service that facilitates data movement between data stores. It integrates with other AWS services and offers a serverless environment for ETL jobs.

28. Describe AWS Batch.

Ans:

Batch jobs at any size can be executed with AWS Batch, a fully managed batch processing solution. It offers a method for running batch operations on AWS quickly and effectively without requiring infrastructure management.

29. What is Snowball in AWS?

Ans:

AWS Snowball is a petabyte-scale data transfer service for moving massive volumes of data into or out of AWS. It offers a secure, tamper-resistant way to transport that data.

30. What is AWS CodeDeploy?

Ans:

Applications can be automatically deployed to serverless Lambda functions, on-premises instances, and Amazon EC2 instances with AWS CodeDeploy, a fully managed deployment solution. With little downtime, updates and rollbacks can be implemented using it.

31. How do you maintain and track your AWS infrastructure’s high availability and performance?

Ans:

Maintaining and monitoring AWS infrastructure for high availability and performance involves:

- Monitoring resource usage, system-wide performance metrics, transaction volumes, and latency with Amazon CloudWatch, and raising alarms based on predefined thresholds.

- Using AWS Auto Scaling to adjust capacity and preserve application availability.

- Using AWS Trusted Advisor to review your AWS infrastructure so you can optimize it, lower costs, improve performance, and strengthen security.

- Using AWS CloudTrail to enable governance, compliance, operational, and risk audits of your AWS account.

32. Describe AWS’s Shared Responsibility Model.

Ans:

- The AWS Shared Responsibility Model outlines which security aspects are handled by AWS (security of the cloud) and which are the customer’s responsibility (security in the cloud).

- Hardware, software, networking, and facilities comprise the infrastructure that powers AWS services, which is all under AWS’s protection.

- On the other hand, customer data, identity and access management, operating system, network and firewall setup, application security, and data encryption requirements are the customer’s responsibility.

33. How are data security and integrity in AWS S3 ensured?

Ans:

The following measures help ensure data security and integrity in Amazon S3:

- Keeping multiple versions of an object with S3 Versioning.

- Using SSL/TLS to encrypt data in transit to S3.

- Encrypting data at rest using S3 Server-Side Encryption (SSE).

- Using IAM policies and S3 bucket policies to control access to S3 buckets.

- Managing the encryption keys that SSE uses with AWS KMS.

- Enabling MFA Delete, which adds an extra layer of protection by requiring MFA to delete S3 objects.
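Encryption in transit is commonly enforced with a bucket policy that denies any request not made over TLS. The sketch below builds such a policy as a Python dictionary; the bucket name and statement ID are hypothetical, and the script only prints the document rather than applying it.

```python
import json

BUCKET = "example-bucket"  # hypothetical bucket name

deny_insecure_transport = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyInsecureTransport",  # hypothetical statement ID
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            # Cover both the bucket itself and every object in it.
            "Resource": [
                f"arn:aws:s3:::{BUCKET}",
                f"arn:aws:s3:::{BUCKET}/*",
            ],
            # aws:SecureTransport is "false" for plain-HTTP requests.
            "Condition": {"Bool": {"aws:SecureTransport": "false"}},
        }
    ],
}
print(json.dumps(deny_insecure_transport, indent=2))
```

Because an explicit deny overrides any allow, this statement blocks unencrypted requests regardless of what other policies grant.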

34. Why is an Amazon VPC applicable regarding security, and what is its purpose?

Ans:

Amazon Virtual Private Cloud (VPC) lets you provision an isolated section of the AWS Cloud and launch AWS resources in a virtual network that you define. It benefits security because it offers:

- Division of the network into public and private subnets.

- Control of inbound and outbound network traffic with security groups and network access control lists (ACLs).

- VPN connections to your corporate network for secure communication.

- Hardware-based VPN connections for added security.

- The ability to define and enforce policies for VPC-related resources using AWS IAM.

35. How do you manage backups and disaster recovery in AWS?

Ans:

In AWS, backups and disaster recovery can be managed by:

- Taking regular backups with Amazon RDS and Amazon EBS snapshots.

- Using AWS Backup to automate and centralize data protection across AWS services.

- Enabling versioning in Amazon S3 so that data deleted accidentally or on purpose can be restored.

- Using Amazon S3 Cross-Region Replication (CRR) to improve disaster recovery and provide lower-latency data access.

- Developing and testing a disaster recovery strategy with tools like CloudEndure Disaster Recovery, which offers continuous virtual machine replication.

36. How would you detect and mitigate a denial-of-service attack on your AWS infrastructure?

Ans:

In AWS, DDoS attacks can be detected and mitigated by:

- Using AWS Shield, especially Shield Advanced, for enhanced protection against DDoS attacks.

- Deploying AWS WAF to protect web applications from common web exploits.

- Using Amazon CloudFront and Route 53 to absorb and divert attack traffic.

- Using Amazon CloudWatch to monitor load balancer logs and network traffic.

- Restricting security groups and network access control lists (ACLs) to only the traffic your application needs.

37. How do you troubleshoot an instance that is failing a status check?

Ans:

If a status check fails, consider the following actions:

- Examine the System Log in the EC2 console for diagnostic data.

- If the System Status Check fails, try stopping and starting the instance (if it is EBS-backed), which typically moves it to a different host.

- If the Instance Status Check fails, verify that the instance boots properly and that its software is configured correctly.

- Use the Amazon EC2 API or the AWS CLI to inspect and interact with the instance.

- As a last resort, create an AMI of the instance so you can relaunch it on new hardware.

38. In an AWS environment, how are configuration changes managed?

Ans:

Configuration changes can be managed by:

- Monitoring and recording changes to AWS resource configurations with AWS Config.

- Using AWS CloudFormation for infrastructure as code to automate the provisioning and updating of AWS services.

- Using AWS OpsWorks to implement configuration management.

- Using AWS Systems Manager to organize resources into groups and apply settings, tasks, and compliance checks across them.

39. How can OS patching for EC2 instances be automated?

Ans:

To automate OS patching for EC2 instances, use the following methods:

- Use AWS Systems Manager Patch Manager to scan your EC2 instances and apply patches.

- Automate OS patching and other processes with recipes or manifests using AWS OpsWorks for Chef or Puppet.

- Build custom AMIs with patches pre-applied and deploy them for new instances or in an Auto Scaling environment.

40. How would you go about optimizing AWS infrastructure costs?

Ans:

To optimize AWS infrastructure costs, one can do the following:

- Implement Auto Scaling to match resource availability to demand.

- Use Savings Plans or Reserved Instances to cut costs in exchange for committing to consistent usage.

- Use Spot Instances to take advantage of reduced rates for workloads that are flexible and tolerant of interruptions.

- Apply cost-optimization recommendations from AWS Trusted Advisor.

- Regularly review and clean up unused resources, and right-size instances to fit workload requirements.

- Set data lifecycle policies in services like Amazon S3 to archive data or move it to less expensive storage classes.

- Use the AWS cost-management and billing tools to track, manage, and analyze service costs.
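As an example of a data lifecycle policy, the sketch below describes one S3 lifecycle rule in the JSON shape used for bucket lifecycle configurations. The rule ID, the `logs/` prefix, and the day counts are hypothetical choices for illustration; the script only prints the configuration.

```python
import json

lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-old-logs",  # hypothetical rule name
            "Filter": {"Prefix": "logs/"},  # hypothetical key prefix
            "Status": "Enabled",
            # Move objects to cheaper storage classes as they age.
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            # Delete objects once they are a year old.
            "Expiration": {"Days": 365},
        }
    ]
}
print(json.dumps(lifecycle_configuration, indent=2))
```

The right thresholds depend on how often the data is read; infrequently accessed classes cost less to store but more to retrieve.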

41. How do you keep an AWS environment compliant?

Ans:

To maintain compliance, one can do the following:

- Use AWS Config to assess and verify that AWS resource configurations adhere to compliance guidelines.

- Enable AWS CloudTrail to record, monitor, and log account activity.

- Use AWS Organizations to configure Service Control Policies (SCPs) for centralized management across multiple AWS accounts.

- Use AWS Artifact to retrieve AWS compliance reports whenever needed.

- Regularly review IAM roles and permissions to ensure that least privilege is followed.

42. What role does AWS Lambda play in operational task automation?

Ans:

AWS Lambda lets you run backend code in response to events (such as HTTP requests via Amazon API Gateway or table updates in DynamoDB) without provisioning or managing servers. This serverless computing is useful for:

- Automating data backups.

- Sending notifications when certain events occur.

- Responding to infrastructure changes with health checks and auto-remediation.

- Automating resource management with custom logic and no human intervention.
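A Python Lambda function is just a handler that receives an event and a context object. The sketch below is a minimal handler that counts the records in an event; the event shape and the response body are invented for illustration, and the final lines call the handler locally instead of deploying it.

```python
import json


def lambda_handler(event, context):
    """Summarize an incoming event; a real function would act on it."""
    records = event.get("Records", [])
    return {
        "statusCode": 200,
        "body": json.dumps({"records_received": len(records)}),
    }


# Local invocation with a hypothetical event; Lambda supplies the real
# event and context objects at runtime.
response = lambda_handler({"Records": [{"id": 1}, {"id": 2}]}, None)
print(response)
```

An operational task such as a backup or a notification would replace the counting logic with calls to the relevant service.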

43. Explain Amazon CloudFront’s function in AWS infrastructure administration.

Ans:

Amazon CloudFront is a content delivery network (CDN) that integrates with other AWS services to deliver content with low latency and high transfer speeds. Its functions include:

- Reducing latency by caching frequently accessed content at edge locations near end users.

- Integrating with AWS Shield to defend against DDoS attacks.

- Integrating with AWS WAF to improve application security.

- Acting as a distribution channel for both dynamic and static web content to provide the best possible user experience.

44. How is data security achieved in Amazon RDS?

Ans:

To secure data in Amazon RDS, one can do the following:

- Encrypt data at rest using AWS Key Management Service (KMS).

- Encrypt data in transit using SSL/TLS.

- Use database authentication and IAM policies to restrict access.

- Automate snapshots and back up data regularly.

- Use security groups to control which sources can reach the database.

45. How do you monitor how AWS resources and APIs are being used?

Ans:

To monitor usage in AWS, one can do the following:

- Use Amazon CloudWatch for metrics tracking, alarm setup, and log visualization.

- Record, track, and archive API calls with AWS CloudTrail.

- Trace user requests with AWS X-Ray to find bottlenecks and debug applications.

- Analyze VPC Flow Logs to monitor IP traffic within your VPC.

46. What role does the AWS Systems Manager play?

Ans:

AWS Systems Manager gives you visibility into and control over your AWS infrastructure. Its key capabilities are:

- A unified view of operational data across AWS services.

- Remote management of EC2 instances and on-premises nodes.

- Automation of routine maintenance procedures like patching.

- Secure storage and retrieval of secrets and parameters.

- Consistent application configuration across instances.

47. How would you improve data transfer rates to and from AWS?

Ans:

The following actions can increase data transfer rates:

- Establishing a dedicated network link from on-premises to AWS using AWS Direct Connect.

- Using AWS Snowball or Snowmobile to transfer massive amounts of data.

- Compressing and optimizing data before transfer.

- Caching content at edge locations with Amazon CloudFront.

- Using S3 Transfer Acceleration for quicker uploads to S3.

48. How can latency problems in AWS be resolved?

Ans:

To resolve latency problems, use the following methods:

- Examining Amazon CloudWatch metrics to understand performance.

- Tracing requests with AWS X-Ray to identify bottlenecks.

- Checking database activity in Amazon RDS Performance Insights.

- Reviewing network configurations and optimizing VPC peering connections.

- Reducing internal AWS latency by placing resources in the same Region and Availability Zone.

49. How would you approach AWS secret management?

Ans:

Secret management is central to security in AWS. It can be handled by:

- Using AWS Secrets Manager to store, rotate, and retrieve secrets.

- Storing sensitive values in AWS Systems Manager Parameter Store as encrypted parameters.

- Using IAM for access control and AWS Key Management Service (KMS) for encrypting secrets.

50. What plans would you implement to maintain operations in a regional AWS outage?

Ans:

The following are necessary to ensure continuity during regional outages:

- Designing multi-Region application architectures.

- Using Amazon Route 53 DNS failover to reroute traffic to healthy Regions.

- Using Amazon S3 Cross-Region Replication to back up data to a different Region.

- Testing failover mechanisms regularly to make sure they work as intended in an actual outage.
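The decision behind DNS failover can be sketched as "answer with the highest-priority endpoint that is passing its health check." The code below models only that decision in plain Python; it does not call Route 53, and the endpoint names and health results are hypothetical.

```python
def resolve(endpoints, health):
    """Return the first healthy endpoint in priority order, or None."""
    for endpoint in endpoints:
        if health.get(endpoint, False):
            return endpoint
    return None


# Hypothetical Regional endpoints, listed primary first.
endpoints = ["app.us-east-1.example.com", "app.eu-west-1.example.com"]
print(resolve(endpoints, {endpoints[0]: True, endpoints[1]: True}))
print(resolve(endpoints, {endpoints[0]: False, endpoints[1]: True}))
```

The first call returns the primary endpoint; the second returns the secondary because the primary is marked unhealthy. Regular failover tests confirm that the secondary can actually carry the load.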

51. How can high availability and scalability be guaranteed in an AWS environment?

Ans:

To guarantee high availability and scalability, you can use several AWS services, including Amazon Elastic Load Balancing (ELB), Amazon Auto Scaling, and Amazon CloudFront. These services assist in allocating traffic among several availability zones (AZs) and automatically adjust resource availability in response to demand.

Additionally, you can use Amazon Route 53 to build multi-Region architectures and route traffic across several Regions. This keeps your application accessible even if one or more Regions stop working.

You can also use Amazon DynamoDB global tables and Amazon RDS Multi-AZ for data replication. This ensures that your data remains accessible even if one or more Availability Zones fail.

52. Could you describe an Amazon Virtual Private Cloud (VPC) setup and configuration?

Ans:

Setting up and configuring an Amazon Virtual Private Cloud (VPC) takes a few steps:

- Create the VPC: Sign in to the AWS Management Console, go to the VPC dashboard, and choose “Create VPC.” Give the VPC a name and choose its IP address range as a CIDR block.

- Create subnets: Create subnets within your VPC in different Availability Zones. Distributing resources across several Availability Zones lets you achieve high availability.

- Set up IP addressing for the VPC: You can configure DHCP options for your VPC, including DNS servers, NTP servers, and domain names.

- Create a security group: Security groups control the inbound and outbound traffic allowed for your resources.
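Planning the subnet layout is mostly CIDR arithmetic, which Python's standard `ipaddress` module can do. The sketch below carves one /24 subnet per Availability Zone out of a /16 VPC range; the CIDR block and zone names are example values, and nothing is created in AWS.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")  # example VPC CIDR block
zones = ["us-east-1a", "us-east-1b", "us-east-1c"]  # example AZ names

# Take the first /24 blocks of the VPC range, one per Availability Zone.
subnets = dict(zip(zones, vpc.subnets(new_prefix=24)))
for zone, subnet in subnets.items():
    print(zone, subnet, f"({subnet.num_addresses} addresses)")
```

Each /24 block contains 256 addresses, though AWS reserves five addresses in every subnet, so slightly fewer are usable.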

53. How is Amazon CloudWatch used for resource management and monitoring on AWS?

Ans:

Amazon CloudWatch monitors your AWS resources and the applications you run on AWS. You can use CloudWatch to manage and monitor AWS resources as follows:

- Collect and track metrics: Use CloudWatch to gather and monitor metrics from different AWS resources.

- Create CloudWatch alarms: Set up alarms that watch metrics and send notifications or automatically change the monitored resources when a threshold is crossed.

- Monitor and analyze logs: Use CloudWatch Logs to monitor, store, and access your logs.

- Build dashboards: Build CloudWatch dashboards to display multiple metrics and alarms in one place.

- Install the CloudWatch agent: Installing the agent on your instances lets you collect more system-level data, including RAM utilization, disk usage, and network traffic.

54. Could you describe the setup and configuration procedures for Amazon EFS and Amazon EBS?

Ans:

Amazon Elastic Block Store (EBS) provides block storage for Amazon Elastic Compute Cloud (EC2) instances. With EBS you can create storage volumes and attach them to EC2 instances, and you can create new volumes from snapshots of your existing volumes. Setting up EBS involves these steps:

- Create a volume: Use the AWS Management Console, the AWS Command Line Interface (CLI), or an SDK to create an EBS volume.

- Attach the volume to an instance: Use the AWS Management Console, the AWS CLI, or an SDK to attach the volume to an EC2 instance.

- Format and mount the volume: Format the volume, then mount it on the instance.

- Configure the volume: Perform any further configuration the volume needs, such as setting up a file system or RAID.

- Take a snapshot: Take a snapshot of the volume to use as a backup or to create a new volume.

Amazon Elastic File System (EFS) is a fully managed service that makes file storage in the AWS Cloud simple to set up, scale, and use. Setting up EFS involves these steps:

- Create a file system: Use the AWS Management Console, the AWS CLI, or an SDK to create a file system.

- Mount the file system: Use the AWS Management Console, the AWS CLI, or an SDK to mount the file system on one or more Amazon EC2 instances.

- Configure the file system: Adjust the file system’s settings as necessary, including performance and access control.

- Access the file system: Use standard file system interfaces and protocols to access the file system.

- Scale the file system: Adjust the file system’s performance and storage capacity as necessary.

- Monitor the file system: Use CloudWatch to monitor the file system.

55. How do you manage and troubleshoot AWS Elastic Beanstalk applications?

Ans:

The following actions help manage and troubleshoot AWS Elastic Beanstalk applications:

- Use the Elastic Beanstalk command line interface (CLI), the AWS Management Console, or the Elastic Beanstalk console to monitor the health of your Elastic Beanstalk environment.

- Use CloudWatch to monitor your environment’s performance and set up alarms that notify you of possible problems.

- View environment events, like deployments and failures, in real time with Elastic Beanstalk’s event stream.

- Use Elastic Beanstalk’s log streaming capability to view application logs in real time and troubleshoot.

56. How do you manage and troubleshoot AWS Auto Scaling?

Ans:

Several actions help manage and troubleshoot AWS Auto Scaling:

- Monitor the performance of the Auto Scaling group’s instances with CloudWatch metrics. This helps you find problems affecting the instances, such as high CPU, memory, or network use.

- Review the Auto Scaling group’s scaling policies. Verify that the policies are in place, that they trigger under the right conditions, and that the desired, minimum, and maximum capacity are configured appropriately.

- Review the Auto Scaling group’s activity history to determine whether past events are affecting the group’s performance. For instance, problems may arise if instances are launched frequently.

- If a particular instance is faulty, terminate it and let a fresh one launch to see whether that fixes the problem.

- Look through the CloudTrail logs for errors or problems with the Auto Scaling group.

57. Describe the setup and configuration procedures for Amazon SNS and Amazon SQS.

Ans:

- Open the AWS Management Console, go to SQS, click “Create new queue,” provide name/settings.

- Use IAM to set queue permissions, allowing only authorized users/applications.

- In SNS, click “Create topic,” provide name/settings.

- Use IAM to set topic permissions, ensuring only authorized users/apps can access.

- Subscribe recipients to the topic by setting the endpoint to an email address, SQS queue, HTTPS endpoint, or Lambda function.
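When a topic has several queue subscribers, each message published to the topic is delivered to every queue. The sketch below models that fan-out pattern with in-memory Python objects; the `Topic` class and the queue names are invented stand-ins, not the SNS or SQS APIs.

```python
from collections import deque


class Topic:
    """In-memory stand-in for a pub/sub topic with queue subscribers."""

    def __init__(self):
        self.subscribers = []

    def subscribe(self, queue):
        self.subscribers.append(queue)

    def publish(self, message):
        # Each subscribed queue receives its own copy of the message.
        for queue in self.subscribers:
            queue.append(message)


orders_queue, audit_queue = deque(), deque()
topic = Topic()
topic.subscribe(orders_queue)
topic.subscribe(audit_queue)
topic.publish("order-created")
print(list(orders_queue), list(audit_queue))
```

Fan-out lets independent consumers process the same event at their own pace without knowing about each other.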

58. What is the process for handling and debugging Amazon Elastic Load Balancing?

Ans:

- Monitor load balancer performance with CloudWatch metrics (request/response counts, healthy/unhealthy host counts).

- Set CloudWatch Alarms with thresholds for key indicators to receive alerts.

- Utilize Amazon ELB Access Logs to track request/response details.

- Enable ELB Health Checks for automatic routing to healthy instances.

- Add/remove instances by updating load balancer settings via AWS Management Console, CLI, or SDKs.

- Follow Elastic Load Balancer best practices (e.g., two availability zones) using AWS Trusted Advisor.

- Troubleshoot load balancer issues with the AWS Elastic Load Balancer troubleshooting guide.

- For additional help, use the AWS Support Center to submit a case with AWS.

59. What is the meaning of cloud computing?

Ans:

Cloud computing is the on-demand, pay-as-you-go delivery of IT services over the Internet. Rather than keeping data on your computer’s hard drive, you store it on remote servers and access it over the Internet.

60. What functions does a SysOps Administrator Associate perform?

Ans:

The primary responsibility of an AWS administrator is to set up cloud management services for the company on AWS. An AWS Certified SysOps Administrator Associate also carries out these important duties:

- Overseeing the entire AWS life cycle, including provisioning, automation, and security.

- Setting up the architecture and managing multi-tier systems.

- Providing services such as kernel patching, errata patching, and software updates.

- Monitoring availability and performance levels.

- Establishing and overseeing disaster recovery.

61. What is the pillar of operational excellence?

Ans:

The operational excellence pillar focuses on running and monitoring systems to deliver business value and on continuously improving processes and procedures. Key topics include automating changes, responding to events, and defining standards to manage daily operations.

62. What does the term “security pillar” mean to you?

Ans:

Data and system protection is a top focus for the security pillar. Important topics include data confidentiality and integrity, system security, privilege management (controlling who can do what), and putting policies in place to identify security events.

63. What is the Reliability Pillar?

Ans:

The reliability pillar ensures that a workload performs its intended function correctly and consistently when it is expected to. A resilient workload recovers quickly from failures to meet business and customer demand. Key topics include distributed system design, recovery planning, and change management.

64. What is meant by the Performance Efficiency Pillar, in your opinion?

Ans:

The performance efficiency pillar focuses on using IT and computing resources efficiently. Key topics include monitoring performance, choosing the right resource types and sizes based on workload demands, and making informed decisions to maintain efficiency as business needs evolve.

65. What is the cost optimization pillar?

Ans:

The cost optimization pillar focuses on avoiding unnecessary costs. Key topics include understanding and controlling where money is spent, selecting the most appropriate type and number of resources, analyzing spending over time, and scaling to meet needs without overspending.

66. What are Amazon CloudWatch Logs?

Ans:

- Amazon CloudWatch Logs is a service that lets you monitor, store, and access log files from many sources, including Amazon Elastic Compute Cloud (Amazon EC2) instances, AWS CloudTrail, and Route 53.

- It also lets you centralize logs from all of your applications, systems, and AWS services in a single, highly scalable service.

- With CloudWatch Logs, you can view all of your logs, regardless of source, as a single, consistent flow of events ordered by time.

- You can also query and sort logs by other dimensions, group them by specific fields, build custom computations with a powerful query language, and visualize log data in dashboards.

67. What does the term “auto-scaling” mean?

Ans:

- AWS Auto Scaling monitors your applications and automatically adjusts capacity to provide steady, predictable performance at the lowest possible cost. It also makes it possible to set up scaling for applications across multiple resources and services in a matter of minutes.

- Through recommendations that help you optimize performance, costs, or a balance of the two, AWS Auto Scaling makes scaling easier.

- AWS Auto Scaling ensures that your applications always have the right resources at the right time.

68. What advantages does auto-scaling offer?

Ans:

- Set up scaling quickly: AWS Auto Scaling lets you set target utilization levels for multiple resources in a single, intuitive interface. You can see the average utilization of all your scalable resources without switching between consoles.

- Make smart scaling decisions: With AWS Auto Scaling, you can build scaling plans that automate how groups of different resources respond to changes in demand. You can optimize for availability, for cost, or for a balance of the two.

- Maintain performance automatically: With scaling in place, you can keep application availability and performance at optimal levels even when workloads are periodic, unpredictable, or continuously changing. AWS Auto Scaling keeps monitoring your applications to make sure they operate at the levels you have set.
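
To make the idea of a target utilization level concrete, here is a minimal sketch of a proportional scaling rule. It is a simplification for illustration, not the algorithm AWS Auto Scaling actually runs; the function name `desired_capacity` and all of its parameters are assumptions.

```python
import math


def desired_capacity(current, metric, target, min_size, max_size):
    """Proportional rule: size the fleet so the average metric lands
    near the target, then clamp to the configured bounds."""
    if target <= 0:
        raise ValueError("target must be positive")
    wanted = math.ceil(current * metric / target)
    return max(min_size, min(max_size, wanted))


# 4 instances at 90% average CPU with a 60% target: scale out to 6.
print(desired_capacity(current=4, metric=90, target=60, min_size=2, max_size=10))
# 4 instances at 15% average CPU: scale in, but never below the minimum of 2.
print(desired_capacity(current=4, metric=15, target=60, min_size=2, max_size=10))
```

The clamp to `min_size` and `max_size` is what keeps a scaling policy from removing all capacity during quiet periods or growing without limit during a spike.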

69. Differentiate between scaling vertically and horizontally.

Ans:

Horizontal scaling is the process of adding more nodes to a computing system without changing the size of the existing nodes. Vertical scaling, in contrast, is the technique of increasing the size and computing power of a single instance or node without increasing the number of nodes or instances.
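
The difference can be shown with a small capacity calculation. This is a sketch under assumed numbers: the `Fleet` type and both helper functions are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Fleet:
    nodes: int
    vcpus_per_node: int

    @property
    def total_vcpus(self):
        return self.nodes * self.vcpus_per_node


def scale_horizontally(fleet, extra_nodes):
    """Add nodes; each node keeps its size."""
    return replace(fleet, nodes=fleet.nodes + extra_nodes)


def scale_vertically(fleet, new_vcpus_per_node):
    """Grow each node; the node count stays the same."""
    return replace(fleet, vcpus_per_node=new_vcpus_per_node)


base = Fleet(nodes=2, vcpus_per_node=4)
wider = scale_horizontally(base, 2)
taller = scale_vertically(base, 8)
print(wider, wider.total_vcpus)
print(taller, taller.total_vcpus)
```

Both results reach the same total of 16 vCPUs, but the horizontally scaled fleet spreads it over four nodes while the vertically scaled fleet concentrates it in two.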

70. Define “instance”?

Ans:

In computing, an instance is a single physical or virtual server. The terms “node” and “instance” are used interchangeably in most systems, although in some systems a single instance can carry out the work of several nodes.

71. What does Amazon EC2 offer?

Ans:

Amazon Elastic Compute Cloud (Amazon EC2) is a cloud computing service that provides secure, resizable compute capacity. It is designed to make web-scale cloud computing easier for developers. Its simple web service interface lets you obtain and configure capacity with minimal friction. You have complete control of your computing resources and run on Amazon’s proven computing environment.

72. What features does Amazon EC2 offer?

Ans:

Amazon EC2 provides several useful and powerful features for building enterprise-class applications that are scalable and resilient to failure:

- Bare Metal instances

- Amazon EC2 Fleet to optimize compute performance and cost

- GPU Compute instances

- GPU Graphics instances

- High I/O instances

- Optimized CPU configurations

- Flexible storage options

73. What is Amazon EFS?

Ans:

Amazon Elastic File System (Amazon EFS) is a serverless, set-and-forget elastic file system. To use it, you create a file system, mount it on an Amazon EC2 instance, and then read and write data to and from the file system.

74. What is provided by Amazon RDS Multi-AZ Deployments?

Ans:

Amazon RDS Multi-AZ deployments provide enhanced availability and durability for RDS database (DB) instances, which makes them a natural fit for production database workloads. When you provision a Multi-AZ DB instance, Amazon RDS automatically creates a primary DB instance and synchronously replicates the data to a standby instance in a different Availability Zone (AZ).
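
Synchronous replication to a second copy in another AZ can be pictured with a toy in-memory model. This sketch only illustrates the concept: the `MultiAZDatabase` class, its methods, and the AZ names are assumptions, and real RDS failover is managed by the service rather than by application code.

```python
class MultiAZDatabase:
    """Toy model: a write is acknowledged only after both copies have it."""

    def __init__(self, primary_az, standby_az):
        self.primary_az = primary_az
        self.standby_az = standby_az
        self._primary = {}
        self._standby = {}

    def write(self, key, value):
        # Synchronous replication: update both copies, then acknowledge.
        self._primary[key] = value
        self._standby[key] = value
        return "ack"

    def read(self, key):
        return self._primary[key]

    def failover(self):
        # Promote the standby; the old primary becomes the new standby.
        self.primary_az, self.standby_az = self.standby_az, self.primary_az
        self._primary, self._standby = self._standby, self._primary


db = MultiAZDatabase("us-east-1a", "us-east-1b")
db.write("order-42", "paid")
db.failover()
print(db.primary_az, db.read("order-42"))
```

Because the write reached both copies before it was acknowledged, the record is still readable after the failover.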

75. What connection exists between AMI and instance?

Ans:

- Amazon Web Services gives customers several ways to access Amazon EC2, including the AWS command line interface (CLI) and the AWS Tools for Windows PowerShell.

- You have to sign up for an Amazon Web Services account before you can use these tools.

- An AMI is the template from which instances are launched, and a single AMI can be used to launch several instances.

- An instance type essentially defines the hardware of the host computer used for the instance.

- Each instance type offers different compute and memory capabilities.

76. Briefly describe Amazon S3 replication.

Ans:

Amazon Simple Storage Service (S3) Replication is an elastic, fully managed, low-cost feature that copies objects between buckets. S3 Replication offers a high degree of flexibility and functionality, giving you the controls you need to meet data sovereignty requirements and other business needs.

77. What does the Recovery Time Objective (RTO) mean?

Ans:

RTO stands for Recovery Time Objective. It is the maximum acceptable delay between a service interruption and the restoration of service, and it defines the acceptable window of time during which the service is unavailable.

78. How can I use the EC2 Image Builder?

Ans:

Specify pipeline details: Enter information about your pipeline, such as a name, description, tags, and a schedule for automated builds. You can choose manual builds if you prefer.

Choose a recipe: Choose between building an AMI or a container image. For both types of output image, you select a source image, give your recipe a name and version, and choose the components to add for building and testing.

79. How do you define the distribution settings in EC2 Image Builder?

Ans:

Define distribution settings: Choose the AWS Regions to distribute your image to once the build is finished and all tests have passed. The pipeline automatically distributes your image to the Region where it runs the build, and you can add image distribution for other Regions.

80. How does CodeDeploy’s Blue/Green deployment work?

Ans:

The blue/green deployment type uses the blue/green deployment model managed by CodeDeploy. With this deployment type, you can verify a new deployment of a service before sending production traffic to it.

81. How might traffic change in three different ways during a blue/green deployment?

Ans:

Canary: Traffic is shifted in two increments. Predefined canary options let you choose the percentage of traffic that is moved to your updated task set in the first increment and the interval, in minutes, before the remaining traffic is shifted in the second increment.

Linear: Traffic is shifted in equal increments, with an equal number of minutes between each increment. Predefined linear options specify the percentage of traffic shifted in each increment and the number of minutes between increments.

All-at-once: All traffic is shifted from the original task set to the updated task set at once.
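
The canary and linear patterns can be sketched as simple schedules of (minute, cumulative percent of traffic shifted). This is an illustrative model only; the function names and parameters are assumptions and are not part of the CodeDeploy API.

```python
def canary_schedule(first_percent, wait_minutes):
    """Two increments: a small first slice, then the rest after a wait."""
    if not 0 < first_percent < 100:
        raise ValueError("first_percent must be between 0 and 100")
    return [(0, first_percent), (wait_minutes, 100)]


def linear_schedule(step_percent, interval_minutes):
    """Equal increments spaced by equal intervals until 100% is shifted."""
    if step_percent <= 0:
        raise ValueError("step_percent must be positive")
    schedule = []
    shifted, minute = 0, 0
    while shifted < 100:
        shifted = min(100, shifted + step_percent)
        schedule.append((minute, shifted))
        minute += interval_minutes
    return schedule


print(canary_schedule(10, 5))    # [(0, 10), (5, 100)]
print(linear_schedule(25, 10))   # [(0, 25), (10, 50), (20, 75), (30, 100)]
```

A canary limits the blast radius of a bad release to the first slice of traffic, while a linear shift spreads the risk evenly over a longer rollout.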

82. What is a process’s cycle time?

Ans:

Cycle time is the length of time required for a single cycle in a process. This covers the time needed to finish the task as well as the time needed for the system to reset in order to start the subsequent cycle.

83. What do you know about AWS Config?

Ans:

AWS Config is a service that lets you assess, audit, and evaluate the configurations of your AWS resources. Config continuously monitors and records your AWS resource configurations and lets you automate the evaluation of recorded configurations against desired configurations. With Config, you can review changes in the configurations of AWS resources and in the relationships between them. This facilitates compliance auditing, security analysis, change management, and operational troubleshooting.

84. What are the benefits of using AWS Control Tower for users?

Ans:

For customers with many AWS accounts and teams, cloud setup and governance can be complicated and time-consuming, which slows down their processes. AWS Control Tower is the easiest way to set up and govern a secure, multi-account AWS environment, known as a landing zone. AWS customers can also use AWS Control Tower to extend governance to new or existing accounts and quickly evaluate their compliance status.

85. What advantages does utilizing AWS Control Tower offer?

Ans:

- Quickly set up and configure a new AWS environment.

- Automate ongoing policy management.

- View policy-level summaries of your AWS environment.

86. What is AWS Certificate Manager?

Ans:

AWS Certificate Manager (ACM) creates, stores, and renews the public and private certificates and keys that protect your AWS websites and applications. ACM certificates can secure single domain names, multiple specific domain names, wildcard domains, or combinations of these. An ACM wildcard certificate can protect an unlimited number of subdomains.
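
How a wildcard name covers subdomains can be sketched with a small matcher. The function `wildcard_covers` is a hypothetical helper written for this example, not an ACM API; it assumes the usual rule that the asterisk sits in the leftmost position and stands for exactly one DNS label, so the bare domain and deeper subdomain levels are not covered.

```python
def wildcard_covers(cert_name, hostname):
    """Check whether a certificate name covers a hostname.

    A leading "*" stands for exactly one DNS label, so "*.example.com"
    covers "login.example.com" but not "example.com" or
    "test.login.example.com".
    """
    cert_labels = cert_name.lower().split(".")
    host_labels = hostname.lower().split(".")
    if len(cert_labels) != len(host_labels):
        return False
    if cert_labels[0] == "*":
        # The wildcard label must match a non-empty label; the rest must be equal.
        return bool(host_labels[0]) and cert_labels[1:] == host_labels[1:]
    return cert_labels == host_labels


for host in ["login.example.com", "example.com", "test.login.example.com"]:
    print(host, wildcard_covers("*.example.com", host))
```

This is why a certificate often lists both `example.com` and `*.example.com`: the wildcard alone leaves the apex domain unprotected.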

87. What does Amazon DynamoDB mean to you?

Ans:

Amazon DynamoDB is a key-value and document database that delivers single-digit millisecond performance at any scale. It is a fully managed, multi-region, multi-active, durable database for internet-scale applications, with built-in security, backup and restore, and in-memory caching. DynamoDB can handle more than 10 trillion requests per day and can support peaks of more than 20 million requests per second.

88. What is Amazon CloudFront?

Ans:

- Amazon CloudFront is a web service that speeds up the delivery of static and dynamic web content, such as .html, .css, .js, and image files, to users.

- CloudFront delivers your content through a worldwide network of data centers called edge locations.

- When a user requests content that is served by CloudFront, the request is routed to the edge location with the lowest latency, so that the content is delivered as quickly as possible.
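
The routing decision described above amounts to choosing the minimum over measured latencies. The sketch below is purely illustrative: the edge location names, the latency figures, and the `pick_edge_location` helper are made up, and CloudFront performs this routing itself.

```python
def pick_edge_location(latency_ms):
    """Return the edge location with the lowest measured latency."""
    if not latency_ms:
        raise ValueError("no edge locations to choose from")
    return min(latency_ms, key=latency_ms.get)


# Hypothetical latencies, in milliseconds, from one user to three locations.
measured = {"edge-frankfurt": 18.0, "edge-london": 11.5, "edge-virginia": 92.3}
print(pick_edge_location(measured))   # edge-london
```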

89. Which AWS tools are available for cost optimization and reporting?

Ans:

AWS offers a number of tools for cost optimization and reporting:

- AWS Cost Explorer

- AWS Trusted Advisor

- Amazon CloudWatch

- AWS Budgets

- AWS CloudTrail

- Amazon S3 Analytics

90. What is the purpose of the AWS Well-Architected Framework?

Ans:

AWS Well-Architected helps cloud architects build secure, high-performing, resilient, and efficient infrastructure for their applications and workloads. It is built on pillars that include operational excellence, security, reliability, performance efficiency, and cost optimization. AWS Well-Architected gives customers and partners a consistent approach to evaluating architectures and implementing designs that can scale over time.

91. Define RPO?

Ans:

The Recovery Point Objective (RPO) is the maximum acceptable amount of time since the last data recovery point. It determines how much data loss is acceptable between the last recovery point and the service interruption.
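
RPO and RTO (see question 77) can be checked against a single incident with simple time arithmetic. This is a minimal sketch with made-up timestamps; the `recovery_report` function and its parameter names are assumptions introduced for illustration.

```python
from datetime import datetime, timedelta


def recovery_report(last_recovery_point, outage_start, service_restored, rpo, rto):
    """Compare one incident against its RPO and RTO targets."""
    data_loss_window = outage_start - last_recovery_point
    downtime = service_restored - outage_start
    return {
        "data_loss_window": data_loss_window,
        "downtime": downtime,
        "meets_rpo": data_loss_window <= rpo,
        "meets_rto": downtime <= rto,
    }


report = recovery_report(
    last_recovery_point=datetime(2024, 1, 1, 8, 0),
    outage_start=datetime(2024, 1, 1, 10, 30),
    service_restored=datetime(2024, 1, 1, 11, 0),
    rpo=timedelta(hours=4),
    rto=timedelta(minutes=15),
)
print(report["meets_rpo"], report["meets_rto"])   # True False
```

In this example the 2.5 hours of writes since the last recovery point fit within the 4-hour RPO, but the 30 minutes of downtime exceed the 15-minute RTO.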

92. What are the types of Auto Scaling?

Ans:

- Auto Scaling policy configuration

- Auto Scaling group

- Auto Scaling tag configuration

- Auto Scaling launch configuration

93. What is the purpose of DynamoDB?

Ans:

Amazon DynamoDB is a serverless, fully managed NoSQL database service that provides single-digit millisecond response times at any scale, so you can build and run modern applications while paying only for what you use. The figure illustrates Amazon DynamoDB’s primary functions as well as its integrations with other AWS services.

94. What role does Aurora play in AWS?

Ans:

Aurora helps you encrypt your databases using keys that you create and control through AWS Key Management Service (KMS). On a database instance that runs with Aurora encryption, data stored at rest in the underlying storage is encrypted, as are the automated backups, snapshots, and replicas in the same cluster.

95. What role does Redshift play in AWS?

Ans:

Amazon Redshift uses SQL to analyze structured and semi-structured data across data warehouses, operational databases, and data lakes. It relies on AWS-designed hardware and machine learning to deliver the best price performance at any scale.

96. What is the Snowflake Data Cloud?

Ans:

Snowflake’s Data Cloud is a worldwide network on which hundreds of businesses mobilize data with near-unlimited scale, concurrency, and performance. Inside the Data Cloud, organizations can unify their siloed data, quickly discover and securely share governed data, and run a variety of analytic workloads.

97. Which are the main elements that make up Amazon Glacier?

Ans:

Vaults and archives are the core concepts of the Amazon S3 Glacier data model. S3 Glacier is a REST-based web service, and in REST terms, vaults and archives are its resources. The S3 Glacier data model also includes job and notification-configuration resources.

98. What applications does Amazon Glacier have?

Ans:

The Amazon S3 Glacier storage classes are purpose-built for data archiving and are used for long-term archive storage in the cloud, offering high performance, retrieval flexibility, and low cost. You can choose from three archive storage classes, each optimized for a different access pattern and storage duration.

99. What drawbacks does Amazon Glacier have?

Ans:

The two most common drawbacks of Glacier are slow retrieval times and high retrieval costs. Retrievals are also the operations that most users have the least experience with.

100. Which storage model does Amazon S3 use?

Ans:

Amazon S3 stores data as objects, an approach that makes cloud storage highly scalable. Objects can be placed on different physical disk drives distributed across the data center. To achieve true elastic scalability, Amazon data centers use specialized hardware, software, and distributed file systems.