Amazon Web Services (AWS) is a comprehensive cloud computing platform provided by Amazon, offering a wide range of services such as computing power, storage, and databases. It enables businesses to scale and grow by providing on-demand resources and flexible pricing models. AWS supports various applications, from simple website hosting to complex machine learning and data analytics solutions. Its global infrastructure and robust security features make it a popular choice for enterprises and developers alike.

1. What is AWS, and why is it so popular?

Ans:

AWS is a comprehensive cloud computing platform that provides most of the services required to build, deploy, and manage business applications. It offers scalability, because resources can be scaled up or down quickly based on individual needs. One reason for its worldwide popularity is its support for many different programming languages and frameworks.

2. What are AWS Availability Zones and Regions?

Ans:

Regions and Availability Zones are the core of AWS infrastructure and the basis of its high availability. A Region is a separate geographic area that contains multiple isolated Availability Zones, and each Availability Zone consists of one or more discrete data centres. Each Availability Zone is engineered to be independent of the others, with its own power and networking, providing redundancy and fault tolerance. Deploying applications across multiple Availability Zones keeps them available even if one zone encounters issues.

3. What is the difference between a public cloud and a private cloud?

Ans:

| Aspect | Public Cloud | Private Cloud |
|---|---|---|
| Definition | Services are offered over the internet to multiple customers. | Dedicated cloud infrastructure for a single organization. |
| Ownership | Owned and managed by a third-party service provider. | Owned and operated by the organization itself or by a third party on its behalf. |
| Scalability | Highly scalable; resources can be provisioned on demand. | More limited; scaling requires adding hardware or capacity. |
| Cost | Generally lower, because resources are shared and billed pay-as-you-go. | Higher, because of dedicated infrastructure and maintenance. |
| Security | Less direct control; infrastructure is shared with other customers, although tenants are isolated from one another. | Greater control over security and compliance, because resources are dedicated. |
| Use Cases | Well suited to variable workloads and general-purpose applications. | Suitable for highly sensitive data and strict regulatory requirements. |

4. What is Elastic Load Balancing (ELB), and how does it work?

Ans:

Elastic Load Balancing (ELB) is a managed AWS service that automatically distributes incoming application traffic across multiple targets, such as EC2 instances. This distribution ensures that no instance becomes overwhelmed, improving application availability and reliability. ELB continuously monitors the health of registered targets and routes traffic only to healthy ones. It also scales automatically as traffic volume changes.
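To make the routing behaviour concrete, here is a minimal Python sketch of a round-robin balancer that skips unhealthy targets. It is a conceptual model only, assuming a simple healthy/unhealthy flag per target; real ELB load balancers use configurable health-check thresholds and, depending on type, other algorithms (for example, least outstanding requests on Application Load Balancers). The class and target names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import cycle


@dataclass
class Target:
    name: str
    healthy: bool = True  # updated by (simulated) health checks


class RoundRobinBalancer:
    """Toy model: rotate through targets, routing only to healthy ones."""

    def __init__(self, targets: list[Target]) -> None:
        self.targets = targets
        self._ring = cycle(targets)

    def mark_health(self, name: str, healthy: bool) -> None:
        # Stand-in for the result of a periodic health check.
        for target in self.targets:
            if target.name == name:
                target.healthy = healthy

    def route(self) -> str:
        # Look at each target at most once per request, skipping unhealthy ones.
        for _ in range(len(self.targets)):
            target = next(self._ring)
            if target.healthy:
                return target.name
        raise RuntimeError("no healthy targets available")


lb = RoundRobinBalancer([Target("i-a"), Target("i-b"), Target("i-c")])
lb.mark_health("i-b", False)
print([lb.route() for _ in range(4)])  # ['i-a', 'i-c', 'i-a', 'i-c']
```

Once the health check marks `i-b` healthy again, it rejoins the rotation without any change to clients.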

5. What is the AWS Shared Responsibility Model?

Ans:

- The AWS Shared Responsibility Model defines which security responsibilities belong to AWS and which belong to its customers.

- AWS is responsible for security "of" the cloud: the hardware, software, networking, and facilities that run AWS services.

- Customers are responsible for security "in" the cloud: their data, applications, identity and access configuration, and, for services such as EC2, the guest operating system.

- The model helps organizations understand their responsibilities so that security is managed well in the cloud.

6. What are the advantages of AWS Elastic Beanstalk?

Ans:

- AWS Elastic Beanstalk is a Platform as a Service (PaaS) offering that simplifies deploying and managing applications.

- It automatically handles backend tasks such as resource provisioning, load balancing, and scaling, allowing developers to focus on writing code.

- A big benefit of this approach is that users can deploy applications written in common languages and frameworks without deep infrastructure knowledge.

- It also provides built-in health monitoring, which makes tracking an application’s performance easy.

7. What is AWS serverless architecture?

Ans:

- AWS serverless architecture allows developers to build and run applications without managing the infrastructure of servers.

- Developers can spend their time writing code and defining functions instead of provisioning and maintaining servers.

- AWS automatically scales resources up and down with traffic, and users pay only for what they use, which can be very cost-effective.

- Because operational overhead is minimal, applications can be developed and deployed quickly.

- Consequently, serverless architecture encourages innovation and agility in developing applications.

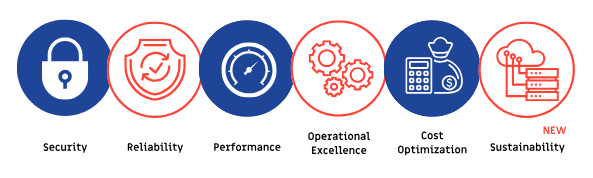

8. What is the AWS Well-Architected Framework, and why is it important?

Ans:

The AWS Well-Architected Framework helps architects follow best practices for designing secure, high-performing, resilient, and efficient cloud infrastructures. It is organized around six pillars: operational excellence, security, reliability, performance efficiency, cost optimization, and sustainability (the sustainability pillar was added in 2021, so older material lists five). These guidelines help cloud architects review their workloads and identify areas that need improvement.

9. Explain how data is protected both at rest and in motion in AWS.

Ans:

AWS provides several methods to secure data at rest and in transit. Data at rest is commonly protected with encryption such as AES-256, for example through AWS Key Management Service (KMS) keys or S3 server-side encryption. Data in transit is protected with TLS as it moves across networks. Access control and identity management add another layer by ensuring that only authorized users can access sensitive information. Together, these measures protect the confidentiality and integrity of data in AWS services.
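On the client side, enforcing encryption in transit mostly comes down to refusing unverified or outdated TLS. A minimal sketch with Python's standard `ssl` module, assuming TLS 1.2 as the minimum acceptable version; AWS SDKs already use verified TLS when calling AWS endpoints, so this matters mainly for your own services. The helper name is hypothetical.

```python
import ssl


def make_strict_tls_context(
    min_version: ssl.TLSVersion = ssl.TLSVersion.TLSv1_2,
) -> ssl.SSLContext:
    """Client-side TLS context that verifies certificates and hostnames."""
    ctx = ssl.create_default_context()  # loads system CA certs, enables verification
    ctx.minimum_version = min_version   # refuse handshakes below this TLS version
    return ctx


ctx = make_strict_tls_context()
# Typical use: ctx.wrap_socket(sock, server_hostname="example.com")
```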

10. What are the core components of the AWS Global Infrastructure?

Ans:

The AWS Global Infrastructure is designed to offer customers high-performance, scalable, and reliable cloud computing. Its key components are Regions, which are separate geographic areas that each contain multiple Availability Zones. Each Availability Zone consists of one or more discrete data centres with redundant power and networking, which increases fault tolerance. Edge Locations are also an integral part of the infrastructure, used primarily for content delivery via Amazon CloudFront.

11. What is Amazon EC2, and how does it work?

Ans:

- Amazon EC2 (Elastic Compute Cloud) is a web service from Amazon that provides scalable compute capacity in the cloud.

- Customers launch virtual servers, called instances, to run applications and workloads, and can choose configurations with different operating systems, CPU, memory, and storage.

- Because EC2 uses a pay-as-you-go pricing model, users pay only for the compute capacity they actually use.

- Such flexibility makes it very suitable for nearly every type of application, from simple websites to the most complex enterprise systems.

12. What are EC2 instance types and how does one choose the right one?

Ans:

EC2 instance types are grouped into families based on use case, including General Purpose, Compute Optimized, Memory Optimized, Storage Optimized, and Accelerated Computing (GPU) instances. Each offers a different combination of CPU, memory, storage, and networking capacity. To choose, match the workload’s dominant requirement (for example, memory for in-memory caches or CPU for batch processing) to a family, then pick a size. AWS Compute Optimizer can recommend instance types based on observed utilization, and users can also benchmark several instance types to find the best fit for their workloads.

13. What are EC2 Reserved Instances, and when should I use them?

Ans:

EC2 Reserved Instances are a pricing option in which users commit to a specific instance configuration for a one- or three-year term in exchange for a significant discount compared with On-Demand pricing. They suit predictable, steady-state workloads that run for long periods, helping organizations reduce overall infrastructure cost.
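Whether a reservation pays off is simple arithmetic: compare the reservation's total cost with what the same hours would cost On-Demand. A sketch assuming an all-upfront reservation and purely hypothetical prices (check current AWS pricing for real figures):

```python
HOURS_PER_YEAR = 8760


def break_even_utilization(upfront_cost: float, on_demand_hourly: float,
                           term_years: int = 1) -> float:
    """Fraction of the term an instance must run for an all-upfront
    reservation to cost the same as paying On-Demand for those hours."""
    on_demand_full_term = on_demand_hourly * HOURS_PER_YEAR * term_years
    return upfront_cost / on_demand_full_term


# Hypothetical figures: $0.10/hour On-Demand vs. $500 all-upfront for one year.
u = break_even_utilization(upfront_cost=500.0, on_demand_hourly=0.10)
print(f"Reservation pays off above {u:.0%} utilization")  # about 57%
```

With these numbers, an instance expected to run for more than roughly 57% of the year is cheaper reserved, which is why reservations fit steady-state workloads.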

14. Compare On-Demand Instances and Spot Instances in EC2.

Ans:

On-Demand Instances let users pay for compute capacity by the hour or by the second (depending on the instance’s operating system), with no up-front commitment, which offers flexibility for fluctuating workloads. Spot Instances, by contrast, let customers use spare EC2 capacity at steep discounts, which makes them cost-effective for flexible, interruption-tolerant applications. On-Demand Instances run until the user stops or terminates them, whereas AWS can reclaim Spot Instances with a two-minute warning when it needs the capacity back.

15. How does EC2 Auto Scaling work?

Ans:

- EC2 Auto Scaling automatically adjusts the number of EC2 instances in an Auto Scaling group, within the minimum and maximum sizes the user sets, using scaling policies such as target tracking.

- This allows applications to maintain performance during traffic spikes while keeping costs low when demand is low.

- Auto Scaling also improves availability and fault tolerance by automatically replacing unhealthy instances.

- It also supports scheduled scaling for predictable, known changes in demand.
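Target tracking, one of the scaling policy types, tries to keep a metric such as average CPU utilization near a target value. The following is a simplified proportional model of that calculation, not AWS's exact algorithm (which also accounts for instance warm-up and other factors); the function name and numbers are illustrative.

```python
import math


def desired_capacity(current_capacity: int, current_metric: float,
                     target_metric: float, min_size: int, max_size: int) -> int:
    """Proportional estimate: resize so the per-instance metric lands near target."""
    if current_capacity == 0:
        return min_size
    raw = math.ceil(current_capacity * current_metric / target_metric)
    # Never go outside the Auto Scaling group's configured bounds.
    return max(min_size, min(max_size, raw))


print(desired_capacity(4, current_metric=80.0, target_metric=50.0,
                       min_size=2, max_size=10))  # 7
```

With 4 instances at 80% CPU and a 50% target, the model asks for 7 instances; the minimum and maximum sizes clamp the result in both directions.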

16. What are Elastic IP addresses?

Ans:

- An Elastic IP address is a static public IPv4 address allocated to an AWS account. Unlike an instance’s default public IP address, which changes when the instance is stopped and started, an Elastic IP stays with the account until it is released.

- This gives applications that depend on a fixed endpoint an IP address that never changes.

- If an instance fails, its Elastic IP can be quickly remapped to a standby instance, so clients keep using the same address.

- As a result, organizations avoid business disruptions caused by IP address changes.

17. What is an Amazon Machine Image (AMI), and how is it used?

Ans:

An Amazon Machine Image (AMI) is a preconfigured template that contains the information needed to launch an EC2 instance. It typically includes the operating system, application server, and applications required for a given workload. Users can create custom AMIs from existing instances or choose from AWS-provided, community, or AWS Marketplace AMIs. AMIs make launching instances easier and more consistent, because every instance launched from the same AMI starts with the same configuration.

18. How to optimize the performance of an EC2 instance?

Ans:

- Choosing the right instance type for the workload’s characteristics is one of the most effective ways to get the best performance from an EC2 instance.

- Monitoring metrics with Amazon CloudWatch helps detect bottlenecks and guide performance corrections. EBS-optimized instances improve storage performance by providing dedicated bandwidth between the instance and its EBS volumes.

- Auto Scaling ensures that the right number of instances is running to meet demand. Optimizing application code and keeping software up to date also improve performance.

19. How does stopping an EC2 instance differ from terminating it?

Ans:

- Stopping an EC2 instance shuts it down so that it can later be restarted with no loss of data on its EBS volumes.

- When an instance is stopped, its compute resources are released and compute charges stop, but EBS storage is preserved (and still billed); data on instance store volumes is lost.

- Terminating an instance permanently deletes it, along with any data on instance store volumes and any EBS volumes whose DeleteOnTermination attribute is set, which is the default for the root volume.

- Understanding this difference helps avoid paying for idle resources while keeping data that is still needed.

20. How are EC2 instances secured?

Ans:

- EC2 instances are secured through several layers, starting with Security Groups (at the instance level) and Network ACLs (at the subnet level), which control inbound and outbound traffic.

- Users can configure these to allow access only from trusted sources. IAM policies control what users can do with EC2, and IAM roles attached to instances give applications running on them temporary credentials for calling other AWS services.

- The operating system and applications must also be patched routinely to mitigate vulnerabilities. Encrypting data at rest and in transit adds further protection.

21. What is Amazon S3, and how does it store information?

Ans:

Amazon S3 is a scalable object storage service that can store and retrieve any amount of data from anywhere on the web. Data is stored as objects in “buckets,” which are containers in which each object is identified by a unique key. S3 is designed for 99.999999999% (11 nines) durability, which means the probability of losing a stored object in a given year is extremely small; this is separate from availability, which for S3 Standard is designed at 99.99%. S3 can store any type of data, such as documents, images, and backups, and offers features such as versioning and lifecycle management to help optimize how data is stored.
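Durability is a statement about expected object loss, not about uptime. A quick worked example, assuming an annual per-object loss probability of 10^-11 as the 11-nines figure implies:

```python
def expected_annual_losses(object_count: int, durability_nines: int = 11) -> float:
    """Expected number of objects lost per year at the given durability."""
    annual_loss_probability = 10.0 ** -durability_nines
    return object_count * annual_loss_probability


losses = expected_annual_losses(10_000_000)
years_per_lost_object = 1 / losses
print(f"{losses:.0e} objects/year, i.e. one object every {years_per_lost_object:,.0f} years")
```

This matches how AWS describes the figure: storing 10 million objects, you can on average expect to lose a single object once every 10,000 years.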

22. Explain different S3 storage classes and use cases.

Ans:

Amazon S3 provides several storage classes, each built for a different access pattern and offering different trade-offs in availability, retrieval time, and cost. S3 Standard suits frequently accessed data. S3 Intelligent-Tiering automatically moves data between access tiers based on changing access patterns, which suits data with unknown or unpredictable access. S3 Standard-IA (Infrequent Access) is for data that is accessed less frequently but still needs fast, millisecond retrieval when requested. For long-term archives, the S3 Glacier storage classes offer the lowest cost in exchange for slower or more expensive retrieval.

23. How to protect an S3 bucket with policies and permissions?

Ans:

Access to an S3 bucket can be managed using bucket policies, IAM policies, and access control lists (ACLs). Bucket policies are JSON documents attached to the bucket that allow or deny actions for specified principals, such as other AWS accounts, IAM users, or roles. IAM policies are attached to users, groups, or roles and control what those identities can do with S3 resources, enabling fine-grained access control. ACLs are a legacy mechanism for object-level grants; AWS now recommends keeping them disabled and using policies instead. Block Public Access settings add a further safeguard against accidental public exposure.
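As an example of a bucket policy, the following Python sketch builds a commonly used policy that denies any request to a bucket that is not made over TLS, using the `aws:SecureTransport` condition key. The bucket name is a placeholder; applying the policy would typically be done with boto3's `put_bucket_policy`, shown commented out because it needs AWS credentials.

```python
import json


def deny_insecure_transport_policy(bucket: str) -> str:
    """Bucket policy that rejects any S3 request sent without TLS."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",    # the bucket itself
                    f"arn:aws:s3:::{bucket}/*",  # every object in it
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }
    return json.dumps(policy, indent=2)


policy_json = deny_insecure_transport_policy("example-bucket")  # placeholder name
# import boto3
# boto3.client("s3").put_bucket_policy(Bucket="example-bucket", Policy=policy_json)
```

Because an explicit Deny overrides any Allow, this statement blocks plain-HTTP access even for principals that other policies would otherwise permit.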

24. What is S3 Versioning, and why is it implemented?

Ans:

- S3 Versioning keeps multiple versions of an object in the same bucket, so every version of every stored object can be preserved, retrieved, and restored.

- When versioning is enabled, overwriting an object creates a new version instead of replacing the previous one, and deleting an object adds a delete marker rather than removing the data.

- This is very important in protecting against accidental deletions or inadvertent overwrites and ensuring data integrity and recoverability.

- Versioning also supports compliance needs by maintaining a history of object changes.
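The versioning behaviour can be modelled in a few lines. This is a toy in-memory model, not the S3 API, and it assumes simple sequential version IDs (S3 generates opaque ones). It shows why overwrites and deletes are recoverable: an overwrite adds a version, and a delete without a version ID only adds a delete marker.

```python
from __future__ import annotations

import itertools
from typing import Optional

_DELETE_MARKER = object()  # sentinel standing in for an S3 delete marker


class VersionedBucket:
    """Toy in-memory model of S3 versioning semantics (not the S3 API)."""

    def __init__(self) -> None:
        self._history: dict[str, list[tuple[str, object]]] = {}
        self._ids = itertools.count(1)

    def _append(self, key: str, value: object) -> str:
        version_id = f"v{next(self._ids)}"  # real S3 version IDs are opaque strings
        self._history.setdefault(key, []).append((version_id, value))
        return version_id

    def put(self, key: str, data: bytes) -> str:
        # Overwrites never replace earlier data; they add a new version.
        return self._append(key, data)

    def delete(self, key: str) -> str:
        # A delete without a version ID only adds a delete marker.
        return self._append(key, _DELETE_MARKER)

    def get(self, key: str, version_id: Optional[str] = None) -> bytes:
        history = self._history.get(key, [])
        if version_id is None:
            if not history or history[-1][1] is _DELETE_MARKER:
                raise KeyError(key)  # latest version is a delete marker
            return history[-1][1]
        for vid, value in history:
            if vid == version_id and value is not _DELETE_MARKER:
                return value
        raise KeyError(f"{key} (version {version_id})")


bucket = VersionedBucket()
v1 = bucket.put("report.csv", b"draft")
bucket.put("report.csv", b"final")
bucket.delete("report.csv")
print(bucket.get("report.csv", v1))  # b'draft': still recoverable
```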

25. How does AWS guarantee data durability and availability in S3?

Ans:

- AWS ensures data durability in S3 by automatically storing objects redundantly across multiple Availability Zones within a Region (except for the One Zone storage classes).

- This architecture protects against hardware failures and keeps data accessible even if one or more components fail.

- S3 is designed for 99.999999999% (11 nines) durability, meaning the chance of losing a stored object is extremely low.

- For additional resilience, S3 Cross-Region Replication lets users replicate data to buckets in other geographic Regions.

26. What is S3 Cross-Region Replication (CRR)?

Ans:

S3 Cross-Region Replication (CRR) is an automatic, rule-based feature that copies objects to a bucket in a different AWS Region to support availability and disaster recovery. Once a replication rule is configured (versioning must be enabled on both the source and destination buckets), new objects uploaded to the source bucket are copied to the destination bucket; existing objects can be replicated separately with S3 Batch Replication. This adds redundancy and improves resilience. CRR is especially useful for companies that need to meet regulatory requirements or reduce latency by storing data closer to users.

27. What does S3 Lifecycle Management do and how to set it up?

Ans:

S3 Lifecycle Management automatically transitions objects between S3 storage classes or deletes them according to rules the user defines. For example, a rule can move objects to lower-cost storage after a set number of days and expire (delete) them after a certain period. This helps optimize storage costs and manage large datasets over time. A rule can apply to an entire bucket or be limited to specific prefixes or object tags. Lifecycle rules can be set up in the S3 console, with the AWS CLI, or through the API, keeping data stored efficiently while meeting organizational needs.
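A lifecycle configuration is a list of rules. The sketch below builds one in the shape accepted by boto3's `put_bucket_lifecycle_configuration`; the `logs/` prefix and the 30/90/365-day thresholds are illustrative assumptions, not recommendations, and the bucket name is a placeholder.

```python
def log_archive_lifecycle(prefix: str = "logs/") -> dict:
    """Move objects under `prefix` to cheaper classes over time, then expire them."""
    return {
        "Rules": [
            {
                "ID": "archive-then-expire-logs",
                "Filter": {"Prefix": prefix},  # scope the rule to one prefix
                "Status": "Enabled",
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 90, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": 365},  # delete one year after creation
            }
        ]
    }


config = log_archive_lifecycle()
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-bucket", LifecycleConfiguration=config)
```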

28. How to host a static website on S3?

Ans:

To host a static website on S3, create a bucket, upload the website files, and enable static website hosting in the bucket properties. When enabling hosting, users specify an index document (for example, index.html) and, optionally, an error document. To make the site public, turn off Block Public Access for the bucket and add a bucket policy that grants public read access (s3:GetObject) to its objects. S3 then serves the site from a website endpoint. This simplicity is why S3 is widely used to host static content.

29. What is S3 Transfer Acceleration and when can it be helpful?

Ans:

- S3 Transfer Acceleration is an option that speeds up content uploads to S3 by using Amazon CloudFront’s distributed edge locations.

- When an object is uploaded, the request is routed to the nearest edge location, and the data then travels to the destination bucket over the optimized AWS network backbone. This reduces latency, especially for users located far from the bucket’s Region.

- Transfer Acceleration is useful for applications that require fast data ingestion, such as uploading media or large data transfers.

30. How does S3 Object Lock ensure data immutability?

Ans:

- S3 Object Lock stores objects using a write-once-read-many (WORM) model, preventing them from being deleted or overwritten for a fixed period or indefinitely, which guarantees immutability.

- This capability is critical for meeting compliance requirements where regulatory standards enforce data retention policies.

- Object Lock has two retention modes: Governance mode, in which users with special permissions can delete or overwrite locked objects, and Compliance mode, in which no user, including the root user, can do so until the retention period expires.

- Once configured, Object Lock protects critical data against accidental or malicious changes, making it a core component of data integrity and compliance in S3.

31. What is Amazon VPC?

Ans:

- Amazon VPC (Virtual Private Cloud) lets users provision a logically isolated section of the AWS cloud where they can launch resources in a virtual network they define.

- Amazon VPC offers customizable control over elements such as IP address ranges, subnets, route tables, and gateways.

- VPC is important for security because it lets users design their own network topology, control inbound and outbound traffic, and isolate resources per application.

- VPC also helps meet compliance needs, because network configurations can be tailored to specific requirements.

32. Describe the VPC subnets and provide examples of their usage.

Ans:

Subnets are subdivisions of a VPC’s IP address range, and each subnet resides in a single Availability Zone. They let users group resources based on security and operational requirements. A subnet is public if its route table has a route to an Internet Gateway, and private if it does not. This structure lets users position resources effectively, for example by placing web servers in public subnets and databases in private subnets for better security.
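Carving a VPC's CIDR block into subnets is plain address arithmetic. A sketch using Python's standard `ipaddress` module, assuming a hypothetical 10.0.0.0/16 VPC split into /24 subnets, with the first two designated public and the next two private. AWS reserves five addresses in every subnet (the first four and the last), so a /24 yields 251 usable addresses.

```python
import ipaddress

AWS_RESERVED_PER_SUBNET = 5  # first four addresses plus the last one

vpc = ipaddress.ip_network("10.0.0.0/16")
subnets = list(vpc.subnets(new_prefix=24))  # 256 possible /24 subnets

# Hypothetical layout; the -a/-b suffixes stand for two Availability Zones.
layout = {
    "public-a": subnets[0],   # web tier, routed to an Internet Gateway
    "public-b": subnets[1],
    "private-a": subnets[2],  # database tier, no Internet Gateway route
    "private-b": subnets[3],
}

for name, net in layout.items():
    usable = net.num_addresses - AWS_RESERVED_PER_SUBNET
    print(f"{name:10} {net}  usable={usable}")
```

Whether a subnet is public is decided by its route table, not by its address range, so the same layout could be reassigned without renumbering.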

33. What are Security Groups, and how do they differ from Network ACLs?

Ans:

Security Groups are virtual firewalls that control inbound and outbound traffic for EC2 instances (more precisely, their network interfaces) within a VPC. They operate at the instance level and let users specify the protocols, ports, and source IP addresses allowed to reach the instances. Security Groups are stateful and support only allow rules: return traffic for an allowed request is permitted automatically. Network ACLs operate at the subnet level, are stateless (return traffic must be allowed explicitly), support both allow and deny rules, and evaluate rules in order by rule number. Understanding both tools is necessary to design a comprehensive, layered security strategy in a VPC.
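The key behavioural difference for network ACLs is rule ordering: rules are evaluated from the lowest rule number upward, the first match decides, and traffic that matches nothing is denied by the final `*` rule. A simplified Python model that matches only on protocol, single port, and source CIDR; the rule numbers and addresses are hypothetical.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class NaclRule:
    number: int
    action: str       # "allow" or "deny"
    protocol: str     # e.g. "tcp"
    port: int
    source_cidr: str


def evaluate_inbound(rules: list[NaclRule], protocol: str, port: int,
                     source_ip: str) -> str:
    src = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):  # lowest number first
        if (rule.protocol == protocol and rule.port == port
                and src in ipaddress.ip_network(rule.source_cidr)):
            return rule.action  # first match wins; later rules are ignored
    return "deny"  # the implicit final "*" rule denies everything else


rules = [
    NaclRule(100, "deny", "tcp", 22, "203.0.113.0/24"),
    NaclRule(200, "allow", "tcp", 22, "0.0.0.0/0"),
    NaclRule(300, "allow", "tcp", 443, "0.0.0.0/0"),
]
print(evaluate_inbound(rules, "tcp", 22, "203.0.113.7"))  # deny (rule 100)
```

Because network ACLs are stateless, a real setup also needs outbound rules (typically for ephemeral ports) so responses can leave the subnet; security groups track connections and need no such rule.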

34. What is the purpose of a VPC peering connection?

Ans:

A VPC peering connection privately connects two VPCs over AWS’s internal network so that resources in both can communicate as if they were on the same network. The VPCs can belong to the same or different AWS accounts, and even to different Regions, but their CIDR blocks must not overlap. Peering is especially useful in multi-VPC architectures because traffic stays on the AWS network and never crosses the public Internet. Peering is not transitive: if VPC A peers with B and B peers with C, A cannot reach C through B.

35. What is the difference between a NAT Instance and a NAT Gateway?

Ans:

A NAT Instance is an EC2 instance configured so that instances in private subnets can initiate outbound connections to the Internet while unsolicited inbound traffic is not accepted. It offers customization and control over NAT functions, but the user must manage its patching, scaling, and availability. A NAT Gateway, by contrast, is a managed AWS service that provides the same capability, scales automatically with traffic, is highly available within its Availability Zone, and reduces administrative overhead.

36. What is an Internet Gateway in AWS VPC?

Ans:

- An Internet Gateway is a horizontally scaled, redundant, and highly available VPC component that connects a VPC to the Internet and allows resources in public subnets to communicate with external networks.

- It lets instances with public IP addresses send traffic to, and receive traffic from, the Internet. Because AWS runs it redundantly, it imposes no availability risk or bandwidth constraint on network traffic.

- To run public-facing applications in a VPC, an Internet Gateway must be attached to the VPC and referenced in the public subnets’ route tables.

37. How to secure a VPC?

Ans:

- To secure a VPC, use multiple layers of protection, such as Security Groups and Network ACLs to control traffic into and out of instances and subnets.

- Place sensitive resources in private subnets to isolate them from the public Internet, and use AWS Identity and Access Management (IAM) to limit access to VPC resources to authorized users.

- Monitor traffic regularly with VPC Flow Logs to spot potentially malicious activity, and encrypt data in transit and at rest to further strengthen the security of the VPC and its resources.

38. What are VPC Flow Logs, and how are they used?

Ans:

- VPC Flow Logs capture details about the IP traffic going to and from network interfaces in a VPC.

- They provide detailed information on traffic flow and allow users to comprehend and diagnose connectivity problems.

- Analyzing flow logs helps detect unusual traffic patterns, verify that security rules behave as intended, and troubleshoot network performance.

- Users may store flow log data in Amazon S3 or Amazon CloudWatch Logs for further analysis and visualization.

- VPC Flow Logs contribute to thorough visibility and stronger security in cloud networking.
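Flow log records in the default format are space-separated fields: version, account-id, interface-id, srcaddr, dstaddr, srcport, dstport, protocol, packets, bytes, start, end, action, log-status. A minimal parser sketch that counts rejected connections per source address; the sample records use made-up IDs and documentation-range IP addresses.

```python
from __future__ import annotations

from collections import Counter

FIELDS = ["version", "account_id", "interface_id", "srcaddr", "dstaddr",
          "srcport", "dstport", "protocol", "packets", "bytes",
          "start", "end", "action", "log_status"]


def parse_record(line: str) -> dict[str, str]:
    return dict(zip(FIELDS, line.split()))


def rejected_by_source(lines: list[str]) -> Counter:
    counts: Counter = Counter()
    for line in lines:
        rec = parse_record(line)
        # NODATA/SKIPDATA records carry "-" placeholders instead of traffic data.
        if rec.get("log_status") == "OK" and rec.get("action") == "REJECT":
            counts[rec["srcaddr"]] += 1
    return counts


sample = [
    "2 123456789012 eni-0abc 198.51.100.7 10.0.1.5 49152 22 6 3 180 1700000000 1700000060 REJECT OK",
    "2 123456789012 eni-0abc 198.51.100.7 10.0.1.5 49153 22 6 3 180 1700000000 1700000060 REJECT OK",
    "2 123456789012 eni-0abc 203.0.113.9 10.0.1.5 50000 443 6 10 4200 1700000000 1700000060 ACCEPT OK",
    "2 123456789012 eni-0abc - - - - - - - 1700000000 1700000060 - NODATA",
]
print(rejected_by_source(sample).most_common())  # [('198.51.100.7', 2)]
```

Repeated rejections on port 22 from one address, as in the sample, are the kind of pattern worth alerting on.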

39. How to establish a VPN connection to a VPC?

Ans:

To establish an AWS Site-to-Site VPN connection, create a virtual private gateway and attach it to the VPC (a transit gateway can be used instead), create a customer gateway resource that represents the VPN device on the customer’s premises, and then create the VPN connection between the two. Routes to the on-premises network must also be added to the VPC’s route tables, for example through route propagation. The connection can be configured from the AWS Management Console, AWS CLI, or API. It provides encrypted IPsec tunnels for secure communication between the VPC and the on-premises network, making VPN connections a common way to securely extend on-premises networks into the cloud.

40. What do Elastic Network Interfaces (ENIs) do in AWS?

Ans:

Elastic Network Interfaces (ENIs) are virtual network interfaces that can be attached to EC2 instances in a VPC. An ENI can carry multiple private IP addresses, and attaching several ENIs lets an instance connect to multiple subnets in the same Availability Zone. ENIs therefore support use cases such as separating management and application traffic, implementing failover by moving an interface to a standby instance, and improving security by isolating network functions. Users can create ENIs and attach or detach them from instances as needed.

41. What is AWS IAM, and why is it important?

Ans:

AWS Identity and Access Management (IAM) is a service for securely managing access to AWS resources. It makes it easy to create and manage users, groups, roles, and permissions so that the right people can perform only the right actions. IAM supports security best practices by enabling the principle of least privilege, under which users receive only the permissions relevant to their job functions. The service also supports multi-factor authentication (MFA) to enhance security.

42. What is the principle of least privilege in AWS IAM?

Ans:

- The principle of least privilege is a security approach used to limit users’ access rights to the minimum required to perform their job functions.

- In AWS IAM, it means granting users and roles only the permissions they need to access a given resource or complete a given task.

- Reviewing permissions regularly and removing those no longer needed as job roles change is crucial for maintaining compliance.

- Applying least privilege consistently builds a robust framework of controls over data access within AWS.

43. How are users, groups, and roles created and managed in IAM?

Ans:

- Users can be created individually or in bulk using the AWS Management Console, AWS CLI, or IAM API.

- Users can be organized into groups for streamlined permission management, allowing policies to be applied to the group rather than to each user individually.

- Roles are created with a trust policy that defines who can assume them, and permissions policies attached to users, groups, or roles allow or deny actions, optionally under specific conditions.

- A structured approach like this is integral to security and functional efficiency.

44. What are IAM policies? How do they work?

Ans:

- IAM policies are JSON documents that define permissions for actions on AWS resources.

- They specify which actions are allowed or denied, on which resources, and under which conditions.

- There are two main kinds: managed policies, which are standalone and can be attached to multiple users, groups, and roles, and inline policies, which are embedded in a single user, group, or role.

- Using IAM policies allows an organization to enforce best practices regarding security and manage resource access diligently.
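The core of how identity-based policies combine can be sketched as: everything is implicitly denied, a matching Allow grants access, and a matching explicit Deny always wins. The simplified Python model below handles only `Action` and `Resource` with wildcards in a single account; it ignores conditions, `NotAction`, permissions boundaries, SCPs, and resource-based policies, so treat it as an illustration rather than the full AWS evaluation logic. The example policies are hypothetical.

```python
from __future__ import annotations

from fnmatch import fnmatchcase


def _as_list(value):
    return [value] if isinstance(value, (str, dict)) else list(value)


def _matches(patterns, value: str) -> bool:
    # fnmatch-style "*" and "?" approximate IAM wildcards here. Real IAM treats
    # action names case-insensitively; this sketch does not.
    return any(fnmatchcase(value, p) for p in _as_list(patterns))


def is_allowed(policies: list[dict], action: str, resource: str) -> bool:
    """Simplified evaluation: explicit Deny > Allow > implicit deny."""
    allowed = False
    for policy in policies:
        for stmt in _as_list(policy.get("Statement", [])):
            if _matches(stmt["Action"], action) and _matches(stmt["Resource"], resource):
                if stmt["Effect"] == "Deny":
                    return False  # an explicit deny always wins
                allowed = True
    return allowed  # no matching Allow means implicit deny


read_reports = {"Version": "2012-10-17", "Statement": [
    {"Effect": "Allow", "Action": "s3:Get*", "Resource": "arn:aws:s3:::reports/*"}]}
deny_secret = {"Version": "2012-10-17", "Statement": [
    {"Effect": "Deny", "Action": "s3:*", "Resource": "arn:aws:s3:::reports/secret/*"}]}

print(is_allowed([read_reports, deny_secret], "s3:GetObject",
                 "arn:aws:s3:::reports/q1.csv"))          # True
print(is_allowed([read_reports, deny_secret], "s3:GetObject",
                 "arn:aws:s3:::reports/secret/key.txt"))  # False
```

This is the same question the IAM Policy Simulator answers, with far more of the real evaluation rules applied.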

45. What is MFA (Multi-Factor Authentication) and how to enable it?

Ans:

Multi-factor authentication (MFA) is a security feature that requires users to provide two or more forms of verification before accessing an account or service, adding another layer of security to AWS. With MFA enabled, users sign in with their password plus a one-time code generated by an authenticator app or hardware token. MFA can be enabled for the root user and for individual IAM users through the IAM console, which makes unauthorized access to AWS accounts much harder.
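Virtual MFA devices generate time-based one-time passwords (TOTP, defined in RFC 6238): an HMAC of the current 30-second time step, truncated to a few digits. A standard-library Python sketch of that algorithm, for illustration only; AWS performs the verification, and real MFA secrets should never be hard-coded.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP with HMAC-SHA1, the variant commonly used by authenticator apps."""
    t = time.time() if for_time is None else for_time
    counter = int(t // step)                              # elapsed 30-second steps
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                               # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


# RFC 6238 test vector: ASCII secret "12345678901234567890" at T = 59 seconds.
print(totp(b"12345678901234567890", for_time=59, digits=8))  # 94287082
```

Authenticator apps usually receive the secret base32-encoded (for example, inside a QR code), so a real client would first decode it with `base64.b32decode`.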

46. How do IAM roles enable cross-account access?

Ans:

IAM roles enable cross-account access by letting principals in one AWS account securely access resources in another account. A role is created in the target account with a trust policy that names the trusted entity (for example, the source account or a specific user or role in it). Users in the source account can then assume the role through the AWS console, CLI, or API, which returns temporary credentials carrying the role’s permissions for a limited session duration. Such roles need careful management for safe and efficient collaboration.
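The trust relationship lives in the role's trust policy. A sketch that builds one in Python, assuming a hypothetical source account ID (`111122223333`) and, optionally, requiring MFA on the principal that assumes the role. The role name `ExampleAuditRole` is also hypothetical; the commented boto3 calls show where the document would be used.

```python
import json


def cross_account_trust_policy(source_account_id: str, require_mfa: bool = True) -> str:
    """Trust policy letting principals in another account assume this role."""
    statement = {
        "Effect": "Allow",
        "Principal": {"AWS": f"arn:aws:iam::{source_account_id}:root"},  # whole account
        "Action": "sts:AssumeRole",
    }
    if require_mfa:
        statement["Condition"] = {"Bool": {"aws:MultiFactorAuthPresent": "true"}}
    return json.dumps({"Version": "2012-10-17", "Statement": [statement]})


trust = cross_account_trust_policy("111122223333")  # hypothetical account ID
# In the target account:
#   boto3.client("iam").create_role(RoleName="ExampleAuditRole",
#                                   AssumeRolePolicyDocument=trust)
# From the source account:
#   boto3.client("sts").assume_role(
#       RoleArn="arn:aws:iam::<target-account-id>:role/ExampleAuditRole",
#       RoleSessionName="audit-session")
```

Trusting the whole source account delegates the decision to that account: its administrators must still grant `sts:AssumeRole` on the role to specific users or roles before anyone can use it.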

47. Describe how AWS IAM enforces strong password policies.

Ans:

AWS IAM lets administrators set an account password policy for IAM users, with requirements such as a minimum length, character complexity, and password expiration. In the IAM console, the policy can require users to include a mix of uppercase and lowercase letters, numbers, and special characters. Organizations can also require periodic password rotation and prevent the reuse of previous passwords. Strong password policies improve security and limit unauthorized access.

48. What is AWS Security Token Service?

Ans:

- AWS Security Token Service (STS) is a web service that issues temporary, limited-privilege security credentials for accessing AWS resources.

- These credentials can be scoped to limited access and are used in scenarios such as granting temporary access to users, cross-account access, identity federation, or access from mobile apps.

- The credentials expire after a configurable duration, so access is granted only for as long as it is needed.

- By replacing long-term credentials with short-lived ones, STS makes access control more dynamic and reduces risk, which makes it critical to security.

49. How does AWS IAM provide access audit and tracking?

Ans:

- AWS supports access auditing and tracking for IAM through AWS CloudTrail and IAM reports such as the credential report and last-accessed information.

- For thorough monitoring, CloudTrail captures API calls within an AWS account, including all IAM users’ and roles’ actions.

- This allows the organization to track access patterns, verify compliance with security policies, and identify security incidents.

- CloudTrail also records console sign-in attempts and changes to IAM permissions, while the credential report lists the status of each user’s passwords, access keys, and MFA devices.

- All these logs must be audited periodically to ensure that the AWS environment is secure and compliant.

50. What is an IAM Policy Simulator?

Ans:

- The IAM Policy Simulator is a tool that lets users test and validate IAM policies before applying them in a live environment.

- It simulates the effect of policies on specific AWS actions and resources, showing which permissions are granted or denied and by which policy statement.

- This tool is useful in troubleshooting access problems and ensuring policies meet the organization’s needs.

- By testing policies in a controlled environment, users can refine their access controls and minimize security risks.

51. What is AWS Lambda, and how does it differ from EC2?

Ans:

AWS Lambda is a serverless compute service that runs code in response to events without requiring users to provision, scale, or manage servers. Unlike EC2, where users provision and manage virtual machines, Lambda handles the infrastructure, which makes deployments much simpler. Lambda functions are event-driven and scale automatically with varying workloads. Billing is based on the number of requests and the execution time consumed, so users pay only when their code runs, which makes it cost-effective.

52. What are the applications of AWS Lambda?

Ans:

AWS Lambda suits a wide range of use cases, from real-time file and data processing to serving as the backend for web and mobile applications. It stands out for building microservices, handling IoT data streams, and running scheduled tasks. Lambda integrates well with other AWS services and often powers serverless APIs and webhooks. Its event-driven nature supports automation and integration across AWS, streamlining application workflows.

53. How does AWS Lambda manage concurrency?

Ans:

AWS Lambda automatically scales the number of execution environments serving a function based on incoming traffic, up to the account’s concurrency limit in that Region. Users can cap a function’s concurrent executions to prevent it from overwhelming downstream resources. Reserved concurrency sets aside part of the account’s limit for a critical function, guaranteeing that it can scale to that level while also capping it there; functions without reserved concurrency share the unreserved pool. Provisioned concurrency, by contrast, keeps a set number of execution environments initialized in advance. This automatic scaling lets Lambda handle workload variations effectively without degrading performance.
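The Lambda documentation gives a rule of thumb for estimating concurrency: average requests per second multiplied by average duration in seconds (an application of Little's law). A quick Python check of that estimate against a concurrency limit; the traffic numbers are illustrative, and the 1,000 limit is the usual default per-Region account quota, which AWS can raise on request.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Little's law: in-flight executions = arrival rate x time per execution."""
    return math.ceil(requests_per_second * avg_duration_s)


ACCOUNT_LIMIT = 1000  # default per-Region quota; adjustable

needed = required_concurrency(requests_per_second=400, avg_duration_s=0.5)
print(needed, "concurrent executions needed;",
      "within limit" if needed <= ACCOUNT_LIMIT else "would be throttled")  # 200 ...
```

Halving the average duration halves the required concurrency, which is one reason function performance tuning matters at scale.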

54. Discuss what cold starts are in Lambda and how to mitigate them.

Ans:

- A cold start occurs when Lambda must create and initialize a new execution environment for a function, for example after a period without invocations or when traffic scales out; the initialization adds latency to that request.

- This can adversely affect applications that require prompt responses. Users can minimize cold starts by using provisioned concurrency to keep environments pre-initialized or, less reliably, by periodically invoking functions to keep them warm.

- Optimizing function code and reducing dependencies also shorten initialization time. These approaches ensure consistent performance, particularly for applications with intermittent traffic.
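One concrete code-level mitigation is to do expensive setup (SDK clients, configuration, model loading) at module level, so it runs once per execution environment during the cold start and is reused by every warm invocation. A minimal Python handler sketch; `_load_config` and its return value are hypothetical stand-ins for real initialization work, and the counter exists only to make the reuse visible.

```python
import time

INIT_COUNT = 0  # counts how often initialization actually runs


def _load_config() -> dict:
    # Hypothetical expensive setup, e.g. boto3.client("s3") or reading config.
    global INIT_COUNT
    INIT_COUNT += 1
    time.sleep(0.01)  # simulate slow initialization
    return {"table": "example-table"}


# Module level: runs once per execution environment, during the cold start.
CONFIG = _load_config()


def handler(event, context):
    # Warm invocations reuse CONFIG instead of rebuilding it on every request.
    return {"statusCode": 200, "table": CONFIG["table"]}
```

Moving the same setup inside `handler` would repeat the cost on every invocation, warm or cold.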

55. What is the nature of AWS Lambda pricing?

Ans:

- AWS Lambda charges based on the number of requests and the execution duration, which is billed in 1 ms increments and scaled by the amount of memory allocated to the function.

- This pay-as-you-go model results in cost savings because no charges are incurred for idle time.

- In addition, the free tier includes 1 million requests and 400,000 GB-seconds of compute time every month.

- This pricing model makes Lambda a cost-effective option for applications with variable workloads.
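Because cost depends on requests and GB-seconds (memory in GB multiplied by duration in seconds), a monthly estimate is simple arithmetic. A sketch using example rates of $0.20 per million requests and $0.0000166667 per GB-second, which are assumptions to be checked against the current pricing page for your Region and architecture, plus a monthly free tier of 1 million requests and 400,000 GB-seconds.

```python
PRICE_PER_MILLION_REQUESTS = 0.20   # USD, example rate
PRICE_PER_GB_SECOND = 0.0000166667  # USD, example rate
FREE_REQUESTS = 1_000_000           # monthly free tier
FREE_GB_SECONDS = 400_000           # monthly free tier


def monthly_cost(requests: int, memory_mb: int, avg_duration_ms: float) -> float:
    gb_seconds = requests * (memory_mb / 1024) * (avg_duration_ms / 1000)
    billable_requests = max(0, requests - FREE_REQUESTS)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    return (billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
            + billable_gb_seconds * PRICE_PER_GB_SECOND)


# 3 million requests/month at 512 MB and 200 ms average duration:
print(f"${monthly_cost(3_000_000, 512, 200):.2f}")  # $0.40
```

In this example the 300,000 GB-seconds of compute fall entirely within the free tier, so only the 2 million billable requests cost anything.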

56. How long can a Lambda function run?

Ans:

A Lambda function can run for a maximum of 15 minutes (900 seconds). The default timeout is 3 seconds and can be configured up to that limit; if the code does not finish within the configured timeout, Lambda stops the execution. For longer processing, users should break the workload into smaller chunks or use other AWS services, such as Amazon EC2 or AWS Step Functions. Keep this limit in mind when designing workloads to fit within Lambda's operational boundaries.
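
One way to stay within the limit is to process work in chunks and stop before the timeout, returning a cursor so that a caller (for example, a Step Functions loop) can invoke the function again. The sketch relies on the Lambda context object's `get_remaining_time_in_millis()` method; `process_item`, the event shape, and the 10-second safety margin are assumptions, and `FakeContext` is a local stand-in for testing.

```python
SAFETY_MARGIN_MS = 10_000  # assumed margin: stop well before the configured timeout


def process_item(item):
    """Hypothetical per-item work."""
    return item * 2


def handler(event, context):
    items = event["items"]
    i = event.get("cursor", 0)
    results = []
    while i < len(items):
        # Leave enough time to return results before Lambda stops the function.
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break
        results.append(process_item(items[i]))
        i += 1
    done = i >= len(items)
    return {"results": results, "cursor": None if done else i, "done": done}


class FakeContext:
    """Local stand-in for the Lambda context; remaining time drops on each call."""

    def __init__(self, remaining_ms, cost_ms):
        self.remaining_ms = remaining_ms
        self.cost_ms = cost_ms

    def get_remaining_time_in_millis(self):
        value = self.remaining_ms
        self.remaining_ms -= self.cost_ms
        return value


items = list(range(10))
first = handler({"items": items}, FakeContext(40_000, 10_000))
print(first)   # {'results': [0, 2, 4, 6], 'cursor': 4, 'done': False}
second = handler({"items": items, "cursor": first["cursor"]}, FakeContext(900_000, 1))
print(second)  # {'results': [8, 10, 12, 14, 16, 18], 'cursor': None, 'done': True}
```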

57. How do S3 events invoke Lambda functions?

Ans:

Lambda functions can be invoked by Amazon S3 events such as object creation (upload), deletion, or restoration. To enable this, users configure an S3 bucket event notification that invokes the Lambda function for specified event types. This allows uploaded files to be processed in real time, for example by resizing images, transforming data, or logging activity. Through this integration, S3 event notifications automate workflows and extend what applications can do.
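
A minimal handler sketch for such a notification follows. It reads the bucket name and object key from each record of the S3 event and decodes the key, which arrives URL-encoded. The bucket name and object keys in `sample_event` are made up, and the actual processing step (such as resizing an image) is left as a comment.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Collect (bucket, key) pairs for uploads in an S3 event notification."""
    processed = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue  # this sketch only reacts to uploads
        bucket = record["s3"]["bucket"]["name"]
        # Object keys are URL-encoded in the event (a space arrives as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        processed.append((bucket, key))
        # Real code would fetch and process the object here.
    return processed


sample_event = {
    "Records": [
        {"eventName": "ObjectCreated:Put",
         "s3": {"bucket": {"name": "example-uploads"},
                "object": {"key": "photos/my+cat.jpg", "size": 1024}}},
        {"eventName": "ObjectRemoved:Delete",
         "s3": {"bucket": {"name": "example-uploads"},
                "object": {"key": "old.txt"}}},
    ]
}
print(handler(sample_event, None))  # [('example-uploads', 'photos/my cat.jpg')]
```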

58. How are AWS Lambda functions monitored?

Ans:

- AWS Lambda functions are monitored through Amazon CloudWatch, which collects metrics (such as invocations, errors, and duration) and logs related to each function's performance.

- Each invocation writes logs to CloudWatch Logs, which help users analyze function behaviour and troubleshoot issues.

- Users can also set up CloudWatch alarms that notify them when performance metrics exceed predefined thresholds, so they can monitor and optimize their functions.

59. What is AWS Lambda@Edge, and how is it used?

Ans:

- AWS Lambda@Edge is an extension of AWS Lambda for Amazon CloudFront. It allows users to execute code closer to the end-users.

- Using Lambda@Edge also helps reduce latency while enhancing the user experience.

- It can also modify requests and responses in real time, enabling dynamic content generation and personalization.

- It is especially effective when low-latency responses must be delivered to users across the globe.
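
As an illustration of request modification, the sketch below is a viewer-request handler that personalizes content by rewriting the path according to the viewer's `Accept-Language` header. It follows the Lambda@Edge event layout (`Records[0].cf.request`, with headers keyed by lowercase name and holding lists of `key`/`value` pairs). The `/en/`, `/de/`, `/fr/` site layout and the language list are hypothetical.

```python
SUPPORTED_LANGUAGES = {"en", "de", "fr"}  # hypothetical site layout: /en/, /de/, /fr/
DEFAULT_LANGUAGE = "en"


def preferred_language(headers: dict) -> str:
    """Pick the first language tag from Accept-Language, e.g. 'de-DE,de;q=0.9' -> 'de'."""
    values = headers.get("accept-language", [])
    if not values:
        return DEFAULT_LANGUAGE
    first = values[0]["value"].split(",")[0].split(";")[0].strip()
    lang = first.split("-")[0].lower()
    return lang if lang in SUPPORTED_LANGUAGES else DEFAULT_LANGUAGE


def handler(event, context):
    request = event["Records"][0]["cf"]["request"]
    lang = preferred_language(request.get("headers", {}))
    # Prefixing the path means each language version is cached separately.
    request["uri"] = f"/{lang}{request['uri']}"
    return request  # returning the request lets CloudFront continue processing it


event = {"Records": [{"cf": {"request": {
    "uri": "/index.html",
    "headers": {"accept-language": [{"key": "Accept-Language", "value": "de-DE,de;q=0.9"}]},
}}}]}
print(handler(event, None)["uri"])  # /de/index.html
```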

60. How does versioning work on AWS Lambda?

Ans:

- AWS Lambda supports versioning: users can publish immutable versions of a function, enabling controlled deployments and easy rollbacks.

- Each published version receives a sequential version number, while the unpublished code is referred to as $LATEST. Aliases act as named pointers to specific versions (for example, prod pointing to version 3), and an alias can split traffic between two versions, which makes transitions between versions in production smoother.

- Version management makes testing and deployment easier, supporting continuous development while keeping applications stable.
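
The sketch below models how a weighted alias might route invocations during a gradual rollout and how a rollback simply repoints the alias. It is a local simulation, not the Lambda API; the field names only loosely mirror the alias settings (a primary version plus additional version weights), and the version numbers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Alias:
    """Hypothetical model of an alias: a primary version plus weighted extra versions."""
    name: str
    function_version: str
    additional_version_weights: dict = field(default_factory=dict)

    def resolve(self, r: float) -> str:
        """Pick a version for one invocation, given a uniform random number r in [0, 1)."""
        cumulative = 0.0
        for version, weight in self.additional_version_weights.items():
            cumulative += weight
            if r < cumulative:
                return version
        return self.function_version


# Canary: send 10% of traffic on the "prod" alias to version 4, the rest to version 3.
prod = Alias("prod", function_version="3", additional_version_weights={"4": 0.1})
print(prod.resolve(0.05))  # 4
print(prod.resolve(0.50))  # 3

# Rolling back means pointing the alias at the old version only.
prod.additional_version_weights = {}
print(prod.resolve(0.05))  # 3
```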

61. What is Amazon RDS? How does it differ from a traditional database?

Ans:

Amazon RDS is a managed service that makes it easy to set up, operate, and scale relational databases in the cloud. With a traditional self-managed database, users must manage the hardware, perform backups, and handle maintenance themselves. RDS takes care of these administrative tasks, such as provisioning, patching, and backups, leaving teams free to focus on application development. RDS supports multiple database engines and provides storage autoscaling, automated backups, and high-availability options such as Multi-AZ deployments.

62. Which database engines does Amazon RDS support?

Ans:

Amazon RDS supports several relational database engines, including Amazon Aurora, MySQL, MariaDB, PostgreSQL, Microsoft SQL Server, Oracle, and IBM Db2. Each engine has distinct characteristics and suits different applications. Aurora is a cloud-native engine built by AWS for high performance and availability, and it is compatible with MySQL and PostgreSQL. By offering several engines, RDS lets users choose the most appropriate database for each application while still gaining the benefits of a managed service.

63. Explain Multi-AZ deployment in Amazon RDS.

Ans:

Multi-AZ deployment in Amazon RDS provides high availability and durability by keeping copies of the database in different Availability Zones (AZs). In the standard setup, a primary instance handles read/write operations while RDS synchronously replicates data to a standby instance in another AZ, which serves as the failover target. (A Multi-AZ DB cluster deployment instead uses two readable standby instances.) If the primary instance becomes unavailable, RDS automatically fails over to the standby, keeping downtime to a minimum.

64. What are RDS read replicas, and how can they be used to improve the performance of database operations?

Ans:

- RDS read replicas are read-only copies of the primary database instance, kept up to date through asynchronous replication. They are created primarily to serve read traffic and improve the performance of read-heavy applications.

- Users can spread query load by creating multiple read replicas in the same or different AWS Regions; this reduces latency for nearby users and improves overall application performance.

- Read replicas can also be promoted to standalone instances if needed, providing flexibility in database scaling.

- This feature especially benefits applications that demand responsiveness at scale, and it makes better use of resources. Because replication is asynchronous, data read from a replica may briefly lag behind the primary.
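
Applications usually take advantage of read replicas by sending writes to the primary endpoint and spreading reads across replica endpoints. The sketch below shows a simple round-robin router; the endpoint names are hypothetical, and classifying statements by a leading `SELECT` is a deliberate simplification. Reads that must see a just-committed write should still go to the primary because of replication lag.

```python
import itertools


class ReadWriteRouter:
    """Send writes to the primary endpoint and rotate reads across replica endpoints."""

    def __init__(self, primary: str, replicas: list):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def endpoint_for(self, sql: str) -> str:
        # Simplification: treat any statement starting with SELECT as a read.
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary


router = ReadWriteRouter("primary.example.internal",
                         ["replica-1.example.internal", "replica-2.example.internal"])
print(router.endpoint_for("SELECT * FROM orders"))           # replica-1.example.internal
print(router.endpoint_for("SELECT count(*) FROM orders"))    # replica-2.example.internal
print(router.endpoint_for("UPDATE orders SET status = 1"))   # primary.example.internal
```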

65. How are backups automated in RDS?

Ans:

- Amazon RDS automatically creates daily backups (storage volume snapshots) of a DB instance during a configurable backup window and also captures transaction logs.

- The user specifies a retention period for automated backups of 1 to 35 days; setting it to 0 disables automated backups.

- In addition, users can create manual snapshots at any time; these are kept until explicitly deleted, which makes them suitable for long-term storage.

- Automated backups allow point-in-time recovery to any moment within the retention period (typically up to the last five minutes), so data can be restored easily after loss or corruption.

66. What is the difference between Amazon RDS and Amazon Aurora?

Ans:

- While Amazon RDS is a managed service that supports various relational database engines, Amazon Aurora is a specific relational database engine developed by AWS that emphasizes high performance and availability.

- Aurora is compatible with MySQL and PostgreSQL and adds features such as automatic storage scaling, high availability across multiple Availability Zones, and continuous backups to Amazon S3. It can deliver higher performance than standard MySQL and PostgreSQL.

- In short, RDS is a management framework that supports multiple engines, whereas Aurora is an engine whose architecture is specifically optimized for performance and reliability to serve high-demand applications.

67. How are RDS instances scaled?

Ans:

RDS instances can scale vertically and horizontally. Vertical scaling changes the DB instance class to obtain more CPU and memory, and storage can be increased separately. Changing the instance class involves a brief outage, which Multi-AZ deployments help minimize. Horizontal scaling is achieved with read replicas, which distribute read workloads across multiple instances. RDS also offers storage autoscaling, and Amazon Aurora storage grows automatically as needed without any manual intervention.

68. How does RDS handle database failover?

Ans:

Amazon RDS provides automatic failover in Multi-AZ deployments to ensure high availability. If the primary database instance fails or becomes unavailable, for example because of a hardware failure or maintenance, RDS automatically switches to the standby instance in another AZ. RDS updates the DNS record of the database endpoint to point to the standby, which becomes the new primary, so applications reconnect using the same endpoint. RDS also emits an event notifying users of the failover. This process minimizes downtime and keeps the service running.
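
Because the endpoint name stays the same after failover, client code mainly needs to retry connections for the short period while DNS switches over. A minimal sketch of exponential backoff with jitter follows; `connect` is any hypothetical zero-argument function that opens a database connection, and which exceptions to retry depends on the database driver in use.

```python
import random
import time


def connect_with_retry(connect, retry_on=(ConnectionError,), max_attempts=6,
                       base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Call connect() until it succeeds, backing off exponentially with full jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return connect()
        except retry_on:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))


# Simulated endpoint that fails twice while failover is in progress.
calls = {"n": 0}


def flaky_connect():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("endpoint still failing over")
    return "connection"


print(connect_with_retry(flaky_connect, sleep=lambda s: None))  # connection
```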

69. How is data encrypted in RDS?

Ans:

Amazon RDS encrypts data at rest using keys managed in AWS Key Management Service (AWS KMS). When encryption is enabled for an RDS instance, the data stored on disk, including automated backups, snapshots, and read replicas, is encrypted, which helps guard against unauthorized access to sensitive information. Encryption at rest must be enabled when the instance is created. In addition, SSL/TLS can be used to encrypt data in transit between the database and applications.

70. What are RDS Reserved Instances, and how do they save costs?

Ans:

- RDS Reserved Instances allow customers to commit to a specific DB instance configuration (such as engine, instance class, and Region) for a one- or three-year term in exchange for a significant discount over On-Demand pricing; the exact savings depend on the term and payment option.

- By committing to usage over time, users can substantially reduce the cost of steady, predictable database workloads.

- This pricing helps organizations optimize database spending while still provisioning the resources their applications need.
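
Whether a reservation pays off is simple arithmetic: compare the On-Demand cost over the term with the upfront payment plus the discounted hourly rate. The prices below are hypothetical placeholders, and the sketch assumes the instance runs around the clock for the whole term.

```python
HOURS_PER_YEAR = 8760


def reserved_savings(on_demand_hourly: float, upfront: float, reserved_hourly: float,
                     years: int = 1) -> tuple:
    """Return (savings in USD, savings in percent) for an always-on instance."""
    hours = HOURS_PER_YEAR * years
    on_demand_total = on_demand_hourly * hours
    reserved_total = upfront + reserved_hourly * hours
    savings = on_demand_total - reserved_total
    return round(savings, 2), round(100 * savings / on_demand_total, 1)


# Hypothetical prices: $0.50/h On-Demand vs. $1,500 upfront + $0.20/h reserved.
print(reserved_savings(0.50, 1500, 0.20))  # (1128.0, 25.8)
```

If the instance only ran part of the time, the On-Demand total would shrink while the upfront payment would not, which is why reservations suit predictable workloads.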

71. What is Amazon CloudFront, and how does it work?

Ans:

- Amazon CloudFront is a content delivery network (CDN) that speeds up the distribution of static and dynamic web content, such as HTML, CSS, JavaScript, and images, to users around the world.

- It caches content in edge locations so that users are served from a location near them, reducing latency.

- When a viewer requests content, CloudFront routes the request to the edge location that can deliver it with the lowest latency.

- It integrates with other AWS services, providing a complete solution for optimizing content delivery and improving user experience.

72. How do you create a CloudFront distribution?

Ans:

- To create a CloudFront distribution, users define the distribution settings through the AWS Management Console, CLI, or SDK.

- These settings specify the origin, that is, where the content comes from, such as an S3 bucket or an HTTP server, along with cache behaviours and many other configuration options.

- Once the distribution is created, it is assigned a domain name (ending in cloudfront.net) through which users can access the content.

- Deploying the configuration to all edge locations may take some time, but once the distribution is live, CloudFront efficiently delivers content from the location nearest each user.

73. What are CloudFront origin and edge locations?

Ans:

In CloudFront, the origin is the source of the content. It may be an S3 bucket, an EC2 instance, or any external HTTP server. Edge locations are geographically distributed data centres where CloudFront caches content. When a client requests data, CloudFront first checks the nearest edge location: if the content is cached there, it is delivered immediately; if not, CloudFront fetches it from the origin, caches it, and returns it. This architecture reduces latency and improves performance by serving content from locations close to customers.
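
The hit-or-miss flow described above can be modelled as a cache with a time to live (TTL). The sketch below is a toy model of a single edge location, not CloudFront code; the origin fetch function, the 60-second TTL in the example, and the injectable clock are assumptions made so it runs locally.

```python
import time


class EdgeCache:
    """Toy model of an edge location: serve cached objects until their TTL expires."""

    def __init__(self, origin_fetch, ttl_seconds=86400, clock=time.monotonic):
        self.origin_fetch = origin_fetch
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # path -> (content, expires_at)

    def get(self, path):
        now = self.clock()
        entry = self._store.get(path)
        if entry and entry[1] > now:
            return entry[0], "Hit"
        content = self.origin_fetch(path)  # cache miss: go back to the origin
        self._store[path] = (content, now + self.ttl)
        return content, "Miss"


fake_now = [0.0]
origin_calls = []


def fetch_from_origin(path):
    origin_calls.append(path)
    return f"<contents of {path}>"


edge = EdgeCache(fetch_from_origin, ttl_seconds=60, clock=lambda: fake_now[0])
print(edge.get("/logo.png")[1])  # Miss
print(edge.get("/logo.png")[1])  # Hit
fake_now[0] = 61
print(edge.get("/logo.png")[1])  # Miss (the cached copy expired)
```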

74. How does CloudFront enhance content delivery performance?

Ans:

CloudFront improves content delivery by using a distributed set of edge locations that cache content closer to users. Shortening the distance data must travel reduces latency and improves load times. Dynamic content optimization, support for HTTP/2, and intelligent cache management further improve delivery speed, and requests are automatically routed to the closest edge location so users receive content more quickly.

75. What is CloudFront geo restriction, and how does it work?

Ans:

- CloudFront geo-restriction lets distribution owners control who can access content based on the viewer's geographic location.

- Once geo-restriction is configured, access can be allowed only for listed countries (an allow list) or denied for listed countries (a block list).

- This is useful for enforcing content licensing agreements and meeting regulatory requirements.

- When a viewer requests content, CloudFront determines the viewer's country and checks it against the rules; if access is allowed, the content is served, otherwise CloudFront returns an HTTP 403 (Forbidden) response.
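
The decision rule can be sketched in a few lines. This is a local model of the allow-list and block-list logic, not CloudFront's implementation; the mode names are illustrative, and the country codes are two-letter ISO codes.

```python
def geo_status(viewer_country: str, mode: str, countries: set) -> int:
    """Return 200 if the viewer may access the content, otherwise 403."""
    country = viewer_country.upper()
    if mode == "allowlist":
        allowed = country in countries
    elif mode == "blocklist":
        allowed = country not in countries
    elif mode == "none":
        allowed = True
    else:
        raise ValueError(f"unknown mode: {mode}")
    return 200 if allowed else 403


print(geo_status("de", "allowlist", {"DE", "FR"}))  # 200
print(geo_status("US", "allowlist", {"DE", "FR"}))  # 403
print(geo_status("US", "blocklist", {"US"}))        # 403
```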

76. How does CloudFront integrate with S3 for static content?

Ans:

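- CloudFront integrates well with Amazon S3 to deliver static content efficiently.

- In this integration, the user sets up a distribution whose origin is an S3 bucket, and CloudFront caches the bucket's objects at edge locations.

- Security can be improved by blocking direct access to the bucket with origin access control (OAC), so that objects are reachable only through CloudFront, and optionally by requiring signed URLs or signed cookies.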
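
With origin access control, the S3 bucket policy grants read access to the CloudFront service principal only when the request comes from a specific distribution. The sketch below generates such a policy; the bucket name, account ID, and distribution ID are placeholders, and the policy should be checked against the current CloudFront documentation before use.

```python
import json


def oac_bucket_policy(bucket: str, account_id: str, distribution_id: str) -> str:
    """Bucket policy that lets only one CloudFront distribution (via OAC) read objects."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "AllowCloudFrontServicePrincipalReadOnly",
            "Effect": "Allow",
            "Principal": {"Service": "cloudfront.amazonaws.com"},
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{bucket}/*",
            # Restrict access to requests made on behalf of this distribution.
            "Condition": {"StringEquals": {
                "AWS:SourceArn":
                    f"arn:aws:cloudfront::{account_id}:distribution/{distribution_id}"
            }},
        }],
    }
    return json.dumps(policy, indent=2)


print(oac_bucket_policy("example-static-site", "111122223333", "EDFDVBD6EXAMPLE"))
```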

77. What does cache invalidation in CloudFront refer to?

Ans:

Cache invalidation removes cached objects from edge locations before they expire so that end users receive the latest version of files. When content changes at the origin, users can submit an invalidation request specifying the files or path patterns to invalidate (for example, /images/*). Invalidation keeps content accurate and up to date, but it can add cost: the first 1,000 invalidation paths each month are free, and additional paths are charged per path.
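
An invalidation request carries an `InvalidationBatch` made up of the paths (with a count) and a unique caller reference; with boto3 it would be passed to the CloudFront client's `create_invalidation` method along with the distribution ID. The sketch below only builds that structure and estimates billable paths, so it runs without AWS access; the example paths and caller reference are hypothetical.

```python
import time


def build_invalidation_batch(paths, caller_reference=None):
    """Build an InvalidationBatch: normalized, de-duplicated paths plus a unique reference."""
    normalized = []
    for p in paths:
        p = p if p.startswith("/") else "/" + p  # paths must begin with '/'
        if p not in normalized:
            normalized.append(p)
    return {
        "Paths": {"Quantity": len(normalized), "Items": normalized},
        # A unique reference prevents the same request from being processed twice.
        "CallerReference": caller_reference or str(time.time()),
    }


def billable_paths(paths_this_month: int, free_paths: int = 1000) -> int:
    """Paths beyond the monthly free allowance; a wildcard path counts as one path."""
    return max(0, paths_this_month - free_paths)


batch = build_invalidation_batch(["index.html", "/images/*", "/index.html"],
                                 caller_reference="deploy-42")
print(batch)                 # {'Paths': {'Quantity': 2, 'Items': ['/index.html', '/images/*']}, 'CallerReference': 'deploy-42'}
print(billable_paths(1200))  # 200
```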

78. What are CloudFront Signed URLs and Signed Cookies?

Ans:

CloudFront signed URLs and signed cookies are security controls that restrict who can access content served through CloudFront. A signed URL includes an expiration time and a cryptographic signature, granting temporary access to a specific file. Signed cookies, by contrast, grant access to multiple restricted files (for example, all files in a subscribers' area) with a single set of cookies, without changing the URLs.
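
A signed URL with a canned policy is built by serializing a compact JSON policy (resource plus expiry), signing it with the private key of a CloudFront key pair, and appending `Expires`, `Signature`, and `Key-Pair-Id` to the URL using CloudFront's URL-safe Base64 variant. In the sketch below the RSA signing step is a caller-supplied function (hypothetical, since it needs a crypto library and a real key), and the URL and key ID are placeholders.

```python
import base64
import json
from urllib.parse import urlencode


def cloudfront_b64(data: bytes) -> str:
    """CloudFront's URL-safe Base64 variant: '+' -> '-', '=' -> '_', '/' -> '~'."""
    encoded = base64.b64encode(data).decode("ascii")
    return encoded.replace("+", "-").replace("=", "_").replace("/", "~")


def canned_policy(url: str, expires_epoch: int) -> str:
    policy = {"Statement": [{"Resource": url,
                             "Condition": {"DateLessThan": {"AWS:EpochTime": expires_epoch}}}]}
    return json.dumps(policy, separators=(",", ":"))  # compact JSON, no whitespace


def signed_url(url: str, expires_epoch: int, key_pair_id: str, sign) -> str:
    """Append canned-policy signing parameters to url.

    `sign` is a hypothetical caller-supplied function that signs bytes with the
    private key for key_pair_id (CloudFront's documented scheme uses RSA with SHA-1).
    """
    signature = sign(canned_policy(url, expires_epoch).encode("utf-8"))
    query = urlencode({"Expires": expires_epoch,
                       "Signature": cloudfront_b64(signature),
                       "Key-Pair-Id": key_pair_id})
    separator = "&" if "?" in url else "?"
    return f"{url}{separator}{query}"


def fake_sign(data: bytes) -> bytes:
    """Placeholder signer for local testing only; it does not sign anything."""
    return b"\xfb\xff\xfe"


print(signed_url("https://d111111abcdef8.cloudfront.net/video.mp4",
                 1767225600, "EXAMPLEKEYID", fake_sign))
# https://d111111abcdef8.cloudfront.net/video.mp4?Expires=1767225600&Signature=-~~-&Key-Pair-Id=EXAMPLEKEYID
```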

79. What is Lambda@Edge, and how does it enhance CloudFront’s capabilities?

Ans:

Lambda@Edge extends AWS Lambda to CloudFront, letting users run custom code at edge locations in response to CloudFront events: viewer request, origin request, origin response, and viewer response. This makes it possible to modify requests and responses in real time, for example to generate dynamic content or authenticate users, and because the code runs close to the viewer, latency stays low.
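
The authentication use case can be sketched as a viewer-request handler that either passes the request through or answers directly from the edge with a 401 response object (`status`, `statusDescription`, `headers`, `body`). The static token is a hypothetical placeholder; real code would validate a signed token such as a JWT instead of comparing a fixed string.

```python
import hmac

EXPECTED_TOKEN = "example-secret-token"  # hypothetical; real code would verify a signed token


def handler(event, context):
    """Viewer-request sketch: reject requests that lack the expected bearer token."""
    request = event["Records"][0]["cf"]["request"]
    headers = request.get("headers", {})
    auth = headers.get("authorization", [{}])[0].get("value", "")
    token = auth[len("Bearer "):] if auth.startswith("Bearer ") else ""
    if token and hmac.compare_digest(token.encode(), EXPECTED_TOKEN.encode()):
        return request  # forward the request to the cache/origin unchanged
    # Returning a response object makes CloudFront answer directly from the edge.
    return {
        "status": "401",
        "statusDescription": "Unauthorized",
        "headers": {"www-authenticate": [{"key": "WWW-Authenticate", "value": "Bearer"}]},
        "body": "Unauthorized",
    }


def make_event(headers):
    """Minimal viewer-request event for local testing."""
    return {"Records": [{"cf": {"request": {"uri": "/private/report.pdf", "headers": headers}}}]}


ok = make_event({"authorization": [{"key": "Authorization",
                                    "value": "Bearer example-secret-token"}]})
print(handler(ok, None)["uri"])                 # /private/report.pdf
print(handler(make_event({}), None)["status"])  # 401
```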

80. How does CloudFront handle SSL/TLS certificates?

Ans:

- CloudFront supports HTTPS to encrypt data in transit between viewers and edge locations, and it can also use HTTPS between edge locations and the origin. Users can provision certificates through AWS Certificate Manager (ACM), which requires the certificate to be in the US East (N. Virginia) Region for use with CloudFront, or import their own custom certificates.

- When setting up a distribution, users can configure the viewer protocol policy to redirect HTTP requests to HTTPS or to accept HTTPS only, ensuring secure delivery of content.

- CloudFront negotiates TLS connections at the edge and supports HTTP/2 for better performance, so data is protected in transit while the user experience remains smooth.

81. What is Amazon CloudWatch, and how is it used for monitoring?

Ans:

- Amazon CloudWatch is a monitoring and observability service that provides insight into how applications and infrastructure perform.

- It collects metrics, logs, and events from various AWS resources for real-time monitoring of system health.

- Using CloudWatch, users can build custom dashboards, set up alarms for specific thresholds, and visualize data trends over time.

- CloudWatch is essential for optimizing resource utilization, troubleshooting, and ensuring application reliability.

82. How do you create custom CloudWatch metrics?

Ans:

- Custom CloudWatch metrics can be published using the AWS CLI or AWS SDKs (through the PutMetricData API), with the CloudWatch agent, or by writing logs in the CloudWatch embedded metric format.

- To publish a metric, users supply a namespace, a metric name, and the data points, optionally with dimensions and a unit.

- This enables monitoring of application-specific performance measures, such as request volumes and error rates.

- Once published, custom metrics can be viewed on CloudWatch dashboards and used to trigger alarms when thresholds are crossed.

- This allows monitoring to be tailored closely to an application's requirements.
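
The embedded metric format (EMF) publishes custom metrics through logs: a JSON log line carries an `_aws` metadata block describing the namespace, dimensions, and metrics, plus the dimension and metric values as top-level fields. Printed from a Lambda function, such a line goes to CloudWatch Logs and CloudWatch extracts the metric. The namespace, metric, and dimension names below are hypothetical.

```python
import json
import time


def emf_record(namespace: str, metric_name: str, value: float, unit: str,
               dimensions: dict) -> str:
    """Build one CloudWatch embedded metric format (EMF) log line."""
    record = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since the epoch
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [list(dimensions.keys())],
                "Metrics": [{"Name": metric_name, "Unit": unit}],
            }],
        },
        **dimensions,         # dimension values as top-level fields
        metric_name: value,   # the metric value itself
    }
    return json.dumps(record)


print(emf_record("MyApp", "OrderLatency", 87.5, "Milliseconds", {"Service": "checkout"}))
```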

83. What is AWS CloudTrail, and how does it monitor activity?

Ans:

AWS CloudTrail supports governance, compliance, and auditing by recording user and API activity in AWS accounts. Each recorded event includes details such as the caller's identity, the source IP address, the time of the request, and the action performed. CloudTrail keeps 90 days of management events in its event history, and trails can deliver log files to Amazon S3 for long-term storage and analysis. This visibility into who did what, and when, is valuable for security analysis, compliance audits, and forensic investigations.
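
CloudTrail log files are JSON documents containing a `Records` array, where each record has fields such as `eventTime`, `eventSource`, `eventName`, `sourceIPAddress`, and `userIdentity`. The sketch below filters a log file for specific actions and summarizes who did what, when, and from where; the sample record is made up for illustration.

```python
import json


def find_events(log_file_json: str, event_names: set) -> list:
    """Summarize matching events from a CloudTrail log file."""
    records = json.loads(log_file_json).get("Records", [])
    matches = []
    for r in records:
        if r.get("eventName") in event_names:
            matches.append({
                "time": r.get("eventTime"),
                "who": r.get("userIdentity", {}).get("arn"),
                "action": f'{r.get("eventSource")}:{r.get("eventName")}',
                "source_ip": r.get("sourceIPAddress"),
            })
    return matches


sample_log = json.dumps({"Records": [
    {"eventTime": "2024-05-01T12:00:00Z", "eventSource": "ec2.amazonaws.com",
     "eventName": "TerminateInstances", "sourceIPAddress": "203.0.113.10",
     "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::111122223333:user/alice"}},
    {"eventTime": "2024-05-01T12:05:00Z", "eventSource": "ec2.amazonaws.com",
     "eventName": "DescribeInstances", "sourceIPAddress": "203.0.113.10",
     "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::111122223333:user/alice"}},
]})

for event in find_events(sample_log, {"TerminateInstances"}):
    print(event)
```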

84. What is the difference between CloudWatch and CloudTrail?

Ans:

CloudWatch and CloudTrail serve different purposes. CloudWatch monitors the performance of resources and applications by collecting metrics and logs, giving insight into operational health. CloudTrail records user activity and API calls, providing an audit trail of actions performed in an AWS account. In short, CloudWatch focuses on real-time monitoring and alerting, while CloudTrail is essential for governance and accountability across AWS resources.

85. How do you set up CloudWatch alarms for EC2 instances?

Ans:

Using the CloudWatch console or CLI, a user selects the metric to monitor, such as CPUUtilization for an instance, and chooses a statistic (for example, Average) and a period. They then set the threshold and comparison operator, the number of evaluation periods considered before the alarm changes state, and the actions to take when the alarm enters the ALARM state, such as sending an Amazon SNS notification or stopping, rebooting, or recovering the instance. This proactive management helps teams respond quickly to performance issues.
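
CloudWatch can require M breaching datapoints out of the last N evaluation periods before raising an alarm (the `--datapoints-to-alarm` and `--evaluation-periods` options of `aws cloudwatch put-metric-alarm`). The sketch below is a simplified local model of that rule using CloudWatch's state names; it ignores missing-data handling and supports only two comparison operators, and the CPU values are hypothetical.

```python
def alarm_state(datapoints, threshold, evaluation_periods, datapoints_to_alarm,
                comparison="GreaterThanThreshold"):
    """Simplified 'M out of N' evaluation over the most recent N datapoints."""
    recent = datapoints[-evaluation_periods:]
    if len(recent) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    if comparison == "GreaterThanThreshold":
        breaching = sum(1 for v in recent if v > threshold)
    elif comparison == "GreaterThanOrEqualToThreshold":
        breaching = sum(1 for v in recent if v >= threshold)
    else:
        raise ValueError(f"unsupported comparison: {comparison}")
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


cpu = [40, 85, 90, 60, 88]  # hypothetical average CPU % per 5-minute period
print(alarm_state(cpu, 80, evaluation_periods=5, datapoints_to_alarm=3))  # ALARM
print(alarm_state(cpu, 80, evaluation_periods=5, datapoints_to_alarm=4))  # OK
print(alarm_state([90], 80, evaluation_periods=3, datapoints_to_alarm=2))  # INSUFFICIENT_DATA
```

Requiring several breaching datapoints avoids alarms on a single short spike.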

86. What is AWS Config, and how can it help manage resources?

Ans:

- AWS Config gives visibility into AWS resource configurations, compliance checks, and change management in an AWS environment.

- It continuously records and monitors the configurations of AWS resources, and with AWS Config rules users can evaluate whether those resources comply with desired configurations and policies.

- AWS Config keeps a history of changes to resources over time, which simplifies identifying and resolving configuration drift.

- This visibility helps maintain security best practices and meet regulatory requirements.

87. What is AWS Trusted Advisor, and what are its benefits?

Ans:

- AWS Trusted Advisor is an online service that provides guidance for optimizing an AWS environment according to best practices.

- It gives recommendations in categories such as cost optimization, performance, security, fault tolerance, and service limits.

- It inspects resource configurations and usage patterns to identify opportunities for cost savings, performance improvements, and security enhancements.

- Reviewing these recommendations regularly leads to more efficient use of AWS and better resource management.

88. How can AWS Systems Manager be used to manage EC2 instances?

Ans:

- AWS Systems Manager provides a single interface for managing EC2 instances, other AWS resources, and on-premises servers. Managed instances run the SSM Agent and need an IAM role that grants Systems Manager permissions.

- It automates operational tasks such as patching and software installation through features like Patch Manager, Run Command, and State Manager.

- Session Manager provides secure shell access to instances without opening inbound ports or managing SSH keys.

- Centralized management reduces the complexity of operating EC2 instances and improves operational efficiency.

89. What is AWS Control Tower, and how does it simplify multi-account management?

Ans:

AWS Control Tower simplifies setting up and governing a secure multi-account AWS environment. Built on AWS Organizations, it creates a landing zone with preconfigured governance controls (guardrails), lets users provision new accounts that inherit those controls through Account Factory, and provides a dashboard showing compliance status across the organization. As a result, AWS environments can grow efficiently and at scale while staying compliant with organizational policies.

90. How does AWS OpsWorks automatically provision resources?

Ans:

AWS OpsWorks is a configuration management service that provisions and configures resources using Chef or Puppet. Users define application stacks, layers, and instances, and OpsWorks uses these definitions to configure servers consistently and deploy applications. This improves operational efficiency, freeing teams from manual infrastructure management so they can spend more time developing applications. Note that AWS has ended support for OpsWorks (end of life in 2024), so new projects typically use alternatives such as AWS Systems Manager.