These AWS DevOps interview questions are designed to acquaint you with the types of questions you may encounter during an AWS DevOps interview. Good interviewers, in my experience, rarely plan to ask any particular question during an interview. In most cases, they start with a basic notion of the topic and then develop it based on further discussion and your responses. The top 100 AWS DevOps interview questions are answered here in detail, covering scenario-based questions, questions for newcomers, and questions for experienced professionals.

1. What is AWS in DevOps?

Ans:

AWS is Amazon’s cloud service platform, which lets users carry out DevOps practices easily. The tools it provides help automate manual tasks, thereby assisting teams in managing complex environments and helping engineers work efficiently at the high velocity that DevOps enables.

2. DevOps and cloud computing: What is the need?

Ans:

Development and Operations are considered to be a single entity in DevOps practice. This means that any form of Agile development, alongside cloud computing, gains a clear advantage in scaling practices and in creating strategies that improve business adaptability. If the cloud is considered to be a car, then DevOps would be its wheels.

3. Why use AWS for DevOps?

Ans:

- AWS is a ready-to-use service that does not need any upfront software installation or setup to get started.

- Be it one instance or scaling up to hundreds at a time, AWS provisions computational resources on demand.

- The pay-as-you-go policy of AWS keeps pricing and budgets in check, so that you can mobilize enough resources and get a matching return on investment.

4. What does a DevOps Engineer do?

Ans:

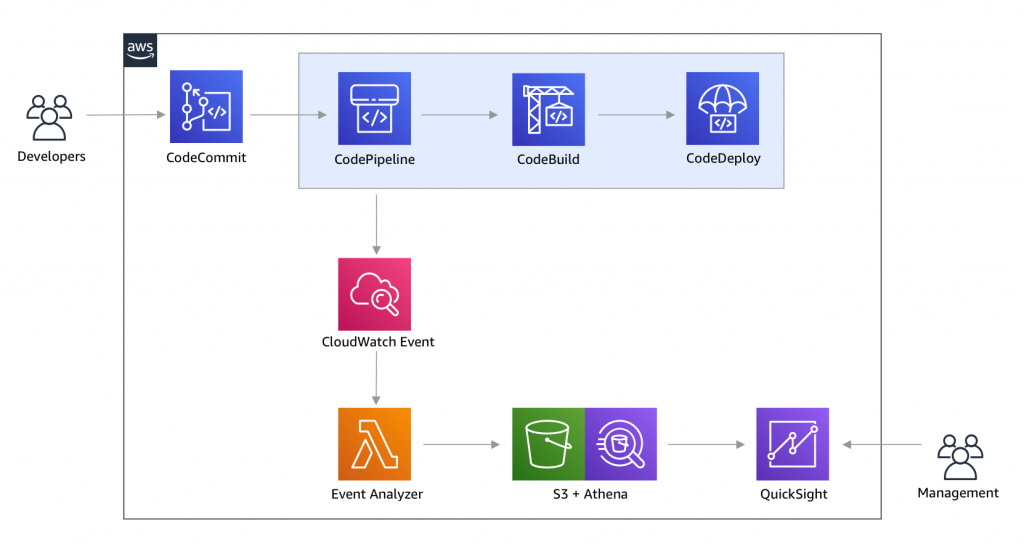

A DevOps Engineer is responsible for managing the IT infrastructure of an organization based on the direct requirements of the software code, in an environment that is both hybrid and multi-faceted. Provisioning and designing appropriate deployment models, alongside validation and performance monitoring, are key responsibilities of a DevOps Engineer.

5. What is CodePipeline in AWS DevOps?

Ans:

CodePipeline is a service offered by AWS to provide continuous integration and continuous delivery. Alongside this, it has provisions for infrastructure updates as well. Operations like building, testing, and deploying after every single build become very easy with release model protocols that are defined by the user. CodePipeline ensures that you can reliably deliver new software updates and features rapidly.

6. What is CodeBuild in AWS DevOps?

Ans:

AWS provides CodeBuild, a fully managed build service that compiles source code, runs tests, and produces software packages that are ready to deploy. There is no need to manage, allocate, or provision build servers, as scaling is automatic. Builds run concurrently, which provides the biggest advantage: no builds are left waiting in a queue.

7. What is CodeDeploy in AWS DevOps?

Ans:

CodeDeploy is a service that automates the process of deploying code to any instances, be it local servers or Amazon EC2 instances. It mainly helps in handling the complexity involved in updating applications for release. The direct advantage of CodeDeploy is that it helps users rapidly release new builds and features while avoiding downtime during deployment.

8. What is CodeStar in AWS DevOps?

Ans:

CodeStar is a single package that covers many tasks, ranging from development and build operations to provisioning deployment methodologies for users on AWS. A single easy-to-use interface helps users manage all of the activities involved in software development. One noteworthy highlight is that it helps immensely in setting up a continuous delivery pipeline, thereby allowing developers to release code into production rapidly.

9. How can you handle continuous integration and deployment in AWS DevOps?

Ans:

One must use the AWS Developer Tools to get started with storing and versioning an application’s source code. This is followed by using services to automatically build, test, and deploy the application to a local environment or to AWS instances. It is advantageous to start with CodePipeline to build the continuous integration and deployment services, and later on use CodeBuild and CodeDeploy as needed.

10. How can a company like Amazon.com make use of AWS DevOps?

Ans:

Be it Amazon or any eCommerce site, such companies are mostly concerned with automating all of their frontend and backend activities in a seamless manner. When paired with CodeDeploy, this can be achieved simply, thereby helping developers focus on building the product rather than on deployment methodologies.

11. Name an example of making use of AWS DevOps effectively.

Ans:

With AWS, users are provided with a plethora of services. Based on the requirement, these services can be put to use effectively. For example, one can use a variety of services to build an environment that automatically builds and delivers AWS artifacts. These artifacts can later be pushed to Amazon S3 using CodePipeline. At this point, the options add up and give users many opportunities to deploy their artifacts: they can be deployed using Elastic Beanstalk or to a local environment, as required.

12. What is Amazon Elastic Container Service (ECS) in AWS DevOps?

Ans:

Amazon ECS is a high-performance, highly scalable container management service that is easy to use. It integrates easily with Docker containers, thereby allowing users to run applications on EC2 instances using a managed cluster.

13. What is AWS Lambda in AWS DevOps?

Ans:

AWS Lambda is a compute service that lets users run their code without having to provision or manage servers explicitly. Using AWS Lambda, users can run any piece of code for applications or services without prior integration. It is as simple as uploading a piece of code and letting Lambda take care of everything else required to run and scale it.

14. What is AWS CodeCommit in AWS DevOps?

Ans:

CodeCommit is a source control service provided by AWS that helps in hosting Git repositories safely and in a highly scalable manner. Using CodeCommit, one can eliminate the need to set up and maintain a source control system and to scale its infrastructure as needed.

15. Explain Amazon EC2.

Ans:

Amazon EC2, or Elastic Compute Cloud, is a secure web service that provides scalable computation power in the cloud. It is an integral part of AWS and one of the most used cloud computation services, helping developers by making cloud computing straightforward and easy.

16. What is Amazon S3 in AWS DevOps?

Ans:

Amazon S3, or Simple Storage Service, is an object storage service that provides users with a simple and easy-to-use interface to store data and retrieve it effectively whenever and wherever required.

17. What is the function of Amazon RDS in AWS DevOps?

Ans:

Amazon Relational Database Service (RDS) is a service that helps users set up a relational database in the AWS cloud architecture. RDS makes it simple to set up, maintain, and use the database online.

18. How is CodeBuild used to automate the release process?

Ans:

The release process can easily be set up and configured by first setting up CodeBuild and integrating it directly with AWS CodePipeline. This ensures that build actions can be added continuously, and thus AWS takes care of the continuous integration and continuous deployment processes.

19. Explain a build project.

Ans:

A build project is an entity whose primary function is to give CodeBuild the definition it needs to run a build. This can include a variety of information, such as:

- The location of source code

- The appropriate build environment

- What build commands to run

- The location to store the output

20. How is a build project configured in AWS DevOps?

Ans:

A build project is configured easily using the AWS CLI (Command Line Interface). Here, users can specify the above-mentioned information, along with the computation class that is required to run the build, and more. The process is made straightforward and simple in AWS.
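To make the shape of a build project concrete, here is a minimal Python sketch of the information it carries. The `BuildProject` class, its field names, and all sample values are hypothetical; they only mirror the items listed in the previous answers and are not the actual schema used by the AWS CLI or CodeBuild.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BuildProject:
    """Hypothetical, simplified model of a build project definition."""
    name: str
    source_location: str        # where the source code lives
    environment_image: str      # build environment to use
    compute_class: str          # computation class required to run the build
    artifact_location: str      # where the output is stored
    build_commands: List[str] = field(default_factory=list)

    def missing_fields(self) -> List[str]:
        """Return the names of settings that are still empty."""
        missing = [
            key for key in ("name", "source_location", "environment_image",
                            "compute_class", "artifact_location")
            if not getattr(self, key)
        ]
        if not self.build_commands:
            missing.append("build_commands")
        return missing


project = BuildProject(
    name="demo-build",                            # hypothetical name
    source_location="example-repo",               # hypothetical repository
    environment_image="example-python-image",     # hypothetical image
    compute_class="small",                        # hypothetical class label
    artifact_location="example-artifact-bucket",  # hypothetical bucket
    build_commands=["pip install -r requirements.txt", "pytest"],
)
print(project.missing_fields())  # an empty list means the definition is complete
```

Checking the definition before submitting it is one way to catch an incomplete project early; the real CLI performs its own validation.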

21. Which source repositories can be used with CodeBuild in AWS DevOps?

Ans:

AWS CodeBuild can easily connect with:

- AWS CodeCommit

- GitHub

- Amazon S3

22. Which programming frameworks can be used with AWS CodeBuild?

Ans:

AWS CodeBuild provides ready-made environments for Python, Ruby, Java, Android, Docker, Node.js, and Go. A custom environment can also be set up by creating a Docker image and pushing it to the Amazon EC2 Container Registry or the Docker Hub registry. The image is then referenced in the user’s build project.

23. Explain the build process using CodeBuild in AWS DevOps.

Ans:

- First, CodeBuild establishes a temporary container used for computing, based on the compute class defined for the build project.

- Second, it loads the required runtime and pulls the source code into that container.

- After this, the commands configured in the project are executed.

- Next, the generated artifacts are uploaded and put into an S3 bucket.

- At this point, the compute container is no longer needed, so it is discarded.

- Throughout the build, CodeBuild publishes the logs and outputs to CloudWatch Logs for users to monitor.

24. Can AWS CodeBuild be used with Jenkins in AWS DevOps?

Ans:

Yes, AWS CodeBuild can integrate with Jenkins easily to perform and run jobs in Jenkins. Build jobs are pushed to CodeBuild and executed, thereby eliminating the entire procedure involved in creating and individually controlling the worker nodes in Jenkins.

25. How can one view previous build results in AWS CodeBuild?

Ans:

It is simple to view previous build results in CodeBuild. This can be done either through the console or by making use of the API. The results include the following:

- Outcome (success/failure)

- Build duration

- Output artifact location

- Output log (and the corresponding location)

26. Are there any third-party integrations that can be used with AWS CodeStar?

Ans:

Yes, AWS CodeStar works well with Atlassian JIRA, a software development tool used by Agile teams. It can be integrated with projects seamlessly and managed from there.

27. Can AWS CodeStar be used to manage an existing AWS application?

Ans:

No, AWS CodeStar can only help users set up new software projects on AWS. Each CodeStar project includes the development tools CodePipeline, CodeCommit, CodeBuild, and CodeDeploy.

28. Why is AWS DevOps so important today?

Ans:

With new businesses coming into existence every day and the expansion of the Internet, everything from entertainment to banking has moved to the cloud. Most companies are using systems completely hosted in the cloud, which can be accessed from a variety of devices. AWS DevOps is integral in helping developers transform the way they build and deliver new software in the fastest and most effective way possible.

29. What are Microservices in AWS DevOps?

Ans:

Microservice architectures are a design approach in which a single application is built as a set of services. Each of these services runs in its own process and communicates with the other services through a structured interface that is both lightweight and easy to use. This communication is mostly based on HTTP and API requests.

30. What is CloudFormation in AWS DevOps?

Ans:

AWS CloudFormation is one of the important services that give developers and businesses a simple way to create a collection of the AWS resources they require and then pass it on to the relevant teams in a structured manner.

31. What is VPC in AWS DevOps?

Ans:

A VPC (Virtual Private Cloud) is a cloud network that is mapped to an AWS account. It is one of the first building blocks of AWS infrastructure and helps users define subnets, routing tables, and even Internet gateways within a region in their AWS accounts. Doing this gives users the ability to use EC2 or RDS as required.

32. What is AWS IoT in AWS DevOps?

Ans:

AWS IoT refers to a managed cloud platform that enables connected devices to interact securely and smoothly with cloud applications.

33. What is EBS in AWS DevOps?

Ans:

EBS, or Elastic Block Store, is a virtual storage area network in AWS. EBS provides block-level storage volumes, which are used with EC2 instances. AWS EBS is compatible with other instances and is a reliable way of storing data.

34. What does AMI stand for?

Ans:

- AMI stands for Amazon Machine Image, which is a snapshot of the root file system.

- It contains all of the information needed to launch a server in the cloud.

- It consists of all of the templates and permissions needed to control the cloud accounts.

35. Why is a buffer used in AWS DevOps?

Ans:

A buffer is used in AWS to synchronize the different components that handle incoming traffic. With a buffer, it becomes simpler to balance the incoming traffic rate against the usage of the pipeline, thereby ensuring unbroken packet delivery under all conditions across the cloud platform.

36. What is the biggest advantage of adopting the AWS DevOps model?

Ans:

The main advantage that every business can leverage is maintaining high process efficiency while keeping costs as low as possible. With AWS DevOps, this can be achieved easily. From giving a quick overview of how the work culture functions to helping teams work well together, the model is advantageous throughout. Bringing development and operations together, setting up a structured pipeline for them to work in, and providing them with a variety of tools and services is reflected in the quality of the product and helps in serving customers better.

37. What is Infrastructure as Code (IaC)?

Ans:

| Term | Definition | Key Aspects |
| --- | --- | --- |
| Infrastructure as Code (IaC) | Managing computing infrastructure through code for automation, version control, and reproducibility. | Automation: consistent and repeatable provisioning. Version control: track changes and collaborate. Reproducibility: easily replicate environments. |
| Objective | Treat infrastructure like software code for automation, versioning, and reproducibility. | Scalability: easily scale infrastructure. Consistency: ensure uniformity across environments. Efficiency: reduce manual errors through automation. |
| Tools and Languages | Terraform, Ansible, Puppet, Chef, YAML, JSON, etc. | Use cases: cloud provisioning, configuration management, CI/CD integration. |

38. What are the challenges when creating a DevOps pipeline?

Ans:

There are a number of challenges that occur with DevOps in this era of rapid technological change. Most commonly, they have to do with data migration techniques and with implementing new features easily. If a data migration does not work, the system can be left in an unstable state, and this can lead to issues further down the pipeline. However, this can be solved within the CI environment by making use of a feature flag, which helps with incremental product releases. This, alongside rollback functionality, can help mitigate some of the challenges.
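As an illustration of the feature-flag idea, the following Python sketch rolls a new code path out to a percentage of users and rolls it back by setting the percentage to zero. The flag name `new_storage_path`, the user IDs, and the helper functions are all hypothetical; real projects usually rely on a dedicated flag service rather than an in-memory dictionary.

```python
import hashlib


def bucket_for(user_id: str) -> int:
    """Map a user to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flags: dict, flag_name: str, user_id: str) -> bool:
    """Return True if the flag is rolled out to this user."""
    rollout_percent = flags.get(flag_name, 0)  # unknown flags stay off
    return bucket_for(user_id) < rollout_percent


def load_records(flags: dict, user_id: str) -> str:
    """Pick the migrated or the legacy code path based on the flag."""
    if is_enabled(flags, "new_storage_path", user_id):
        return "new storage path"
    return "old storage path"


flags = {"new_storage_path": 25}          # incremental release to about 25% of users
print(load_records(flags, "user-1"))

flags["new_storage_path"] = 0             # rollback: everyone uses the old path again
print(load_records(flags, "user-1"))
```

Because the bucket is derived from a hash of the user ID, a given user sees the same behavior on every request while the rollout percentage stays fixed.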

39. What is a hybrid cloud in AWS DevOps?

Ans:

A hybrid cloud refers to a computing setup that uses a combination of private and public clouds. Hybrid clouds can be created using a VPN tunnel between the cloud VPN and the on-premises network. Also, AWS Direct Connect can bypass the public Internet and connect securely between the VPN and the data center.

40. How is AWS Elastic Beanstalk different from CloudFormation?

Ans:

Elastic Beanstalk and CloudFormation are among the important services in AWS. They are designed so that they can collaborate with each other easily. Elastic Beanstalk provides an environment where applications can be deployed in the cloud. This is integrated with tools from CloudFormation to help manage the lifecycle of the applications, which makes it more convenient to use a variety of AWS resources. The combination scales to a variety of applications, from legacy applications to container-based solutions.

41. What is Amazon QuickSight in AWS DevOps?

Ans:

Amazon QuickSight is a business analytics service in AWS that provides an easy way to build visualizations, perform analysis, and derive business insights from the results. It is a fast, fully cloud-powered service, giving users ample opportunity to explore and use it.

42. How do Kubernetes containers communicate in AWS DevOps?

Ans:

An entity called a pod is used to group containers in Kubernetes. One pod can contain more than one container at a time. Due to the flat network hierarchy of pods, communication between pods in an overlay network becomes straightforward.

43. How is it beneficial to make use of version control?

Ans:

- Version control establishes an easy way to compare files, identify differences, and merge any changes that are made.

- It creates a simple way to track the life cycle of an application build, including every stage in it, such as development, testing, and production.

- It brings about a good way to establish a collaborative work culture.

44. What skills should a successful AWS DevOps specialist possess?

Ans:

- Working knowledge of the SDLC

- AWS Architecture

- Database Services

- Virtual Private Cloud

- AWS IAM and Monitoring

- Configuration Management

- Application Services, AWS Lambda, and CLI

45. How is IaC implemented using AWS?

Ans:

Start by talking about the age-old mechanism of writing commands into script files and testing them in a separate environment before deployment, and how this approach is being replaced by IaC. Similar to the code written for other services, IaC on AWS allows developers to write, test, and maintain infrastructure entities in a descriptive manner, using formats such as JSON or YAML. This enables easier development and faster deployment of infrastructure changes.
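As a small illustration of describing infrastructure in JSON, the Python sketch below builds a CloudFormation-style template for a single S3 bucket and prints it. The function name `bucket_template` and the logical ID `ExampleBucket` are made up for this example, and the layout reflects my understanding of the template format, so check it against the official reference before relying on it.

```python
import json


def bucket_template(logical_id: str, versioned: bool) -> dict:
    """Build a minimal template describing one S3 bucket (illustrative only)."""
    resource = {"Type": "AWS::S3::Bucket"}
    if versioned:
        resource["Properties"] = {
            "VersioningConfiguration": {"Status": "Enabled"}
        }
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Example bucket described as code",
        "Resources": {logical_id: resource},
    }


# The template is plain data, so it can be reviewed, diffed, and versioned like code.
template = bucket_template("ExampleBucket", versioned=True)
print(json.dumps(template, indent=2, sort_keys=True))
```

Because the description is ordinary data, the same function can produce identical environments repeatedly, which is the reproducibility benefit usually attributed to IaC.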

46. What is the difference between scalability and elasticity?

Ans:

Scalability is the ability of a system to increase its hardware resources to handle an increase in demand. It can be done by increasing the hardware specifications or by increasing the number of processing nodes.

Elasticity is the ability of a system to handle an increase in workload by adding hardware resources when demand increases (the same as scaling) but also by rolling back the scaled resources when they are no longer needed. This is particularly helpful in cloud environments, where a pay-per-use model is followed.
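The difference can be sketched with a toy simulation in Python. The load figures and the per-node capacity are invented for illustration; the point is only that the elastic policy releases nodes when demand drops, while the scale-up-only policy keeps them.

```python
import math


def nodes_needed(load: int, capacity_per_node: int) -> int:
    """Smallest number of nodes that can serve the load (at least one)."""
    return max(1, math.ceil(load / capacity_per_node))


def scale_up_only(loads, capacity_per_node):
    """Scalable but not elastic: nodes are added on demand and never released."""
    nodes = 1
    history = []
    for load in loads:
        nodes = max(nodes, nodes_needed(load, capacity_per_node))
        history.append(nodes)
    return history


def elastic(loads, capacity_per_node):
    """Elastic: capacity follows demand both up and down."""
    return [nodes_needed(load, capacity_per_node) for load in loads]


hourly_load = [50, 250, 400, 120, 30]   # made-up requests per hour
print(scale_up_only(hourly_load, 100))  # [1, 3, 4, 4, 4]
print(elastic(hourly_load, 100))        # [1, 3, 4, 2, 1]
```

Summing the two lists gives 16 node-hours against 11, which is where the pay-per-use saving comes from in this example.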

47. How are Amazon RDS, DynamoDB, and Redshift different?

Ans:

Amazon RDS is a database management service for relational databases; it manages patching, upgrading, backing up of data, and so on, without your intervention. RDS is a database management service for structured data only. DynamoDB, on the other hand, is a NoSQL database service; NoSQL deals with unstructured data. Redshift is an entirely different service: it is a data warehouse product and is used in data analysis.

48. How can you speed up data transfer in Snowball?

Ans:

- Perform multiple copy operations at one time: if the workstation is powerful enough, you can initiate multiple cp commands, each from a different terminal, on the same Snowball device.

- Copy from multiple workstations to the same Snowball.

- Transfer large files, or batch small files together; this reduces the encryption overhead.

- Eliminate unnecessary hops: keep the source machines and the Snowball as the only machines active on the switch being used, which can hugely improve performance.

49. Explain stopping, starting, and terminating an Amazon EC2 instance.

Ans:

Stopping and starting an instance: when an instance is stopped, it performs a normal shutdown and then transitions to a stopped state. All of its Amazon EBS volumes remain attached, and you can start the instance again at a later time. You are not charged for additional instance hours while the instance is in the stopped state.

Terminating an instance: when an instance is terminated, it performs a normal shutdown, and then the attached Amazon EBS volumes are deleted unless the volume’s deleteOnTermination attribute is set to false. The instance itself is also deleted, and you cannot start it again at a later time.

50. What is the importance of a buffer in Amazon Web Services?

Ans:

A buffer synchronizes different components and makes the arrangement more elastic to a burst of load or traffic. Without it, components tend to receive and process requests at unstable, uneven rates. The buffer creates an equilibrium between the various components and makes them work at the same rate, so that services are delivered faster.
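The smoothing effect of a buffer can be shown with a short Python simulation. It is a sketch under simple assumptions: requests arrive in bursts per time tick, a worker drains a fixed number per tick, and an in-memory `deque` stands in for a managed queue. The function name `simulate` and the numbers are illustrative.

```python
from collections import deque


def simulate(arrivals, process_per_tick):
    """Feed bursts of requests through a buffer drained at a fixed rate.

    Returns the number of requests processed on each tick.
    """
    buffer = deque()
    processed = []
    next_id = 0

    def drain():
        done = 0
        while buffer and done < process_per_tick:
            buffer.popleft()
            done += 1
        processed.append(done)

    for count in arrivals:
        for _ in range(count):       # a burst lands in the buffer first
            buffer.append(next_id)
            next_id += 1
        drain()
    while buffer:                    # keep draining after arrivals stop
        drain()
    return processed


print(simulate([8, 0, 0, 1, 0], process_per_tick=3))  # [3, 3, 2, 1, 0]
```

The burst of eight requests never reaches the worker at once; it is served at no more than three per tick, and nothing is dropped.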

51. What are the components involved in Amazon Web Services?

Ans:

There are four components involved, as listed below:

- Amazon S3: stores and retrieves, by key, the information used in creating the cloud architecture; the data produced as a result can also be stored in this component under the specified key.

- Amazon EC2: helpful for running a large distributed system, such as a Hadoop cluster.

- Amazon SQS: acts as a mediator between different controllers. It is also used to buffer the requests received by the manager.

- Amazon SimpleDB: helps in storing the transitional state logs and the tasks executed by consumers.

52. Which automation tools can help with spinning up services?

Ans:

API tools can be used to spin up services, as can custom scripts. Those scripts could be written in Perl, Bash, or another language of your preference. Another option is to use configuration management and provisioning tools such as Puppet or one of its improved descendants. A tool called Scalr can also be used, and finally, you can go with a managed solution like RightScale.

53. Explain when Memcached should not be used.

Ans:

- A common misuse of Memcached is to use it as a data store rather than as a cache. Never use Memcached as the only source of the information you need to run your application; the data should always be available through another source as well.

- Memcached is just a key-value store; it cannot run queries over the data or iterate over the contents to extract information.

- Memcached does not offer any form of security by default, either encryption or authentication.
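The first point, treating the cache as disposable, can be sketched as a cache-aside lookup in Python. A plain dictionary stands in for Memcached here, and the `CacheAside` class and the sample data are hypothetical; no real Memcached client is used.

```python
class CacheAside:
    """Cache-aside lookup: the cache speeds reads up but is never the only copy."""

    def __init__(self, load_from_source):
        self._cache = {}                  # stand-in for Memcached
        self._load_from_source = load_from_source

    def get(self, key):
        if key in self._cache:            # cache hit
            return self._cache[key]
        value = self._load_from_source(key)   # cache miss: go to the real store
        self._cache[key] = value
        return value

    def flush(self):
        """Simulate the cache being restarted or evicting everything."""
        self._cache.clear()


database = {"user:1": "Ada"}              # hypothetical system of record
users = CacheAside(database.__getitem__)

print(users.get("user:1"))                # loaded from the database, then cached
users.flush()
print(users.get("user:1"))                # still available after the cache is lost
```

Losing the cache only costs a slower read, which is the property that disappears when the cache is used as the data store.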

54. What is the dogpile effect? How can you prevent it?

Ans:

The dogpile effect refers to the event in which a cache entry expires and the website is hit by multiple requests made by clients at the same time. This effect can be prevented by using a semaphore lock. In this system, when the value expires, the first process acquires the lock and starts generating the new value.
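A minimal Python sketch of this locking idea follows. It uses a `threading.Lock` inside a single process as the semaphore; the class name `DogpileSafeCache` and the slow `rebuild` function are illustrative, and a deployment with several servers would need a lock shared between them instead.

```python
import threading
import time


class DogpileSafeCache:
    """Single-value cache in which only one thread regenerates an expired value."""

    def __init__(self, compute, ttl_seconds):
        self._compute = compute
        self._ttl = ttl_seconds
        self._lock = threading.Lock()
        self._value = None
        self._expires_at = float("-inf")   # start out expired

    def get(self):
        if time.monotonic() < self._expires_at:
            return self._value             # still fresh, no lock needed
        with self._lock:
            # Re-check: another thread may have refreshed while we waited.
            if time.monotonic() >= self._expires_at:
                self._value = self._compute()
                self._expires_at = time.monotonic() + self._ttl
            return self._value


calls = []


def rebuild():
    calls.append(1)        # count how often the expensive work runs
    time.sleep(0.05)       # pretend this is a slow query
    return "fresh value"


cache = DogpileSafeCache(rebuild, ttl_seconds=60)
threads = [threading.Thread(target=cache.get) for _ in range(8)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()
print(len(calls))  # 1: eight simultaneous requests triggered a single rebuild
```

The second check inside the lock is what keeps the waiting threads from repeating the work once the first one has finished.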

55. How do you adopt the AWS DevOps model?

Ans:

- Transitioning to DevOps requires a change in culture and mindset. At its simplest, DevOps is about removing the barriers between two traditionally siloed teams: development and operations.

- In some organizations, there may not even be separate development and operations teams; engineers may do both. With DevOps, the two teams work together to optimize both the productivity of developers and the reliability of operations.

- They strive to communicate frequently, increase efficiencies, and improve the quality of the services they provide to customers. They take full ownership of their services, often beyond where their stated roles or titles have traditionally been scoped, by thinking about the end customer’s needs and how they can contribute to solving those needs.

56. What is Amazon Elastic Container Service in AWS DevOps?

Ans:

Amazon Elastic Container Service (ECS) is a highly scalable, high-performance container management service that supports Docker containers and allows you to easily run applications on a managed cluster of Amazon EC2 instances.

57. Mention the key components of AWS.

Ans:

- Route 53: a web-based DNS service.

- Simple Email Service (SES): sends email using a RESTful API call or regular SMTP (Simple Mail Transfer Protocol).

- Identity and Access Management (IAM): provides improved security and identity management for an AWS account.

- Simple Storage Service (S3): a huge storage medium, widely used by AWS services.

- Elastic Compute Cloud (EC2): provides on-demand computing resources for hosting applications and is especially useful for unpredictable workloads.

58. What are AWS Developer Tools?

Ans:

AWS Developer Tools comprise services like AWS CodePipeline and AWS CodeBuild, streamlining application development and deployment on the AWS platform. These tools automate continuous integration, testing, and deployment, fostering collaboration and efficiency among developers.

59. Explain the relation between an instance and an AMI.

Ans:

An AMI is a kind of template that contains a software configuration, such as an operating system, an application server, and applications. Using an AMI, you can launch an instance, which is a copy of the AMI running as a virtual server in the cloud. You can launch various types of instances from a single AMI. The instance type determines the hardware of the host computer used for the instance, and each instance type provides different compute and memory capabilities.

60. List the various deployment models for the cloud.

Ans:

- Private Cloud

- Public Cloud

- Hybrid Cloud

61. Define Auto Scaling.

Ans:

Auto Scaling is a feature of AWS that lets you configure and automatically provision and spin up new instances without the need for manual intervention. You do this by setting thresholds and metrics to monitor. When those thresholds are crossed, a new instance of your choosing is spun up, configured, and rolled into the load balancer pool. You have then scaled horizontally without any operator intervention. It is an incredible feature of AWS and cloud virtualization. Scaling vertically, by contrast, begins by spinning up a new instance larger than the one presently running, pausing that instance, and detaching and discarding its root EBS volume.
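The threshold logic can be sketched in a few lines of Python. The CPU thresholds, instance limits, and the function name `next_instance_count` are assumptions chosen for illustration, not AWS defaults or an AWS API.

```python
def next_instance_count(cpu_percent, current,
                        scale_out_at=70.0, scale_in_at=30.0,
                        minimum=1, maximum=10):
    """Return the instance count after applying one threshold check."""
    if cpu_percent > scale_out_at:
        return min(current + 1, maximum)   # add an instance to the pool
    if cpu_percent < scale_in_at:
        return max(current - 1, minimum)   # remove an instance from the pool
    return current                         # inside the band: no change


count = 2
history = []
for cpu in [40, 75, 85, 60, 20, 10]:       # made-up average CPU readings
    count = next_instance_count(cpu, count)
    history.append(count)
print(history)  # [2, 3, 4, 4, 3, 2]
```

Keeping a gap between the scale-out and scale-in thresholds avoids adding and removing instances on every small fluctuation of the metric.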

62. How does Instacart use AWS DevOps?

Ans:

Instacart uses AWS CodeDeploy to automate deployments for all of its front-end and back-end services. Using AWS CodeDeploy enables Instacart’s developers to focus on the product and worry less about deployment operations.

63. Define Amazon Machine Image?

Ans:

An Amazon Machine Image is basically a snapshot of the root filesystem; it provides the information necessary to launch an instance. An instance is a virtual server in the cloud computing environment.

64. What is the process of building a hybrid cloud?

Ans:

There are numerous ways to build a hybrid cloud. A standard way is to set up a Virtual Private Network (VPN) tunnel between the cloud VPN and the on-premises network. AWS Direct Connect can bypass the public Internet and be used to establish a secure connection between the VPN and the private data center.

65. Explain how you will handle revision control.

Ans:

To handle revision control, it is essential to first post the code on GitHub or SourceForge, so that everyone can see it. Apart from this, you may also post the checklist from the last clean revision. This makes sure that any unresolved cases can be resolved simply.

66. Compare classic automation and orchestration.

Ans:

Classic automation covers the automation of software installation as well as system configuration, such as user creation and security baselining. Orchestration focuses on the connection and interaction of existing and provided services.

67. Describe CodeCommit.

Ans:

CodeCommit is a fully managed source control service that makes it easy for organizations to host secure, highly scalable private Git repositories. It eliminates the need to operate your own source control system, and you do not have to worry about scaling its infrastructure. You can use it to securely store anything from source code to binaries.

68. Mention some of the important advantages of AWS CodeBuild.

Ans:

AWS CodeBuild is a fully managed build service that compiles source code and runs tests. In addition, it produces software packages that are ready to deploy. With AWS CodeBuild, users do not need to provision, manage, or scale their own build servers.

69. Explain VPC Peering?

Ans:

A VPC peering connection is a network connection between two Virtual Private Clouds. It is used to route traffic between them using private IP addresses. Instances in either VPC can interact with one another as if they were within the same network. A VPC peering connection thus helps users by facilitating data transfer.

70. Define Continuous Delivery in AWS DevOps?

Ans:

Continuous Delivery is a software development practice in which code changes are automatically built, tested, and prepared for release to production. It expands upon continuous integration by deploying all code changes to a testing environment or a production environment after the build stage.

71. Describe a build project?

Ans:

A build project describes how to run a build in CodeBuild. It includes information such as where to find the source code, which build environment to use, which build commands to run, and where to store the build output. The build environment is the combination of the operating system, programming language runtime, and tools that CodeBuild uses to run the build.

72. What is the process to configure a build project?

Ans:

A build project can be configured through the console or the AWS CLI. You can specify the source repository location, the runtime environment, the build commands, the IAM role assumed by the container, and the compute class needed to run the build. Optionally, you can specify the build commands in a buildspec.yml file.

73. What are the programming frameworks that CodeBuild supports in DevOps?

Ans:

CodeBuild offers preconfigured environments for supported versions of Java, Python, Ruby, Go, Android, Node.js, and Docker. You can also customize the environment by creating a Docker image and uploading it to the Amazon EC2 Container Registry or the Docker Hub registry. You can then reference that custom image in a build project.

74. What happens when a build is run in CodeBuild?

Ans:

CodeBuild creates a temporary compute container of the class defined in the build project, loads it with the specified runtime environment, downloads the source code, executes the commands configured in the project, uploads the generated artifact to an S3 bucket, and then destroys the compute container. During the build, CodeBuild streams the build output to the service console and to Amazon CloudWatch Logs.

75. How do you use CodeBuild with Jenkins in AWS DevOps?

Ans:

The CodeBuild plugin for Jenkins can be used to integrate CodeBuild into Jenkins jobs. The build jobs are sent to CodeBuild, which eliminates the need to provision and manage Jenkins worker nodes.

76. What kinds of applications can be built using AWS CodeStar?

Ans:

CodeStar can be used to build web applications, web services, and more. These applications run on Amazon EC2, AWS Elastic Beanstalk, or AWS Lambda. Project templates are available in several programming languages, including Java, Node.js, PHP, Ruby, and Python.

77. How do AWS CodeStar users relate to IAM users?

Ans:

CodeStar users are IAM users that CodeStar manages in order to provide pre-built, role-based access policies across the development environment. Because CodeStar users are built on IAM, you still get the administrative benefits of IAM. For example, when you add an existing IAM user to a CodeStar project, the existing global account policies in IAM are still enforced.

78. How do you add, remove, or change users for AWS CodeStar projects?

Ans:

You can add, change, or remove users for a CodeStar project through the “Team” section of the CodeStar console. You can grant users Owner, Contributor, or Viewer permissions, and you can remove users or change their roles at any time.

79. How do containers communicate in Kubernetes?

Ans:

There are three ways containers can communicate with each other:

- Through localhost and the port number exposed by the other container, when both containers run in the same pod.

- Through the pod’s IP address.

- Through a service that has an IP address and usually also a DNS name.
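As a small illustration of the first and third options, the sketch below builds the addresses a container would use. The helper names are hypothetical; the service name follows the conventional Kubernetes DNS form `<service>.<namespace>.svc.<cluster-domain>`, and `cluster.local` is assumed as the cluster domain because it is the common default.

```python
def same_pod_address(port):
    """Address of another container in the same pod (shared network namespace)."""
    return f"localhost:{port}"


def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Fully qualified DNS name of a Kubernetes service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# Hypothetical port, service, and namespace names.
print(same_pod_address(8080))
print(service_dns_name("orders", namespace="shop"))
```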

80. How is DevOps different from Agile?

Ans:

The goal of DevOps is to improve communication and collaboration between the large teams responsible for developing software. This differs from Agile, where small collaborating groups work together to adapt quickly as changes occur during the development process.

81. What is the DevOps lifecycle?

Ans:

- Plan

- Code

- Build

- Test

- Release

- Deploy

- Operate

- Monitor

82. Name a few cloud security best practices.

Ans:

One best practice is that application security must be fully automated to keep pace with dynamic clouds and rapid software development practices. Another is that application security must be comprehensive in scope. A third is that application security must provide accurate and insightful information, not just partial data.

83. What is the difference between Terraform and CloudFormation?

Ans:

Terraform is a tool from HashiCorp, and CloudFormation is from AWS. When comparing the two, it is important to realize that CloudFormation is a closed-source AWS service that follows the feature roadmap set by Amazon, whereas Terraform is cloud-agnostic. Terraform gives users quite a bit of flexibility and can be used for any type of resource that can be managed through an API.

84. What role does CodeBuild play in release process automation?

Ans:

The release process can be quickly set up and customized by first setting up CodeBuild and then integrating it directly with AWS CodePipeline. As a result, AWS handles the continuous integration and deployment steps, and build actions can be added to the pipeline on an ongoing basis.

85. What do we mean when we refer to VPC endpoints?

Ans:

A VPC endpoint lets you create a private connection between your VPC and another AWS service without using the Internet, a VPN, or AWS Direct Connect. Endpoints are horizontally scaled, redundant, and highly available VPC components that enable communication between the instances in the VPC and AWS services without imposing availability risks or bandwidth constraints on network traffic.

86. What are some of the main benefits of using version control?

Ans:

- Team members can use the Version Control System (VCS) to work on any file at any time. The VCS later lets the team merge all modifications into a single version.

- Every time you save a new version of the project, the VCS asks you to briefly summarize the changes made. You can also see exactly what was modified in each file, who changed the project, and when.

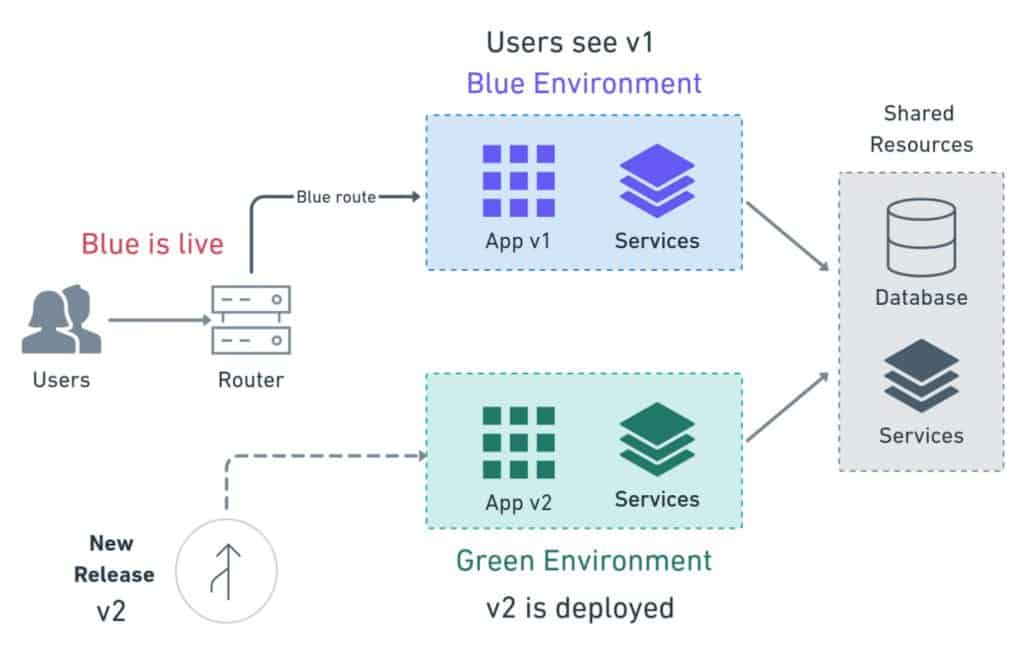

87. How does the Blue/Green Deployment Pattern function?

Ans:

Blue/green deployment is a popular continuous deployment strategy for cutting down on downtime. Traffic is shifted from one environment to another in this process. The old version runs in the blue environment, while the new version runs in the green one. To release the new code, you build the green environment alongside the existing blue one, verify it, and then switch traffic from blue to green, replacing the old version with the new one. The blue environment stays available so that you can switch back if something goes wrong.
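The traffic switch can be sketched in a few lines. This is an illustrative model only, not an AWS API: `BlueGreenRouter`, its methods, and the `health_check` callback are hypothetical names, and a real setup would switch a load balancer or DNS record instead of an attribute.

```python
class BlueGreenRouter:
    """Toy model of two environments with one live at a time."""

    def __init__(self, initial_version):
        self.environments = {"blue": initial_version, "green": None}
        self.live = "blue"

    @property
    def idle(self):
        return "green" if self.live == "blue" else "blue"

    def deploy(self, version, health_check):
        """Deploy to the idle environment; switch traffic only if it is healthy."""
        target = self.idle
        self.environments[target] = version
        if not health_check(version):
            return False  # live environment keeps serving traffic
        self.live = target
        return True

    def rollback(self):
        """Point traffic back at the previously live environment."""
        if self.environments[self.idle] is None:
            raise RuntimeError("no previous version to roll back to")
        self.live = self.idle

    def serving(self):
        return self.environments[self.live]


router = BlueGreenRouter("v1")
router.deploy("v2", health_check=lambda version: True)
print(router.live, router.serving())
```

Because the old environment is left untouched until the next deployment, rolling back is only a matter of switching traffic again.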

88. What does “shift left to reduce failure” in DevOps mean?

Ans:

A DevOps idea called “shifting left” can improve security, performance, and other aspects. If you lay out the DevOps processes from left to right, security testing typically happens late, just before a release. With a shift-left approach, you move such activities earlier and strengthen the left side of the pipeline, the development phase. This applies not only to development: many steps can be brought forward so that they happen before or during testing. Because vulnerabilities are found earlier, security is likely to improve.

89. Describe the most common branching techniques.

Ans:

- Release branching: Once the develop branch has enough functionality for a release, you can copy it to create a release branch. No new features are added to this branch, because it marks the start of the next release cycle.

- Feature branching: This strategy keeps all the changes for a single feature inside one branch. The branch is merged into master once the feature has been thoroughly assessed and has passed the automated tests.

- Task branching: With this branching model, every task is implemented in its own branch. The task key is included in the branch name.
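For task branching, the naming convention is easy to automate. The helper below is a hypothetical sketch: the `PROJ-123` key format and the slug rules are assumptions for illustration, not a standard that any particular tool enforces.

```python
import re


def task_branch_name(task_key, summary):
    """Build a branch name that starts with the task key, e.g. PROJ-123-fix-login."""
    # Lowercase the summary and collapse anything non-alphanumeric into hyphens.
    slug = re.sub(r"[^a-z0-9]+", "-", summary.lower()).strip("-")
    return f"{task_key}-{slug}" if slug else task_key


print(task_branch_name("PROJ-123", "Fix login bug!"))
```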

90. What is VPC peering in AWS DevOps?

Ans:

A VPC peering connection is a network connection between two Virtual Private Clouds that lets you route traffic between them using private IP addresses. Instances in either VPC can communicate with one another as though they were on the same network. A VPC peering connection benefits users because it makes transferring data between VPCs easier.
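One practical constraint is that AWS does not allow a peering connection between VPCs whose IPv4 CIDR blocks overlap, since private addresses would then be ambiguous. A quick pre-check can be sketched with Python's standard `ipaddress` module; `can_peer` is a hypothetical helper name and the CIDR ranges are example values.

```python
import ipaddress


def can_peer(cidr_a, cidr_b):
    """Return True when two VPC CIDR blocks do not overlap."""
    net_a = ipaddress.ip_network(cidr_a)
    net_b = ipaddress.ip_network(cidr_b)
    return not net_a.overlaps(net_b)


print(can_peer("10.0.0.0/16", "10.1.0.0/16"))   # distinct ranges
print(can_peer("10.0.0.0/16", "10.0.1.0/24"))   # second range sits inside the first
```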