- Introduction to Buffers

- Why Buffers in Node.js?

- Creating Buffers

- Writing to Buffers

- Reading from Buffers

- Buffer Methods

- Manipulating Buffers

- Buffer and Streams

- Encoding in Buffers

- Security Considerations

- Real-world Use Cases

- Conclusion

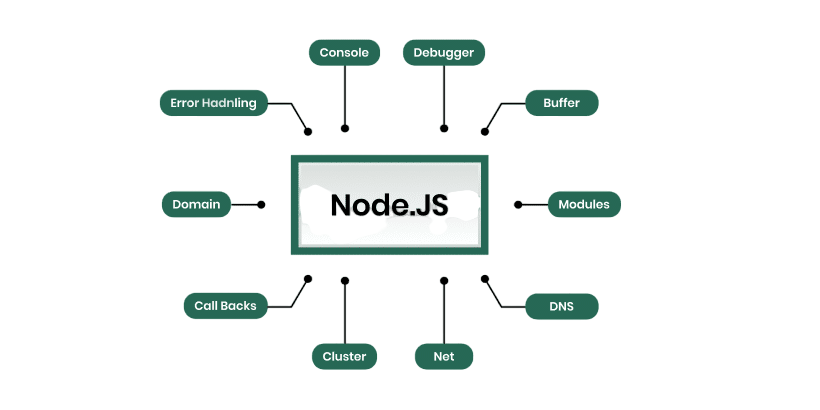

Introduction to Buffers

In Node.js, buffers play a fundamental role in handling binary data directly in memory. Unlike traditional JavaScript in browsers, which mostly deals with strings and objects, real-world Node.js applications often work with raw data, especially when dealing with streams, file systems, or network operations. The Buffer class is available globally in Node.js, so it can be used without importing any module. Buffers provide a way of handling binary data from streams, files, and HTTP servers, making them indispensable for building high-performance applications, particularly those that interact with low-level system resources.
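A minimal sketch (the variable name greeting is arbitrary) confirming that Buffer needs no require() call, and that every Buffer is also a Uint8Array, since the Buffer class extends that typed array:

```javascript
// No require() is needed: Buffer is a global in Node.js.
const greeting = Buffer.from("hi", "utf8");

// Buffer extends Uint8Array, so typed-array features work on it too.
console.log(greeting instanceof Uint8Array); // true
console.log(Buffer.isBuffer(greeting)); // true
console.log(greeting); // <Buffer 68 69>
```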


Why Buffers in Node.js?

Node.js applications often require handling binary data, whether it’s reading from a file, receiving packets over a network, or processing image or audio data. JavaScript’s traditional data types are ill-suited for these tasks, hence the need for Buffers. Buffers allow Node.js to efficiently manage raw binary data directly in memory without converting it to other forms. This direct access not only improves performance but also provides better control over data manipulation. Without Buffers, real-world Node.js applications would struggle to deliver the speed and efficiency needed by backend services that interact with lower-level system components.

Creating Buffers

- Creating a buffer in Node.js is simple and can be done in multiple ways. One common method is using Buffer.alloc(size), which creates a buffer of the specified size, initialized with zeroes.

- Another way is Buffer.from(array), which creates a buffer containing the bytes in the array.

- If you already have a string, you can use Buffer.from(string[, encoding]) to create a buffer based on a string and optional encoding (like ‘utf8’, ‘ascii’, ‘base64’).

- There is also Buffer.allocUnsafe(size), which allocates a buffer faster because it skips zero-filling. Its contents are uninitialized and may contain old, possibly sensitive data, so it must be handled cautiously: overwrite every byte before reading from it.
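The four creation methods above can be compared side by side in a short sketch; the variable names are illustrative only:

```javascript
// Zero-filled buffer of 4 bytes.
const zeros = Buffer.alloc(4);
console.log(zeros); // <Buffer 00 00 00 00>

// Buffer from an array of byte values (values outside 0-255 are truncated to fit).
const fromArray = Buffer.from([0x48, 0x69, 0x21]);
console.log(fromArray.toString("utf8")); // Hi!

// Buffer from a string in a given encoding (the default is 'utf8').
const fromBase64 = Buffer.from("SGkh", "base64");
console.log(fromBase64.toString()); // Hi!

// Uninitialized buffer: overwrite every byte before reading from it.
const scratch = Buffer.allocUnsafe(4);
scratch.fill(0);
```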

Writing to Buffers

Writing to a buffer involves using the buffer.write(string[, offset[, length]][, encoding]) method. It writes a string into the buffer starting at offset (default 0), encoded with the given encoding (default ‘utf8’), writing at most length bytes, and returns the number of bytes written. If the buffer does not have enough room, the string is truncated, and partially encoded multi-byte characters are not written. Writing to buffers is useful when you need to manipulate chunks of data manually, for instance when implementing a network protocol or processing file data. A clear understanding of offsets and lengths is crucial to avoid overwriting unintended sections.
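A minimal sketch of how offset, length, and the return value of buffer.write() interact, including truncation when the buffer runs out of space (buffer sizes and strings are arbitrary):

```javascript
// An 8-byte, zero-filled buffer to write into.
const buf = Buffer.alloc(8);

// write() returns the number of bytes actually written.
const first = buf.write("Hi"); // offset defaults to 0
const second = buf.write("there", 2); // starts right after "Hi"
console.log(buf.toString("utf8", 0, 7)); // Hithere

// Only one byte is left at offset 7, so "!!" is truncated to "!".
const third = buf.write("!!", 7);
console.log(third, buf.toString()); // 1 Hithere!

// The length argument caps how many bytes are written.
const capped = Buffer.alloc(8).write("abcdef", 0, 3, "utf8"); // writes "abc"

// 'é' needs 2 bytes in UTF-8; with only 1 byte of room, nothing is written.
const partial = Buffer.alloc(1).write("é");
console.log(capped, partial); // 3 0
```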

Reading from Buffers

- Reading from a buffer is just as important as writing.

- The simplest way is using buffer.toString([encoding[, start[, end]]]), which converts the buffer contents to a string.

- You can specify an encoding, a start index, and an end index to read only a portion of the buffer.

- For more fine-grained control, Node.js also provides methods like buffer.readUInt8(offset) to read an unsigned 8-bit integer at a given offset, or buffer.readInt16LE(offset) to read a signed 16-bit integer in little-endian format.

- These methods allow applications to interpret binary data precisely at the byte level.
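The reading methods listed above can be tried on a few hand-picked bytes. This sketch shows how the same two bytes decode differently depending on byte order:

```javascript
// Four raw bytes, as they might arrive from a file or socket.
const data = Buffer.from([0x01, 0xff, 0xfe, 0xff]);

console.log(data.readUInt8(0)); // 1
console.log(data.readUInt8(1)); // 255
// Bytes 0xFE 0xFF read little-endian form 0xFFFE, which is -2 as a signed 16-bit value.
console.log(data.readInt16LE(2)); // -2
// The same bytes read big-endian form 0xFEFF, which is -257 as a signed 16-bit value.
console.log(data.readInt16BE(2)); // -257

// toString(encoding, start, end) decodes only part of a buffer; end is exclusive.
const text = Buffer.from("hello world");
console.log(text.toString("utf8", 6, 11)); // world
```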

Buffer Methods

- Buffers come equipped with many useful methods.

- Some of the most notable include buffer.equals(otherBuffer) for comparing buffers, buffer.copy(targetBuffer, targetStart, sourceStart, sourceEnd) for copying data between buffers, and buffer.subarray(start, end) for creating a new buffer that shares the same memory (buffer.slice(start, end) does the same but is deprecated in favor of subarray()).

- There are also methods like buffer.fill(value[, offset[, end]][, encoding]) for filling a buffer with a specified value.

- These methods provide developers with the necessary tools to efficiently manage binary data in memory, critical for performance-sensitive applications.
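A short sketch exercising equals(), copy(), fill(), and subarray(); the last step shows that a subarray is a view over the original memory rather than a copy:

```javascript
const a = Buffer.from("abc");
const b = Buffer.from("abc");
console.log(a.equals(b)); // true (same bytes, different objects)

// copy(target, targetStart, sourceStart, sourceEnd) returns the bytes copied.
const target = Buffer.alloc(5, "-"); // "-----"
const copied = a.copy(target, 1, 0, 2); // copies "ab" into target at index 1
console.log(target.toString(), copied); // -ab-- 2

// fill(value, offset, end) overwrites a range; end is exclusive.
target.fill("z", 3, 5);
console.log(target.toString()); // -abzz

// subarray() shares memory: writing through the view changes the original.
const view = a.subarray(1, 3); // "bc"
view[0] = 0x42; // 'B'
console.log(a.toString()); // aBc
```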

Manipulating Buffers

- Manipulating buffers often involves operations like slicing, concatenating, comparing, and filling.

- Node.js provides Buffer.concat(list[, totalLength]) for merging multiple buffers into one. This is especially useful when data arrives in multiple parts over a network.

- buffer.subarray(start, end), like the older buffer.slice(start, end), creates a sub-buffer without copying. Because it shares memory with the original buffer, changes made through either one are visible in both.

- Additionally, buffer.compare(otherBuffer) returns -1, 0, or 1 to indicate the sort order of two buffers, and the static Buffer.compare(buf1, buf2) can be passed directly to Array.prototype.sort(), which is useful for tasks like sorting binary keys in databases.
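A minimal sketch of reassembling chunked data and sorting binary keys; the chunks and keys are hard-coded stand-ins for data that would normally arrive from a socket or a database:

```javascript
// Chunks as they might arrive from a socket (hard-coded here for illustration).
const chunks = [Buffer.from("Hel"), Buffer.from("lo, "), Buffer.from("world")];

// totalLength is optional; when omitted, Node.js sums the chunk lengths itself.
const message = Buffer.concat(chunks);
console.log(message.toString()); // Hello, world

// buf.compare() returns -1, 0, or 1, comparing byte by byte.
console.log(Buffer.from("a").compare(Buffer.from("b"))); // -1

// Buffer.compare can be passed directly to Array.prototype.sort().
const keys = [Buffer.from([0x02]), Buffer.from([0x01, 0xff]), Buffer.from([0x01])];
keys.sort(Buffer.compare);
console.log(keys.map((k) => k.toString("hex"))); // [ '01', '01ff', '02' ]
```

A shorter buffer that is a prefix of a longer one sorts first, which is why 01 comes before 01ff.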



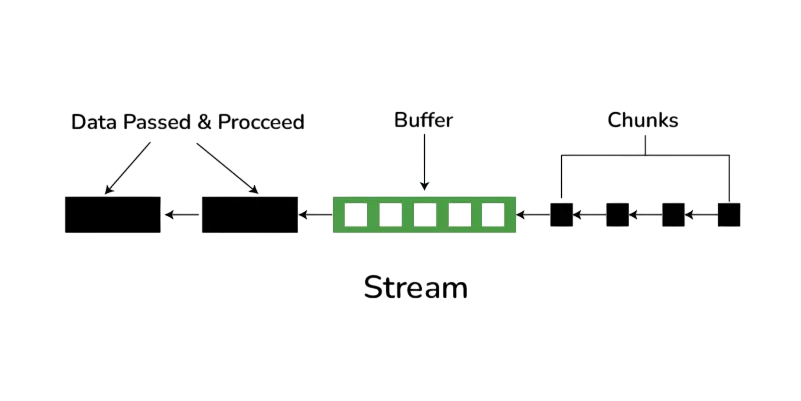

Buffer and Streams

In Node.js, Buffers and Streams often work hand-in-hand. A stream represents a continuous flow of data that is read or written in small chunks, and unless an encoding is set, each chunk of a binary stream is a Buffer. For example, when reading a large file with a readable stream, the file is not loaded entirely into memory; instead, it is read in chunks (64 KiB by default for fs.createReadStream()), which keeps memory usage low and improves application performance. This chunked approach is essential when handling large files, audio/video streaming, or any other situation where loading the entire data set into memory is impractical or impossible.
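The sketch below processes a stream one Buffer chunk at a time without keeping the whole data set in memory. The helper name countBytes is hypothetical, and Readable.from() stands in for a real source such as fs.createReadStream(path):

```javascript
const { Readable } = require("node:stream");

// Hypothetical helper: tally bytes and chunks as they stream past.
async function countBytes(stream) {
  let total = 0;
  let chunkCount = 0;
  for await (const chunk of stream) {
    // Each chunk is a Buffer; only its length is kept, not its contents.
    total += chunk.length;
    chunkCount += 1;
  }
  return { total, chunkCount };
}

// Simulated stream of two Buffer chunks.
const source = Readable.from([Buffer.from("chunk one, "), Buffer.from("chunk two")]);
countBytes(source).then(({ total, chunkCount }) => console.log(total, chunkCount)); // 20 2
```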

Encoding in Buffers

Encoding is vital when dealing with buffers because it determines how bytes are interpreted as text and vice versa. Node.js buffers support several encodings, including ‘utf8’, ‘ascii’, ‘base64’, ‘hex’, ‘latin1’, and ‘ucs2’ (an alias for ‘utf16le’). Choosing the correct encoding is crucial; for instance, use ‘utf8’ for text and ‘base64’ when binary data must travel through a text-only channel such as a JSON payload or an HTTP header. Because the encoding determines how data is stored and retrieved, developers must use the same encoding when writing and reading data. Buffer operations that don’t handle encoding correctly can result in corrupted data or unexpected behavior.
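A small sketch of why encodings must match on both sides: a UTF-8 string round-trips cleanly through base64, but decoding the same bytes as latin1 garbles the non-ASCII character (the sample word is arbitrary):

```javascript
const text = "héllo";
const bytes = Buffer.from(text, "utf8");
console.log(bytes.length); // 6 ('é' takes two bytes in UTF-8)

// Round trip through base64 using the same encoding in both directions.
const asBase64 = bytes.toString("base64");
const back = Buffer.from(asBase64, "base64").toString("utf8");
console.log(back === text); // true

console.log(bytes.toString("hex")); // 68c3a96c6c6f

// Decoding with the wrong encoding turns 'é' (0xC3 0xA9) into two characters.
console.log(bytes.toString("latin1")); // hÃ©llo
```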



Security Considerations

Buffers, especially when created using Buffer.allocUnsafe(), can expose uninitialized memory, potentially leaking sensitive data if not handled properly. It is recommended to use Buffer.alloc() unless you have a compelling performance reason and are certain you will overwrite every byte of the buffer. Another security consideration is validating the size of incoming buffer data, especially when it comes from user input. Node.js bounds-checks Buffer methods, so an out-of-range call such as readUInt32BE() throws a RangeError instead of corrupting memory, but applications that blindly trust externally supplied sizes can still be crashed by those errors or tricked into allocating huge buffers that exhaust memory.
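A minimal sketch of validating an untrusted size before allocating. The frame layout (a 4-byte big-endian length followed by the payload), the parseFrame function, and the MAX_FRAME_SIZE limit are all hypothetical choices for illustration, not a Node.js format:

```javascript
// Hypothetical upper bound for one message; choose a limit that fits your protocol.
const MAX_FRAME_SIZE = 1024 * 1024; // 1 MiB

// Hypothetical frame layout: 4-byte big-endian length, then that many payload bytes.
function parseFrame(input) {
  if (input.length < 4) {
    throw new RangeError("incomplete header");
  }
  const declared = input.readUInt32BE(0);
  // Reject oversized lengths before trusting them for any allocation.
  if (declared > MAX_FRAME_SIZE) {
    throw new RangeError(`frame too large: ${declared} bytes`);
  }
  if (input.length < 4 + declared) {
    throw new RangeError("incomplete payload");
  }
  // Buffer.from(buffer) copies, so the payload does not pin the whole input in memory.
  return Buffer.from(input.subarray(4, 4 + declared));
}

console.log(parseFrame(Buffer.from([0, 0, 0, 2, 0x68, 0x69])).toString()); // hi
```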

Real-world Use Cases

Buffers are used extensively in real-world Node.js applications. For instance, when building an HTTP server, Node.js uses buffers internally to handle incoming requests and outgoing responses. In cryptography, operations like hashing and encryption work on binary data held in buffers. Media streaming services rely on buffers to transmit audio and video data efficiently. File manipulation tools use buffers to read, process, and write large files without exhausting memory. Database clients, such as those for Redis and MongoDB, also rely on buffers to send and receive data packets efficiently.
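As one concrete instance of the cryptography use case, this sketch hashes a Buffer with the built-in crypto module; calling digest() with no encoding returns the hash as another Buffer:

```javascript
const { createHash } = require("node:crypto");

// Hash a Buffer; digest() without an encoding returns the result as a Buffer.
const input = Buffer.from("abc", "utf8");
const digest = createHash("sha256").update(input).digest();

console.log(digest.length); // 32 (SHA-256 produces 32 bytes)
console.log(digest.toString("hex"));
// ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad
```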

Conclusion

Buffers are a powerful feature in Node.js that make it possible to handle binary data efficiently and securely. Understanding how to create, manipulate, read, and write buffers is essential for developing high-performance applications that interact closely with files, networks, and system resources. While they are extremely useful, improper handling of buffers can lead to security vulnerabilities or application bugs. By mastering buffers and related concepts such as streams and encodings, developers can build robust, efficient, and secure Node.js applications that handle data gracefully at any scale.