SAP Business Intelligence and SAP Business Warehouse are essential components of SAP’s software suite aimed at enabling business intelligence and efficient data management. SAP Business Intelligence (BI) encompasses reporting and analysis tools that facilitate the creation of interactive reports and dashboards. It also includes data visualization solutions like SAP Lumira and SAP Analytics Cloud. SAP Business Warehouse (BW), on the other hand, is a robust data warehousing solution. It serves as a centralized repository for an organization’s data, enabling the consolidation, transformation, and harmonization of data from various sources.

1. What is the primary purpose of SAP BI/BW in an organization?

Ans:

The primary purpose of SAP BI/BW in an organization is to gather, store, and analyze data, enabling data-driven decision-making. It serves as a central hub for consolidating and processing data from various sources, facilitating comprehensive reporting and analytics to support business operations and strategy.

2. What options are available in Transfer Rule?

Ans:

Transfer Rules provide several options, and ABAP (Advanced Business Application Programming) code is required when you need to implement custom logic or complex transformations. Some common options available in Transfer Rules include field mapping, constant values, and lookups to standard or custom tables.

3. What are the different types of InfoSet joins?

Ans:

- Inner Join

- Left Outer Join

- Temporal Join

- Self Join
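The difference between the first two join types can be illustrated outside BW. The following is a minimal Python sketch, not BW code; the sample data and the function names `inner_join` and `left_outer_join` are hypothetical and chosen only for illustration.

```python
# Hypothetical sample data: orders and a master-data lookup of regions.
ORDERS = [
    {"customer": "C1", "amount": 100},
    {"customer": "C2", "amount": 50},
    {"customer": "C3", "amount": 75},  # C3 has no master data record
]
REGIONS = {"C1": "North", "C2": "South"}


def inner_join(rows, lookup):
    """Keep only rows that have a matching record on the right side."""
    return [dict(r, region=lookup[r["customer"]])
            for r in rows if r["customer"] in lookup]


def left_outer_join(rows, lookup):
    """Keep every left row; missing matches get region None."""
    return [dict(r, region=lookup.get(r["customer"])) for r in rows]


if __name__ == "__main__":
    print(len(inner_join(ORDERS, REGIONS)))
    print(len(left_outer_join(ORDERS, REGIONS)))
```

With an inner join the unmatched customer drops out of the result, while the left outer join keeps it with an empty region.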

4. How would you optimize the dimensions?

Ans:

Use as many dimensions as possible to improve performance. For example, assuming there are 100 goods and 200 customers, a single combined dimension can grow to 100 × 200 = 20,000 rows, whereas two separate dimensions hold only 100 + 200 = 300 rows in total.
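The arithmetic in the example above can be checked with a short Python sketch. The function names are hypothetical, and the combined figure is the worst case in which every goods/customer combination actually occurs.

```python
def combined_dimension_rows(*cardinalities):
    """Worst-case row count when all characteristics share one dimension."""
    rows = 1
    for n in cardinalities:
        rows *= n
    return rows


def separate_dimension_rows(*cardinalities):
    """Total row count when each characteristic gets its own dimension."""
    return sum(cardinalities)


if __name__ == "__main__":
    goods, customers = 100, 200
    print(combined_dimension_rows(goods, customers))   # 20000
    print(separate_dimension_rows(goods, customers))   # 300
```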

5. What function does InfoCube play in the BW system?

Ans:

InfoCube is a multidimensional dataset that is utilized in a BEx query for analysis. A group of relational tables that are logically connected to implement a star schema make up an InfoCube. The numerous dimension tables are coupled with a Fact table in a star schema.

6. What is the importance of the table ROIDOCPRMS?

Ans:

ROIDOCPRMS is the IDoc parameter table in the source system. It lists the source system, the data packet size, the maximum number of lines in a data packet, and other data transfer settings. The control parameters option in SBIW changes the data packet size by altering the contents of this table.

7. What are the data target administration tasks?

Ans:

Deleting indexes, generating indexes, constructing database statistics, the initial fill of new aggregates, rolling up filled aggregates, compressing InfoCubes, activating ODS objects, and completely deleting a data target.

8. Which parallel processes can cause locking problems?

Ans:

- The hierarchy/attribute change run.

- Loading master data for the same InfoObject; for example, avoid loading master data from different source systems at the same time.

- Rolling up for the same InfoCube.

- Selective deletion from an InfoCube/ODS while loading in parallel.

- Activating or deleting an ODS object while loading in parallel.

9. What are the key components of SAP BW?

Ans:

InfoProviders: Core data storage and modeling.

DataSources: Data extraction from source systems.

DTP (Data Transfer Process): Data loading and transformation.

BEx (Business Explorer): Reporting and analysis tool.

Metadata Repository: Data definition storage.

10. Explain the difference between SAP BI and SAP BW.

Ans:

| Aspect | SAP BI | SAP BW |
| --- | --- | --- |
| Purpose | Reporting and analytics | Data warehousing and integration |
| Data Source | Extracts data for analysis | Stores, transforms, and manages data |
| Reporting Tools | Lumira, Analytics Cloud | BEx (Business Explorer) |
| Transformation | Limited | Extensive ETL and data modeling |

11. How would you convert an InfoPackage group into a process chain?

Ans:

Double-click the InfoPackage group, choose the “Process Chain Maint” button, and provide a name and description; the individual InfoPackages are then inserted automatically.

12. How do you create a real-time InfoCube in the Administrator Workbench?

Ans:

A real time InfoCube can be created by using the Real Time Indicator check box.

13. What data loading tuning can be done?

Ans:

- Review the ABAP code in transfer and update rules.

- Balance the load across different servers.

- Create indexes on source tables.

- Use fixed-length files when loading data from flat files, and put the file on the application server.

- Use content extractors.

14. What is the use of BW Statistics?

Ans:

The set of cubes delivered by SAP is used to measure performance for queries, data loading, and so on. It also shows the usage of aggregates and the costs associated with them.

15. Which options are used while defining aggregates?

Ans:

- * – groups according to the characteristic.

- H – hierarchy.

- F – fixed value.

- Blank – none.

16. What is a DataStore object for direct update?

Ans:

A DataStore object for direct update enables fast access to data for reporting and analysis. It differs from a standard DSO in how it handles data: the data is kept in the same format in which it was loaded into the DataStore object, so that applications can edit it directly.

17. What does I_STEP do when you write a user exit for variables?

Ans:

I_STEP is used in the ABAP code as a conditional check to determine at which point of variable processing the exit is being called.

18. Which data sources are used to acquire data in an SAP BW system?

Ans:

- SAP systems (SAP Applications/SAP ECC)

- Relational databases (Oracle, SQL Server, etc.)

- Flat files (Excel, Notepad)

- Multidimensional source systems (Universe using the UDI connector)

- Web services that transfer data to BI by means of push

19. Define ODS.

Ans:

An Operational Data Store (ODS) stores data at a detailed level, and a load can overwrite existing data in the ODS.

20. How do you define exception reporting in the background?

Ans:

Use the Reporting Agent from the AWB. Click the exception icon on the left, and give it a name and description. Select the exception from the query for reporting (drag and drop).

21. What is an aggregate?

Ans:

- Aggregates are mini cubes that hold a subset of an InfoCube's data.

- They contain less data than the cube.

- For example, if a cube has 1 billion records, a summarized subset can be stored in a mini cube that is read faster, which improves query performance.
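The idea of a mini cube can be sketched in plain Python: an aggregate is the fact data summarized over a subset of the characteristics. This is only an illustration under assumed data; `build_aggregate` and the sample rows are hypothetical and do not reflect how BW stores aggregates internally.

```python
from collections import defaultdict

# Hypothetical fact rows with two characteristics and one key figure.
FACT_ROWS = [
    {"customer": "C1", "product": "P1", "revenue": 100.0},
    {"customer": "C1", "product": "P2", "revenue": 50.0},
    {"customer": "C2", "product": "P1", "revenue": 70.0},
]


def build_aggregate(fact_rows, characteristics, key_figure):
    """Summarize the key figure over the chosen subset of characteristics."""
    totals = defaultdict(float)
    for row in fact_rows:
        key = tuple(row[c] for c in characteristics)
        totals[key] += row[key_figure]
    return dict(totals)


if __name__ == "__main__":
    # Three fact rows collapse into two aggregate rows.
    print(build_aggregate(FACT_ROWS, ["customer"], "revenue"))
```

A query that only needs revenue by customer can read the two aggregate rows instead of scanning every fact row.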

22. How is the InfoArea used in the SAP BW system?

Ans:

InfoAreas in SAP BI are used to group similar types of objects together and to manage InfoCubes and InfoObjects. Every InfoObject resides in an InfoArea, which can be thought of as a folder that holds similar files together.

23. What are the data types for a characteristic InfoObject?

Ans:

There are 4 types:

- CHAR

- NUMC

- DATS

- TIMS

24. What is the model of the InfoCube?

Ans:

The InfoCube model is the extended star schema.

25. What are the 3 SAP-defined dimensions?

Ans:

The 3 SAP-defined dimensions are:

Data packet dimension (P): It contains 3 characteristics: (a) request ID, (b) record type, and (c) change run ID.

Time dimension (T): It contains time characteristics such as 0CALMONTH, 0CALDAY, etc.

Unit dimension (U): It contains the units related to amounts and quantities.

26. When are VirtualProviders based on DTP used?

Ans:

- When only a small amount of data is used.

- When up-to-date data from the SAP source system is needed.

- When only a few users execute queries simultaneously on the database.

27. How many tables does an InfoCube contain?

Ans:

An InfoCube contains two fact tables: the F table and the E table (which holds the compressed data).

28. What are the different types of VirtualProviders?

Ans:

- VirtualProviders based on DTP

- VirtualProviders with function modules

- VirtualProviders based on BAPIs

29. Why is CO-PA a generic extraction and not a Business Content extraction?

Ans:

CO-PA is highly customizable, and its value fields and characteristics are defined differently from customer to customer, so SAP delivers CO-PA as a generic extraction that can be customized.

30. What are the different categories of InfoObjects in the BW system?

Ans:

InfoObjects can be categorized into the following categories:

- Characteristics, such as Customer, Product, etc.

- Units, such as quantity units, currency, etc.

- Key figures, such as Total Revenue, Profit, etc.

- Time characteristics, such as Year, Quarter, etc.

31. What is a fact table?

Ans:

A fact table is a collection of facts and relations, that is, foreign keys to the dimensions. The fact table holds the transactional data.

32. Define Star Schema.

Ans:

A star schema is a type of data warehouse schema used for organizing data. It features a central fact table containing quantitative data and multiple dimension tables that provide context. This design simplifies queries, enhances performance, and is commonly used in business intelligence for efficient reporting and analysis.
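A star schema query can be tried out with Python's built-in `sqlite3` module. The sketch below is an assumption-laden illustration: the table names `fact_sales`, `dim_customer`, and `dim_product`, and the sample rows, are hypothetical and are not BW table names.

```python
import sqlite3


def revenue_by_region():
    """Build a tiny star schema in memory and total revenue per region."""
    con = sqlite3.connect(":memory:")
    con.executescript("""
        CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, region TEXT);
        CREATE TABLE dim_product  (product_id  INTEGER PRIMARY KEY, name TEXT);
        -- The fact table holds key figures plus foreign keys to the dimensions.
        CREATE TABLE fact_sales (customer_id INTEGER, product_id INTEGER, revenue REAL);
        INSERT INTO dim_customer VALUES (1, 'North'), (2, 'South');
        INSERT INTO dim_product  VALUES (10, 'Widget'), (20, 'Gadget');
        INSERT INTO fact_sales   VALUES (1, 10, 100.0), (1, 20, 50.0), (2, 10, 70.0);
    """)
    rows = con.execute("""
        SELECT c.region, SUM(f.revenue)
        FROM fact_sales AS f
        JOIN dim_customer AS c ON c.customer_id = f.customer_id
        GROUP BY c.region
        ORDER BY c.region
    """).fetchall()
    con.close()
    return rows


if __name__ == "__main__":
    print(revenue_by_region())
```

The query touches the central fact table once and joins only the dimension it needs, which is what keeps star schema queries simple.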

33. What is a slowly changing dimension?

Ans:

Dimensions that change over time are called slowly changing dimensions.

34. What is the use of transformation, and how is mapping done in BW?

Ans:

The transformation process is used to perform data consolidation, cleansing, and data integration. When data is loaded from one BI object to another, a transformation is applied to the data. It converts the source fields into the format of the target object. Transformation rules map source fields to target fields, and different rule types can be used in a transformation.

35. How do you create a connection with LIS InfoStructures?

Ans:

Use transaction LBW0 to connect LIS InfoStructures to BW.

36. What is the difference between ODS, InfoCube, and MultiProvider?

Ans:

ODS: Provides flat tables with granular data that can be overwritten, making it well suited to drilldown and RRI (Report-to-Report Interface).

CUBE: Follows the star schema and can only append data; ideal for primary reporting.

MultiProvider: Does not hold physical data. It allows access to data from different InfoProviders (Cube, ODS, InfoObject). It is also preferred for reporting.
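The overwrite-versus-append behaviour described above can be sketched in Python. This is a simplified model, not BW internals; `load_into_ods`, `load_into_cube`, and the sample records are hypothetical.

```python
def load_into_ods(ods, records, key_fields):
    """ODS-style load: a record with an existing key overwrites the old one."""
    for rec in records:
        key = tuple(rec[k] for k in key_fields)
        ods[key] = rec
    return ods


def load_into_cube(fact_rows, records):
    """Cube-style load: records are only ever appended."""
    fact_rows.extend(records)
    return fact_rows


# Hypothetical loads: the same order arrives twice with a changed amount.
LOADS = [
    {"order": "4711", "amount": 100},
    {"order": "4711", "amount": 120},
]

if __name__ == "__main__":
    print(load_into_ods({}, LOADS, ["order"]))  # one record, amount 120
    print(load_into_cube([], LOADS))            # two records
```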

37. What are the extractor types?

Ans:

Application Specific:

- Customer-Generated Extractors: LIS, FI-SL, CO-PA.

- BW Content: FI, HR, CO, SAP CRM, LO Cockpit.

Cross Application (Generic Extractors):

- DB View, InfoSet, Function Module.

38. What are start routines, transfer routines, and update routines?

Ans:

Start Routines: The start routine is invoked for each data package after the data has been written to the PSA but before the transfer rules are executed. It allows complex computations for a key figure or characteristic.

Transfer / Update Routines: They are defined at the InfoObject level and work like a start routine. They are independent of the DataSource and can be used to define global data and global checks.

39. What are the steps involved in LO extraction?

Ans:

The steps are:

- RSA5: Select the DataSources

- LBWE: Maintain the DataSources and activate the extract structures

- LBWG: Delete the setup tables

- OLI*BW: Fill the setup tables

- RSA3: Check the extraction and the data in the setup tables

- LBWQ: Check the extraction queue

- LBWF: Log for LO extract structures

- RSA7: BW delta queue monitor

40. What is BW Statistics and what is its use?

Ans:

It is a group of Business Content InfoCubes that are used to measure performance for queries and load monitoring. It also shows the usage of aggregates, OLAP, and Warehouse Management.

41. What delta options are available when loading from a flat file?

Ans:

There are 3 options for delta management with flat files:

- Full Upload

- New Status for Changed Records (ODS object only)

- Additive Delta (ODS object & InfoCube)

42. Explain Integration in SAP BW / BI.

Ans:

The efficiency of SAP BW can be improved by adopting in-memory technology. In particular, it enables effective processing of demanding scenarios with varying query types, large data volumes, high query frequency, and complex computations.

43. What are the steps to extract data from R/3?

Ans:

- Replicate DataSources

- Assign InfoSources

- Maintain the communication structure and transfer rules

- Create an InfoPackage

- Load data

44. What is the Open Hub Service?

Ans:

The Open Hub Service enables you to distribute data from an SAP BW system into external data marts, analytical applications, and other applications, and to ensure controlled distribution across several systems. The central object for exporting data is the InfoSpoke, in which you define the source and the target object for the data. BW becomes the hub of an enterprise data warehouse. The distribution of data becomes transparent through central monitoring of the distribution status in the BW system.

45. How can an InfoObject be an InfoProvider?

Ans:

When you want to report on characteristics or master data, right-click the InfoArea and select ‘Insert characteristic as a data target’. For example, you can make 0CUSTOMER an InfoProvider and report on it.

46. How do you create conditions and exceptions in BI 7.0?

Ans:

From the query name or description alone, you cannot tell whether a query has an exception. There are two ways of finding the exceptions for a query:

- 1. Execute the queries one by one; those that show a background colour from exception reporting have exceptions.

- 2. Open the queries in the BEx Query Designer. If there is an Exceptions tab to the right of the Filter and Rows/Columns tabs, the query has an exception.

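47. What are secondary indexes with respect to InfoCubes?

Ans:

Secondary indexes on the InfoCube fact tables speed up query reads; they are usually deleted before large loads and rebuilt afterwards. They should not be confused with rollup, which loads new data packages into the InfoCube aggregates: if a rollup has not been performed, the new InfoCube data is not available when reporting on the aggregate.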

48. Define 0RECORDMODE.

Ans:

0RECORDMODE is an InfoObject used to identify delta images in BW, and it is used in the DSO. It is added automatically when you activate a DSO in BW. R/3 has a corresponding field, ROCANCEL, which holds the delta images in R/3. Whenever data is extracted from R/3 using LO, generic, or other extractors, the field ROCANCEL is mapped to 0RECORDMODE in BW. This is how BW identifies delta images.

49. How does BEx analyzer connect to BW?

Ans:

BEx Analyzer is connected to the OLAP processor. OLE DB connectivity connects BEx Analyzer to BW.

50. What are the development tasks for RMS release work?

Ans:

The main task is the complete life cycle development of SAP authorization roles. This includes participating in the high-level and low-level design, RMSs, and technical development of the roles.

51. What is the RMS application?

Ans:

SAP Records Management is a component of the SAP Web Application Server for the electronic management of records; even paper-based information can be part of an electronic record in SAP RMS.

RMS divides the various business units logically, making it possible to give particular groups of users access to particular records, as needed within business processes.

52. Explain BI content implementation considerations.

Ans:

BI Content for SAP Business Intelligence offers quick and cost-effective implementation. It also provides a model, based on experience gained from other implementations, that can be used as a guideline during the implementation.

53. How do you convert a BEx query global structure to a local structure (steps involved)?

Ans:

You use a local structure when you need to add structure elements that are unique to a specific query. Changing a global structure changes the structure for all queries that use it, which is the reason to go for a local structure. For the navigation: in the BEx Analyzer, from the SAP Business Explorer toolbar, choose the open query icon.

54. What is a data flow in SAP BW/BI?

Ans:

A data flow depicts a specific scenario in SAP BW/4HANA. It describes a set of SAP BW/4HANA objects, including their relationships and interdependencies. The BW Modeling Tools contain various editors with graphical user interfaces that enable you to create, edit, document, and analyse data flows and objects.

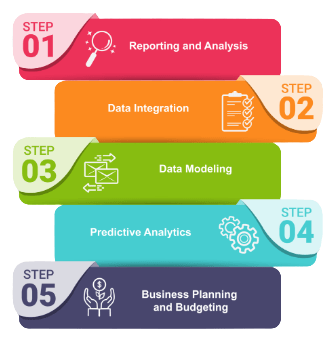

55. What are the main areas and activities in SAP BW/BI?

Ans:

Data Warehouse: Integrating, collecting, and managing the entire company’s data.

Analyzing and Planning: Using the data stored in the data warehouse.

Reporting: BI provides tools for reporting in a web browser, Excel, etc.

Broadcast publishing: Sending information to employees using email, fax, etc.

56. What is ODS in SAP BW/BI?

Ans:

The ‘Operational Data Store’ or ‘ODS’ is used for detailed storage of data. It is the BW architectural component that sits between the PSA (Persistent Staging Area) and InfoCubes, and it allows BEx reporting. Its primary use is detail reporting rather than dimensional analysis, and it is not based on the star schema. ODS objects do not aggregate data as InfoCubes do. When data is loaded into an ODS object, new records are inserted, existing records are updated, or old records are deleted, as specified by the RECORDMODE value.

57. What is table partitioning in SAP BW/BI?

Ans:

Table partitioning is done to manage huge volumes of data and improve the efficiency of applications. The partitioning is based on 0CALMONTH or 0FISCPER.

There are 2 types of partitioning:

- Database partitioning

- Logical partitioning
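The benefit of partitioning on a time characteristic can be sketched in Python: rows are grouped by 0CALMONTH so that a query for one month reads only that partition. This is a conceptual illustration with hypothetical function names and data, not how the database implements partitions.

```python
from collections import defaultdict

# Hypothetical fact rows keyed by calendar month (format YYYYMM).
ROWS = [
    {"0CALMONTH": "202301", "revenue": 10},
    {"0CALMONTH": "202301", "revenue": 20},
    {"0CALMONTH": "202302", "revenue": 5},
]


def partition_by_calmonth(rows):
    """Group rows into one partition per 0CALMONTH value."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row["0CALMONTH"]].append(row)
    return dict(partitions)


def read_month(partitions, calmonth):
    """Read a single partition instead of scanning all rows."""
    return partitions.get(calmonth, [])


if __name__ == "__main__":
    parts = partition_by_calmonth(ROWS)
    print(sum(r["revenue"] for r in read_month(parts, "202301")))
```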

58. How do you gather the requirements for an implementation project?

Ans:

One of the most important challenges in any implementation is gathering and understanding the functional requirements of the end users and the process team. These functional requirements represent the scope of the analysis needs and the expectations of the end users.

They typically involve all of the following:

- Business reasons for the project and business questions

- Critical success factors for the implementation

- Source systems that are involved

59. How can you resolve data mismatch tickets between R/3 and BW?

Ans:

Taking the field 0STREET as an example, check its mapping on the BW side in the transfer rules. Check the data in the PSA for the same field. If the PSA also does not have complete data, check the field with RSA3 in the source system.

60. What is the difference between V1, V2, and V3 jobs in extraction?

Ans:

V1 Update: Whenever you create a transaction in R/3 (e.g., a sales order), the entries are written to the R/3 tables (VBAK, VBAP, ...), and this takes place in the V1 update.

V2 Update: The V2 update starts a few seconds after the V1 update; in this update the values are written to the statistical tables, from which the extraction into BW is done.

V3 Update: It is purely for BW extraction.

61. Define rollup.

Ans:

Rollup is used to load new data packages into the InfoCube aggregates. Until a rollup has been executed, new InfoCube data is not available when reporting on the aggregate, so it is crucial to execute it.
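Why reporting on an aggregate lags behind the cube until a rollup runs can be sketched in Python. This is a simplified model under assumed data: the request list, `aggregate_total`, and `roll_up` are hypothetical names, and real aggregates are separate tables rather than filtered views.

```python
# Hypothetical load requests in an InfoCube.
REQUESTS = [
    {"request_id": 1, "amount": 100},
    {"request_id": 2, "amount": 40},  # loaded, but not yet rolled up
]


def aggregate_total(requests, rolled_up_ids):
    """Reporting on the aggregate sees only requests already rolled up."""
    return sum(r["amount"] for r in requests if r["request_id"] in rolled_up_ids)


def roll_up(requests, rolled_up_ids):
    """Rollup makes every loaded request available in the aggregate."""
    return rolled_up_ids | {r["request_id"] for r in requests}


if __name__ == "__main__":
    rolled = {1}
    print(aggregate_total(REQUESTS, rolled))   # 100: request 2 is missing
    rolled = roll_up(REQUESTS, rolled)
    print(aggregate_total(REQUESTS, rolled))   # 140 after the rollup
```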

62. What is business intelligence?

Ans:

Business intelligence (BI) is a wide term for a group of software applications and technologies used to collect, store, analyse, and provide access to data in order to aid corporate users in making better business choices. Applications for business intelligence (BI) can be used for decision assistance, query and reporting, OLAP, statistical analysis, forecasting, and data mining.

63. What is OLAP?

Ans:

OLAP means Online Analytical Processing. An OLAP database contains historical data, including the oldest data, that business people use for analysis. Its data comes from what has been entered in an OLTP database. OLTP means Online Transaction Processing.

64. What is the 90-day rule?

Ans:

Finish within 90 days and prove it using the delivered Business Content.

65. What is the typical setup of a BW team?

Ans:

Usually the team has a project manager, one or more functional consultants, one or more developers, one or more QA testers, and the usual Basis team.

66. What are the project phases within ASAP?

Ans:

Project preparation: Initial setup; do the conceptual review after this phase.

Business blueprint: Functional specification; do the design review after this phase.

Realization: Development; do the configuration review after this phase.

Final preparation: QA and the other final tasks before moving to production; do the performance review after this phase.

Go-live and support: Move to production and provide support.

67. What is the difference between ODS and InfoCubes?

Ans:

The differences between ODS and InfoCubes are:

- An ODS has a key, while an InfoCube does not have a key.

- An ODS contains detailed-level data, while an InfoCube contains refined data.

- While an ODS uses a flat table structure, an InfoCube uses the star schema (up to 16 dimensions).

- There can be two or more ODS objects under a cube, so the cube can contain combined data or data that is derived from other fields in the ODS.

68. Describe modelling.

Ans:

Database design is accomplished by modelling. The design of a database (DB) is determined by its schema, which is a representation of the tables and their relationships.

69. What is the use of a process chain?

Ans:

A process chain is used to automate the data load process. It automates processes such as data loads, index creation and deletion, and cube compression. Process chains are used mainly for loading data.

70. What is a MultiProvider in SAP BI?

Ans:

A MultiProvider is a type of InfoProvider that combines data from a number of InfoProviders and makes it available for reporting purposes. The MultiProvider itself does not contain any data.

A MultiProvider allows you to run reports across several InfoProviders; that is, it is used for creating reports on more than one InfoProvider at a time.
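The union behaviour of a MultiProvider can be sketched in Python: the function below stores nothing itself and only combines rows from the underlying providers at query time. The provider data and the name `multiprovider_query` are hypothetical, used purely for illustration.

```python
# Hypothetical InfoProviders, each holding its own physical rows.
SALES_2022 = [{"customer": "C1", "revenue": 10}]
SALES_2023 = [
    {"customer": "C1", "revenue": 5},
    {"customer": "C2", "revenue": 7},
]


def multiprovider_query(providers, characteristic, key_figure):
    """Union the rows of all providers and total the key figure."""
    totals = {}
    for rows in providers:
        for row in rows:
            key = row[characteristic]
            totals[key] = totals.get(key, 0) + row.get(key_figure, 0)
    return totals


if __name__ == "__main__":
    print(multiprovider_query([SALES_2022, SALES_2023], "customer", "revenue"))
```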

71. What is a translation key?

Ans:

It defines how the exchange rate is determined; the target currency can be fixed or can be determined at the time of translation.

72. What are the steps to create a DB Connect?

Ans:

- First verify that the DB connection works from the server

- AWB -> Source systems -> Create

- Select a database source system

- Enter the database user name, password, and connect string

- Generate a DataSource using transaction RSDBC

- Assign the DataSource to an InfoSource

- Maintain and activate the communication structure and transfer rules

- Create an InfoPackage and load the data

73. How do you unlock objects in the Transport Organizer?

Ans:

To unlock objects in the SAP BW Transport Organizer:

- Log in to the SAP BW system.

- Open the Transport Organizer using transaction code SE01.

- Select the relevant transport request.

- Identify and select the locked objects within the request.

- Unlock the selected objects.

- Save and confirm the changes made.

74. What SAP enhancements are available to enhance FI data?

Ans:

SAP provides tools and methods for enhancing financial data processing in its financial modules. These enhancements include user exits, BAdIs, and customer exits, allowing organizations to add custom code and logic to tailor financial processes to their specific needs. Custom fields, enhanced reporting, and validation rules further enable the customization of financial data handling, ensuring data accuracy and consistency.

75. What are the prerequisites for DB Connect?

Ans:

- The DBSL must be installed on the SAP server

- The database-specific DB client must be installed

- Table and field names must be in capital letters

76. What are the types of MultiProviders?

Ans:

Homogeneous MultiProviders: These consist of technically identical InfoProviders, such as InfoCubes with exactly the same characteristics and key figures.

Heterogeneous MultiProviders: These InfoProviders share only a certain number of characteristics and key figures. They can be used to model scenarios by dividing them into sub-scenarios, each of which is represented by its own InfoProvider.

77. What parameters are available in the user exit for enhancing master data?

Ans:

I_T_FIELDS: List of the transfer structure fields.

I_T_DATA: Internal table containing the data for the master data attributes; remember that this contains all the data.

I_UPDMODE: Update mode.

I_CHABASNM: Basic characteristic.

I_SOURCE: Name of the InfoSource.

78. What is BEx Map in SAP BI?

Ans:

BEx Map is the Geographical Information System (GIS) of BW. It is a feature of SAP BI that presents data with a geographical reference, such as customer, customer sales region, and country.

79. How do you debug a user exit?

Ans:

- Create an infinite loop on a variable (for example, x) in the exit code.

- Start the extraction in BW.

- The process in R/3 now runs in the infinite loop.

- Look for the process in SM50.

- Debug the process.

- Within the debugger, change the value of x to 1.

- You can now step through and debug the code.

80. What is BW statistics and how is it used?

Ans:

The SAP-delivered cube sets are used to measure query, data loading, and other performance metrics. BW statistics, as the name suggests, shows data about the costs associated with BW queries, OLAP, aggregated data, etc. It is useful for measuring how quickly queries are calculated or how quickly data is loaded into BW.

81. What are the data target administration tasks?

Ans:

Data target administration tasks include:

- Deleting indexes

- Generating indexes

- Constructing database statistics

- Completely deleting a data target

- Compressing InfoCubes, etc.

82. How is a query from BW used?

Ans:

The query in BW is the source for the retraction; data is retracted after a drill down using all the free characteristics (the drill down is performed internally).

83. What do the general restrictions entail?

Ans:

- Allowed only for cost-based CO-PA.

- No delta functionality, but runs can be cancelled.

- The retractor uses RSCRM_BAPI to run the query.

- Use the valuation option if you want CO-PA valuation.

84. What is a data warehousing hierarchy?

Ans:

A data aggregation hierarchy can be defined. It is the logical framework that uses ordered levels to organise data. For example, utilising a time dimension hierarchy, data may be aggregated from the month level to the quarter level to the year level.
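The month-to-quarter-to-year example can be sketched in Python. The function names and the `YYYYMM` month format are assumptions made for this illustration.

```python
from collections import defaultdict


def month_to_quarter(calmonth):
    """Map a month in YYYYMM format to its quarter, e.g. 202304 -> 2023Q2."""
    year, month = calmonth[:4], int(calmonth[4:])
    return f"{year}Q{(month - 1) // 3 + 1}"


def roll_up_time(values_by_month, level):
    """Aggregate monthly values to the 'quarter' or 'year' level."""
    totals = defaultdict(int)
    for calmonth, value in values_by_month.items():
        key = month_to_quarter(calmonth) if level == "quarter" else calmonth[:4]
        totals[key] += value
    return dict(totals)


if __name__ == "__main__":
    monthly = {"202301": 10, "202302": 20, "202304": 5}
    print(roll_up_time(monthly, "quarter"))  # {'2023Q1': 30, '2023Q2': 5}
    print(roll_up_time(monthly, "year"))     # {'2023': 35}
```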

85. What is the business blueprint stage in SAP BI?

Ans:

SAP has defined the business blueprint phase to help extract the pertinent information about a company that is necessary for implementation. The blueprints take the form of questionnaires designed to probe for information that uncovers how the company does business. As such, they also serve to document the implementation. Every business blueprint document essentially outlines the future business processes and business requirements.

86. Define DTP.

Ans:

A data transfer process (DTP) loads data within BI from one object to another, taking transformations and filters into account. In short, the DTP governs how data is transferred between two persistent objects. It is used to load data from the PSA to a data target (cube, ODS, or InfoObject), thereby replacing the data mart interface and the InfoPackage.

87. How can you decide whether query performance is slow or fast?

Ans:

- You can check this in transaction RSRT.

- Execute the query in RSRT and then follow the steps below.

- Go to SE16 and, on the resulting screen, enter the table name RSDDSTAT for BW 3.x or RSDDSTAT_DM for BI 7.0.

- Press Enter to view the details of the query, such as the time taken to execute it and the timestamps.

88. What are statistical update and document update?

Ans:

Synchronous updating (V1 update):

The statistics update is made synchronously with document update. While updating, if problems that result in termination of the statistics update occur, the original documents are NOT saved. The cause of termination should be investigated and problem solved. Subsequently, documents can be entered again.

89. What is an InfoPackage?

Ans:

An InfoPackage is used to specify how and when to load data into the BI system from various data sources. It contains all the information on how data is loaded from a source system to a DataSource or the PSA, including the conditions for requesting data from the source system.

90. What is a temporal join?

Ans:

Temporal joins are used to map periods of time. At reporting time, other InfoProviders handle time-dependent master data in such a way that the record valid for a pre-defined unique key date is always used. You can define a temporal join that contains at least one time-dependent characteristic or a pseudo time-dependent InfoProvider.
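The key-date lookup that underlies time-dependent master data can be sketched in Python. This only illustrates selecting the record valid on a given date; the field names `valid_from` and `valid_to`, the sample data, and the function `record_valid_on` are assumptions for this example, and a real temporal join evaluates validity intervals inside the InfoSet.

```python
from datetime import date

# Hypothetical time-dependent master data for one customer.
CUSTOMER_REGION = [
    {"region": "North", "valid_from": date(2023, 1, 1), "valid_to": date(2023, 6, 30)},
    {"region": "South", "valid_from": date(2023, 7, 1), "valid_to": date(9999, 12, 31)},
]


def record_valid_on(records, key_date):
    """Return the record whose validity interval contains key_date, if any."""
    for rec in records:
        if rec["valid_from"] <= key_date <= rec["valid_to"]:
            return rec
    return None


if __name__ == "__main__":
    print(record_valid_on(CUSTOMER_REGION, date(2023, 3, 15))["region"])
    print(record_valid_on(CUSTOMER_REGION, date(2024, 1, 1))["region"])
```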