- Introduction to Big Data

- Types of Big Data Analytics

- Tools Used in Analytics (Hadoop, Spark)

- Data Lifecycle Management

- Data Collection and Storage

- Real-Time vs Batch Analytics

- Applications in Industry

- Analytics Platforms and Cloud Tools

- Challenges in Implementation

- Conclusion

Introduction to Big Data

Big Data Analytics is the process of examining large and varied data sets to uncover hidden patterns, correlations, market trends, customer preferences, and other useful business information. The term “big data” refers to data that is so large, fast, or complex that it is difficult or impossible to process using traditional methods. Organizations today gather data from many sources, including social media, sensors, video and audio recordings, and transactional systems. Data Analytics Training equips professionals to handle this complexity, teaching them how to extract insights, build models, and make data-driven decisions using modern analytical tools. The goal of Big Data Analytics is to extract actionable insights from this massive volume of data and so enable better decision-making. Big Data is typically characterized by the 5Vs: Volume (amount of data), Velocity (speed at which data is generated and processed), Variety (range of data types and sources), Veracity (uncertainty and trustworthiness of data), and Value (usefulness of data).

Types of Big Data Analytics

Big Data Analytics can be broadly categorized into four types: descriptive, diagnostic, predictive, and prescriptive. Each type needs scalable storage and fast access to massive datasets. HBase, for example, supports these workloads with a column-oriented NoSQL model built on top of Hadoop HDFS. It is well suited to real-time read/write access across billions of records.

- Descriptive Analytics: This type provides insights into what has happened. It includes historical data analysis and reporting tools that allow businesses to understand trends and past behaviors.

- Diagnostic Analytics: This type digs deeper into the data to understand why something happened. It involves data mining and correlation analysis.

- Predictive Analytics: This type uses statistical models and machine learning techniques to forecast future outcomes based on historical data. Examples include sales forecasting and risk assessment.

- Prescriptive Analytics: This is the most advanced type. It not only predicts future outcomes but also recommends actions to take advantage of those predictions. It relies heavily on algorithms, simulations, and optimization techniques.

Each of these analytics types serves specific purposes and can be used independently or in combination to support business strategies.

Tools Used in Analytics (Hadoop, Spark)

Several tools are widely used to process and analyze big data. Apache Hadoop is a well-known open-source framework for the distributed processing of large data sets across clusters of computers using simple programming models. Its key components include HDFS for storage and MapReduce for processing. Apache Spark, by contrast, is a fast, in-memory data processing engine with APIs for Java, Scala, Python, and R. Spark keeps intermediate results in memory instead of writing them to disk between steps. This makes it well suited to iterative workloads such as machine learning, and it also supports stream processing.

Other important tools include Apache Flink, Apache Storm, and Apache Kafka for real-time data processing, as well as NoSQL databases such as Cassandra, MongoDB, and HBase for flexible data storage. Azure Data Lake complements this ecosystem with scalable, secure, and cost-effective storage tailored to big data workloads. This makes it easier to unify structured and unstructured data for advanced analytics. Cloud-based analytics services from AWS, Azure, and Google Cloud also provide scalable, easy-to-use options for data analysis, which makes them popular with organizations.
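The MapReduce model used by Hadoop can be illustrated without a cluster. The sketch below simulates the map, shuffle and reduce phases of a word count in a single Python process. The input splits are hypothetical. A real Hadoop job would run many mappers and reducers in parallel on different nodes.

```python
from collections import defaultdict
from itertools import chain

# Map phase: each input split independently emits (word, 1) pairs,
# the way a Hadoop mapper processes one block of a file
def map_phase(split):
    return [(word.lower(), 1) for word in split.split()]

# Shuffle phase: group emitted values by key
# (in Hadoop this moves data over the network from mappers to reducers)
def shuffle(mapped_pairs):
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups

# Reduce phase: combine all values that share a key
def reduce_phase(groups):
    return {key: sum(values) for key, values in groups.items()}

# Hypothetical input, pre-split as if stored in two separate HDFS blocks
splits = ["big data needs big tools", "spark and hadoop process big data"]
mapped = chain.from_iterable(map_phase(s) for s in splits)
counts = reduce_phase(shuffle(mapped))
print(counts["big"], counts["data"])  # 3 2
```

In Spark, the same computation is commonly written with RDD operations such as `flatMap`, `map` and `reduceByKey`. Spark keeps intermediate data in memory where possible.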

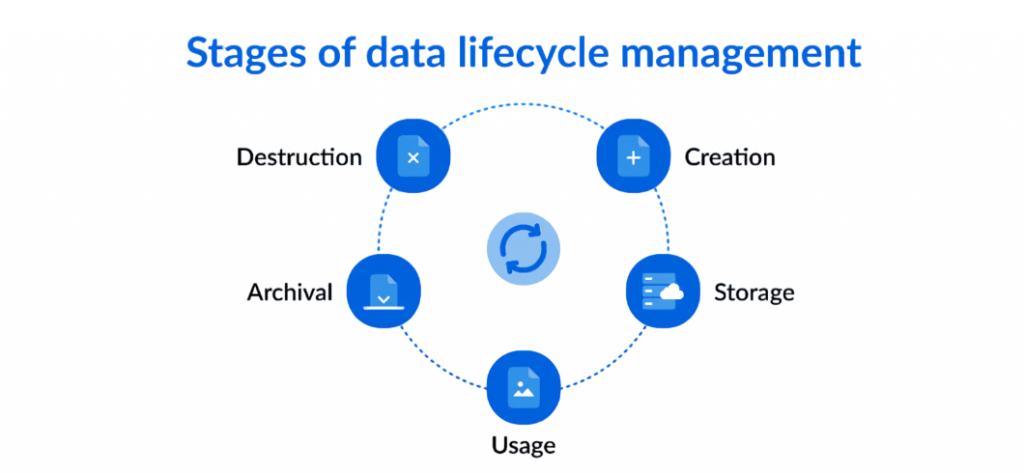

Data Lifecycle Management

Data Lifecycle Management in big data analytics covers several stages: data generation, collection, storage, processing, analysis, visualization, and finally archival or deletion. Each stage relies on its own tools. In the processing stage, for example, Splunk's rex command extracts fields from unstructured log data using regular expressions. This makes raw logs and metrics easier to parse and enrich for later analysis.

- Data Generation: Data is produced through sensors, applications, web logs, social media, etc.

- Data Collection: Tools like Flume, Sqoop, and Kafka are used to gather data.

- Data Storage: Data is stored in distributed file systems like HDFS or cloud storage systems.

- Data Processing: Data is transformed and cleansed for analysis using tools like Spark and Hive.

- Data Analysis: Statistical and ML models are applied to derive insights.

- Data Visualization: Tools like Tableau, Power BI, or Kibana help in presenting the insights visually.

- Data Archival/Deletion: Old data is archived or deleted based on relevance and compliance needs.

Effective Data Lifecycle Management ensures the integrity, security, and usability of data over time.
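The lifecycle stages can be sketched as a small Python pipeline. This is an illustrative assumption, not a production design. The log format, field names and 30-day retention window are hypothetical, and the storage and visualization stages are left out. The named-group regular expression only mimics the kind of field extraction that Splunk's rex command performs.

```python
import re
from collections import Counter
from datetime import datetime, timedelta

# Generation and collection: hypothetical raw web-server log lines
RAW_LOGS = [
    "2024-05-01T10:00:00 status=200 path=/home",
    "2024-05-01T10:00:05 status=500 path=/checkout",
    "garbled line without fields",
    "2024-03-01T09:00:00 status=200 path=/home",
]

# Processing: named groups pull out fields, similar in spirit to Splunk's rex
LOG_PATTERN = re.compile(r"(?P<ts>\S+) status=(?P<status>\d{3}) path=(?P<path>\S+)")

def parse(lines):
    """Extract structured records and drop lines that do not match the pattern."""
    records = []
    for line in lines:
        match = LOG_PATTERN.fullmatch(line)
        if match is None:  # cleansing step: skip malformed input
            continue
        fields = match.groupdict()
        records.append({
            "ts": datetime.fromisoformat(fields["ts"]),
            "status": int(fields["status"]),
            "path": fields["path"],
        })
    return records

# Analysis: count server errors (5xx) per path
def errors_by_path(records):
    return Counter(r["path"] for r in records if r["status"] >= 500)

# Archival/deletion: separate records older than a hypothetical retention window
def apply_retention(records, now, days=30):
    cutoff = now - timedelta(days=days)
    keep = [r for r in records if r["ts"] >= cutoff]
    archive = [r for r in records if r["ts"] < cutoff]
    return keep, archive

records = parse(RAW_LOGS)
keep, archive = apply_retention(records, now=datetime(2024, 5, 2))
print(errors_by_path(records))  # Counter({'/checkout': 1})
print(len(keep), len(archive))  # 2 1
```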

Data Collection and Storage

Data is collected from structured sources (such as RDBMS), semi-structured sources (such as XML and JSON), and unstructured sources (such as images, videos, and social media content). Once collected, the data is stored in distributed systems and cloud-based platforms designed for scalability and speed. Data Analytics Training equips learners to navigate this complexity, teaching them how to manage diverse data formats and apply analytical techniques across modern storage architectures.

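- HDFS: The Hadoop Distributed File System splits files into large blocks and replicates each block across several nodes (three copies by default). This makes it highly fault-tolerant.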

- NoSQL Databases: Used for storing semi-structured and unstructured data. Examples include MongoDB and Cassandra.

- Cloud Storage: Services like Amazon S3, Google Cloud Storage, and Azure Blob Storage offer scalable storage solutions.

Scalable storage solutions are crucial to accommodate the growing volume of data in big data environments.
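One way to show how HDFS achieves fault tolerance is to plan block placement for a single file. The sketch below assumes the HDFS defaults of a 128 MB block size and a replication factor of 3. It places replicas round-robin across hypothetical node names. Real HDFS uses a rack-aware placement policy, so treat this as a model of the idea rather than of the actual algorithm.

```python
import math

BLOCK_SIZE_MB = 128  # HDFS default block size (dfs.blocksize) in Hadoop 2.x and later
REPLICATION = 3      # HDFS default replication factor (dfs.replication)

def plan_blocks(file_size_mb, nodes, block_size=BLOCK_SIZE_MB, replication=REPLICATION):
    """Split a file into fixed-size blocks and give each block `replication` distinct nodes.

    Round-robin placement is a simplification of HDFS's rack-aware policy.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = math.ceil(file_size_mb / block_size)
    return [
        (block_id, [nodes[(block_id + i) % len(nodes)] for i in range(replication)])
        for block_id in range(num_blocks)
    ]

def blocks_lost(plan, failed_nodes):
    """Return ids of blocks whose replicas all sit on failed nodes."""
    failed = set(failed_nodes)
    return [block_id for block_id, replicas in plan if set(replicas) <= failed]

nodes = ["node1", "node2", "node3", "node4"]  # hypothetical DataNodes
plan = plan_blocks(300, nodes)  # a 300 MB file needs 3 blocks of up to 128 MB
for block_id, replicas in plan:
    print(block_id, replicas)
print(blocks_lost(plan, ["node1"]))  # [], a single node failure loses no data
print(blocks_lost(plan, ["node1", "node2", "node3"]))  # [0]
```

Replication is also why raw disk usage is roughly three times the logical size of the data.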

Real-Time vs Batch Analytics

- Batch Analytics: Processes data in large blocks at scheduled times. It’s ideal for processing historical data where real-time analysis is not necessary. Hadoop is commonly used for batch processing.

- Real-Time Analytics: Processes data as it arrives, enabling immediate insights. Apache Kafka, Apache Storm, and Apache Flink are widely used for streaming and real-time analytics.

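Choosing between real-time and batch analytics depends on business requirements, chiefly how quickly results must be available. Real-time analytics is vital for applications such as fraud detection and recommendation engines. Batch processing suits periodic reporting on historical data.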
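The difference between the two modes can be sketched by computing the same aggregation both ways. The Python below counts hypothetical click events per 60-second tumbling window. The batch version waits for the complete dataset. The streaming version updates its state per event and can publish each window's count as soon as that window closes. The sketch assumes events arrive in timestamp order. Streaming engines such as Flink use watermarks to handle late or out-of-order events, which this sketch does not attempt.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # hypothetical tumbling-window size

# Hypothetical click events: (epoch_seconds, user_id), already in time order
events = [(0, "a"), (15, "b"), (59, "a"), (61, "c"), (130, "a"), (150, "b")]

def batch_counts(all_events, window=WINDOW_SECONDS):
    """Batch: aggregate the whole dataset in one pass once it is complete."""
    counts = defaultdict(int)
    for ts, _ in all_events:
        counts[ts // window] += 1
    return dict(counts)

class StreamingCounter:
    """Streaming: update state per event and emit a window's count when it closes."""

    def __init__(self, window=WINDOW_SECONDS):
        self.window = window
        self.current = None  # id of the window currently being filled
        self.count = 0
        self.emitted = {}    # results available before the stream ends

    def on_event(self, ts):
        bucket = ts // self.window
        if self.current is not None and bucket != self.current:
            self.emitted[self.current] = self.count  # previous window is final
            self.count = 0
        self.current = bucket
        self.count += 1

    def flush(self):
        """Emit the still-open window, e.g. when the stream shuts down."""
        if self.current is not None:
            self.emitted[self.current] = self.count
        return self.emitted

stream = StreamingCounter()
for ts, _ in events:
    stream.on_event(ts)
print(batch_counts(events))  # {0: 3, 1: 1, 2: 2}
print(stream.flush() == batch_counts(events))  # True
```

Both approaches reach the same totals. The streaming version has already published windows 0 and 1 while later events are still arriving, and that lower latency is what fraud detection depends on.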

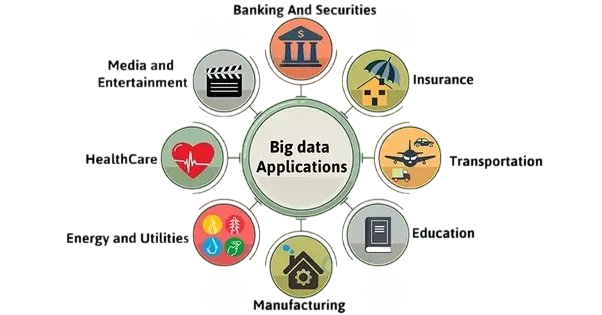

Applications in Industry

Big Data Analytics is having an impact across many industries. In retail, it helps businesses provide personalized recommendations based on customer behavior, manage inventory more efficiently, and analyze customer sentiment to improve services. In healthcare, predictive analytics can improve patient outcomes by identifying risks early, and it can speed up drug discovery. Financial institutions use big data to detect fraud, support algorithmic trading, and manage risk.

The manufacturing sector benefits from predictive maintenance, which reduces downtime, as well as from quality control and improved supply chains. These industries depend on data pipelines that connect ingestion, transformation, and delivery stages so that insights arrive in time to act on. Telecommunications companies apply analytics to optimize networks and understand customer churn, which supports targeted marketing strategies. In government, big data drives smart city initiatives, enhances public safety, and helps identify tax fraud. These examples show how versatile big data analytics is across sectors and how it is changing the way industries operate and deliver value.

Analytics Platforms and Cloud Tools

Cloud platforms provide end-to-end big data solutions:

- Amazon Web Services (AWS): Offers services like Amazon EMR, Redshift, and QuickSight.

- Google Cloud Platform (GCP): Tools include BigQuery, Dataflow, and AI Platform (now Vertex AI).

- Microsoft Azure: Features Azure Synapse Analytics, HDInsight, and Power BI.

These platforms offer scalability, flexibility, and managed services, enabling businesses to focus on insights rather than infrastructure.

Challenges in Implementation

Implementing Big Data Analytics comes with several challenges. These range from managing data volume and ensuring real-time processing to maintaining data quality and integrating diverse tools across the stack. Vendor documentation can help with specific hurdles. Splunk's documentation, for instance, covers configuration, deduplication techniques, and best practices for scalable log analysis.

- Data Quality: Inconsistent, incomplete, or inaccurate data leads to poor insights.

- Integration: Combining data from various sources can be complex.

- Security & Privacy: Protecting sensitive data against breaches is critical.

- Scalability: Systems must handle growing data volumes efficiently.

- Skill Gaps: There is a shortage of professionals skilled in big data technologies.

- Cost Management: Maintaining big data infrastructure can be expensive.

Overcoming these challenges requires a well-thought-out strategy and investment in the right tools and people.
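Of these challenges, data quality is the easiest to illustrate. The sketch below profiles a few hypothetical customer records for missing values, out-of-range ages, malformed email addresses and exact duplicates. The field names, the 0 to 120 age range and the deliberately loose email pattern are assumptions made for this example. Real pipelines usually encode such rules in a dedicated validation step.

```python
import re

# Hypothetical customer records combined from two source systems
records = [
    {"id": 1, "email": "ann@example.com", "age": 34},
    {"id": 2, "email": None, "age": 29},
    {"id": 3, "email": "bob@example", "age": -5},
    {"id": 1, "email": "ann@example.com", "age": 34},
]

REQUIRED_FIELDS = ("id", "email", "age")
# Deliberately loose check (an assumption): text@text.text with no spaces
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

def quality_report(rows):
    # Completeness: required fields that are absent or None
    missing = sum(1 for r in rows for f in REQUIRED_FIELDS if r.get(f) is None)
    # Validity: values outside an assumed plausible range or format
    invalid_age = sum(
        1 for r in rows if r.get("age") is not None and not 0 <= r["age"] <= 120
    )
    invalid_email = sum(
        1 for r in rows
        if r.get("email") is not None and not EMAIL_PATTERN.fullmatch(r["email"])
    )
    # Uniqueness: records identical to one seen earlier in the data
    seen, duplicates = set(), 0
    for r in rows:
        key = tuple(sorted(r.items()))
        if key in seen:
            duplicates += 1
        seen.add(key)
    return {
        "rows": len(rows),
        "missing_values": missing,
        "invalid_age": invalid_age,
        "invalid_email": invalid_email,
        "duplicates": duplicates,
    }

print(quality_report(records))
```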

Conclusion

Big Data Analytics helps organizations understand large and complex data sets. By examining this data, businesses can find valuable insights that lead to better decisions, more efficient operations, and ongoing innovation. With the growth of cloud computing, artificial intelligence, and real-time data processing, Big Data Analytics is expected to expand quickly. Companies that adopt these methods can gain a significant edge over their rivals. Data Analytics Training prepares professionals to harness these technologies, equipping them with the skills to build scalable solutions, interpret complex datasets, and drive innovation across industries. The ability to turn data into useful insights allows organizations to react swiftly to market shifts, anticipate customer needs, and streamline their processes. Ultimately, using Big Data Analytics effectively can boost performance and growth, making it an essential tool for any organization aiming to succeed in a data-driven world.