- Introduction: Why Combine R with Hadoop?

- Understanding R and Its Limitations with Big Data

- Introduction to Hadoop and Its Strengths

- Why Integrate R with Hadoop? Key Benefits

- Popular Tools for R-Hadoop Integration

- Setting Up R with Hadoop Ecosystem: A Step-by-Step Guide

- Working with RHadoop: Practical Workflow

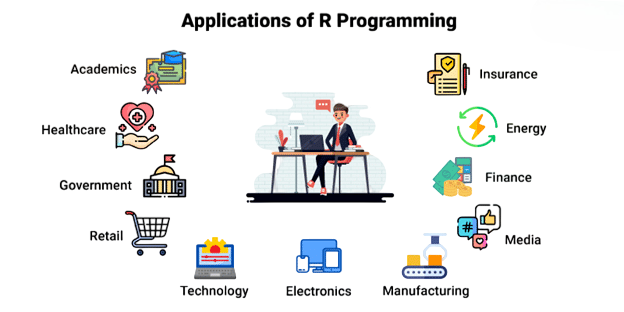

- Use Cases and Real-World Applications

- Conclusion: Is R-Hadoop Integration Right for You?

Introduction: Why Combine R with Hadoop?

R integration with Hadoop offers a practical approach to modern data challenges. R is one of the most popular languages for statistical computing, data analysis, and visualization, and it is a go-to tool for statisticians, researchers, and data scientists. However, R struggles to scale, especially when data reaches terabytes or petabytes. That is where Hadoop, the open-source framework for distributed data processing, comes in. Integrating R with Hadoop combines R's analytical power with Hadoop's distributed storage and computation. The result supports scalable analytics, parallel computation, and efficient handling of massive datasets in data science and enterprise analytics.

Understanding R and Its Limitations with Big Data

R is a statistical programming language widely praised for its visualization tools, its huge CRAN package library, and its strong community, all of which make it well suited to data exploration and modeling. It still struggles with very large datasets, however. R works on in-memory data structures, so a dataset normally has to fit in main memory (RAM) before it can be analyzed. Base R also runs single-threaded by default: the bundled parallel package can use several cores on one machine, but there is no built-in way to distribute work across a cluster. R handles medium-sized analytics very well, but these limitations become obvious in Big Data environments, so scaling further requires integrating R with a distributed platform.
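The memory limit described above is commonly worked around on a single machine by processing data in chunks, so that only part of a file sits in RAM at once; MapReduce generalizes the same idea across many machines. The base-R sketch below computes a mean chunk by chunk. The function name `chunked_mean` and the one-number-per-line file layout are assumptions made for illustration.

```r
# Sketch: compute the mean of a one-number-per-line file while holding
# at most `chunk_size` lines in memory at a time.
chunked_mean <- function(path, chunk_size = 1000L) {
  con <- file(path, open = "r")
  on.exit(close(con))
  total <- 0  # running sum across chunks
  n <- 0      # running count across chunks
  repeat {
    chunk <- readLines(con, n = chunk_size)  # next block of lines from the open connection
    if (length(chunk) == 0L) break           # end of file
    x <- as.numeric(chunk)
    total <- total + sum(x)
    n <- n + length(x)
  }
  total / n
}

# Usage: write 10,000 numbers to a temporary file and average them chunk by chunk.
path <- tempfile(fileext = ".txt")
writeLines(as.character(1:10000), path)
chunked_mean(path, chunk_size = 1000L)  # 5000.5
```

Because only running totals are kept, memory use depends on `chunk_size` rather than on file size.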


Introduction to Hadoop and Its Strengths

Apache Hadoop is an open-source framework designed for reliable, scalable, distributed computing. It stores and processes massive datasets across clusters of commodity hardware.

Key Components:

- HDFS (Hadoop Distributed File System): Fault-tolerant storage

- MapReduce: Distributed processing model

- YARN: Resource management

- Hive, Pig, HBase: Ecosystem tools for SQL-style querying (Hive), data-flow scripting (Pig), and NoSQL storage (HBase)

Hadoop is ideal for batch processing of large volumes of data. Integrating it with R enables analysts to apply advanced statistical models to Big Data without leaving their preferred R environment.
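To make the MapReduce model concrete, the sketch below emulates its three phases (map, shuffle, reduce) in plain R on one machine, using word count as the example. It is not Hadoop or rmr2 code: `local_mapreduce`, `word_map`, and `word_reduce` are hypothetical helpers that only mirror the data flow Hadoop distributes across a cluster.

```r
# Hypothetical local emulation of the MapReduce model: map -> shuffle -> reduce.
# Not Hadoop or rmr2 code; it only mirrors the data flow on one machine.
local_mapreduce <- function(records, map_fn, reduce_fn) {
  # Map: each record yields list(key = ..., value = ...) of equal-length vectors
  mapped <- lapply(records, map_fn)
  keys <- unlist(lapply(mapped, `[[`, "key"))
  values <- unlist(lapply(mapped, `[[`, "value"))
  # Shuffle: group all values that share a key (Hadoop does this between phases)
  groups <- split(values, keys)
  # Reduce: apply reduce_fn to each key's values, returning a named vector
  vapply(names(groups), function(k) reduce_fn(k, groups[[k]]), numeric(1))
}

# Word count: the mapper emits (word, 1); the reducer sums the 1s per word.
word_map <- function(line) {
  words <- strsplit(line, " ", fixed = TRUE)[[1]]
  list(key = words, value = rep(1, length(words)))
}
word_reduce <- function(key, counts) sum(counts)

docs <- c("big data with r", "r with hadoop", "big clusters")
local_mapreduce(docs, word_map, word_reduce)
```

On a real cluster, the map calls run in parallel on the nodes holding each HDFS block, and the framework performs the shuffle before handing each key's values to a reducer.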


Why Integrate R with Hadoop? Key Benefits

Combining R with Hadoop brings together the best of both worlds: R's analytical strength and Hadoop's distributed processing.

Benefits:

- Scalability: Analyze datasets larger than memory limits

- Efficiency: Parallel processing via MapReduce

- Cost-effective: Use commodity hardware to scale

- Advanced Analytics: Apply R’s statistical models on large data stored in HDFS

- Seamless Workflow: Continue using familiar R syntax and functions

With this integration, organizations can perform deep statistical analysis at scale, enabling smarter business decisions and more powerful machine learning.
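The efficiency benefit comes from splitting data into independent pieces, processing them in parallel, and combining the partial results. As a single-machine analogue, the sketch below uses R's bundled `parallel` package (`mclapply`) to sum squares over chunks; the function name `parallel_sum_of_squares` and the chunk count are illustrative assumptions. `mclapply` relies on forking, which Windows does not support, so the sketch falls back to one core there.

```r
library(parallel)

# Split a vector into chunks, summarize each chunk in parallel ("map"),
# then combine the partial results ("reduce").
parallel_sum_of_squares <- function(x, n_chunks = 4L) {
  chunks <- split(x, cut(seq_along(x), n_chunks, labels = FALSE))
  cores <- if (.Platform$OS.type == "windows") 1L else 2L  # no forking on Windows
  partials <- mclapply(chunks, function(chunk) sum(chunk^2), mc.cores = cores)
  Reduce(`+`, partials)
}

x <- as.numeric(1:1000)
parallel_sum_of_squares(x)  # 333833500, the same as sum(x^2)
```

Hadoop applies the same split, process, and combine pattern, but across many machines and to data that never has to fit on any one of them.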

Popular Tools for R-Hadoop Integration

R users who want to tap big data processing power can choose from several open-source projects that integrate R with the Hadoop ecosystem. RHadoop, developed by Revolution Analytics, is a suite of packages: rmr2 for writing MapReduce jobs, rhdfs for accessing HDFS directly, rhbase for connecting to HBase, and plyrmr for data manipulation. RHIPE offers another route, using Protocol Buffers for data serialization, but its configuration is more complicated. SparkR, the R interface to Apache Spark, is a strong alternative for scalable data processing. Finally, H2O's machine learning tools can run directly on Hadoop clusters, so R users can launch distributed machine learning jobs with little extra effort.


Setting Up R with Hadoop Ecosystem: A Step-by-Step Guide

Setting up R to work with Hadoop is not trivial, and a few prerequisites must be in place first. A Java JDK must be installed, because Hadoop itself runs on the JVM. Hadoop must be correctly installed and configured so that HDFS and MapReduce are available for data processing. Finally, R must be installed together with the development libraries needed to build the integration packages.
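Before installing the RHadoop packages, it can help to confirm the prerequisites from inside R. The sketch below checks whether a `java` executable is on the `PATH` and whether the `HADOOP_CMD` and `HADOOP_STREAMING` environment variables, which rhdfs and rmr2 use to locate the Hadoop binary and the Hadoop Streaming JAR, are set. The function name `check_rhadoop_prereqs` is hypothetical, and the check only confirms that the values exist, not that they point to working installations.

```r
# Preflight check: report which RHadoop prerequisites appear to be present.
check_rhadoop_prereqs <- function() {
  c(
    java             = nzchar(unname(Sys.which("java"))),  # java found on the PATH?
    hadoop_cmd       = nzchar(Sys.getenv("HADOOP_CMD")),        # used by rhdfs and rmr2
    hadoop_streaming = nzchar(Sys.getenv("HADOOP_STREAMING"))   # used by rmr2
  )
}

status <- check_rhadoop_prereqs()
print(status)
if (!all(status)) {
  message("Missing prerequisites: ", paste(names(status)[!status], collapse = ", "))
}
```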

Step-by-Step Setup:

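- Install R and its development headers (shell): `sudo apt install r-base r-base-dev`

- In R, install devtools: `install.packages("devtools")`

- Load devtools: `library(devtools)`

- Install rmr2 from GitHub: `install_github("RevolutionAnalytics/rmr2")`

- Install rhdfs from GitHub: `install_github("RevolutionAnalytics/rhdfs")`

- Point the packages at your Hadoop installation before loading them (placeholder paths; adjust to your system): `Sys.setenv(HADOOP_CMD = "/path/to/hadoop/bin/hadoop")` and `Sys.setenv(HADOOP_STREAMING = "/path/to/hadoop-streaming.jar")`

- Load rhdfs: `library(rhdfs)`

- Initialize the connection to HDFS: `hdfs.init()`

- Load rmr2: `library(rmr2)`

- Define the mapper, which emits each line's length as the key and 1 as the value: `map <- function(k, v) keyval(nchar(v), 1)`

- Define the reducer, which sums the 1s for each length: `reduce <- function(k, v) keyval(k, sum(v))`

- Run the job on a plain-text file in HDFS: `mapreduce(input = "/user/hadoop/input.txt", input.format = "text", output = "/user/hadoop/output", map = map, reduce = reduce)`

- Inspect the output files (shell): `hdfs dfs -cat /user/hadoop/output/part-00000`. rmr2 writes its binary native format by default, so reading the results back into R with `from.dfs("/user/hadoop/output")` is often easier.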

This simple example uses rmr2's MapReduce API to count how many lines of each length the input file contains. Once it runs, you can scale up to more complex analytical tasks.
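Before submitting a job to the cluster, it is useful to know what its output should look like. The base-R sketch below reproduces the same key/value logic (key = line length, value = 1, summed per key) on a small in-memory vector, so the result can be compared against the job's output. The helper name `count_lines_by_length` and the sample lines are hypothetical. rmr2 also offers a local backend for this kind of testing, enabled with `rmr.options(backend = "local")`.

```r
# Local stand-in for the rmr2 job: for each line length (the key),
# count how many lines have that length (the reducer's sum of 1s).
count_lines_by_length <- function(lines) {
  keys <- nchar(lines)                 # map: key = line length
  values <- rep(1, length(lines))      # map: value = 1 per line
  counts <- tapply(values, keys, sum)  # shuffle + reduce: sum values per key
  data.frame(length = as.integer(names(counts)), lines = as.vector(counts))
}

sample_lines <- c("hadoop", "r", "mapreduce", "hdfs", "yarn", "spark")
count_lines_by_length(sample_lines)
```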


Working with RHadoop: Practical Workflow

With RHadoop, you can follow a typical Big Data analysis workflow: ingesting, processing, and visualizing large-scale datasets, combining R's statistical capabilities with Hadoop's distributed architecture. Apache Pig, a high-level scripting platform for Hadoop, can also help express complex data flows before the data reaches R.

- Data Ingestion: Use Flume or Sqoop to import data from logs or relational databases into HDFS.

- Data Preprocessing: Use R functions to clean and structure data on HDFS using mapreduce() with custom mappers and reducers.

- Exploratory Analysis: Perform distributed descriptive statistics or sampling using MapReduce.

- Model Building: Apply machine learning models like linear regression, k-means clustering, or decision trees.

- Visualization and Reporting: Pull result sets into RStudio or export them to dashboards (Shiny, Power BI, etc.).

This workflow lets analysts keep their familiar R workflows while running them on data volumes far too large for a single machine's memory.
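Model building on Hadoop typically means recasting an algorithm as repeated map and reduce passes. As an illustration, the sketch below writes one k-means iteration that way in plain R: the map step assigns each point to its nearest centroid (the key), and the reduce step averages the points sharing a key to produce updated centroids. `kmeans_step` is a hypothetical local sketch, not an rmr2 or RHadoop API; on a cluster, each iteration would be a separate MapReduce job, repeated until the centroids stop moving.

```r
# Local sketch of one k-means iteration expressed as map and reduce steps.
kmeans_step <- function(pts, centroids) {
  n <- nrow(pts)
  # Squared Euclidean distance from every point (rows) to every centroid (columns)
  d <- matrix(
    vapply(seq_len(nrow(centroids)), function(j) {
      diffs <- sweep(pts, 2, centroids[j, ])  # subtract centroid j from each row
      rowSums(diffs^2)
    }, numeric(n)),
    nrow = n
  )
  # Map: key each point by the index of its nearest centroid
  keys <- max.col(-d, ties.method = "first")
  # Reduce: each new centroid is the mean of the points sharing its key;
  # centroids that received no points keep their previous position
  new_centroids <- centroids
  for (k in unique(keys)) {
    new_centroids[k, ] <- colMeans(pts[keys == k, , drop = FALSE])
  }
  new_centroids
}

pts <- rbind(c(0, 0), c(0, 1), c(10, 10), c(10, 11))
init_centroids <- rbind(c(1, 1), c(9, 9))
kmeans_step(pts, init_centroids)  # new centroids: (0, 0.5) and (10, 10.5)
```

For data that fits in memory, base R's `kmeans()` is the simpler choice.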

Use Cases and Real-World Applications

- Telecom Churn Analysis: Telecom providers can analyze millions of call records stored in HDFS with RHadoop, applying logistic regression and decision trees in R to predict churn.

- Retail Recommendation Engines: Retailers can use RHadoop to run association rule mining and collaborative filtering over massive purchase logs.

- Banking Fraud Detection: Banks can use SparkR to detect anomalous transactions in near real time, combining R's statistical packages with Spark's scalability.

- Genomic Data Analysis: Biotech firms working with petabytes of sequencing data can use R with Hadoop to run distributed statistical tests.

These examples illustrate how R-Hadoop integration can make data science scalable across industries.

Conclusion: Is R-Hadoop Integration Right for You?

R integration with Hadoop gives data scientists and researchers a powerful way to take their analytics further, especially with large datasets. Using tools such as RHadoop, RHIPE, and SparkR, organizations can bridge the gap between data science and data engineering, distributing processing without giving up R's statistical capabilities. This approach effectively turns R into an enterprise Big Data tool, letting teams build scalable, statistically sound data pipelines for complex analytical problems. For organizations that have already invested in Hadoop infrastructure, the integration delivers advanced and efficient analysis workflows despite some initial technical complexity, helping teams draw enterprise-level insight from their data.