- Introduction: The Rise of Big Data Careers

- Who is a Big Data Hadoop Professional?

- Key Job Roles in the Hadoop Ecosystem

- General Responsibilities Across All Hadoop Roles

- Responsibilities of a Hadoop Developer

- Responsibilities of a Hadoop Administrator

- Responsibilities of a Data Engineer Using Hadoop

- Essential Technical Skills for Hadoop Professionals

- Conclusion

Introduction: The Rise of Big Data Careers

In the digital age, data is the new fuel powering everything from predictive analytics to AI-driven recommendations. Organizations generate massive datasets from social media, IoT devices, customer interactions, and enterprise systems, and they increasingly rely on data-driven insights to make informed decisions. As a result, demand has soared for professionals who can store, manage, and analyze this information, with skills spanning data analysis, machine learning, and data engineering. This demand has created a surge in Big Data job opportunities, and Hadoop remains at the heart of this shift. Apache Hadoop, a robust open-source framework for distributed storage and processing of big data, continues to play a critical role in enterprise data architecture. Companies therefore actively seek Big Data Hadoop professionals to help them derive insights, optimize operations, and maintain a competitive advantage.

Who is a Big Data Hadoop Professional?

A Big Data Hadoop professional specializes in using Apache Hadoop and its ecosystem components to collect, store, process, and analyze large volumes of data. Hadoop is an open-source framework that enables distributed storage and processing of big data across clusters of computers. These professionals may wear different hats, working as developers, administrators, or architects.

These professionals work with tools like HDFS (Hadoop Distributed File System), MapReduce, Hive, Pig, and HBase to handle complex data tasks efficiently. They design data pipelines, ensure data quality, and optimize system performance. Big Data Hadoop professionals are essential in industries that rely on large-scale data analytics, helping organizations gain valuable insights and make data-driven decisions that improve operations and competitiveness.


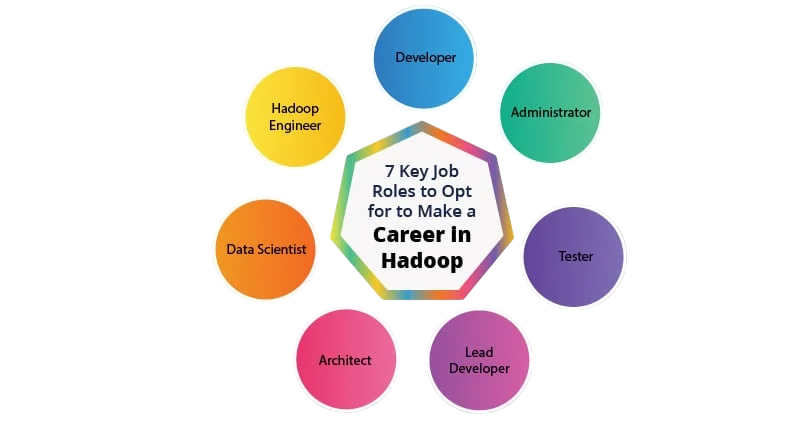

Key Job Roles in the Hadoop Ecosystem

There isn’t a one-size-fits-all role when it comes to Hadoop. Depending on the size of the organization and the maturity of its data infrastructure, a Hadoop professional may specialize in one or more areas.

Primary Job Roles:

- Hadoop Developer

- Hadoop Administrator

- Big Data Engineer

- ETL/Data Pipeline Engineer

- Hadoop Architect

- Data Analyst (with Hadoop background)

- Machine Learning Engineer (Hadoop-supported workflows)

Each of these roles involves a unique blend of responsibilities and skills, though they all work in concert to handle the data lifecycle within a Hadoop ecosystem.


General Responsibilities Across All Hadoop Roles

- Maintain and manage large-scale data processing workflows.

- Work with massive datasets using distributed systems.

- Ensure data quality, reliability, and scalability.

- Collaborate with data scientists, analysts, and business stakeholders.

- Stay updated with evolving tools like Hive, Spark, HBase, and more.

- Ensure compliance with data governance and security standards.

These foundational responsibilities are crucial to keeping a data-driven organization agile, competitive, and insight-ready.

Responsibilities of a Hadoop Developer

A Hadoop Developer designs, develops, and implements big data applications that run on top of the Hadoop ecosystem. Their primary role is writing scalable, high-performance code to ingest, transform, process, and analyze large datasets using distributed frameworks and tools like MapReduce, Hive, Pig, and Spark. They build data processing pipelines, integrate data from various sources, and ensure efficient data storage in HDFS. Hadoop Developers also work closely with data scientists and analysts to understand data requirements and translate them into technical solutions. In addition, they optimize job performance, debug issues in data workflows, and help ensure data security and reliability within the Hadoop framework. A Hadoop Developer must be comfortable with programming languages (especially Java, Scala, or Python) and should understand how data flows through distributed systems.
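The map, shuffle, and reduce model that Hadoop Developers write code against is often introduced with the classic word-count example. The sketch below is a plain-Python, single-process simulation for illustration only: it uses no Hadoop APIs, and the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical. On a real cluster, the mapper and reducer would typically be written in Java (or run through Hadoop Streaming), and the framework itself would distribute the work and perform the shuffle across nodes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit a (word, 1) pair for every lowercased word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle/sort: group all emitted values by key, sorted by key.

    In Hadoop this step is done by the framework between mappers and reducers;
    here it is simulated in memory.
    """
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(sorted(grouped.items()))


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: combine all values for one key into a single total."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map -> shuffle -> reduce sequentially in a single process."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    sample = ["Hadoop stores data", "Hadoop processes data in parallel"]
    print(word_count(sample))
```

Because each mapper call only sees one line and each reducer call only sees one key's values, the same logic can be spread across many machines; that independence is what lets Hadoop parallelize the work.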


Responsibilities of a Hadoop Administrator

A Hadoop Administrator manages the infrastructure and operations of the Hadoop cluster, ensuring that the system is highly available, secure, and performant. Administrators are responsible for installing, configuring, and maintaining the Hadoop ecosystem in an organization. Their key duties include managing Hadoop clusters and performing regular health checks to monitor performance and reliability.

They handle tasks such as cluster setup, node addition or removal, and configuring Hadoop services like HDFS, YARN, Hive, and HBase. Hadoop Administrators also manage data backup and recovery, implement security measures, and troubleshoot hardware and software issues. Additionally, they optimize cluster performance, manage resource allocation, and work with developers to ensure smooth data processing workflows within the Hadoop environment.
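Much of an administrator's capacity planning, such as deciding when to add nodes, comes down to HDFS block and replication arithmetic. The sketch below assumes the commonly used defaults of a 128 MB block size and a replication factor of 3 (configurable through `dfs.blocksize` and `dfs.replication`). The 25% free-space headroom and all helper names are hypothetical planning choices, not Hadoop settings.

```python
MB = 1024 * 1024
GB = 1024 * MB
TB = 1024 * GB

# Assumed values: 128 MB blocks and 3 replicas are common HDFS defaults,
# but a given cluster may be configured differently.
BLOCK_SIZE = 128 * MB
REPLICATION = 3


def block_count(file_size: int, block_size: int = BLOCK_SIZE) -> int:
    """Number of HDFS blocks a file of `file_size` bytes is split into (integer ceiling)."""
    return (file_size + block_size - 1) // block_size


def raw_storage(file_size: int, replication: int = REPLICATION) -> int:
    """Disk space consumed across the cluster: every byte is stored `replication` times.

    The last, partially filled block only occupies its actual size on disk,
    so no padding to a full block is added here.
    """
    return file_size * replication


def usable_capacity(nodes: int, disk_per_node: int,
                    replication: int = REPLICATION, headroom: float = 0.25) -> int:
    """Rough logical capacity after reserving a hypothetical free-space headroom
    and dividing the remaining raw disk by the replication factor."""
    raw = nodes * disk_per_node
    return int(raw * (1 - headroom)) // replication


if __name__ == "__main__":
    print(block_count(1 * GB))                  # a 1 GB file splits into 8 blocks
    print(raw_storage(1 * GB) // GB)            # and consumes 3 GB of raw disk
    print(usable_capacity(10, 48 * TB) // TB)   # 10 nodes x 48 TB -> about 120 TB usable
```

Under these assumptions, a 10-node cluster with 48 TB of disk per node offers roughly 120 TB for data, so an administrator would plan to add nodes well before the logical data volume approaches that figure.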


Responsibilities of a Data Engineer Using Hadoop

- Design and build scalable data pipelines using Hadoop tools.

- Ingest, clean, and transform large datasets from various sources.

- Use Hadoop components like HDFS, MapReduce, Hive, Pig, and Spark for data processing.

- Ensure data quality, consistency, and integrity throughout the pipeline.

- Optimize data workflows for performance and cost-efficiency.

- Collaborate with data scientists, analysts, and other stakeholders to understand data requirements.

- Monitor, troubleshoot, and resolve data pipeline and job failures.

- Automate repetitive data tasks to improve system efficiency.

- Implement data security and compliance measures.

- Maintain documentation for data processes and system architecture.
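The ingest, clean, and transform responsibilities above follow a common pattern. The plain-Python sketch below illustrates it on a tiny in-memory CSV. The column names (`id`, `country`, `amount`), the validation rules, and the function names are hypothetical. In a Hadoop environment, the same steps would typically be expressed as Hive queries, Spark jobs, or MapReduce code running over data stored in HDFS.

```python
import csv
import io
from typing import Dict, List, Union

Record = Dict[str, Union[str, float]]


def ingest(raw_csv: str) -> List[Dict[str, str]]:
    """Ingest: parse raw CSV text into one dict per row, keyed by the header."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def clean(rows: List[Dict[str, str]]) -> List[Record]:
    """Clean: skip rows with a missing or duplicate id or a non-numeric amount."""
    seen_ids = set()
    cleaned: List[Record] = []
    for row in rows:
        record_id = (row.get("id") or "").strip()
        if not record_id or record_id in seen_ids:
            continue  # missing or duplicate key
        try:
            amount = float((row.get("amount") or "").strip())
        except ValueError:
            continue  # malformed numeric field
        seen_ids.add(record_id)
        cleaned.append({
            "id": record_id,
            "country": (row.get("country") or "").strip().upper(),  # normalize case
            "amount": amount,
        })
    return cleaned


def transform(rows: List[Record]) -> Dict[str, float]:
    """Transform: aggregate the total amount per country."""
    totals: Dict[str, float] = {}
    for row in rows:
        country = str(row["country"])
        totals[country] = totals.get(country, 0.0) + float(row["amount"])
    return totals


if __name__ == "__main__":
    raw = (
        "id,country,amount\n"
        "1,us,10.5\n"
        "2,in,20\n"
        "2,in,20\n"    # duplicate id
        "3,us,oops\n"  # non-numeric amount
        ",uk,5\n"      # missing id
        "4,us,4.5\n"
    )
    rows = ingest(raw)
    cleaned = clean(rows)
    print(f"kept {len(cleaned)} of {len(rows)} rows")  # a simple data-quality signal
    print(transform(cleaned))
```

Tracking how many rows were rejected, as the final print statements do, is one lightweight way to monitor data quality from run to run.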

Essential Technical Skills for Hadoop Professionals

To perform these roles effectively, Hadoop professionals must possess a diverse and robust technical skillset.

Core Hadoop Skills:

- HDFS: Understanding its architecture, replication, and commands.

- MapReduce: Writing and optimizing batch processing jobs.

- YARN: Resource management and job scheduling.

Programming Languages:

- Java: For MapReduce and native API interactions.

- Python/Scala: For scripting and Spark development.

- SQL: Big Data is Transforming Retail Industry For writing Hive queries and interacting with databases.

- Hive: Data warehouse querying using HiveQL.

- Pig: Dataflow scripting for ETL.

- HBase: NoSQL database for random read/write access.

- Sqoop/Flume: Data ingestion from RDBMS and logs.

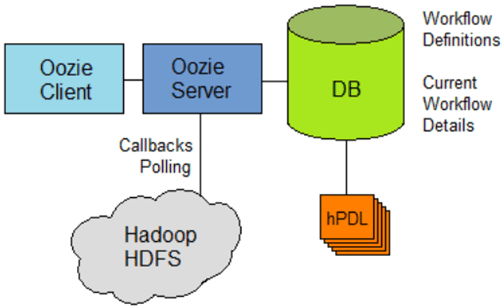

- Oozie: Workflow scheduling and job orchestration.

- Apache Spark: For in-memory processing and real-time computation.

- Kafka: Handling streaming data pipelines.

Ecosystem Tools:

Conclusion

Hadoop may be more than a decade old, but it continues to form the bedrock of many enterprise-level data systems. While cloud-native platforms and newer technologies are reshaping the data landscape, knowledge of Hadoop and its ecosystem remains highly valuable, especially for hybrid infrastructure deployments and companies with legacy data lakes. Big Data Hadoop professionals are in a unique position to bridge the gap between traditional big data systems and modern data platforms. Whether your focus is development, administration, or engineering, combining these job responsibilities with technical mastery can lead to rewarding, high-paying roles in virtually any industry. By understanding what’s expected in various Hadoop-based roles and developing the right mix of skills, professionals can unlock meaningful career opportunities and become indispensable in the age of big data.