- Career Before Big Data

- Goals and Motivation

- Course Selection and Expectations

- Learning Experience

- Projects and Assignments

- Certification Preparation

- Placement Support Received

- New Job Role and Skills Used

- Learning Tips

- Summary

Career Before Big Data

Before stepping into the world of Big Data and Hadoop, Vishnu worked as a manual testing engineer at a mid-sized IT firm in Hyderabad. He had over three years of experience, and his day-to-day work involved preparing test cases, running regression test cycles, and reporting bugs. The job was stable, but it offered limited scope for growth and little exposure to cutting-edge technologies. Data analytics training opened new doors, letting him pivot into roles where data drives innovation, automation, and strategic decision-making. “I felt stuck in my career,” Vishnu recalls. “While everyone around me was moving towards automation, data science, and AI, I was still doing manual testing. I knew I had to upgrade my skills or risk being outdated in this fast-paced IT industry.”

Goals and Motivation

Vishnu’s primary motivation stemmed from witnessing the industry’s shift toward data-driven decision-making. He frequently heard about how Big Data was changing business operations, from predicting customer behavior to optimizing supply chains. Elasticsearch nested mapping became a key area of interest for him, because it lets complex, hierarchical data be structured for precise querying and deeper insights across changing business datasets.

His goals were clear:

- Gain expertise in Hadoop and its ecosystem.

- Transition from a testing profile to a Big Data Engineer role.

- Secure a high-paying, technically challenging job in the analytics domain.

“I didn’t want to be stuck doing repetitive tasks anymore. I wanted to contribute to data-driven solutions, work on real-world business challenges, and be a part of something impactful,” he shares.

Course Selection and Expectations

After weeks of researching various institutes, going through reviews, and attending demo sessions, Vishnu chose a reputed institute known for its Big Data Hadoop training and placement support. The range of Talend products and their features became a core part of his learning, helping him understand how tools such as Talend Open Studio, Talend Data Integration, and Talend Big Data simplify ETL workflows, improve scalability, and support enterprise-grade data management.

His expectations were:

- Hands-on training with real-world Hadoop tools like HDFS, MapReduce, Hive, Pig, and Spark.

- Project-based learning to build a practical portfolio.

- Placement assistance and interview preparation.

“I was looking for a program that didn’t just teach theory but actually gave me the confidence to work on production-level projects. The course structure looked robust, and the trainers had real industry experience,” he says.

Learning Experience

The course ran for three months and included both weekend live classes and recorded sessions. Vishnu was impressed by the clarity of instruction and the logical flow of topics. The curriculum began with the basics of Big Data and gradually covered the Hadoop ecosystem in depth. The introduction to Apache Pig was a key module: it showed Vishnu Pig’s scripting capabilities and data flow abstractions, and how Pig simplifies processing large datasets within Hadoop.

Topics covered:

- Hadoop Distributed File System (HDFS).

- MapReduce Programming.

- Hive for Data Warehousing.

- Pig for Scripting.

- Apache Spark for fast computation.

- HBase, Sqoop, Flume, and Oozie for data ingestion and workflow management.

“I loved the hands-on labs. Every module ended with an assignment that mimicked real-world scenarios. I also appreciated how the instructors were approachable and clarified even the smallest doubts with patience,” he recalls.

Projects and Assignments

Data engineering was the area in which Vishnu excelled most during his training. To demonstrate his ability to work with big data, he completed three closely related projects:

- Retail Analysis with Hadoop: He used Flume and Sqoop to ingest sales data, then analyzed customer behavior with Hive queries, producing reports on best-selling products and regional preferences.

- Real-time Log Processing with Spark Streaming: He collected and processed simulated server log data, using Kafka for the data stream and Grafana to visualize anomalies and traffic patterns.

- Healthcare Analytics: He wrote MapReduce jobs to clean and load medical records, then ran Hive queries on the data to uncover significant health trends.

Data analytics training helped him master these tools, bridging theory and hands-on practice so that he could deliver actionable insights for retail strategy and regional targeting. Looking back, Vishnu says these projects helped him truly understand how data pipelines work and how to derive business insights from complicated datasets.
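To make the Retail Analysis idea concrete, here is a minimal sketch in plain Python of the kind of aggregation a Hive `GROUP BY` query performs when finding the best-selling product per region. It is an illustration only, not Vishnu's actual project code: the `SALES` records, the field layout, and the function name `best_sellers_by_region` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical sales records: (region, product, units_sold).
SALES = [
    ("South", "Laptop", 5),
    ("South", "Phone", 9),
    ("North", "Laptop", 7),
    ("South", "Laptop", 6),
    ("North", "Phone", 3),
]


def best_sellers_by_region(records):
    """Return {region: (product, total_units)} for the top product per region."""
    totals = defaultdict(int)
    # Roughly what GROUP BY region, product with SUM(units_sold) computes.
    for region, product, units in records:
        totals[(region, product)] += units

    best = {}
    for (region, product), units in totals.items():
        # Keep the product with the highest total in each region.
        if region not in best or units > best[region][1]:
            best[region] = (product, units)
    return best


if __name__ == "__main__":
    for region, (product, units) in sorted(best_sellers_by_region(SALES).items()):
        print(region, product, units)
```

On a real cluster the same grouping would run as a distributed Hive or Spark job over data in HDFS; the in-memory version only shows the logic.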

Certification Preparation

After the course, Vishnu aimed for the Cloudera Certified Associate (CCA) Spark and Hadoop Developer certification. The institute provided mock tests, practice scenarios, and time-boxed challenges to help learners simulate the real exam experience. He also spent weekends revising concepts and retaking the practice tests. HBase and its architecture were a crucial part of his preparation, giving him a clear understanding of column-oriented storage, region servers, and how HBase handles massive datasets with low latency and high scalability. With focused preparation, he cleared the certification on his first attempt. “The certification not only boosted my confidence but also strengthened my resume. Recruiters noticed it immediately,” he says with a smile.

Placement Support Received

The training institute provided active placement assistance, including resume building, mock interviews, and job referrals across top tech firms. The course also covered Azure Data Lake, helping learners understand how scalable cloud storage enables big data analytics, secure access control, and integration with modern data pipelines. The placement support included:

- Resume preparation tailored for Big Data roles.

- Mock interviews conducted by data engineering professionals.

- Daily job alerts and exclusive interviews with hiring partners.

Within four weeks of completing the course and certification, Vishnu started receiving interview calls. The support team kept him updated with company-specific tips and helped him prepare for technical rounds. He eventually landed a role as a Junior Big Data Engineer at a Bangalore-based fintech firm, with a 70% salary hike.

New Job Role and Skills Used

Vishnu now applies his training in a dynamic data engineering position, working closely with his team to lead a critical data processing initiative. He writes complex MapReduce and Spark jobs to process customer transaction data, and uses Hive and HDFS for efficient storage and retrieval. He also uses Sqoop to transfer data between Hadoop and relational databases, and looks after the organization’s data lake by maintaining its workflows with Oozie. Splunk’s rex command became a valuable addition to his toolkit, enabling him to extract fields from unstructured log data using regular expressions, streamline search queries, and improve operational visibility across distributed systems. Looking back, Vishnu says that the training, which demanded a lot of effort and which he once doubted would help him, turned out to be essential, and that he can now complete data ingestion and transformation tasks in a fraction of the time.
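Splunk’s `rex` command pulls fields out of raw events using named capture groups in a regular expression. The same idea can be sketched in Python with the standard `re` module, which writes named groups as `(?P<name>...)` rather than Splunk’s `(?<name>...)`. The log format, the field names, and the helper `extract_fields` below are assumptions made for illustration, not taken from Vishnu’s work.

```python
import re

# Hypothetical log format: "<ip> <METHOD> <path> <status>".
# The field names are illustrative only.
LOG_PATTERN = re.compile(
    r"(?P<ip>\d{1,3}(?:\.\d{1,3}){3}) "
    r"(?P<method>[A-Z]+) "
    r"(?P<path>\S+) "
    r"(?P<status>\d{3})"
)


def extract_fields(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = LOG_PATTERN.search(line)
    return match.groupdict() if match else None


if __name__ == "__main__":
    print(extract_fields("10.0.0.7 GET /api/orders 500"))
```

Each named group becomes a field that can then be filtered or counted, which is what makes field extraction useful for shortening search queries over unstructured logs.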

Learning Tips

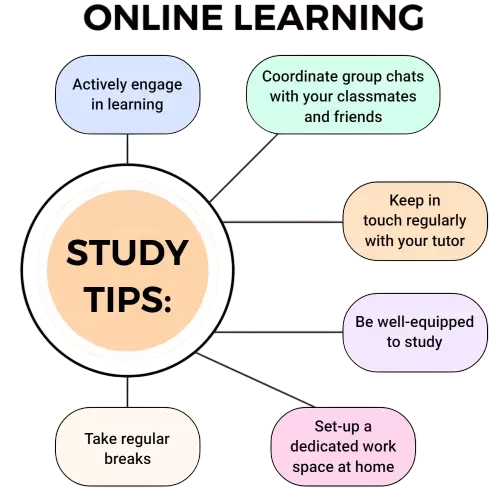

For learners entering the Big Data world, Vishnu offers these tips:

- Master the basics first: Understand the why behind Big Data and how Hadoop solves traditional database problems.

- Practice hands-on daily: Spend at least 1–2 hours on practicals. Set up your Hadoop/Spark environment locally or in the cloud.

- Work on projects: Don’t just watch tutorials. Create end-to-end projects and add them to your portfolio.

- Use online resources: Combine course content with free resources like Apache documentation, GitHub repos, and community forums.

- Prepare for interviews early: Practice scenario-based questions and learn to explain your project work clearly.

Summary

Vishnu’s journey from a manual tester to a Big Data Engineer is a testament to the power of learning, perseverance, and making the right career moves. With a clear goal, the right training, and consistent effort, he was able to transform his professional life and tap into the immense opportunities the Big Data domain offers. Data analytics training played a pivotal role in this transformation, providing the technical foundation, industry insights, and hands-on experience needed to thrive in a data-driven career. His success story can inspire others to invest in learning, embrace new technologies, and take control of their careers in today’s fast-evolving IT landscape.