- Introduction to the ETL Audit Process

- Overview of ETL: Extract, Transform, Load

- The Importance of ETL Auditing in Data Pipelines

- Common Issues Found During ETL Audits

- Key Metrics and Indicators for ETL Audit Trails

- Tools and Techniques for ETL Auditing

- Real-World Scenarios and Case Studies

- Challenges in Implementing ETL Audits

- Best Practices for a Robust ETL Audit Framework

- Conclusion

Introduction to the ETL Audit Process

The ETL audit process plays a critical role in today’s data-driven world, where organizations rely on accurate, timely, and clean data for strategic decisions. Before data reaches reporting dashboards or predictive models, it often passes through complex ETL pipelines that extract data from disparate sources, transform it to match business logic, and load it into data warehouses. An ETL audit verifies that what enters the pipeline is transformed correctly and consistently and reaches its destination without errors, duplication, or data loss. In doing so, it supports data quality, regulatory compliance, and debugging, and it builds trust among stakeholders.

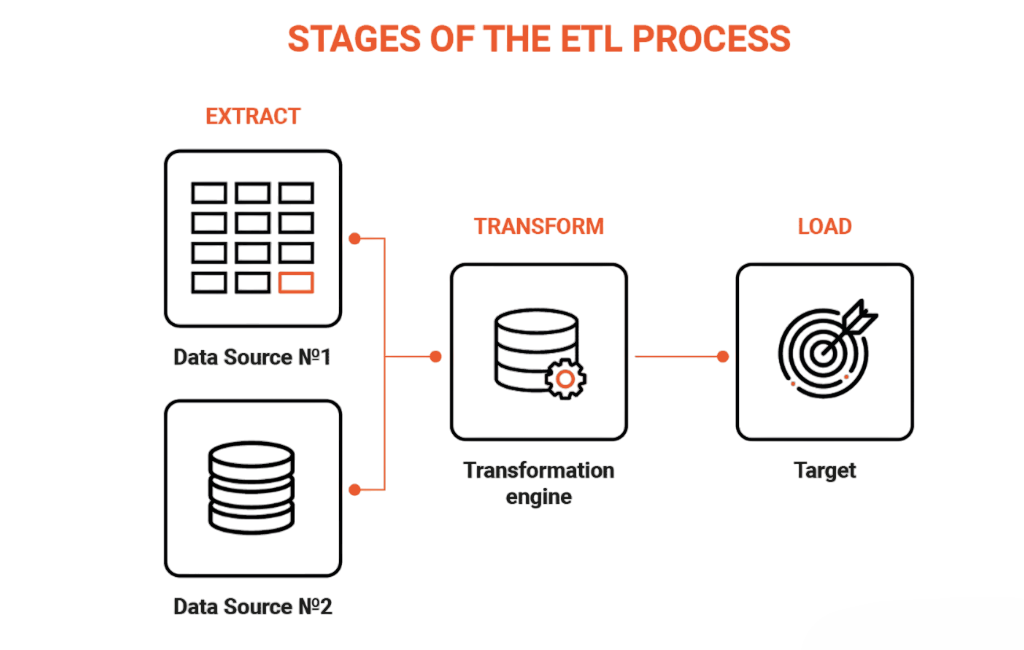

Overview of ETL: Extract, Transform, Load

The Extract, Transform, Load (ETL) framework is the foundation for unifying data from many systems. It is a methodical, three-phase process for managing information effectively. In the extract phase, raw data is gathered from a variety of sources, including structured databases, application APIs, flat files, and real-time data feeds, with the goal of collecting everything the target system needs. In the transform phase, the data is cleaned, enriched, standardized, filtered, aggregated, and merged so that it meets the structural and logical requirements of the target system and its business rules. Finally, in the load phase, the refined data is written to its destination, such as a data warehouse, lakehouse, or specialized reporting system, completing the unification process.
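The following minimal Python sketch makes the three phases concrete. It is an illustration under stated assumptions, not a reference implementation. The in-memory CSV stands in for a real source, the `orders` table and the "skip rows without an amount" rule are hypothetical, and SQLite stands in for the warehouse.

```python
import csv
import io
import sqlite3
from typing import Dict, List, Tuple

# Hypothetical source: an in-memory CSV standing in for a flat-file extract.
SOURCE_CSV = """order_id,amount,country
1,19.99,us
2,5.00,DE
3,,fr
"""

def extract(text: str) -> List[Dict[str, str]]:
    """Extract: read raw rows from the source without changing them."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: List[Dict[str, str]]) -> List[Tuple[int, float, str]]:
    """Transform: filter incomplete rows, cast types, standardize country codes."""
    out = []
    for row in rows:
        if not row["amount"]:  # assumed business rule: skip rows with no amount
            continue
        out.append((int(row["order_id"]), float(row["amount"]), row["country"].upper()))
    return out

def load(rows: List[Tuple[int, float, str]], conn: sqlite3.Connection) -> int:
    """Load: insert refined rows into the target table and return its row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    raw = extract(SOURCE_CSV)
    clean = transform(raw)
    loaded = load(clean, conn)
    print(len(raw), len(clean), loaded)  # 3 2 2
```

Running it prints `3 2 2`: three rows are extracted, two remain after transformation, and two are loaded. The row that disappears during the transform phase is exactly the kind of change an audit trail needs to explain.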


The Importance of ETL Auditing in Data Pipelines

Auditing the ETL process provides visibility and transparency across every stage of data movement. It records where data originated, how it was transformed, and whether it complies with business rules, so that teams can answer questions such as:

- Did the data extraction happen correctly and completely?

- Were any rows dropped, modified, or duplicated during transformation?

- Was the loading process successful, and were all rows inserted into the target system?

- How long did each phase take, and where did errors or delays occur?

Without an audit trail, it’s difficult to identify the root cause of data inconsistencies, incorrect reports, or SLA violations. An ETL audit acts as a safety net, ensuring both traceability and accountability in data operations.
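These questions can be answered mechanically if each phase records how many rows it received and emitted, how long it took, and whether it failed. The Python sketch below assumes each phase is a function from a list of rows to a list of rows. `PhaseAudit`, `run_phase`, and `reconcile` are hypothetical names and are not part of any ETL tool.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class PhaseAudit:
    """One audit entry per ETL phase (field names are illustrative)."""
    phase: str
    rows_in: int
    rows_out: int
    seconds: float
    error: Optional[str] = None

def run_phase(name: str, func: Callable[[list], list], rows: list,
              audits: List[PhaseAudit]) -> list:
    """Run one phase and record row counts, elapsed time, and any error."""
    start = time.perf_counter()
    try:
        result = func(rows)
    except Exception as exc:
        audits.append(PhaseAudit(name, len(rows), 0, time.perf_counter() - start, repr(exc)))
        raise
    audits.append(PhaseAudit(name, len(rows), len(result), time.perf_counter() - start))
    return result

def reconcile(source_count: int, audits: List[PhaseAudit], target_count: int) -> List[str]:
    """Flag every point where a phase failed or the row count changed."""
    findings = []
    expected = source_count
    for a in audits:
        if a.error:
            findings.append(f"{a.phase} failed after {a.seconds:.3f}s: {a.error}")
        if a.rows_in != expected:
            findings.append(f"{a.phase} received {a.rows_in} rows, expected {expected}")
        if a.rows_out != a.rows_in and not a.error:
            findings.append(f"{a.phase} changed row count {a.rows_in} -> {a.rows_out}")
        expected = a.rows_out
    if target_count != expected:
        findings.append(f"target holds {target_count} rows, expected {expected}")
    return findings

if __name__ == "__main__":
    audits: List[PhaseAudit] = []
    source = [{"id": 1}, {"id": 2}, {"id": 2}]  # the source contains a duplicate id
    rows = run_phase("extract", list, source, audits)
    # Deduplicate on "id" (keeps the last occurrence of each id).
    rows = run_phase("transform", lambda rs: list({r["id"]: r for r in rs}.values()), rows, audits)
    for line in reconcile(len(source), audits, target_count=len(rows)):
        print(line)  # transform changed row count 3 -> 2
```

Here the deduplicating transform legitimately shrinks the batch from three rows to two. `reconcile` therefore reports the change for review rather than treating it as an error. Deciding which count changes are expected belongs to the business rules.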


Common Issues Found During ETL Audits

ETL audits often uncover issues that compromise data reliability, ranging from inconsistent transformation logic and missing lineage metadata to outdated retention policies and silent data corruption. The most common include:

- Data Loss: Rows or columns may be unintentionally dropped during transformation or load.

- Duplicate Records: Poor join conditions or re-processing may lead to duplication.

- Format and Type Mismatches: Fields might not conform to the expected data type or format, causing downstream errors.

- Transformation Logic Errors: Business rules may be misapplied, leading to incorrect metrics or classifications.

- Late or Partial Loads: Network failures or memory limitations can prevent full data transfer.

- Unlogged Failures: Without proper exception handling, some failures may go unnoticed.

By auditing regularly, teams can detect these issues early and correct them before they affect analytics or decision-making.
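Three of these issues (data loss, duplicate records, and type or column mismatches) can be checked with simple set and type comparisons. The sketch below assumes source and target rows are available as Python dictionaries that share a business key. The `id` key and the schema are hypothetical, and in a warehouse the same checks would usually be written as SQL queries.

```python
from collections import Counter
from typing import Any, Dict, List

Row = Dict[str, Any]

def find_missing(source: List[Row], target: List[Row], key: str) -> List[Any]:
    """Data loss: keys present in the source but absent from the target."""
    return sorted({r[key] for r in source} - {r[key] for r in target})

def find_duplicates(rows: List[Row], key: str) -> List[Any]:
    """Duplicates: keys that appear more than once (bad join or re-processing)."""
    counts = Counter(r[key] for r in rows)
    return sorted(k for k, n in counts.items() if n > 1)

def find_type_mismatches(rows: List[Row], schema: Dict[str, type]) -> List[str]:
    """Type mismatches and dropped columns, checked against an expected schema."""
    problems = []
    for i, row in enumerate(rows):
        for col, expected in schema.items():
            if col not in row:
                problems.append(f"row {i}: missing column {col}")
            elif row[col] is not None and not isinstance(row[col], expected):
                problems.append(f"row {i}: {col}={row[col]!r} is not {expected.__name__}")
    return problems

if __name__ == "__main__":
    source = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 5.5}, {"id": 3, "amount": 7.0}]
    target = [{"id": 1, "amount": 10.0}, {"id": 1, "amount": 10.0}, {"id": 2, "amount": "5.5"}]
    print(find_missing(source, target, "id"))               # [3]
    print(find_duplicates(target, "id"))                    # [1]
    print(find_type_mismatches(target, {"amount": float}))  # ["row 2: amount='5.5' is not float"]
```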

Key Metrics and Indicators for ETL Audit Trails

An ETL audit trail typically captures record counts at each processing checkpoint, start and end timestamps for every execution, and status flags that record the outcome of each job. These are backed by failure reports that capture specific error messages and identify rejected or corrupted records. Validation values such as checksums add a further check on data consistency. The framework also keeps a history of transformation logic versions and related run context, all stored in a dedicated metadata repository. With this data, technical teams can study historical trends, restore data to previous states, and investigate processing interruptions or data discrepancies in depth.
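These metrics map naturally onto a single audit record per run. The following sketch is illustrative. The `AuditRecord` fields, the job and version names, and the choice of an order-independent SHA-256 checksum are assumptions, not a standard schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional

def dataset_checksum(rows: List[Dict[str, Any]]) -> str:
    """Order-independent SHA-256: hash each row canonically, sort, hash again."""
    digests = sorted(
        hashlib.sha256(json.dumps(r, sort_keys=True, default=str).encode()).hexdigest()
        for r in rows
    )
    return hashlib.sha256("".join(digests).encode()).hexdigest()

@dataclass
class AuditRecord:
    """One row in a hypothetical audit metadata repository."""
    job_name: str
    run_id: str
    transform_version: str          # version of the transformation logic used
    started_at: str                 # ISO-8601 UTC timestamp
    finished_at: Optional[str] = None
    status: str = "RUNNING"         # RUNNING, SUCCESS, or FAILED
    rows_extracted: int = 0
    rows_loaded: int = 0
    source_checksum: str = ""
    target_checksum: str = ""
    error_message: Optional[str] = None
    rejected_rows: List[Dict[str, Any]] = field(default_factory=list)

    def checksums_match(self) -> bool:
        return self.source_checksum == self.target_checksum

    def close(self, status: str, error: Optional[str] = None) -> None:
        """Stamp the end time and final outcome of the run."""
        self.status = status
        self.error_message = error
        self.finished_at = datetime.now(timezone.utc).isoformat()

if __name__ == "__main__":
    src = [{"id": 1, "amt": 10}, {"id": 2, "amt": 20}]
    tgt = [{"amt": 20, "id": 2}, {"amt": 10, "id": 1}]  # same data, different order
    rec = AuditRecord("orders_daily", "run-001", "v1.4.2",  # hypothetical identifiers
                      started_at=datetime.now(timezone.utc).isoformat())
    rec.rows_extracted, rec.rows_loaded = len(src), len(tgt)
    rec.source_checksum = dataset_checksum(src)
    rec.target_checksum = dataset_checksum(tgt)
    rec.close("SUCCESS" if rec.checksums_match() else "FAILED")
    print(json.dumps(asdict(rec), indent=2))  # e.g. persist this to the metadata store
```

Sorting the per-row digests makes the checksum independent of load order. It still changes if a row is dropped, duplicated, or altered. Both sides must serialize values the same way, however: `10` and `10.0` produce different JSON and therefore different checksums.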


Tools and Techniques for ETL Auditing

Complete oversight of data integration workflows can be achieved with a range of technologies, from established ETL suites to modern data frameworks. ETL platforms such as Informatica PowerCenter, IBM DataStage, and SSIS include built-in logging and audit-trail features that give clear visibility into how data is manipulated. Talend similarly offers dedicated components for tracking job execution and capturing operational data. Among modern tools, Apache NiFi records detailed data provenance (lineage). The orchestrator Airflow and the transformation framework dbt both provide detailed logging and monitoring of job status.


Real-World Scenarios and Case Studies

- Financial Auditing: In a global bank, ETL audit logs helped identify discrepancies in daily interest rate calculations caused by a logic change in transformation rules. The audit trail allowed rollback to previous data states and prevented regulatory penalties.

- Healthcare Data Integration: In a healthcare provider’s data warehouse, regular ETL audits uncovered data entry anomalies from EMR systems. These were traced back to inconsistent field mappings, and audits helped enforce correction rules and improve data accuracy for clinical reporting.

- Retail Analytics: A retail company used audit trails to pinpoint data drop-offs during large-scale nightly loads. Based on these insights, the ETL workflow was restructured for better performance, improving report availability by 20%.

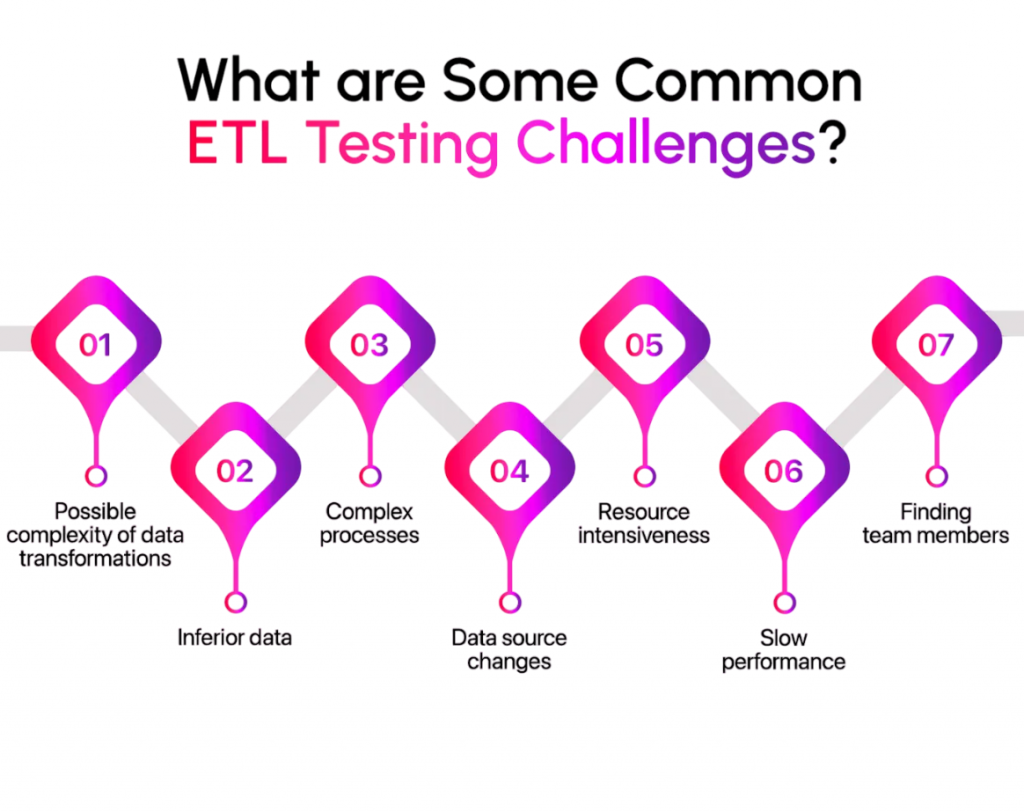

Challenges in Implementing ETL Audits

Although auditing is important for data integrity, implementing it raises several challenges. One major issue is computational overhead: detailed tracking adds work to every run, which can slow processing and degrade overall system performance.

The problem is compounded by the volume of audit data. Logs can grow significantly, which requires strict retention policies such as archiving or periodic deletion. Audit frameworks therefore need to scale while balancing performance, compliance, and long-term data governance. In addition, the complexity of modern data transformations is another serious hurdle, especially when tracing a record’s path through complicated joins and nested logic.
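A retention policy that archives and then deletes old audit rows can be expressed in a few statements. This is a minimal sketch assuming audit rows live in a SQLite table with a Unix-timestamp column. The table names, columns, and 90-day window are hypothetical. In practice the archive would more likely live on cheaper storage than the live audit table.

```python
import sqlite3

SECONDS_PER_DAY = 86_400

def create_tables(conn: sqlite3.Connection) -> None:
    """Hypothetical schema: live audit table plus an identically shaped archive."""
    for name in ("audit_log", "audit_log_archive"):
        conn.execute(
            f"CREATE TABLE IF NOT EXISTS {name} (run_id TEXT, status TEXT, logged_at REAL)"
        )

def apply_retention(conn: sqlite3.Connection, keep_days: int, now: float) -> int:
    """Archive, then delete, audit rows older than keep_days. Returns rows moved."""
    cutoff = now - keep_days * SECONDS_PER_DAY
    with conn:  # one transaction: the copy and the delete commit or roll back together
        conn.execute(
            "INSERT INTO audit_log_archive SELECT * FROM audit_log WHERE logged_at < ?",
            (cutoff,),
        )
        cur = conn.execute("DELETE FROM audit_log WHERE logged_at < ?", (cutoff,))
    return cur.rowcount

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_tables(conn)
    now = 1_700_000_000.0  # fixed "current time" (Unix seconds) for a repeatable demo
    conn.executemany(
        "INSERT INTO audit_log VALUES (?, ?, ?)",
        [("run-old", "SUCCESS", now - 120 * SECONDS_PER_DAY),
         ("run-new", "SUCCESS", now - 5 * SECONDS_PER_DAY)],
    )
    print(apply_retention(conn, keep_days=90, now=now))  # 1
```

Running the copy and the delete in one transaction ensures that an interrupted cleanup cannot lose audit rows that were never archived.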

Best Practices for a Robust ETL Audit Framework

Implementing an ETL audit framework is not just about logging data; it is about designing a systematic, scalable, and actionable process that can handle enterprise data volumes while preserving data integrity, compliance, and trust. Best practices include:

- Implement checkpoint logging: Record row counts and status at every ETL stage (extract, transform, load).

- Use version-controlled transformation rules: Log which rule version was used in each run.

- Automate failure alerts: Use tools such as Airflow, Slack bots, or email notifications.

- Store audit logs separately: Keep them isolated from primary data to avoid accidental tampering.

- Review audit reports regularly: Integrate them into your QA or compliance cycle.

- Enable data lineage tracking: Trace back the origin of each data point.

- Secure the audit logs: Apply access control and encryption to prevent misuse.

A successful audit process becomes a strategic asset, not just a technical add-on.
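Several of these practices can be combined in one small decorator: checkpoint logging, recording the rules version, automated alerts, and audit storage kept apart from primary data. This is a sketch under assumptions. `TRANSFORM_RULES_VERSION`, `send_alert`, and the `checkpoints` table are hypothetical. In a real deployment, the version would come from version control, and the alert would go to Slack, email, or an orchestrator’s failure callback. The audit store would be a separate database with its own access controls.

```python
import functools
import sqlite3
import time
from typing import Callable

TRANSFORM_RULES_VERSION = "2024.06.1"  # hypothetical; e.g. a git tag of the rules repo

def send_alert(message: str) -> None:
    """Hypothetical alert hook; swap in a Slack webhook, email, or orchestrator callback."""
    print(f"ALERT: {message}")

def open_audit_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open the audit store; in production, a database separate from the warehouse."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS checkpoints (stage TEXT, rules_version TEXT, "
        "status TEXT, rows_in INTEGER, rows_out INTEGER, started_at REAL, error TEXT)"
    )
    return conn

def checkpoint(stage: str, audit_conn: sqlite3.Connection,
               alert: Callable[[str], None] = send_alert):
    """Decorator that writes one audit row per call of an ETL stage."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(rows):
            start = time.time()
            status, error, out = "SUCCESS", None, []
            try:
                out = func(rows)
                return out
            except Exception as exc:
                status, error = "FAILED", repr(exc)
                alert(f"{stage} failed: {error}")
                raise
            finally:  # log the checkpoint whether the stage succeeded or failed
                audit_conn.execute(
                    "INSERT INTO checkpoints VALUES (?, ?, ?, ?, ?, ?, ?)",
                    (stage, TRANSFORM_RULES_VERSION, status, len(rows), len(out), start, error),
                )
                audit_conn.commit()
        return wrapper
    return decorator

if __name__ == "__main__":
    audit = open_audit_store()

    @checkpoint("transform", audit)
    def drop_nulls(rows):
        return [r for r in rows if r is not None]

    drop_nulls([1, None, 3])
    print(audit.execute("SELECT stage, status, rows_in, rows_out FROM checkpoints").fetchall())
```

Using a decorator keeps the audit logic out of the stage functions themselves, so every stage is logged the same way and the business logic stays readable.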

Conclusion

The ETL audit process is essential in an era of real-time analytics and data-driven decision-making, where the quality of insights depends on the reliability of the data pipeline. Auditing the ETL process adds a layer of confidence, transparency, and compliance, turning opaque data operations into traceable ones. Whether the goal is regulatory compliance, operational resilience, or strategic intelligence, an ETL audit process helps organizations move beyond guesswork and build trust in data across the enterprise. By investing in the right tools, metrics, and practices, companies ensure that every piece of data is not just moved, but moved with accountability, making the ETL audit process a cornerstone of effective and trustworthy data management.