- Big Data Growth Trends

- Why Hadoop Online Training is Future-Proof

- Core Modules of Hadoop

- Data Storage and Processing

- Hadoop for Real-Time Data Handling

- Industry Applications

- Advanced Features in Hadoop

- Hands-On Practice

- Global Career Opportunities

- Conclusion

Big Data Growth Trends

The world is witnessing an exponential increase in data generation. From social media interactions and online transactions to sensor data from IoT devices, the volume, variety, and velocity of data, collectively termed Big Data, have transformed the way businesses operate. IDC has projected that global data will reach 175 zettabytes by 2025. Traditional storage and processing systems cannot keep up with this growth, which creates the need for distributed frameworks like Hadoop. To turn this shift into a career advantage, explore Data Science Training, a hands-on course that teaches learners how to manage, analyze, and extract insights from massive datasets using industry-standard tools and techniques. Organizations are investing in Big Data technologies to stay competitive, understand consumer behavior, and drive innovation, so demand for skilled professionals who can handle vast datasets remains strong.

Why Hadoop Online Training is Future-Proof

Apache Hadoop is not just a tool but an entire ecosystem for handling and analyzing massive datasets. Its ability to store structured, semi-structured, and unstructured data makes it highly versatile. Hadoop’s fault tolerance, scalability, and open-source foundation help it adapt to evolving data demands. As businesses in domains like retail, finance, healthcare, and telecommunications shift to digital platforms, Hadoop’s relevance grows. To understand the strategic value behind this shift, explore What is Big Data Analytics, a comprehensive guide that explains how organizations harness massive datasets to drive smarter decisions, improve efficiency, and unlock competitive advantage. Furthermore, Hadoop integrates with cloud platforms and, together with companion engines in its ecosystem, supports real-time analytics, making it a future-proof choice for modern enterprises. Its large developer community delivers continuous enhancements and security updates, which supports its longevity in a fast-changing tech landscape.

Core Modules of Hadoop

Hadoop comprises four primary modules that together create a powerful data processing framework: HDFS, YARN, MapReduce, and Hadoop Common. To complement this ecosystem with fast, in-memory processing, explore How to install Apache Spark, a step-by-step guide that walks you through setting up Spark on Windows and integrating it with Hadoop for scalable analytics and real-time insights.

- HDFS (Hadoop Distributed File System): A scalable and fault-tolerant storage system that divides files into blocks and distributes them across nodes.

- MapReduce: A programming model used for processing and generating large datasets in parallel across a Hadoop cluster.

- YARN (Yet Another Resource Negotiator): Manages resources and schedules jobs across the cluster.

- Common Utilities (Hadoop Common): Shared libraries and utilities that the other Hadoop modules depend on, such as file system abstractions, remote procedure calls, and serialization.

These core modules form the foundation of the Hadoop ecosystem and are critical for understanding its architecture and functionality.
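The MapReduce programming model described above can be illustrated without a cluster. The following is a minimal sketch in plain Python, not Hadoop's actual API: the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical and only mimic the map, shuffle, and reduce stages on an in-memory list of lines.

```python
from collections import defaultdict


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Shuffle: group emitted values by key, as a framework would between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reducer: sum the counts collected for one word."""
    return key, sum(values)


def word_count(lines):
    """Run the three stages in sequence on an in-memory list of lines."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    sample = ["big data needs big storage", "hadoop stores big data"]
    print(word_count(sample))
```

On a real cluster the mappers and reducers would run in parallel on different nodes; here they run sequentially so the data flow is easy to follow.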

Data Storage and Processing

At the heart of Hadoop lies its distributed storage and processing capabilities. HDFS stores data across multiple nodes, ensuring redundancy and high availability. Large files are split into blocks (128 MB by default in Hadoop 2.x and later, and often configured to 256 MB for very large files) and distributed across the cluster. Each block is replicated (three times by default) to prevent data loss in case of node failure.
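To make the block and replication arithmetic concrete, here is a small Python sketch. It assumes a 128 MB block size and a replication factor of three; both are configurable on a real cluster, and the function names are illustrative only.

```python
import math

BLOCK_SIZE_MB = 128      # assumed block size; configurable per cluster or per file
REPLICATION_FACTOR = 3   # assumed replication factor; also configurable


def block_count(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Number of blocks a file is split into (the last block may be partially filled)."""
    return math.ceil(file_size_mb / block_size_mb)


def raw_storage_mb(file_size_mb, replication=REPLICATION_FACTOR):
    """Raw disk usage: every byte of the file is stored once per replica."""
    return file_size_mb * replication


if __name__ == "__main__":
    size_mb = 1024  # a 1 GB file
    blocks = block_count(size_mb)
    print(f"{size_mb} MB file -> {blocks} blocks, "
          f"{blocks * REPLICATION_FACTOR} block replicas, "
          f"{raw_storage_mb(size_mb)} MB of raw storage")
```

Under these assumptions a 1 GB file becomes 8 blocks and 24 block replicas, occupying 3 GB of raw disk across the cluster.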

When processing data, MapReduce takes advantage of this distribution by sending computations to the nodes where the data resides instead of moving the data to the computation. This reduces network overhead and speeds up execution. Combined, these features enable Hadoop to manage petabyte-scale datasets efficiently. To learn how this architecture powers scalable analytics and fault-tolerant systems, explore Data Science Training, a hands-on course that covers Hadoop fundamentals, real-time data pipelines, and the tools used in modern data science workflows.
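The idea of sending computation to the data can be sketched as a simple placement rule. This is a deliberately simplified illustration, not the scheduler Hadoop actually uses (which also weighs rack locality and queue policies); the node names and the `pick_node` helper are hypothetical.

```python
def pick_node(block_replicas, free_nodes):
    """Choose where to run a task that reads one block.

    Prefer a free node that already stores a replica of the block, so no data
    crosses the network. Otherwise fall back to any free node, which must
    fetch the block remotely. If no node is free, the task waits.
    """
    for node in block_replicas:
        if node in free_nodes:
            return node, "node-local"
    if free_nodes:
        # sorted() only makes the fallback choice deterministic for this sketch
        return sorted(free_nodes)[0], "remote"
    return None, "wait"


if __name__ == "__main__":
    replicas = ["node1", "node2", "node3"]        # nodes holding the block
    print(pick_node(replicas, {"node2", "node4"}))
    print(pick_node(replicas, {"node4", "node5"}))
```

The first call picks a node that already holds the block; the second has to read the block over the network, which is the case data locality tries to avoid.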

Hadoop for Real-Time Data Handling

While Hadoop originally gained fame for batch processing, newer components have extended its ability to handle real-time data. Tools like Apache Spark and Apache Storm work alongside Hadoop to enable streaming data processing. For example, in financial fraud detection or social media trend analysis, organizations need immediate insights. Spark processes data in memory, which for many workloads is significantly faster than traditional disk-based MapReduce. Integrating Kafka with Hadoop enables real-time data ingestion from various sources. To understand how resource management powers these real-time analytics, explore Apache Hadoop YARN, a foundational component that orchestrates cluster resources and enables scalable, multi-tenant data processing across modern big data platforms. Combined, these technologies give Hadoop the agility to handle both historical and real-time data, making it suitable for today’s dynamic data environments.
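Streaming engines typically group incoming events into time windows and aggregate each window as it fills. The sketch below shows that idea in plain Python; it does not use the Spark, Storm, or Kafka APIs, and the event format (`(timestamp_seconds, key)`) and the `tumbling_window_counts` helper are assumptions made for illustration.

```python
from collections import Counter, defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key inside fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, key) tuples. Each event is
    assigned to the window whose start is the timestamp rounded down to a
    multiple of `window_seconds`.
    """
    windows = defaultdict(Counter)
    for timestamp, key in events:
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}


if __name__ == "__main__":
    # Hypothetical stream of hashtag mentions: (seconds since start, hashtag)
    stream = [(0, "#ai"), (5, "#data"), (9, "#ai"), (10, "#ai"), (19, "#data"), (21, "#ai")]
    for start, counts in tumbling_window_counts(stream, 10).items():
        print(f"window starting at {start}s: {counts}")
```

A production pipeline would process an unbounded stream and emit each window as it closes; this version works on a finite list so the grouping logic stays visible.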

Industry Applications

Hadoop’s utility spans industries: from retail and finance to healthcare and telecommunications, it enables scalable data processing and actionable insights. To understand how real-time messaging systems complement this ecosystem, explore Kafka vs RabbitMQ, a comparative guide that breaks down their architectures, performance models, and ideal use cases in modern data pipelines.

- Retail: Personalized recommendations, inventory forecasting, and customer behavior analysis.

- Finance: Risk modeling, fraud detection, and algorithmic trading.

- Healthcare: Genomic data processing, predictive diagnostics, and patient record management.

- Manufacturing: Predictive maintenance, supply chain optimization, and IoT sensor data analysis.

- Telecommunications: Network performance monitoring, call data record analysis, and churn prediction.

- Government and Public Sector: Crime analysis, urban planning, and citizen services improvement.

These use cases highlight Hadoop’s adaptability and value in processing complex datasets to extract actionable insights.

Advanced Features in Hadoop

As Hadoop has matured, several advanced capabilities have been introduced to increase its efficiency and ease of use. To understand the professionals who design and optimize these complex data ecosystems, explore Who Is a Data Architect, a comprehensive guide that explains the role, responsibilities, and skills required to architect scalable, secure, and high-performance data infrastructures.

- Federation in HDFS: Allows multiple namespaces, improving scalability and isolation.

- Erasure Coding: Reduces storage overhead while maintaining fault tolerance.

- Data Encryption: Protects data both at rest and in transit.

- Kerberos Authentication: Provides secure user authentication.

- Resource-aware Scheduling in YARN: Allocates resources intelligently to maximize throughput.

- High Availability (HA) Architecture: Ensures system uptime through standby NameNodes.

Incorporating these features into a training curriculum prepares learners to manage real-world, enterprise-grade Hadoop environments.
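The storage saving from erasure coding mentioned in the list above can be worked out with simple arithmetic. The sketch below compares three-way replication with a Reed-Solomon layout of 6 data units and 3 parity units, one of the policies available in Hadoop 3; the function names and the 100 TB figure are illustrative assumptions.

```python
def replication_raw_size(data_tb, replicas=3):
    """Raw storage needed when every block is stored `replicas` times."""
    return data_tb * replicas


def erasure_coded_raw_size(data_tb, data_units=6, parity_units=3):
    """Raw storage needed when each group of data units carries extra parity units."""
    return data_tb * (data_units + parity_units) / data_units


if __name__ == "__main__":
    data_tb = 100  # hypothetical amount of user data
    print(f"3x replication:   {replication_raw_size(data_tb)} TB raw")
    print(f"RS(6,3) encoding: {erasure_coded_raw_size(data_tb)} TB raw")
```

Under these assumptions replication needs 300 TB of raw disk while the erasure-coded layout needs 150 TB, and the erasure-coded layout can still reconstruct data after losing any three units in a group. The trade-off is extra CPU and network work when data has to be rebuilt.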

Hands-On Practice

Theory alone is not enough. Effective Hadoop training includes extensive hands-on exercises that simulate real-world problems. Learners set up multi-node clusters, ingest large datasets using tools like Sqoop and Flume, process them using MapReduce or Spark, and analyze the output using Hive and Pig. To understand the coordination service that keeps these distributed systems running smoothly, explore What is Apache Zookeeper, a foundational guide that explains how ZooKeeper manages configuration, synchronization, and naming across Hadoop ecosystems. Projects may include building a movie recommendation engine, analyzing clickstream data, or processing stock market feeds. Cloud labs, virtual machines, or sandbox environments are typically provided for this practical work. The practice reinforces concepts and builds learners’ confidence in handling Hadoop tasks independently.

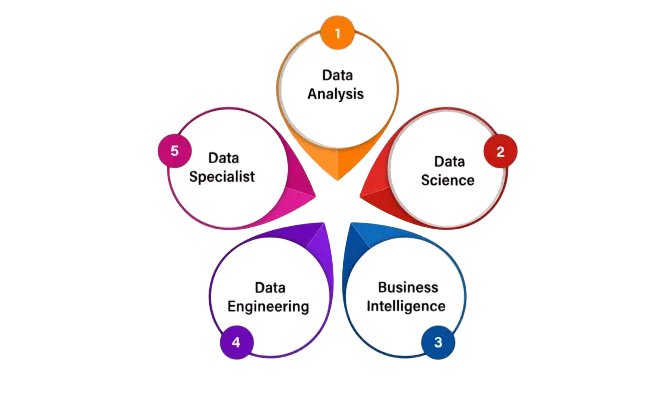

Global Career Opportunities

Hadoop professionals are in high demand around the world, reflecting the rising importance of Big Data. To understand the tools that enable fast, flexible querying in this ecosystem, explore Hive vs Impala, a comparative guide that breaks down their performance, architecture, and use cases to help learners choose the right tool for interactive analytics. Common roles include:

- Hadoop Developer

- Data Engineer

- Big Data Architect

- Hadoop Administrator

- Data Analyst

Competitive Salaries:

- Freshers in India can earn about ₹6 LPA.

- Experienced specialists can make ₹25+ LPA in India.

- Salaries in the U.S. often exceed $100,000 per year.

Major Recruiters: Notable companies hiring Hadoop professionals include:

- Amazon

- Accenture

- IBM

- Capgemini

- Infosys

- Netflix

Conclusion

The era of Big Data has arrived, and Hadoop sits at its core. Whether you’re an IT professional aiming to upskill or a fresher exploring a future-ready domain, Hadoop training opens up significant opportunities. With its ability to store and process vast amounts of data, support real-time analytics through its ecosystem, and adapt to technological change, Hadoop continues to be relevant and reliable. To turn this reliability into career-ready expertise, explore Data Science Training, a hands-on course that teaches learners how to harness Hadoop’s power alongside modern tools like Spark, Python, and machine learning frameworks. By mastering Hadoop and its ecosystem tools, learners not only future-proof their careers but also contribute meaningfully to data-driven innovation across sectors. Now is the time to step into the dynamic world of Hadoop with confidence.