- Introduction: What is an Informatica ETL developer?

- Understanding ETL: The Core Concepts

- Skills Required to Become a Successful ETL Developer

- Choosing the Right ETL Tools and Technologies

- Building Your First ETL Pipeline

- Best Practices for ETL Development

- Challenges in ETL Development and How to Overcome Them

- Career Path and Opportunities for ETL Developers

- Conclusion

Introduction: What is an Informatica ETL developer?

An ETL developer is a specialized data professional responsible for managing the process of extracting, transforming, and loading (ETL) data from various sources into target systems such as databases and data warehouses. ETL developers play a crucial role in ensuring that raw data is processed, cleaned, and integrated into systems where it can be used for analysis, reporting, or decision-making. Informatica ETL developers typically work with large data sets and need a strong foundation in data engineering, database management, and programming. A successful ETL developer ensures that the data pipeline is efficient, reliable, and scalable.

Understanding ETL: The Core Concepts

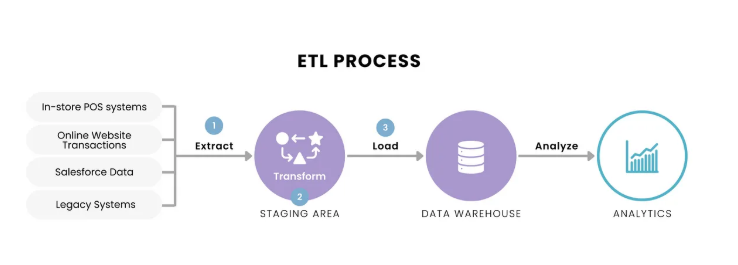

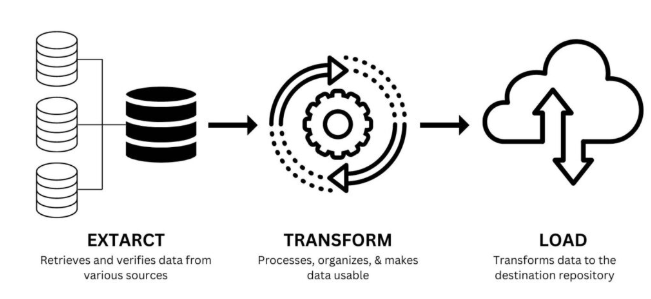

Before diving into the tools and technologies that ETL developers use, it’s crucial to understand the core concepts behind the ETL process. ETL stands for:

- Extract: The process of collecting raw data from different sources, such as databases, APIs, flat files, or cloud services. This stage is about accessing and gathering data in its raw form.

- Transform: In this stage, data is cleaned, enriched, and converted into the desired format or structure. This might include tasks like data validation, filtering, joining tables, aggregating data, and mapping data to the target schema.

- Load: The final step where the transformed data is loaded into the target system, such as a relational database, data warehouse, or a cloud data platform. This process may also include data indexing and optimizing for query performance.

Understanding the intricacies of each stage of the ETL process is essential for becoming an efficient ETL developer. The complexity of extracting, transforming, and loading also varies with the source systems, data types, and target environments involved.
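To make the three stages concrete, here is a minimal sketch of an extract, transform, load flow in plain Python. It assumes a small in-memory CSV as the source and an in-memory SQLite database as the target. The sample data, the `orders` table, and the validation rules are hypothetical illustrations, not how Informatica or any other specific tool implements ETL.

```python
import csv
import io
import sqlite3

# Hypothetical raw source data; in practice this could come from a file, an API, or a database.
RAW_CSV = """order_id,customer,amount,country
1,alice,120.50,US
2,bob,,DE
3,carol,75.00,us
3,carol,75.00,us
4,dave,-10,FR
"""


def extract(source: str) -> list[dict]:
    """Extract: read raw rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(source)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: validate, filter, deduplicate, and map rows to the target schema."""
    seen = set()
    cleaned = []
    for row in rows:
        if not row["amount"]:  # validation: drop rows with a missing amount
            continue
        amount = float(row["amount"])
        if amount < 0:  # filtering: reject negative amounts (hypothetical business rule)
            continue
        order_id = int(row["order_id"])
        if order_id in seen:  # deduplicate on the business key
            continue
        seen.add(order_id)
        # Standardize formats to match the target schema.
        cleaned.append((order_id, row["customer"].title(), amount, row["country"].upper()))
    return cleaned


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: write transformed rows into the target table, then index it for queries."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.execute("CREATE INDEX IF NOT EXISTS idx_orders_country ON orders(country)")
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract(RAW_CSV)))
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Only two rows reach the target: the row with a missing amount, the duplicate order, and the negative amount are all rejected during the transform step. In a graphical tool such as Informatica PowerCenter, the same steps would be built as a mapping rather than written by hand.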


Skills Required to Become a Successful ETL Developer

To excel as an Informatica ETL developer, you’ll need to build a solid set of technical and analytical skills. These skills will help you handle diverse data sources, manage large data volumes, and ensure the efficiency and scalability of ETL pipelines.

Key skills include:

- Programming: Familiarity with programming languages such as Python, SQL, Java, and Scala is essential. Python and SQL are particularly important for automating tasks and querying databases.

- SQL Knowledge: Mastery of SQL is critical since a significant portion of ETL development involves querying databases, transforming data, and performing data manipulation tasks.

- Data Modeling: Understanding how to structure and model data efficiently is necessary to ensure that the transformed data can be easily analyzed and queried in the target system.

- Data Warehousing: Familiarity with data warehouse architecture and the principles of dimensional modeling (e.g., star schema, snowflake schema) is crucial.

- ETL Tools: Knowledge of ETL tools such as Talend, Informatica, or Microsoft SSIS is a must. These tools streamline the ETL process by providing built-in components to connect to sources and to extract, transform, and load data.

- Version Control: Understanding Git or similar version control systems to track changes and collaborate effectively with teams is essential.

- Big Data Technologies: Familiarity with big data tools like Hadoop, Apache Spark, or cloud-based data platforms (e.g., Amazon Redshift, Google BigQuery) can be beneficial, especially when working with large-scale datasets.

- Problem-Solving and Analytical Thinking: As an ETL developer, you will often encounter data-related issues that you need to troubleshoot and resolve. Strong problem-solving skills are vital.
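SQL, data modeling, and data warehousing come together in a star schema. The sketch below builds a tiny star schema with Python's built-in `sqlite3` module and runs a typical star-join aggregation. The `dim_customer`, `dim_date`, and `fact_sales` tables and their rows are hypothetical examples chosen for illustration.

```python
import sqlite3

# A tiny, hypothetical star schema: one fact table surrounded by dimension tables.
SCHEMA = """
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, year INTEGER, month INTEGER);
CREATE TABLE fact_sales (
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    date_key INTEGER REFERENCES dim_date(date_key),
    quantity INTEGER,
    revenue REAL
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO dim_customer VALUES (?, ?, ?)",
                 [(1, "Alice", "EMEA"), (2, "Bob", "AMER")])
conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?, ?)",
                 [(20240115, "2024-01-15", 2024, 1), (20240210, "2024-02-10", 2024, 2)])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
                 [(1, 20240115, 2, 200.0), (2, 20240115, 1, 50.0), (1, 20240210, 3, 300.0)])

# Star join: the fact table joins each dimension on its surrogate key,
# and the numeric measures are aggregated by descriptive dimension attributes.
QUERY = """
SELECT c.region, d.month, SUM(f.revenue) AS total_revenue
FROM fact_sales AS f
JOIN dim_customer AS c ON f.customer_key = c.customer_key
JOIN dim_date AS d ON f.date_key = d.date_key
GROUP BY c.region, d.month
ORDER BY c.region, d.month
"""

for row in conn.execute(QUERY):
    print(row)
```

Keeping measures (quantity, revenue) in the narrow fact table and descriptive attributes in the dimensions is what makes queries like this simple to write and fast to run in a warehouse.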

Choosing the Right ETL Tools and Technologies

A successful ETL developer is not only skilled in programming but also proficient with the tools and technologies that make the development process faster and more efficient. Some common ETL tools and technologies include:

- Apache Spark: A powerful open-source processing engine that can handle both real-time and batch data processing. It’s well suited to large-scale data transformations.

- Talend: An open-source ETL tool that offers both graphical and code-based interfaces for data integration tasks. Talend simplifies data extraction, transformation, and loading.

- Informatica PowerCenter: A comprehensive ETL solution used by enterprises for data integration. It provides rich functionality for managing and automating ETL workflows.

- Microsoft SSIS: SQL Server Integration Services is a popular ETL tool used in SQL Server environments. It provides an intuitive interface for creating data transformation pipelines.

- Apache NiFi: A powerful data integration tool for automating the movement and transformation of data between systems.

- Cloud ETL Services: Many companies are moving to cloud-based ETL solutions like AWS Glue, Google Cloud Dataflow, and Azure Data Factory because of their scalability and seamless integration with cloud data storage services.

When choosing an ETL tool, consider the complexity of your data transformations, the volume of data, and the environment (on-premises or cloud) in which you’re working. Cloud platforms are growing in popularity due to their cost-effectiveness, scalability, and integration with other cloud-based services.

Building Your First ETL Pipeline

Once you’ve familiarized yourself with the core concepts and tools, it’s time to get hands-on and build your first ETL pipeline. Here’s a high-level breakdown of the steps involved in creating an ETL pipeline:

- Define the Scope: Clearly outline the data sources, required transformations, and the destination system.

- Extract Data: Connect to the source system (e.g., a database or flat files) using connectors or APIs and extract the raw data.

- Transform Data: Apply necessary transformations such as filtering, data cleaning, joining tables, and applying business rules. This step ensures the data is in the correct format for analysis.

- Load Data: Load the transformed data into the target system. Make sure the data is loaded efficiently and that the process can handle large datasets or incremental updates.

- Test the Pipeline: Once the pipeline is built, run test cases to verify data accuracy, completeness, and performance. Perform load testing to confirm that it scales.

- Monitor and Optimize: Once the pipeline is in production, use monitoring tools to track its performance and optimize as needed.

It’s important to document each step of the pipeline so your team can easily understand and maintain the ETL workflows. Automating the pipeline and scheduling regular runs helps ensure consistency and reliability.

Best Practices for ETL Development

To ensure your ETL pipelines are efficient, scalable, and maintainable, adhere to these best practices:

- Modular Design: Break down your ETL pipeline into smaller, reusable components that are easier to maintain and update.

- Error Handling: Implement robust error-handling mechanisms to gracefully handle issues that arise during the extraction, transformation, or loading stages.

- Data Quality Checks: Perform data quality checks to ensure that the data being processed is clean and consistent. This can include validation checks, null value handling, and duplicate removal.

- Incremental Loading: Instead of loading the entire dataset each time, load only new or modified records to reduce processing time and resource consumption.

- Parallel Processing: When dealing with large datasets, use parallel processing techniques to split the workload into smaller tasks that can be processed concurrently, improving speed.

- Version Control: Use version control systems like Git to track changes to ETL scripts and configurations. This supports collaboration and makes it possible to roll back changes when needed.

- Monitoring: Continuously monitor the performance of your ETL pipelines. Set up logging to track processing times, errors, and other key metrics.

Challenges in ETL Development and How to Overcome Them

While ETL development is rewarding, it also comes with challenges. Here are some common issues you might face and how to tackle them:

- Handling Large Datasets: Processing vast amounts of data can lead to performance bottlenecks. Use parallel processing, batch processing, or distributed computing to handle large datasets efficiently.

- Data Quality Issues: Raw data often comes with inconsistencies. Implement data quality checks and cleansing mechanisms during the transformation phase.

- Schema Changes: The source schema may change over time, breaking your ETL process. To overcome this, implement schema evolution strategies or use tools that automatically adapt to changes.

- Performance Bottlenecks: Optimize SQL queries, reduce unnecessary transformations, and consider using in-memory processing when possible.
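Schema changes are among the easiest challenges to guard against in code. Below is a minimal sketch of one defensive approach, assuming records arrive as Python dictionaries. The `EXPECTED_SCHEMA` and `RENAMED_COLUMNS` mappings and the sample record are hypothetical. The idea is to detect drift before loading, fail on missing required columns, ignore unexpected ones with a warning, and map known renames back to target names.

```python
# Hypothetical target schema: column name -> type the target table expects.
EXPECTED_SCHEMA = {"order_id": int, "customer": str, "amount": float}

# Hypothetical mapping from columns renamed upstream to their target names.
RENAMED_COLUMNS = {"cust_name": "customer"}


def detect_schema_drift(source_columns):
    """Compare incoming columns (after known renames) with the expected schema."""
    normalized = {RENAMED_COLUMNS.get(col, col) for col in source_columns}
    missing = sorted(set(EXPECTED_SCHEMA) - normalized)
    unexpected = sorted(normalized - set(EXPECTED_SCHEMA))
    return missing, unexpected


def conform_record(record):
    """Rename known columns, drop unexpected ones, cast types, and fill empty values with None."""
    renamed = {RENAMED_COLUMNS.get(key, key): value for key, value in record.items()}
    conformed = {}
    for column, cast in EXPECTED_SCHEMA.items():
        value = renamed.get(column)
        conformed[column] = None if value in (None, "") else cast(value)
    return conformed


if __name__ == "__main__":
    # A record from a source whose schema changed: one column renamed, one column added.
    record = {"order_id": "7", "cust_name": "Erin", "amount": "19.99", "channel": "web"}
    missing, unexpected = detect_schema_drift(record.keys())
    if missing:
        raise ValueError(f"Source is missing required columns: {missing}")
    if unexpected:
        print(f"Warning: ignoring unexpected columns {unexpected}")
    print(conform_record(record))
```

Failing fast on missing columns while only warning about extra ones is a design choice: a new upstream column rarely corrupts the target, but a missing one usually does.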


Career Path and Opportunities for ETL Developers

ETL developers are in high demand, especially in organizations that handle large volumes of data, and the career path can progress significantly as you gain experience. At the entry level, you’ll focus on learning the fundamentals of ETL processes, assisting with pipeline creation, and debugging issues. In a mid-level role, you’ll take on greater responsibility by designing and building more complex data pipelines, optimizing performance, and implementing advanced data transformations. With further experience, you can advance to a senior ETL developer role, where you’ll lead data integration projects, design scalable data architectures, and mentor junior developers. At this stage, you may also work with big data technologies or cloud platforms such as AWS, Azure, or Google Cloud. Eventually, with substantial experience and strategic oversight, you may move into roles like data engineer or data architect, where you’ll design and manage entire data ecosystems that support enterprise-wide analytics and business intelligence. ETL developers have job opportunities across many industries, including finance, healthcare, retail, and technology, and the rapid growth of big data analytics has only intensified demand for skilled ETL professionals.

Conclusion

Becoming a successful ETL (Extract, Transform, Load) developer requires a blend of technical expertise, practical experience, and a deep understanding of data integration processes. By mastering the ETL pipeline, selecting the appropriate tools, and following best practices, you can build robust, scalable data systems that drive valuable business insights. As data becomes increasingly integral to business decision-making, the demand for skilled ETL developers continues to rise. By keeping up with the latest technologies and methodologies, you can grow your skill set and stay ahead in this rapidly evolving field, with strong prospects for growth and advancement in the data management space.