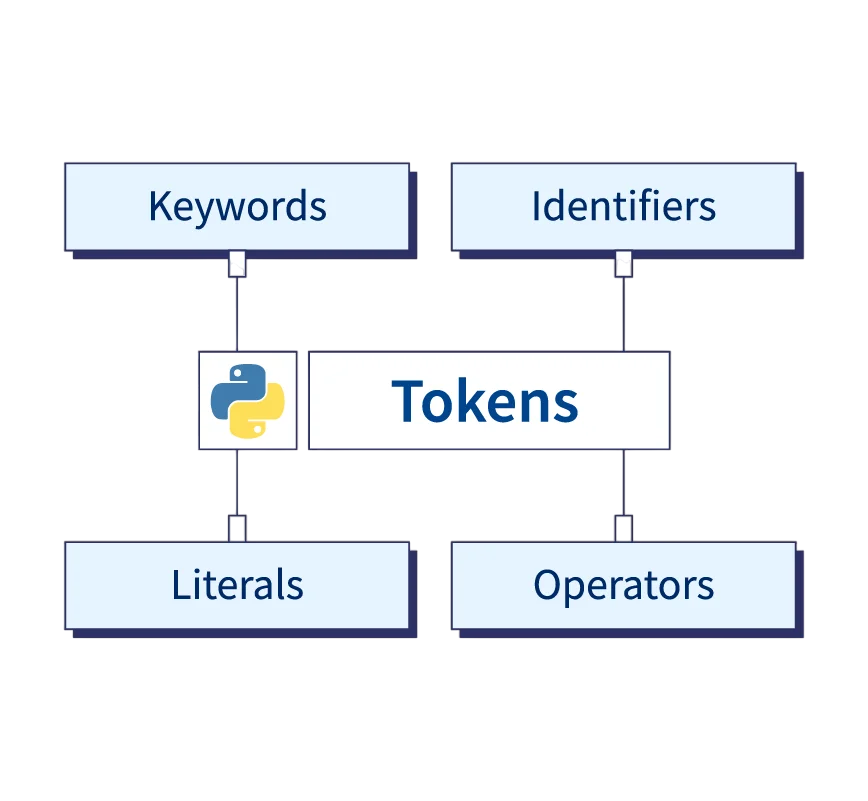

- Definition of Tokens in Programming

- Types of Python Tokens

- Identifiers and Keywords

- Literals and Operators

- Punctuators/Delimiters

- Role in Lexical Analysis

- Tokenization Process

- Examples and Classification

- Conclusion

Definition of Tokens in Programming

In programming, a token is a categorized block of text that represents a basic element of the language. In simpler terms, tokens are the words and symbols in source code that a compiler or interpreter reads to understand what the program is supposed to do. Tokens are produced during lexical analysis, the first phase of a compiler’s front end: a lexical analyzer (tokenizer) reads the source code character by character and converts it into a stream of tokens, which later stages such as parsing and semantic analysis consume.

Python, like every other programming language, is built from tokens. They are the smallest units of a program that have meaning to the interpreter, and they include keywords, identifiers, literals, operators, and punctuation. Understanding tokens matters to every Python programmer because they form the foundation of the language’s syntax and structure; without them, the interpreter could not parse or execute code at all.

Types of Python Tokens

In Python, tokens are the smallest building blocks of the language, which the interpreter uses to understand and execute code. Every piece of a Python program, whether a keyword, an operator, or an identifier, is a token. Understanding the different types of tokens is essential for writing syntactically correct and efficient Python code.

Here are the main types of Python tokens:

- Keywords: Keywords are reserved words that have a special meaning in Python. They form the core syntax of the language and cannot be used as identifiers (variable or function names).

- Identifiers: Identifiers are names used to identify variables, functions, classes, modules, and other objects. They must start with a letter (A-Z or a-z) or an underscore (_) followed by letters, digits (0-9), or underscores.

- Literals: Literals are constant values used directly in the code.

- Operators: Operators perform operations on variables and values.

- Delimiters (Separators): Delimiters are symbols used to separate statements or group code blocks.

- Comments: Though not technically tokens used by the interpreter for execution, comments (# comment) are important for code readability and documentation. They are ignored during program execution.

Identifiers and Keywords

Identifiers are the names used to identify variables, functions, classes, modules, and other objects in Python. An identifier must start with a letter (A-Z or a-z) or an underscore (_), followed by zero or more letters, underscores, and digits (0-9). Identifiers are case-sensitive, so Variable and variable are treated as two separate identifiers. Naming conventions recommend meaningful, descriptive names to improve code readability and maintainability.

Examples of valid identifiers:

- my_var

- _hello123

- PythonClass

Keywords, on the other hand, are reserved words that have a predefined meaning in the language. Python has a fixed set of keywords that cannot be used as identifiers. These words are essential for defining control flow and program structure.

Some common Python keywords include:

if, else, for, while, def, class, return, import, try, except, pass

Python has over 30 keywords (35 in recent Python 3 releases), and each must be used according to the syntax rules. Attempting to use a keyword as a variable name raises a SyntaxError.
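The identifier and keyword rules above can be checked with the standard library. The following is a minimal sketch: `classify_name` is a hypothetical helper introduced here for illustration, while `keyword.iskeyword` and `str.isidentifier` are part of standard Python.

```python
import keyword


def classify_name(name: str) -> str:
    """Classify a string as 'keyword', 'identifier', or 'invalid'."""
    # Keywords also pass str.isidentifier(), so test for them first.
    if keyword.iskeyword(name):
        return "keyword"
    if name.isidentifier():
        return "identifier"
    return "invalid"


for candidate in ["my_var", "_hello123", "PythonClass", "class", "2fast"]:
    print(candidate, "->", classify_name(candidate))

# The full keyword list for the running interpreter:
print(len(keyword.kwlist), "keywords")
```

Note that `str.isidentifier` also accepts non-ASCII letters, so it is somewhat more permissive than the ASCII-only rule described above.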

Literals and Operators

Literals represent fixed values in a program. They can be classified into several types:

- String Literals: e.g., 'hello', "world"

- Numeric Literals: e.g., 10, 3.14, 0xFF

- Boolean Literals: True, False

- Special Literal: None

Literals are used when assigning values to variables or passing fixed inputs to functions. Python supports single-line strings and, using triple quotes, multiline strings. Numeric literals can also be written in binary, octal, and hexadecimal formats.

Operators are special symbols that perform operations on variables and values. Python supports several types of operators:

- Arithmetic Operators: +, -, *, /, //, %, **

- Relational Operators: ==, !=, >, <, >=, <=

- Logical Operators: and, or, not

- Assignment Operators: =, +=, -=

- Bitwise Operators: &, |, ^, ~, <<, >>

Operators allow complex expressions and logic to be written concisely. For instance, a single line of code like result = (x + y) * z uses multiple token types and operator precedence.
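As a small illustration of the literal forms and the precedence example above, the sketch below uses made-up variable names and values (`x`, `y`, `z` are assumed to be 2, 3, and 4 purely for demonstration).

```python
# The same integer written as decimal, hexadecimal, octal, and binary literals.
decimal_value = 255
hex_value = 0xFF
octal_value = 0o377
binary_value = 0b11111111
print(decimal_value == hex_value == octal_value == binary_value)  # True

# A triple-quoted string literal may span several lines.
multiline = """first line
second line"""
print(multiline.splitlines())  # ['first line', 'second line']

# Parentheses override the default precedence of * over +.
x, y, z = 2, 3, 4
result = (x + y) * z        # 5 * 4
without_parens = x + y * z  # 2 + 12
print(result, without_parens)  # 20 14
```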

Punctuators/Delimiters

Punctuators, also called delimiters, are symbols that separate statements and group elements in a Python program. They include:

- Comma (,)

- Colon (:)

- Semicolon (;)

- Parentheses ( )

- Brackets [ ]

- Braces { }

- Period (.)

- At symbol (@)

For instance, colons are used in defining functions and control structures, and parentheses are used to call functions or group expressions. Delimiters are critical for ensuring code executes in the correct logical grouping.
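The short sketch below puts each of the delimiters listed above to work in runnable code. The names (`square`, `numbers`, `labels`) are illustrative only and not taken from any particular program.

```python
import functools


@functools.lru_cache(maxsize=None)  # at symbol, period, and parentheses
def square(n):                      # parentheses hold parameters; colon opens the block
    return n * n


numbers = [1, 2, 3]                   # brackets and commas build a list
labels = {"one": 1, "two": 2}         # braces, colons, and commas build a dict
a = 1; b = 2                          # a semicolon separates two statements on one line
total = (a + b) * square(numbers[2])  # parentheses group an expression and call a function

print(total)  # 27
```

Putting two statements on one line with a semicolon is legal but usually discouraged by style guides; it appears here only to show the delimiter.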

Role in Lexical Analysis

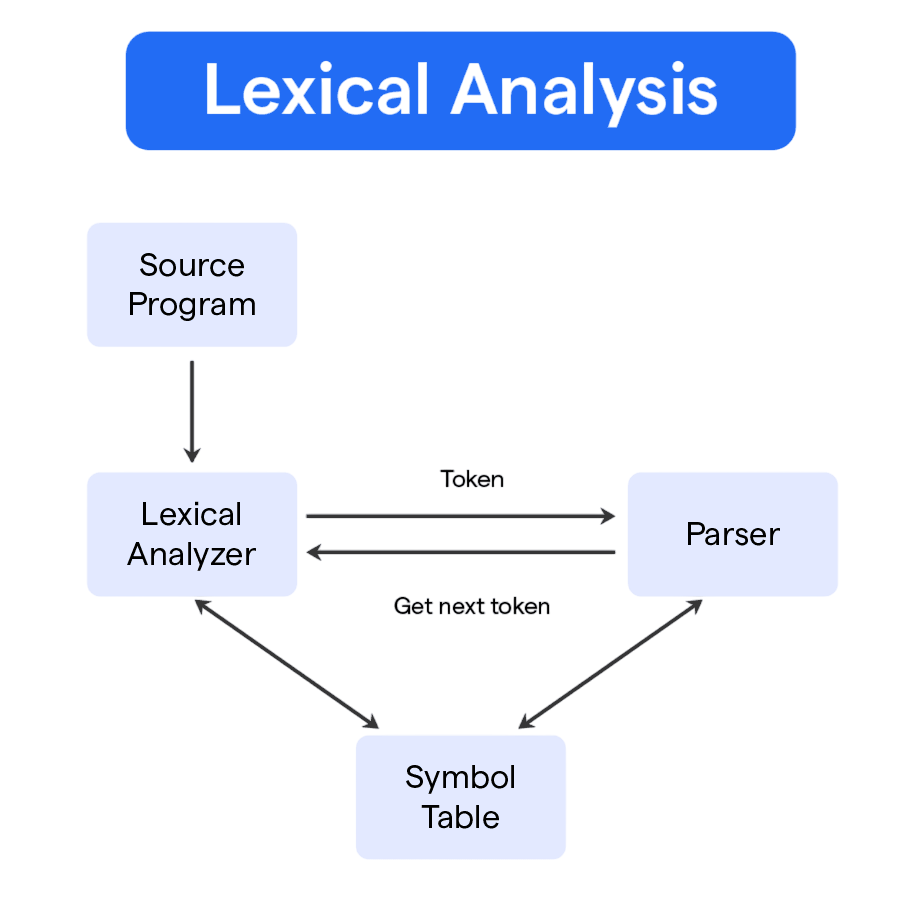

Lexical analysis is the first phase of the compilation or interpretation process. During this phase, the source code is scanned and split into tokens: the smallest meaningful elements, such as keywords, identifiers, operators, literals, and delimiters. The lexical analyzer identifies the type of each token and passes the resulting stream to the next stage, syntax analysis.

Converting raw text into tokens lets the compiler or interpreter work with the structure of the code rather than individual characters, which simplifies parsing and error detection. The lexer itself catches basic lexical errors, such as an unterminated string literal or inconsistent indentation; a misspelled keyword is tokenized as an ordinary identifier and is only rejected later, during syntax analysis. In Python, the tokens produced in this phase feed the later compilation and interpretation stages.

Tokenization Process

The tokenization process in Python involves scanning the source code and dividing it into tokens. This is handled internally by the Python interpreter’s built-in tokenizer. When you write a line of code, Python identifies keywords, identifiers, literals, operators, and punctuation in order to interpret the program.

Example:

x = 10 + 5

Tokens identified:

- x (Identifier)

- = (Assignment Operator)

- 10 (Literal)

- + (Arithmetic Operator)

- 5 (Literal)

This classification helps in understanding the structure and flow of Python programs and enables error detection and correction during development.
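The breakdown above can be reproduced with the standard library’s `tokenize` module. This is a minimal sketch; `tokens_of` is a hypothetical helper name, and the category names it prints (`NAME`, `OP`, `NUMBER`) are the tokenizer’s own labels rather than the descriptive ones used in the list above.

```python
import io
import token
import tokenize


def tokens_of(source: str) -> list:
    """Return (token type name, token text) pairs for a piece of source code."""
    pairs = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        # Skip the structural tokens that mark end of line and end of input.
        if tok.type in (token.NEWLINE, token.ENDMARKER):
            continue
        pairs.append((token.tok_name[tok.type], tok.string))
    return pairs


print(tokens_of("x = 10 + 5\n"))
# [('NAME', 'x'), ('OP', '='), ('NUMBER', '10'), ('OP', '+'), ('NUMBER', '5')]
```

The tokenizer reports both `=` and `+` simply as `OP`; the distinction between assignment and arithmetic operators is made at a later stage.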

Examples and Classification

Let’s explore a simple Python program and break it into tokens:

    def greet(name):
        print("Hello, " + name)

Tokens:

- def (Keyword)

- greet (Identifier)

- ( (Delimiter)

- name (Identifier)

- ) (Delimiter)

- : (Delimiter)

- print (Identifier / Built-in function)

- ( (Delimiter)

- "Hello, " (Literal)

- + (Operator)

- name (Identifier)

- ) (Delimiter)

This classification shows how Python breaks down and processes code, reinforcing the importance of correct syntax and structure.
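For comparison, here is a sketch of how the same function looks to Python’s own tokenizer. The `tokenize` module labels keywords as plain `NAME` tokens, so the hypothetical helper `classify_tokens` below relabels them with `keyword.iskeyword`. It also shows the `NEWLINE`, `INDENT`, and `DEDENT` tokens that the tokenizer emits for line ends and block structure, which the manual list above leaves out.

```python
import io
import keyword
import token
import tokenize

SOURCE = 'def greet(name):\n    print("Hello, " + name)\n'


def classify_tokens(source: str) -> list:
    """Return (label, text) pairs, relabelling keyword NAME tokens as KEYWORD."""
    rows = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == token.NAME and keyword.iskeyword(tok.string):
            label = "KEYWORD"
        else:
            label = token.tok_name[tok.type]
        rows.append((label, tok.string))
    return rows


rows = classify_tokens(SOURCE)
for label, text in rows:
    print(f"{label:10} {text!r}")
```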

Conclusion

Tokens are fundamental components of Python and every programming language. They allow interpreters to understand code and execute it accordingly. By categorizing code into identifiers, keywords, literals, operators, and delimiters, tokens simplify the process of lexical and syntactic analysis. Understanding the types and rules of tokens not only helps developers write clean and efficient code but also supports debugging and code optimization. For beginners and advanced programmers alike, mastering tokens is a critical step in becoming proficient in Python. By adhering to naming conventions, being aware of tokenization rules, and understanding how tokens flow through the compilation process, developers can write more robust and maintainable Python programs.